Gcode Driver for LinuxCNC

justin White

tonyl...@gmail.com

justin White

justin White

justin White

mark maker

Hi Justin,

> My friend is going to help me get a LinuxCNC gcode driver going for openPnP.

That's good news. It would really be a nice addition.

However, your series of questions has a certain "trajectory", that I'm not sure aims in the right direction.

Telling you this as the developer who added the whole advanced motion stuff to OpenPnP, which includes a 3rd order 7 segment motion planner, plus a simple G-code interpreter for simulated machine testing. I also implemented the Issues & Solutions system that proposes the G-code templates for various G-code controllers. Plus I fixed and extended some of the Open Source G-code controller firmwares for use with OpenPnP and to better adhere to the NIST standard. I guess I know a thing or two about this stuff 😎

The assumption is you want to use OpenPnP as intended, i.e. for Pick&Place of electronic components. If that is true, then you should first ask yourself how LinuxCNC can be employed to implement OpenPnP's needs, and not the other way around. 😉 Once you get the basic functionality going, you can always come back a second time, and think about making special LinuxCNC goodies available from OpenPnP.

In other words (and with no offense intended): I think you should get your priorities straight. 😅

As an example: Arrow-Jogging will be used in the (early) setup of the machine to go capture coordinates, but once you've done that, you will hardly ever use it. Yes, the jogging implementation in OpenPnP is rudimentary, but I don't consider it a problem, simply because it is adequate for the few uses it has. For finer navigation there is camera view "drag"-jogging, which I consider quite sophisticated, i.e. it beats arrow-jogging every time. Ergo, I recommend you don't lose time and sleep over some jogging features.

Much in OpenPnP is computer vision based, i.e. stored coordinates are only approximations, and OpenPnP needs to interactively and sometimes iteratively center in on things it "sees". This means that sophisticated logic inside OpenPnP must interact with the controller, i.e. with LinuxCNC, in a tight and rapid manner. Consequently, G-code is generated on the fly, in reaction to the computer vision results, to adjust the alignment of a part, or center in on a fiducial on a PCB, for instance. This even includes deciding/branching on what happens next, e.g. when a pick failed and the vision size check detects a part is missing or tomb-stoned on the nozzle tip, so it has to discard and retry. This is completely different from almost all other NC applications, where the whole G-code is generated up front, and where "interactiveness" (if there is any at all) is restricted to simple canned cycles like probing.

This also explains your next question: PICK_COMMAND and PLACE_COMMAND are no longer used, because the complexity and interactiveness of these operations has since become much more sophisticated. We optionally use vacuum sensing to establish the required vacuum levels in minimal time. And to check if a part has been successfully picked, and again this decides what happens next (discard and retry a few times, then set error state and skip). We integrate Contact Probing into the pick and place operations, to auto-learn part heights or the precise PCB + solder paste height for instance. All this is also highly configurable and parametric, e.g. by the nozzle tip that is currently loaded.

There isn't a chance we can implement all the required logic in one piece of G-code, hence PICK_COMMAND and PLACE_COMMAND that are simply defined globally or per nozzle are no longer adequate. Instead this is decomposed into the smaller machine objects that are involved: the vacuum valve as an actuator, the vacuum sensing actuator, the contact probing actuator, the pump actuator, etc. OpenPnP Issues & Solutions will create and wire up all those properly for you.

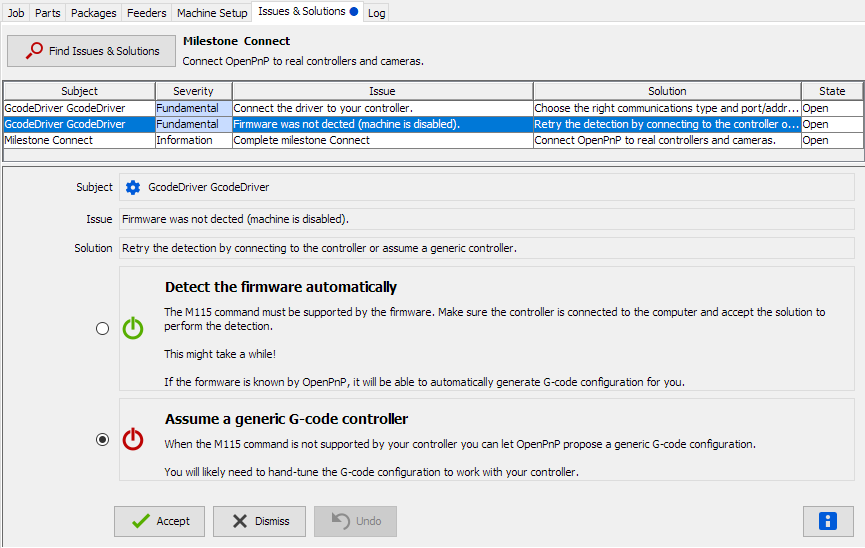

Issues & Solutions will then go through all this and propose G-code snippets for you. Some are mostly automatic, others you must tell it. Issues & Solutions will query the type of the controller (M115) and adapt to known firmware dialects. For unknown firmwares, you can use the "Generic" profile.

> Acceleration is not considered in Gcode commands so I don't quite understand what this is doing. Either way I'd need to turn this kind of thing off since LinuxCNC handles all of this internally.

OpenPnP is dynamically setting the allowable feed-rate, acceleration and (optionally) jerk limits.

Example:

When you pick & place small passives you want it to be as fast as possible, because you have dozens or hundreds of these per board. So you want to configure your machine to have the highest feed-rate, acceleration and (optionally) jerk limits.

On the other hand, when you pick & place a heavy inductor or a large fine-pitch IC you need to make sure it does not slip on the nozzle tip. So you need to tone down the feed-rate, acceleration and (optionally) jerk limits.

That's why OpenPnP can set a per part speed limit. If you set 50% it will limit the feed-rate to 50%, the acceleration to 25% and the jerk to 12.5%, which due to the linear/quadratic/cubic nature of these limits, will result in exactly half the overall motion speed, i.e. double the motion time.
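To make the linear/quadratic/cubic scaling concrete, here is a minimal Python sketch. It is not OpenPnP code; the function names and the example limits are made up for illustration, and the timing check uses a plain constant-acceleration (2nd order) profile rather than the jerk-controlled planner.

```python
def scaled_limits(speed_factor, feed_rate, acceleration, jerk):
    """Scale motion limits so the overall motion time grows by 1/speed_factor."""
    return (
        feed_rate * speed_factor,          # linear in the speed factor
        acceleration * speed_factor ** 2,  # quadratic
        jerk * speed_factor ** 3,          # cubic
    )


def trapezoid_time(distance, feed_rate, acceleration):
    """Duration of a constant-acceleration move that starts and ends at rest."""
    if distance >= feed_rate ** 2 / acceleration:
        # Long move: accelerate, cruise at feed_rate, decelerate.
        return distance / feed_rate + feed_rate / acceleration
    # Short move: the feed-rate limit is never reached (triangular profile).
    return 2.0 * (distance / acceleration) ** 0.5


if __name__ == "__main__":
    # Hypothetical machine limits (units are arbitrary but consistent).
    full = (500.0, 4000.0, 60000.0)
    half = scaled_limits(0.5, *full)
    print("limits at 50%:", half)
    print("time at 100%:", trapezoid_time(100.0, full[0], full[1]))
    print("time at 50%: ", trapezoid_time(100.0, half[0], half[1]))
```

With the acceleration scaled by the square of the factor, both the long and the short move take exactly twice as long at 50%, which is the "half the overall motion speed" described above.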

The importance of controlling these limits to make moves "gentler" is illustrated by these experiments:

https://www.youtube.com/watch?v=6SBDApObbz0

Not surprisingly, the actual "jerking around" that creates vibration in a machine or might make a part slip on the nozzle tip comes from the jerk limit, not from the feed-rate limit.

The speed control is also typically used for gentler nozzle tip changer moves and gentler feeder actuation (drag, push-pull, etc.) without shaking parts out.

https://youtu.be/5QcJ2ziIJ14?t=240

The following shows you the principle of simulated 3rd order motion control ("jerk control") on a controller that does not have jerk control but only acceleration control. It is very important to reduce vibrations etc. on affordable machines, i.e. those that are mechanically not very stiff and heavy. It is based on shaping acceleration limits to mimic jerk controlled ramps:

https://www.youtube.com/watch?v=cH0SF2D6FhM

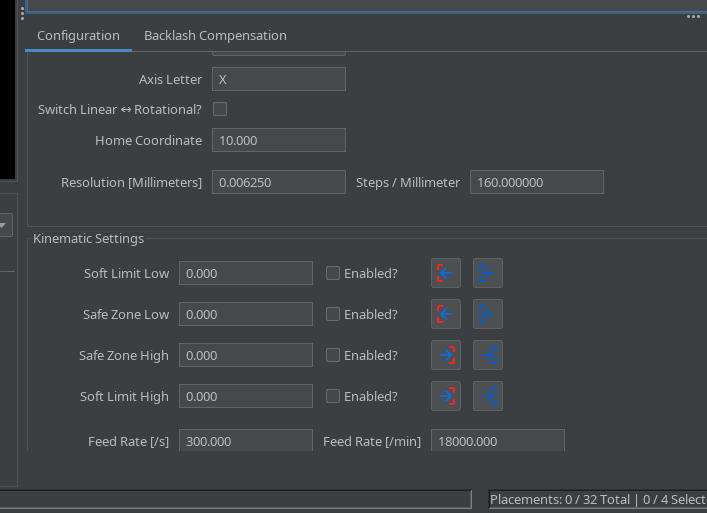

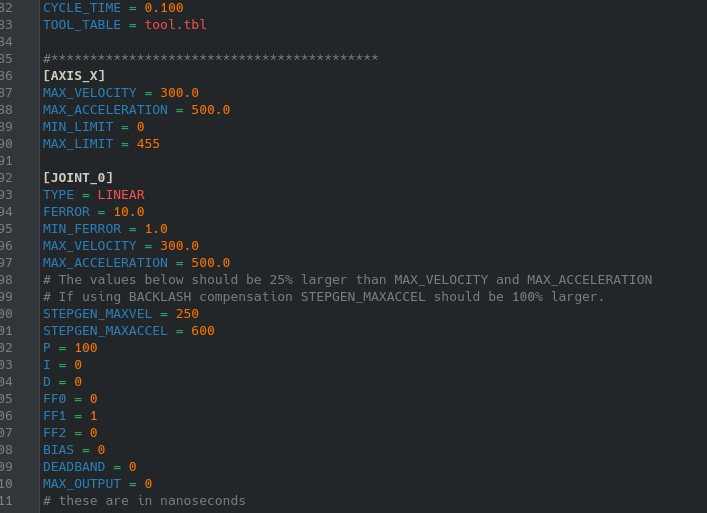

A quick search shows that LinuxCNC does not seem to have G-code (M201, M204) to set acceleration limits dynamically. I only found static settings in an .ini file. Without acceleration control, you can still use OpenPnP, but it would be a severe limitation.

Frankly, I'm surprised and I hope I missed something! If this is confirmed, and given the available alternatives of external controllers, I would consider this a no-go.

_Mark

--

You received this message because you are subscribed to the Google Groups "OpenPnP" group.

To unsubscribe from this group and stop receiving emails from it, send an email to openpnp+u...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/openpnp/ab96c695-3954-473a-b631-c49569ad7f8cn%40googlegroups.com.

justin White

> However, your series of questions has a certain "trajectory", that I'm not sure aims in the right direction.

> ... Open Source G-code controller firmwares for use with OpenPnP and to better adhere to the NIST standard.

> This also explains your next question: PICK_COMMAND and PLACE_COMMAND are no longer used, because the complexity and interactiveness of these operations has since become much more sophisticated. We optionally use vacuum sensing to establish the required vacuum levels in minimal time. And to check if a part has been successfully picked, and again this decides what happens next (discard and retry a few times, then set error state and skip). We integrate Contact Probing into the pick and place operations, to auto-learn part heights or the precise PCB + solder paste height for instance. All this is also highly configurable and parametric, e.g. by the nozzle tip that is currently loaded.

> There isn't a chance we can implement all the required logic in one piece of G-code, hence PICK_COMMAND and PLACE_COMMAND that are simply defined globally or per nozzle are no longer adequate. Instead this is decomposed into the smaller machine objects that are involved. The vacuum valve as an actuator, the vacuum sensing actuator, the contact probing actuator, the pump actuator, etc. OpenPnP Issues & Solutions will create and wire up all those properly for you.

> A quick search shows that LinuxCNC does not seem to have G-code (M201, M204) to set acceleration limits dynamically. I only found static settings in an .ini file. Without acceleration control, you can still use OpenPnP, but it would be a severe limitation.

[JOINT_1]

MAX_VELOCITY = 6

MAX_ACCELERATION = 15

setp hm2_[HOSTMOT2](BOARD).0.stepgen.01.maxaccel [JOINT_1]STEPGEN_MAXACCEL

setp hm2_[HOSTMOT2](BOARD).0.stepgen.01.maxvel [JOINT_1]STEPGEN_MAXVEL

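#!/bin/bash
# Usage: <this script> MAXACCEL MAXVEL
maxaccel=$1
maxvel=$2
# The pin names are quoted because unquoted parentheses are a shell syntax error.
# [HOSTMOT2](BOARD) is copied from the HAL file; replace it with the actual board name if halcmd does not expand it.
halcmd setp 'hm2_[HOSTMOT2](BOARD).0.stepgen.01.maxaccel' "$maxaccel"
halcmd setp 'hm2_[HOSTMOT2](BOARD).0.stepgen.01.maxvel' "$maxvel"
exit 0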

mark maker

> Everytime LinuxCNC is mentioned around here it's sort of shunned off rather than taken seriously.

I beg to differ. I tried to help multiple times now, but then never heard back. There are hurdles to be taken seriously, that's all I'm saying.

Regarding your bash file, setting the acceleration:

Be aware that these need to be settable fully "on the fly", during ongoing motion. They must only be effective for the motion commands following later, not the ones already executing, and not the ones already in the queue. I'm not saying it won't work the way you said, just that you should double check.

_Mark

justin White

> Regarding your bash file, setting the acceleration:

> Be aware that these need to be settable fully "on the fly", during ongoing motion. They must only be effective for the motion commands following later, not the ones already executing, and not the ones already in the queue. I'm not saying it won't work the way you said, just that you should double check.

> _Mark

justin White

Jarosław Karwik

justin White

Jarosław Karwik

justin White

tonyl...@gmail.com

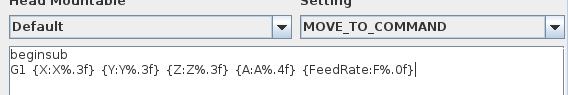

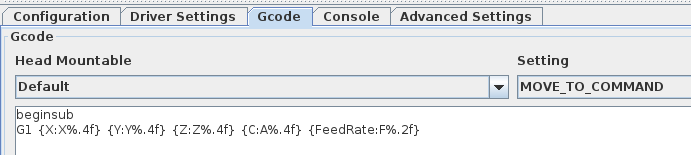

G1 {X:X%.4f} {Y:Y%.4f} {Z:Z%.4f} {A:A%.4f} {FeedRate:F%.2f}

G1 X6.3005 Y1.2391 F4841.10
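The two lines above are a move command template and one command generated from it. As a rough illustration of how such a template could expand, here is a small Python sketch. It is not OpenPnP's implementation; `expand_template` is a hypothetical helper, and it assumes that a `{Name:format}` placeholder is dropped entirely when no value is supplied for `Name`, which is consistent with Z and A being absent from the generated command.

```python
import re

# Matches placeholders of the form {Name:printf-style format}.
_PLACEHOLDER = re.compile(r"\{(\w+):([^}]*)\}")


def expand_template(template, values):
    """Expand {Name:format} placeholders; omit those without a value."""

    def substitute(match):
        name, fmt = match.group(1), match.group(2)
        if values.get(name) is None:
            return ""  # axis not part of this move: drop the whole placeholder
        return fmt % values[name]

    expanded = _PLACEHOLDER.sub(substitute, template)
    # Collapse the gaps left behind by dropped placeholders.
    return " ".join(expanded.split())


if __name__ == "__main__":
    template = "G1 {X:X%.4f} {Y:Y%.4f} {Z:Z%.4f} {A:A%.4f} {FeedRate:F%.2f}"
    print(expand_template(template, {"X": 6.3005, "Y": 1.2391, "FeedRate": 4841.1}))
```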

justin White

set mdi home -1

tonyl...@gmail.com

mark maker

Like I said before (quoting myself):

> Issues & Solutions will then go through all this and propose G-code snippets for you. Some are mostly automatic, others you must tell it. Issues & Solutions will query the type of the controller (M115) and adapt to known firmware dialects. For unknown firmwares, you can use the generic profile.

https://github.com/openpnp/openpnp/wiki/Issues-and-Solutions#connect-milestone

This will propose "standard" G-code and Regex, and as LinuxCNC seems to have a full G-code dialect, this is certainly a good start. If you really (?) need to prepend all these with "set mdi", it is still easier to do it after the generic proposal has been made.

Side note: I'm still somewhat reluctant to believe that in the whole LinuxCNC universe, nobody made a genuine G-code server, where you can send commands without the proprietary "set mdi" stuff. With such a program as an intermediary, given it is Open Source, it should be easy to add new G- and M-codes that do stuff outside the built-in commands.

Note: M204 is likely not your only problem. I haven't seen ways to actually report stuff back, like M105 for (analog) sensors and M114 for position do on other controllers.

> Most of that I get, if you look at the docs it says not to use the old PICK and PLACE methods and to use the new method which was basically described as being alot more complicated.

You should just let Issues & Solutions guide you, using the above generic profile. I'm sure you'll find it easy. I made I&S to make things easy, but also to reduce the support load in this group here. If you continue second-guessing everything, I will no longer bother you with my unwanted help.

> M204 ... but I'd rather not deal with it right away if it's possible to avoid that specific Mcode. Is the "old" method possible to use?

Yes. As long as you use the primitive ToolpathFeedRate method, any acceleration control is switched off:

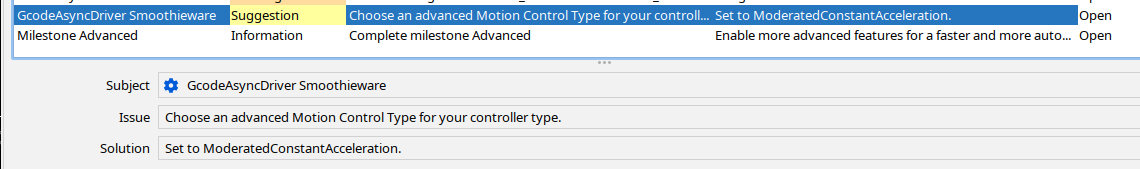

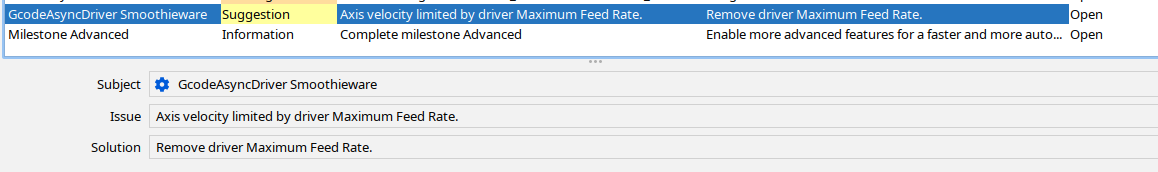

https://github.com/openpnp/openpnp/wiki/GcodeAsyncDriver#motion-control-type

justin White

> Like I said before (quoting myself):

> > Issues & Solutions will then go through all this and propose G-code snippets for you. Some are mostly automatic, others you must tell it. Issues & Solutions will query the type of the controller (M115) and adapt to known firmware dialects. For unknown firmwares, you can use the generic profile.

When I had Marlin firmware on the Octopus I initially used, I went through the Issues & Solutions wizard. All I was getting was "not supported on this platform", and when I selected the GcodeAsyncDriver it just told me to use the normal GcodeDriver. OpenPnP could read the firmware on the Marlin and I could issue G0 moves, so I know things were connected.

> Side note: I'm still somewhat reluctant to believe that in the whole LinuxCNC universe, nobody made a genuine G-code server, where you can send commands without the proprietary "set mdi" stuff. With such a program as an intermediary, given it is Open Source, it should be easy to add new G- and M-codes that do stuff outside the built-in commands.

Chris Campbell

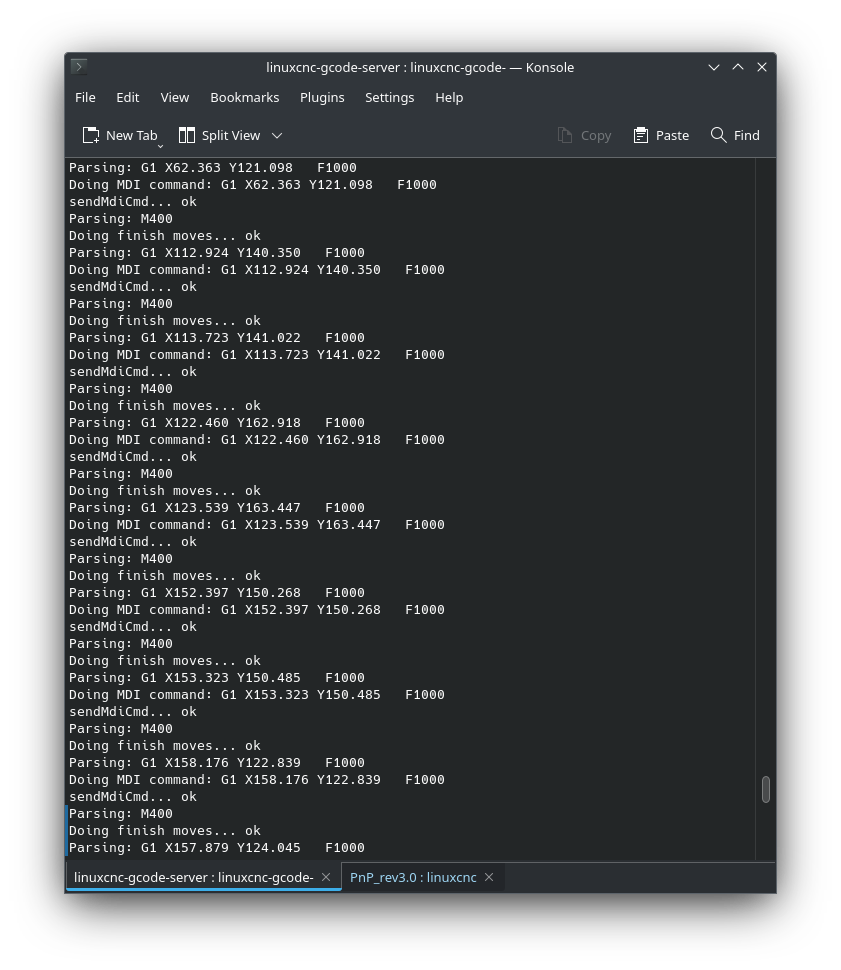

For now it functions like linuxcncrsh, listening on a regular socket (telnet), checking if the input matches some specific keyword (eg. "pause", "abort", "m42", "M400" etc) and intercepting this with special behavior, otherwise it passes the input through as an MDI command to let LinuxCNC deal with it. In future I'm planning to check if the first character is "{" in which case it will be treated as JSON, and make a websocket server for convenience with web interfaces.
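The intercept-or-pass-through idea can be pictured roughly like this in Python. This is only a sketch and not the server's code (which has not been published at this point in the thread); `classify` and the keyword set are illustrative, and treating keywords as case-insensitive is an assumption based on the mixed-case examples above.

```python
# Keywords that get special handling instead of going to LinuxCNC as MDI.
# Taken from the examples in the post; the real list is presumably longer.
SPECIAL_KEYWORDS = {"pause", "abort", "m42", "m400"}


def classify(line):
    """Decide how one line of socket input should be handled.

    Returns ("special", keyword) for intercepted commands,
    ("mdi", text) for everything passed through as an MDI command,
    and ("ignore", "") for blank lines.
    """
    text = line.strip()
    if not text:
        return ("ignore", "")
    first_word = text.split()[0].lower()
    if first_word in SPECIAL_KEYWORDS:
        return ("special", first_word)
    return ("mdi", text)


if __name__ == "__main__":
    for received in ["G1 X10 Y5 F3000", "M400", "m42 P1 S0", ""]:
        print(repr(received), "->", classify(received))
```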

The code is a bit messy right now but working fairly well so far, hopefully I can tidy it up and put it on github soon. Anyway if Justin can wait a week or two this discussion might be mostly taken care of.

justin White

Chris Campbell

bert shivaan

Chris Campbell

mark maker

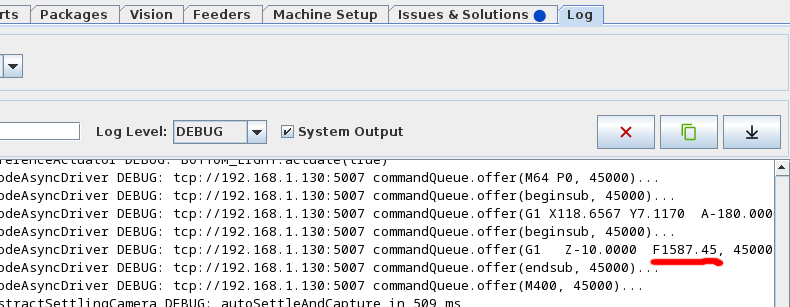

> Here are some movements commanded by OpenPNP, seems mostly ok so far

Cool!

> Seems like 'ConstantAcceleration' would be more appropriate, and that setting does command acceleration values, but unfortunately they are given between every single segment which currently interrupts blending. With a little more work I can make my server ignore acceleration commands where the acceleration value is the same as last time, so blending can be maintained.

In this case you should be using EuclideanAxisLimits. It will still send them each time, but they should stay stable until the Speed % is really changed.

https://github.com/openpnp/openpnp/wiki/GcodeAsyncDriver#motion-control-type
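The server-side filter mentioned in the quote (ignore an acceleration command whose value has not changed) could look roughly like the following Python sketch. It assumes, purely for illustration, that the acceleration arrives as `M204 S<value>`; the actual command text depends on how the driver's acceleration command is configured.

```python
import re

# Assumed command form: "M204 S<number>". Adjust to the configured command.
_ACCEL = re.compile(r"^\s*M204\s+S([0-9]+(?:\.[0-9]+)?)", re.IGNORECASE)


def drop_repeated_acceleration(lines):
    """Remove acceleration commands that repeat the value already in effect."""
    last_value = None
    kept = []
    for line in lines:
        match = _ACCEL.match(line)
        if match:
            value = float(match.group(1))
            if value == last_value:
                continue  # same limit as before: skip, so blending is not interrupted
            last_value = value
        kept.append(line)
    return kept


if __name__ == "__main__":
    stream = ["M204 S1000", "G1 X10", "M204 S1000", "G1 Y10", "M204 S500", "G1 X0"]
    print(drop_repeated_acceleration(stream))
```

With stable limits, as EuclideanAxisLimits is said to produce, only the first acceleration command of a run survives and the move segments stay adjacent.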

> If I understand correctly, OpenPNP will always send a MOVE_TO_COMMAND for every individual segment? Maybe it already exists, but it would be great if there was a way to pass the overall "intent" of a movement as a whole, allowing the motion controller to deal with it in a more optimal way. For example, instead of issuing multiple G0 commands to raise the head, then move it laterally, then lower it, the 'overall intent' command would just give the target destination, acceleration, and safe height(s)

Given LinuxCNC has the cool G64 command, this should already give you good results. See also here:

https://groups.google.com/g/openpnp/c/y9mnpG-YXOI/m/kLvqwFieAAAJ

And I'm open to help optimize this. Send G64 commands and Safe Z tailored to X/Y move distance, i.e. the curving should be higher on longer X/Y moves, and lower on shorter X/Y moves, and it should never start the curving while still under Safe Z.

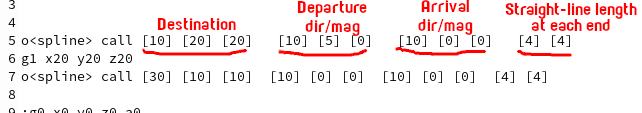

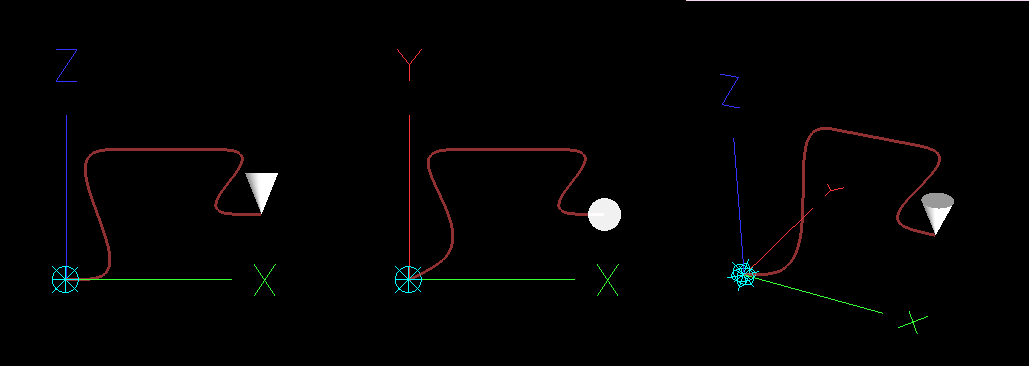

The following is for a 3rd order 7 segment controller and using a different concept. This is not blending, but letting moves be uncoordinated above Safe Z. I think you can see I spent some time thinking about and simulating this stuff. This is what the internal motion planner of OpenPnP can do. Still waiting for the controller that can do it for real:

Here, I discussed what is needed to do more or less the same with blending:

https://groups.google.com/g/openpnp/c/Zs6PBCyBI9o/m/S9vEz1TrAQAJ

Quoting myself:

I would do it as follows (numbered for easy referencing):

1. We want to save time, so it is better to think of the blending in terms of time, not distance.

2. By overlapping the time to decelerate Z, and the time to accelerate X/Y, we can (more or less) save that amount of time per corner (the other corner is just the same in reverse).

3. If the X/Y displacement is large, then the overlap time is determined by the time it takes to decelerate Z.

4. We want to drive the nozzle up as fast as possible, this means that ideally, we do not want to decelerate before we hit the minimum Safe Z height (at whatever speed and acceleration we can achieve).

5. If our head-room (Safe Z Zone) is large enough to fully decelerate Z, we can take the full Z deceleration time as the overlap time. The upper Z of the arc is determined by the braking distance.

6. If our head-room (Safe Z Zone) is not large enough to decelerate Z, we need to start decelerating earlier. The overlap time is then just the fraction of the deceleration time that happens above Safe Z. The upper Z of the arc is then determined by the headroom (Safe Z Zone).

7. If the X/Y displacement is small, then the overlap time is no longer determined by Z deceleration, but by half the X/Y motion time.

8. In that case we can take this half X/Y time and calculate the Z braking distance that is achieved in this time (in reverse, from standstill). This braking distance added to Safe Z gives us the upper Z of the arc.

9. This basically means that at some point smaller X/Y moves will result in less and less "overshoot" into the Z headroom, the arc becomes lower and lower. It is logical: if we just have a tiny, tiny move in X/Y, then the fastest way to go to Safe Z and then back is still to just go to Safe Z and not higher.

10. Finally, simply start the motion of X/Y earlier by this overlap time, and vector-add its relative displacement to the still decelerating Z motion.

11. This blending in time gives us the shape of the arc. For large X/Y moves it will just round the corners. For small X/Y moves (7) it will create a true arc.

12. With 2nd order motion control, the above rule set can directly be used to create the blending.

13. With true 3rd order motion control, this is complicated by jerk control, i.e. the switch from accelerating Z to decelerating Z is not instantaneous. So computing (5) and especially (8) is probably best done using some sort of iteration/numerical solver. It does not have to be super accurate, so the solver can terminate early to avoid a computation bottleneck.
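For the 2nd order case, the rule set above can be sketched in a few lines of Python. This is only one reading of the rules under simplifying assumptions: constant acceleration, X/Y treated as a single axis that starts and ends at rest, and `z_speed` taken as the Z speed the nozzle would have when crossing Safe Z if it did not need to brake early. All names and numbers are illustrative.

```python
import math


def xy_move_time(distance, feed_rate, acceleration):
    """Rest-to-rest duration of a constant-acceleration X/Y move."""
    if distance >= feed_rate ** 2 / acceleration:
        return distance / feed_rate + feed_rate / acceleration
    return 2.0 * math.sqrt(distance / acceleration)


def blend_overlap(z_speed, z_accel, headroom, xy_distance, xy_feed_rate, xy_accel):
    """Return (overlap_time, arc_height_above_safe_z) for one corner."""
    braking_time = z_speed / z_accel
    braking_distance = z_speed ** 2 / (2.0 * z_accel)

    if braking_distance <= headroom:
        # Rule 5: enough headroom, overlap the full Z deceleration.
        overlap, height = braking_time, braking_distance
    else:
        # Rule 6: braking starts below Safe Z; only the part above it overlaps.
        overlap = math.sqrt(2.0 * headroom / z_accel)
        height = headroom

    half_xy = xy_move_time(xy_distance, xy_feed_rate, xy_accel) / 2.0
    if half_xy < overlap:
        # Rules 7 and 8: small X/Y move, the arc becomes lower.
        overlap = half_xy
        height = 0.5 * z_accel * half_xy ** 2

    return overlap, height


if __name__ == "__main__":
    # (z_speed, z_accel, headroom, xy_distance, xy_feed_rate, xy_accel)
    print(blend_overlap(100.0, 1000.0, 10.0, 100.0, 200.0, 2000.0))  # large X/Y move
    print(blend_overlap(100.0, 1000.0, 2.0, 100.0, 200.0, 2000.0))   # tight headroom
    print(blend_overlap(100.0, 1000.0, 10.0, 0.5, 200.0, 2000.0))    # small X/Y move
```

The returned overlap time is what rule 10 would use to start the X/Y motion early. The 3rd order case from rule 13 is not covered here.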

_Mark

mark maker

Visual homing is usually just handled as G92 offsets, and LinuxCNC has that:

http://linuxcnc.org/docs/html/gcode/g-code.html#gcode:g92

_Mark

Chris Campbell

mark maker

> When commands are entered en-masse by pasting into telnet or via OpenPNP, in most cases all the commands can get into the queue before the first one has begun execution, and the full blend will succeed. Depending on the timing of events though, there is about a 20% failure rate, and some segments will end up with squared off corners instead of blending. I'm not aware of any way to make LinuxCNC defer execution until the queue is fully ready.

I'm so glad you clearly understand the important points. 😁

This is a common problem. Some controllers have a grace period, where they wait for more commands to come, before they start planning and motion. It's not only about blending, but also about premature ramp deceleration.

For OpenPnP you can wait until you get an M400, as a marker that the motion sequence is complete. M400 should immediately start the planning and motion. The only exception here is manual jogging, where OpenPnP cannot know if more jog steps will come, and therefore leaves it to the controller to start planning/motion (no M400 sent).

Just to see how common the problem is, see this for Duet:

https://github.com/Duet3D/RepRapFirmware/pull/471

> If each desired movement was passed as a set of parameters rather than individual segments, the full information for a move would be available in a single command and blends could not get skipped or mixed up.

I believe that the M400 marker gives you plenty of opportunity to do this. You can record the motion sequences until the M400 arrives (with timeout). Then you have the intent perfectly. You can then make anything out of the recorded motion, recognize the OpenPnP moveToLocationAtSafeZ() pattern easily and apply any blending and Z overshoot as needed.
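A recorder along those lines might look like this in Python. It is a sketch only: the class name, the 0.5 s timeout and the idea of passing the clock value in explicitly (so it can be driven by `time.monotonic()` in a real server) are illustrative choices, not anything OpenPnP or LinuxCNC prescribes.

```python
class MotionSequenceRecorder:
    """Collect motion commands until M400 (or a timeout) marks the sequence complete."""

    def __init__(self, timeout=0.5):
        self.timeout = timeout      # grace period in seconds, used when no M400 comes
        self._pending = []
        self._last_arrival = None

    def feed(self, line, now):
        """Add one received line; return the full sequence once M400 arrives, else None."""
        words = line.split()
        if not words:
            return None
        if words[0].upper() == "M400":
            return self._flush()
        self._pending.append(line.strip())
        self._last_arrival = now
        return None

    def poll(self, now):
        """Return the pending sequence if nothing arrived within the timeout (e.g. jogging)."""
        if self._pending and now - self._last_arrival >= self.timeout:
            return self._flush()
        return None

    def _flush(self):
        sequence, self._pending = self._pending, []
        return sequence


if __name__ == "__main__":
    recorder = MotionSequenceRecorder(timeout=0.5)
    for t, cmd in [(0.00, "G1 Z0"), (0.01, "G1 X10 Y5"), (0.02, "G1 Z-12"), (0.03, "M400")]:
        complete = recorder.feed(cmd, t)
        if complete is not None:
            print("plan and execute as one unit:", complete)
```

Once a complete sequence is returned, the server can inspect it as a whole (for example an up, lateral, down pattern) before handing anything to the motion planner.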

I'm rather reluctant to make OpenPnP speak some special dialect instead. There is a very strong Open Source idealism behind all this, compatibility, standardization, interchangeability. I'm allergic against all kinds of lock-in. Making controllers speak mostly standardized G-code is the way to go, and your work clearly follows that path too, which is great! 😁

Note, I'm not counting G64 as proprietary. This type of motion blending is clearly as intended by the NIST RS274 standard (sections "2.1.2.16 Path Control Mode", and "3.5.14 Set Path Control Mode - G61, G61.1, and G64"). Therefore, for OpenPnP to smartly support it would be ideal. Other Open Source controllers can then also implement G64 if they want to keep up. 😎 That's the spirit I'm after!

> Just some thoughts I had. For the time being, I think the standard behavior with G64 is pretty nice.

Definitely already much better than anything before.

Yep, also watch his cool videos:

From theoretical...

https://www.youtube.com/watch?v=hb4kSznglo0

... to practical:

https://www.youtube.com/watch?v=LTfe2ljmRpU

_Mark

Chris Campbell

mark maker

Hi Chris

interesting discussion.

The problem from an OpenPnP perspective is that - like in any good software - the overall problem is broken up into many sub-problems, then individually solved, and then synthesized back into the final composite solution.

One issue you actually already picked up is Backlash Compensation. It needs to be done. Could it be done on the controller instead? Sure. Can we rely on each controller to support it, and properly so? No. Would a controller that supports it know to do it right? Not sure. For instance, backlash compensation for X/Y must be done "in the air", before actually coming into contact with parts in feeders, or before pushing a part into solder paste, otherwise you can imagine what happens. This directly plays into motion blending, or transfer of intent, for instance. The controller likely says "hey, let's do that at the end of the move, so it can still be fluid", and that would be bad, obviously. So you would actually have to blend it into the down-going move, but assure it is done before actually arriving.

Because not all controllers support it, a solution must still be implemented inside OpenPnP. In order to support it, those moves must be decomposed. It would still be possible for it to be disabled and deferred to controllers that are known to do it right, but this makes the overall proposition of keeping the "intent" intact more complex; you would need to defer decomposition too.

Add to that other concerns like Runout Compensation, Rotation Wrap-around, Rotation Mode, including nozzle Alignment, Contact Probing, including Tool-changer Z calibration, and whatnot. We also want to be open for innovation.

Transferring all that application knowledge and functionality to the controller, I would say, is bad design. Separation of Concerns.

So IMHO, sending the right G-code to do all that is still the right way to go. And I see no reason why this should not be possible, using well-timed G64, G1 sequences (or any textual command language you like), assuming LinuxCNC is clever enough to actually support parametrizing that stuff (including acceleration) on the fly. I see no reason why externalizing such things into scripts, as "our friend" (as you call him) did, is any different, conceptually, from externalizing them into OpenPnP.

Also note that OpenPnP supports using multiple controllers for the same machine, so LinuxCNC could just be one of them, perhaps just driving the X, Y, Z but not the C axes. It must still work in a quasi-coordinated way (this is clearly not hard realtime coordination but enough for PnP).

_Mark

justin White

> One issue you actually already picked up is Backlash Compensation. It needs to be done. Could it be done on the controller instead? Sure. Can we rely on each controller to support it, and properly so. No. Would a controller that supports it, know to do it right? Not sure. For instance, backlash compensation for X/Y must be done "in the air", before actually coming into contact with parts in feeders, or before pushing a part into solder paste, otherwise you can imagine what happens. This directly plays into motion blending, or transfer of intent, for instance. The controller likely says "hey, let's do that at the end of the move, so it can still be fluid", and that would be bad, obviously. So you would actually have to blend it into the down-going move, but assure it is done before actually arriving.

> Add to that other concerns like Runout Compensation, Rotation Wrap-around, Rotation Mode, including nozzle Alignment, Contact Probing, including Tool-changer Z calibration, and whatnot. We also want to be open for innovation.

> Transferring all that application knowledge and functionality to the controller, I would say, is bad design. Separation of Concerns.

mark maker

You should perhaps read the links I provided. It would also help to test your machine with the Backlash Calibration, you can do that as soon as something moves. The fact that we need to support many different backlash compensation methods should make it clear(er) to you why it is not so simple.

> So just prior to moving negative LinuxCNC will snap it out.

Yes, LinuxCNC also cares about compensating while moving, because most NC applications do the actual work while moving (milling, laser cutting, 3D printing). And doing so at a constant and regulated feed-rate, which is adjusted to the work being done, so the stuff will not go up in smoke or blobs of plastic (i.e. generally slow, but not too slow). For some applications, the preprocessor will even add little extra run up paths, so the nominal feed-rate is attained before the tool hits the work piece, starts lasering, starts extruding, etc. All this also makes it easier to predict the needed backlash compensation.

Pick and Place is quite different though. We don't care at all about what happens during motion. We care about the position at the end. We don't even care about the position up at Safe Z. We care about the position down on the feeder, camera focal plane, PCB. On the other hand, we do care about CPH, we simply want the maximum speed from A to B. This means that we have completely different situations regarding momentum, belt flex, friction, overshoot etc. depending on how far a move is. This in turn means that for many pragmatic machines, the Backlash Compensation must be explicit, either one of the OneSided methods or DirectionalSneakUp. Only few machines (if really tuned for high speed/acceleration) can use the DirectionalCompensation. I have yet to see one.

https://github.com/openpnp/openpnp/wiki/Backlash-Compensation#backlash-compensation-methods
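To illustrate what "explicit" one-sided compensation means for the motion sequence, here is a deliberately simplified single-axis Python sketch. It is not how OpenPnP implements its methods (see the wiki page above for those); the function name, the positive approach direction and the shortcut of skipping the extra move when already approaching from the correct side are all assumptions made for illustration.

```python
def one_sided_moves(current, target, backlash_offset):
    """Positions to visit so that `target` is always approached in the positive direction.

    `backlash_offset` only needs to be at least as large as the real backlash.
    """
    approach = target - backlash_offset
    if current <= approach:
        # Already coming from the correct side with enough run-up: go directly.
        return [target]
    # Otherwise go past the target first, then approach it from the correct side.
    return [approach, target]


if __name__ == "__main__":
    print(one_sided_moves(0.0, 10.0, 0.5))   # approaching from below
    print(one_sided_moves(20.0, 10.0, 0.5))  # approaching from above
```

The extra approach move is exactly the kind of segment that has to be finished "in the air", before the nozzle comes down onto a feeder or the PCB.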

I'm not even sure DirectionalCompensation is a hallmark of the mechanically best/most expensive machines. Even if you have (balled) lead screws, for instance, they have quite some backlash, apparently (and "stiction" too). Heavy machines (made from metal) or those with extreme servos supporting hefty deceleration may more likely have overshoot, which means inertia is then overcoming friction, which reverses backlash, but likely not consistently across speeds and distances. I could also imagine that aggressively tuned closed loop systems are unpredictable, you never know which way they last nudge the motor. Maybe those with linear encoders can make it go away.

> "blend it into the downgoing move" is a different concept from what LinuxCNC uses.

Yeah, it was specifically my argument that LinuxCNC does not know about the peculiarities of picking and placing and therefore cannot and should not care about this. OpenPnP should. It is the only way I see we can mix advanced options like motion blending and advanced Backlash Compensation and top speeds. We just need a clever way of telling LinuxCNC how.

_Mark


Chris Campbell


mark maker

Nice, I see you understand perfectly what I meant. 😁

The "intent" reconstruction is only needed, if (or as long as) it

is impossible to teach OpenPnP to send the blending

commands/parameters directly. Can you provide examples of these

scripts? Is there anything "magic" in them that prohibits us from

doing the same math and sending the same commands from OpenPnP? Is

there a performance issue?

> Yes, it would certainly be bad design to have features like runout compensation, rotation wrap-around, rotation mode, nozzle alignment, contact probing, and tool-changer Z calibration transferred to the motion controller. But I didn't suggest any of that.

The problem is, that all of these are interwoven with the moves

we are talking about (or rather individual move segments).

All the rotation stuff must happen "in the air" only, for obvious

reasons, the contact probing should seamlessly happen on the down

leg (without stopping between positioning and probing), etc.

That's why I would like to keep the "brains" inside OpenPnP,

rather than to send "intent", with a gazillion of parameters

applying all those issues to it. Among other things it would be a

configuration and versioning nightmare to get the scripts on the

LinuxCNC side in sync with the OpenPnP version.

If we absolutely need certain scripts / canned cycles for

performance reasons, is there a way, perhaps, to declare them via

your client? So they could be added to the ENABLE_COMMAND, for

instance, or even generated on demand.

_Mark


Chris Campbell


justin White


mark maker

> Every time I read one of your posts I'm imagining you working on the software in one of those things.

Wish I was. Nah, the software we're making is much more boring.

_Mark


mark maker

Hi Chris,

thanks!

Just to be sure: the only three commands actually "doing something" are the last three in the sub, right?

So you are not adjusting G64, but working with waypoints

to ensure straight lines.

But what about moves where the blending is not wanted? Or where

it is wanted on one knee but not the other? Can you command G64 in

the middle of a move sequence and it will adapt without stopping

in between? And btw. did you manage to control acceleration in

mid-motion?

I'm talking about moves like feeder actuation moves (could also be a drag feeder for instance):

https://youtu.be/5QcJ2ziIJ14?t=248

Blinds Feeder cover opening:

https://youtu.be/dGde59Iv6eY?t=382

Nozzle tip changer moves:

I'm still convinced, OpenPnP should do the math and control waypoints and shape G64 from its side, as only it has the semantic knowledge of what is happening now, and in future versions. And frankly, it is much easier to code all that in Java, don't you think? 😁

Also I'd like for this to be machine universal, and available for

all users, even if they are not "ngc programmers". There is

existing UI to configure/capture the waypoints of these

feeder/nozzle tip changer motion sequences, and new UI could be

added for blending options, etc. If you want LinuxCNC to be a

valid option for many OpenPnP users, you cannot assume they are

able to hack their own canned cycles.

If it turns out we need to generate subs to make sure motion

sequences are executed in one fluid go, I hope we can declare them

on the fly, through your client, right?

_Mark


Chris Campbell


Chris Campbell

mark maker

Hi Chris

> The subroutines are read in from files on the LinuxCNC system, so no, my server would not be able to create them on the fly.

Are you sure? Have you tried? Isn't your server just another

command source, internally handled the same way as files?

> Yes, coding in Java is nicer, but it doesn't solve the problem of failed/missed blends.

Are you sure the failed blending isn't simply a network latency issue, that could be solved by proper buffering?

Like I said before the M400 could be your buffer flush signal:

collect everything in a string and only feed it to LinuxCNC once

you get M400, or once you did not receive new commands for 100ms

or so.

Every simple MCU controller that I have worked with has some sort of grace period to avoid rushing into premature deceleration. So I would be astonished if LinuxCNC didn't have one.
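The buffering idea above (collect lines, release them on M400 or after a short silence) could be sketched roughly as follows. This is a minimal illustration in Python, not code from OpenPnP or from Chris's server; the class name `CommandBatcher`, its methods, and the injectable `clock` are hypothetical, and the 100 ms default simply mirrors the figure mentioned above.

```python
import time


class CommandBatcher:
    """Collects G-code lines and releases them as one batch.

    A batch is released when an M400 arrives, or when no new line
    has been received for `idle_timeout` seconds (assumed 0.1 s).
    All names here are illustrative, not part of any real API.
    """

    def __init__(self, idle_timeout=0.1, clock=time.monotonic):
        self.idle_timeout = idle_timeout
        self.clock = clock          # injectable so the logic can be tested
        self.pending = []
        self.last_received = None

    def feed(self, line):
        """Add one line; return a finished batch (list) or None."""
        line = line.strip()
        if not line:
            return None
        self.last_received = self.clock()
        # Ignore a trailing ";" comment when looking for the flush signal.
        if line.split(";")[0].strip().upper() == "M400":
            return self._flush()
        self.pending.append(line)
        return None

    def poll(self):
        """Call periodically; flushes if the sender has gone quiet."""
        if self.pending and self.clock() - self.last_received >= self.idle_timeout:
            return self._flush()
        return None

    def _flush(self):
        batch, self.pending = self.pending, []
        return batch
```

A caller would pass every received line to `feed()`, call `poll()` from its main loop, and hand any returned batch to LinuxCNC in one go. Whether LinuxCNC then blends the batch is a separate question that this sketch does not answer.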

_Mark


mark maker

> I would still like to test this on a real job log sometime...

You can load the sample test job in OpenPnP and run it:

- Replace your machine.xml with this one:

https://github.com/openpnp/openpnp/blob/test/src/test/resources/config/SampleJobTest/machine.xml

- It simulates a more realistic test machine, including simulated imperfections like nozzle run-out, non-squareness and camera vibrations. Unlike the default machine.xml it also uses real Z heights. It is connected against an internal G-code controller (GcodeServer)

- Start OpenPnP. Change the driver to connect to LinuxCNC

instead of the GcodeServer.

- Load the test job:

https://github.com/openpnp/openpnp/wiki/Quick-Start#your-first-job

- Run it.

- It should now generate G-code in real time. It reacts to the simulated cameras of course, rather than to anything LinuxCNC really does, but because of the simulated imperfections it needs all the right compensations, part alignment corrections, camera settling to wait for the camera to stop shaking, etc. Unfortunately, there is no backlash simulation yet ;-) but you can configure backlash compensation anyways.

- You can configure the machine speed on the axes, to make it

more realistic, e.g. like your planned machine.

- You can configure the imperfections on the Simulation

Mode tab.

- Alternatively, you can record a G-code script with the Log G-code option on the driver. However, IMHO this is not a real test, as it does not simulate the timing and the hand-shaking.

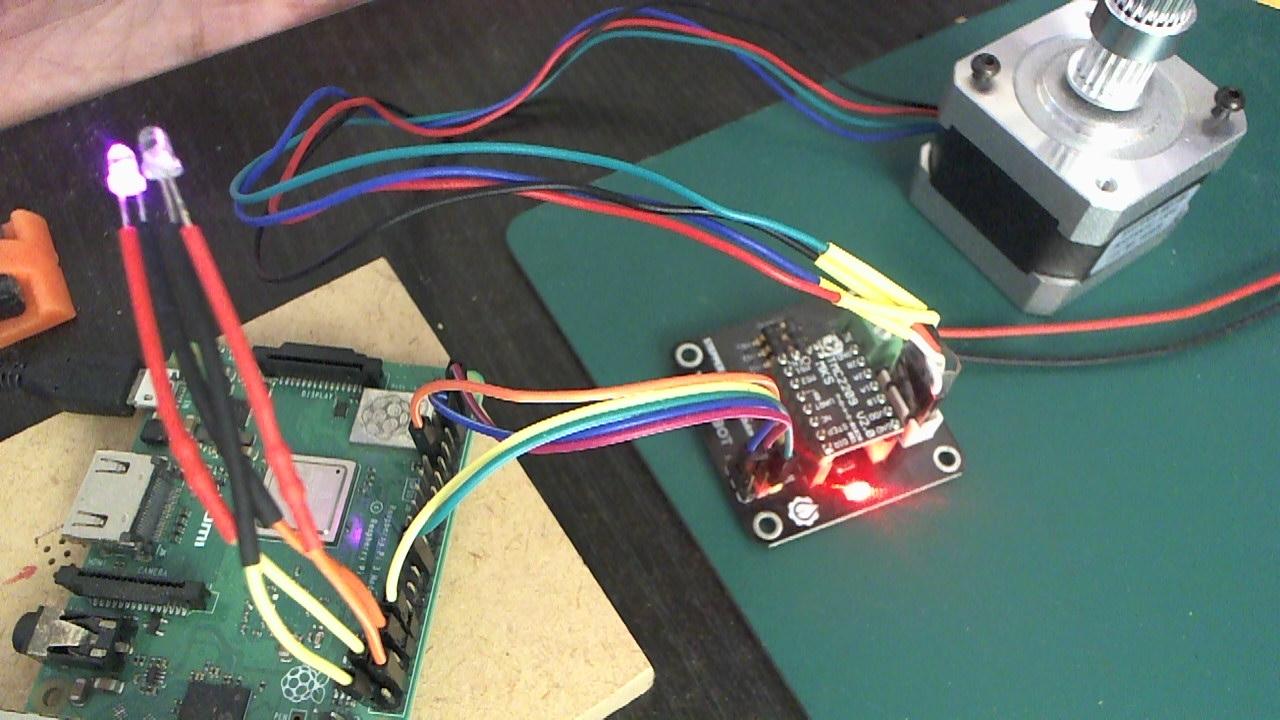

I did something similar, i.e., simulate a job, and at the same time drive a real controller/stepper, here (albeit for a different testing purpose of course):

_Mark


Jan

On 16.05.2023 16:53, mark maker wrote:

[...]

> 1. Replace your machine.xml with this one:

> https://github.com/openpnp/openpnp/blob/test/src/test/resources/config/SampleJobTest/machine.xml

> 2. It simulates a more realistic test machine, including simulated

> vibrations. Unlike the default machine.xml it also uses real Z

> heights. It is connected against an internal G-code controller

> (GcodeServer)

developers Wiki page?

Jan

Chris Campbell

When I tried with the simulated machine.xml, it seems like the simulated GCodeDriver will always be in effect regardless of any other settings made in the GUI. I made all the required settings the same as I did before, but it never connects to my server and the log shows lines like:

GcodeAsyncDriver DEBUG: simulated: port 38445 commandQueue.offer(M204 S187.71 G1 X6.2580 F600.00 ; move to target, 5000)

The g-code logging does work though, so I'll try using that for some

experiments.

Regarding your earlier questions, my server uses a feature called NML

(Network Message Layer) to communicate with the underlying LinuxCNC

system over the network. Any interface that manipulates a LinuxCNC

machine will use this in some way or another, typically via Python

bindings but mine uses C++ directly. Messages like openfile, run,

pause, resume, abort, start jog, stop jog, real-time feedrate

adjustments etc are passed over a socket, which is typically running

on the same computer as the LinuxCNC core but could also be a remote

endpoint, or multiple remote endpoints. In any case it's not handled

the same way as files.

Among the messages that can be sent is one called MDI (Manual Data

Input). This allows individual g-code commands to be executed

independently of any program, which provides a convenient way to

perform ad-hoc operations or make adjustments. But by far the bulk of

commands will be executed by reading a complete program file up-front

and running through the file with full knowledge of all future

commands. Since the usage scenario for MDI messages is occasional

tweaks that the operator would be typing in by hand, there was never

any notion that these would be expected to be processed as a coherent

group. They will always be executed immediately, and no amount of

"proper buffering" by my server can prevent that.

So although the capability to provide individual commands from a

remote system does exist, it was not intended to be used as the main

source of instructions. LinuxCNC can read in programs many megabytes

in size, so the 'simple MCU controller' approach of drip-feeding

commands from some other system was probably never even conceived of.

Consequently, a 'grace period' is also a foreign concept.

Fortunately the 'o-code' subroutines allow a way to intentionally

group commands together, and have reliable blending. The catch is that

these must be files on disk. So the awkward workaround my server ended

up having to do is create a temporary subroutine file on disk, and

then issue an MDI command (eg. "o<tmp> call") to execute the

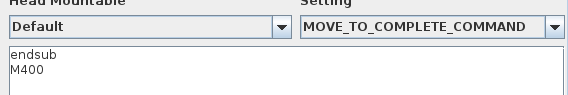

subroutine. My server will group commands inside beginsub/endsub

keywords. To ensure jogging via OpenPNP works in a timely manner (and

that clients disconnecting without giving the 'endsub' don't cause

problems) the subroutine will be finalized after some timeout even

when no further commands are given. It's possible that creating a file

as a regular job and running it might work too, but that has more

overhead involved so I suspect it would be slightly slower.

This is probably more than you cared to know about the innards of

LinuxCNC, but it might help explain some of the reasoning that went on

in my experiments.
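For readers who want to picture the temporary-subroutine workaround, here is a rough sketch in Python. It is not the server's actual code (which is C++ and not shown in this thread). The function name `write_temp_subroutine` is hypothetical, and the file layout (`o<name> sub` ... `o<name> endsub`, then `M2`, in a file called `<name>.ngc`) follows the usual LinuxCNC convention for named subroutine files as far as I know it; please check it against the LinuxCNC O-code documentation. Sending the returned MDI string is left out, since that depends on the NML client.

```python
import os
import tempfile


def write_temp_subroutine(commands, directory, name="tmp"):
    """Write `commands` as a named o-word subroutine file.

    Returns (path, mdi_call). The same file name is overwritten on
    every call, as described in the post above. `directory` is assumed
    to be on LinuxCNC's subroutine search path (e.g. a tmpfs mount).
    """
    lines = [f"o<{name}> sub"]
    lines += [f"  {c}" for c in commands]
    lines += [f"o<{name}> endsub", "M2"]
    path = os.path.join(directory, f"{name}.ngc")
    # Write to a scratch file first and rename it, so a reader never
    # sees a half-written subroutine.
    fd, scratch = tempfile.mkstemp(dir=directory, suffix=".part")
    with os.fdopen(fd, "w") as f:
        f.write("\n".join(lines) + "\n")
    os.replace(scratch, path)
    return path, f"o<{name}> call"
```

The returned call string would then be issued as a single MDI command, so that the whole group is read from the file and can be blended.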


mark maker

> This is probably more than you cared to know about the innards of LinuxCNC, but it might help explain some of the reasoning that went on in my experiments.

No, on the contrary, it is the only way to be sure, in order to rule options out, thanks. 😁

> Among the messages that can be sent is one called MDI (Manual Data Input). This allows individual g-code commands to be executed independently of any program, which provides a convenient way to perform ad-hoc operations or make adjustments.

Are these strictly one line only? No way to send some escaped line delimiters? Any chance for a PR to add that capability?

Even if the temp file approach turns out to be the only way, I'm

not overly concerned about performance, on a Linux system. Sharing

files is handled extremely efficiently (basically via virtual

memory page mapping), and if M400 delimiting is used, the overhead

is only incurred once per motion sequence. Compared to the usual

physical machine delays, this is nothing. The only small concern

would be a shoddy implementation on the LinuxCNC side, perhaps not

properly freeing files, memory, etc. as we would be generating,

using and dismissing (tens of) thousands of scripts.

> LinuxCNC can read in programs many megabytes in size, so the 'simple MCU controller' approach of drip-feeding commands from some other system was probably never even conceived of.

What about this "interactive session" mdi usage?

http://linuxcnc.org/docs/devel/html/man/man1/mdi.1.html

_Mark

Chris Campbell

multiple commands are required, normal procedure would be to read them

from a file. The man page you linked to is for a "shell" type of

utility, that again only accepts one line at a time.

https://forum.linuxcnc.org/48-gladevcp/29354-multiple-mdi-commands-in-a-vcp-action-mdi-widget

Yes I think performance will be fine. The location for subroutine

files can easily be set to a tmpfs path. I'm overwriting the same file

name every time, so I assume it's not occupying much new memory over

time. This Raspberry Pi is kinda gutless but it seems to be running

things surprisingly well.

Here is a run with two boards of the simulated job where I pasted the

log into my server to replay it. I omitted set-acceleration commands

because they break blending, so acceleration is the same for all

movements.

https://youtu.be/F68xNnZgNAk

mark maker

> The man page you linked to is for a "shell" type of utility, that again only accepts one line at a time.

interactive session

$mdi

MDI> m3 s1000

MDI> G0 X100

MDI> ^Z

$stopped

_Mark

justin White

$ mdi

MDI> G0 X10

Traceback (most recent call last):

File "/usr/bin/mdi", line 40, in <module>

mdi = eval(input("MDI> "))

^^^^^^^^^^^^^^^^^^^^

File "<string>", line 1

G0 X10

^^^

SyntaxError: invalid syntax
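The traceback shows the typed line being passed through `eval()`, which parses it as a Python expression. G-code is not valid Python, hence the `SyntaxError`. The small Python sketch below only reproduces that behaviour and contrasts it with treating the input as plain text. The two helper names are made up for illustration, and this is not a patch for the actual `mdi` script.

```python
def parse_like_traceback(line):
    """Mimics eval(input("MDI> ")): the text is parsed as a Python expression."""
    return eval(line)


def parse_as_text(line):
    """Treats the typed text as an opaque G-code string."""
    return line.strip()


try:
    parse_like_traceback("G0 X10")
except SyntaxError as exc:
    print("eval failed:", exc.msg)

print(parse_as_text("G0 X10"))
```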


Chris Campbell

https://github.com/LinuxCNC/linuxcnc/blob/master/src/emc/usr_intf/axis/scripts/mdi.py

Calling subroutines in separate files is also more convenient than having to edit the main program. Right now I'm making a soldering bot for through-hole pins, where the actual repetitive soldering motion will be a subroutine that I can highly optimize as a separate file, and the main program can remain succinct and easy to set up for different boards, eg.:


Mark

> On LinuxCNC gcode commands are expected to be read from some kind of disk file 99.9% of the time, and the socket connection is for non-gcode controls like start, pause, abort, jog etc. I think MDI would have been created merely as a convenience to not require a disk file for the occasional adjustment where manual jogging wasn't quite precise enough.

Agree. I have since browsed LinuxCNC source a bit and I guess it

has evolved over many iterations/generations, has "sedimentary"

layers upon layers, including, if I understand correctly, quite

some Python stuff calling back and forth from/to C/C++ with some

ugly looking string based bindings. Does not make it any simpler

to understand, let alone modernize it. This seems to confirm what

you say: LinuxCNC is designed to spool off prerecorded G-code

files, where it doesn't matter if some cumbersome (and slow)

preprocessing takes place. Guess you'd have to live with generated

temp files.

Needless to say, this is not ideal. The same brain effort

invested into extending/developing a modern MCU based controller

firmware, i.e. to add blending, combine it with 3rd order motion

control (or similar), looks ever more promising to me.

_Mark


justin White

btw that utility does actually work for me, at least on the RPi.

> Needless to say, this is not ideal. The same brain effort invested into extending/developing a modern MCU based controller firmware, i.e. to add blending, combine it with 3rd order motion control (or similar), looks ever more promising to me.


mark maker

> Honestly the work Chris has done is more than I expected to be necessary and it seems he's done a hell of a job.

In case this was somehow unclear (was it?): in no way was it intended to denigrate Chris' work, or whatever. On the contrary, I was asking myself if his obvious talent was somewhat "wasted" on the wrong approach. 😇

> Just out of curiosity, what CNC controllers actually support the "send me Gcode" idea, besides the 3D printer firmwares?

I only know Open Source controllers. It could be argued that

Grbl, the grandfather of them all, and TinyG are not per se 3D

printing controllers, AFAIK they are used for NC routers first and

foremost.

> AFAIK this is a relatively new trend that started with 3D printers

Actually, the "reactiveness" is not needed for 3D printing, at

all.

But I guess it is a very important hallmark of any robotic use

case that employs computer vision or other forms of "sensing",

"reasoning" and "reacting" in ways beyond mere touch probing. It

just opens up massive new possibilities. Think machine learning.

I'm not saying these use cases can't be done with LinuxCNC. But

based on the facts available to me, after having browsed the code

a bit, this would be highly proprietary, in many ways rigid and

old style (files only?), unnecessarily complex, and still

quite limited, for instance in not supporting third order motion

control, or even fluid acceleration control. And those features

would be almost impossible to add, given this system is clearly

"grown organically" with a gazillion layers and module

dependencies.

The "proprietary" in there is also very important to me. Open

Source can only thrive if interfaces are more or less

standardized, as G-code is. So stuff in every shape and form can

be put to work together. So an enthusiast community can evolve an

NC router into a 3D printer, into a laser cutter, into a PnP

machine. "Entry cost" is also important. The simplest controller

can be an Arduino and driver shield for a few bucks.

All this leads me to conclude that Chris should not spend too much time on making this work in overly elaborate ways. There would be more worthwhile endeavors! 😎

_Mark


justin White

> All this leads me to conclude that Chris should not spend too much time into making this work in too elaborate ways. There would be more worthwhile endeavors! 😎

> The simplest controller can be an Arduino and driver shield for a few bucks


mark maker

Thanks for the effort. I think, I understand all that more or less, and still come to a different conclusion, maybe because ultimately, I am talking about finding a nice lean and mean solution for PnP, not about finding new use cases for LinuxCNC.

But frankly, that Remora project (quote:

"Remora was primarily developed to use LinuxCNC for 3D

Printing") is like rectal dentistry. It can be done, I'm

sure, and it is amazing that it can be done. But it is not

reasonable to do so. The supported

controllers are perfectly capable of 3D-Printing all by

themselves, offline, while you can do something else on your

computer, or switch it off. I for one have two 3D-printers, and I

would not want to only use one at a time.

And yes, after browsing the source code, I do believe that

everything LinuxCNC can do

in its motion planner core, can be done on these modern

32bit controllers. We'd need a reasonable subset of (inverse)

Kinematics. Plus a reasonable subset of the G-code

interpreter. You would, of course, do away with all the

Python back and forth, intermediate file handling, and that nasty

user space to kernel interface, plus the HAL would have to be much

more focused. You have to realize, that when LinuxCNC was

implemented (with about these core capabilities), PCs' CPUs were

weaker than today's MCUs (at least those with FPUs, although I'm

not sure the first LinuxCNC PCs necessarily had FPUs). The lines

even blur more if you talk about the Raspi.

Note that I still left out a whole lot of the LinuxCNC universe.

If you absolutely insist on using an FPGA card for Servo closed

loop driving, instead of buying a dedicated servo driver, then

LinuxCNC it is.

The Arduino I only mentioned because it is actively used with OpenPnP now (Grbl). Certainly I would not build a new controller firmware with it. 😇

_Mark


justin White

> But frankly, that Remora project (quote: "Remora was primarily developed to use LinuxCNC for 3D Printing") is like rectal dentistry. It can be done, I'm sure, and it is amazing that it can be done

> You have to realize, that when LinuxCNC was implemented (with about these core capabilities), PCs' CPUs were weaker than today's MCUs (at least those with FPUs, although I'm not sure the first LinuxCNC PCs necessarily had FPUs).

> If you absolutely insist on using an FPGA card for Servo closed loop driving, instead of buying a dedicated servo driver, then LinuxCNC it is.


Bert Lewis

On May 19, 2023, at 5:33 AM, justin White <blaz...@gmail.com> wrote:

<Selection_1200.png>


mark maker

It almost seems, you deliberately try to misunderstand and misrepresent what I'm saying. 😭 I hope others following this, read what I really said, and in the context.

The retrofit argument I don't get. Reading this group for years, I remember people have retrofitted (old) PnP machines with the common MCU based controllers many times. Why should LinuxCNC be easier?

Remember: Unless I said otherwise, I was and still am always talking about PnP. Conversely, I do see how LinuxCNC can be an ideal candidate for retrofitting a router etc. as most of its peripheral software and GUIs seem ready-made for that (I'm not a router guy, so this may be a false impression).

But make no mistake: I do welcome LinuxCNC as a valuable option for OpenPnP. I provided help and information to Chris in many iterations. Yes, I'm a tech guy, I want to know facts, rather than opinions, so I asked hard questions too. Chris provided the answers quickly and very competently. We might have had different opinions about some things, but as far as I understood, this was all in the realm of constructive tech talk. That is for Chris to say.

Yes, I do become extra critical, when OpenPnP is supposed to

somehow handle LinuxCNC differently than other controllers. When

we are effectively asked to break up the existing motion model and

partially transfer it to LinuxCNC, and in proprietary ways. I'm

not completely ruling such solutions out, but it must provide a

super-duper advantage, and no alternative way to achieve the same

result, in order to justify the breaking of the current model. So

I'm asking hard questions about the super duper advantage, and

about the complete ruling-out of alternative ways, and when I

don't see both, I do speak my mind.

My current take is that Chris can get the connection to LinuxCNC working through G-code. In order to support stable blending (and likely also to avoid premature deceleration), he likely needs to write the recorded commands into script files and then execute them when M400 is received, or after a small timeout (for manual jogging).

Then OpenPnP would be extended (by me) to support the G64

blending parametrization on the fly (corners with and without

allowable blending), and to control how far the Z overshoot should

be (effective Safe Z), based on X/Y move distance, and Z travel

(run up) at the beginning and end. All that can be communicated

through standard G-code. These cool options would be configurable

in the OpenPnP GUI, and thus available to non-expert users.

The blending is a big speed winner for Pick & Place, so the

LinuxCNC option would be duly noted, once the first videos are

published. I do like this all the more, because it actually

benefits the simpler DIY machines too, those would be able to run

faster, due to much reduced vibrations.

Anything more elaborate, like transferring "intent" for larger

move sequences, and by necessity then also transferring

responsibility for all the different backlash compensation

methods, runout-compensation etc. pp. as listed earlier, and thus

breaking up OpenPnP's motion model, I don't see. I would have been

more open to such options, if LinuxCNC turned out to be sooooo

advanced in comparison, that it would have made sense to

practically "bow to it". Based on my current information, it does

not. That's all I'm really saying.

_Mark


mark maker

> When I tried with the simulated machine.xml, it seems like the simulated GCodeDriver will always be in effect regardless of any other settings made in the GUI.

Sorry, yes, I completely forgot about how this works inside. Will fix it.

_Mark

mark maker

Hi Chris,

It is now possible to connect to a real controller, while still simulating everything else. Details here:

https://github.com/openpnp/openpnp/pull/1561

You need to switch off the checkbox:

Please download/upgrade the newest test version, allow

some minutes to deploy.

https://openpnp.org/test-downloads/

Note, I wasn't actually able to test with a real external controller, so your giving this a quick test job run and reporting back would be very welcome, thanks! 💯 😁

_Mark

Chris Campbell

https://youtu.be/cxrg2g4NGII

Since this ping-pong interaction actually tests the M400 properly, it uncovered some issues where an unexpected endsub would be inadvertently passed through to LinuxCNC, and the lack of 'ok' reply for beginsub/endsub would cause OpenPNP to timeout waiting. After fixing these issues it seems to be fine, although it took many hours to get a clean run for the video. Even after setting "ideal machine" and disabling all the simulated problems like runout, noise, homing error etc I still had huge problems with constant errors from the vision complaining it couldn't find tape fiducials or see the picked part with the bottom cam.

After a looooong time I realised the problem was that the vision

detection will trigger immediately when the M400 reply arrives, but

the simulated camera frame might not yet have caught up. This was

caused by me lowering the axis feedrates to closely match the (slow!)

display of the RPi LinuxCNC, in an attempt to make the video look

better. The fix was to just make the OpenPNP side move faster, so that

the camera frames would always be ready for detection when the M400

arrived. So in the video the two sides are not synchronized very well,

but at least I didn't have to keep manually prodding it to get a job

finished. This would not happen in an actual installation where the

camera always sees reality.

After running some real job gcode with it, the main inconvenience I see is that acceleration settings cannot be handled well. The reason is that changing the acceleration will interrupt blending. This is another consequence of LinuxCNC being created for a different world, where the tool does not pick up and carry things, and the mass of an endmill is minuscule compared to the mass of the machine itself. So the acceleration for each axis would typically be defined just once after building the machine, the only consideration being that the motors can actually perform the demanded acceleration without losing steps. Anyway, acceleration can be set only once per sequence of contiguous blended segments.

To ensure that blending occurred in my video I set the acceleration

manually before the job, low enough that blended corners will be

visible, and the job does not issue any acceleration changes at all. I

suspect it would be ok to do this for real jobs, either by setting it

low enough to accommodate whatever the heaviest package requires and

taking a speed hit on the smaller parts, or by setting up a separate

job where smaller parts are free to accelerate at warp speed.

I could modify my server so that only one acceleration per 'batch'

would actually be applied, and the move would remain blended. But this

would then rely on OpenPNP supplying the first acceleration as one

that could be suitable across the whole movement, which I don't think

is how it works at all right now. It also starts to look like passing

an intent which we don't talk about. I'm kinda thinking this type of

workaround is not really necessary, although I have never actually

used a PnP to know how annoying it would be to not have accelerations

individually tailored for every move.


mark maker

Great news, Chris, and thanks for the testing!

> After running some real job gcode with it, the main inconvenience I see is that acceleration settings cannot be handled well.

I think I could optimize the Motion Control Type EuclideanAxisLimits

in OpenPnP, where the axis accelerations are constant as long as

the desired speed factor does not change. Consequently, I could

make sure the acceleration command is only sent once, when the

speed factor was deliberately changed by code (or user).
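The "send the acceleration only when it really changes" idea can be illustrated with a small filter. This is a hedged sketch in Python, not OpenPnP code (there it would live in the Java driver). The class name `AccelerationFilter` is hypothetical, and the `M204 S<value>` form is taken from the log line Chris posted earlier, not from any LinuxCNC command.

```python
import re

# Matches an "M204 S<number>" word, as seen in the logged command above.
ACCEL_WORD = re.compile(r"\bM204\s+S([0-9.]+)\s*", re.IGNORECASE)


class AccelerationFilter:
    """Drops repeated M204 S<value> words so that the acceleration is
    only sent when it differs from the last value sent."""

    def __init__(self):
        self.current = None

    def filter(self, line):
        m = ACCEL_WORD.search(line)
        if not m:
            return line
        value = float(m.group(1))
        if value == self.current:
            # Unchanged: remove the M204 word, keep the rest of the line.
            return ACCEL_WORD.sub("", line, count=1).strip()
        self.current = value
        return line
```

With a constant speed factor, only the first move of a sequence would then carry an acceleration command, so the following moves would not interrupt blending for that reason.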

But I would need an M-code that sets acceleration limits for individual axes, something like this:

https://reprap.org/wiki/G-code#M201:_Set_max_acceleration

Change of speed factor only happens at specific waypoints:

- In normal Pick&Place it is only changed when a

particularly delicate or heavy part is picked (Speed % setting

on part), and then reset once that part is placed. Both at

still-stand, so no penalty there.

- In the nozzle tip changer motion sequence, as well as in

PushPullFeeder and BlindsFeeder motion sequences, the speed

factor may change on individual legs of the motion sequence, but

these are so rare it won't matter and I guess we don't want

blending there anyways.

https://github.com/openpnp/openpnp/wiki/GcodeAsyncDriver#motion-control-type

Regarding G64: I can parametrize G64 (P word) specifically for the OpenPnP moveToLocationAtSafeZ() move sequence, along with the optimal Z overshoot. These move sequences go from standstill to standstill (except in the case of contact probing, which I guess is difficult anyway). I'm not yet sure how the G64 P word must be set to ensure a straight Z move up until it reaches nominal Safe Z, and only then allow it to blend. The inverse consideration applies at the diving end. We don't want it to knock over parts that were already placed: think of a large electrolytic capacitor on the nozzle being inserted between two already placed ones. Also note that the optimal overshoot curve may be lopsided if the Z raise/dive is asymmetric, or even twisted (S-shaped) on dual-nozzle shared-Z machines.

I'm also not entirely sure whether LinuxCNC can blend more than two segments. I think I've seen some evidence in the source that it can only blend the deceleration/acceleration phases of two subsequent segments, but there could be some overarching optimization I missed. If it can't blend beyond two segments, we can work with extra waypoints at normal Safe Z, and just allow full Safe Z Zone blending via the P word.

to fix your problems. Especially the section on Satisfying Build Dependencies." 😕

_Mark

justin White

If I understand this correctly:

I could just install the user space part on my Linux, right? This would likely give me the best graphical simulation speed.

Or is a VM better? If yes, which .iso should I use? And would a VM (hosted on a powerful PC) be fast enough to simulate graphically with true speeds (unlike the Raspi)?


Chris Campbell

Hi Mark,

Any further 'beginsub' will be ignored if a buffer is already in progress. Full details are outlined in my github readme near the bottom.

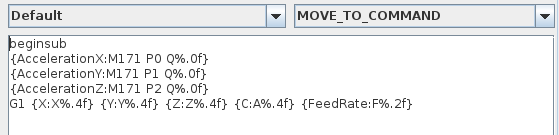

To set acceleration I'm using M171 which simply calls a bash script I made with file name "M171", and passes up to two parameters. See "M Codes" in the LinuxCNC docs.

https://linuxcnc.org/docs/html/gcode/m-code.html#mcode:m100-m199

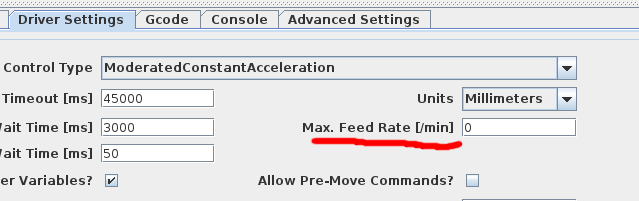

Currently I'm only using a single parameter and setting the same acceleration value for all axes. Since this functionality only allows two parameters, setting all three axes individually would require separate commands. It's no problem to make M172, M173 etc. to handle each axis individually, or maybe use one parameter for XY and one for Z. I'm assuming the X and Y accelerations would not actually be different? E.g.:

M171 {AccelerationXY:P%.0f} {AccelerationZ:Q%.0f}

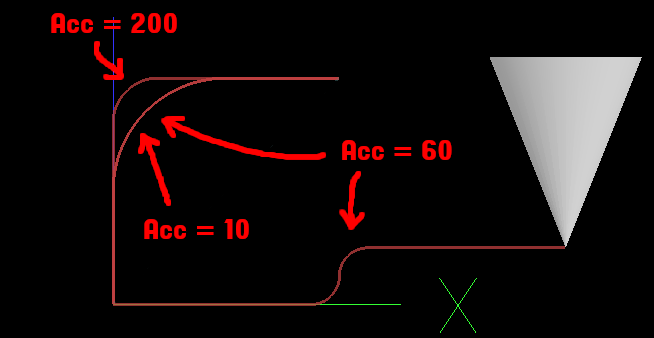

Yes, LinuxCNC will not blend more than two segments, that's correct. The path will pass through the midpoint of each segment tangentially. This is a little more primitive than would be optimal in many cases, on the other hand it makes waypoints a predictable way to direct the path around obstacles. For example in this screenshot, all four paths start from x0y0. The three paths on the left are:

g1 z20

There is no difference between accelerations 10 and 60 because the path is constrained to pass through the midpoint of the up-going segment. If there was more room (longer segments) the acceleration of 10 would follow a larger radius.

g1 x20

g1 z5

g1 x40

Note here that the radius is much smaller than the other case where acceleration was also 60, because it is constrained to be vertical at the midpoint. Obeying this constraint affects velocity. The line on the right needed to slow down to about half the velocity to achieve that tighter radius within acceleration limits. Anyway, for segments that start and end a blend sequence, we can depend on the first (or last) half of those segments being straight. So for example if the safe-Z was 15mm, you could set the Z-rise to 30mm and not have to worry about what blending will do.
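The rule of thumb above (half of the first or last segment stays straight) can be checked with a little arithmetic. This is only a sketch under that stated assumption; the function names are made up for illustration and nothing here calls into LinuxCNC.

```python
def straight_length(segment_length):
    """Portion of a first/last blend segment that stays straight.

    Assumes the blend never reaches past the segment midpoint.
    """
    return segment_length / 2.0


def min_z_rise(clearance):
    """Shortest Z segment whose straight half still covers the clearance."""
    return 2.0 * clearance


def clears_safe_z(z_start, z_top, safe_z):
    """True if the straight half of the rise from z_start reaches safe_z."""
    return z_start + straight_length(z_top - z_start) >= safe_z


rise = min_z_rise(15.0)                 # safe-Z clearance of 15 mm
ok = clears_safe_z(0.0, 30.0, 15.0)     # rise to 30 mm: straight up to 15 mm
too_short = clears_safe_z(0.0, 20.0, 15.0)  # rise to 20 mm: only 10 mm straight
```

The same check applies mirrored at the diving end, using the last segment of the sequence.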

If your Linux is a Debian variant I think those uspace installs would work fine (unless you want to actually spin a motor smoothly), although I have not tried that myself. There is an RPi image floating around somewhere which might also be a convenient way to quickly try it. Building on Debian is actually not too bad, the section about satisfying build dependencies just lets you know how to generate a list of required packages which you can then pass to "apt install". My main development computer is Fedora which is *not* easy to build LinuxCNC on, which is why I'm using RPi for these tests (and also because I want to run my solderbot with RPi). If your Linux is not a Debian variant and you don't have any RPi around, then I suppose VM it would be.


justin White


mark maker

> Best bet if you are running Debian Bookworm or maybe Ubuntu Kinetic is just install it from apt since it's in the repos

> $ apt install linuxcnc-uspace linuxcnc-uspace-dev

Thanks Justin, will investigate (my Kubuntu LTS is too old, but maybe it is time to upgrade...).

_Mark


mark maker

> I'm assuming the X and Y accelerations would not actually be different?

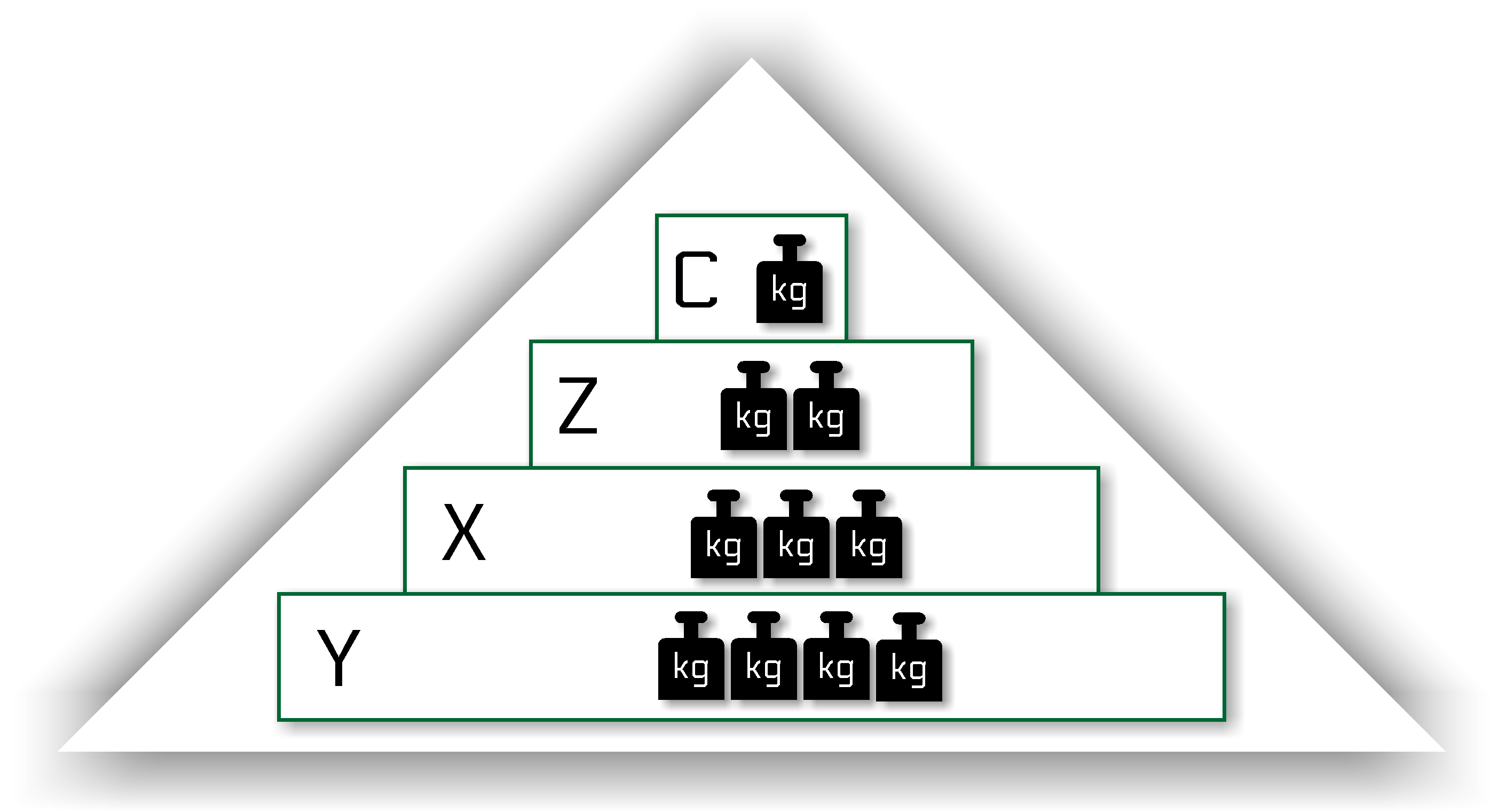

They will often be quite different, as we want to accelerate as quickly as possible. In a Cartesian machine the whole portal is much heavier than just the head, so unless a design compensates with a much stronger Y motor, the maximum possible Y acceleration will be much lower than the X acceleration.

https://makr.zone/thinking-machine/82/

So we really need individual control for best performance.

> See "M Codes" in the LinuxCNC docs.

This is really a strange limitation. In that case I suggest using

M117 P<axis/joint> Q<accel>

with the axes/joints numbered according to "Trivial Kinematics":

http://linuxcnc.org/docs/html/motion/kinematics.html#_trivial_kinematics

> Yes, LinuxCNC will not blend more than two segments, that's correct.

Good to know.

> So for example if the safe-Z was 15mm, you could set the Z-rise to 30mm and not have to worry about what blending will do.

That would not be optimal for short distances. You'd want part of the deceleration taking place in the straight leg, otherwise the excessive blending might actually lengthen the overall move. But perhaps we can still rely on the blending algo to do the right thing, because it simply cannot blend more, due to the short X/Y deceleration/acceleration phases. Or, like I said, we simply add waypoints at nominal Safe Z. The following also shows how it could be asymmetric (just hand drawn):

_Mark


justin White


mark maker

Interesting video. If these methods are still the same ones used today, then different accelerations in X, Y, Z are likely not really useful. I thought it used parabolic blending, but it actually uses circular arcs (constant R, constant max feed-rate), which means accelerations will have to be uniform across axes (it likely takes the minimum of all involved axes).
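To make the consequence of circular-arc blending concrete, here is a small sketch. It assumes (as guessed above, not confirmed from the LinuxCNC source) that the blend uses the minimum acceleration of the involved axes, and it uses the ordinary centripetal relation a = v²/r for a constant-speed arc. The axis values are invented for illustration.

```python
import math


def blend_acceleration(axis_accels):
    """Uniform acceleration usable on the arc: the weakest involved axis.

    This 'minimum of all axes' rule is an assumption, not LinuxCNC's API.
    """
    return min(axis_accels.values())


def max_arc_speed(radius, accel):
    """Highest constant speed on a circular arc, from a = v**2 / r."""
    return math.sqrt(accel * radius)


accels = {"X": 3000.0, "Y": 1500.0, "Z": 2000.0}  # hypothetical limits
a = blend_acceleration(accels)       # Y limits the whole blend
v_wide = max_arc_speed(4.0, 100.0)   # radius 4, accel 100 -> speed 20
v_tight = max_arc_speed(1.0, 100.0)  # quarter the radius -> half the speed
```

Under these assumptions a higher X limit buys nothing inside the blend, and a four times tighter radius costs half the speed at the same acceleration.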

Chapter "Future Work": Is it still limited to X, Y, Z? That would be bad, as we routinely need to rotate nozzles while moving. But I guess that was in the test job, and it still blended, right?

All important to know!

_Mark


Chris Campbell

But anyway yes, a format like this would work fine:

M171 P<axis> Q<accel>
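As a rough illustration of that format, the following sketch generates one command per axis. It assumes the axes are numbered in the default trivial-kinematics order (X=0, Y=1, Z=2, A=3, ...) and that M171 is the user-defined M-code in use; the helper names and acceleration values are hypothetical.

```python
# Default trivkins coordinate order; the letter's position is taken as
# the axis/joint number (an assumption for non-default configurations).
TRIVKINS_AXES = "XYZABCUVW"


def axis_index(letter):
    """Map an axis letter to its number, e.g. 'Y' -> 1."""
    return TRIVKINS_AXES.index(letter.upper())


def accel_commands(accels, mcode="M171"):
    """Build one '<mcode> P<axis> Q<accel>' line per axis."""
    return [
        f"{mcode} P{axis_index(letter)} Q{accel:.0f}"
        for letter, accel in accels.items()
    ]


lines = accel_commands({"X": 3000.0, "Y": 1500.0, "Z": 2000.0})
for line in lines:
    print(line)
```

Because user-defined M-codes only take the P and Q words, one line per axis is needed; the three lines could be sent together whenever the speed factor changes.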

Regarding the simultaneous blending of the A axis, yeah I also read somewhere that blending would not function when more than XYZ were involved. So I was expecting to see that in my experiments, but it looks like the 4th axis is moving just fine, at least on the GUI display. Not really sure what's happening there, maybe that info is old...?


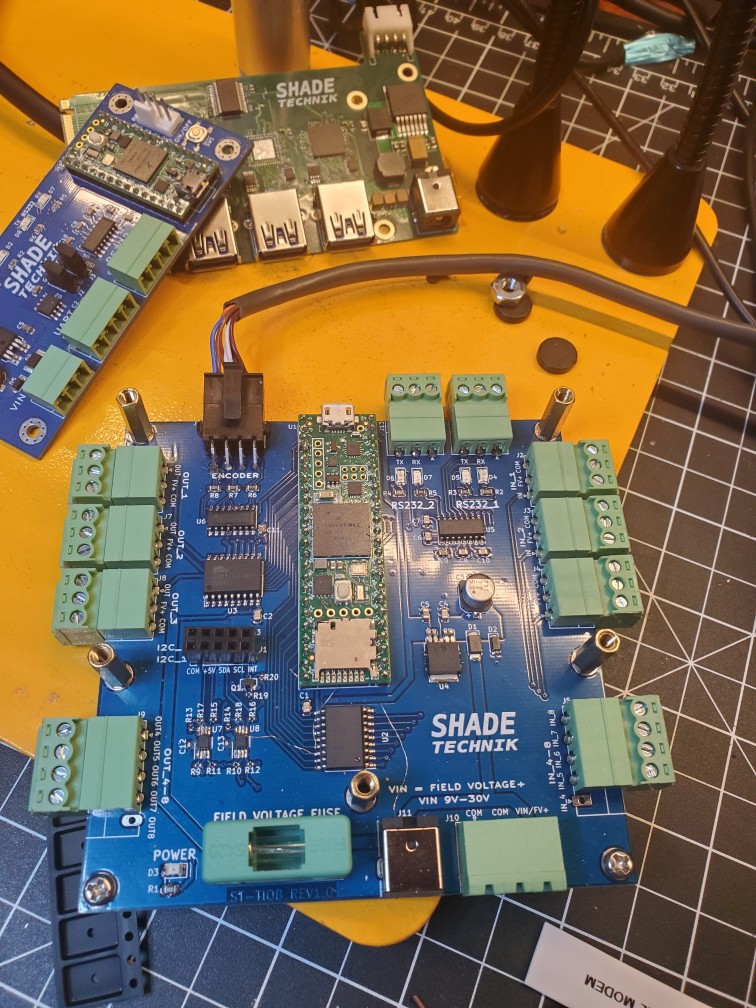

justin White

> Not really sure what's happening there, maybe that info is old...?

I'm using software stepgen, going straight into a TMC2209 breakout/mount board. With a RPi 3B the speed you see in the video is about as fast as it can go without following errors.


mark maker

> My understanding was that the reason OpenPNP needs to set accelerations is because some parts require lower acceleration in order not to fall off the nozzle. Surely that's the same for X and Y? I don't see why OpenPNP should be responsible for (or even aware of) the maximum limits of the machine, which would be a permanent setting in the machine configuration (even for TinyG/grbl etc), and not something g-code could violate anyway.

In an ideal world that would probably be true, but we are catering to real world machines, often on a low budget (numbers for easy reference):

- We have machines that flex, wobble and vibrate. Infinite jerk is for the math book; in the real world it translates to complex elastic responses, with resonances in the machines, often different per axis. For instance, my Liteplacer resonates at a much lower frequency on Y than on X. And being a simple, affordable DIY extrusion and belt machine that (unnecessarily) happens to be very badly balanced, it resonates a lot!

- You kinda see it here (this video is about something completely different, but that scene shows how the head and nozzle shake on 1mm Y moves, which kinda hit the resonance):

https://youtu.be/5QcJ2ziIJ14?t=166