DSpace 6.2: Collection home page too slow to load if items have lots of bitstreams

Ying Jin

```
2018-02-07 00:43:17,724 DEBUG org.hibernate.stat.internal.ConcurrentStatisticsImpl @ HHH000117: HQL: null, time: 1ms, rows: 1
2018-02-07 00:43:17,882 INFO org.hibernate.engine.internal.StatisticalLoggingSessionEventListener @ Session Metrics {
237746 nanoseconds spent acquiring 1 JDBC connections;
0 nanoseconds spent releasing 0 JDBC connections;
552698751 nanoseconds spent preparing 48017 JDBC statements;
12590561333 nanoseconds spent executing 48017 JDBC statements;
0 nanoseconds spent executing 0 JDBC batches;
929992 nanoseconds spent performing 52 L2C puts;
188492 nanoseconds spent performing 10 L2C hits;
1935100 nanoseconds spent performing 42 L2C misses;
133422494 nanoseconds spent executing 2 flushes (flushing a total of 43868 entities and 58348 collections);
562373235433 nanoseconds spent executing 20136 partial-flushes (flushing a total of 235915143 entities and 235915143 collections)
}
2018-02-07 00:43:17,884 INFO org.hibernate.engine.internal.StatisticalLoggingSessionEventListener @ Session Metrics {
0 nanoseconds spent acquiring 0 JDBC connections;
0 nanoseconds spent releasing 0 JDBC connections;
0 nanoseconds spent preparing 0 JDBC statements;
0 nanoseconds spent executing 0 JDBC statements;
0 nanoseconds spent executing 0 JDBC batches;
0 nanoseconds spent performing 0 L2C puts;
0 nanoseconds spent performing 0 L2C hits;
0 nanoseconds spent performing 0 L2C misses;
0 nanoseconds spent executing 0 flushes (flushing a total of 0 entities and 0 collections);
0 nanoseconds spent executing 0 partial-flushes (flushing a total of 0 entities and 0 collections)
}
2018-02-17 20:30:27,534 INFO org.hibernate.engine.internal.StatisticalLoggingSessionEventListener @ Session Metrics {
341778 nanoseconds spent acquiring 1 JDBC connections;
0 nanoseconds spent releasing 0 JDBC connections;
15974134 nanoseconds spent preparing 1189 JDBC statements;
271327429 nanoseconds spent executing 1189 JDBC statements;
0 nanoseconds spent executing 0 JDBC batches;
717412 nanoseconds spent performing 45 L2C puts;
3906724 nanoseconds spent performing 247 L2C hits;
2081066 nanoseconds spent performing 855 L2C misses;
6829028 nanoseconds spent executing 2 flushes (flushing a total of 3916 entities and 5072 collections);
578786157 nanoseconds spent executing 261 partial-flushes (flushing a total of 345641 entities and 345641 collections)
}
.....
2018-02-17 20:30:27,536 INFO org.hibernate.engine.internal.StatisticalLoggingSessionEventListener @ Session Metrics {
120941 nanoseconds spent acquiring 1 JDBC connections;
0 nanoseconds spent releasing 0 JDBC connections;
19210 nanoseconds spent preparing 1 JDBC statements;
190340 nanoseconds spent executing 1 JDBC statements;
0 nanoseconds spent executing 0 JDBC batches;
0 nanoseconds spent performing 0 L2C puts;
0 nanoseconds spent performing 0 L2C hits;
0 nanoseconds spent performing 0 L2C misses;
0 nanoseconds spent executing 0 flushes (flushing a total of 0 entities and 0 collections);
0 nanoseconds spent executing 0 partial-flushes (flushing a total of 0 entities and 0 collections)
2018-02-17 20:31:25,062 INFO org.hibernate.engine.internal.StatisticalLoggingSessionEventListener @ Session Metrics {
168784 nanoseconds spent acquiring 1 JDBC connections;
0 nanoseconds spent releasing 0 JDBC connections;
12220421 nanoseconds spent preparing 1276 JDBC statements;
282597546 nanoseconds spent executing 1276 JDBC statements;
0 nanoseconds spent executing 0 JDBC batches;
237448 nanoseconds spent performing 32 L2C puts;
4220734 nanoseconds spent performing 300 L2C hits;
2013993 nanoseconds spent performing 1008 L2C misses;
7370699 nanoseconds spent executing 2 flushes (flushing a total of 4078 entities and 5434 collections);
708646098 nanoseconds spent executing 301 partial-flushes (flushing a total of 419170 entities and 419170 collections)
}
.....
2018-02-17 20:31:25,064 INFO org.hibernate.engine.internal.StatisticalLoggingSessionEventListener @ Session Metrics {
102177 nanoseconds spent acquiring 1 JDBC connections;
0 nanoseconds spent releasing 0 JDBC connections;
15913 nanoseconds spent preparing 1 JDBC statements;
195207 nanoseconds spent executing 1 JDBC statements;
0 nanoseconds spent executing 0 JDBC batches;
0 nanoseconds spent performing 0 L2C puts;
0 nanoseconds spent performing 0 L2C hits;
0 nanoseconds spent performing 0 L2C misses;
0 nanoseconds spent executing 0 flushes (flushing a total of 0 entities and 0 collections);
0 nanoseconds spent executing 0 partial-flushes (flushing a total of 0 entities and 0 collections)
```
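To read Session Metrics blocks like these, it helps to turn the raw nanosecond counts into seconds and per-event averages. Below is a minimal Java sketch; `SessionMetrics`, `Metric`, `parse`, `seconds` and `avgMicrosPerEvent` are hypothetical names (not part of DSpace or Hibernate), and the regular expression assumes every metric line has the shape seen in these logs, "N nanoseconds spent VERB COUNT LABEL".

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/**
 * Hypothetical helper, not part of DSpace or Hibernate: parses one line of the
 * "Session Metrics" block logged by Hibernate's
 * StatisticalLoggingSessionEventListener and derives totals and averages.
 */
class SessionMetrics {

    /** One parsed metric line: time spent, activity verb, event count and label. */
    static final class Metric {
        final long nanos;
        final String activity;
        final long count;
        final String label;

        Metric(long nanos, String activity, long count, String label) {
            this.nanos = nanos;
            this.activity = activity;
            this.count = count;
            this.label = label;
        }

        /** Total time for this metric, in seconds. */
        double seconds() {
            return nanos / 1_000_000_000.0;
        }

        /** Average time per event in microseconds (0 when there were no events). */
        double avgMicrosPerEvent() {
            return count == 0 ? 0.0 : nanos / (count * 1_000.0);
        }
    }

    // e.g. "552698751 nanoseconds spent preparing 48017 JDBC statements;"
    // groups: (1) nanoseconds, (2) verb, (3) count, (4) label without the trailing ';'
    private static final Pattern LINE =
            Pattern.compile("^\\s*(\\d+) nanoseconds spent (\\w+) (\\d+) (.*?);?\\s*$");

    /** Returns the parsed metric, or null if the line is not a metric line. */
    static Metric parse(String line) {
        Matcher m = LINE.matcher(line);
        if (!m.matches()) {
            return null;
        }
        return new Metric(Long.parseLong(m.group(1)), m.group(2),
                Long.parseLong(m.group(3)), m.group(4));
    }
}
```

Applied to the first Session Metrics block, this gives roughly 12.6 s for executing 48017 JDBC statements (about 0.26 ms each) but roughly 562 s for 20136 partial-flushes, so in that session most of the time went into partial flushes rather than into executing SQL.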

Kim Shepherd

Cheers

Ying Jin

Bill T

Ying Jin

```properties
loglevel.other=DEBUG
log4j.logger.org.hibernate=INFO, hb
log4j.logger.org.hibernate.SQL=DEBUG
log4j.logger.org.hibernate.type=TRACE
log4j.logger.org.hibernate.hql.ast.AST=info
log4j.logger.org.hibernate.tool.hbm2ddl=warn
log4j.logger.org.hibernate.hql=debug
log4j.logger.org.hibernate.cache=info
log4j.logger.org.hibernate.jdbc=debug
log4j.logger.org.hibernate.stat=debug
```

Also, I added these extra lines to the hibernate.cfg.xml file:

```xml
<property name="hibernate.generate_statistics">true</property>
<property name="org.hibernate.stat">debug</property>
<property name="show_sql">true</property>
<property name="format_sql">true</property>
<property name="use_sql_comments">true</property>
```

Bill T

Bill T

Kim Shepherd


--

You received this message because you are subscribed to a topic in the Google Groups "DSpace Technical Support" group.

To unsubscribe from this topic, visit https://groups.google.com/d/topic/dspace-tech/VIofW7EwEXY/unsubscribe.

To unsubscribe from this group and all its topics, send an email to dspace-tech+unsubscribe@googlegroups.com.

To post to this group, send email to dspac...@googlegroups.com.

Visit this group at https://groups.google.com/group/dspace-tech.

For more options, visit https://groups.google.com/d/optout.

Tom Desair

Tom Desair | 250-B Suite 3A, Lucius Gordon Drive, West Henrietta, NY 14586 | Gaston Geenslaan 14, Leuven 3001, Belgium | www.atmire.com

--

You received this message because you are subscribed to the Google Groups "DSpace Technical Support" group.

To unsubscribe from this group and stop receiving emails from it, send an email to dspace-tech+unsubscribe@googlegroups.com.

Ying Jin

Hugo Carlos

Ying Jin

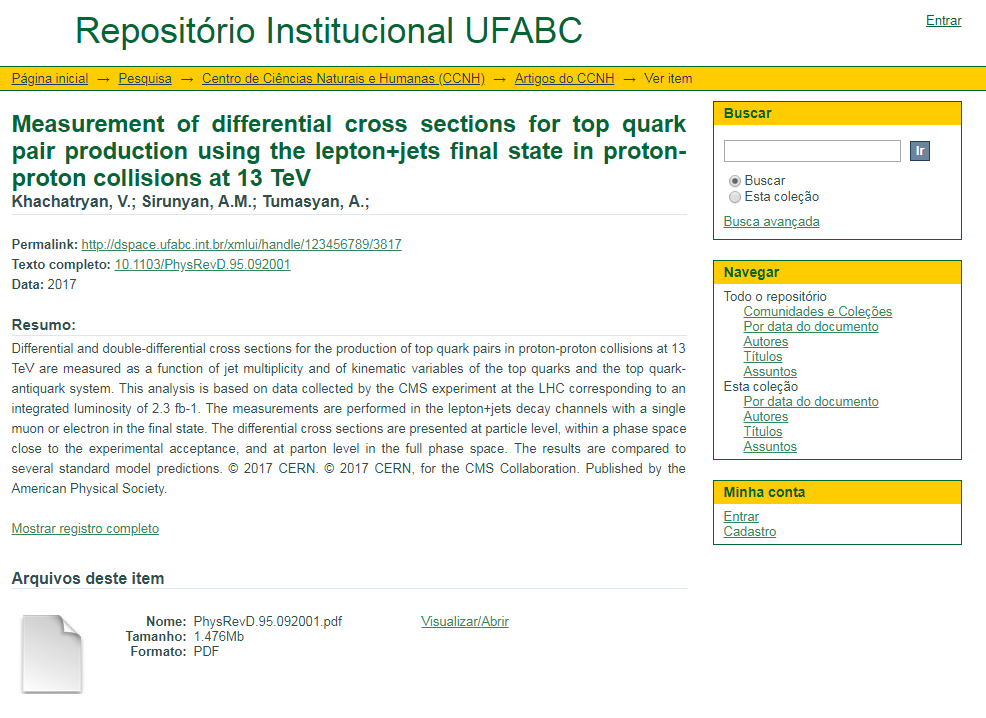

Hi, I think we had similar issues with XMLUI when displaying some items we imported from Scopus that have a large group of authors (2000 people in the CERN workgroup, for example, as you can see in the attached image). We modified item-view.xml to look up only 3 of the authors (as seen in the second image, for the same record), and that apparently solved the performance issue when displaying those items. Maybe limiting the bitstreams that are looked up while the XML for the page is being built could solve your issue?

_________________________________

Hugo da Silva Carlos

Bibliotecário

Universidade Federal do ABC

55+ (11) 4996-7934

Terry Brady

Tim Donohue

We now have a possible solution to this Collection homepage slowness in DSpace 6.2. It does require minor Java code changes to the XMLUI (in a single class). But, if you have time/willingness, it might be worth testing on your end prior to the new DSpace release (version 6.3) -- that way we can ensure it completely solves the problem on your site.

Here's the Pull Request (PR) including the necessary code changes: https://github.com/DSpace/DSpace/pull/2016


-- Terry Brady, Applications Programmer Analyst, Georgetown University Library Information Technology, 425-298-5498 (Seattle, WA)


Technical Lead for DSpace & DSpaceDirect

DuraSpace.org | DSpace.org | DSpaceDirect.org

Tim Donohue

In the HTML source, where each Item is displayed (in the Recent Submissions section) you'll see an HTML comment that says:

`<!-- External Metadata URL: cocoon://metadata/handle/10673/80/mets.xml?sections=dmdSec,fileSec&fileGrpTypes=THUMBNAIL-->`

http://demo.dspace.org/xmlui/metadata/handle/10673/80/mets.xml?sections=dmdSec,fileSec&fileGrpTypes=THUMBNAIL

The key point here is that the URL includes the parameter `fileGrpTypes=THUMBNAIL`. That parameter restricts the theme to ONLY using the THUMBNAIL bundle of Items when creating the Recent Submissions list on a Collection/Community homepage.

If your theme is based on the original dri2xhtml.xsl base theme (which is less likely), you'd find that setting here: https://github.com/DSpace/DSpace/blob/dspace-6_x/dspace-xmlui/src/main/webapp/themes/dri2xhtml/structural.xsl#L3600
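To make the effect of `fileGrpTypes` concrete, here is a small, hypothetical Java model; it is not DSpace's METS generation code. `FileGrpFilter`, `parseQuery`, `selectBundles` and `countBitstreams` are invented names, bundles are modeled as a map from bundle name to bitstream file names, and treating a missing `fileGrpTypes` as "include every bundle" is an assumption made only for this sketch.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/**
 * Hypothetical model (not DSpace code) of a fileGrpTypes-style filter: only
 * bundles named in the parameter are considered, so other bundles' bitstreams
 * are never processed.
 */
class FileGrpFilter {

    /** Splits "a=1&b=x,y" after the '?' into name -> value (naive: no URL decoding). */
    static Map<String, String> parseQuery(String url) {
        Map<String, String> params = new LinkedHashMap<>();
        int q = url.indexOf('?');
        if (q < 0) {
            return params;
        }
        for (String pair : url.substring(q + 1).split("&")) {
            int eq = pair.indexOf('=');
            if (eq > 0) {
                params.put(pair.substring(0, eq), pair.substring(eq + 1));
            }
        }
        return params;
    }

    /** Returns bundle names to include; null/empty filter means all (an assumption). */
    static List<String> selectBundles(Map<String, List<String>> bundles, String fileGrpTypes) {
        if (fileGrpTypes == null || fileGrpTypes.isEmpty()) {
            return new ArrayList<>(bundles.keySet());
        }
        List<String> wanted = Arrays.asList(fileGrpTypes.split(","));
        List<String> selected = new ArrayList<>();
        for (String name : bundles.keySet()) {
            if (wanted.contains(name)) {
                selected.add(name);
            }
        }
        return selected;
    }

    /** Counts the bitstreams that would be processed for the selected bundles. */
    static int countBitstreams(Map<String, List<String>> bundles, List<String> selected) {
        int total = 0;
        for (String name : selected) {
            total += bundles.getOrDefault(name, Collections.emptyList()).size();
        }
        return total;
    }
}
```

With an item holding 790 files in ORIGINAL and one in THUMBNAIL, the THUMBNAIL-only request touches a single bitstream in this model, versus 791 when no filter is applied.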

Tim

Hi Tim,

I have applied and tested the code on our development site. It's a great improvement to the XMLUI code. Unfortunately, most of our collections are still slow to load, even though we show only 3 recent submissions on the collection home page.

We preserve our master files with the items, and that is where most of our bitstreams come from. The masters are hidden from end users and have no thumbnail or text derivatives. Since the code change limits the number of thumbnails loaded, it doesn't quite apply to our situation.

There are some other situations too: we have one collection with a Gallery theme, for which we have to load all 300+ thumbnails; another uses a TEI theme, where each item has about 790 original bitstreams and masters, and we need to load all the thumbnails for the TEI generation too.

Hope this helps,

Best,
Ying

On Tue, Apr 10, 2018 at 9:20 AM, Tim Donohue <tdon...@duraspace.org> wrote:

Hi Ying (and all),

We now have a possible solution to this Collection homepage slowness in DSpace 6.2. It does require minor Java code changes to the XMLUI (in a single class). But, if you have time/willingness, it might be worth testing on your end prior to the new DSpace release (version 6.3) -- that way we can ensure it completely solves the problem on your site.

Here's the Pull Request (PR) including the necessary code changes: https://github.com/DSpace/DSpace/pull/2016

And here's a downloadable "diff" of the changes included in that PR: https://github.com/DSpace/DSpace/pull/2016.diff

Let us know if you are able to test it out. If so, please comment either in this email thread or on the ticket and let us know if it solves the issue: https://jira.duraspace.org/browse/DS-3883

Thanks,
Tim

On Wed, Apr 4, 2018 at 10:59 AM Terry Brady <Terry...@georgetown.edu> wrote:

This sounds like a significant issue that should be tracked for the next DSpace release. I created a Jira ticket to track it. I referenced this thread in the ticket.

On Tue, Apr 3, 2018 at 12:15 PM, Ying Jin <elsa...@gmail.com> wrote:

Hi Hugo,

Thanks for the advice. However, our problem isn't with the item page but with the collection page. We changed the collection page to show 3 recent submissions instead of 20, and zipped most of our masters to reduce the number of bitstreams per item. It helped a lot, but it's a temporary solution. It would be great if DSpace performed well with the setup we used to have.

Best,
Ying

On Tue, Apr 3, 2018 at 8:15 AM, Hugo Carlos <hugo....@ufabc.edu.br> wrote:

Hi, I think we had similar issues with XMLUI when displaying some items we imported from Scopus that have a large group of authors (2000 people in the CERN workgroup, for example, as you can see in the attached image). We modified item-view.xml to look up only 3 of the authors (as seen in the second image, for the same record), and that apparently solved the performance issue when displaying those items. Maybe limiting the bitstreams that are looked up while the XML for the page is being built could solve your issue?

--

Ying Jin

Tim Donohue

Looking forward to hearing what you find out.

In the meantime, I'd like to better understand this statement:

"For the thumbnail part, it is still a problem for us as I mentioned earlier, we have special theme needs to load all the thumbnails for the Gallery theme and TEI theme."

Ying Jin

Bill Tantzen

Tim Donohue

The issue you are seeing doesn't sound like the same issue as the original one in this thread. The behavior that was fixed in 6.3 was one where the *Collection homepage* loaded extremely slowly if you had Items with a large number of bitstreams/thumbnails. The fix was to be much smarter about when to load Thumbnails/bitstreams into memory (and because it was a fix to the Java code under the XMLUI, it applied to all themes): https://github.com/DSpace/DSpace/pull/2016
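The general technique behind "being smarter about when to load" bitstreams is deferred (lazy) loading. The Java sketch below illustrates that idea only; it is not the code from PR #2016, and `LazyBundle`, its `Supplier`-based loader and `bitstreamsOf` are hypothetical names. Bitstreams are modeled as plain file names, and the loader stands in for whatever database access a real implementation would do.

```java
import java.util.Collections;
import java.util.List;
import java.util.function.Supplier;

/**
 * Hypothetical illustration of deferred loading (NOT the code from PR #2016):
 * a bundle's bitstream list is fetched only on first access, so bundles a
 * page never asks for are never loaded.
 */
class LazyBundle {
    private final String name;
    private final Supplier<List<String>> loader; // stands in for a database query (assumed)
    private List<String> bitstreams;             // null until first access

    LazyBundle(String name, Supplier<List<String>> loader) {
        this.name = name;
        this.loader = loader;
    }

    String getName() {
        return name;
    }

    /** Loads the bitstream list on the first call and reuses it afterwards. */
    List<String> getBitstreams() {
        if (bitstreams == null) {
            bitstreams = loader.get();
        }
        return bitstreams;
    }

    boolean isLoaded() {
        return bitstreams != null;
    }

    /** Returns bitstreams of the named bundle only; other bundles stay unloaded. */
    static List<String> bitstreamsOf(List<LazyBundle> bundles, String wanted) {
        for (LazyBundle b : bundles) {
            if (b.getName().equals(wanted)) {
                return b.getBitstreams();
            }
        }
        return Collections.emptyList();
    }
}
```

In this model, a listing that calls `bitstreamsOf(bundles, "THUMBNAIL")` triggers exactly one load, and an ORIGINAL bundle holding hundreds of masters is never fetched. The cache is not synchronized, so the sketch assumes single-threaded use.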

--

All messages to this mailing list should adhere to the DuraSpace Code of Conduct: https://duraspace.org/about/policies/code-of-conduct/

---


kar...@gmail.com

On Wednesday, February 21, 2018 at 4:02:06 PM UTC-6, Ying Jin wrote:

Bill Tantzen

only on pages with a large number of records, for instance community-list or on community pages with a large number of sub-communities and collections.

For me, it is most apparent for /community-list, which takes a couple of minutes to display.

-- Bill
