Scheduled Compaction is not triggering any Task

Alon Shoshani

I'm running Druid 0.14 with the Kafka indexing service.

I'm trying to run scheduled compaction, and I would like the segments to be around 500MB, as recommended in the documentation.

My middle manager has 2 workers and the ratio is 0.5, which means 1 compaction job at a time.
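
The slot arithmetic above can be sketched as follows. This is only a minimal sketch: it assumes the number of compaction slots is the total worker capacity multiplied by `compactionTaskSlotRatio`, rounded down and capped by `maxCompactionTaskSlots`. The function name `compaction_slots` is hypothetical, and the exact rounding Druid applies is an assumption.

```python
import math

def compaction_slots(total_worker_capacity: int, slot_ratio: float, max_slots: int) -> int:
    """Estimate how many compaction tasks may run at once (assumed formula)."""
    # Assumption: capacity * ratio, rounded down, then capped by the configured maximum.
    return min(math.floor(total_worker_capacity * slot_ratio), max_slots)

# Values from the post: 2 workers, ratio 0.5, maxCompactionTaskSlots 1.
print(compaction_slots(2, 0.5, 1))  # prints 1
```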

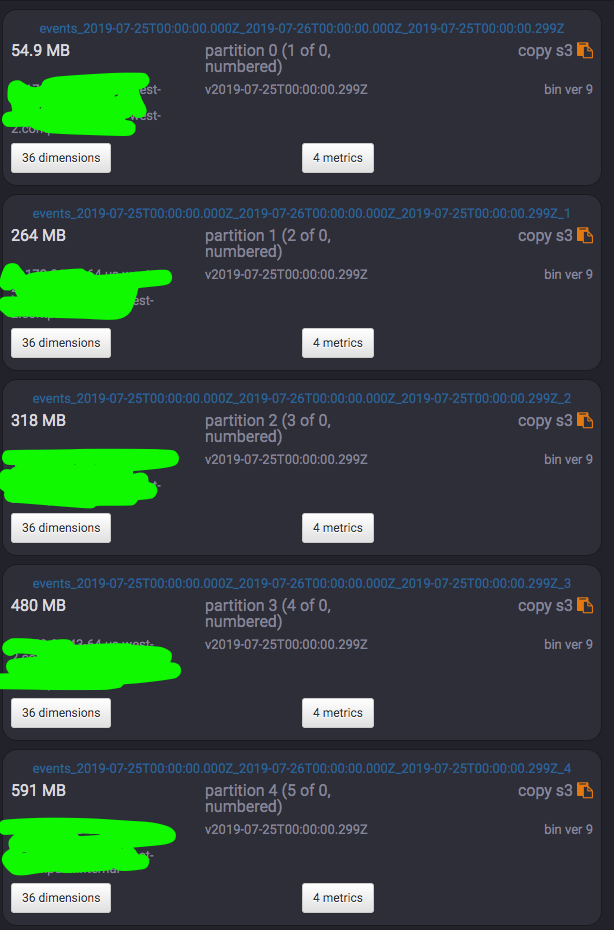

This is my configuration, and I have a time interval with the following segment sizes.

I expect the first segment to be merged with the second one,

but the tasks are just not started...

Any ideas?

    {
      "compactionConfigs": [
        {
          "dataSource": "events",
          "keepSegmentGranularity": true,
          "taskPriority": 25,
          "inputSegmentSizeBytes": 536870912,
          "targetCompactionSizeBytes": 419430400,
          "maxRowsPerSegment": null,
          "maxNumSegmentsToCompact": 150,
          "skipOffsetFromLatest": "P3D",
          "tuningConfig": null,
          "taskContext": null
        }
      ],
      "compactionTaskSlotRatio": 0.5,
      "maxCompactionTaskSlots": 1
    }

Gaurav Bhatnagar

Jihoon Son

--

You received this message because you are subscribed to the Google Groups "Druid User" group.

To unsubscribe from this group and stop receiving emails from it, send an email to druid-user+...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/druid-user/8bb64b43-b5fa-4b6b-be84-833310d17c0a%40googlegroups.com.

Alon Shoshani

According to your claim, the coordinator will compact the same interval over and over (I already had this issue), because the sum of the segments will always be lower than or equal to 2.5G.

https://druid.apache.org/docs/0.15.0-incubating/design/coordinator.html#segment-search-policy

You received this message because you are subscribed to a topic in the Google Groups "Druid User" group.

To unsubscribe from this topic, visit https://groups.google.com/d/topic/druid-user/sTwbjtLm5Uc/unsubscribe.

To unsubscribe from this group and all its topics, send an email to druid-user+...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/druid-user/CACZfFK4ec89YwNfPLKb6KFWHfhxeuyXCx%3DA_NzP5iv5fvPaVhQ%40mail.gmail.com.

Hi Alon,

Currently in auto compaction, compaction happens atomically per time chunk, which means that all segments in the same time chunk are either compacted together or not compacted at all.

From the screenshot you shared, this time chunk looks like it has 5 segments, and their total size is greater than the configured "inputSegmentSizeBytes", which is 512MB.

You need to raise this to more than the total size of the segments in each time chunk (probably 1.5 - 2GB?).

Jihoon
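
The per-time-chunk rule Jihoon describes can be sketched like this. It is a minimal sketch, not Druid's actual implementation: the function `is_chunk_eligible` and most of the segment sizes are hypothetical (only the 54MB and 264MB segments and the 512MB limit appear in the thread), and whether the size comparison is strict or inclusive is an assumption.

```python
MB = 1024 * 1024

def is_chunk_eligible(segment_sizes_bytes, input_segment_size_bytes):
    """Return True if the whole time chunk fits under the size limit.

    All segments in a time chunk are considered together; there is no
    partial merge of just the smallest segments.
    """
    # Assumption: the limit is inclusive; the thread does not say.
    return sum(segment_sizes_bytes) <= input_segment_size_bytes

# Hypothetical sizes for one time chunk (only 54MB and 264MB come from the thread).
chunk = [54 * MB, 264 * MB, 500 * MB, 500 * MB, 500 * MB]

print(is_chunk_eligible(chunk, 512 * MB))   # False: the 1818MB total exceeds 512MB
print(is_chunk_eligible(chunk, 2048 * MB))  # True: the raised limit covers the whole chunk
```

Under this reading, the first two segments alone would fit under 512MB, but the chunk as a whole does not, so no task is issued.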

Venkat Poornalingam

I think it’s a documentation error. It should have been `targetCompactionSizeBytes` rather than `inputSegmentSizeBytes` in the documentation link you referred to: https://druid.apache.org/docs/0.15.0-incubating/design/coordinator.html#segment-search-policy. I would wait for Jihoon’s reply, though.

The same interval would be compacted again and again until the optimal size (`targetCompactionSizeBytes`) is reached. If this is not the case, then maybe it's an issue.

From: "druid...@googlegroups.com" <druid...@googlegroups.com> on behalf of Alon Shoshani <al...@oribi.io>

Reply-To: "druid...@googlegroups.com" <druid...@googlegroups.com>

Date: Tuesday, July 30, 2019 at 12:14 PM

To: "druid...@googlegroups.com" <druid...@googlegroups.com>

Subject: Re: [druid-user] Re: Scheduled Compaction is not trigger any Task

Hi,

According to your claim, the coordinator will compact the same interval over and over ( I already had this issue ),

because the sum of the segments will be always lower or equal than 2.5G.

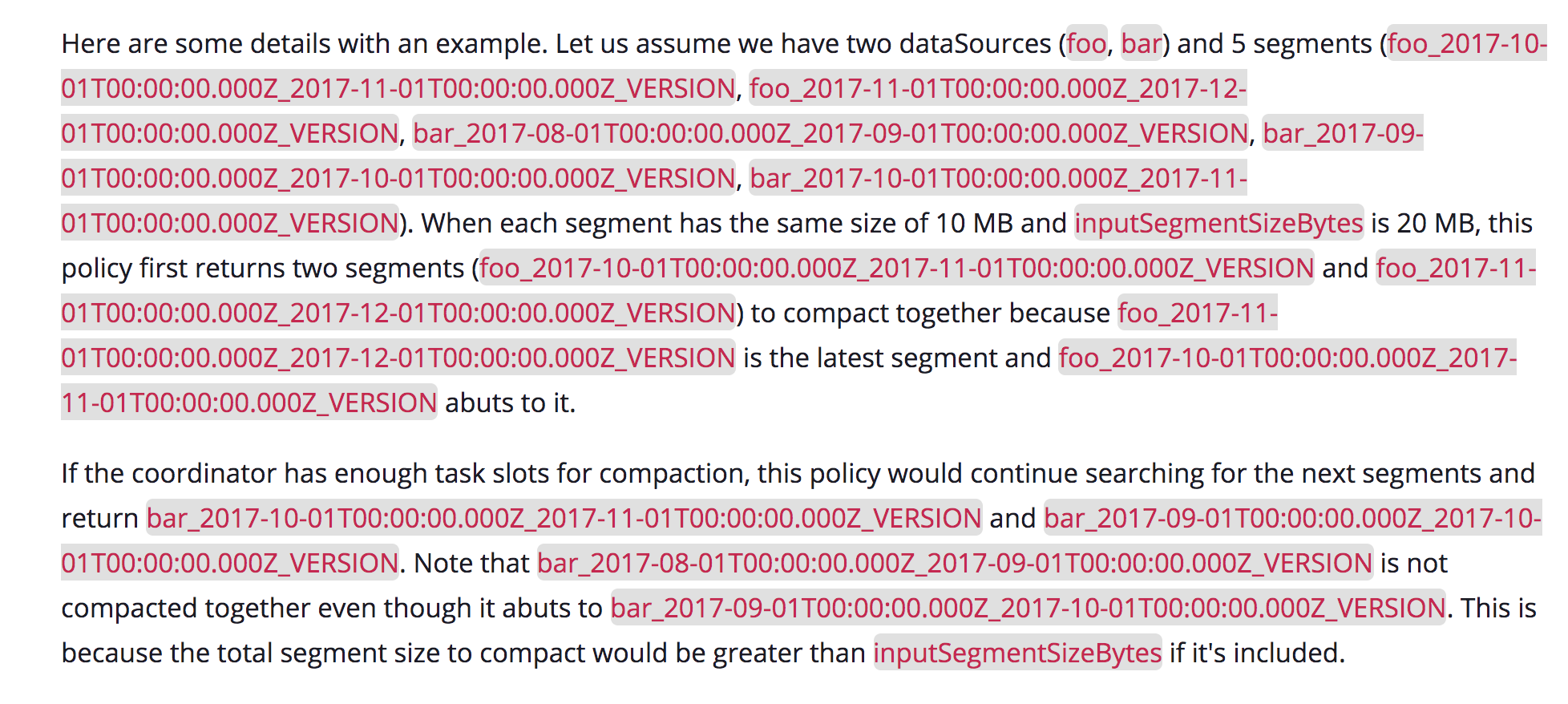

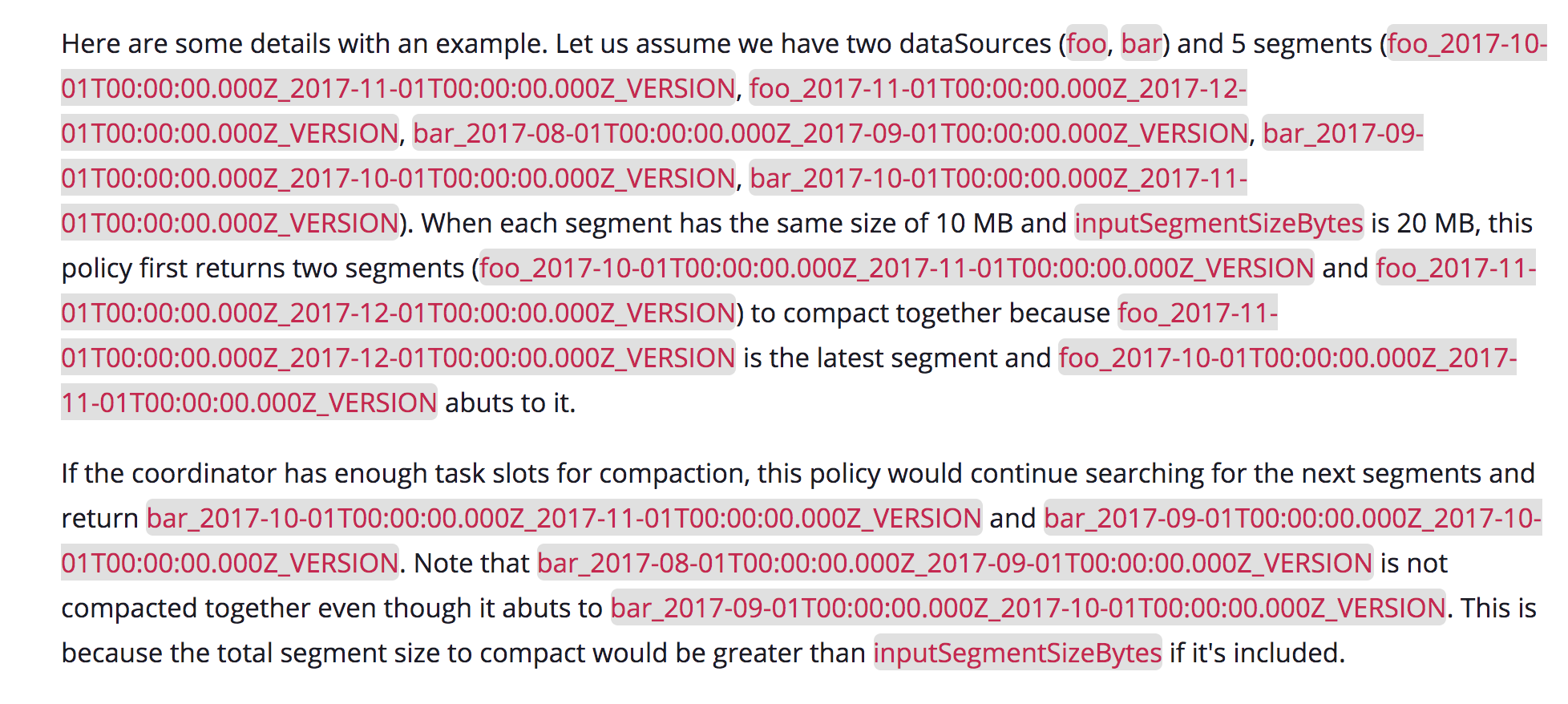

According to the documentation here and the example:

look at "bar" datasource (, it has 3 segments and only the sum of two are 20MB so it will merge them and not the third one

https://druid.apache.org/docs/0.15.0-incubating/design/coordinator.html#segment-search-policy

Alon Shoshani

The segment scan algorithm looks at one interval and its segments. If it finds two segments in the same interval whose sum is less than inputSegmentSizeBytes, it will merge them.

In the next iteration, if there are still candidates for merging in the same interval, it merges them too.

Does that make sense?

Venkat Poornalingam

Yes. It makes sense to me.

Note: I re-read the documentation and it is correct.

Alon Shoshani

Venkat Poornalingam

Hi Alon

I meant that there is no bug in the documentation.

But yes, Druid keeps checking for compaction possibilities whenever the inputSegmentSizeBytes condition is met, and sometimes Druid might end up doing compaction again.

This is expected to be fixed in the next edition of auto compaction.

Thanks & Rgds

Venkat

Alon Shoshani

Venkat Poornalingam

Alon

Could you please let me know the number of segments for the given interval and the total size of the segments?

Is the total size of all the segments in the interval < inputSegmentSizeBytes? This is the expected condition for compaction to be triggered.

Alon Shoshani

I have 7 segments in this interval of time, and the total size is 2.5G.

My first segment is 54MB and my second is 264MB, so I expect compaction to find these two in the same interval and merge them.

According to the documentation, if you read the "bar" datasource example, it merges the first two without the third one.

Venkat Poornalingam

Can you please set `inputSegmentSizeBytes` to 2.5GB and check whether your segments are being auto compacted?
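
As a sketch of the suggested change, the snippet below rebuilds the compaction config from the first post with `inputSegmentSizeBytes` raised to 2.5GB. It assumes 1GB means 1024^3 bytes, and the helper name `with_input_size` is hypothetical; all other fields are copied from the posted config.

```python
import json

GB = 1024 ** 3

# Compaction config for the "events" datasource, as posted at the top of the thread.
posted_config = {
    "dataSource": "events",
    "keepSegmentGranularity": True,
    "taskPriority": 25,
    "inputSegmentSizeBytes": 536870912,
    "targetCompactionSizeBytes": 419430400,
    "maxRowsPerSegment": None,
    "maxNumSegmentsToCompact": 150,
    "skipOffsetFromLatest": "P3D",
    "tuningConfig": None,
    "taskContext": None,
}

def with_input_size(config, size_bytes):
    """Return a copy of the config with a new inputSegmentSizeBytes."""
    updated = dict(config)
    updated["inputSegmentSizeBytes"] = int(size_bytes)
    return updated

updated_config = with_input_size(posted_config, 2.5 * GB)
print(json.dumps(updated_config, indent=2))
```

Since Jihoon's advice earlier in the thread is to exceed the total size of the segments in the time chunk, a value somewhat above the 2.5G total may be needed.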

Alon Shoshani

But Druid keeps executing the same task on the same interval again and again. Why?

Venkat Poornalingam

That’s a bug expected to be fixed in an upcoming version.

Alon Shoshani

Maybe you can help me with another issue with Druid 0.14.

I'm not able to send Graphite metrics using the Graphite emitter; it just ignores the host I provide in the configuration,

whereas when the emitter is set to logging, the metrics are logged to a file.

I would appreciate it.

I also opened a bug on GitHub, but got no response...

https://groups.google.com/forum/#!searchin/druid-user/graphite$20emitter%7Csort:date/druid-user/ikI6aKkjmc0/ealRd390AgAJ

Venkat Poornalingam

Alon,

Please post a new thread for this, so that someone who is working on the Graphite emitter can also reply.

Alon Shoshani

Venkat Poornalingam

Can you please try with Druid 0.15? I hope you have the right load list in common.runtime.properties.

Alon Shoshani

Venkat Poornalingam

Alon, I’m not sure about it. I haven’t tried the Graphite emitter.
