Spray performance issue: new TCP connections not being accepted fast enough

Richard Bradley

Johannes Rudolph

On Thu, Jul 17, 2014 at 3:54 PM, Richard Bradley

<richard.brad...@gmail.com> wrote:

> My questions are:

> 1. How can I diagnose what bottleneck is causing our app to not accept new

> TCP connections fast enough? (and how can I relieve that bottleneck?)

I would probably start with verifying what you are seeing directly on

the wire by running wireshark / tcpdump on the server and checking

where exactly the delays are introduced into the TCP conversation.

It can also make sense to look into the system logs to check if there

are any log messages. E.g. here's one issue I've observed when working

with many connections:

http://lserinol.blogspot.de/2009/02/iptables-and-connection-limit.html

> 2. What is the meaning of the "dropped Ack(0)" warnings? Do they need

> fixing?

It seems you have HTTP pipelining enabled. Is that by purpose? What

happens if you reset the spray.can.server.pipelining-limit to 1?

--

Johannes

-----------------------------------------------

Johannes Rudolph

http://virtual-void.net

Richard Bradley

Thanks, we'll look into those suggestions.

> > 2. What is the meaning of the "dropped Ack(0)" warnings? Do they

> > need fixing?

> It seems you have HTTP pipelining enabled. Is that by purpose?

Yes.

Does pipelining cause those warnings? Are they worth worrying about?

> What happens if you reset the spray.can.server.pipelining-limit to 1?

I think we had that set to 1 until recently and it hasn't affected the load testing (I think our test client might not support pipelining).

We'll do a test run with that off again and see if it makes any difference.

Best,

Rich

Richard Bradley

Thanks, we'll look into those suggestions.

Thanks,

Richard Bradley

Johannes Rudolph

On Tue, Jul 22, 2014 at 2:59 PM, Richard Bradley

<richard.brad...@gmail.com> wrote:

> We are going to try taking the app from the Spray benchmark above and seeing

> how many connections per second it can achieve, so we'll have a reproducible

> test case.

Yes, a more easily reproducible test-case would be very good. The

spray codebase contains similar benchmark code inside its codebase.

Maybe you can start from there:

https://github.com/spray/spray/tree/master/examples/spray-can/server-benchmark

Richard Bradley

> Yes, a more easily reproducible test-case would be very good. The

> spray codebase contains similar benchmark code inside its codebase.

> Maybe you can start from there:

> https://github.com/spray/spray/tree/master/examples/spray-can/server-benchmark

We have reproduced the issue using the Spray “benchmark” app linked above.

It shows the exact same symptoms:

- When load exceeds around 1200 connections/second, the app starts to take a long time to reply to new TCP connections

- This is even though the CPU on the machine is only at 15-20%

- So there is some bottleneck preventing Spray from accepting TCP connections fast enough

- What is it?

- How can we improve it?

We used Spray 1.3.1 and Akka 2.3.3.

I can’t upload the test harness here, but it just makes lots of HTTP requests, using a new TCP connection for each request.
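For readers who want to approximate that kind of load, here is a minimal sketch of a client that opens a new TCP connection for every request, using only JDK sockets. It is not the harness used in this test. The object name, the timeout, the host and the request count are assumptions made for illustration; port 8099 and the `/myapp` path are taken from the diff of changes to the benchmark app.

```scala
import java.io.{BufferedReader, InputStreamReader}
import java.net.{InetSocketAddress, Socket}
import java.nio.charset.StandardCharsets

object NewConnectionPerRequest {

  /** Opens a fresh TCP connection, sends one GET, and returns the HTTP
    * status line together with the total elapsed time in milliseconds. */
  def requestOnce(host: String, port: Int, path: String,
                  timeoutMs: Int = 30000): (String, Long) = {
    val start = System.nanoTime()
    val socket = new Socket()
    try {
      // The time spent in connect() includes any delay from dropped SYNs.
      socket.connect(new InetSocketAddress(host, port), timeoutMs)
      socket.setSoTimeout(timeoutMs)
      // "Connection: close" asks the server not to keep the connection alive.
      val request = s"GET $path HTTP/1.1\r\nHost: $host\r\nConnection: close\r\n\r\n"
      socket.getOutputStream.write(request.getBytes(StandardCharsets.US_ASCII))
      socket.getOutputStream.flush()
      val in = new BufferedReader(
        new InputStreamReader(socket.getInputStream, StandardCharsets.US_ASCII))
      val statusLine = Option(in.readLine()).getOrElse("")
      (statusLine, (System.nanoTime() - start) / 1000000L)
    } finally socket.close()
  }

  def main(args: Array[String]): Unit = {
    // Host and request count are placeholders; port and path follow the diff.
    for (i <- 1 to 10) {
      val (status, millis) = requestOnce("localhost", 8099, "/myapp")
      println(s"request $i: $status in $millis ms")
    }
  }
}
```

This version issues requests one after another, so it will not reach 1200 connections/second by itself. A real load test would run many such calls concurrently and record the distribution of the elapsed times.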

We have already eliminated the following possible bottlenecks:

- TIME_WAIT issues including TCP port exhaustion

- Limits on the maximum number of open file handles

- The socket “backlog” size (we tried increasing it)

I have included some relevant graphs below, as well as the

diff of changes we had to make to the benchmark app (nothing significant).

If anyone has any ideas about what might be causing this

bottleneck, or any suggestions on relevant stats we could gather, they would be

gratefully received.

Thanks,

Rich

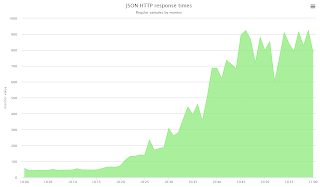

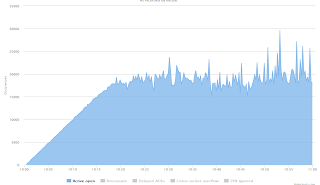

In these graphs, the test starts at 10:00, and takes about 20 mins to ramp up the load, reaching 1200 connections/sec at around 10:20.

CPU load (low throughout the test):

Response times (starts low, increases when the TCP connection issue manifests after around 10:20):

Number of TCP “listen socket overflow” events, as reported by “netstat -s” (“times the listen queue of a socket overflowed”).

I think this proves that the system isn’t accepting TCP connections fast enough:

Number of open TCP connections:

We have graphs showing that the slow requests are taking 3 or 9 seconds to complete, which is consistent with TCP backoff times when a connection attempt fails.
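The arithmetic behind the 3 and 9 second figures can be sketched as follows. It assumes the client retransmits a lost SYN with exponential backoff starting from an initial timeout of 3 seconds, which older TCP stacks commonly used; the actual value on the test client is an assumption here, and newer stacks often start at 1 second. The object and method names are made up for this example.

```scala
object SynBackoff {

  /** Cumulative delays (in seconds) at which a SYN is retransmitted,
    * assuming the timeout doubles after every unanswered attempt. */
  def retransmitTimes(initialRtoSeconds: Int, retries: Int): List[Int] =
    List.iterate(initialRtoSeconds, retries)(_ * 2) // 3, 6, 12, ...
      .scanLeft(0)(_ + _)                           // 0, 3, 9, 21, ...
      .tail                                         // drop the leading 0

  def main(args: Array[String]): Unit =
    println(retransmitTimes(initialRtoSeconds = 3, retries = 3)) // List(3, 9, 21)
}
```

Under that assumption, a request whose first SYN is dropped completes after roughly 3 seconds, and one whose first two SYNs are dropped after roughly 9 seconds.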

The diff of changes we made to the benchmark app:

diff --git a/spray/build.sbt b/spray/build.sbt

index 2a9b86f..b2ed753 100644

--- a/spray/build.sbt

+++ b/spray/build.sbt

@@ -15,9 +15,9 @@ resolvers ++= Seq(

libraryDependencies ++= Seq(

"io.spray" %% "spray-json" % "1.2.4",

- "io.spray" % "spray-can" % "1.1-20130619",

- "com.typesafe.akka" %% "akka-actor" % "2.1.2",

- "com.typesafe.akka" %% "akka-slf4j" % "2.1.2",

+ "io.spray" % "spray-can" % "1.3.1",

+ "com.typesafe.akka" %% "akka-actor" % "2.3.3",

+ "com.typesafe.akka" %% "akka-slf4j" % "2.3.3",

"ch.qos.logback"% "logback-classic" % "1.0.12" % "runtime"

)

diff --git a/spray/src/main/resources/application.conf b/spray/src/main/resources/application.conf

index ca36824..9e376ab 100644

--- a/spray/src/main/resources/application.conf

+++ b/spray/src/main/resources/application.conf

@@ -24,5 +24,5 @@ spray.can.server {

app {

interface = "0.0.0.0"

- port = 8080

+ port = 8099

}

diff --git a/spray/src/main/scala/spray/examples/BenchmarkService.scala b/spray/src/main/scala/spray/examples/BenchmarkService.scala

index ca3e66b..49c4280 100644

--- a/spray/src/main/scala/spray/examples/BenchmarkService.scala

+++ b/spray/src/main/scala/spray/examples/BenchmarkService.scala

@@ -18,6 +18,8 @@ class BenchmarkService extends Actor {

val unknownResource = HttpResponse(NotFound, entity = "Unknown resource!")

def fastPath: Http.FastPath = {

+ case HttpRequest(_, Uri(_, _, Slash(Segment("myapp", _)), _, _), _, _, _) =>

+ HttpResponse(entity = "You hit myapp ")

case HttpRequest(GET, Uri(_, _, Slash(Segment(x, Path.Empty)), _, _), _, _, _) =>

x match {

case "json" =>

Johannes Rudolph


<richard.brad...@gmail.com> wrote:

>> I would probably start with verifying what you are seeing directly on

>> the wire by running wireshark / tcpdump on the server and checking

>> where exactly the delays are introduced into the TCP conversation.

>

> We have already done this: the client sends a SYN to the Spray application,

> but the app takes a long time to respond with an ACK.

means even if the app isn't accepting connections at all, the kernel

will already accept connections.
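A small JDK-only sketch can illustrate this behaviour: it binds a listening socket, never calls `accept()`, and checks whether a client connect still completes. The object name, backlog and timeout are arbitrary choices for the example, and the result may depend on the operating system.

```scala
import java.net.{InetAddress, InetSocketAddress, ServerSocket, Socket}

object KernelHandshakeDemo {

  /** Binds a listening socket, never calls accept(), and reports whether
    * a client connect to it still completes within the timeout. */
  def connectsWithoutAccept(backlog: Int = 16, timeoutMs: Int = 2000): Boolean = {
    val loopback = InetAddress.getLoopbackAddress
    // Port 0 lets the OS pick a free port; the backlog value is arbitrary.
    val server = new ServerSocket(0, backlog, loopback)
    try {
      val client = new Socket()
      try {
        // The kernel completes the handshake and queues the connection,
        // even though the application never accepts it.
        client.connect(new InetSocketAddress(loopback, server.getLocalPort), timeoutMs)
        client.isConnected
      } finally client.close()
    } finally server.close()
  }

  def main(args: Array[String]): Unit =
    println(s"connected without accept(): ${connectsWithoutAccept()}")
}
```

On mainstream operating systems the connect should succeed as long as the listen queue has room, which is why a delayed handshake points at a full queue rather than at application code running slowly on an individual request.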

I tried reproducing your issue but everything works as far as I can

see. I set up a repository with the code used:

https://github.com/jrudolph/spray-benchmark

You can use `./build-and-run.sh` to build and run the server. Then in

another terminal `./benchmark.sh` to run an `ab` benchmark. It will

also show some of the important kernel parameters. This is what it

shows on my machine:

net.ipv4.tcp_max_syn_backlog = 50000

net.ipv4.tcp_tw_reuse = 0

net.ipv4.tcp_tw_recycle = 0

net.core.somaxconn = 50000

time(seconds) unlimited

file(blocks) unlimited

data(kbytes) unlimited

stack(kbytes) 8192

coredump(blocks) unlimited

memory(kbytes) unlimited

locked memory(kbytes) 64

process 127171

nofiles 4096

vmemory(kbytes) unlimited

locks unlimited

I increased somaxconn and tcp_max_syn_backlog. The README contains some

diagnostic commands I use to watch what happens. In your diff you

didn't increase the listen backlog in the spray app. I can get about

17k RPS (= 17000 connections per second) with this setup which ran

for 15 minutes without problems (which may not be long enough?).
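To show why both the kernel setting and the application setting matter: on Linux the backlog an application passes to `listen()` is silently capped at `net.core.somaxconn`, so the effective queue length is the smaller of the two. The sketch below computes that value. The object name and the requested backlog of 100 are hypothetical placeholders, not values read from the spray app, and reading `/proc` only works on Linux.

```scala
import java.nio.file.{Files, Paths}
import scala.util.Try

object ListenBacklog {

  /** On Linux the requested backlog is capped at net.core.somaxconn. */
  def effectiveBacklog(requested: Int, somaxconn: Int): Int =
    math.min(requested, somaxconn)

  /** Reads net.core.somaxconn from /proc; None on non-Linux systems
    * or if the file cannot be read or parsed. */
  def readSomaxconn(): Option[Int] =
    Try(new String(Files.readAllBytes(Paths.get("/proc/sys/net/core/somaxconn")))
      .trim.toInt).toOption

  def main(args: Array[String]): Unit = {
    val requested = 100 // hypothetical backlog passed by the application at bind time
    readSomaxconn() match {
      case Some(limit) =>
        println(s"somaxconn=$limit, effective backlog=${effectiveBacklog(requested, limit)}")
      case None =>
        println("could not read net.core.somaxconn (not Linux?)")
    }
  }
}
```

With `somaxconn` raised to 50000 but a small backlog requested by the application, the effective queue stays small, so both values need to be increased together.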

Can you try reproducing it with this app?

I wonder why your graphs show 20k open connections if you only open

1200 per second. Are you testing on localhost or from another machine?

Michael Hamrah

Mike

Richard Bradley

> I wonder why your graphs show 20k open connections if you only open

> 1200 per second. Are you testing on localhost or from another machine?

Richard Bradley

Mathias Doenitz

Thanks for sharing your solution!

Cheers,

Mathias

---

mat...@spray.io

http://spray.io


Johannes Rudolph

Johannes

Edwin Fuquen

Richard Bradley

This is great info. It might be good to add a "Performance Tuning" section to the spray-can docs that suggests which spray config parameters to change for high connection throughput. Some guidance on kernel parameter tuning would be nice too, even though it is not directly related to spray.