Metamath Zero

Mario Carneiro

nonempty sort wff;

var ph ps ch: wff*;

term wi (ph ps: wff): wff; infixr wi: -> 25;

term wn (ph: wff): wff; prefix wn: ~ 40;

axiom ax-1: << ph -> ps -> ph >>

(wi ph (wi ps ph));

axiom ax-2: << (ph -> ps -> ch) -> (ph -> ps) -> ph -> ch >>

(wi (wi ph (wi ps ch)) (wi (wi ph ps) (wi ph ch)));

axiom ax-3: << (~ph -> ~ps) -> ps -> ph >>

(wi (wi (wn ph) (wn ps)) (wi ps ph));

axiom ax-mp:

<< ph >> ph ->

<< ph -> ps >> (wi ph ps) ->

<< ps >> ps;

theorem a1i: << ph >> ph -> << ps -> ph >> (wi ps ph);

def wb (ph ps: wff): wff :=

<< ~((ph -> ps) -> ~(ps -> ph)) >>

(wn (wi (wi ph ps) (wn (wi ps ph)))) {

infix wb: <-> 20;

theorem bi1: << (ph <-> ps) -> ph -> ps >>

(wi (wb ph ps) (wi ph ps));

theorem bi2: << (ph <-> ps) -> ps -> ph >>

(wi (wb ph ps) (wi ps ph));

theorem bi3: << (ph -> ps) -> (ps -> ph) -> (ph <-> ps) >>

(wi (wi ph ps) (wi (wi ps ph) (wb ph ps)));

}

def wa (ph ps: wff): wff := << ~(ph -> ~ps) >> (wn (wi ph (wn ps))) {

infix wa: /\ 20;

theorem df-an: << (ph /\ ps) <-> ~(ph -> ~ps) >>

(wb (wa ph ps) (wn (wi ph (wn ps))));

}

def wtru (bound p: wff): wff := << p <-> p >> (wb p p) {

notation wtru := << T. >>;

theorem df-tru: << T. <-> (ph <-> ph) >> (wb wtru (wb ph ph));

}

pure nonempty sort set;

bound x y z w: set;

term wal (x: set) (ph: wff x): wff; prefix wal: A. 30;

def wex (x: set) (ph: wff x): wff :=

<< ~A. x ~ph >> (wn (wal x (wn ph))) {

prefix wex: E. 30;

theorem df-ex: << E. x ph <-> ~A. x ~ph >>

(wb (wex x ph) (wn (wal x (wn ph))));

}

axiom ax-gen: << ph >> ph -> << A. x ph >> (wal x ph);

axiom ax-4: << A. x (ph -> ps) -> A. x ph -> A. x ps >>

(wi (wal x (wi ph ps)) (wi (wal x ph) (wal x ps)));

axiom ax-5 (ph: wff): << ph -> A. x ph >> (wi ph (wal x ph));

var a b c: set;

term weq (a b: set): wff;

term wel (a b: set): wff;

{

local infix weq: = 50;

local infix wel: e. 40;

def weu (x: set) (ph: wff x): wff :=

<< E. y A. x (ph <-> x = y) >>

(wex y (wal x (wb ph (weq x y)))) {

prefix weu: E! 30;

theorem df-eu: << E! x ph <-> E. y A. x (ph <-> x = y) >>

(wb (weu x ph) (wex y (wal x (wb ph (weq x y)))));

}

axiom ax-6: << E. x x = a >> (wex x (weq x a));

axiom ax-7: << a = b -> a = c -> b = c >>

(wi (weq a b) (wi (weq a c) (weq b c)));

axiom ax-8: << a = b -> a e. c -> b e. c >>

(wi (weq a b) (wi (wel a c) (wel b c)));

axiom ax-9: << a = b -> c e. a -> c e. b >>

(wi (weq a b) (wi (wel c a) (wel c b)));

axiom ax-10: << ~A. x ph -> A. x ~ A. x ph >>

(wi (wn (wal x ph)) (wal x (wn (wal x ph))));

axiom ax-11: << A. x A. y ph -> A. y A. x ph >>

(wi (wal x (wal y ph)) (wal y (wal x ph)));

axiom ax-12 (ph: wff y): << x = y -> A. y ph -> A. x (x = y -> ph) >>

(wi (weq x y) (wi (wal y ph) (wal x (wi (weq x y) ph))));

axiom ax-ext: << A. x (x e. a <-> x e. b) -> a = b >>

(wi (wal x (wb (wel x a) (wel x b))) (weq a b));

axiom ax-rep (ph: wff y z):

<< A. y E. x A. z (ph -> z = x) ->

E. x A. z (z e. x <-> E. y (y e. a /\ ph)) >>

(wi (wal y (wex x (wal z (wi ph (weq z x)))))

(wex x (wal z (wb (wel z x) (wex y (wa (wel y a) ph))))));

axiom ax-pow: << E. x A. y (A. z (z e. y -> z e. a) -> y e. x) >>

(wex x (wal y (wi (wal z (wi (wel z y) (wel z a))) (wel y x))));

axiom ax-un: << E. x A. y (E. z (y e. z /\ z e. a) -> y e. x) >>

(wex x (wal y (wi (wex z (wa (wel y z) (wel z a))) (wel y x))));

axiom ax-reg:

<< E. x x e. a -> E. x (x e. a /\ A. y (y e. x -> ~ y e. a)) >>

(wi (wex x (wel x a))

(wex x (wa (wel x a) (wal y (wi (wel y x) (wn (wel y a)))))));

axiom ax-inf:

<< E. x (a e. x /\ A. y (y e. x -> E. z (y e. z /\ z e. x))) >>

(wex x (wa (wel a x) (wal y (wi (wel y x)

(wex z (wa (wel y z) (wel z x)))))));

axiom ax-ac:

<< E. x A. y (y e. a -> E. z z e. y ->

E! z (z e. y /\ E. w (w e. x /\ y e. w /\ z e. w))) >>

(wex x (wal y (wi (wel y a) (wi (wex z (wel z y))

(weu z (wa (wel z y) (wex w

(wa (wa (wel w x) (wel y w)) (wel z w)))))))));

}

nonempty sort class;

var A B: class*;

term cab (x: set) (ph: wff x): class;

term welc (a: set) (A: class): wff;

notation cab (x: set) (ph: wff x) := << { x | ph } >>;

{

local infix welc: e. 50;

axiom ax-8b: << a = b -> a e. A -> b e. A >>

(wi (weq a b) (wi (welc a A) (welc b A)));

axiom ax-clab: << x e. {x | ph} <-> ph >>

(wb (welc x (cab x ph)) ph);

def wceq (A B: class): wff :=

<< A. x (x e. A <-> x e. B) >>

(wal x (wb (welc x A) (welc x B))) {

infix wceq: = 50;

theorem df-ceq: << A = B <-> A. x (x e. A <-> x e. B) >>

(wb (wceq A B) (wal x (wb (welc x A) (welc x B))));

}

def cv (a: set): class := << {x | wel x a} >>

(cab x (wel x a)) {

coercion cv: set -> class;

theorem df-cv: << a e. b <-> wel a b >>

(wb (welc a (cv b)) (wel a b));

}

}

def wcel (A B: class): wff :=

<< E. x (x = A /\ welc x B) >>

(wex x (wa (wceq (cv x) A) (welc x B))) {

infix wcel: e. 50;

theorem df-cel: << A e. B <-> E. x (x = A /\ welc x B) >>

(wb (wcel A B) (wex x (wa (wceq (cv x) A) (welc x B))));

}

ookami

On Wednesday, February 20, 2019 at 12:32:56 UTC+1, Mario Carneiro wrote:

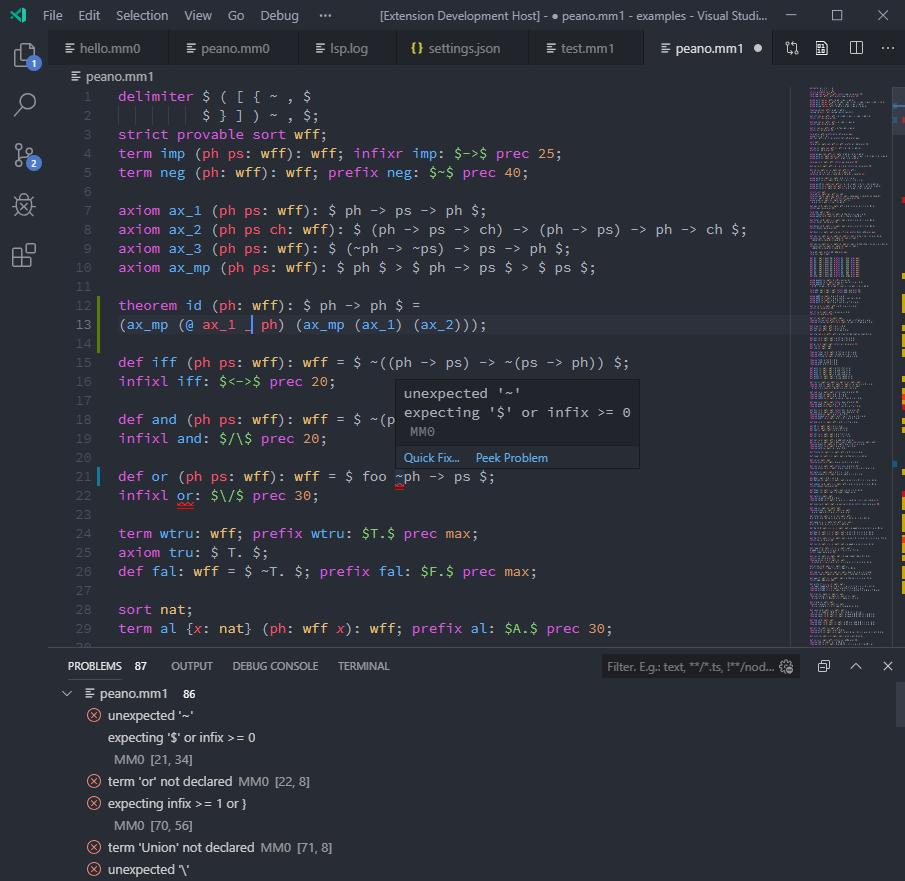

I'm experimenting with a new language, Metamath Zero, an amalgamation of Metamath, Ghilbert and Lean syntax. The goal is to balance human readability and ease of writing a verifier, while also making some attempts to patch the bugs in metamath soundness (not that it's wrong, but there is a lot of soundness justification currently happening outside the metamath verifier kernel). Without giving too much explanation of what the syntax means (it's all fictional syntax at the moment, there is no parser yet), I would like to use some of you as guinea pigs for the "readability" aspect. How obvious is it what things mean? I will put some more explanatory comments at the end.

nonempty sort wff;

var ph ps ch: wff*;

term wi (ph ps: wff): wff; infixr wi: -> 25;

term wn (ph: wff): wff; prefix wn: ~ 40;

axiom ax-1: << ph -> ps -> ph >>

(wi ph (wi ps ph));

axiom ax-2: << (ph -> ps -> ch) -> (ph -> ps) -> ph -> ch >>

(wi (wi ph (wi ps ch)) (wi (wi ph ps) (wi ph ch)));

axiom ax-3: << (~ph -> ~ps) -> ps -> ph >>

(wi (wi (wn ph) (wn ps)) (wi ps ph));

axiom ax-mp:

<< ph >> ph ->

<< ph -> ps >> (wi ph ps) ->

<< ps >> ps;

Sauer, Andrew Jacob

--

You received this message because you are subscribed to the Google Groups "Metamath" group.

To unsubscribe from this group and stop receiving emails from it, send an email to metamath+u...@googlegroups.com.

To post to this group, send email to meta...@googlegroups.com.

Visit this group at https://groups.google.com/group/metamath.

For more options, visit https://groups.google.com/d/optout.

Raph Levien

David A. Wheeler

> I'm experimenting with a new language, Metamath Zero, an amalgamation of

> Metamath, Ghilbert and Lean syntax.

automatically converted into it. It looks like that's the case.


--- David A. Wheeler


Mario Carneiro

nonempty sort wff;

var ph ps ch: wff*;

term wi (ph ps: wff): wff; infixr wi: -> 25;

What does the number say? Is it a precedence weight? Is the right-associativity hinted somewhere?

term wn (ph: wff): wff; prefix wn: ~ 40;

axiom ax-1: << ph -> ps -> ph >>

(wi ph (wi ps ph));

Is the line above an interpretation of the human-readable infix, right-associative implication chain within double angle brackets? In UPN (reverse Polish notation) format?

How do you forbid quantification over classes? Does this happen

because it is a new sort (your "nonempty sort class;")?

The problem is, you might end up with something other than ZFC.

term wal (x: set) (ph: wff x): wff; term cab (x: set) (ph: wff x): class;

def wtru (bound p: wff): wff := << p <-> p >> (wb p p) {

notation wtru := << T. >>;

theorem df-tru: << T. <-> (ph <-> ph) >> (wb wtru (wb ph ph));

}

def weu (x: set) (ph: wff x) (bound y: wff): wff :=

<< E. y A. x (ph <-> x = y) >>

(wex y (wal x (wb ph (weq x y)))) {

prefix wex: E! 30;

theorem df-eu: << E! x ph <-> E. y A. x (ph <-> x = y) >>

(wb (weu x ph) (wex y (wal x (wb ph (weq x y)))));

}

Here is a wish for a new system dubbed "Shullivan":

the ability to work directly with infix, etc., with no redundancy

from chevrons. I guess the chevrons are only comments?

On Wed, 20 Feb 2019 06:32:39 -0500, Mario Carneiro <di....@gmail.com> wrote:

> I'm experimenting with a new language, Metamath Zero, an amalgamation of

> Metamath, Ghilbert and Lean syntax.

Syntax should be simple, though "simple" is in the eye of the beholder :-).

> var ph ps ch: wff*;

I don't see why you need a "*" here.

> term wi (ph ps: wff): wff; infixr wi: -> 25;

Why do you need to repeat "wi:" here?

I wonder if adding a "precedence: 25" would help, else the number is a mystery.

> axiom ax-1: << ph -> ps -> ph >>

> (wi ph (wi ps ph));

I see the s-expression below the axiom, but isn't that trivial to re-discover?

I think you should apply "Don't repeat yourself (DRY)", or a verifier will

have to recheck something that shouldn't need to be said at all.

> axiom ax-mp:

>

> << ph >> ph ->

> << ph -> ps >> (wi ph ps) ->

> << ps >> ps;

That's a lot of chevrons, and that seems much harder to follow

than metamath's current approach.

Axioms, in particular, are a big deal. It should be

"as obvious as possible" to the reader that they're correct.

I'm fine with s-expressions, but a lot of people DO NOT understand them.

If you make people input s-expressions, you might lose

some people entirely. In cases where the s-expression can be easily determined,

you shouldn't make a human create them.

You might consider using neoteric expressions instead of s-expressions.

They have the same meaning, but look more like math.

Basically "x(a b c)" means the same thing as "(x a b c)".

More details available here from Readable Lisp (my project):

https://readable.sourceforge.io/

That would convert this:

> (wn (wi (wi ph ps) (wn (wi ps ph))))

into this:

> wn(wi(wi(ph ps) wn(wi(ps ph))))
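The conversion above is purely mechanical. As a minimal sketch (the function names parse_sexpr and to_neoteric are hypothetical, and every list is assumed to start with an atom, as MM0 terms do), rewriting each (f a b ...) as f(a b ...) reproduces the example exactly:

```python
def parse_sexpr(text):
    """Parse an s-expression such as "(wi ph ps)" into nested lists."""
    tokens = text.replace("(", " ( ").replace(")", " ) ").split()
    pos = 0

    def read():
        nonlocal pos
        tok = tokens[pos]
        pos += 1
        if tok != "(":
            return tok  # an atom such as "ph"
        node = []
        while tokens[pos] != ")":
            node.append(read())
        pos += 1  # consume ")"
        return node

    tree = read()
    if pos != len(tokens):
        raise ValueError("trailing tokens after s-expression")
    return tree


def to_neoteric(tree):
    """Rewrite (f a b ...) as f(a b ...) recursively; atoms are unchanged.
    Assumes the head of every list is an atom."""
    if isinstance(tree, str):
        return tree
    head, *args = tree
    return head + "(" + " ".join(to_neoteric(a) for a in args) + ")"


print(to_neoteric(parse_sexpr("(wn (wi (wi ph ps) (wn (wi ps ph))))")))
# prints wn(wi(wi(ph ps) wn(wi(ps ph))))
```

The two forms carry the same tree; only the position of the opening parenthesis moves.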

> theorem bi2: << (ph <-> ps) -> ps -> ph >>

> (wi (wb ph ps) (wi ps ph));

Again, I don't see why you need to expressly state the syntax proof.

It's noise for the human, and the computer can recalculate & check that trivially.

I see infixr. Are you planning to support arbitrary-length expressions of the

same operator, e.g., "( 1 + 2 + 3 + 4 )"?

> * The notation system turned out a bit more complicated than I'd hoped.

> Each "math expression" is stated twice, once in chevrons and once in

> s-expression notation. The intent is that the system actually uses the

> s-expressions to represent the axioms (so there is no parse tree funny

> business), and because they form part of the trust base they are displayed

> to the user. The notation in the chevrons is checked only in the reverse

> direction - the s-expression is printed and compared to the given string.

> (In an IDE there would be a mode where you only type the notation and the

> computer generates the s-expression.) There is still a danger of ambiguous

> parsing, but at least you can see the parse directly in the case of a

> mismatch, and the worst effect of a bad parse is that the theorem being

> proven is not what the user thought - the ambiguity can't be further

> exploited in the proof.

Wouldn't it be simpler to simply require that you cannot add a new

axiom or definition if it results in a syntactic ambiguity?

I *think* that would get rid of the "funny business" while eliminating duplicate

declarations. But that depends on what "funny business" you mean,

and I'm not sure I know what you're referring to.

> You might wonder where the proofs are. They go in a separate file, not

> human readable. The idea is that this file contains all the information

> that is necessary to appraise the content and correctness of a theorem, and

> the proof file is just directions to the verifier to produce a proof of the

> claimed theorems.

I think it's important that the proofs include the specific step-by-step instructions

as metamath does now - that makes it trivial to verify by humans and computers.

If it *also* has higher-level instructions (say, to try to reconstruct a proof if some

definitions have changed) that's fine.

On Wed, Feb 20, 2019 at 8:32 AM Mario Carneiro <di....@gmail.com> wrote:

I'm experimenting with a new language, Metamath Zero, an amalgamation of Metamath, Ghilbert and Lean syntax. The goal is to balance human readability and ease of writing a verifier, while also making some attempts to patch the bugs in metamath soundness (not that it's wrong, but there is a lot of soundness justification currently happening outside the metamath verifier kernel). Without giving too much explanation of what the syntax means (it's all fictional syntax at the moment, there is no parser yet), I would like to use some of you as guinea pigs for the "readability" aspect. How obvious is it what things mean? I will put some more explanatory comments at the end.

nonempty sort wff;

Easy to write a verifier: Stop right here.

A large number of keywords will make any verifier complex. Think about being able to declare syntax in the language itself. This will leave the compiler "soft", but will also allow extensions without invalidating the collection. Look at proofs in HOL that can no longer be run today.

In a language I started, almost all statements started with "def" or "var". This allowed for having zillions of keywords within def, but which were not reserved words in the language itself.

In basic definitions, verbose:

typecode wff ::nonempty;

var ph ps ch: wff*;

The : is also a keyword, from what I understand. Style suggestion: use var a b c :wff instead of var a b c: wff. That is, attach the : to the type rather than to one of the variables.

By the way, only one type per variable? Think about adding something like partial types, or borrowing a concept from statically typed languages and declaring interfaces (::).

term wi (ph ps: wff): wff; infixr wi: -> 25;

term wn (ph: wff): wff; prefix wn: ~ 40;

Here I suggest being as verbose as possible, more syntactic than logical.

syntax wi ( ph :wff "->" ps :wff) :wff infix.right 25;

Alternatively:

syntax wi :wff ( ph :wff "->" ps :wff) infix.right 25;

That is, types closest to the name, the definition inside the parentheses, and the operator defined within quotes (escaped by \).

Operators like this, in quotes, allow a bit of language abuse, but they also let you write LaTeX-style operators such as \dot.

The advantage of having a separate command for prefix/infix/suffix precedence is that you can tweak the priorities in advance. But it is asking for confusion. I will not suggest removing the command, but at least have the option to put the priority in the syntax command itself, to encourage it to stay in one place.

@[infixr -> 25] term wi: wff -> wff -> wff;

attribute [infixr -> 25] wi; local attribute [infixr -> 25] wi;

axiom ax-1: << ph -> ps -> ph >>

(wi ph (wi ps ph));

Here a weird suggestion, but... Axioms -> syntax. Then use the command, much like...

syntax ax-1 : "ph -> ps -> ph" :: (wi ph (wi ps ph)) ;

Here : and :: are reused, with a space to the right (without a type), only to denote clear points for the parser.

Or if you find overloading the syntax ugly (and it is), the suggestion above about "def":

def.syntax wi :wff ( ph :wff "->" ps :wff) infix.right 25;

def.axiom ax-1 : "ph -> ps -> ph" :: (wi ph (wi ps ph)) ;

axiom ax-2: << (ph -> ps -> ch) -> (ph -> ps) -> ph -> ch >>

(wi (wi ph (wi ps ch)) (wi (wi ph ps) (wi ph ch)));

axiom ax-3: << (~ph -> ~ps) -> ps -> ph >>

(wi (wi (wn ph) (wn ps)) (wi ps ph));

axiom ax-mp:

<< ph >> ph ->

<< ph -> ps >> (wi ph ps) ->

<< ps >> ps;

theorem a1i: << ph >> ph -> << ps -> ph >> (wi ps ph);

def.theorem a1i: << ph >> ph -> << ps -> ph >> (wi ps ph);

That is, def is effectively a reserved word, and therefore can be specialized via .special. There will be a plain def without specialization for everything else.

Other than that, general suggestions.

- The notion of local blocks, delimited by {}, is good, but will probably clash with other uses. Pay attention to this when building the parser. Starting everything with "def" helps mitigate this problem.

- The notion of separate and "solid" files is very important. I strongly suggest putting this in the language from the first version. A simple file "path/name"; line in the solid file indicates where the next lines go in case of a split; when split, those lines are produced along with a "master" file containing only file.include "path/name"; lines, allowing reconstruction.

- Instead of using << and >>, which certainly already have other uses, I suggest separating parts of syntax with <? and ?>, familiar from XML.

- In syntax, even within <? and ?>, everything that is expected to be fixed must be enclosed in quotation marks, and anything outside the quotation marks has some programmatic meaning:

  def.syntax wi :wff <? "(" ph :wff "->" ps :wff ")" ?> infix.right 25 ;

  def.axiom ax-1 <? ph -> ps -> ph ?> (wi ph (wi ps ph)) ;

  This is more verbose than before, but leaves the parser simultaneously simpler and more flexible. In the first case the compiler knows that it is defining a thing, given by the quotation marks. In the second case everything is expected, and should come from the construction by s-expressions.

Looking at this now... (wi ph (wi ps ph)) will create a lot of "(" and ")" that do not exist in <? ph -> ps -> ph ?>. You realize that, I guess.

Mario Carneiro

On Wed, 20 Feb 2019 06:32:39 -0500, Mario Carneiro <di....@gmail.com> wrote:

> I'm experimenting with a new language, Metamath Zero, an amalgamation of

> Metamath, Ghilbert and Lean syntax. The goal is to balance human

> readability and ease of writing a verifier, while also making some attempts

> to patch the bugs in metamath soundness (not that it's wrong, but there is

> a lot of soundness justification currently happening outside the metamath

> verifier kernel).

If possible, I think it'd be good to have an additional reason to create a new language,

or at least argue why similar results can't be done with tweaks to

existing mechanisms like $j.

1. Putting more soundness justifications into the underlying verifier

is indeed a worthy goal. But most of set.mm is proofs, not definitions,

and there are very few "axioms" in the traditional sense... so it's not clear

that all the verifiers need to check that so much.

I can also imagine tweaking existing systems instead of creating a new language.

2. Making the input "more readable" is worthwhile, but I'm not sure that

by *itself* this is enough. Making it so that we can be more flexible

about parentheses would *certainly* be a nice improvement.

Supporting ph /\ ps /\ ch /\ ... would be nice as well.

Expanding on #2: If it can manage to easily support arbitrary-length arguments like

ph /\ ps /\ ch /\ th /\ ... then I can suddenly see that it's able to do something

that Metamath today can't. Is that easy? Worth it?

I don't know, but that *would* go beyond our current setup.

No matter what, I recommend that you do NOT depend

too much on precedence, even when available.

"Developer beliefs about binary operator precedence (part 1)” by Derek M. Jones

http://www.knosof.co.uk/cbook/accu06.html

"a survey of developers at the 2006 ACCU conference to determine if they correctly applied the precedence and associativity of the binary operators common to C, C++, C#, Java, Perl, and PHP. In this experiment only 66.7% of the answers were correct (standard deviation 8.8, poorest performer 45.2% correct, best performer 80.5% correct); this was not much better than random chance (50%). Even many widely-used operator pairs had relatively poor results; only 94% of the problems were correctly solved when they combined * and +, 92% when they combined * and -, and 69% when they combined / and +."

Professional mathematicians probably do get precedence correct, but where possible I'd like the results to be understood by others.

When we developed my "Readable Lisp" we supported infix but decided to NOT support precedence. The trade-offs are very different here, so I don't think that reasoning really applies here. But my point is that supporting lots of precedence levels isn't really, by itself, a big win.

> * "def" declares a definition. Different from both Ghilbert and Metamath,

> definitions here are "limited lifetime unfolded". Each def has a block

> after it containing one or more theorems about the definition. These

> theorems are proven where the definition is replaced by the definiendum,

> and once the block is closed these theorems are the only access to the

> definition. (This enables some information privacy if desired.) See wb for

> example, which uses characterizing theorems instead of an iff.

That makes sense. However, in some cases you will want a way to say

"don't use this theorem" so you can prove statements that you don't use.

But what about proving theorems that have to "reach into" multiple definitions to work?

You might need a way to express that you're intentionally breaking the rules.

> * The notation system turned out a bit more complicated than I'd hoped.

> Each "math expression" is stated twice, once in chevrons and once in

> s-expression notation.

As I noted earlier, stating things twice is undesirable,

and I don't think it's necessary in this case.

David A. Wheeler

> The chevrons are "checked comments", if you like. My idea for avoiding

> parsing is to take the s-expression, print it, and compare the result

> token-for-token with the input string (ignoring whitespace). There is still

> some potential for abuse if you write an ambiguous grammar, where the

> danger is that an ambiguous expression is disambiguated in the s-expression

> in a way other than what the reader expected, but the s-expression is at

> least available for reference.

opportunity for mischief than help. Best to avoid them.
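The print-and-compare check described in the quoted paragraph above can be sketched in a few dozen lines of Python. This is a minimal illustration under stated assumptions, not the MM0 implementation: the notation table (wi, wn, wb, wa with the precedences declared in the post), the tokenizer, and the helper names parse_sexpr, show and check are all hypothetical. The printer adds parentheses only where precedence and associativity require them, and the comparison is token-for-token with whitespace ignored, so no parser for the chevron notation is needed.

```python
import re

# Hypothetical notation table modelled on the post's declarations
# (infixr wi: -> 25; prefix wn: ~ 40; infix wb: <-> 20; infix wa: /\ 20).
# Each entry maps a term name to (kind, token, precedence).
NOTATION = {
    "wi": ("infixr", "->", 25),
    "wn": ("prefix", "~", 40),
    "wb": ("infix", "<->", 20),
    "wa": ("infix", "/\\", 20),
}
MAX_PREC = 1000  # forces parentheses around arguments of terms without notation

# Multi-character operators first, then identifiers; any other non-space
# character becomes a token of its own, so nothing is silently skipped.
TOKEN = re.compile(r"<->|->|/\\|[A-Za-z0-9_.]+|\S")


def parse_sexpr(text):
    """Parse an s-expression such as "(wi ph ps)" into nested lists."""
    tokens = text.replace("(", " ( ").replace(")", " ) ").split()
    pos = 0

    def read():
        nonlocal pos
        tok = tokens[pos]
        pos += 1
        if tok != "(":
            return tok
        node = []
        while tokens[pos] != ")":
            node.append(read())
        pos += 1  # consume ")"
        return node

    tree = read()
    if pos != len(tokens):
        raise ValueError("trailing tokens after s-expression")
    return tree


def show(node, min_prec=0):
    """Print a tree, adding parentheses only where precedence requires them."""
    if isinstance(node, str):
        return node
    head, *args = node
    if head not in NOTATION:
        # No notation declared: keep the s-expression form for this node.
        return "(" + " ".join([head] + [show(a, MAX_PREC) for a in args]) + ")"
    kind, tok, prec = NOTATION[head]
    if kind == "prefix":
        (arg,) = args
        text = tok + show(arg, prec)
    else:
        lhs, rhs = args
        # infixr lets the right operand sit at the same level, infixl the left.
        left_min = prec if kind == "infixl" else prec + 1
        right_min = prec if kind == "infixr" else prec + 1
        text = f"{show(lhs, left_min)} {tok} {show(rhs, right_min)}"
    return f"({text})" if prec < min_prec else text


def check(chevron, sexpr):
    """Print the s-expression and compare it token-for-token with the
    chevron text (whitespace ignored). Returns (matches, printed_form)."""
    printed = show(parse_sexpr(sexpr))
    return TOKEN.findall(printed) == TOKEN.findall(chevron), printed


print(check("ph -> ps -> ph", "(wi ph (wi ps ph))"))
# Deliberately mismatched pair: wi in the tree, <-> in the chevrons.
print(check("(ph /\\ ps) <-> ~(ph -> ~ps)", "(wi (wa ph ps) (wn (wi ph (wn ps))))"))
```

The last call shows what the check buys: a pair whose s-expression uses wi where the chevrons say <-> prints as (ph /\ ps) -> ~(ph -> ~ps) and is rejected. As the quote says, this does not rule out an ambiguous grammar; it only guarantees that the displayed string is one printing of the tree the verifier actually uses.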

> Translating from metamath to mm0 is a bit trickier, because some annotation

> information in the mm0 file is not present in mm files. If you skip some of

> the foundational material (roughly the contents of this file), translating

> a set.mm-like file should be straightforward and automatable.

It's how $j statements work now.

> I didn't demonstrate this in the file, but the chevrons are optional. So

> you could just write:

>

> axiom ax-mp: ph -> (wi ph ps) -> ps;

> and it would be understood just as well. In such a small axiom as this that

> might be a reasonable thing to do (and in particular for single wffs the <<

> ph >> ph business just looks silly). However the s-expressions are NOT

> optional. This is one of the things that would be omitted in the "less

> verbose" version of the file - you might just write

>

> axiom ax-mp: << ph >> -> << ph -> ps >> -> << ps >>;

>

> and the compiler, which has a proper parser, would produce the

> s-expressions for you.

min $e |- ph $.

maj $e |- ( ph -> ps ) $.

ax-mp $a |- ps $.

In current Metamath I have a name for every part (so I can refer to each one),

I can see the syntax directly, and I don't have a festoon of << ... >> everywhere

that makes it hard to see what's acting. Chevrons are TERRIBLE

when there are arrows; they look too much alike.

Also, don't you want -> to mean implication? It seems to be used in two different ways here.

> The problem with metamath's current approach is that it's subtly unsafe, as

> we saw recently with the example of syntax ambiguity causing proofs of

> false. Metamath treats statements as lists of symbols, which means that you

> can do some shenanigans with ambiguity if the grammar supports it. To

> prevent this, the verifier here works entirely with trees (encoded as

> s-expressions). So the worst that can happen is that a theorem doesn't say

> what you think, it can't be exploited in the middle of a proof.

That drops MIU, but I think supporting MIU isn't really necessary.

I disagree that the s-expressions need to be exposed to the poor hapless user.

The verifier can trivially figure out what -> refers to; users should not

need to look up "wi" unless they're debugging a verifier.

Re: the shenanigans, those have already been dealt with: demand an unambiguous

parse by some criterion. If you can't show it's unambiguous, then it's not okay.

I think that'd be reasonable here too.

> People shouldn't have to write s-expressions

be there. Which I haven't seen yet.

S-expressions can be valuable, obviously. But we should

not ask people to read OR write "(wi ph ps)" when they

could use "ph -> ps".

> I don't like that spaces are significant with this layout.

> > theorem bi2: << (ph <-> ps) -> ps -> ph >>

> > > (wi (wb ph ps) (wi ps ph));

> >

> > Again, I don't see why you need to expressly state the syntax proof.

> > It's noise for the human, and the computer can recalculate & check that

> > trivially.

> >

>

> Not if the verifier doesn't have a parser! I'm worried that if I add a

> parser this will be a large fraction of the code, and it will be a large

> moving part that could contribute to uncertainty that the program has no

> bugs. (There will be a parser of course, for the code syntax itself, but it

> will be statically known so you can write it in a few functions.)

But I think you're trading away too much. If your goal is readability,

then "(wi ph ps)" is *terrible* compared to "ph -> ps".

Metamath maximizes simple parsing, and it manages to support ph -> ps.

I don't think you need to go that far backwards.

A relatively simple tree parser should work just fine.

> > I see infixr. Are you planning to support arbitrary-length expressions of

> > the same operator, e.g., "( 1 + 2 + 3 + 4 )"?

> >

>

> That would be handled by declaring "infixl cadd: + 60;" to say that + is

> left associative with precedence 60. Then "1 + 2 + 3 + 4" would be the

> printed form of (cadd (cadd (cadd c1 c2) c3) c4).
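The following is a minimal precedence-climbing parser in Python. It shows how declarations like the `infixl`/`infixr` ones above could be turned into s-expressions. Several details are assumptions made for this sketch: the `OPS` table (including a `cmul` at 70), the rule that numerals become constants `c1`, `c2`, ..., and the function names. This is not the mm0 compiler.

```python
# Hypothetical operator table mirroring declarations such as
#   infixr wi: -> 25;   infixl cadd: + 60;   infixl cmul: * 70;
OPS = {
    "->": ("wi", 25, "right"),
    "+": ("cadd", 60, "left"),
    "*": ("cmul", 70, "left"),
}


def parse(tokens: list[str]):
    """Parse space-separated tokens into nested tuples (s-expressions)."""
    pos = 0

    def atom():
        nonlocal pos
        if pos >= len(tokens):
            raise SyntaxError("unexpected end of input")
        tok = tokens[pos]
        pos += 1
        if tok == "(":
            t = expr(0)
            if pos >= len(tokens) or tokens[pos] != ")":
                raise SyntaxError("expected ')'")
            pos += 1
            return t
        # Numerals become constants c1, c2, ...; anything else is a variable.
        return "c" + tok if tok.isdigit() else tok

    def expr(min_prec: int):
        nonlocal pos
        lhs = atom()
        while pos < len(tokens) and tokens[pos] in OPS:
            name, prec, assoc = OPS[tokens[pos]]
            if prec < min_prec:
                break
            pos += 1
            # Left-assoc: the right operand must bind strictly tighter.
            # Right-assoc: an operator of the same level may nest on the right.
            rhs = expr(prec + 1 if assoc == "left" else prec)
            lhs = (name, lhs, rhs)
        return lhs

    result = expr(0)
    if pos != len(tokens):
        raise SyntaxError("trailing tokens")
    return result
```

With this table, `parse("1 + 2 + 3 + 4".split())` yields `("cadd", ("cadd", ("cadd", "c1", "c2"), "c3"), "c4")`. Meanwhile, `parse("ph -> ps -> ph".split())` yields `("wi", "ph", ("wi", "ps", "ph"))`, which matches the s-expression given for ax-1.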

current Metamath.

> > Wouldn't it be simpler to simply require that you cannot add a new

> > axiom or definition if it results in a syntactic ambiguity?

> > I *think* that would get rid of the "funny business" while eliminating

> > duplicate declarations. But that depends on what "funny business" you mean,

> > and I'm not sure I know what you're referring to.

> >

>

> How will you know if new notation (not axiom/definition) results in a

> syntax ambiguity? The general problem is undecidable. What I want to avoid

> is a gnarly grammar with a complicated ambiguity being exploited somewhere

> deep in the proof (which isn't even being displayed) and causing something

> to be provable that shouldn't be.

If you use a traditional LR parser, then make a table, and if you have shift/reduce or

reduce/reduce conflicts with N tokens of lookahead (whatever N is) that the precedence rules don't resolve,

treat it as ambiguous. Done. You don't need - or want - infinite lookahead.
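Below is a minimal Python sketch of such a table-based check. It uses the simplest variant, LR(0), rather than LR(k) with lookahead: it builds the LR(0) automaton and reports every state that contains a shift/reduce or reduce/reduce conflict. A conflict-free table guarantees the grammar is unambiguous. A conflict does not prove ambiguity, though; it only means this check can't certify the grammar, and LR(0) rejects more grammars than LR(k) would. The grammar encoding and the name `lr0_conflicts` are assumptions for illustration.

```python
def lr0_conflicts(prods, start):
    """Return (kind, state) pairs for every conflict in the LR(0) automaton.

    prods: list of (lhs, rhs) pairs, rhs a tuple of symbols; any symbol that
    never appears as an lhs is treated as a terminal.
    """
    prods = [("S'", (start, "$"))] + list(prods)  # augmented rule with end marker
    nonterms = {lhs for lhs, _ in prods}

    def next_sym(item):
        p, d = item
        rhs = prods[p][1]
        return rhs[d] if d < len(rhs) else None  # None: the dot is at the end

    def closure(items):
        items, todo = set(items), list(items)
        while todo:
            sym = next_sym(todo.pop())
            if sym in nonterms:
                for q, (lhs, _) in enumerate(prods):
                    if lhs == sym and (q, 0) not in items:
                        items.add((q, 0))
                        todo.append((q, 0))
        return frozenset(items)

    def goto(state, sym):
        return closure({(p, d + 1) for p, d in state if next_sym((p, d)) == sym})

    start_state = closure({(0, 0)})
    seen, todo, conflicts = {start_state}, [start_state], []
    while todo:
        state = todo.pop()
        reduces = [item for item in state if next_sym(item) is None]
        shifts = {next_sym(i) for i in state} - nonterms - {None}
        if reduces and shifts:
            conflicts.append(("shift/reduce", state))
        if len(reduces) > 1:
            conflicts.append(("reduce/reduce", state))
        for sym in {next_sym(i) for i in state} - {None}:
            nxt = goto(state, sym)
            if nxt not in seen:
                seen.add(nxt)
                todo.append(nxt)
    return conflicts
```

The unbracketed grammar `E -> E -> E | ph` produces one shift/reduce conflict. The fully bracketed `E -> ( E -> E ) | ph` produces none.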

Parsing is well-studied.

Most languages have fixed operations instead of adding operations as they go.

I've not tried to parse a language that adds operators with precedence values,

so I don't know how difficult it is. Prolog does it, though, so I suspect it's not too hard.

Another approach would be to put all the syntax definitions in a separate file,

and use that to generate the parser to be used later.

That can be fast; most of the time the syntax definitions won't change, so you can

cache the results & regenerate the table/code only when the definitions change.

That would be an entirely conventional way to implement a parser, so there

are lots of tools you can use (which would reduce the code to be written).
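The regenerate-only-on-change idea can be sketched in a few lines of Python by keying a cache on a hash of the syntax file's contents. This is a sketch under two assumptions. First, `generate_parser` is a hypothetical stand-in for a real parser generator. Second, the cache here is an in-memory dict; a real tool would more likely write the generated table to disk under the same hash.

```python
import hashlib

_cache: dict[str, dict] = {}


def generate_parser(syntax_text: str) -> dict:
    """Hypothetical stand-in for an expensive parser generator.

    It only collects lines such as 'infixr wi: -> 25;' into an operator table.
    """
    table = {}
    for line in syntax_text.splitlines():
        parts = line.strip().rstrip(";").split()
        if len(parts) == 4 and parts[0] in ("infixl", "infixr"):
            kind, name, token, prec = parts
            assoc = "left" if kind == "infixl" else "right"
            table[token] = (name.rstrip(":"), int(prec), assoc)
    return table


def get_parser(syntax_text: str) -> dict:
    """Regenerate only when the syntax definitions change, keyed by a content hash."""
    key = hashlib.sha256(syntax_text.encode("utf-8")).hexdigest()
    if key not in _cache:
        _cache[key] = generate_parser(syntax_text)
    return _cache[key]
```

Calling `get_parser` twice with the same text returns the same cached table. Adding a line such as `infixl cmul: * 70;` changes the hash and triggers regeneration.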

Even easier: just drop precedence entirely. You don't need it;

people often add "unnecessary" parentheses for clarity.

If your goal is simplicity, that would be simple.

Implementing precedence is a lot of work for a mechanism that is

rarely used in practice. At the least, you could start *without* precedence,

and then add it later if desired.
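Here is a minimal Python sketch of the no-precedence option. Every binary connective must carry its own parentheses, as in set.mm's `( ph -> ps )`, so each string has at most one parse and no precedence table is needed. The sketch assumes Metamath-style space-separated tokens; the connective table and the name `parse_parenthesized` are hypothetical.

```python
# Hypothetical Metamath-style token table: every binary connective must be
# written inside its own "( ... )", so no precedence table is needed.
INFIX = {"->": "wi", "<->": "wb", "/\\": "wa"}
PREFIX = {"-.": "wn"}


def parse_parenthesized(tokens: list[str]):
    """Parse a fully parenthesized formula into nested tuples (s-expressions)."""
    pos = 0

    def term():
        nonlocal pos
        if pos >= len(tokens):
            raise SyntaxError("unexpected end of input")
        tok = tokens[pos]
        pos += 1
        if tok in PREFIX:
            return (PREFIX[tok], term())
        if tok == "(":
            lhs = term()
            if pos >= len(tokens) or tokens[pos] not in INFIX:
                raise SyntaxError("expected a binary connective")
            op = INFIX[tokens[pos]]
            pos += 1
            rhs = term()
            if pos >= len(tokens) or tokens[pos] != ")":
                raise SyntaxError("expected ')'")
            pos += 1
            return (op, lhs, rhs)
        return tok  # a variable such as ph

    result = term()
    if pos != len(tokens):
        raise SyntaxError("trailing tokens: missing parentheses?")
    return result
```

`parse_parenthesized("( ph -> ( ps -> ph ) )".split())` gives `("wi", "ph", ("wi", "ps", "ph"))`. By contrast, `ph -> ps` without the outer parentheses is rejected rather than guessed at.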

I can see using s-expressions for the *proofs* but that is a different matter.

Anyway, you asked for comments, I hope you find these interesting.

--- David A. Wheeler

David A. Wheeler

> Everyone knows that + is lower precedence than *

You & I know that. But only 94% of professional programmers with 5+ years of experience

got the correct answer when surveyed at a conference.

And random chance gives you 50%.

Sigh.

> If you have a solution to:

>

> * Simple static parser

> * Readable math

> * Guaranteed unambiguity

> * Minimal parsing "surprises"

LR parsers are extensively studied & implemented, let's start there.

* Simple: If you make syntax a separate file, you can generate a simple static parser.

* Readable math: Most math systems use LR or LL parsers, so you're no worse off than most.

* Guaranteed unambiguity: They *require* unambiguity or a tie-breaking rule - so if you say that

only precedence rules can break ties, that gives you unambiguity. Basically, treat a shift/reduce or reduce/reduce conflict as an error if precedence doesn't break it.

* Minimal parsing "surprises": These are very widely used, so they shouldn't surprise anyone.
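The tie-breaking rule in the list above can be sketched in Python. yacc/bison resolve a shift/reduce conflict by comparing two precedences: that of the rule being reduced (normally the rule's operator token) and that of the lookahead token. When the two are equal, they fall back to associativity. The code below mimics that rule with one difference, as suggested above: where bison would only warn and default to shifting, it raises an error. The `PREC` table is hypothetical.

```python
# Hypothetical precedence declarations: token -> (level, associativity).
PREC = {
    "->": (25, "right"),
    "=": (50, "nonassoc"),
    "+": (60, "left"),
    "*": (70, "left"),
}


class UnresolvedConflict(Exception):
    """Raised when precedence declarations do not decide between shift and reduce."""


def resolve_shift_reduce(rule_op: str, lookahead: str) -> str:
    """Decide the conflict in a state holding  E -> E rule_op E .  with `lookahead` next."""
    if rule_op not in PREC or lookahead not in PREC:
        # bison would warn and shift here; this sketch rejects the grammar instead
        raise UnresolvedConflict(f"no precedence for {rule_op!r} vs {lookahead!r}")
    rule_level, _ = PREC[rule_op]
    look_level, assoc = PREC[lookahead]
    if look_level > rule_level:
        return "shift"   # 1 + 2 * 3 means 1 + (2 * 3)
    if look_level < rule_level:
        return "reduce"  # 1 * 2 + 3 means (1 * 2) + 3
    if assoc == "left":
        return "reduce"  # 1 + 2 + 3 means (1 + 2) + 3
    if assoc == "right":
        return "shift"   # ph -> ps -> ch means ph -> (ps -> ch)
    # non-associative (like %nonassoc): chaining such as a = b = c is not allowed
    raise UnresolvedConflict(f"{lookahead!r} is non-associative")
```

For example, `resolve_shift_reduce("+", "*")` returns `"shift"` and `resolve_shift_reduce("+", "+")` returns `"reduce"`. A token with no declared precedence makes the grammar an error instead of being silently resolved.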

You could make k=5 (that is, LR(5)).

I'm guessing you believe that's not enough. Can you help me understand why?

--- David A. Wheeler

Mario Carneiro

On Wed, 20 Feb 2019 22:47:43 -0500, Mario Carneiro <di....@gmail.com> wrote:

> Translating from metamath to mm0 is a bit trickier, because some annotation

> information in the mm0 file is not present in mm files. If you skip some of

> the foundational material (roughly the contents of this file), translating

> a set.mm-like file should be straightforward and automatable.

It's always possible to slip in some info in comments if all are agreeable.

It's how $j statements work now.

> I didn't demonstrate this in the file, but the chevrons are optional. So

> you could just write:

>

> axiom ax-mp: ph -> (wi ph ps) -> ps;

That switch back-and-forth is rather confusing for readability.

> and it would be understood just as well. In such a small axiom as this that

> might be a reasonable thing to do (and in particular for single wffs the <<

> ph >> ph business just looks silly). However the s-expressions are NOT

> optional. This is one of the things that would be omitted in the "less

> verbose" version of the file - you might just write

>

> axiom ax-mp: << ph >> -> << ph -> ps >> -> << ps >>;

>

> and the compiler, which has a proper parser, would produce the

> s-expressions for you.

Notationally this seems much worse than current Metamath:

min $e |- ph $.

maj $e |- ( ph -> ps ) $.

ax-mp $a |- ps $.

In current Metamath I have a name for every part (so I can refer to each one),

I can see the syntax directly, and I don't have a festoon of << ... >> everywhere

that makes it hard to see what's acting. Chevrons are TERRIBLE

when there are arrows, they look too much alike.

Also, don't you want -> to mean implication? It seems to be used in two different ways here.

> The problem with metamath's current approach is that it's subtly unsafe, as

> we saw recently with the example of syntax ambiguity causing proofs of

> false. Metamath treats statements as lists of symbols, which means that you

> can do some shenanigans with ambiguity if the grammar supports it. To

> prevent this, the verifier here works entirely with trees (encoded as

> s-expressions). So the worst that can happen is that a theorem doesn't say

> what you think, it can't be exploited in the middle of a proof.

I *agree* with you that processing-as-trees is the better approach.

That drops MIU, but I think supporting MIU isn't really necessary.

I disagree that the s-expressions need to be exposed to the poor hapless user.

The verifier can trivially figure out what -> refers to; users should not

need to look up "wi" unless they're debugging a verifier.

Re: The shenanigans have already been dealt with: demand an unambiguous

parse by some criterion. If you can't show it's unambiguous, then it's not okay.

I think that'd be reasonable here too.

> > theorem bi2: << (ph <-> ps) -> ps -> ph >>

> > > (wi (wb ph ps) (wi ps ph));

> >

> > Again, I don't see why you need to expressly state the syntax proof.

> > It's noise for the human, and the computer can recalculate & check that

> > trivially.

> >

>

> Not if the verifier doesn't have a parser! I'm worried that if I add a

> parser this will be a large fraction of the code, and it will be a large

> moving part that could contribute to uncertainty that the program has no

> bugs. (There will be a parser of course, for the code syntax itself, but it

> will be statically known so you can write it in a few functions.)

I agree that you don't want an overly *complicated* parser.

But I think you're trading away too much. If your goal is readability,

then "(wi ph ps)" is *terrible* compared to "ph -> ps".

Metamath maximizes simple parsing, and it manages to support ph -> ps.

I don't think you need to go that far backwards.

A relatively simple tree parser should work just fine.

> > Wouldn't it be simpler to simply require that you cannot add a new

> > axiom or definition if it results in a syntactic ambiguity?

> > I *think* that would get rid of the "funny business" while eliminating

> > duplicate declarations. But that depends on what "funny business" you mean,

> > and I'm not sure I know what you're referring to.

> >

>

> How will you know if new notation (not axiom/definition) results in a

> syntax ambiguity? The general problem is undecidable. What I want to avoid

> is a gnarly grammar with a complicated ambiguity being exploited somewhere

> deep in the proof (which isn't even being displayed) and causing something

> to be provable that shouldn't be.

If you are trying to solve the general problem you're trying too hard :-).

If you use a traditional LR parser, then make a table, and if you have shift/reduce or

reduce/reduce conflicts with N tokens of lookahead (whatever N is) that the precedence rules don't resolve,

treat it as ambiguous. Done. You don't need - or want - infinite lookahead.

Parsing is well-studied.

Most languages have fixed operations instead of adding operations as they go.

I've not tried to parse a language that adds operators with precedence values,

so I don't know how difficult it is. Prolog does it, though, so I suspect it's not too hard.

Another approach would be to put all the syntax definitions in a separate file,

and use that to generate the parser to be used later.

That can be fast; most of the time the syntax definitions won't change, so you can

cache the results & regenerate the table/code only when the definitions change.

That would be an entirely conventional way to implement a parser, so there

are lots of tools you can use (which would reduce the code to be written).

Even easier: just drop precedence entirely. You don't need it;

people often add "unnecessary" parentheses for clarity.

If your goal is simplicity, that would be simple.

Implementing precedence is a lot of work for a mechanism that is

rarely used in practice. At the least, you could start *without* precedence,

and then add it later if desired.

On Wed, 20 Feb 2019 23:16:33 -0500, Mario Carneiro <di....@gmail.com> wrote:

> Everyone knows that + is lower precedence than *

Well, no.

You & I know that. But only 94% of professional programmers with 5+ years of experience

got the correct answer when surveyed at a conference.

And random chance gives you 50%.

> If you have a solution to:

>

> * Simple static parser

> * Readable math

> * Guaranteed unambiguity

> * Minimal parsing "surprises"

Well, let's start with an easy approach & see if it works.

LR parsers are extensively studied & implemented, let's start there.

* Simple: If you make syntax a separate file, you can generate a simple static parser.

* Readable math: Most math systems use LR or LL parsers, so you're no worse off than most.

* Guaranteed unambiguity: They *require* unambiguity or a tie-breaking rule - so if you say that

only precedence rules can break ties, that gives you unambiguity. Basically, treat a shift/reduce or reduce/reduce conflict as an error if precedence doesn't break it.

* Minimal parsing "surprises": These are very widely used, so they shouldn't surprise anyone.

You could make k=5 (that is, LR(5)).

I'm guessing you believe that's not enough. Can you help me understand why?

André L F S Bacci

Indeed, dealing with modularization seems pretty important and apparently caused problems for Raph, so perhaps I should give it more thought.

In a specification file such as this, we don't have to worry about proof dependencies, but we do have to be able to import axiom systems and syntax from another file. But now I'm worried about the possible security vulnerabilities this causes. A specification file is supposed to be self-contained in the sense that if you know the language then it says what it means. If a specification file imports another, then the meaning of the first file depends on the meaning of that other file as well. It's even worse if the path is ambiguous in some way, and we don't see exactly what it's referring to. If the includes are relative to a path provided at the command line, then it's not even definite what file is being referred to.

One way to make sure the import is right is to reproduce the axioms and theorems that are expected to be imported. This way the verifier can double-check that all the files are stitched together correctly. But that may well be the whole file or nearly so (theorems don't need to be imported, but axioms and definitions do). And that's a hell of a lot of boilerplate, and it also obscures the actual content of the file if it starts with a lengthy recap section.

The specification file / proof file dichotomy is supposed to represent the distinction between *what* is being proven and *how* it should be proven. The proof file is a fine place for putting in instructions for how to find other files, but the specification files should be more focused on content and cross-checks.

I will return to this topic in a bit with a more concrete syntax proposal.
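Here is a minimal Python sketch of the restate-and-cross-check idea. It assumes both files have already been parsed into declarations keyed by name; that representation and the name `check_import` are hypothetical. The check rejects two things: a restated declaration that doesn't match the import exactly, and an imported axiom or definition that wasn't restated. Theorems may be left out.

```python
# Hypothetical representation: name -> (kind, statement as an s-expression tuple),
# with kind one of "axiom", "def", "theorem".
Decls = dict[str, tuple[str, tuple]]


def check_import(imported: Decls, restated: Decls) -> list[str]:
    """Cross-check what a specification file restates against the file it imports."""
    problems = []
    for name, decl in restated.items():
        if imported.get(name) != decl:
            problems.append(f"{name}: missing from, or different in, the imported file")
    # Axioms and definitions must all be restated; theorems may be omitted.
    for name, (kind, _) in imported.items():
        if kind in ("axiom", "def") and name not in restated:
            problems.append(f"{name}: imported {kind} is not restated")
    return problems
```

An empty list means the restated section and the import agree. Each string names one mismatch for the user to fix.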

OlivierBinda

I can relate to "make the metamath parser simple, unambiguous and efficient"

I have just written an Antlr4 grammar for an old version of

metamath. But the performance was so bad (it took minutes to parse

4000 set.mm statements, with some statements like

|- ( ( ( ( ( ∨1c e. RR* /\ ∨2c e. RR* ) /\ -. ( ∨1c = 0 \/ ∨2c = 0 ) ) /\ -. ( ( ( 0 < ∨2c /\ ∨1c = +oo ) \/ ( ∨2c < 0 /\ ∨1c = -oo ) ) \/ ( ( 0 < ∨1c /\ ∨2c = +oo ) \/ ( ∨1c < 0 /\ ∨2c = -oo ) ) ) ) /\ -. ( ( ( 0 < ∨2c /\ ∨1c = -oo ) \/ ( ∨2c < 0 /\ ∨1c = +oo ) ) \/ ( ( 0 < ∨1c /\ ∨2c = -oo ) \/ ( ∨1c < 0 /\ ∨2c = +oo ) ) ) ) -> ∨1c e. RR )

that took around 9s to parse; I did not try to optimize it, though) that the parser failed epically, by throwing an out-of-stack-space exception (too much garbage to collect).

I had another go with the same parser, BUT doing a first pass

over the statement to find matching opening and closing parentheses,

so that I could parse subgroups and rebuild the tree from the

results.

It worked a lot better (about 30x faster): parsing set.mm in roughly 10s.

I would welcome being able to write a simple parser without using

a tool like ANTLR4 (which only has a few language targets, and not

Kotlin, my favorite language), but it would be nice to be able to

find groups that can be parsed independently in the metamath

language, as it obviously speeds things up 30x and also makes it

possible to use many threads to parse one sentence (another possible

speed-up there).
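The first pass described above can be sketched in Python as a stack-based scan that pairs each "(" with its matching ")". The outermost groups then become independent sub-problems, which could be parsed separately or on different threads. The sketch assumes space-separated set.mm-style tokens and only treats "(" and ")" as group delimiters. The names `paren_groups` and `top_level_groups` are hypothetical.

```python
def paren_groups(tokens: list[str]) -> dict[int, int]:
    """First pass: map the index of each '(' to the index of its matching ')'."""
    stack, match = [], {}
    for i, tok in enumerate(tokens):
        if tok == "(":
            stack.append(i)
        elif tok == ")":
            if not stack:
                raise SyntaxError(f"unmatched ')' at token {i}")
            match[stack.pop()] = i
    if stack:
        raise SyntaxError(f"unmatched '(' at token {stack[-1]}")
    return match


def top_level_groups(tokens: list[str]) -> list[tuple[int, int]]:
    """Spans (start, end) of the outermost parenthesized groups, left to right."""
    match = paren_groups(tokens)
    spans, i = [], 0
    while i < len(tokens):
        if tokens[i] == "(":
            spans.append((i, match[i]))
            i = match[i] + 1  # skip the whole group; its contents are parsed separately
        else:
            i += 1
    return spans
```

For `|- ( ( ph -> ps ) -> ( ps -> ph ) )` there is one outer group. Applying `top_level_groups` to its interior yields the two subgroups, and each can be handed to the real parser on its own.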

However, the parser that I wrote isn't a dynamic parser, and I will

have to look into/implement that if I want the maths it can handle

to be extended.

(At some point, I'll have to implement the same Wikipedia

algorithm.)

So, what I am trying to say is:

a) groups (stuff with easily found bounds like () ) are nice to

have whereas rules like (wiso statement)

c Isom c COMMA c LEFT_PARENTHESIS c COMMA c RIGHT_PARENTHESIS {$op="wiso";}

make things more difficult

things with a prefix and clean separation are nice; things relying on

adjacency are complicated :/

b) if it is easy to make things computer-understandable (build a tree), it might be easy (or not :/) to turn those things into human-friendly stuff (display, write and interact with it nicely) with another software layer that isn't in the language

c) writing things twice should be a big no-no for any programmer and mathematician

d) even if you write things twice in your database, you'll still

have to build a parser to handle human input anyway :)

e) maybe there are other solutions? Like using colored spaces to

separate tokens and handle nesting?

I have been playing with metamath for 8 months. It took me a lot of

time to adapt, and I am not sure the new language is better for

readability; it hurt the first time I saw it (but I still love you,

Mario, I'm a great fan, and have been using your stuff all over the

place).

f) I have been doing university-level maths for 20 years and

software development for the last 6 years, and the only precedence

order I know is about +-*/^(). So...

g) I would love to have metamath look more like the maths I write

on the blackboard (those braces !) but I would rather have a

language that is understood by a computer and easy to work with

Cheers,

Olivier Binda