Shards error!



Gal Akavia

Nov 17, 2021, 3:36:01 PM

to Wazuh mailing list

Hi all,

My Wazuh version: 4.1.5

ELK: v7.10.2

Friends, I'm following this guide because my ELK froze. Am I on the right track here? Please take a look at the error:

[2021-11-17T12:09:20,529][ERROR][c.a.o.s.a.s.InternalESSink] [node-1] Unable to index audit log {"audit_cluster_name":"elasticsearch","audit_transport_headers":{"_system_index_access_allowed":"false"},"audit_node_name":"node-1","audit_trace_task_id":"<HIDDEN_NUMBER>","audit_transport_request_type":"CreateIndexRequest","audit_category":"INDEX_EVENT","audit_request_origin":"REST","audit_request_body":"{}","audit_node_id":"<HIDDEN_NUMBER>","audit_request_layer":"TRANSPORT","@timestamp":"2021-11-17T10:09:20.528+00:00","audit_format_version":4,"audit_request_remote_address":"127.0.0.1","audit_request_privilege":"indices:admin/auto_create","audit_node_host_address":"127.0.0.1","audit_request_effective_user":"admin","audit_trace_indices":["<wazuh-alerts-4.x-{2021.11.17||/d{yyyy.MM.dd|UTC}}>"],"audit_node_host_name":"127.0.0.1"} due to org.elasticsearch.common.ValidationException: Validation Failed: 1: this action would add [2] total shards, but this cluster currently has [1000]/[1000] maximum shards open;

This guide isn't helping me much because I'm missing a Kibana tab.

Please take a look at the picture attached to my message. The attached .txt file is my ossec.conf (with IP details hidden).

Mauricio Ruben Santillan

Nov 17, 2021, 4:07:55 PM

to Wazuh mailing list

Hello,

Thanks for using Wazuh!

It looks like your Elasticsearch has reached the limit of 1000 shards.


Can you please describe your Elasticsearch architecture here? Is it a Wazuh all-in-one deployment, or is Elasticsearch a cluster? If it is a cluster, how many nodes do you have?

Also, these requests can provide some information about your ELK:

curl -XGET localhost:9200/_cluster/allocation/explain?pretty

curl -XGET localhost:9200/_cluster/health?pretty

In case you have a single node ELK, make sure

Normally, deleting old indices should solve the issue: https://www.elastic.co/guide/en/elasticsearch/reference/current/indices-delete-index.html

You could also increase the shard limit by running the following command on your ELK server:

curl -X PUT localhost:9200/_cluster/settings -H "Content-Type: application/json" -d '{ "persistent": { "cluster.max_shards_per_node": "3000" } }'

We normally don't recommend increasing the shard limit too much, since it can cause instability and performance issues in your Elasticsearch cluster/server. I recommend not going beyond 3000 shards.
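Here is a minimal offline sketch of the arithmetic behind this error. It assumes Elasticsearch 7.x semantics. The cluster-wide cap is `cluster.max_shards_per_node` (default 1000) multiplied by the number of data nodes. Every shard copy of an open index counts toward that cap: primaries and replicas alike, assigned or not. The class and function names are hypothetical, and nothing here talks to a live cluster.

```python
from dataclasses import dataclass


@dataclass
class ClusterShardState:
    """Hypothetical snapshot of the numbers the shard-limit check looks at."""
    data_nodes: int
    max_shards_per_node: int  # cluster.max_shards_per_node (7.x default: 1000)
    open_shard_copies: int    # primaries + replicas of open indices, assigned or not


def shards_for_new_index(primaries: int, replicas: int) -> int:
    """Every primary counts once, plus once more for each replica copy."""
    return primaries * (1 + replicas)


def can_create_index(state: ClusterShardState, primaries: int, replicas: int) -> bool:
    """True if the new index's shard copies fit under the cluster-wide cap."""
    cluster_limit = state.data_nodes * state.max_shards_per_node
    return state.open_shard_copies + shards_for_new_index(primaries, replicas) <= cluster_limit


if __name__ == "__main__":
    # 858 active + 142 unassigned shard copies on one data node, default limit.
    full = ClusterShardState(data_nodes=1, max_shards_per_node=1000, open_shard_copies=858 + 142)
    print(can_create_index(full, primaries=1, replicas=1))  # False: 1000 + 2 > 1000
    # Same cluster after raising cluster.max_shards_per_node to 3000.
    raised = ClusterShardState(data_nodes=1, max_shards_per_node=3000, open_shard_copies=1000)
    print(can_create_index(raised, primaries=1, replicas=1))  # True
```

The sample numbers come from the cluster health output posted later in this thread: 858 active plus 142 unassigned shards on one node. With those, an index needing 2 shard copies is rejected. It fits once the limit is raised to 3000.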

You may also find these resources useful:

- https://www.elastic.co/guide/en/elasticsearch/reference/current/scalability.html

- https://www.elastic.co/guide/en/elasticsearch/reference/current/add-elasticsearch-nodes.html

- https://www.elastic.co/guide/en/elasticsearch/reference/current/modules-node.html#data-node

Looking forward to your comments!

Gal Akavia

Nov 17, 2021, 4:21:08 PM

to Wazuh mailing list

Hi Mauricio, thanks for the fast reply!

I'm using the all-in-one deployment, with a single node.

I did as you said and it's fixed for now. I'm following that guide; am I on the right track?

Here is the output:

curl -XGET localhost:9200/_cluster/allocation/explain?pretty

{
  "index" : "security-auditlog-2021.07.30",
  "shard" : 0,
  "primary" : false,
  "current_state" : "unassigned",
  "unassigned_info" : {
    "reason" : "CLUSTER_RECOVERED",
    "at" : "2021-11-16T11:41:36.054Z",
    "last_allocation_status" : "no_attempt"
  },
  "can_allocate" : "no",
  "allocate_explanation" : "cannot allocate because allocation is not permitted to any of the nodes",
  "node_allocation_decisions" : [
    {
      "node_id" : "spEVr6o9RCiSDjJdHcBySg<obfuscated>",
      "node_name" : "node-1",
      "transport_address" : "127.0.0.1:9300",
      "node_decision" : "no",
      "deciders" : [
        {
          "decider" : "same_shard",
          "decision" : "NO",
          "explanation" : "a copy of this shard is already allocated to this node [[security-auditlog-2021.07.30][0], node[spEVr6o9RCiSDjJdHcBySg], [P], s[STARTED], a[id=RPVy_vvHTIeuXsE96Z7HBQ]]"
        }
      ]
    }
  ]
}

Also,

GET /_cluster/health?pretty

{
  "cluster_name" : "elasticsearch",
  "status" : "yellow",
  "timed_out" : false,
  "number_of_nodes" : 1,
  "number_of_data_nodes" : 1,
  "active_primary_shards" : 858,
  "active_shards" : 858,
  "relocating_shards" : 0,
  "initializing_shards" : 0,
  "unassigned_shards" : 142,
  "delayed_unassigned_shards" : 0,
  "number_of_pending_tasks" : 0,
  "number_of_in_flight_fetch" : 0,
  "task_max_waiting_in_queue_millis" : 0,
  "active_shards_percent_as_number" : 85.8
}

Thank you!!

Mauricio Ruben Santillan

Nov 17, 2021, 7:01:39 PM

to Gal Akavia, Wazuh mailing list

Hello Gal,

Yes, you're on the right track (ISM is the Open Distro equivalent of ILM). Applying an ISM policy will help you avoid reaching the shard limit.

I noticed you have many unassigned shards. You could start by deleting the indices they belong to. You can identify them by running: curl -XGET localhost:9200/_cat/shards?pretty
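The sketch below is a rough aid for reading that output. It assumes the default `_cat/shards` column order (index, shard, prirep, state, ...). The hypothetical helper counts unassigned shard copies per index and per type: `p` for primary, `r` for replica. The sample rows are illustrative, not taken from this cluster.

```python
from collections import Counter


def unassigned_copies(cat_shards_output: str) -> Counter:
    """Count UNASSIGNED shard copies per (index, prirep) from plain-text _cat/shards output.

    Assumes the default column order: index, shard, prirep, state, docs, store, ip, node.
    Unassigned rows leave the trailing columns empty, so only the first four are read.
    """
    counts: Counter = Counter()
    for line in cat_shards_output.splitlines():
        cols = line.split()
        if len(cols) < 4 or cols[0] == "index":  # skip blank lines and the ?v header
            continue
        index, _shard, prirep, state = cols[:4]
        if state == "UNASSIGNED":
            counts[(index, prirep)] += 1
    return counts


if __name__ == "__main__":
    # Illustrative rows only; paste your own `GET _cat/shards?v` output here.
    sample = """index shard prirep state docs store ip node
security-auditlog-2021.07.30 0 p STARTED 12 50kb 127.0.0.1 node-1
security-auditlog-2021.07.30 0 r UNASSIGNED
wazuh-alerts-4.x-2021.11.17 0 p STARTED 336 200kb 127.0.0.1 node-1
"""
    for (index, prirep), n in unassigned_copies(sample).most_common():
        kind = "replica" if prirep == "r" else "primary"
        print(f"{index}: {n} unassigned {kind} shard(s)")
```

On a single node, a replica can never be allocated next to its own primary. That is the `same_shard` decider in the allocation explanation above. Rows marked `r` are therefore expected to stay unassigned.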

You should also check your Filebeat template (/etc/filebeat/wazuh-template.json). It is most probably set to use 3 shards, which is the default:

{
  "order": 0,
  "index_patterns": [
    "wazuh-alerts-4.x-*",
    "wazuh-archives-4.x-*"
  ],
  "settings": {
    "index.refresh_interval": "5s",
    "index.number_of_shards": "1",      ===> Make sure to set "1" here
    "index.number_of_replicas": "0",    ===> Make sure to set "0" here
    "index.auto_expand_replicas": "0-1",
    ...

Make sure to save the file and restart the Filebeat service.
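If you prefer to script the template change instead of editing it by hand, a minimal sketch could look like the following. It assumes the template keeps the flat `settings` keys shown above. `set_single_node_shards` and `patch_template_file` are hypothetical helper names. Keep a backup of the original file, because the rewrite also re-indents the JSON.

```python
import copy
import json
from pathlib import Path


def set_single_node_shards(template: dict, shards: int = 1, replicas: int = 0) -> dict:
    """Return a copy of the template with the shard and replica counts overridden."""
    updated = copy.deepcopy(template)
    settings = updated.setdefault("settings", {})
    # The template stores these values as strings, so keep them as strings.
    settings["index.number_of_shards"] = str(shards)
    settings["index.number_of_replicas"] = str(replicas)
    return updated


def patch_template_file(path: Path) -> None:
    """Rewrite the template in place; back the file up before calling this."""
    template = json.loads(path.read_text())
    path.write_text(json.dumps(set_single_node_shards(template), indent=2) + "\n")
```

For example, run `patch_template_file(Path("/etc/filebeat/wazuh-template.json"))` as root, then restart Filebeat as described above. All other keys, such as mappings and `index.auto_expand_replicas`, are left untouched.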

Keep in mind that your current indices won't be affected by this change; only new indices will have 1 shard. If you want to apply this to all your data, you would need to reindex your current indices (move them to new indices, then move them back to their original names).
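The "move out and move back" reindex described above can be planned as four requests per index. This is a sketch under stated assumptions:

- The `-tmp` suffix is a hypothetical name.
- The requests use the standard `_reindex` and delete-index APIs.
- The output is Dev Tools text to review and paste one step at a time, not something to run blindly.

```python
import json
from typing import Any, Dict, List, Optional, Tuple

# (HTTP method, path, optional JSON body)
Request = Tuple[str, str, Optional[Dict[str, Any]]]


def reindex_round_trip(index: str, tmp_suffix: str = "-tmp") -> List[Request]:
    """Plan the copy-out / copy-back reindex for one index (nothing is sent)."""
    tmp = index + tmp_suffix  # hypothetical temporary index name
    return [
        ("POST", "_reindex", {"source": {"index": index}, "dest": {"index": tmp}}),
        ("DELETE", index, None),
        ("POST", "_reindex", {"source": {"index": tmp}, "dest": {"index": index}}),
        ("DELETE", tmp, None),
    ]


def to_dev_tools(plan: List[Request]) -> str:
    """Render the plan as Kibana Dev Tools console text."""
    chunks = []
    for method, path, body in plan:
        chunk = f"{method} {path}"
        if body is not None:
            chunk += "\n" + json.dumps(body, indent=2)
        chunks.append(chunk)
    return "\n\n".join(chunks)


if __name__ == "__main__":
    print(to_dev_tools(reindex_round_trip("wazuh-alerts-4.x-2021.08.04")))
```

The temporary name still matches `wazuh-alerts-4.x-*`, so it should also pick up the updated template. Compare document counts before each DELETE. The temporary index briefly adds shards of its own, which matters when the cluster is close to its limit.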

Another option is simply to let time pass. Your ISM policy will clean out old data, and at some point all your indices will have only 1 shard (the ones with more shards will have been deleted).

Hope this helps!

--

You received this message because you are subscribed to the Google Groups "Wazuh mailing list" group.

To unsubscribe from this group and stop receiving emails from it, send an email to wazuh+un...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/wazuh/21d3df7b-f723-449d-a7be-3ee8e136a571n%40googlegroups.com.

Gal Akavia

Nov 18, 2021, 11:57:43 AM

to Wazuh mailing list

Hi Mauricio, and thank you.

I followed the steps you described for /etc/filebeat/wazuh-template.json.

I also created an ISM policy, but it doesn't seem quite right. I want to delete indices after 30 days, but I'm finding it a bit tricky.

{
  "policy": {
    "policy_id": "Cold_indices_get_smash",
    "description": "hot warm delete workflow",
    "last_updated_time": 1637188007862,
    "schema_version": 1,
    "error_notification": null,
    "default_state": "hot",
    "states": [
      {
        "name": "hot",
        "actions": [
          {
            "rollover": {
              "min_index_age": "1d"
            }
          }
        ],
        "transitions": [
          {
            "state_name": "warm"
          }
        ]
      },
      {
        "name": "warm",
        "actions": [
          {
            "replica_count": {
              "number_of_replicas": 5
            }
          }
        ],
        "transitions": [
          {
            "state_name": "delete",
            "conditions": {
              "min_index_age": "30d"
            }
          }
        ]
      },
      {
        "name": "delete",
        "actions": [
          {
            "notification": {
              "destination": {
                "chime": {
                  "url": "<URL>"
                }
              },
              "message_template": {
                "source": "The index {{ctx.index}} is being deleted",
                "lang": "mustache"
              }
            }
          },
          {
            "delete": {}
          }
        ],
        "transitions": []
      }
    ],
    "ism_template": null
  }
}

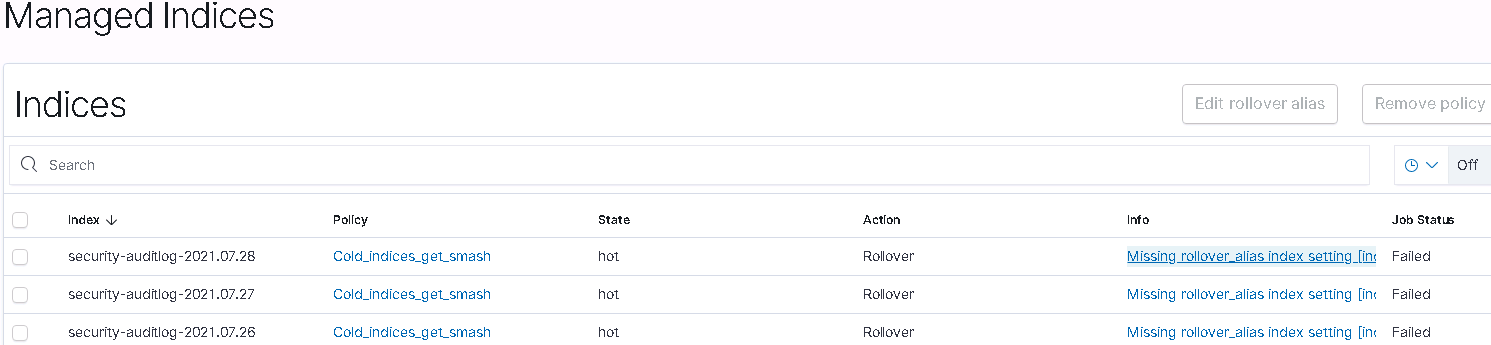

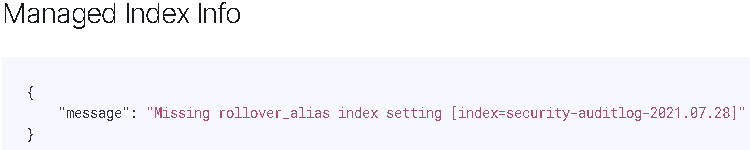

Then I applied the policy under Indices. This is the result in the Managed Indices tab, and it seems something is missing:

Any suggestions?

Thanks a lot, by the way!

Mauricio Ruben Santillan

Nov 24, 2021, 6:32:55 PM

to Wazuh mailing list

Hello Gal,

I'm really sorry for the delay on this.

After creating an ISM policy, the next step is to attach it to an index or indices. With an ISM template, any index created later that matches the template's index pattern gets the policy attached automatically.

For this, you would need to run the following request in Kibana's Dev Tools:

PUT _opendistro/_ism/policies/policy-to-delete-after30days
{
  "policy": {
    "policy_id": "Cold_indices_get_smash",
    "description": "hot warm delete workflow",
    "last_updated_time": 1637188007862,
    "schema_version": 1,
    "error_notification": null,
    "default_state": "hot",
    "states": [
      {
        "name": "hot",
        "actions": [
          {
            "rollover": {
              "min_index_age": "1d"
            }
          }
        ],
        "transitions": [
          {
            "state_name": "warm"
          }
        ]
      },
      {
        "name": "warm",
        "actions": [
          {
            "replica_count": {
              "number_of_replicas": 5
            }
          }
        ],
        "transitions": [
          {
            "state_name": "delete",
            "conditions": {
              "min_index_age": "30d"
            }
          }
        ]
      },
      {
        "name": "delete",
        "actions": [
          {
            "notification": {
              "destination": {
                "chime": {
                  "url": "<URL>"
                }
              },
              "message_template": {
                "source": "The index {{ctx.index}} is being deleted",
                "lang": "mustache"
              }
            }
          },
          {
            "delete": {}
          }
        ],
        "transitions": []
      }
    ],
    "ism_template": {
      "index_patterns": ["wazuh-alerts-*"],
      "priority": 100
    }
  }
}

Please notice that this request will attach the policy to wazuh-alerts-* indices.

You can also check the following Open Distro guide for more information:

The day after applying the policy, you should check that the new indices have the new policy assigned to them.
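One point worth spelling out: an `ism_template` only attaches the policy to indices created after the policy exists. For indices that already exist, Open Distro provides the `_opendistro/_ism/add/<index>` API. The `_opendistro/_ism/explain/<index>` API shows which policy manages each index.

The helpers below just build the Dev Tools text for those two calls; the function names are hypothetical. The policy ID is assumed to be the one used in the PUT URL (`policy-to-delete-after30days`).

```python
import json


def add_policy_request(index_pattern: str, policy_id: str) -> str:
    """Dev Tools text that attaches an existing ISM policy to indices that already exist."""
    body = json.dumps({"policy_id": policy_id}, indent=2)
    return f"POST _opendistro/_ism/add/{index_pattern}\n{body}\n"


def explain_request(index_pattern: str) -> str:
    """Dev Tools text that reports which ISM policy, if any, manages each matching index."""
    return f"GET _opendistro/_ism/explain/{index_pattern}\n"


if __name__ == "__main__":
    print(add_policy_request("wazuh-alerts-*", "policy-to-delete-after30days"))
    print(explain_request("wazuh-alerts-*"))
```

Indices that already have a policy attached are expected to be reported as failures by the `add` call. Detach the old policy from those first.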

Hope this helps!

Gal Akavia

Nov 30, 2021, 5:59:44 AM

to Wazuh mailing list

Hi Mauricio, thank you very much. I'll follow your suggestions and keep you updated!

Mauricio Ruben Santillan

Nov 30, 2021, 5:03:50 PM

to Gal Akavia, Wazuh mailing list

Awesome!

Let us know how it goes!

Gal Akavia

Dec 1, 2021, 8:48:05 AM

to Wazuh mailing list

Hi Mauricio,

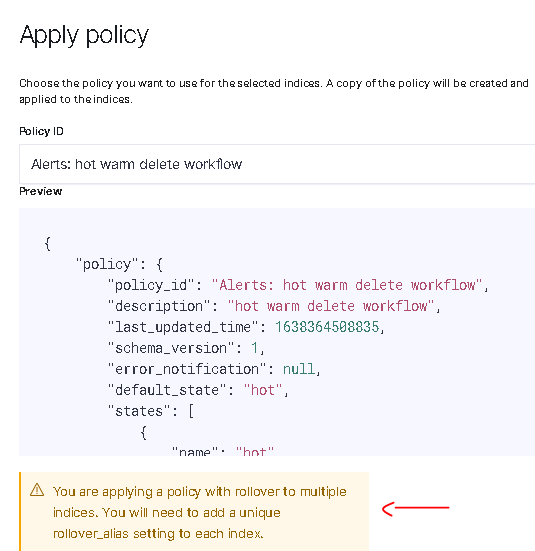

I followed your instructions step by step and created the exact ISM policy, but when I try to apply it to the alerts indices, I get the following warning.

I tried to match it to the alerts indices:

"index_patterns": ["wazuh-alerts-*"]

I tried to understand how to set the rollover alias correctly, but I didn't get it.

My question is: must I set a rollover alias? If so, could you please provide an example?

Thank you very much, Mauricio!

Alexander Bohorquez

Dec 1, 2021, 5:46:23 PM

to Wazuh mailing list

Hi Gal,

Sorry for the delay.

The error occurs because there is no rollover alias. The ISM rollover action is only needed when you want to "rotate" indices based on some criterion, such as the space used by the index or the number of documents. In this case it is not necessary, since Wazuh already writes to a new dated index every day (wazuh-alerts-4.x-YYYY.MM.DD), so I recommend removing it from the policy.
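A quick way to catch the two problems in the earlier policy before loading it is a small offline check. The sketch below is hypothetical and only inspects the policy JSON. It flags two things:

- Any `rollover` action. Open Distro's rollover needs a rollover alias, which the daily Wazuh indices do not use.
- Any `replica_count` higher than the number of data nodes minus one. Such replicas can never be assigned, yet they still count toward the shard limit.

```python
from typing import Any, Dict, List


def policy_warnings(policy: Dict[str, Any], data_nodes: int) -> List[str]:
    """List actions in an ISM policy body that are likely to fail on this cluster."""
    warnings: List[str] = []
    for state in policy.get("policy", {}).get("states", []):
        for action in state.get("actions", []):
            if "rollover" in action:
                warnings.append(f"state '{state['name']}': rollover needs a rollover alias")
            replicas = action.get("replica_count", {}).get("number_of_replicas")
            # A replica can never share a node with its primary, so at most
            # data_nodes - 1 replicas per shard can ever be assigned.
            if replicas is not None and replicas > data_nodes - 1:
                warnings.append(
                    f"state '{state['name']}': {replicas} replicas cannot be assigned "
                    f"on {data_nodes} data node(s)"
                )
    return warnings


if __name__ == "__main__":
    # Abbreviated version of the first policy posted in this thread.
    first_policy = {"policy": {"states": [
        {"name": "hot", "actions": [{"rollover": {"min_index_age": "1d"}}]},
        {"name": "warm", "actions": [{"replica_count": {"number_of_replicas": 5}}]},
        {"name": "delete", "actions": [{"delete": {}}]},
    ]}}
    for warning in policy_warnings(first_policy, data_nodes=1):
        print(warning)
```

Both policies below, which set 0 replicas and have no rollover, produce no warnings for a single node.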

You can find more information about this here:

https://opendistro.github.io/for-elasticsearch-docs/docs/im/ism/#step-2-attach-policies-to-indices

Based on what you need (deleting indices after 30 days), you can use one of the following retention policies and load it from Dev Tools:

The following policy will move indices into a cold state after 25 days and delete them after 30 days:

PUT _opendistro/_ism/policies/policy-to-delete-after30days
{
  "policy": {
    "description": "Wazuh index state management for OpenDistro to move indices into a cold state after 25 days and delete them after 30 days.",
    "default_state": "hot",
    "states": [
      {
        "name": "hot",
        "actions": [
          {
            "replica_count": {
              "number_of_replicas": 0
            }
          }
        ],
        "transitions": [
          {
            "state_name": "cold",
            "conditions": {
              "min_index_age": "25d"
            }
          }
        ]
      },
      {
        "name": "cold",
        "actions": [
          {
            "read_only": {}
          }
        ],
        "transitions": [
          {
            "state_name": "delete",
            "conditions": {
              "min_index_age": "30d"
            }
          }
        ]
      },
      {
        "name": "delete",
        "actions": [
          {
            "delete": {}
          }
        ],
        "transitions": []
      }
    ],
    "ism_template": {
      "index_patterns": ["wazuh-alerts-*"],
      "priority": 100
    }
  }
}

Here you can find more information about how an index transitions between states under a retention policy:

And this other policy will delete indices once they are 30 days old:

PUT _opendistro/_ism/policies/policy-to-delete-after30days
{
  "policy": {
    "description": "Wazuh index state management for OpenDistro to delete indices after 30 days.",
    "default_state": "hot",
    "states": [
      {
        "name": "hot",
        "actions": [
          {
            "replica_count": {
              "number_of_replicas": 0
            }
          }
        ],
        "transitions": [
          {
            "state_name": "delete",
            "conditions": {
              "min_index_age": "30d"
            }
          }
        ]
      },
      {
        "name": "delete",
        "actions": [
          {
            "delete": {}
          }
        ],
        "transitions": []
      }
    ],
    "ism_template": {
      "index_patterns": ["wazuh-alerts-*"],
      "priority": 100
    }
  }
}
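You may later need a different retention period or index pattern, for example `wazuh-archives-*`. In that case, the delete-after-30-days policy can be generated rather than edited by hand. This hypothetical builder reproduces the same hot-to-delete structure with the age and index patterns as parameters. The policy name in the printed request line is simply the one used above.

```python
import json
from typing import Any, Dict, List


def delete_after_days_policy(days: int, index_patterns: List[str], priority: int = 100) -> Dict[str, Any]:
    """ISM policy body with the same hot -> delete shape as the delete-after-30-days policy."""
    return {
        "policy": {
            "description": f"Wazuh index state management for OpenDistro to delete indices after {days} days.",
            "default_state": "hot",
            "states": [
                {
                    "name": "hot",
                    "actions": [{"replica_count": {"number_of_replicas": 0}}],
                    "transitions": [
                        {"state_name": "delete", "conditions": {"min_index_age": f"{days}d"}}
                    ],
                },
                {"name": "delete", "actions": [{"delete": {}}], "transitions": []},
            ],
            "ism_template": {"index_patterns": index_patterns, "priority": priority},
        }
    }


if __name__ == "__main__":
    body = delete_after_days_policy(30, ["wazuh-alerts-*"])
    print("PUT _opendistro/_ism/policies/policy-to-delete-after30days")
    print(json.dumps(body, indent=2))
```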

I hope this information helps. Please let me know how it goes. (Remember to remove the current policy from your indices before creating/applying the new one.)

Gal Akavia

Dec 2, 2021, 12:34:54 PM

to Wazuh mailing list

Thanks, Alexander. I did it and it looks good.

I have another question:

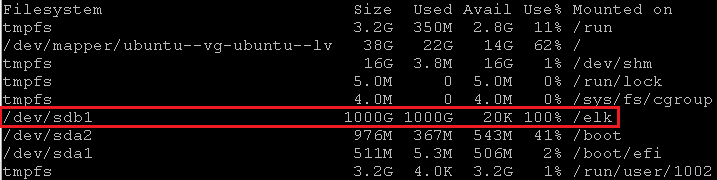

My /elk volume (1 TB) is full, and it looks like it's because of indices (or am I wrong?).

I used Dev Tools to delete all indices (statistics, monitoring, alerts, and audit logs), but I still see the above.

My question is: what are these files, and is it OK to delete them?

Mauricio Ruben Santillan

Dec 2, 2021, 1:31:41 PM

to Gal Akavia, Wazuh mailing list

Hello Gal,

You should not delete index-related files directly; otherwise, you'll break your Elasticsearch.

If all the disk space is consumed by indices, then you should try to delete them using the Elastic API.

You can get a list of all indices and their sizes by running the following request in Kibana's Dev Tools:

GET /_cat/indices/wazuh-alerts-*?v=true&s=index

This should show you an output similar to this:

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size
green open wazuh-alerts-4.x-2021.08.04 XirmHqgYRyGRgVavOOJtwg 3 0 555 0 1.3mb 1.3mb
green open wazuh-alerts-4.x-2021.08.05 vlkbdl6fQfuAUHekEYMhoQ 3 0 302 0 995.9kb 995.9kb
green open wazuh-alerts-4.x-2021.08.06 7ugCRqPlR_qY2BC4ncnWSg 3 0 136 0 454.3kb 454.3kb
green open wazuh-alerts-4.x-2021.08.09 Cv7DfkfZR4KGoJH5zoqFMA 3 0 99 0 467.3kb 467.3kb
green open wazuh-alerts-4.x-2021.08.10 LSpNVsprQzifqhLU5qxVtQ 3 0 87 0 506.5kb 506.5kb
green open wazuh-alerts-4.x-2021.08.11 HiZUfB7TQWi4oAYmnxo-oQ 3 0 23 0 440.9kb 440.9kb
green open wazuh-alerts-4.x-2021.08.12 C5JKRuovRTaljSfjvUaGVg 3 0 395 0 1.2mb 1.2mb
green open wazuh-alerts-4.x-2021.08.13 cYSDoN_VRjOshpr_8DVAGw 3 0 125 0 564.2kb 564.2kb
green open wazuh-alerts-4.x-2021.08.17 RdrKHe3-QN-JDqRvXUcEkA 3 0 29 0 444.2kb 444.2kb
green open wazuh-alerts-4.x-2021.08.18 Buttb61VTK2Ti9g7-zqBAg 3 0 224 0 782.5kb 782.5kb
green open wazuh-alerts-4.x-2021.08.19 6rsosO--Qi2wQiqB32Pwxg 3 0 225 0 954.2kb 954.2kb
green open wazuh-alerts-4.x-2021.08.20 pjc2DtxdTjyPbZQd1rc9Jg 3 0 554 0 1.7mb 1.7mb
green open wazuh-alerts-4.x-2021.08.21 pDNKK9yDQqSlNL6ozK2Blg 3 0 487 0 715.2kb 715.2kb
green open wazuh-alerts-4.x-2021.08.22 C47i7Z0LSj-JRLkR83p8rQ 3 0 19 0 147.7kb 147.7kb
green open wazuh-alerts-4.x-2021.08.23 5p4vnWjNTIyj8P51yQfeXA 3 0 710 0 1.7mb 1.7mb
green open wazuh-alerts-4.x-2021.08.24 Pjv38-klQcCyFtH5uLfhug 3 0 697 0 1.2mb 1.2mb
green open wazuh-alerts-4.x-2021.08.25 KkF1ENIlRc-O4K8twV1C5g 3 0 562 0 1.5mb 1.5mb
green open wazuh-alerts-4.x-2021.08.26 Jvj_QPrhQG6U1Ahy4xXwBQ 3 0 96 0 481.6kb 481.6kb
green open wazuh-alerts-4.x-2021.08.27 xy9UFCBaTU-mSu8uZeNKJg 3 0 208 0 734.1kb 734.1kb
green open wazuh-alerts-4.x-2021.08.30 _dvIAOV3RTCDyO75tZcGRA 3 0 7 0 97.2kb 97.2kb
green open wazuh-alerts-4.x-2021.08.31 X4ixcg9xTtS0Nb6bFOQwVA 3 0 247 0 840.3kb 840.3kb
green open wazuh-alerts-4.x-2021.09.01 xrLC6uuOSDufnaPFLXCKZg 3 0 43 0 424.6kb 424.6kb
green open wazuh-alerts-4.x-2021.09.02 IEu3xlMlQNSSayfxkjFCvw 3 0 91 0 611kb 611kb
green open wazuh-alerts-4.x-2021.09.03 bPdACvh6Rt6u3lMoTuGpaA 3 0 305 0 872.4kb 872.4kb
green open wazuh-alerts-4.x-2021.09.04 vwdZYP3eTyqGWz3Twu_U9Q 3 0 58 0 397.4kb 397.4kb
green open wazuh-alerts-4.x-2021.09.07 MDtMZfI1SeWb75HNySiSFQ 3 0 24 0 285.2kb 285.2kb
green open wazuh-alerts-4.x-2021.09.08 t-lZFku7REipH9102_ffbg 3 0 116 0 769.9kb 769.9kb
green open wazuh-alerts-4.x-2021.09.09 bQmdTWeBQiaFMOKq4D0X2w 3 0 151 0 680.1kb 680.1kb
green open wazuh-alerts-4.x-2021.09.10 SGOO8b2BRpe9GtUXNt62_w 3 0 118 0 557.2kb 557.2kb
green open wazuh-alerts-4.x-2021.09.13 e-qEBfnNQpmO23oVcMJ8HA 3 0 620 0 1.1mb 1.1mb
green open wazuh-alerts-4.x-2021.09.14 SgR4eBPXSUO38AY6hScH3A 3 0 28 0 402.9kb 402.9kb
green open wazuh-alerts-4.x-2021.09.15 JWc16ElzSDuuLFBcsA0YsA 3 0 208 0 806.6kb 806.6kb
green open wazuh-alerts-4.x-2021.09.16 nKR8jXtFR5epXS9YxhI2BQ 3 0 219 0 1.3mb 1.3mb
green open wazuh-alerts-4.x-2021.09.17 d-Ij9YBXTbeVPlpkt-c2Tw 3 0 193 0 639.5kb 639.5kb
green open wazuh-alerts-4.x-2021.09.18 i2FceiQETXGoCFPQKy9LtA 3 0 9 0 121.7kb 121.7kb
green open wazuh-alerts-4.x-2021.09.20 KiMU9EfpSSKkBUPEJxCJJw 3 0 90 0 649.6kb 649.6kb
green open wazuh-alerts-4.x-2021.09.21 Q0HlEUl6TsyY40xhvi5RWA 3 0 76 0 506.8kb 506.8kb
green open wazuh-alerts-4.x-2021.09.22 C3XzoEpRRJewcfrf1z18IA 3 0 248 0 918kb 918kb
green open wazuh-alerts-4.x-2021.09.23 3kEH5ehLRWOa_YQ8ggcmKw 3 0 1809 0 2.2mb 2.2mb
green open wazuh-alerts-4.x-2021.09.24 l_m4HhdmRoq3JwdSTmwIfA 3 0 65 0 365.5kb 365.5kb
green open wazuh-alerts-4.x-2021.09.27 PA3FpcR9T2KCE5fynNFTIA 3 0 217 0 745kb 745kb
green open wazuh-alerts-4.x-2021.09.28 uFDL9_-DR5ehZWg-7_0e2w 3 0 369 0 1.1mb 1.1mb
green open wazuh-alerts-4.x-2021.09.29 aloFBgWnRBGNR9Kf3colqw 3 0 438 0 1.3mb 1.3mb
green open wazuh-alerts-4.x-2021.09.30 oypwdQVcRkWurIvAE-sJnw 3 0 891 0 2.1mb 2.1mb
green open wazuh-alerts-4.x-2021.10.01 WON_nO2zSPiK8s_Ie2cRIg 3 0 7557 0 1.8mb 1.8mb
green open wazuh-alerts-4.x-2021.10.04 Bo1vXEUQQR2t5Q6thWrefQ 3 0 4927 0 1.4mb 1.4mb
green open wazuh-alerts-4.x-2021.10.05 J0gumMKzStuC7vfTXg1j7w 3 0 12596 0 2.9mb 2.9mb
green open wazuh-alerts-4.x-2021.10.06 F4G-vh_yTjugGs9UZk1ZGQ 3 0 12354 0 3.6mb 3.6mb
green open wazuh-alerts-4.x-2021.10.07 306PIs9MR86NlDlSTvnh_Q 3 0 69101 0 43.8mb 43.8mb
green open wazuh-alerts-4.x-2021.10.12 hyZh1PjIQu6B6eHHM_I3mg 3 0 81657 0 53.6mb 53.6mb
green open wazuh-alerts-4.x-2021.10.13 yrInc2VcSvuJpHugbJU6Ug 3 0 101348 0 67.7mb 67.7mb
green open wazuh-alerts-4.x-2021.10.15 XT8p-WGOQEuYwBiBJQjtbQ 3 0 53022 0 34.9mb 34.9mb
green open wazuh-alerts-4.x-2021.10.16 mCnn-dHdRoeSWu6oD4Y_yQ 3 0 5898 0 4.5mb 4.5mb
green open wazuh-alerts-4.x-2021.10.18 7A7XXqIhTFqR103c5cY_iQ 3 0 100859 0 63.4mb 63.4mb
green open wazuh-alerts-4.x-2021.10.19 2l2y21M6SBC1BNy6oi32cQ 3 0 185834 0 114.3mb 114.3mb
green open wazuh-alerts-4.x-2021.10.20 1v3wiOV8Su2XqWvYfM-MNQ 3 0 57536 0 37.6mb 37.6mb
green open wazuh-alerts-4.x-2021.10.25 ggG9bA0_QneZVH-ywew7uQ 3 0 25936 0 17.8mb 17.8mb
green open wazuh-alerts-4.x-2021.10.26 D4Hg_2w0SNWg0npNzx81-g 3 0 161022 0 99.9mb 99.9mb
green open wazuh-alerts-4.x-2021.10.27 6FFmtoJrSr-BJ2j1YvKlSg 3 0 20837 0 14.2mb 14.2mb
green open wazuh-alerts-4.x-2021.11.01 fpaOJnLpTiGtodEGiXs2vQ 3 0 9371 0 7.9mb 7.9mb
green open wazuh-alerts-4.x-2021.11.03 AvoV6IHQRsSRXbda0_P6Eg 3 0 468 0 1020.6kb 1020.6kb
green open wazuh-alerts-4.x-2021.11.04 cUSNX3eSTGCseEsODG5kEg 3 0 178 0 716.4kb 716.4kb
green open wazuh-alerts-4.x-2021.11.05 xukQQloqSUiVOG9ET_raRQ 3 0 913 0 1.4mb 1.4mb
green open wazuh-alerts-4.x-2021.11.09 Qft89KCFQkuX9aAPoGtskA 3 0 49 0 161.2kb 161.2kb
green open wazuh-alerts-4.x-2021.11.10 extRzyP3T56C2muXcmPppQ 3 0 3 0 52.9kb 52.9kb
green open wazuh-alerts-4.x-2021.11.11 K5bCUyHORPKEFQzbc1-o-g 3 0 1141 0 2mb 2mb
green open wazuh-alerts-4.x-2021.11.12 jqlbna6ARluFmfc0xHPRrg 3 0 593 0 1.4mb 1.4mb
green open wazuh-alerts-4.x-2021.11.13 1mJxqwgHRlWDYQ2_Mo8iNw 3 0 4 0 57.6kb 57.6kb
green open wazuh-alerts-4.x-2021.11.15 7XiB7DtpQuyO05vcbmRGCQ 3 0 326 0 622.3kb 622.3kb
green open wazuh-alerts-4.x-2021.11.16 0xcyQtvYQ4KZhYPJsMAMXQ 3 0 331 0 628kb 628kb
green open wazuh-alerts-4.x-2021.11.17 _XOHJFj8QHGLZxABG_71Ig 3 0 336 0 653.7kb 653.7kb
green open wazuh-alerts-4.x-2021.11.18 b--iM345RfCFn5rfIGoRZQ 3 0 15 0 227.9kb 227.9kb
green open wazuh-alerts-4.x-2021.11.23 xSzK5LVmT5aETde63jqMBQ 3 0 498 0 1.4mb 1.4mb
green open wazuh-alerts-4.x-2021.11.24 likWJQ0bTrSpJE2D4I2lgQ 3 0 2809 0 2mb 2mb
green open wazuh-alerts-4.x-2021.11.25 XRLM0-gET72-1u_SE3xCrg 3 0 1816 0 2mb 2mb
green open wazuh-alerts-4.x-2021.11.26 yqMX40WCQVWABIiRSz2XCA 3 0 3851 0 3.8mb 3.8mb
green open wazuh-alerts-4.x-2021.11.29 hcx7hb-8QE-hbj7qiDEg8A 3 0 7387 0 5.2mb 5.2mb
green open wazuh-alerts-4.x-2021.11.30 olMftjsMQqeSPT_gPrk9tg 3 0 3559 0 4mb 4mb
green open wazuh-alerts-4.x-2021.12.01 DlVlO5kiTlqGjHabrw71Qw 3 0 1988 0 1.9mb 1.9mb
green open wazuh-alerts-4.x-2021.12.02 G4wnsm_PSzqSbICDXAC1mQ 3 0 2730 0 3.1mb 3.1mb

This will help you identify the indices consuming the most disk space, so you can delete them properly.
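With dozens of indices, sorting by size by eye gets tedious. Elasticsearch can sort server-side with `GET _cat/indices?v&s=store.size:desc&bytes=b`.

Alternatively, the hypothetical sketch below parses the plain-text output shown above and ranks indices by `store.size`. It assumes binary units (1kb = 1024 bytes). It skips rows that lack some columns, for example closed indices.

```python
import re
from typing import List, Tuple

# _cat APIs report human-readable sizes using binary multiples.
_UNITS = {"b": 1, "kb": 1024, "mb": 1024 ** 2, "gb": 1024 ** 3, "tb": 1024 ** 4, "pb": 1024 ** 5}


def to_bytes(size: str) -> float:
    """Convert a _cat size such as '1020.6kb' or '2mb' to bytes."""
    match = re.fullmatch(r"([0-9.]+)([a-z]+)", size.strip().lower())
    if match is None or match.group(2) not in _UNITS:
        raise ValueError(f"unrecognised size: {size!r}")
    return float(match.group(1)) * _UNITS[match.group(2)]


def largest_indices(cat_output: str, top: int = 10) -> List[Tuple[str, float]]:
    """(index, store.size in bytes) pairs, biggest first, from `_cat/indices?v` text."""
    rows = [line.split() for line in cat_output.splitlines() if line.strip()]
    if not rows:
        return []
    header, data = rows[0], rows[1:]
    name_col, size_col = header.index("index"), header.index("store.size")
    sized = [(r[name_col], to_bytes(r[size_col])) for r in data if len(r) == len(header)]
    return sorted(sized, key=lambda pair: pair[1], reverse=True)[:top]


if __name__ == "__main__":
    sample = """health status index uuid pri rep docs.count docs.deleted store.size pri.store.size
green open wazuh-alerts-4.x-2021.10.19 2l2y21M6SBC1BNy6oi32cQ 3 0 185834 0 114.3mb 114.3mb
green open wazuh-alerts-4.x-2021.11.03 AvoV6IHQRsSRXbda0_P6Eg 3 0 468 0 1020.6kb 1020.6kb
green open wazuh-alerts-4.x-2021.11.11 K5bCUyHORPKEFQzbc1-o-g 3 0 1141 0 2mb 2mb"""
    for name, size in largest_indices(sample, top=2):
        print(f"{name}\t{size / 1024 ** 2:.1f} MiB")
```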

Hope this helps!


Gal Akavia

Dec 2, 2021, 2:36:04 PM

to Wazuh mailing list

Hi Mauricio, my angel,

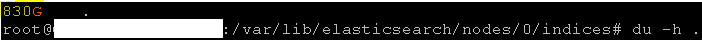

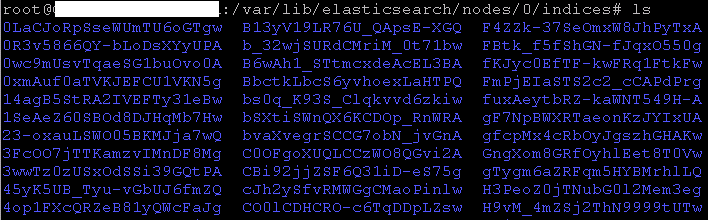

The problem is that the /elk volume is full (830 GB in /var/lib/elasticsearch/nodes/0), and now the ELK web UI is frozen.

I'll try to delete something (probably, and unfortunately, from alerts.log) to free some space, and I'll update you.

In addition, yesterday I deleted the following, but the above path still uses the same huge amount of space:

DELETE /wazuh-statistics-2021.*

DELETE /wazuh-monitoring-2021.*

DELETE /security-auditlog-2021.*

DELETE /wazuh-alerts-4.*

Any idea?
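On disk, Elasticsearch 7.x names each index directory under `nodes/0/indices/` after the index UUID rather than the index name, which is why those folders are hard to recognise. A minimal sketch can map each directory back to its index and report its size. It assumes two things:

- You saved the output of `GET _cat/indices?h=uuid,index` (without `v`) to a text file.
- You run the script with read access to `/var/lib/elasticsearch`, typically as root.

Directories without a matching live index are labelled unknown. Treat them as something to investigate, not to delete by hand, in line with the advice earlier in this thread.

```python
import os
from pathlib import Path
from typing import Dict, List, Tuple


def dir_size(path: Path) -> int:
    """Total size in bytes of the regular files below path (symlinks are skipped)."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            file_path = os.path.join(root, name)
            if not os.path.islink(file_path):
                total += os.path.getsize(file_path)
    return total


def uuid_to_name(cat_output: str) -> Dict[str, str]:
    """Parse `GET _cat/indices?h=uuid,index` text: one 'uuid name' pair per line."""
    pairs = (line.split() for line in cat_output.splitlines() if line.strip())
    return {cols[0]: cols[1] for cols in pairs if len(cols) >= 2}


def usage_by_index(indices_dir: Path, names: Dict[str, str]) -> List[Tuple[str, int]]:
    """(index name, bytes) per UUID directory, largest first; unmatched UUIDs are labelled."""
    usage = []
    for entry in indices_dir.iterdir():
        if entry.is_dir():
            label = names.get(entry.name, f"<unknown uuid {entry.name}>")
            usage.append((label, dir_size(entry)))
    return sorted(usage, key=lambda item: item[1], reverse=True)
```

For example, call `usage_by_index(Path("/var/lib/elasticsearch/nodes/0/indices"), uuid_to_name(Path("uuids.txt").read_text()))`, where `uuids.txt` is a hypothetical file holding the saved `_cat` output.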
