VDH can't download it? Maybe ffmpeg can.

Wild Willy

Nov 25, 2021, 4:21:31 AM

to Video DownloadHelper Q&A

To set the stage, I am doing this on Windows 7 64-bit, Firefox 94.0.2 64-bit, licensed VDH 7.6.3a1 beta, CoApp 1.6.3, VLC 3.0.16 Vetinari. The fact that I have a VDH license makes no difference, as you can learn by following references found in this thread:

https://groups.google.com/g/video-downloadhelper-q-and-a/c/BzPLK2YyL-s

Everything I'm showing here works the same even if you don't have a VDH license. But that's pretty much irrelevant anyway because I'm discussing how to do this when VDH doesn't get the video you want.

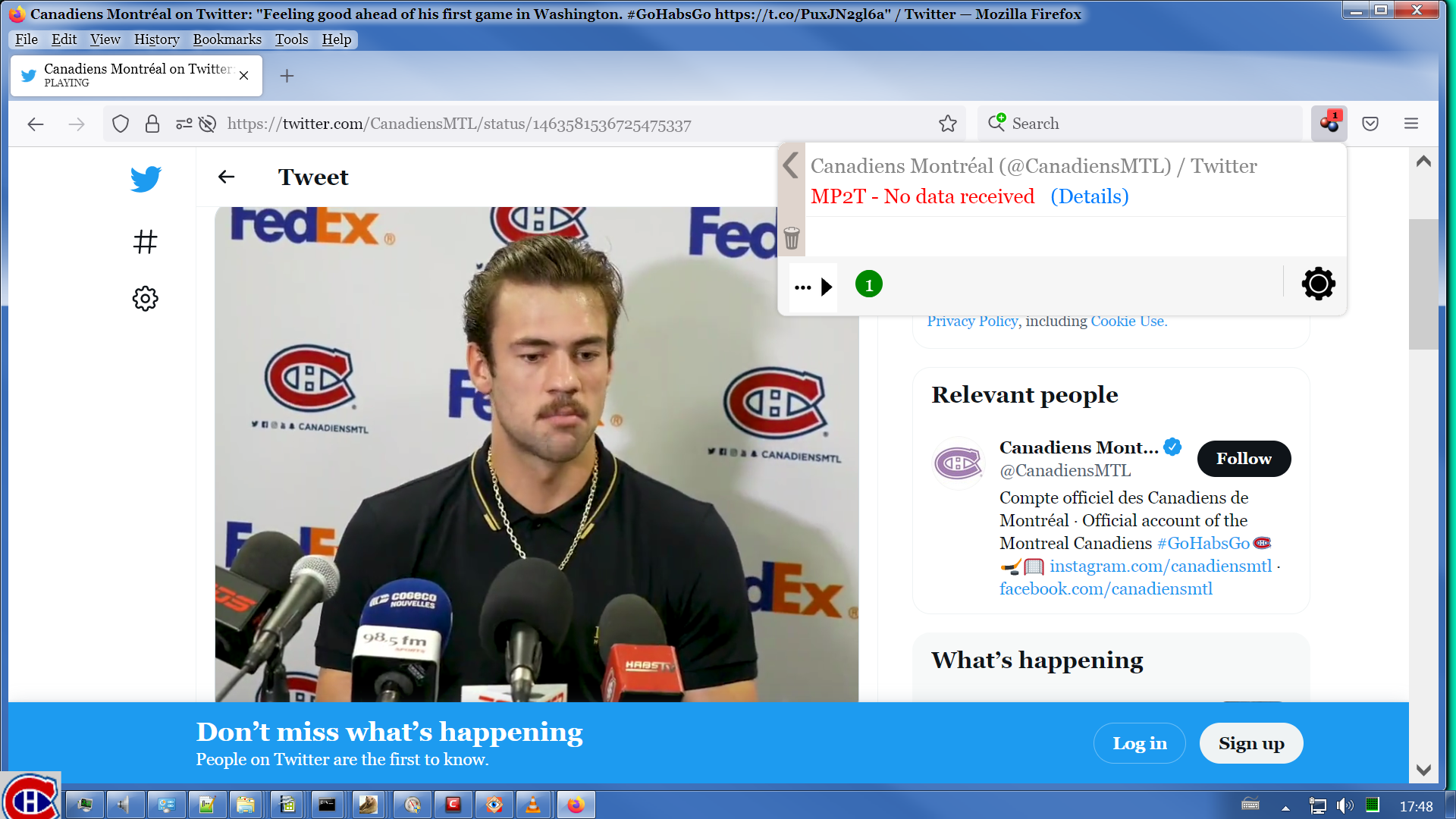

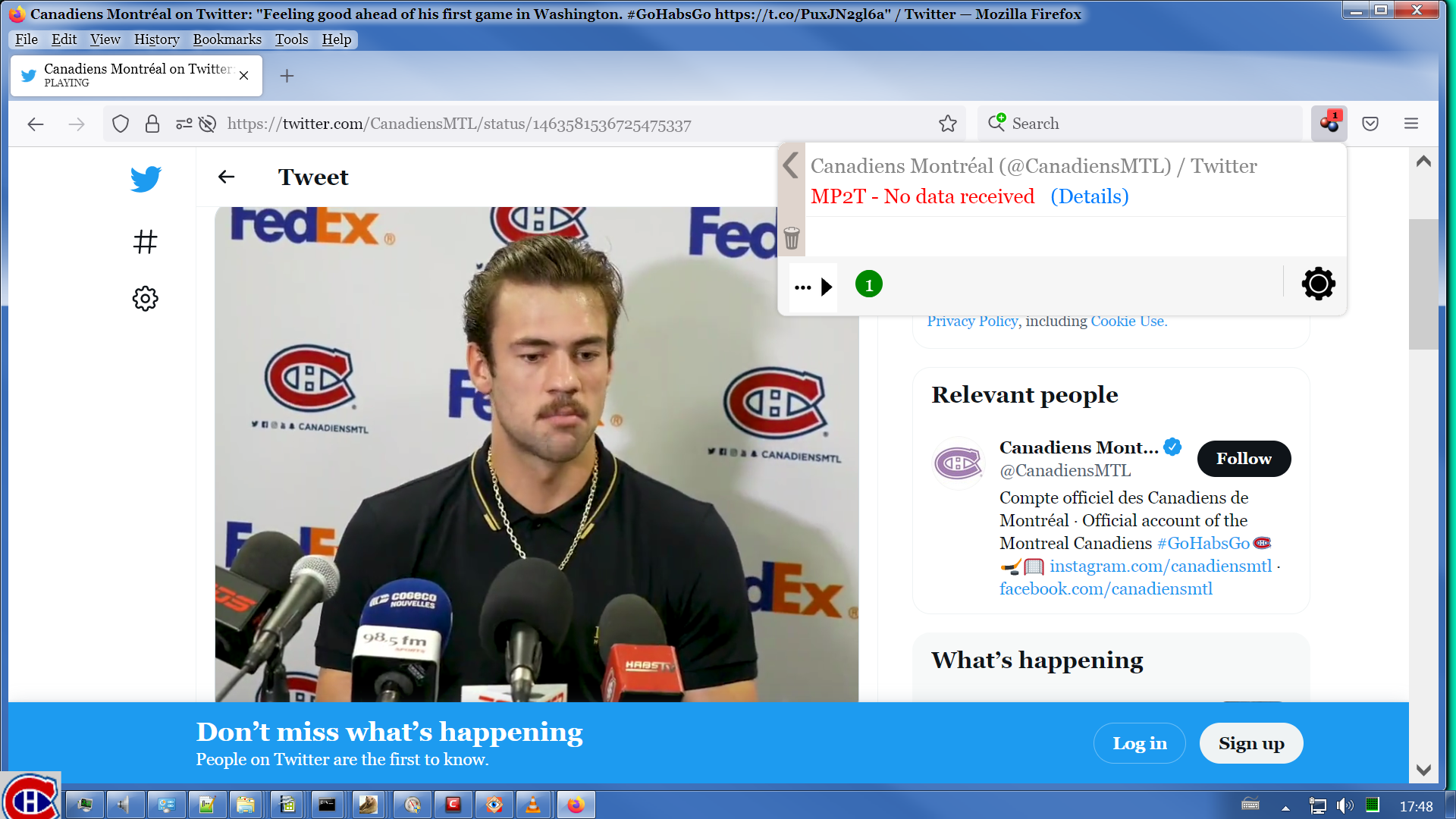

To start, let's go to this web page:

https://twitter.com/canadiensMTL

I am not a member of Twitter (or any social media site, for that matter) so being logged on there is not an issue. But I chose Twitter because many people have had trouble getting videos off Twitter. Don't get tied up in that. What I'm showing here is something that can apply to many other web sites as well.

Twitter pages tend to have many videos on them. I've scrolled down to one that is nice & short. But you'll notice that I've also scrolled the VDH menu & there isn't an entry for the one in the image, the one that's just 16 seconds long. Truth in advertising, it does appear further down the VDH menu. But depending on how far down the Twitter page your selected video might be, it could be like searching for a needle in a haystack to find it on the VDH menu. But there's a way to simplify this. It may not be the best way, but like I say, I'm not a member of Twitter so this is what I've discovered. If you have a better way, do feel free to post about it in this thread.

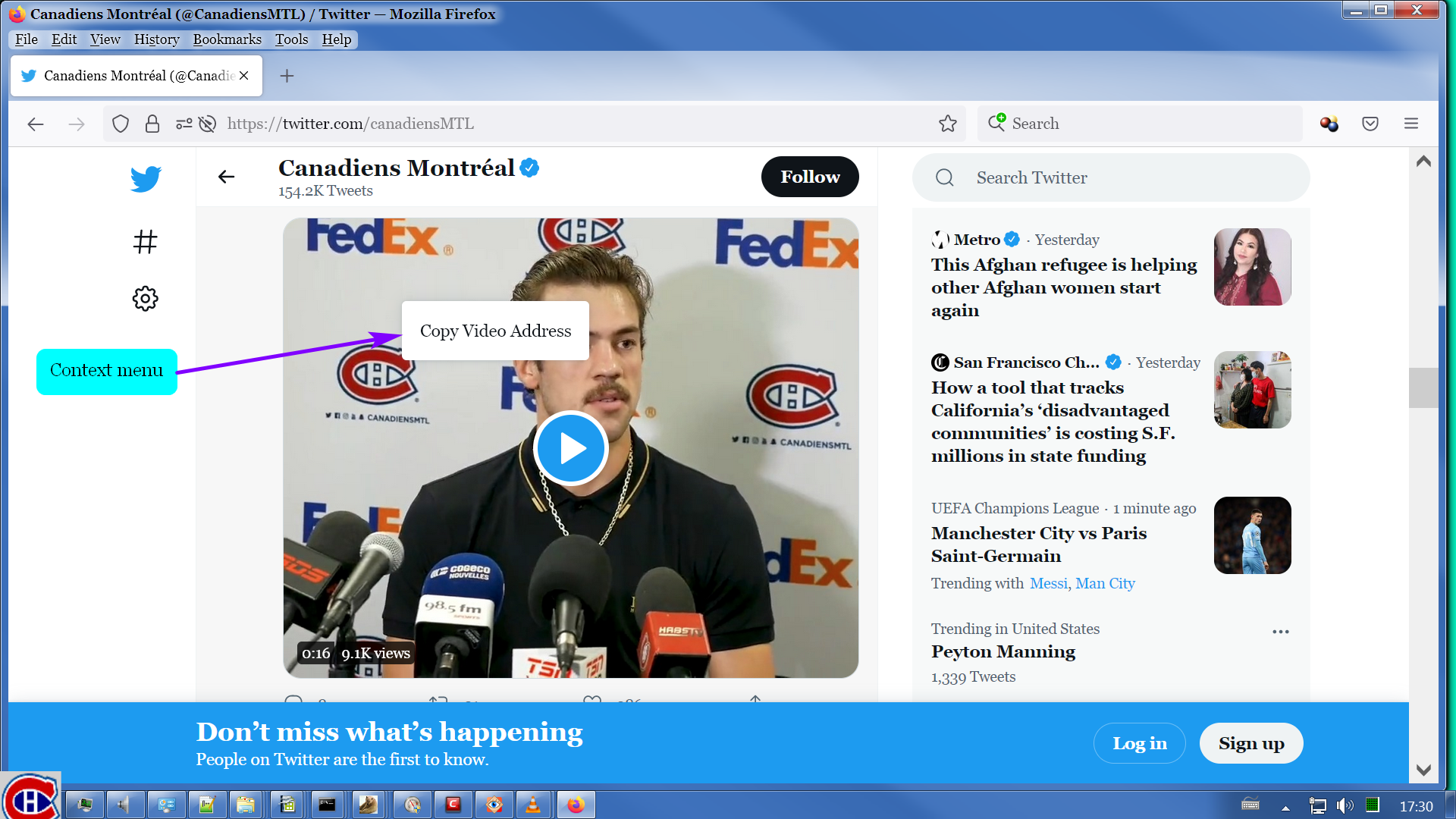

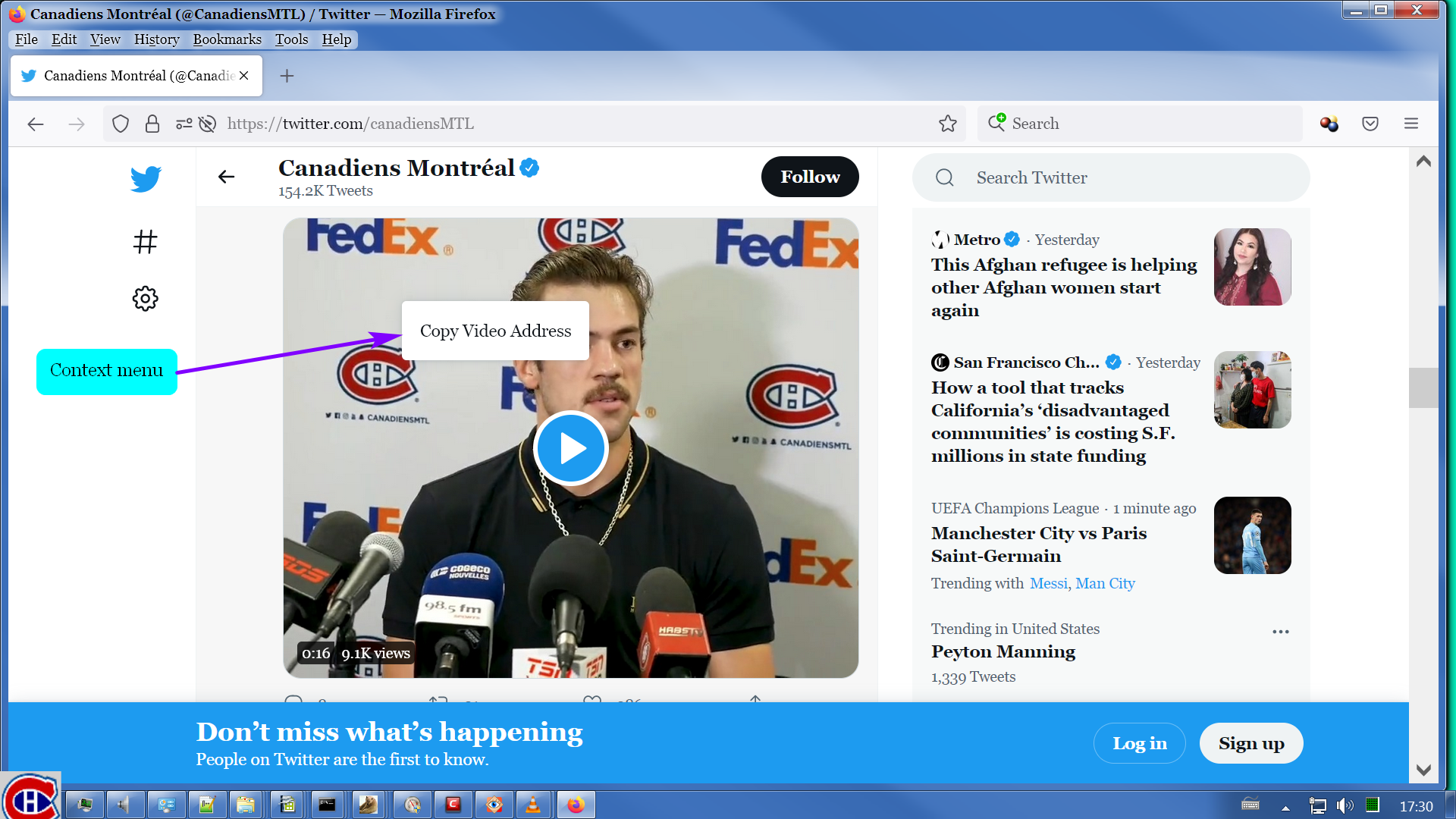

To isolate just the video I want, click mouse button 2 (MB2) on the video. Don't play the video, just pop up the context menu.

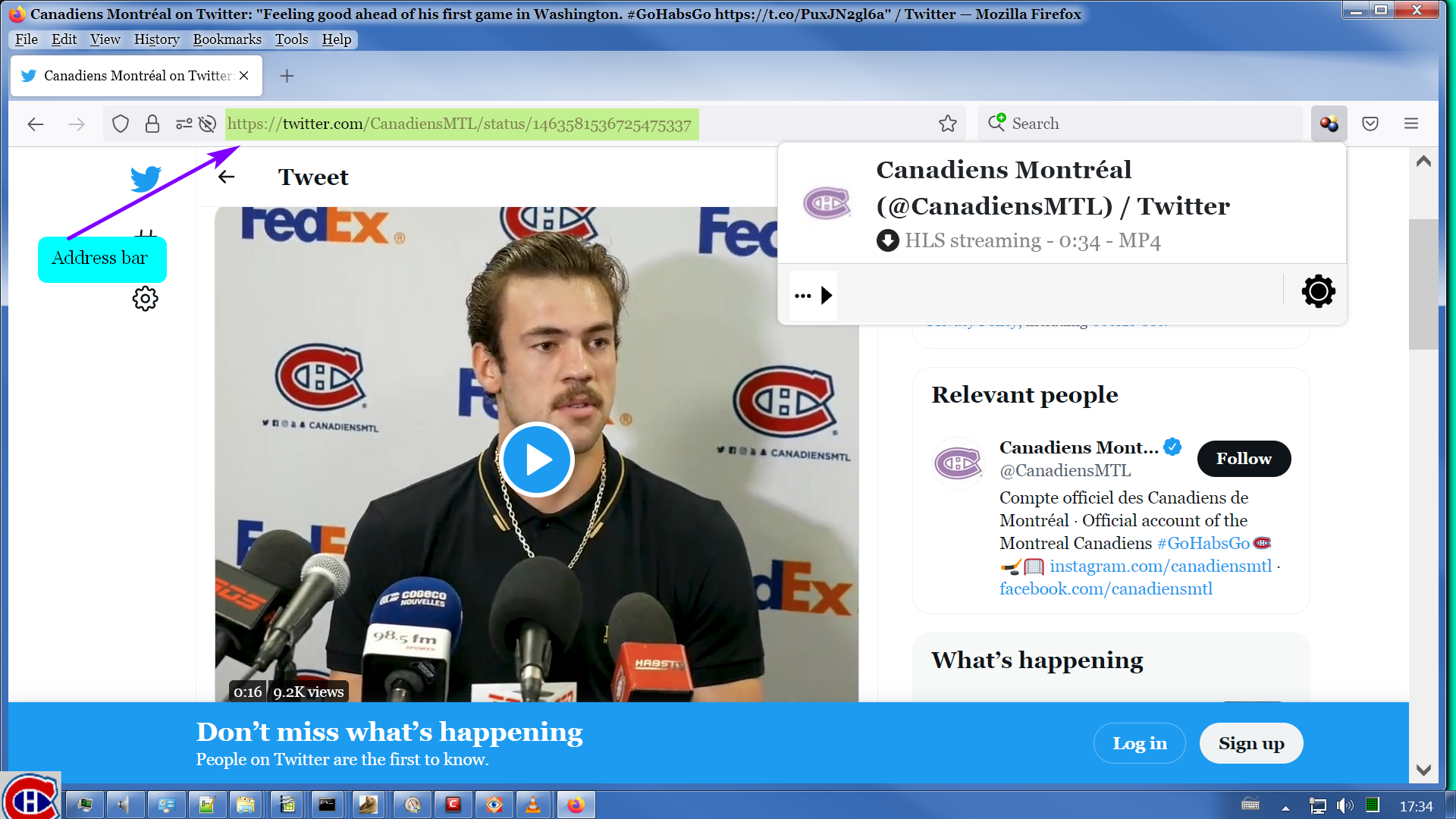

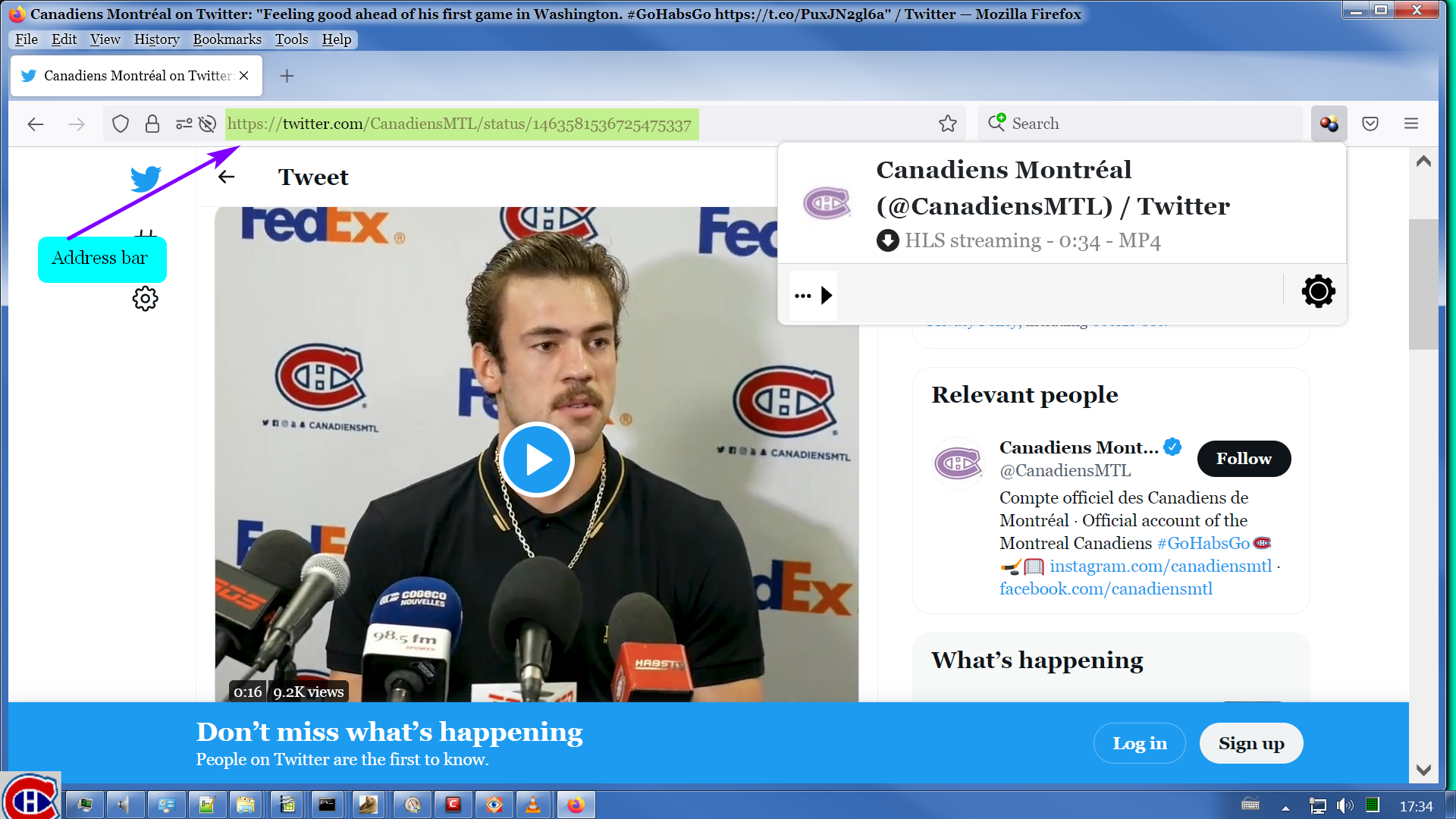

Not much of a context menu. Still, it's got what we need. Click that one item. This puts the URL of just this one video into the system clipboard. Now click in the address bar, paste in the URL, & hit Enter.

https://twitter.com/i/status/1463581536725475337

Hmmmm....... The VDH menu shows a variant of length 34 seconds for one 16-second video. Maybe I ought to go ahead & click play on the video.

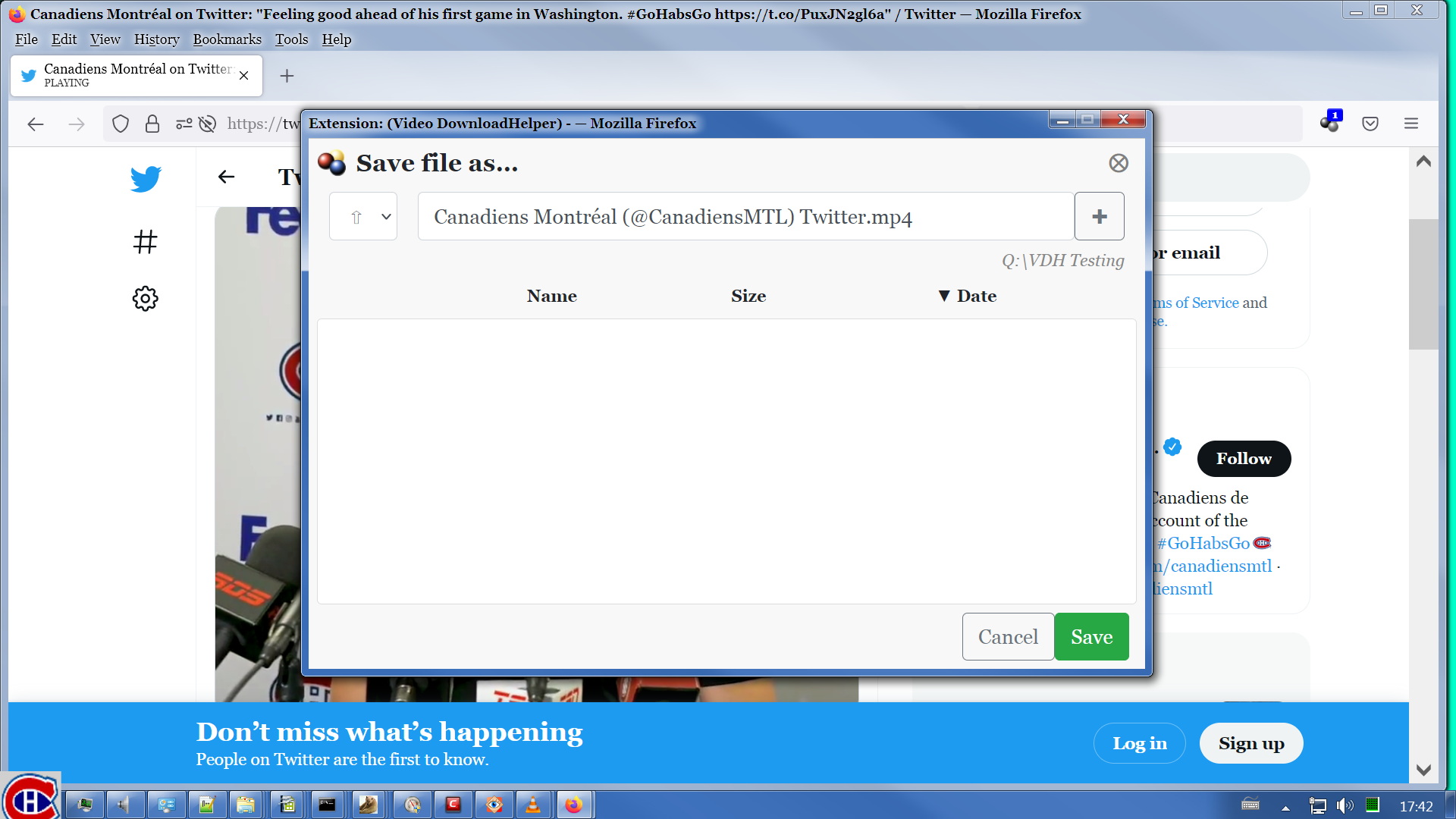

Doesn't make any difference. I guess the information provided by Twitter to VDH isn't enough for VDH to get the duration right. OK. Let's assume this variant is our video. Let's go ahead & download it.

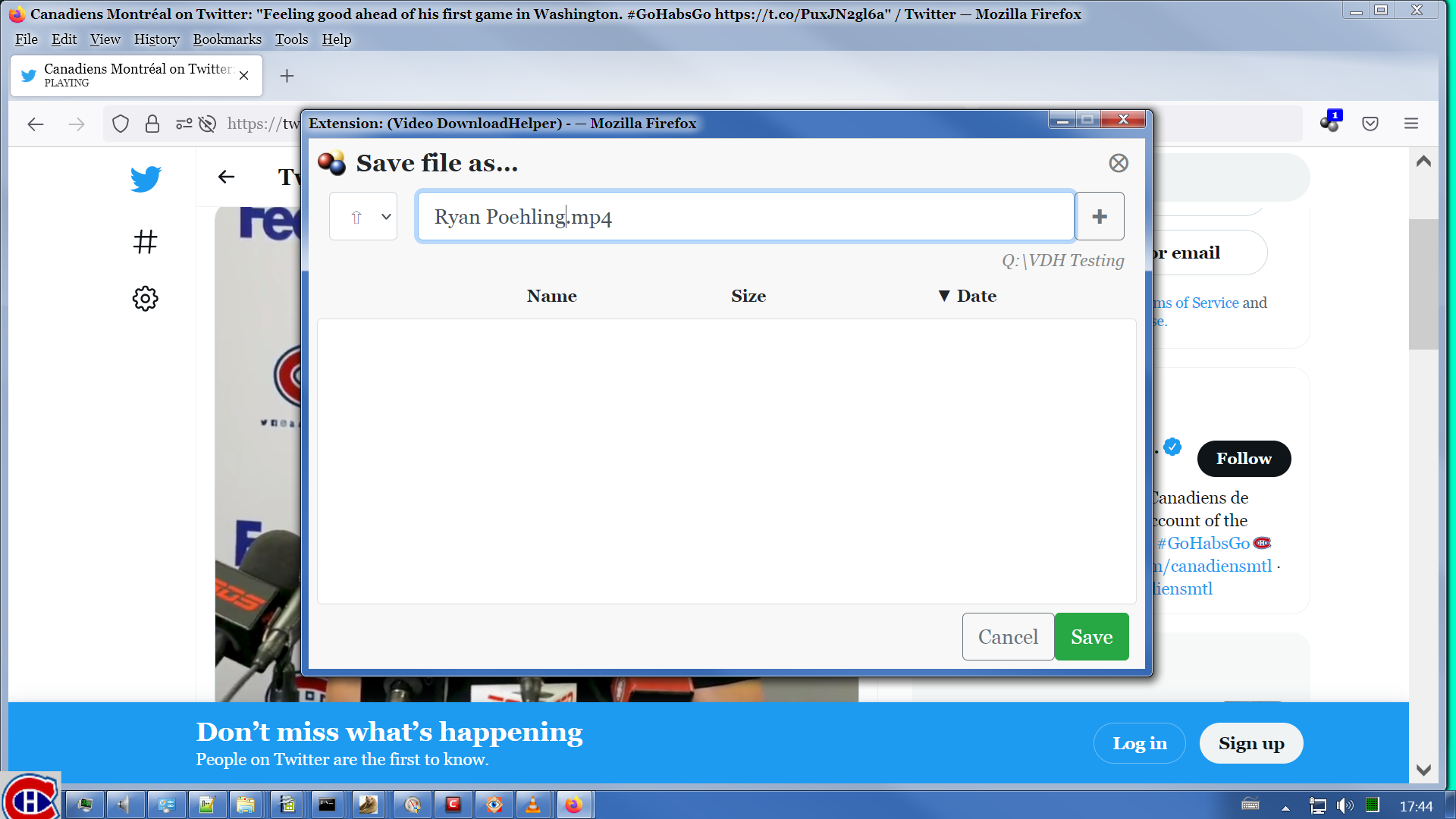

I don't like that default file name that VDH has picked. I'll replace it with the name of the guy in the video.

Now here's the VDH download progress status menu for this. Doesn't look promising.

Wild Willy

Nov 25, 2021, 5:26:57 AM

to Video DownloadHelper Q&A

Indeed, it wasn't.

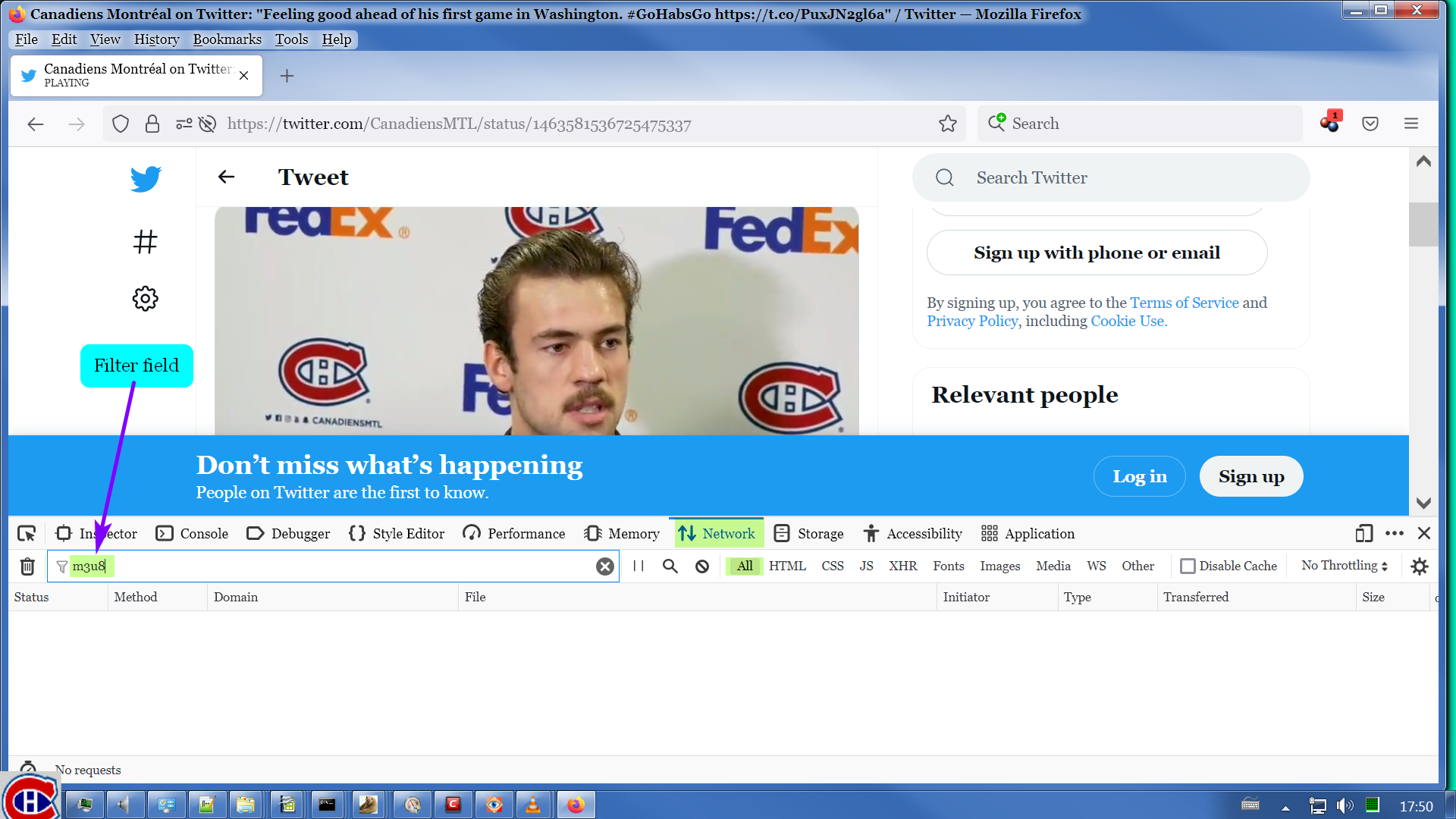

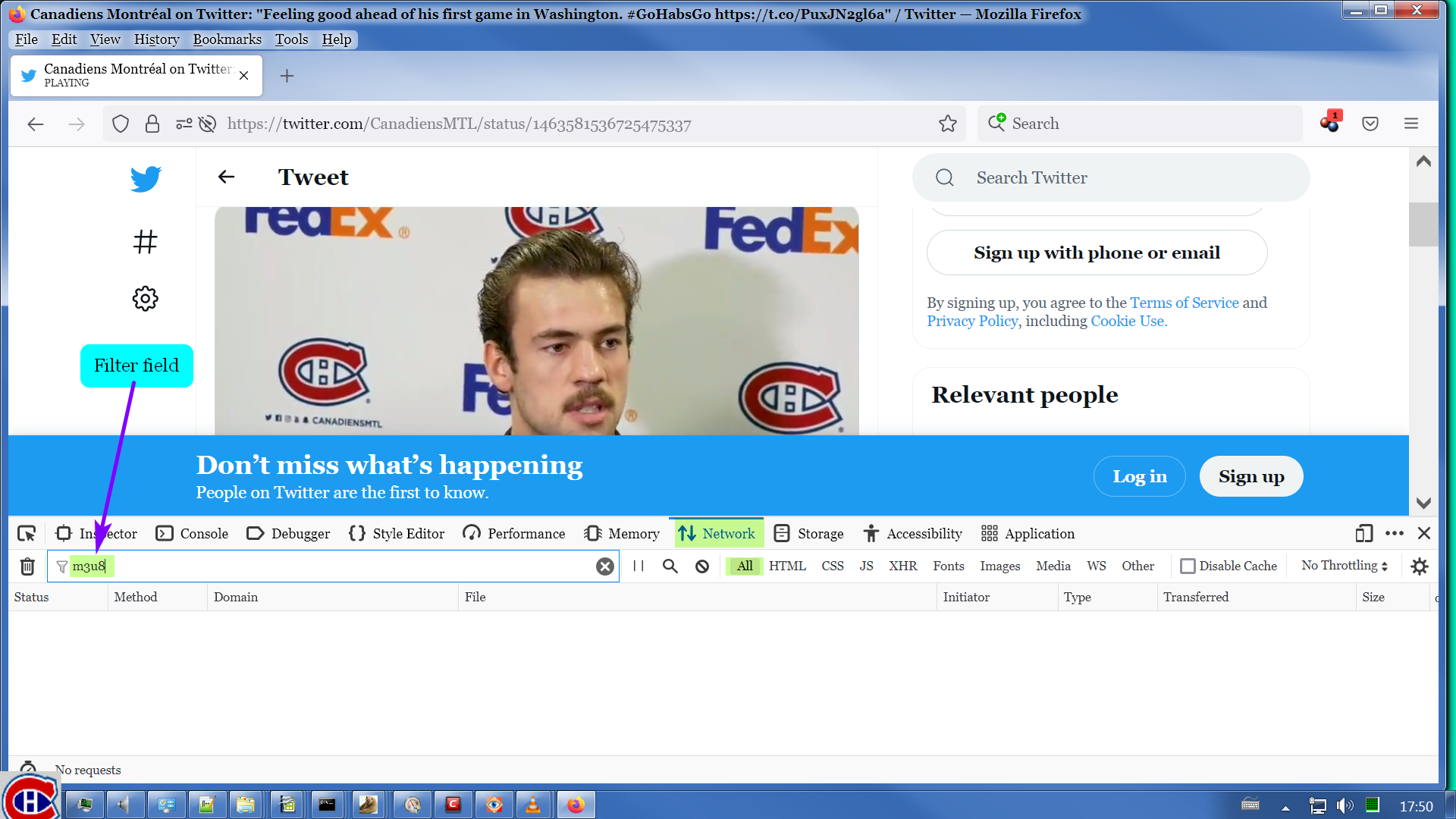

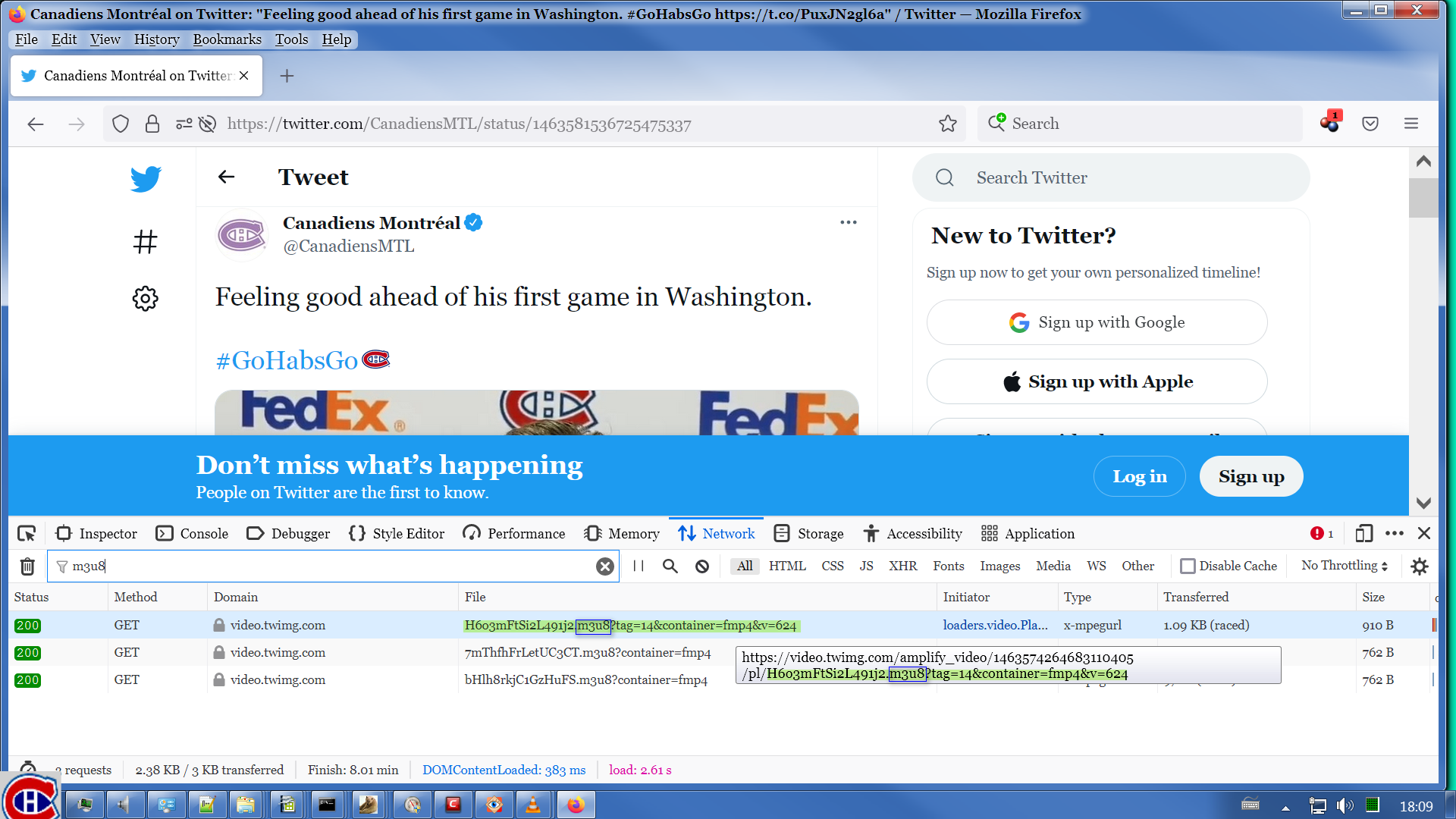

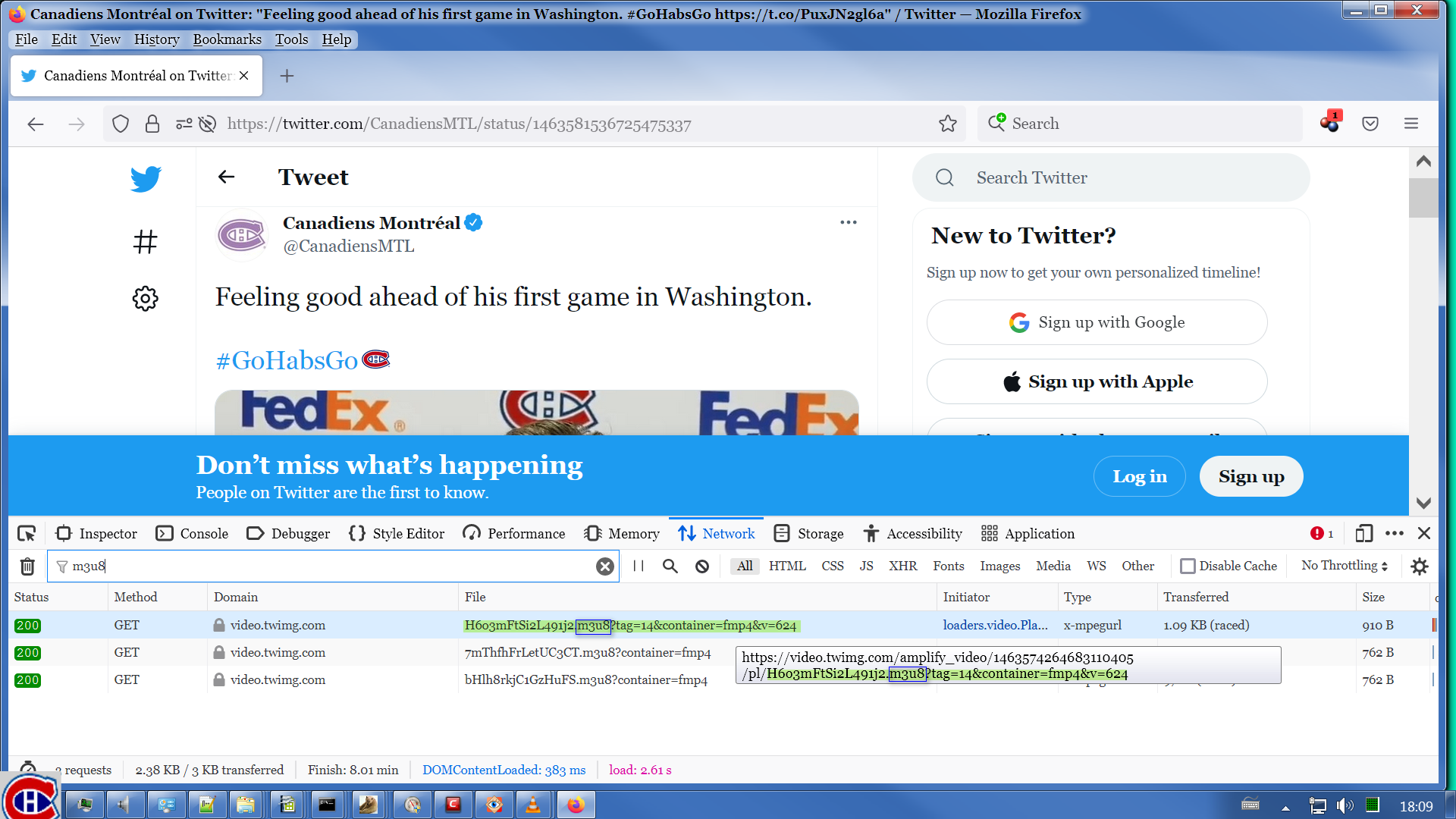

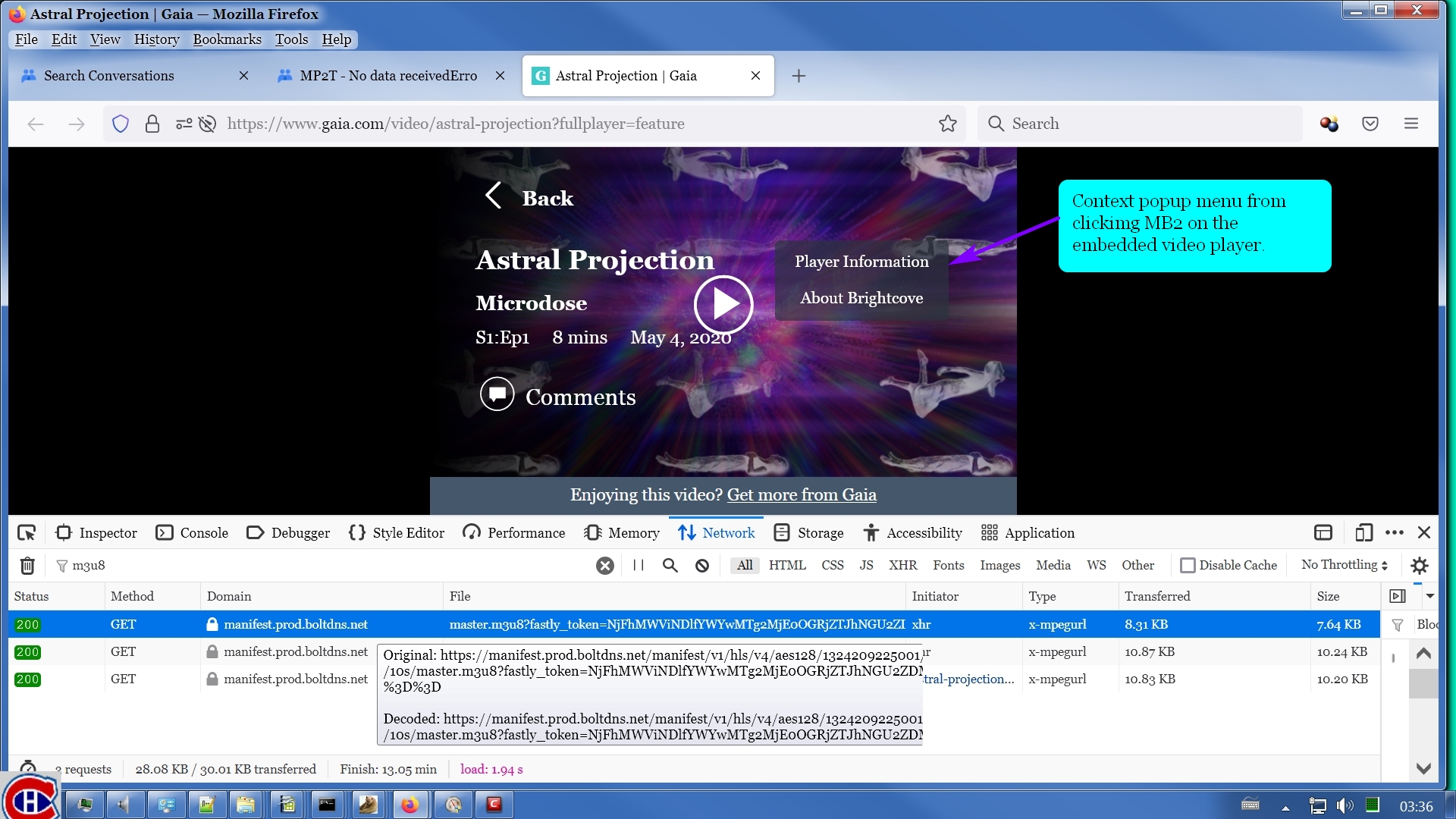

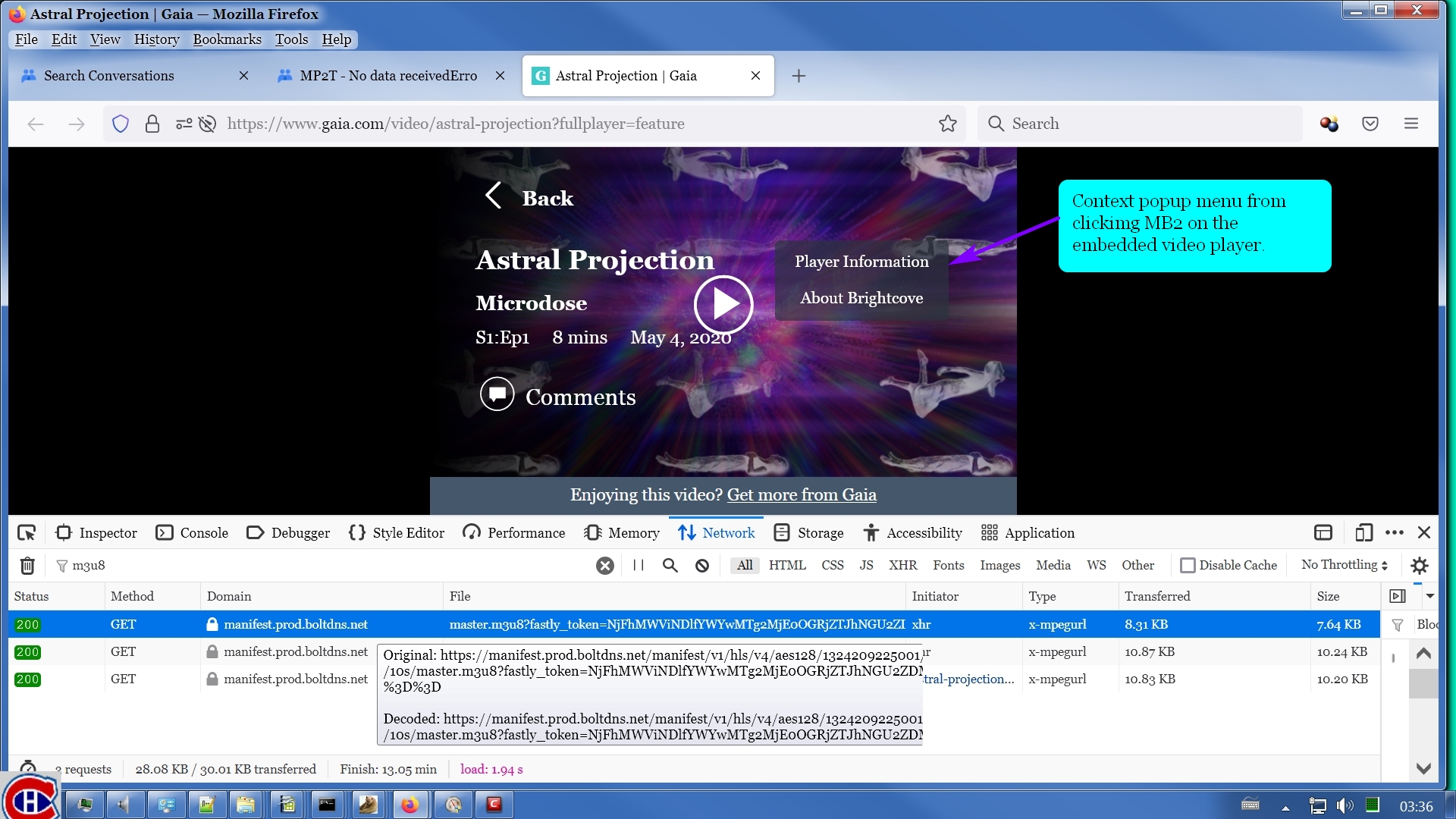

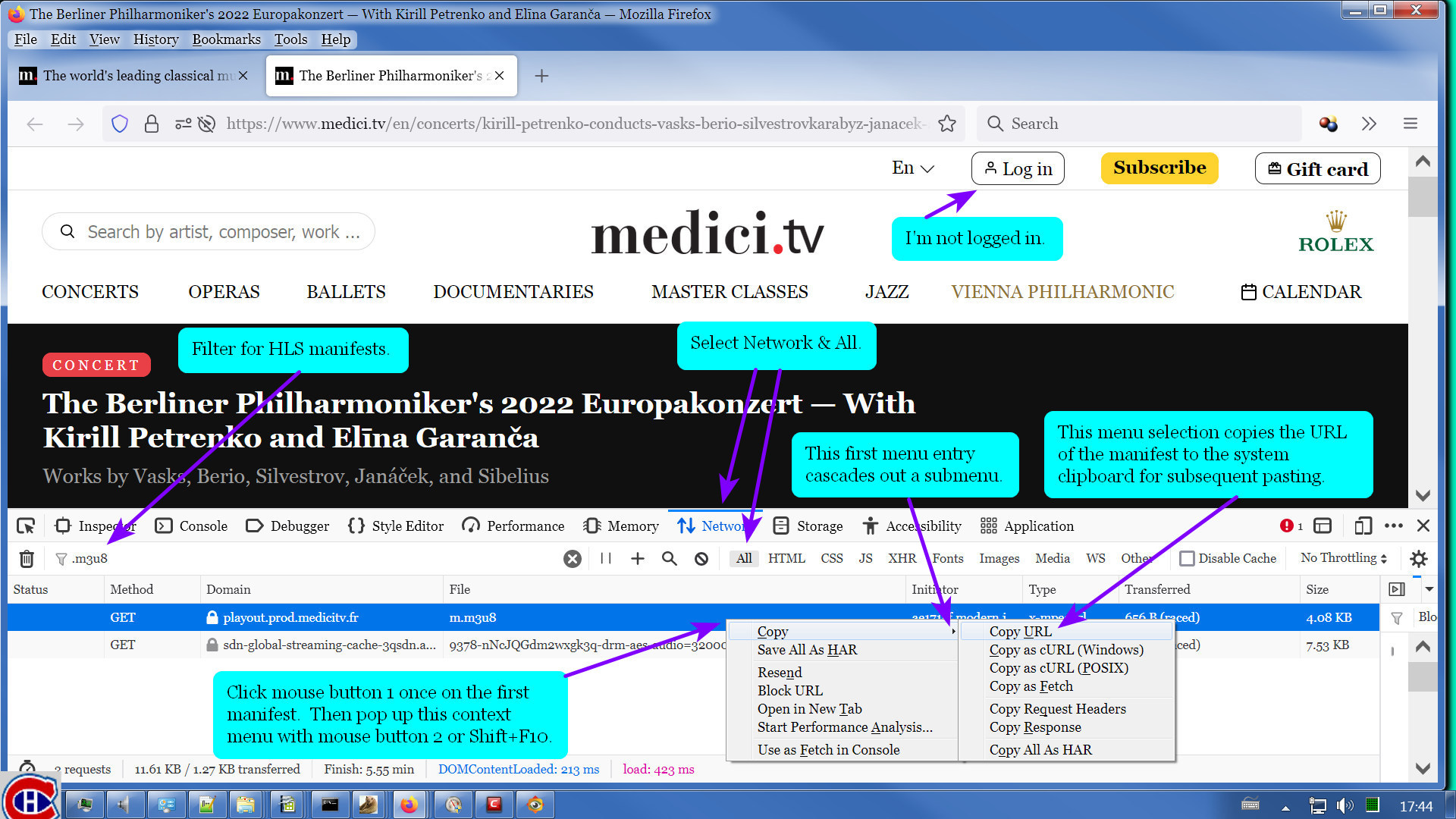

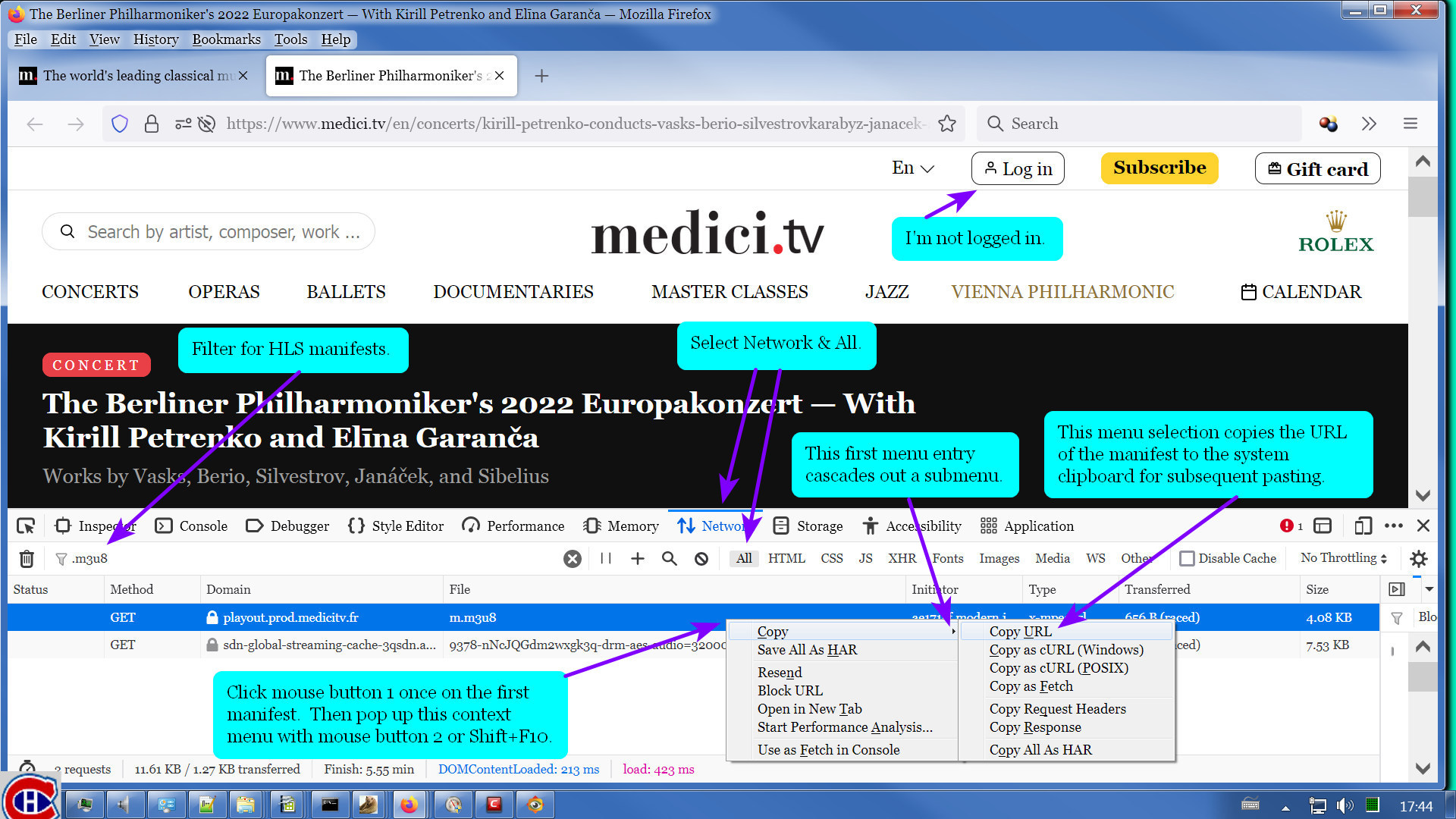

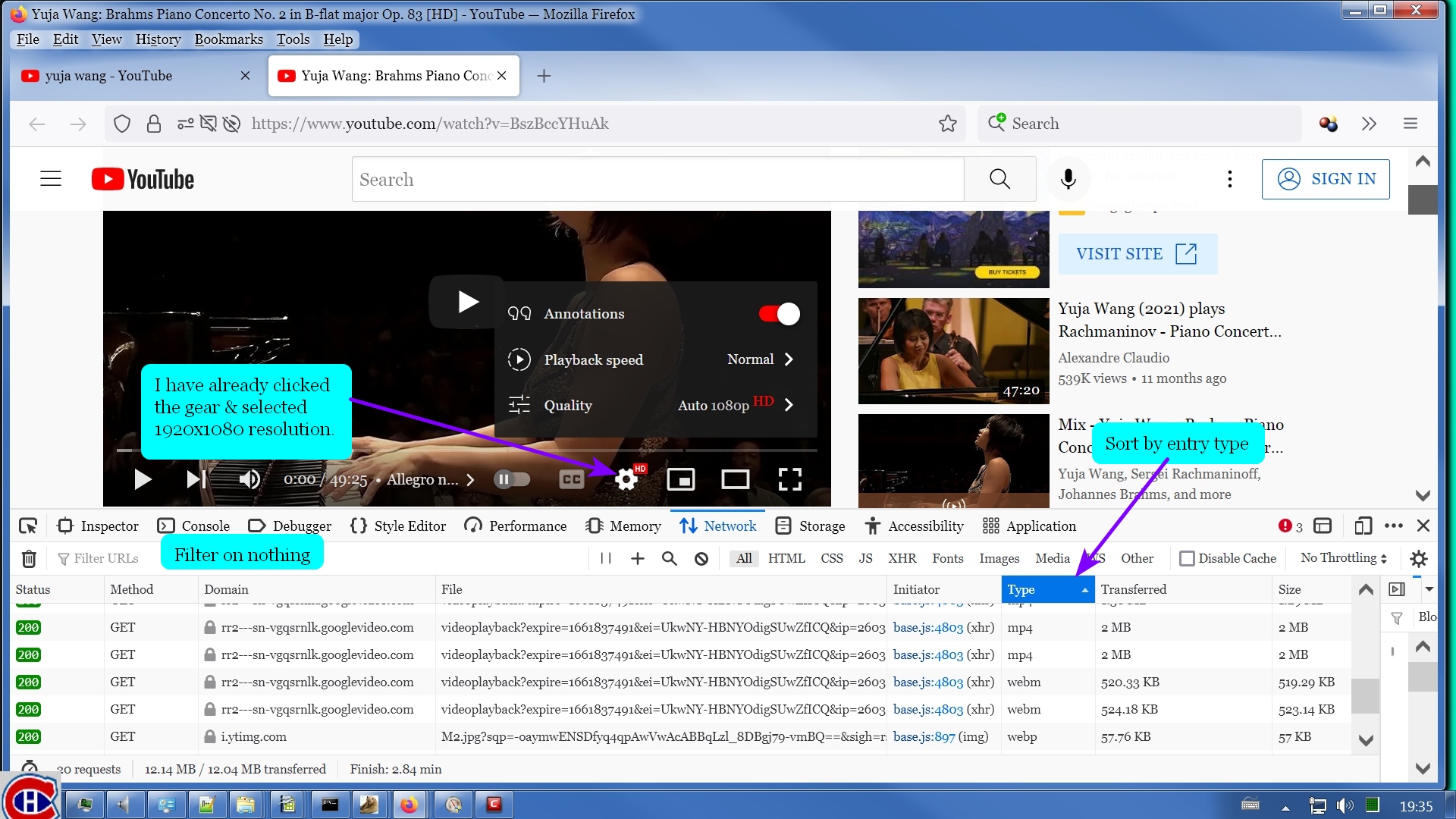

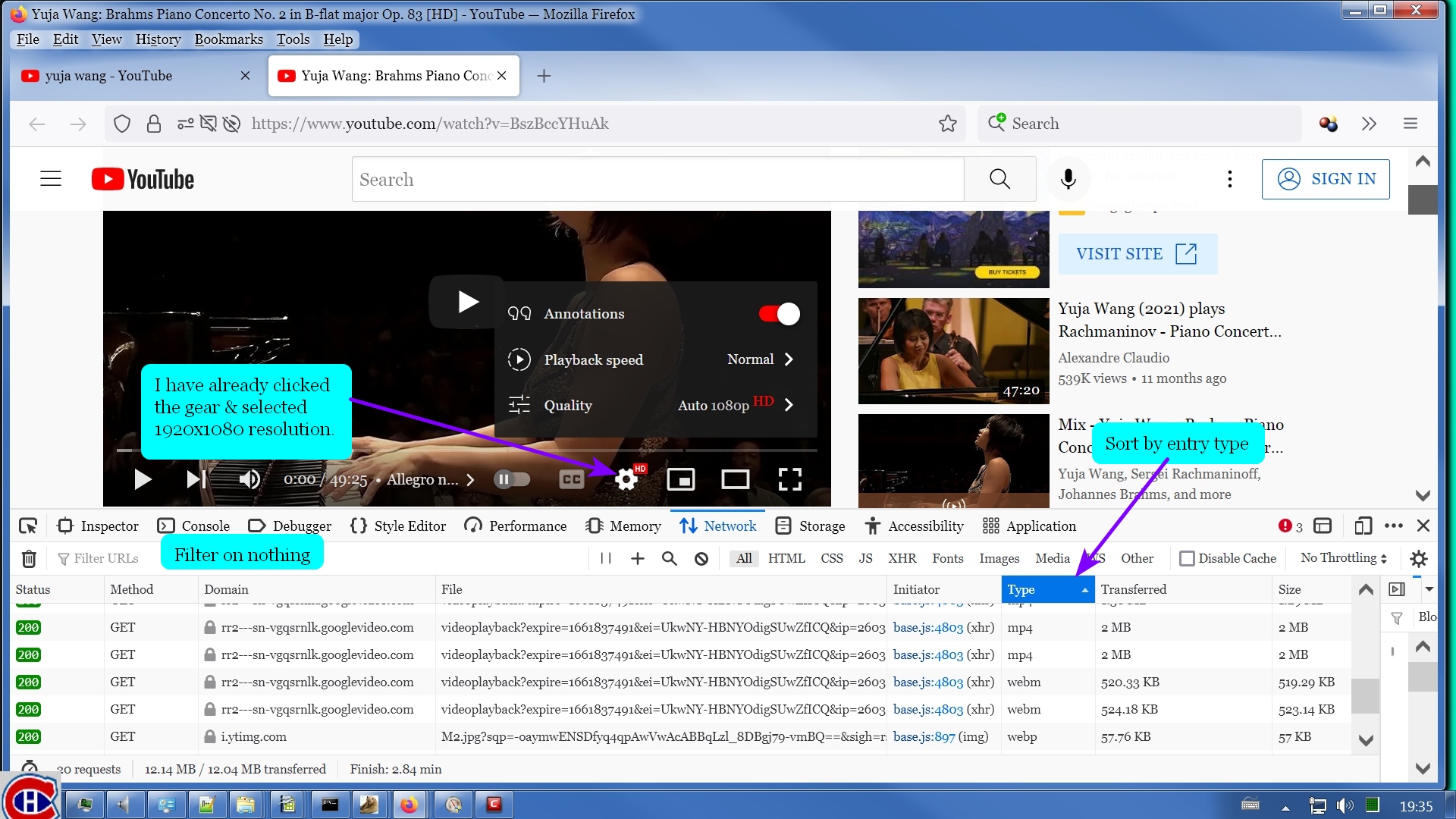

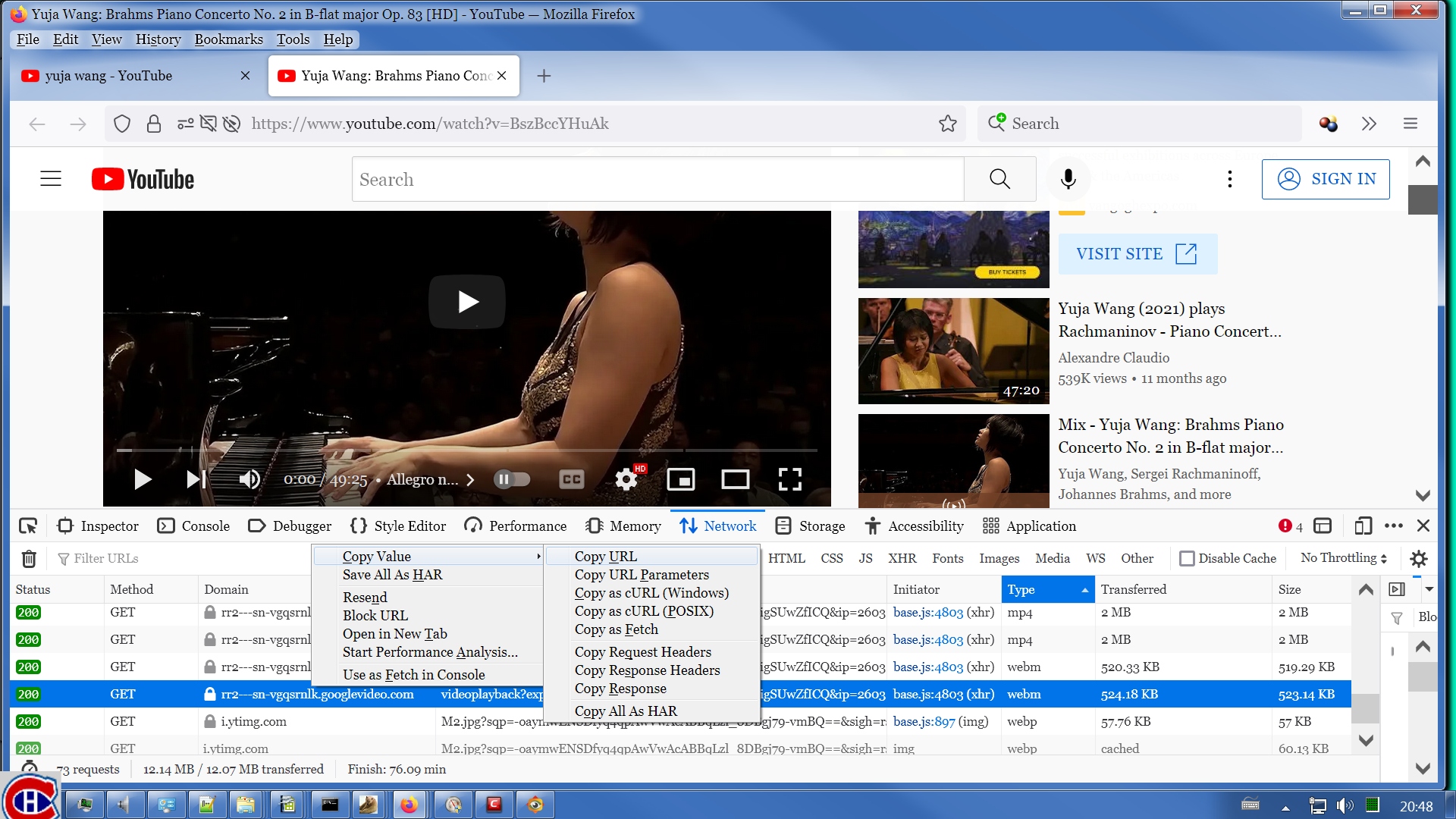

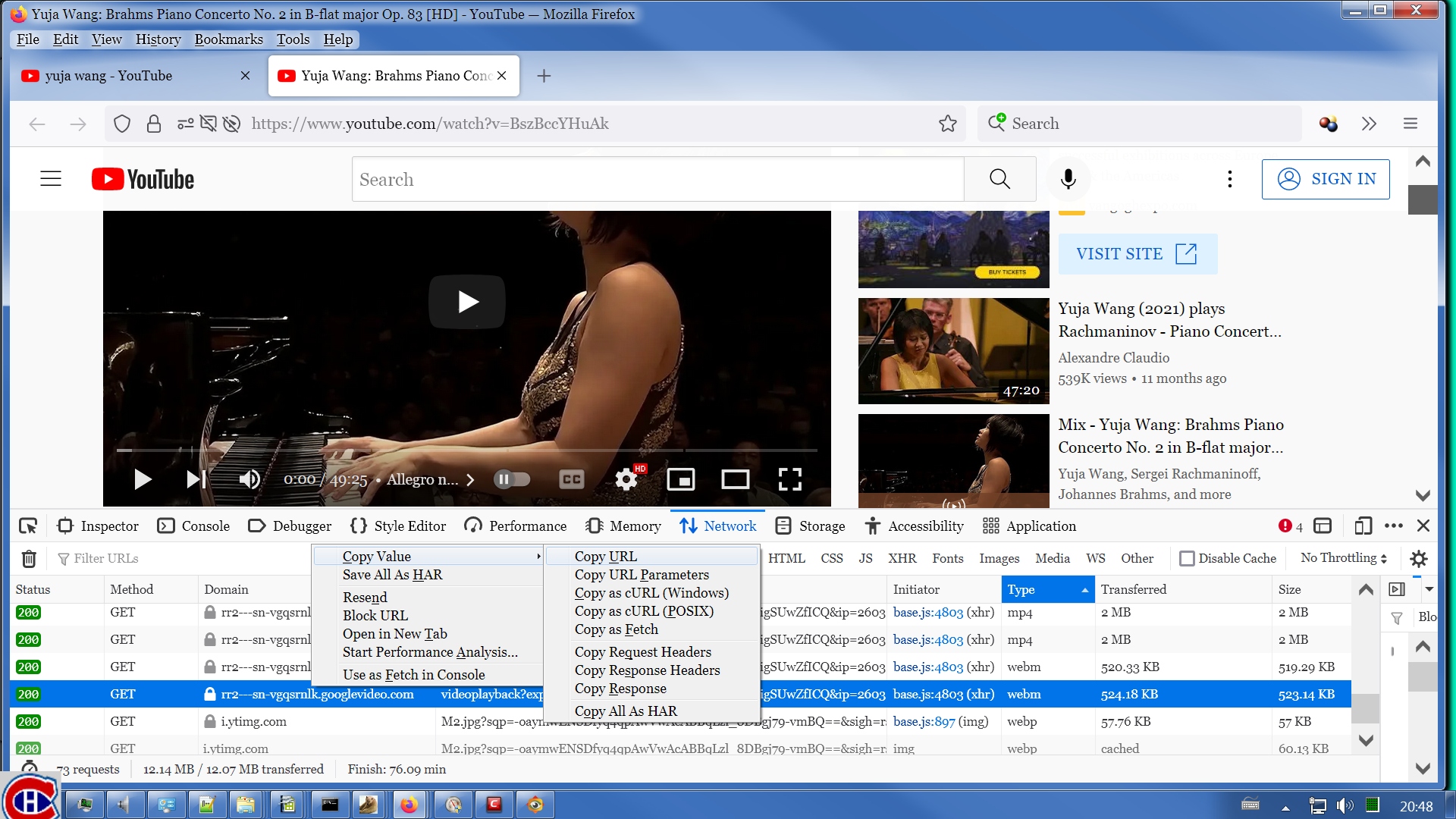

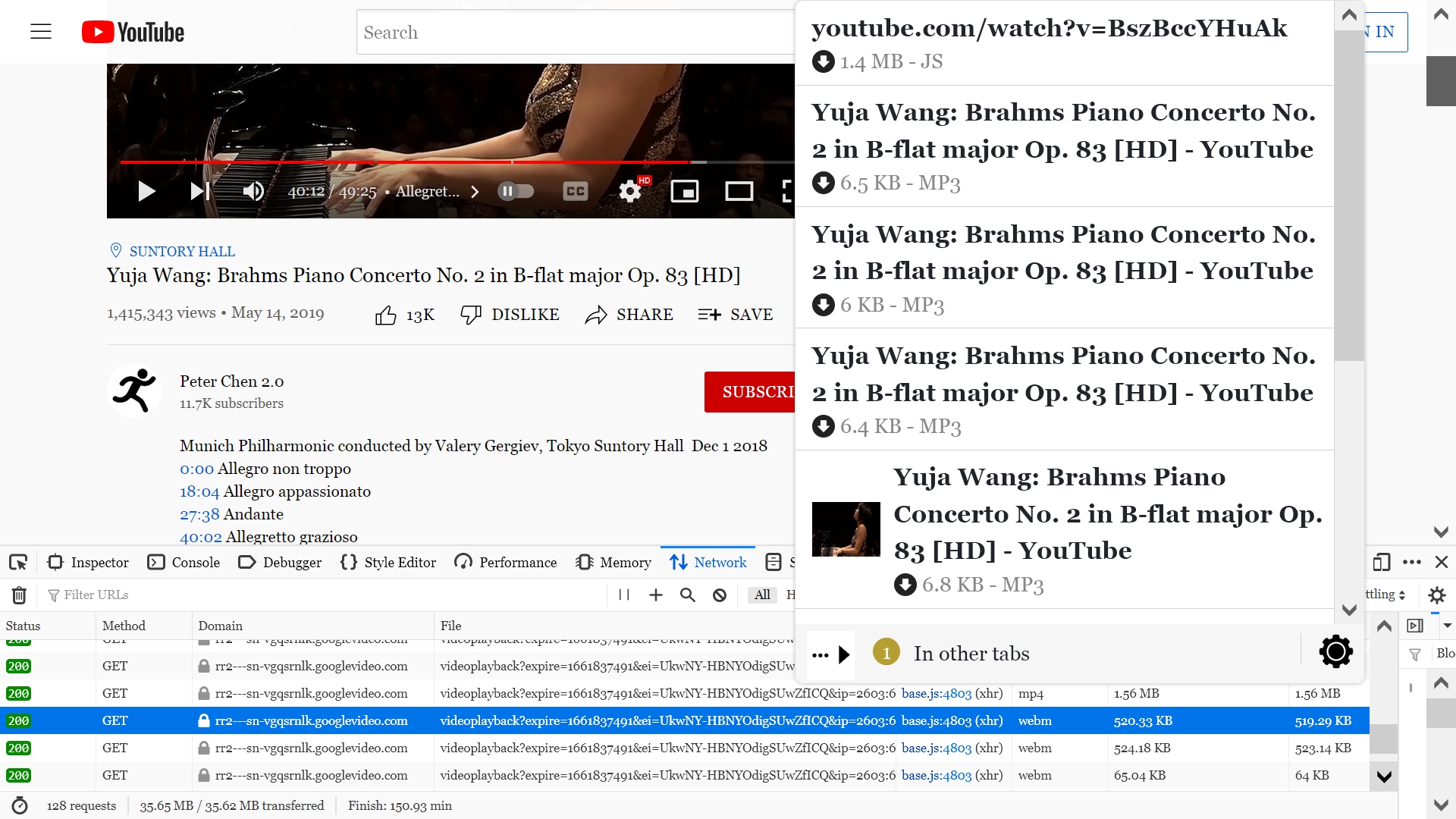

Time to go to plan B. Open the Firefox Network Monitor by pressing the F12 key.

It is possible that you won't have exactly what I'm showing. You need to select the highlighted items Network and All. The other selections to the right of All might also be selected at the same time as All. Unselect them if necessary. Then type m3u8 into the Filter field.

What is m3u8? It is the file name extension used for HLS (HTTP Live Streaming) manifest files, which are also called playlists. The file name for any manifest file of this kind has an extension of m3u8. We are looking for any manifest file that might be present here. Why are we interested in manifests? This will become clear as we go along here.

So far, there isn't anything showing in the Network Monitor. Not too helpful. Hit F5 to reload the page.

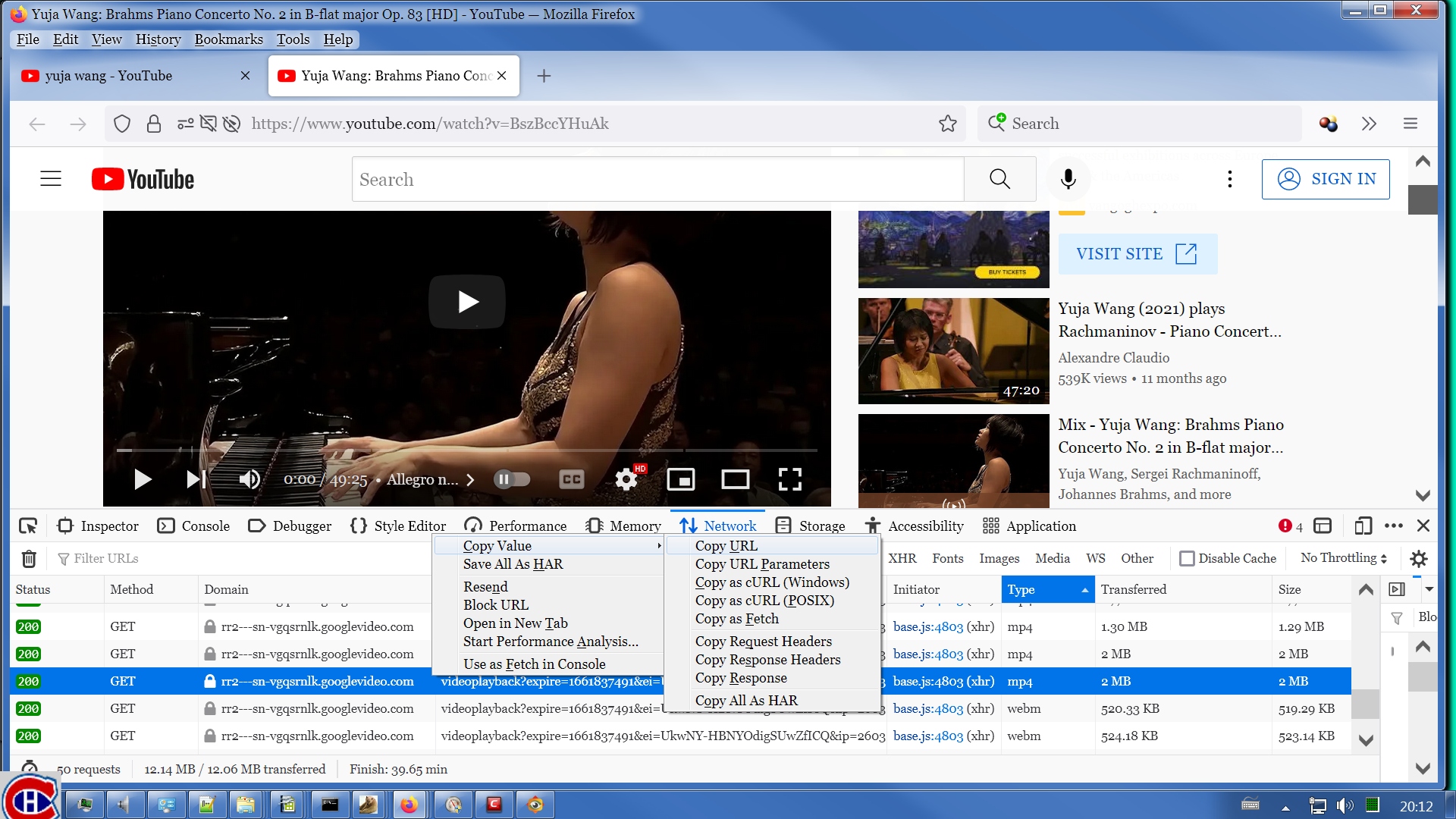

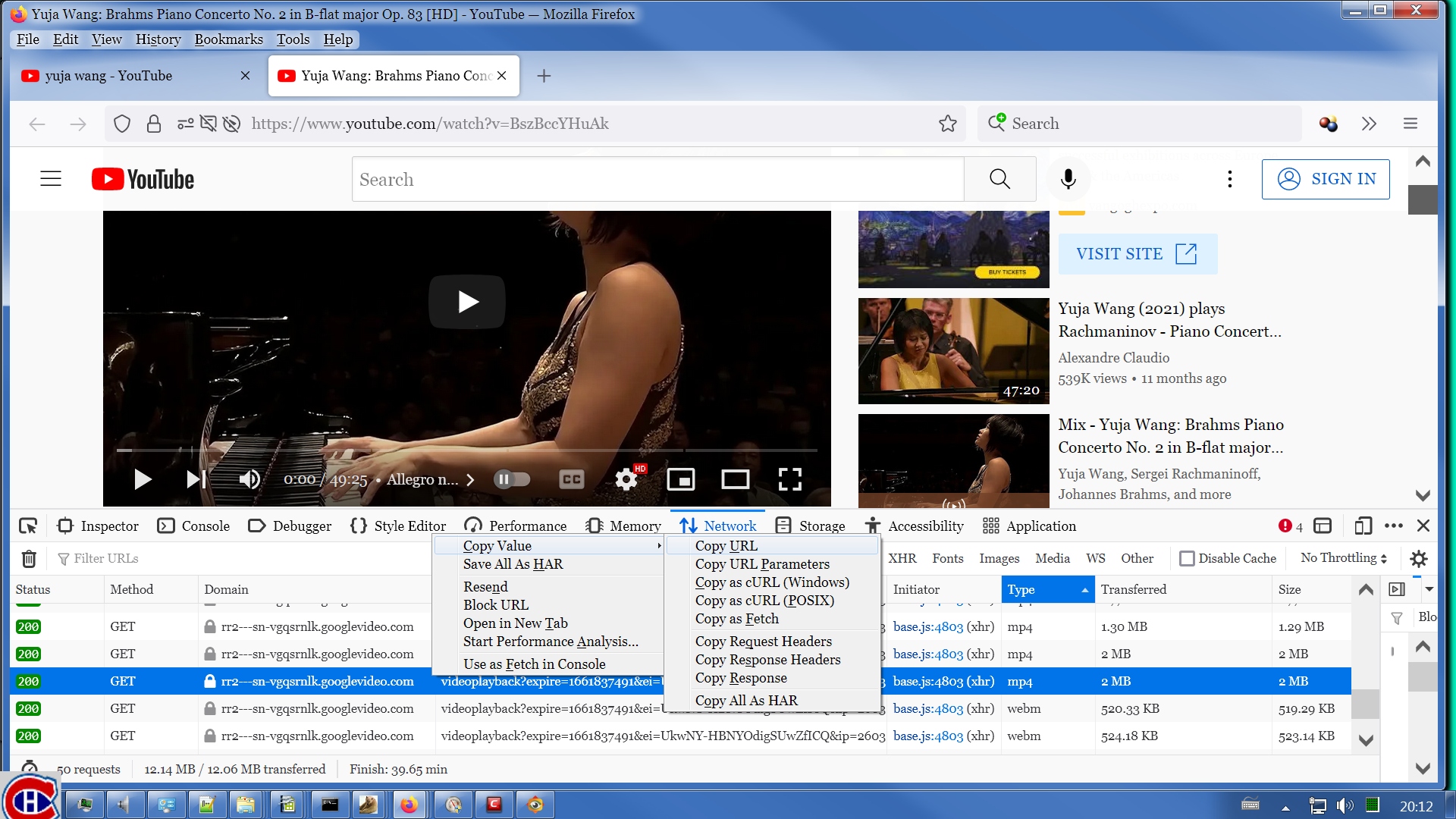

Good. We do have manifests. Some sites don't, notably YouTube. In that case, this whole discussion is of no help at all. Sorry. That's just the way it is. There is no international standard for web sites to present audio-visual content, so there's lots of sites that do not supply manifests. I suspect that in some cases, this is intentionally meant to thwart downloaders such as VDH & people who use the technique I'm discussing here. But where there is a manifest, there is a way. In this case, we are lucky.

So now that we know we have a manifest, what good does it do us? Let's look inside one. On the assumption that the first one is the most important one, let's look at that one. Double click the first entry in the list. In this image, I am hovering the mouse over the entry before double clicking it.

Note how the complete URL in the hover text includes the string shown in the Network Monitor. The Network Monitor shows only the last part of the URL. You have to hover the mouse over the item in the Network Monitor to see the complete URL. Also notice the string m3u8 slyly buried in the middle of all that. That's why you filter. With the filter field empty, the Network Monitor would fill up with all kinds of stuff over time. Try it yourself some time. You'll see what I mean.
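If you ever want to do the same kind of filtering on a list of URLs outside the browser, here is a minimal Python sketch of the idea. It is only an illustration, not what the Network Monitor does internally. The function name `looks_like_manifest` is made up for this example. Note that it is a bit stricter than typing m3u8 into the Filter field: it checks that the path part of the URL ends in .m3u8, ignoring anything after a question mark.

```python
from urllib.parse import urlsplit

def looks_like_manifest(url: str) -> bool:
    """Return True if the path part of the URL ends in .m3u8."""
    # urlsplit separates the path from any ?query, so a trailing
    # "?container=fmp4" does not hide the extension.
    return urlsplit(url).path.lower().endswith(".m3u8")

# Two URLs taken from this post: one manifest, one ordinary page.
urls = [
    "https://video.twimg.com/amplify_video/1463574264683110405/pl/900x720/bHlh8rkjC1GzHuFS.m3u8?container=fmp4",
    "https://twitter.com/i/status/1463581536725475337",
]

manifests = [u for u in urls if looks_like_manifest(u)]
for u in manifests:
    print(u)
```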

So now I double click on that entry. This normally brings up a Firefox dialog offering to display or save or otherwise handle the file. I have long ago gone through the exercise of setting my text editor as the default handler for objects of type m3u8. You do whatever you need to do on your system to get the manifest to display in your text editor. I'm talking about Notepad or a replacement for it. I happen to use Notepad++ but there are others. You see, a manifest is just a plain text file. Nothing mysterious to it. It's plain text that anybody can read. Here is the manifest I got by double clicking that entry in the Network Monitor. The lines are long but I can't figure out how to stop Google from splitting & wrapping the lines. So I've inserted something to indicate where the lines really begin & end. I have attached the Master Manifest as a file so you can see what it really looks like. But that just doesn't serve my purposes. I just want you to understand that some of this text is broken into lines that aren't really lines in the manifest. I'm also showing this text with altered colors because I can't figure out how to tell Google to treat this as code or something like that to make it scroll horizontally. If it gets really confusing, just open the attached manifest in your text editor & follow along there. Turn off line wrapping to see the file as it really is.

#EXTM3U

<new line>

#EXT-X-VERSION:6

<new line>

#EXT-X-INDEPENDENT-SEGMENTS

<new line>

#EXT-X-MEDIA:TYPE=SUBTITLES,GROUP-ID="subs",NAME="English",DEFAULT=NO,FORCED=NO,URI="/amplify_video/1463574264683110405/pl/s0/Su37b71s0nn2C_-b.m3u8",LANGUAGE="en",AUTOSELECT=YES,CHARACTERISTICS="twitter.show-text-when-muted"

<new line>

#EXT-X-STREAM-INF:AVERAGE-BANDWIDTH=288000,BANDWIDTH=288000,RESOLUTION=336x270,CODECS="mp4a.40.2,avc1.4d001e",SUBTITLES="subs"

<new line>

/amplify_video/1463574264683110405/pl/336x270/7mThfhFrLetUC3CT.m3u8?container=fmp4

<new line>

#EXT-X-STREAM-INF:AVERAGE-BANDWIDTH=832000,BANDWIDTH=832000,RESOLUTION=450x360,CODECS="mp4a.40.2,avc1.4d001e",SUBTITLES="subs"

<new line>

/amplify_video/1463574264683110405/pl/450x360/31cFuKED8bVckwfS.m3u8?container=fmp4

<new line>

#EXT-X-STREAM-INF:AVERAGE-BANDWIDTH=2176000,BANDWIDTH=2176000,RESOLUTION=900x720,CODECS="mp4a.40.2,avc1.640020",SUBTITLES="subs"

<new line>

/amplify_video/1463574264683110405/pl/900x720/bHlh8rkjC1GzHuFS.m3u8?container=fmp4

There's a lot of detail in there that will make you dizzy. I will point out the important things you need to focus on. This is a master manifest. It does not describe a stream directly. It contains references to other manifests. That's what makes it a master. Those other manifests contain definitions of streams. This will gradually make more sense as we go along.
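For anyone who prefers to see this structure in code, here is a rough Python sketch that holds the master manifest above with its real line breaks & separates the lines that start with # (the tags) from the lines that are references to other manifests. The names `MASTER_MANIFEST` and `split_manifest` are my own inventions for this illustration. This is a simplification, not a complete manifest parser.

```python
# The master manifest from this post, with its real line breaks.
MASTER_MANIFEST = """\
#EXTM3U
#EXT-X-VERSION:6
#EXT-X-INDEPENDENT-SEGMENTS
#EXT-X-MEDIA:TYPE=SUBTITLES,GROUP-ID="subs",NAME="English",DEFAULT=NO,FORCED=NO,URI="/amplify_video/1463574264683110405/pl/s0/Su37b71s0nn2C_-b.m3u8",LANGUAGE="en",AUTOSELECT=YES,CHARACTERISTICS="twitter.show-text-when-muted"
#EXT-X-STREAM-INF:AVERAGE-BANDWIDTH=288000,BANDWIDTH=288000,RESOLUTION=336x270,CODECS="mp4a.40.2,avc1.4d001e",SUBTITLES="subs"
/amplify_video/1463574264683110405/pl/336x270/7mThfhFrLetUC3CT.m3u8?container=fmp4
#EXT-X-STREAM-INF:AVERAGE-BANDWIDTH=832000,BANDWIDTH=832000,RESOLUTION=450x360,CODECS="mp4a.40.2,avc1.4d001e",SUBTITLES="subs"
/amplify_video/1463574264683110405/pl/450x360/31cFuKED8bVckwfS.m3u8?container=fmp4
#EXT-X-STREAM-INF:AVERAGE-BANDWIDTH=2176000,BANDWIDTH=2176000,RESOLUTION=900x720,CODECS="mp4a.40.2,avc1.640020",SUBTITLES="subs"
/amplify_video/1463574264683110405/pl/900x720/bHlh8rkjC1GzHuFS.m3u8?container=fmp4
"""

def split_manifest(text):
    """Separate tag lines (starting with #) from reference lines."""
    tags, references = [], []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines
        if line.startswith("#"):
            tags.append(line)
        else:
            references.append(line)
    return tags, references

tags, references = split_manifest(MASTER_MANIFEST)
for reference in references:
    print(reference)
```

The references printed at the end are the partial URLs of the other manifests that this master manifest points to.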

The first 3 lines are boilerplate. They are of no interest to us. You can ignore them.

#EXTM3U

<new line>

#EXT-X-VERSION:6

<new line>

#EXT-X-INDEPENDENT-SEGMENTS

<new line>

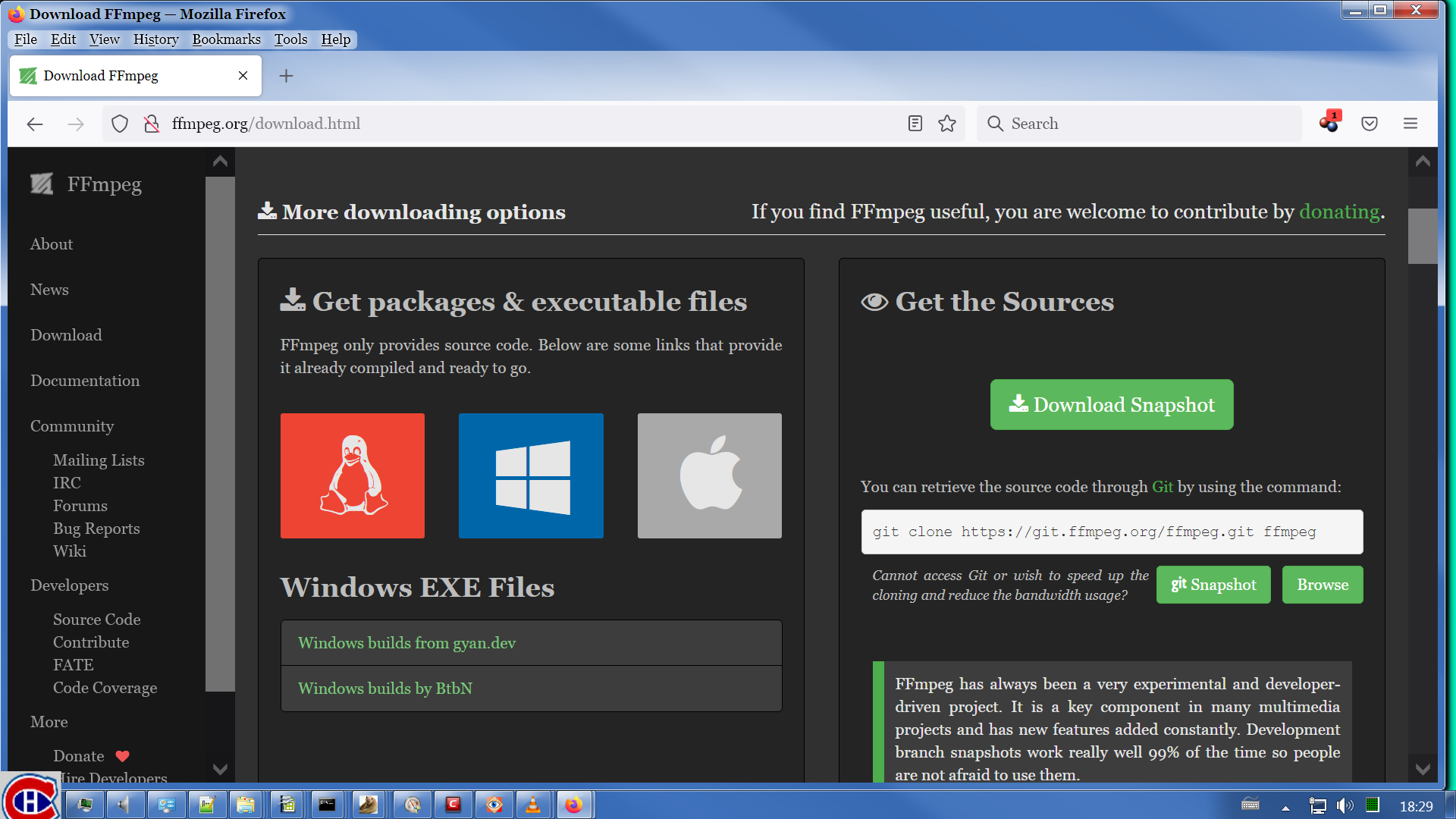

Each line that begins with #EXT-X-MEDIA or #EXT-X-STREAM-INF describes a stream of data that is stored on Twitter & that we can download. But we are not going to use VDH to do the download, obviously. We already tried that & it didn't work. The tool we are going to use is ffmpeg. You can get ffmpeg at ffmpeg.org.

It doesn't take a degree in rocket science to figure out what to do next. When you do the obvious, you get this page:

The 3 big icons on the left are for, left to right, Linux, Windows, macOS. When you hover your mouse over each one, the information displayed below the icons changes. I'm on Windows so that's what I'm showing. You select whichever platform you're on. Clicking one of the links below your icon takes you to another page where you can select what you need to download. Generally, this offers a range of selections. Ffmpeg is a rather complex piece of software that can be built with more or fewer features. Michel has included a trimmed down build of ffmpeg with VDH. I have tried to use that for what we're discussing here but it doesn't work for everything. So I have always gotten a full-featured version of ffmpeg. The page you download from will explain the details of each available package. If you're so inclined, you can even get the source code of ffmpeg. I have never been so inclined.

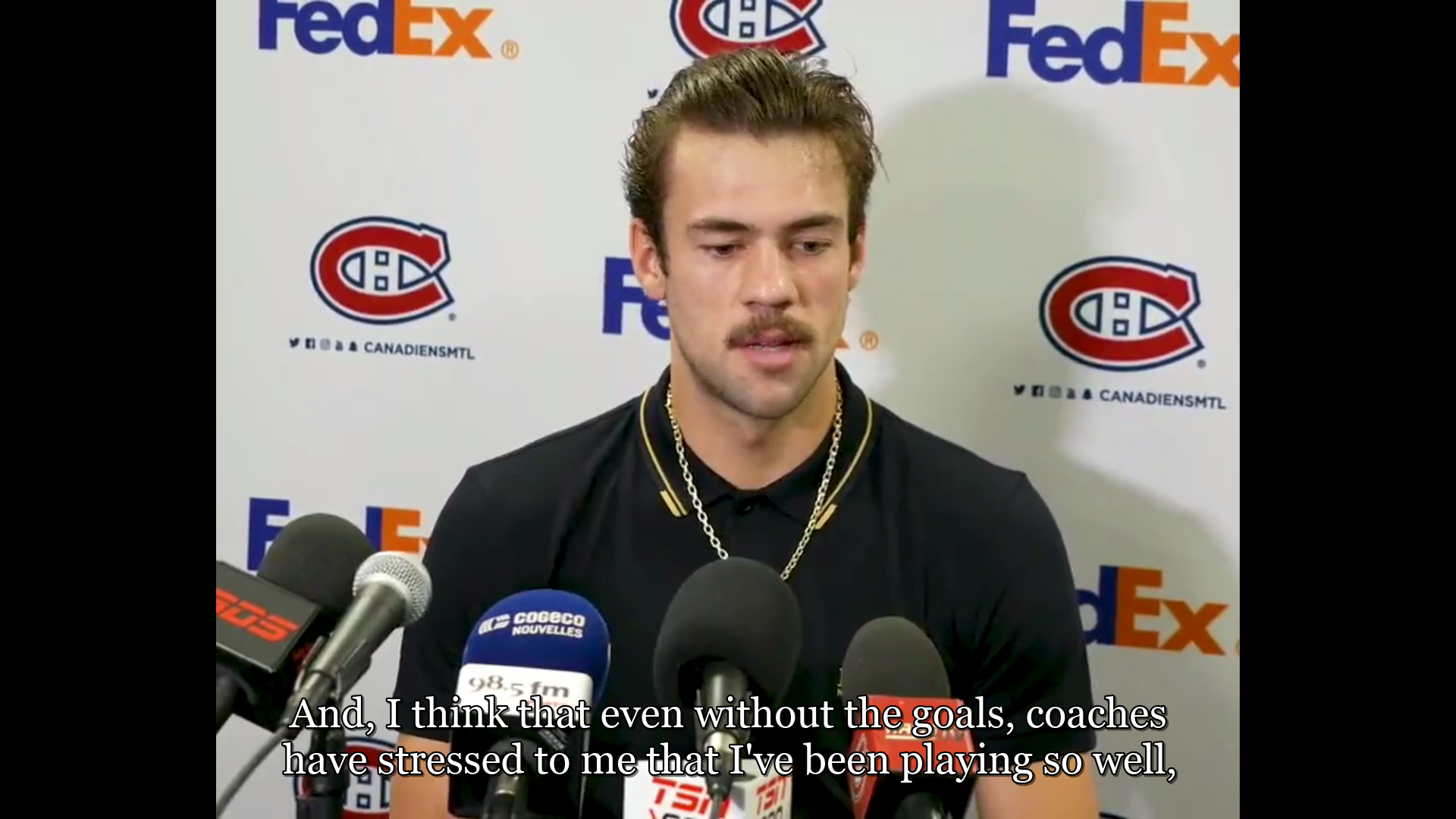

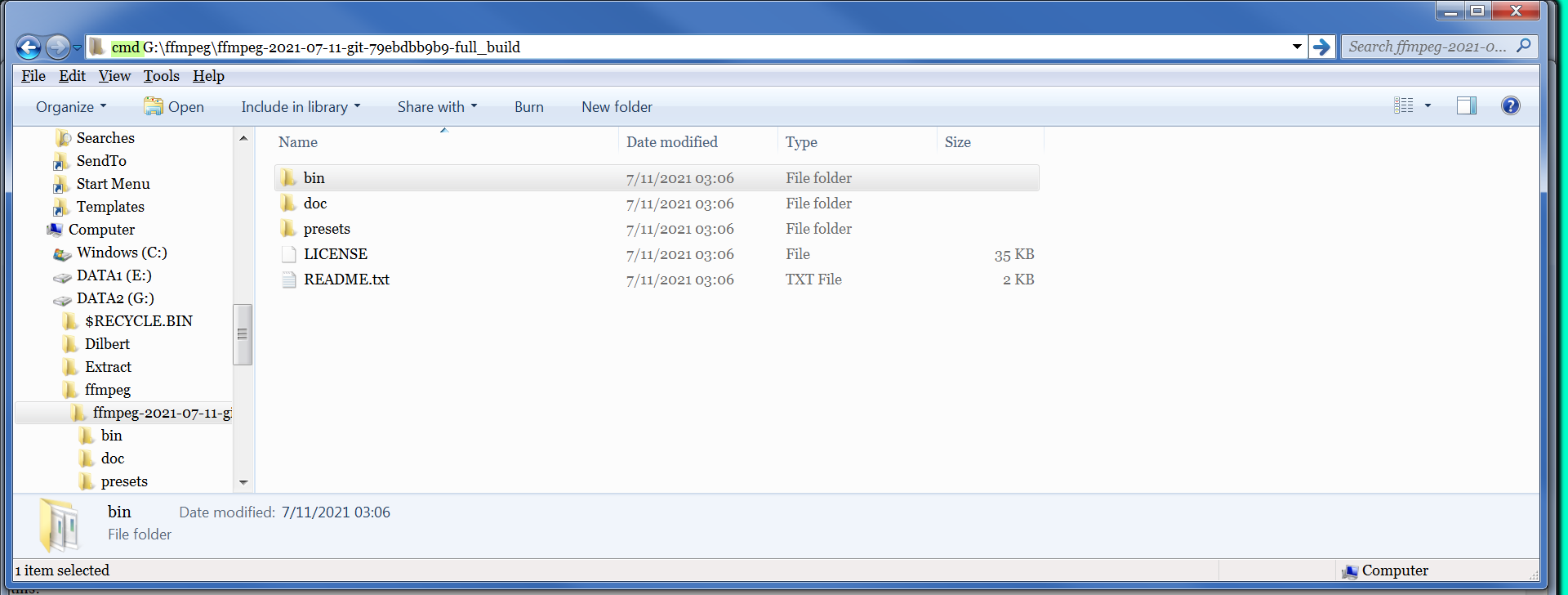

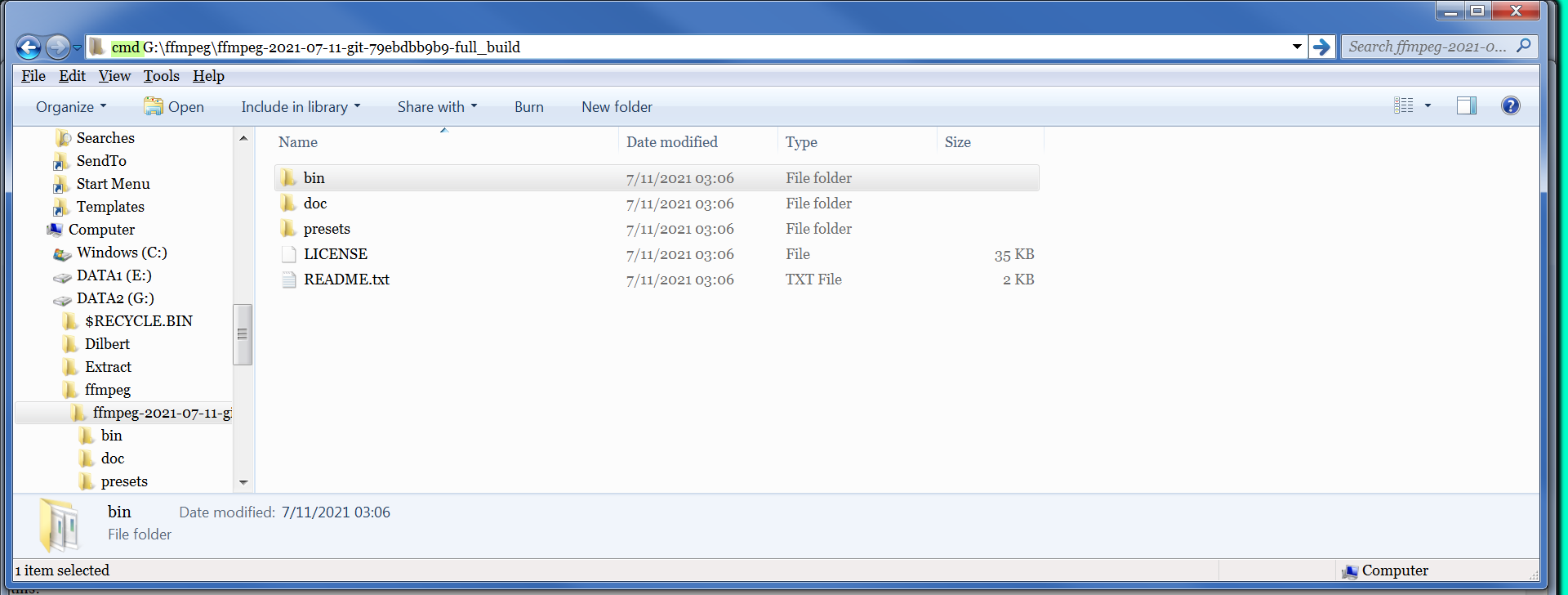

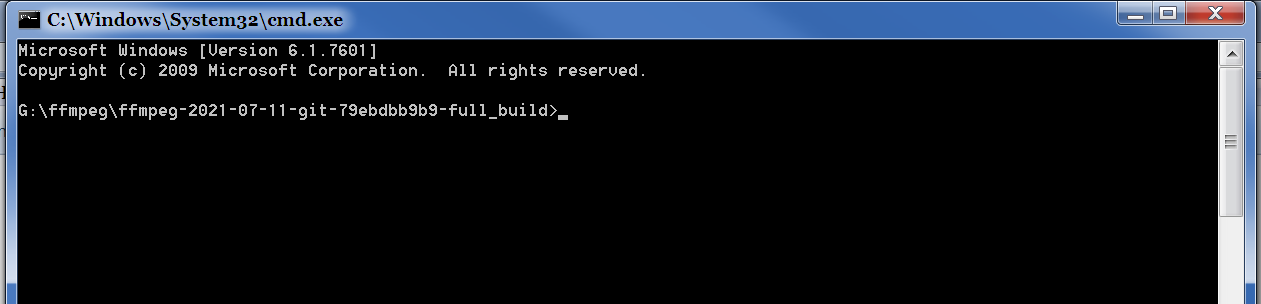

The ffmpeg package is just a zip file. There is no installer utility. You just unzip the zipfile somewhere on your disk space. Here's what mine looks like:

Not much to it. You'll note on the left that there is a subdirectory named doc. There is ffmpeg documentation in there in HTML format. Feel free to increase your frustration level by trying to make heads or tails of any of it. Take it from me, it's not usually a rewarding effort. You'll also note that I have a rather weird directory name. That's just what came with the package. It has the benefit of including the build date within it. As you can see, I downloaded this on July 11, 2021. You'll get whatever is there on the day you do this.

When I get a new ffmpeg (maybe in a few months), I will unzip it into the directory you can see called ffmpeg. That way, I will have 2 versions of ffmpeg side by side. I will try out the newer version a few times until I'm satisfied that it works properly. Then I will simply delete the directory tree for the old version. You could add the ffmpeg bin directory to your system PATH environment variable to integrate ffmpeg with your Windows system. I have not bothered. It's more flexible this way. But to each his own.

But this is preparatory work. Let's get back to the manifest. Look at the first stream definition:

#EXT-X-MEDIA:TYPE=SUBTITLES,GROUP-ID="subs",NAME="English",DEFAULT=NO,FORCED=NO,URI="/amplify_video/1463574264683110405/pl/s0/Su37b71s0nn2C_-b.m3u8",LANGUAGE="en",AUTOSELECT=YES,CHARACTERISTICS="twitter.show-text-when-muted"

Specifically, look for TYPE=SUBTITLES.

#EXT-X-MEDIA:TYPE=SUBTITLES,GROUP-ID="subs",NAME="English",DEFAULT=NO,FORCED=NO,URI="/amplify_video/1463574264683110405/pl/s0/Su37b71s0nn2C_-b.m3u8",LANGUAGE="en",AUTOSELECT=YES,CHARACTERISTICS="twitter.show-text-when-muted"

We are lucking out. This video has subtitles. Many don't. Look at the URI parameter, which I have extracted below to remove the unwanted line break:

URI="/amplify_video/1463574264683110405/pl/s0/Su37b71s0nn2C_-b.m3u8"

First, notice that it ends with m3u8. So this stream definition points off to another manifest. Also, note that this is not a complete URL. Go back to the hover text in the Network Monitor. Note how that URL starts with:

https://video.twimg.com/amplify_video/

To get the full URL of the stream manifest for the subtitles stream, you put the two together. Both pieces contain /amplify_video/, so make sure it appears only once in the result:

https://video.twimg.com/amplify_video/1463574264683110405/pl/s0/Su37b71s0nn2C_-b.m3u8

Remember this URL. I'll be using it later.
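If you would rather not splice URLs together by hand, Python's standard `urljoin` function can do the same job. This is just a sketch of the idea. The names `BASE` & `SUBTITLE_URI` are my own, & for the base I am using only the beginning of the master manifest URL as seen in the hover text, which is all that's needed here because the partial URL starts with a slash.

```python
from urllib.parse import urljoin

# Start of the master manifest URL, as seen in the Network Monitor hover text.
BASE = "https://video.twimg.com/amplify_video/"

# The URI parameter copied out of the #EXT-X-MEDIA line.
SUBTITLE_URI = "/amplify_video/1463574264683110405/pl/s0/Su37b71s0nn2C_-b.m3u8"

# Because SUBTITLE_URI begins with "/", urljoin keeps only the scheme
# and host from BASE and replaces the whole path, so /amplify_video/
# is not repeated.
full_url = urljoin(BASE, SUBTITLE_URI)
print(full_url)
```

The result is the same full URL I built by hand above.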

Now look at this bit, GROUP-ID="subs":

#EXT-X-MEDIA:TYPE=SUBTITLES,GROUP-ID="subs",NAME="English",DEFAULT=NO,FORCED=NO,URI="/amplify_video/1463574264683110405/pl/s0/Su37b71s0nn2C_-b.m3u8",LANGUAGE="en",AUTOSELECT=YES,CHARACTERISTICS="twitter.show-text-when-muted"

This is how a manifest gives a name to a stream description. You can see that not every stream description in the manifest has a GROUP-ID. But this one does & it will become apparent why as we go along. So "subs" is the name of this stream.

Following the subtitles definition in our master manifest we have 3 more stream descriptions. They are all quite similar. I want to focus on the last one:

#EXT-X-STREAM-INF:AVERAGE-BANDWIDTH=2176000,BANDWIDTH=2176000,RESOLUTION=900x720,CODECS="mp4a.40.2,avc1.640020",SUBTITLES="subs"

/amplify_video/1463574264683110405/pl/900x720/bHlh8rkjC1GzHuFS.m3u8?container=fmp4

The first thing I want to point out is the part that says RESOLUTION=900x720.

#EXT-X-STREAM-INF:AVERAGE-BANDWIDTH=2176000,BANDWIDTH=2176000,RESOLUTION=900x720,CODECS="mp4a.40.2,avc1.640020",SUBTITLES="subs"

/amplify_video/1463574264683110405/pl/900x720/bHlh8rkjC1GzHuFS.m3u8?container=fmp4

This is the best resolution being offered here. The other 2 stream descriptions have lower resolutions.

Now focus on BANDWIDTH=2176000.

#EXT-X-STREAM-INF:AVERAGE-BANDWIDTH=2176000,BANDWIDTH=2176000,RESOLUTION=900x720,CODECS="mp4a.40.2,avc1.640020",SUBTITLES="subs"

/amplify_video/1463574264683110405/pl/900x720/bHlh8rkjC1GzHuFS.m3u8?container=fmp4

BANDWIDTH is the peak bit rate of the stream in bits per second. This gives a rough estimate of the quality of the video. Not the resolution, the quality. You could encounter manifests that show multiple stream descriptions of the same resolution. When that happens, just look at the BANDWIDTH parameter. It is likely that the value of BANDWIDTH will be different for each stream description of that particular resolution. The higher the BANDWIDTH value, the better quality the video will be at that resolution. Of course, the file you get will be larger as well. We don't happen to have that much choice in this case. That just makes things a tad simpler.
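Picking the stream with the highest BANDWIDTH is easy to do by eye with 3 entries, but here is a rough Python sketch of the same selection in case you face a longer manifest. Everything named here (`LINES`, `parse_attributes`, `list_variants`) is invented for the illustration, & the little regular expression is a simplification that handles the parameters seen in this manifest, not every case the manifest format allows.

```python
import re

# The three variant entries from the master manifest, one item per real line.
LINES = [
    '#EXT-X-STREAM-INF:AVERAGE-BANDWIDTH=288000,BANDWIDTH=288000,RESOLUTION=336x270,CODECS="mp4a.40.2,avc1.4d001e",SUBTITLES="subs"',
    "/amplify_video/1463574264683110405/pl/336x270/7mThfhFrLetUC3CT.m3u8?container=fmp4",
    '#EXT-X-STREAM-INF:AVERAGE-BANDWIDTH=832000,BANDWIDTH=832000,RESOLUTION=450x360,CODECS="mp4a.40.2,avc1.4d001e",SUBTITLES="subs"',
    "/amplify_video/1463574264683110405/pl/450x360/31cFuKED8bVckwfS.m3u8?container=fmp4",
    '#EXT-X-STREAM-INF:AVERAGE-BANDWIDTH=2176000,BANDWIDTH=2176000,RESOLUTION=900x720,CODECS="mp4a.40.2,avc1.640020",SUBTITLES="subs"',
    "/amplify_video/1463574264683110405/pl/900x720/bHlh8rkjC1GzHuFS.m3u8?container=fmp4",
]

# NAME=value pairs; a value is either a quoted string (which may
# contain commas, as CODECS does) or everything up to the next comma.
ATTRIBUTE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')

def parse_attributes(tag_line):
    """Turn the parameters after the first colon into a dictionary."""
    attribute_text = tag_line.partition(":")[2]
    return {name: value.strip('"') for name, value in ATTRIBUTE.findall(attribute_text)}

def list_variants(lines):
    """Pair each #EXT-X-STREAM-INF line with the partial URL on the next line."""
    variants = []
    for tag_line, uri_line in zip(lines, lines[1:]):
        if tag_line.startswith("#EXT-X-STREAM-INF:"):
            attributes = parse_attributes(tag_line)
            attributes["URI"] = uri_line
            variants.append(attributes)
    return variants

# Choose the entry with the largest BANDWIDTH value.
best = max(list_variants(LINES), key=lambda v: int(v["BANDWIDTH"]))
print(best["RESOLUTION"], best["URI"])
```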

Notice that this stream description takes up 2 lines. It may look like 3 lines, but that's just Google automatically splitting & wrapping the 2 lines. The real manifest has just 2 lines here:

#EXT-X-STREAM-INF:AVERAGE-BANDWIDTH=2176000,BANDWIDTH=2176000,RESOLUTION=900x720,CODECS="mp4a.40.2,avc1.640020",SUBTITLES="subs"

/amplify_video/1463574264683110405/pl/900x720/bHlh8rkjC1GzHuFS.m3u8?container=fmp4

There's all the various parameters on the first line. Then there's the partial URL on the second line. Note how this URL has .m3u8 hiding in it, so this is another reference from our master manifest to a stream manifest. As I describe above, this partial URL can be turned into a complete URL like this:

https://video.twimg.com/amplify_video/1463574264683110405/pl/900x720/bHlh8rkjC1GzHuFS.m3u8?container=fmp4

Just for fun, navigate to this last URL. As it happens, it's the third entry in the Network Monitor. Just double click that. You will see that this is another manifest that looks completely unlike our master manifest here. It is the description of the actual stream itself. I won't be discussing this at all. You don't need to know anything about what's in the stream manifest. You just need to know its URL. And given the bizarre URLs we're looking at here, which are typical of what you'll find around the web, we should all be grateful for copy/paste.

Now focus on this bit, SUBTITLES="subs", at the end of the first line:

#EXT-X-STREAM-INF:AVERAGE-BANDWIDTH=2176000,BANDWIDTH=2176000,RESOLUTION=900x720,CODECS="mp4a.40.2,avc1.640020",SUBTITLES="subs"

/amplify_video/1463574264683110405/pl/900x720/bHlh8rkjC1GzHuFS.m3u8?container=fmp4

This is a reference back from this stream description to the earlier stream description.

#EXT-X-MEDIA:TYPE=SUBTITLES,GROUP-ID="subs",NAME="English",DEFAULT=NO,FORCED=NO,URI="/amplify_video/1463574264683110405/pl/s0/Su37b71s0nn2C_-b.m3u8",LANGUAGE="en",AUTOSELECT=YES,CHARACTERISTICS="twitter.show-text-when-muted"

#EXT-X-STREAM-INF:AVERAGE-BANDWIDTH=2176000,BANDWIDTH=2176000,RESOLUTION=900x720,CODECS="mp4a.40.2,avc1.640020",SUBTITLES="subs"

/amplify_video/1463574264683110405/pl/900x720/bHlh8rkjC1GzHuFS.m3u8?container=fmp4

SUBTITLES="subs" in the last stream description refers back to GROUP-ID="subs" in the earlier stream description. The value "subs" is the same in both places. That is the connection. You'll note that all 3 #EXT-X-STREAM-INF stream descriptions refer back to the same subtitle stream description. That's not really surprising. The resolution is different but the video is the same, so all the streams share the same subtitles. You will encounter this reference structure fairly often if you have occasion to be looking at manifests with any regularity.
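Here is a small Python sketch of that reference structure, in case it helps to see it spelled out. It looks up the SUBTITLES value of each #EXT-X-STREAM-INF line in a table keyed by the GROUP-ID of the #EXT-X-MEDIA line. The names `MEDIA_LINE`, `STREAM_LINES` & `parse_attributes` are mine, & the parsing is a simplification that is only meant to cope with the lines shown in this post.

```python
import re

MEDIA_LINE = '#EXT-X-MEDIA:TYPE=SUBTITLES,GROUP-ID="subs",NAME="English",DEFAULT=NO,FORCED=NO,URI="/amplify_video/1463574264683110405/pl/s0/Su37b71s0nn2C_-b.m3u8",LANGUAGE="en",AUTOSELECT=YES,CHARACTERISTICS="twitter.show-text-when-muted"'

STREAM_LINES = [
    '#EXT-X-STREAM-INF:AVERAGE-BANDWIDTH=288000,BANDWIDTH=288000,RESOLUTION=336x270,CODECS="mp4a.40.2,avc1.4d001e",SUBTITLES="subs"',
    '#EXT-X-STREAM-INF:AVERAGE-BANDWIDTH=832000,BANDWIDTH=832000,RESOLUTION=450x360,CODECS="mp4a.40.2,avc1.4d001e",SUBTITLES="subs"',
    '#EXT-X-STREAM-INF:AVERAGE-BANDWIDTH=2176000,BANDWIDTH=2176000,RESOLUTION=900x720,CODECS="mp4a.40.2,avc1.640020",SUBTITLES="subs"',
]

# NAME=value pairs; quoted values may contain commas.
ATTRIBUTE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')

def parse_attributes(tag_line):
    """Turn the parameters after the first colon into a dictionary."""
    attribute_text = tag_line.partition(":")[2]
    return {name: value.strip('"') for name, value in ATTRIBUTE.findall(attribute_text)}

# Table of subtitle streams, keyed by their GROUP-ID.
subtitle_groups = {}
media = parse_attributes(MEDIA_LINE)
if media.get("TYPE") == "SUBTITLES":
    subtitle_groups[media["GROUP-ID"]] = media["URI"]

# For each video stream, follow SUBTITLES back to the matching GROUP-ID.
subtitles_by_resolution = {}
for line in STREAM_LINES:
    stream = parse_attributes(line)
    subtitles_by_resolution[stream["RESOLUTION"]] = subtitle_groups.get(stream.get("SUBTITLES"))

for resolution, uri in subtitles_by_resolution.items():
    print(resolution, uri)
```

All 3 resolutions come out pointing at the same subtitles manifest, which is the connection described above.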

We have now identified everything we need to download this video and its subtitles. Let's get to it. I'm going to describe the process using basic steps. I don't do these things exactly like this. I have written everything into a script so I don't have to retype these complicated commands every time. I leave it to you to create a script convenient for you on your system.

Wild Willy

Nov 25, 2021, 6:12:12 AM

to Video DownloadHelper Q&A

Here is the command to download the video:

"G:\ffmpeg\ffmpeg-2021-07-11-git-79ebdbb9b9-full_build\bin\ffmpeg.exe" -protocol_whitelist file,crypto,data,http,https,tls,tcp -hwaccel auto -i "https://video.twimg.com/amplify_video/1463574264683110405/pl/900x720/bHlh8rkjC1GzHuFS.m3u8?container=fmp4" -codec: copy "q:\VDH Testing\Ryan Poehling.mp4" 1>"q:\VDH Testing\Ryan Poehling.Err" 2>"q:\VDH Testing\Ryan Poehling.Log"

Let's break this down piece by piece. In general, the syntax for ffmpeg goes like this:

ffmpeg [parms for input file] -i [input file name] [parms for output file] [output file name]

The first piece is this, the full path to ffmpeg.exe at the front of the command:

"G:\ffmpeg\ffmpeg-2021-07-11-git-79ebdbb9b9-full_build\bin\ffmpeg.exe" -protocol_whitelist file,crypto,data,http,https,tls,tcp -hwaccel auto -i "https://video.twimg.com/amplify_video/1463574264683110405/pl/900x720/bHlh8rkjC1GzHuFS.m3u8?container=fmp4" -codec: copy "q:\VDH Testing\Ryan Poehling.mp4" 1>"q:\VDH Testing\Ryan Poehling.Err" 2>"q:\VDH Testing\Ryan Poehling.Log"

I surround the full thing with double quotation marks. This isn't strictly necessary here. But as you'll see below, I have spaces in my file specifications. Some of my directories have spaces in their names & some of my files do as well. These need quotation marks because of the spaces. So I'm just in the habit of always coding quotation marks so I don't accidentally forget.
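If your script happens to be written in Python rather than as a batch file, one way to sidestep the quoting question is to build the command as a list of separate pieces in the order given by the general syntax. This is only a sketch under that assumption, not the script I actually use. The function name `build_ffmpeg_command` is made up. `subprocess.list2cmdline` is a helper in Python's subprocess module that shows how a list would be turned into a Windows command line. It is not a prominently documented function, so treat its use here as illustrative. The redirection of the output to the Err & Log files is left out of this sketch.

```python
import subprocess

def build_ffmpeg_command(ffmpeg_exe, input_options, input_name, output_options, output_name):
    """Assemble: ffmpeg [parms for input] -i [input] [parms for output] [output]."""
    return [ffmpeg_exe, *input_options, "-i", input_name, *output_options, output_name]

command = build_ffmpeg_command(
    r"G:\ffmpeg\ffmpeg-2021-07-11-git-79ebdbb9b9-full_build\bin\ffmpeg.exe",
    ["-protocol_whitelist", "file,crypto,data,http,https,tls,tcp", "-hwaccel", "auto"],
    "https://video.twimg.com/amplify_video/1463574264683110405/pl/900x720/bHlh8rkjC1GzHuFS.m3u8?container=fmp4",
    ["-codec:", "copy"],
    r"q:\VDH Testing\Ryan Poehling.mp4",
)

# Show the equivalent command line. Pieces containing spaces get
# quotation marks added automatically; the others are left bare.
command_line = subprocess.list2cmdline(command)
print(command_line)

# To actually run it, the list itself could be handed to subprocess.run:
# subprocess.run(command)
```

Only the output file name, which contains spaces, ends up in quotation marks. That matches the point above: the quotation marks are needed because of the spaces.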

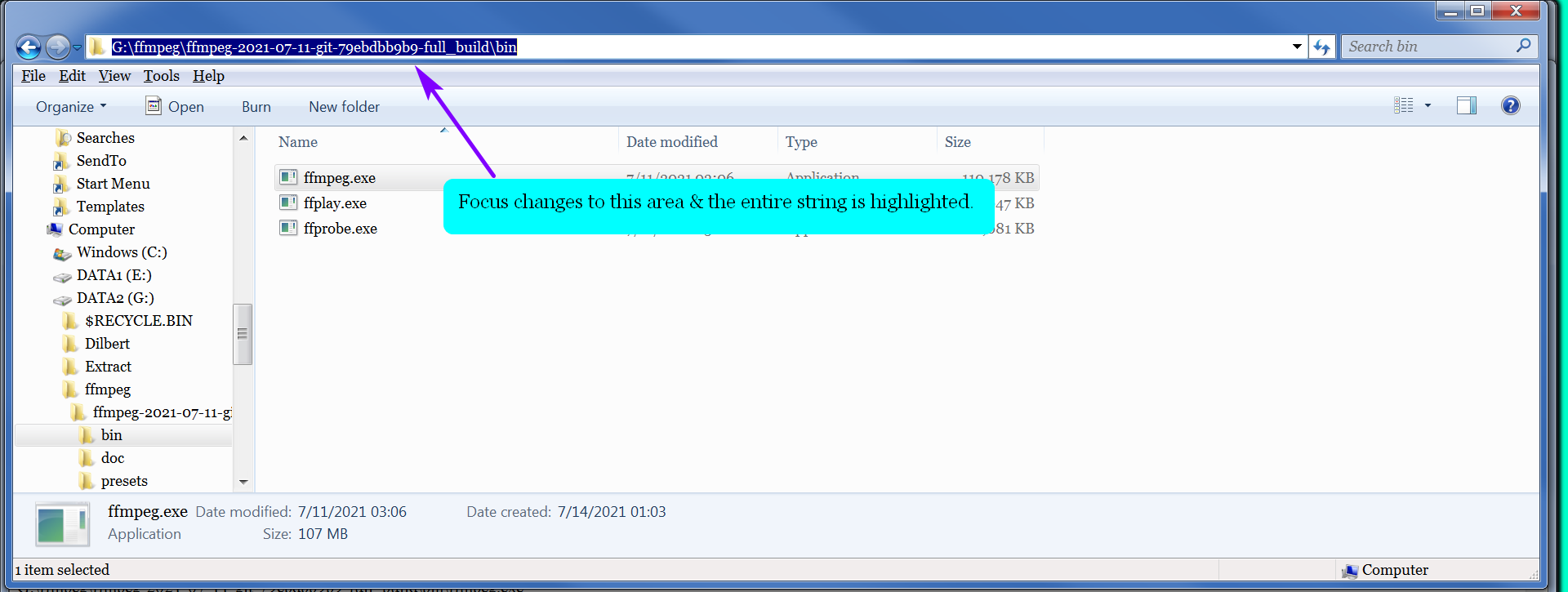

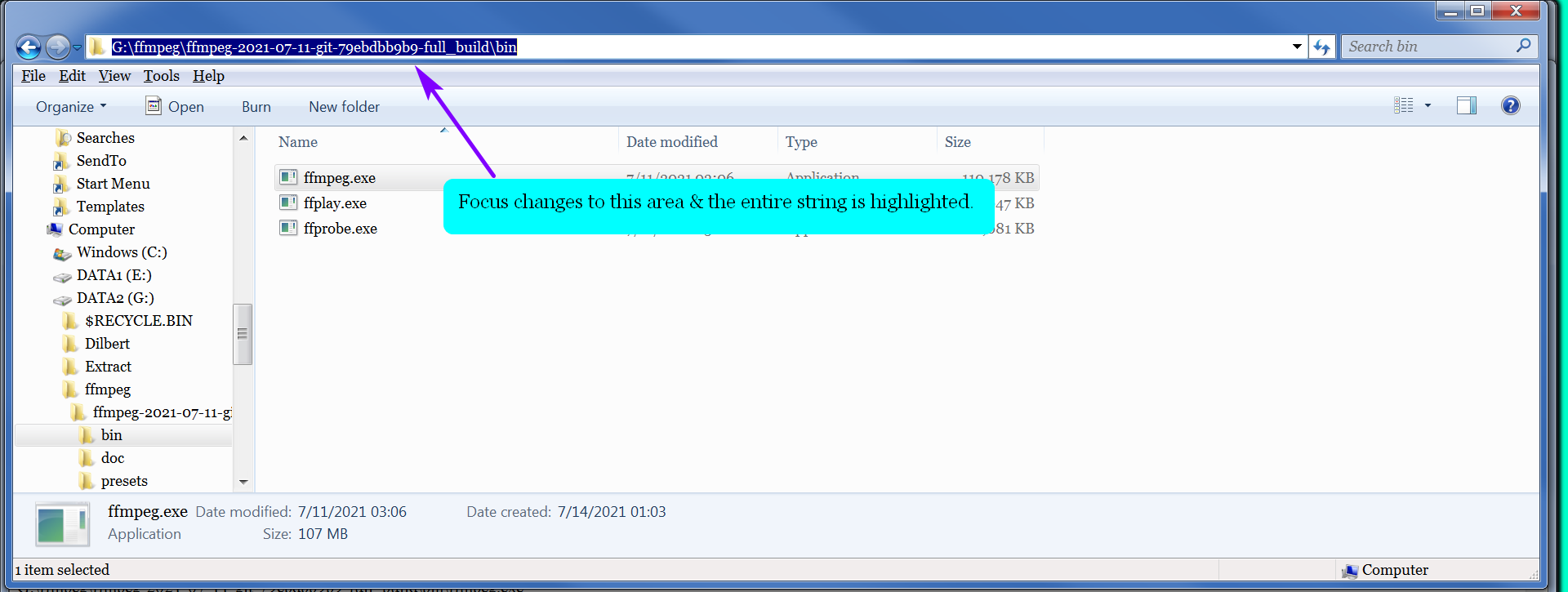

You can use a simple process to get to this without having to type all of this in. First, in Windows Explorer (the file manager) go to the directory where ffmpeg.exe is, as you can see in an image I included above. Now press the key sequence Alt+d. This works for me on Windows 7. I believe you can find this documented if you do a Google search. The key sequence may be different on your platform. You'll have to figure that out for yourself. When I press Alt+d, it looks like this:

Now press the Home key to move the cursor to the front of the area & type in cmd followed by a space:

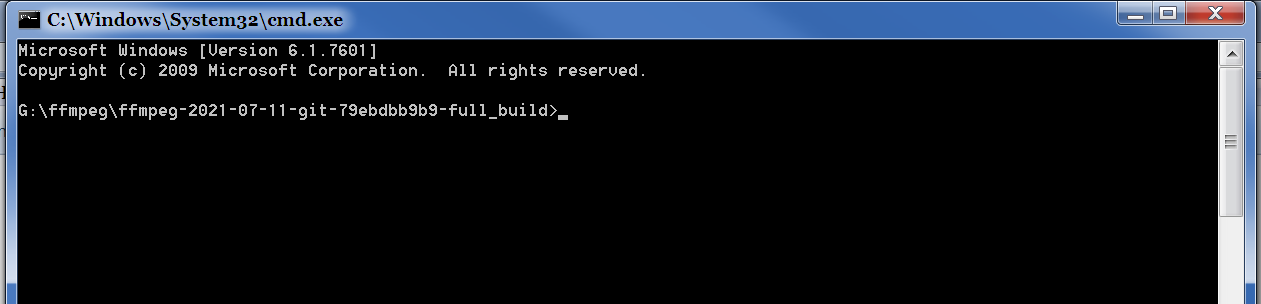

Now hit Enter.

Poof. Magic. You get a command window already set to the ffmpeg directory. You'll want to do some copy/pasting of this path information into whatever script you write. The script can reside in any directory on your system as long as you execute ffmpeg by coding the full file specification as I show above.

Next we have the parameters that apply to the input file:

-protocol_whitelist file,crypto,data,http,https,tls,tcp -hwaccel auto

The whitelist is something that I figured out by trial & error. Over time as I have used ffmpeg, I encountered sites that would cause ffmpeg to generate an error message about a protocol that was not in the whitelist. That was all gibberish to me, so I scrounged around in the nearly incomprehensible documentation. I stumbled upon the -protocol_whitelist parameter. Over time, I kept encountering protocols that I needed to add to the list. This list has been unchanged for me for a while now. I'm sure ffmpeg will let me know with an error message if there's some new protocol I need to add to the list.
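If you keep your ffmpeg command in a script, growing the whitelist one protocol at a time can be sketched like this in Python. The helper name add_protocol and the starting list are assumptions made for the illustration; the final value is the whitelist used in the command above.

```python
def add_protocol(whitelist, protocol):
    """Return the comma-separated whitelist with protocol appended if it is missing."""
    names = [name for name in whitelist.split(",") if name]
    if protocol not in names:
        names.append(protocol)
    return ",".join(names)


# Hypothetical starting point; each later addition stands for one error message from ffmpeg.
whitelist = "file,crypto,data,http,https"
for missing in ("tls", "tcp", "https"):
    whitelist = add_protocol(whitelist, missing)  # "https" is already present, so it is skipped

print(whitelist)
```

The value must stay a single comma-separated string with no spaces, because that is the form in which it is passed to -protocol_whitelist in the command above.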

The -hwaccel parameter is something that's discussed over here:

https://groups.google.com/g/video-downloadhelper-q-and-a/c/uBknY74Q1SI

I won't explain it further. I have run with this parameter & without it. I suppose it's possible that in some situations it helps. It may not be doing anything for me. I haven't had a long enough video to download for which I had enough time to measure whether I'm getting any benefit. My sample video here is only 16 seconds long so I doubt it has much impact in this case. I have found that at least it doesn't cause any problems so I just run with it. You can make your own decision about this.

The next part is the input file:

-i "https://video.twimg.com/amplify_video/1463574264683110405/pl/900x720/bHlh8rkjC1GzHuFS.m3u8?container=fmp4"

In this case, the input file is the stream manifest for the 900x720 video. This is a copy/paste out of the last stream definition in the master manifest, with the site name inserted in front so it's a complete URL.

#EXT-X-STREAM-INF:AVERAGE-BANDWIDTH=2176000,BANDWIDTH=2176000,RESOLUTION=900x720,CODECS="mp4a.40.2,avc1.640020",SUBTITLES="subs"

/amplify_video/1463574264683110405/pl/900x720/bHlh8rkjC1GzHuFS.m3u8?container=fmp4
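Inserting the site name in front of the partial URL can also be done by a script. Here is a minimal Python sketch that finds the 900x720 stream description in the two manifest lines shown above and builds the complete URL. The function name url_for_resolution is an assumption for this example, and the simple text match on RESOLUTION= is a shortcut, not a full manifest parser.

```python
from urllib.parse import urljoin

SITE = "https://video.twimg.com"  # site name, as used in the ffmpeg command above


def url_for_resolution(manifest_text, resolution, site=SITE):
    """Return the full URL of the first stream whose RESOLUTION matches, or None."""
    lines = [line.strip() for line in manifest_text.splitlines() if line.strip()]
    for position, line in enumerate(lines):
        is_stream = line.startswith("#EXT-X-STREAM-INF:")
        if is_stream and f"RESOLUTION={resolution}" in line and position + 1 < len(lines):
            # The stream's address is the next non-blank line after its description.
            return urljoin(site, lines[position + 1])
    return None


MANIFEST_EXCERPT = """
#EXT-X-STREAM-INF:AVERAGE-BANDWIDTH=2176000,BANDWIDTH=2176000,RESOLUTION=900x720,CODECS="mp4a.40.2,avc1.640020",SUBTITLES="subs"

/amplify_video/1463574264683110405/pl/900x720/bHlh8rkjC1GzHuFS.m3u8?container=fmp4
"""

full_url = url_for_resolution(MANIFEST_EXCERPT, "900x720")
print(full_url)
```

urljoin leaves a URL alone when it is already complete, so the same call should also work for manifests that carry full URLs.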

Ffmpeg can take many different things as input: the URL of an MP4 on the web, the URL of a manifest on the web (as here), the file name on your system of a manifest, and plenty more. This tool is like a Swiss Army knife for audio-visual functions. I know the barest fraction of what it can do. The horrible documentation & fruitless Google searches have kept me from learning more. If you know more, do feel free to share it by posting here.

Next we have the parameters for the output file:

-codec: copy

In our case, we have only the one parameter. -codec: says the option applies to stream data of every supported media type: video, audio, subtitles, whatever is in the source input. You can code -codec:a, -codec:v, and other things. For our purposes, -codec: is all we need. The option here, copy, simply copies the input to the output. The input is coming off the Internet. The output is going into a file on my system. Ffmpeg does not decode or re-encode the video or audio. It just copies the streams it reads from the input into the output file, repackaging them as an MP4. Ffmpeg can do things like muxing & encoding & other things, which I mostly don't understand. Like I said, Swiss Army knife. I understand copying. That's easy. It's all I need to understand. It works for what I want to do.

The next part is the output file:

"q:\VDH Testing\Ryan Poehling.mp4"

It's just there at the end of the command. There's no -o or -switch or anything. Just the file name. Note the quotation marks because of the spaces.

I have some more things tacked on the end that are Windows tricks for capturing the output of ffmpeg.

1>"q:\VDH Testing\Ryan Poehling.Err"

This captures standard output. Ffmpeg writes its messages to standard error rather than standard output, which is why this file has always been empty when I run ffmpeg, but I keep it for completeness.

This is more important:

2>"q:\VDH Testing\Ryan Poehling.Log"

This captures standard error, which is where ffmpeg writes its important, interesting, educational output. I've attached the file below as Video Download Log.

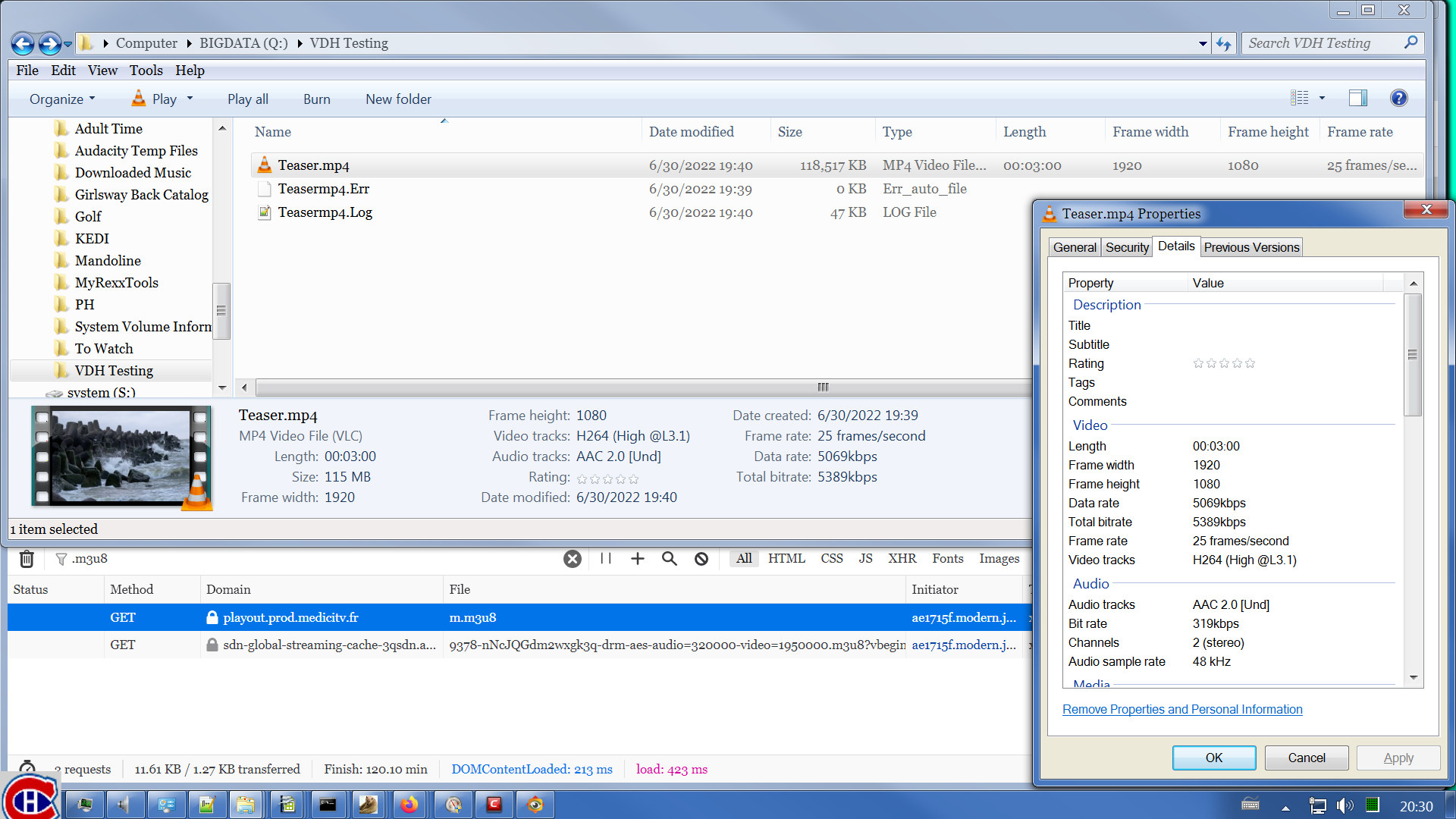

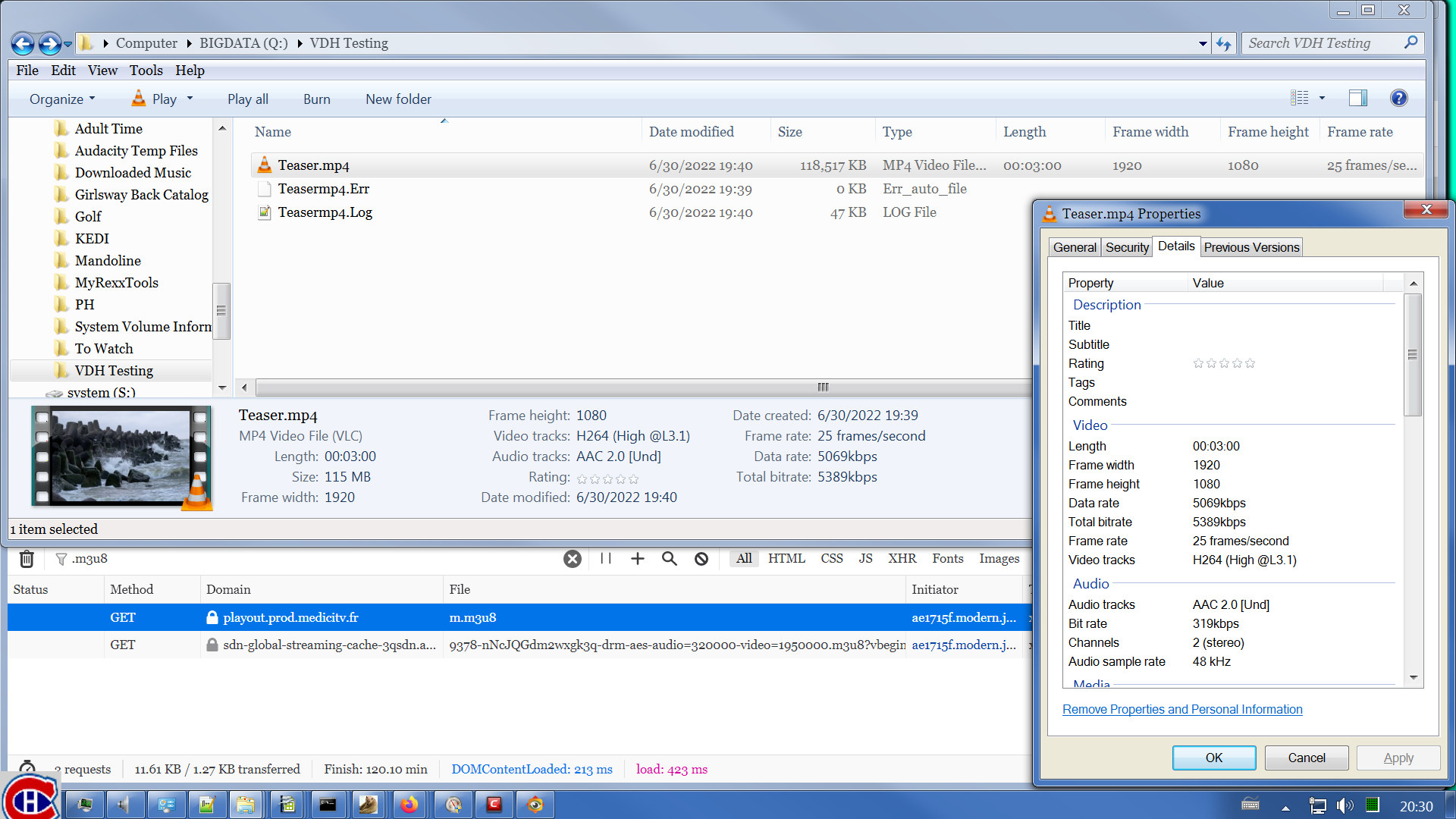

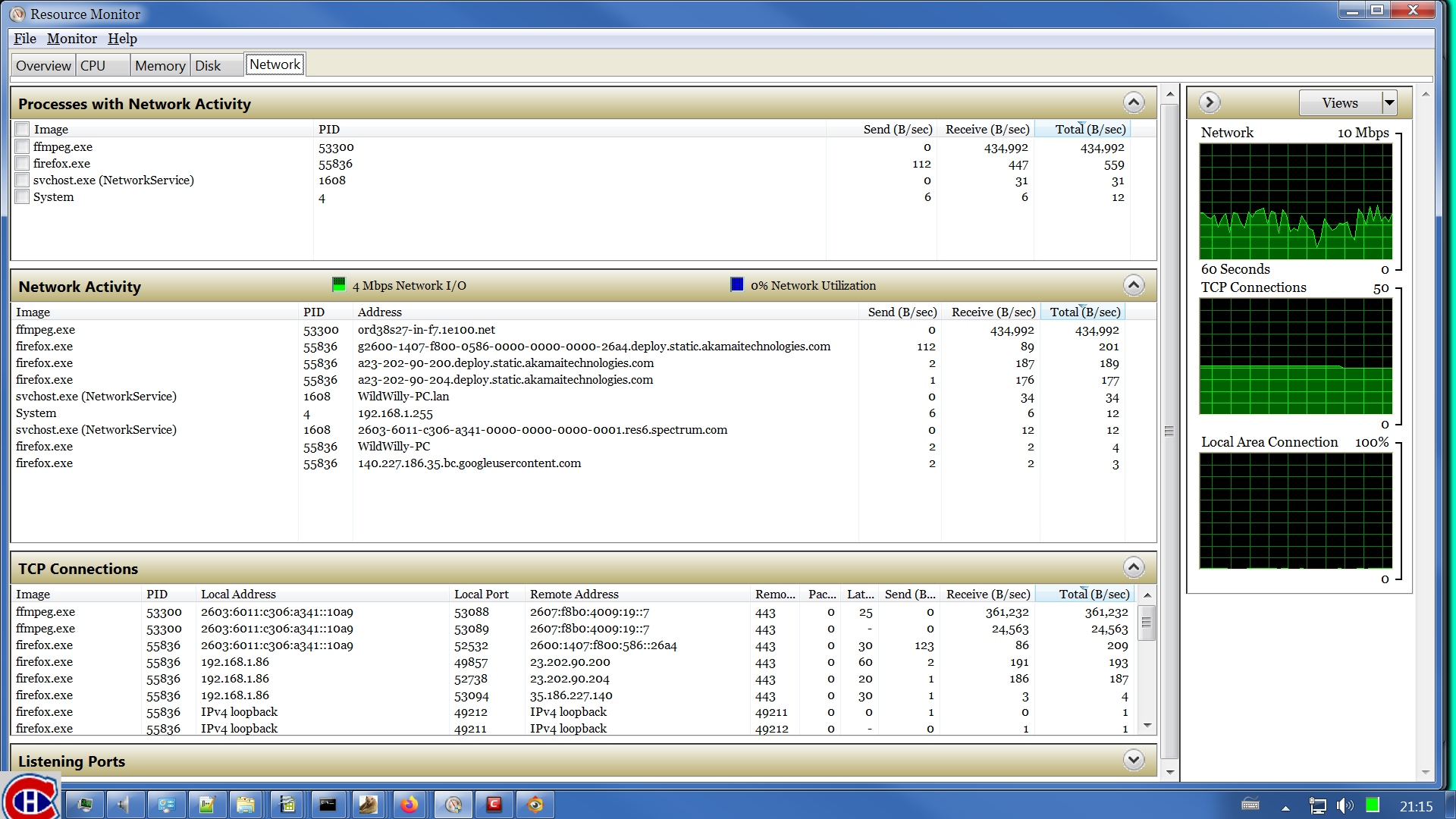

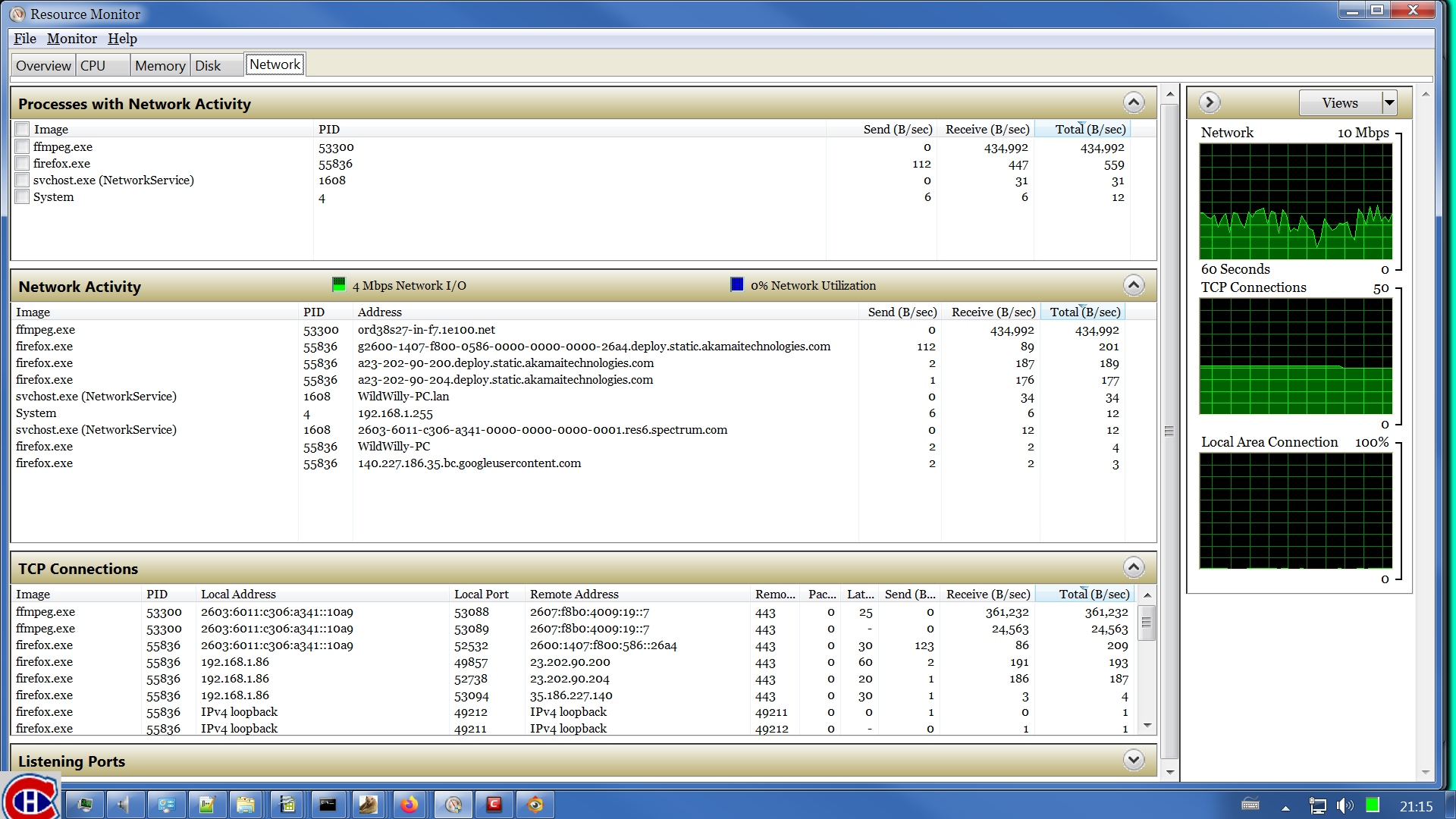

After I run a download, I like to run ffprobe. As you can see in earlier images, ffprobe comes with ffmpeg. It generates interesting output that confirms you've got the file you expected:

"G:\ffmpeg\ffmpeg-2021-07-11-git-79ebdbb9b9-full_build\bin\ffprobe.exe" -protocol_whitelist file,crypto,data,http,https,tls,tcp "q:\VDH Testing\Ryan Poehling.mp4" 1>>"q:\VDH Testing\Ryan Poehling.Err" 2>>"q:\VDH Testing\Ryan Poehling.Log"

The only additional thing worth noting here is the >> notation. On the ffmpeg command, I have > coded. This creates a new file. If the file already exists, > causes it to be overwritten. I use >> here so that the ffprobe output is added to the end of the file that already exists. This has nothing to do with ffmpeg. It's how Windows works. You can see the ffprobe output in the Video Download Log attachment. When it comes to other platforms, you're on your own figuring out how to capture the output of the command. Do please post that information here for everybody to benefit from your knowledge.
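For anyone who wants the same capture without depending on the command shell, here is a minimal Python sketch of the > and >> behavior. It is an illustration under assumptions: the function name run_logged is made up, and a tiny Python child process stands in for ffmpeg and ffprobe so the sketch can run on a machine that has neither.

```python
import os
import subprocess
import sys
import tempfile


def run_logged(command, out_path, log_path, append=False):
    """Run command with stdout sent to out_path and stderr sent to log_path.

    append=False behaves like >  (start a new file, overwriting any old one).
    append=True  behaves like >> (add to the end of the existing file).
    """
    mode = "a" if append else "w"
    with open(out_path, mode) as out_file, open(log_path, mode) as log_file:
        return subprocess.run(command, stdout=out_file, stderr=log_file).returncode


# Stand-in for ffmpeg/ffprobe: writes one line to stdout and one line to stderr.
child = [sys.executable, "-c", "import sys; print('out'); print('log', file=sys.stderr)"]

folder = tempfile.mkdtemp()
out_path = os.path.join(folder, "demo.Err")
log_path = os.path.join(folder, "demo.Log")

run_logged(child, out_path, log_path)               # like 1> and 2> on the ffmpeg command
run_logged(child, out_path, log_path, append=True)  # like 1>> and 2>> on the ffprobe command

with open(log_path) as handle:
    log_lines = handle.read().splitlines()
print(log_lines)
```

To use it for real, replace child with the ffmpeg or ffprobe argument list and point the two paths at your own .Err and .Log files.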

Now let's get the subtitles. What kind of subtitles are these? I copied the URL of the subtitle manifest, which I describe above & said to remember:

https://video.twimg.com/amplify_video/1463574264683110405/pl/s0/Su37b71s0nn2C_-b.m3u8

I pasted it into the address bar of Firefox, & hit Enter. This is what I got:

#EXTM3U

#EXT-X-PLAYLIST-TYPE:VOD

#EXT-X-VERSION:3

#EXT-X-TARGETDURATION:17

#EXT-X-MEDIA-SEQUENCE:0

#EXTINF:16.92,

/subtitles/amplify_video/1463574264683110405/0/ElDvtq3kN5eIVN50.vtt

#EXT-X-ENDLIST

So the subtitles are in WEBVTT format. That's my preferred format. There are other formats & if you encounter them, you would be able to figure them out like I've done here. Given that the subtitles are in a .vtt file, I executed this invocation of ffmpeg:

"G:\ffmpeg\ffmpeg-2021-07-11-git-79ebdbb9b9-full_build\bin\ffmpeg.exe" -protocol_whitelist file,crypto,data,http,https,tls,tcp -hwaccel auto -i "https://video.twimg.com/amplify_video/1463574264683110405/pl/s0/Su37b71s0nn2C_-b.m3u8" -codec: copy "Q:\VDH Testing\Ryan Poehling.vtt" 1>"Q:\VDH Testing\Ryan Poehling.Err" 2>"Q:\VDH Testing\Ryan Poehling.Log"

Pretty much the same thing again as above. Note that the input URL after -i is now the one I described above for the subtitles stream. Also note the file extension for the output file is .vtt. Ffmpeg figures out a lot of what you want to do from the file extension you give on the output file. It figured out I wanted a target MP4 file earlier from the .mp4 file extension I coded. Here, it knows I want subtitles from the .vtt file extension I coded on the output file. I followed this with an invocation of ffprobe, too similar to the above discussion to go over it again. All that output is attached below in the file Subtitles Download Log.
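Reading the subtitle manifest by eye to find the file type is easy enough, but it can also be sketched in Python. The manifest text below is the one shown earlier in this post; the function names segment_uris and segment_extensions are assumptions for this example.

```python
from pathlib import PurePosixPath
from urllib.parse import urlparse


def segment_uris(playlist_text):
    """Return the lines of a stream manifest that are neither blank nor # tags."""
    stripped = (line.strip() for line in playlist_text.splitlines())
    return [line for line in stripped if line and not line.startswith("#")]


def segment_extensions(playlist_text):
    """Return the set of file extensions used by the segments in the manifest."""
    return {PurePosixPath(urlparse(uri).path).suffix for uri in segment_uris(playlist_text)}


PLAYLIST = """#EXTM3U
#EXT-X-PLAYLIST-TYPE:VOD
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:17
#EXT-X-MEDIA-SEQUENCE:0
#EXTINF:16.92,
/subtitles/amplify_video/1463574264683110405/0/ElDvtq3kN5eIVN50.vtt
#EXT-X-ENDLIST
"""

extensions = segment_extensions(PLAYLIST)
print(extensions)
```

The extension found this way is what suggests the output file extension to code on the ffmpeg command.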

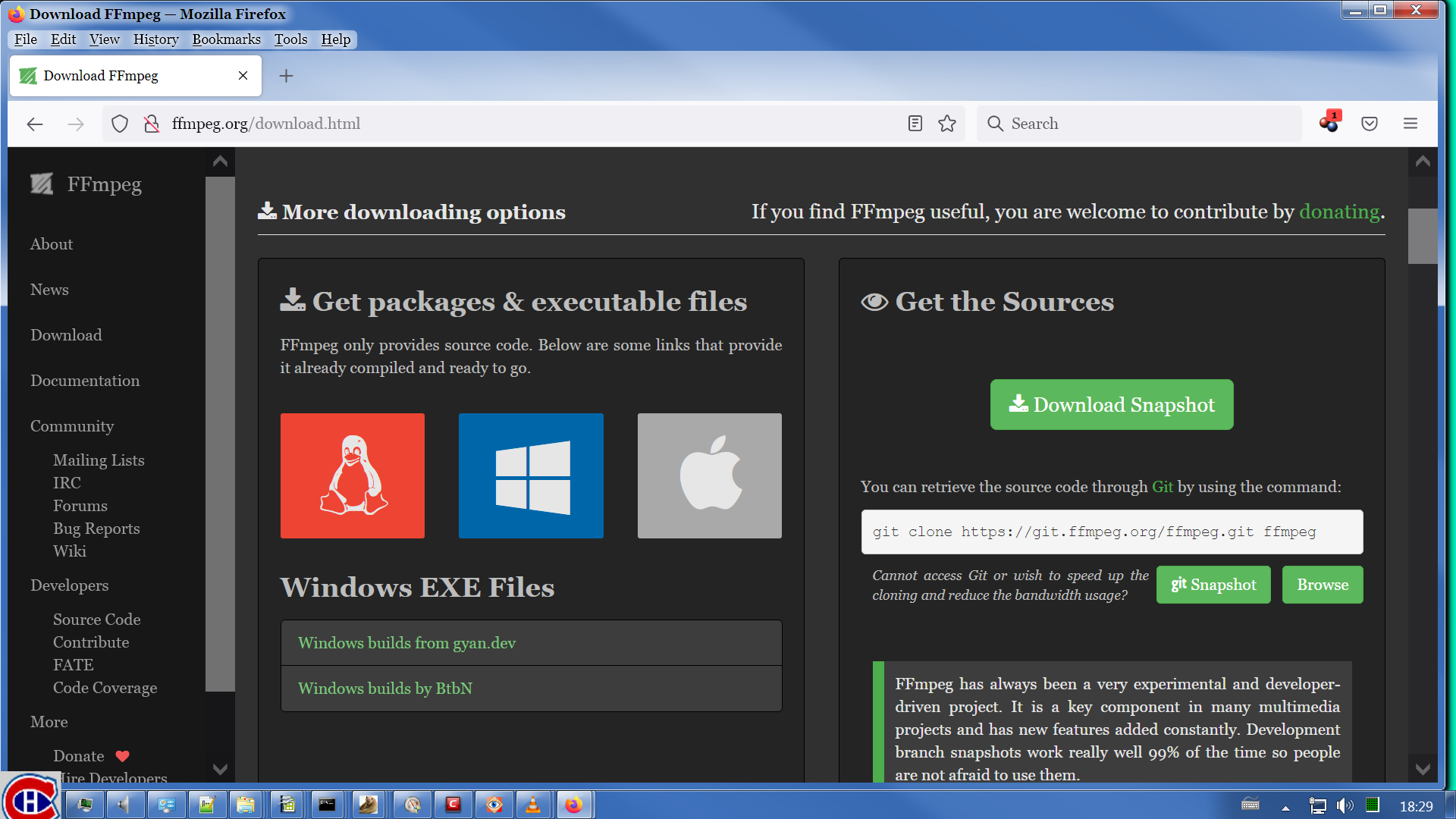

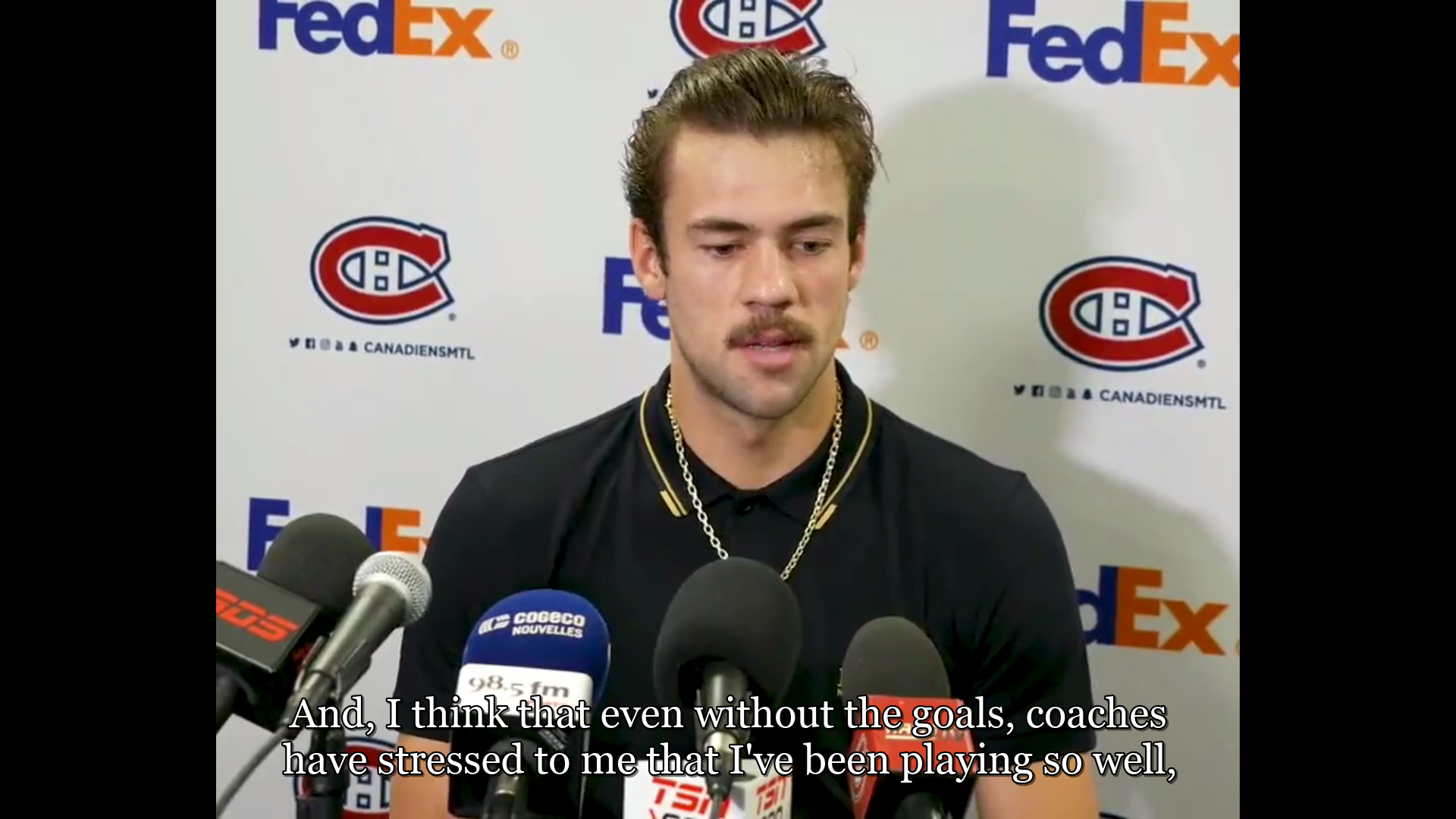

I was careful to name my output files the same, except for the extensions .mp4 & .vtt. This allows VLC to automatically detect the subtitles & display them during playback:

Simple? Not.

Easy? I hope once you have followed these instructions a few times, you'll find it easy, especially if you write a script to help you avoid typos.

Not clear? Tell me. Post here. Let's discuss it.

Wild Willy

Nov 25, 2021, 6:25:35 AM

to Video DownloadHelper Q&A

I got some of my colored strings wrong. They're hard to read. I apologize. I would go back & fix them if I could edit my posts. But the edit function was taken away from us by Google a year or two ago & no amount of complaining since has given it back. The only way for me to fix it is to delete the post & completely reenter it, uploading the embedded images & attachments again, to say nothing of fiddling with the colors again. Way too much trouble for way too little return on the investment. Also, Google might think I'm trying to spam the group & I might be blocked from posting for a while. Again, not worth it. Some of the things that are hard to read because of their colors are clickable links, & clicking those might compensate for my fat-fingering.

Some of my images are a bit small. Sorry again. I think I accidentally had the small image setting turned on. You can get around this by using the Firefox function for displaying an image in a separate tab. When you click MB2 on any image, that function appears in the popup context menu. You probably want to do this anyway so you can properly see what I'm talking about.

I must add that the above approach is what you should try if you find you are getting separate video & audio content. Most of the time, you can deal with it directly with VDH. But once in a while you need to resort to this approach, especially if subtitles are involved.

jcv...@gmail.com

Nov 26, 2021, 1:14:11 AM

to Video DownloadHelper Q&A

impressive :)

thanks

jerome

Wild Willy

Nov 26, 2021, 10:31:00 AM

to Video DownloadHelper Q&A

Thanks, Jérôme. Maybe all the images & so on above are impressive but the impressive work was really done over here, in the big opera thread:

https://groups.google.com/g/video-downloadhelper-q-and-a/c/8V2cRB-bcK4

I was not alone in amassing the knowledge it takes to perform this kind of download.

Now, on to other things . . .

For the following discussion, I assume you've read & understood the above posts in this thread.

In a recent post a fellow user offered this example that helps to illustrate even better what is possible:

https://www.gaia.com/video/astral-projection?fullplayer=feature

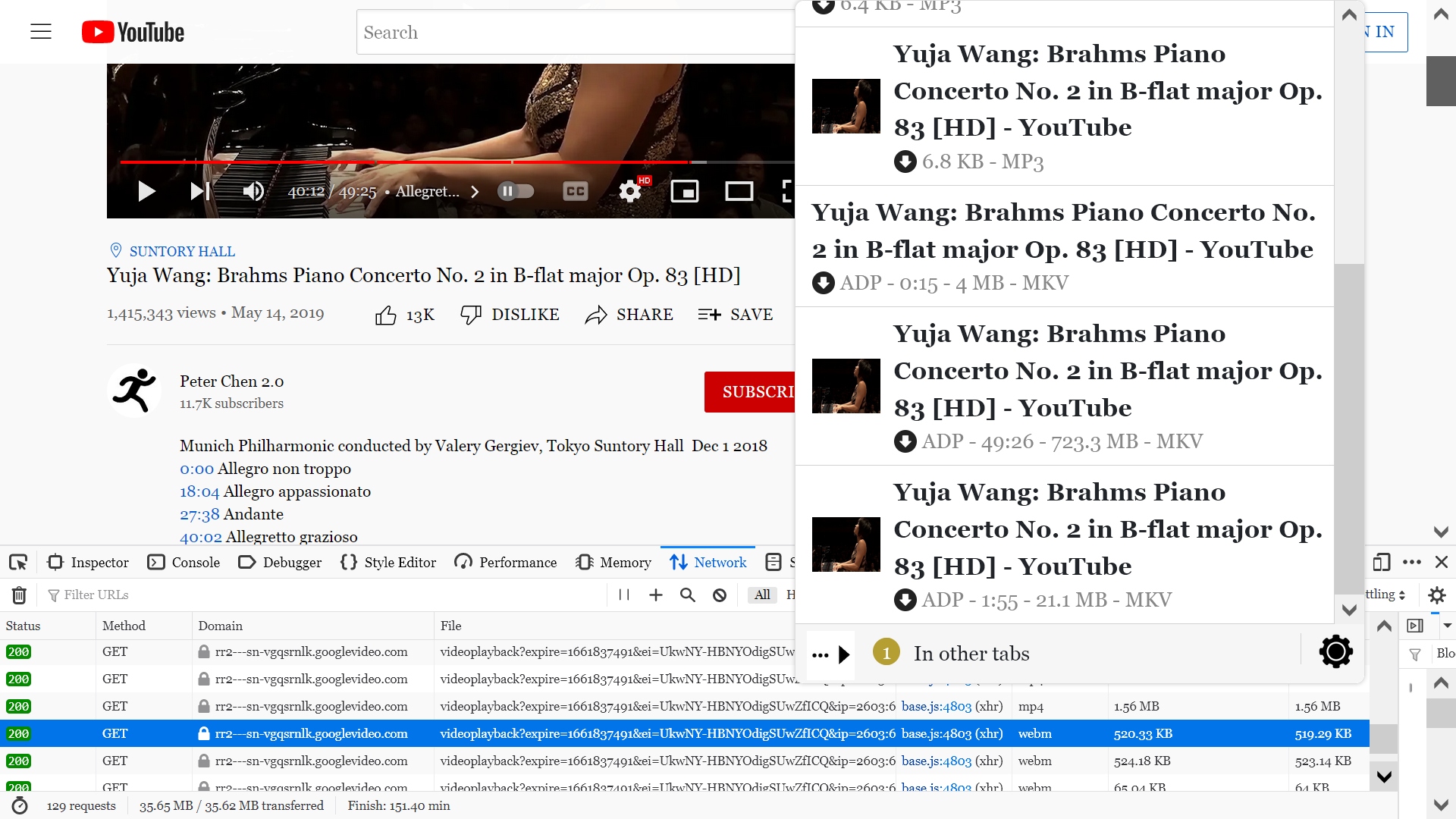

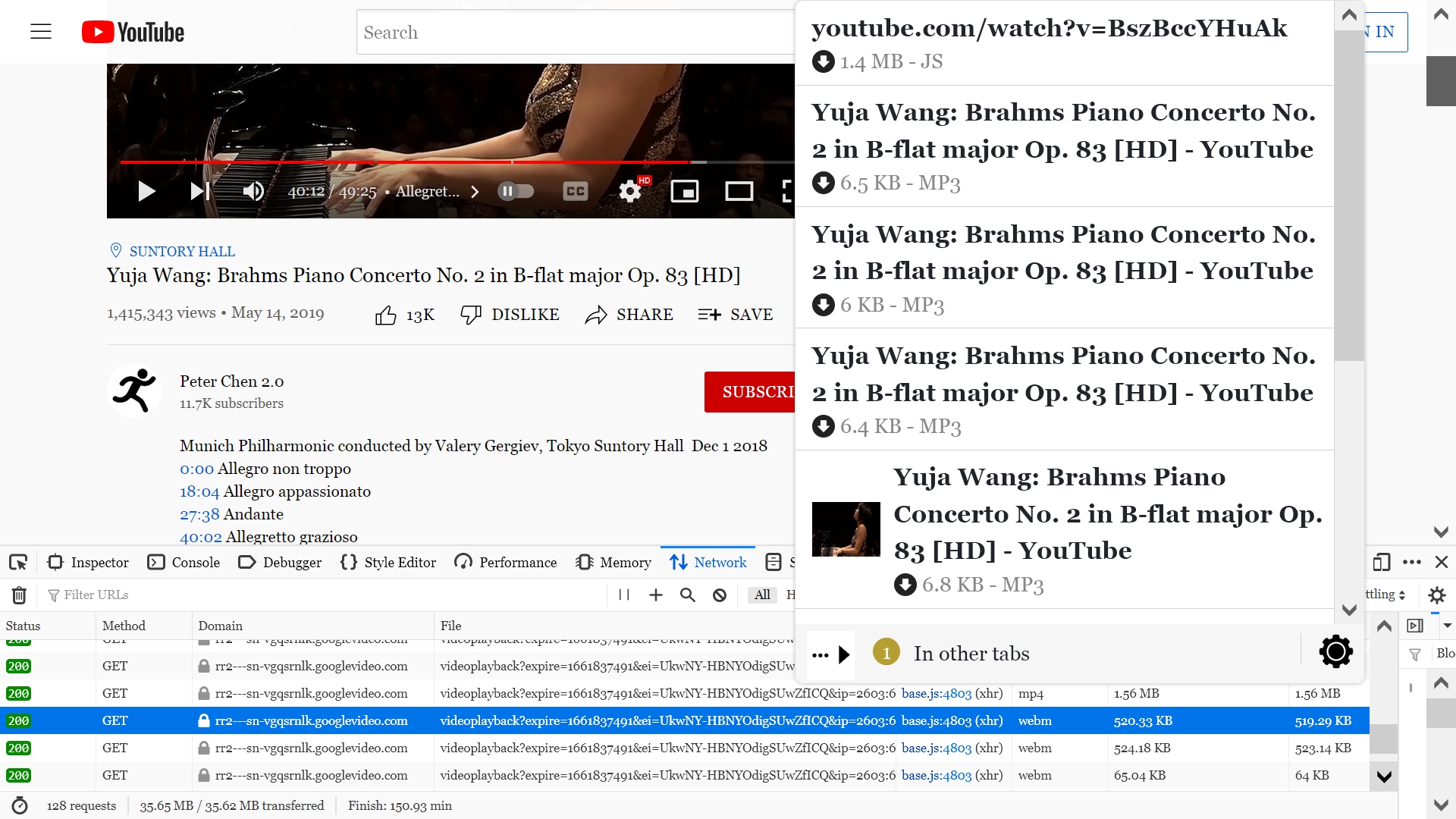

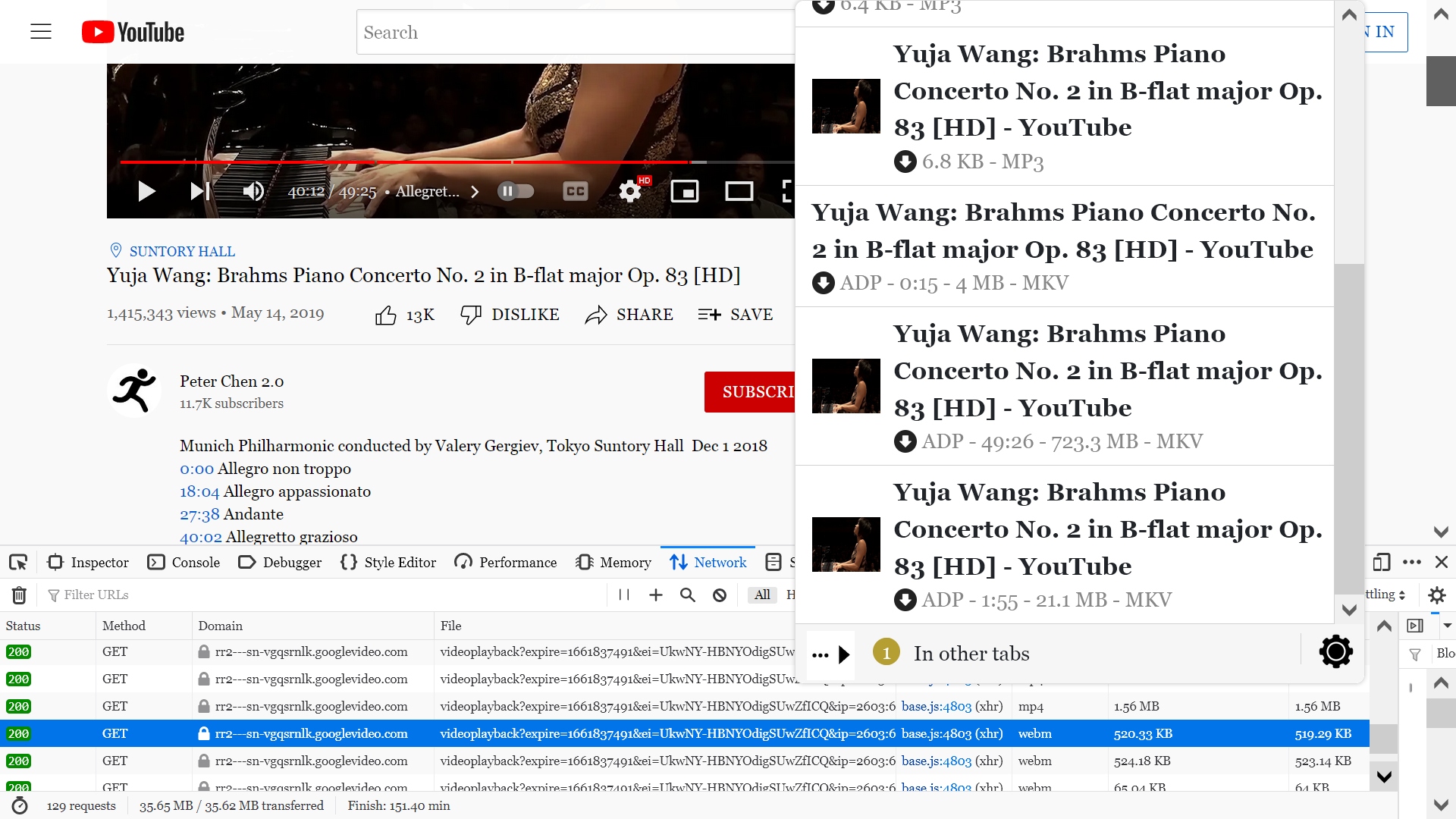

You can see I've already opened the Network Monitor & discovered the manifests. I've also popped up the context menu on the embedded video player. It shows that this site is using the Brightcove video player. That's the same one we encountered at the Metropolitan Opera. This means pretty much everything here will be the same as what we used to do with the free nightly opera streams (they've stopped those now), right down to the site serving the video. The URLs in the manifest are from the same site as what we were getting from the Met.

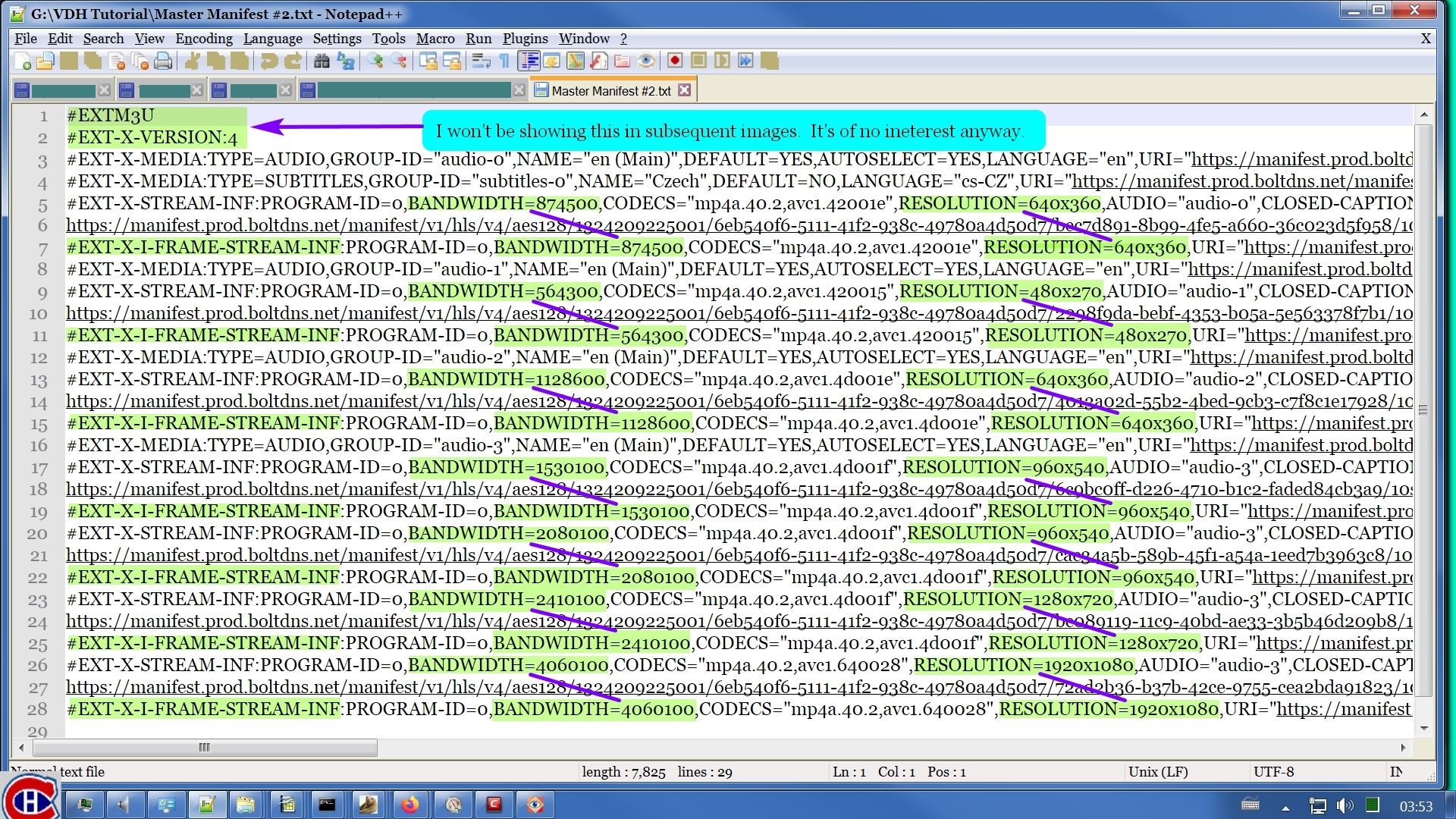

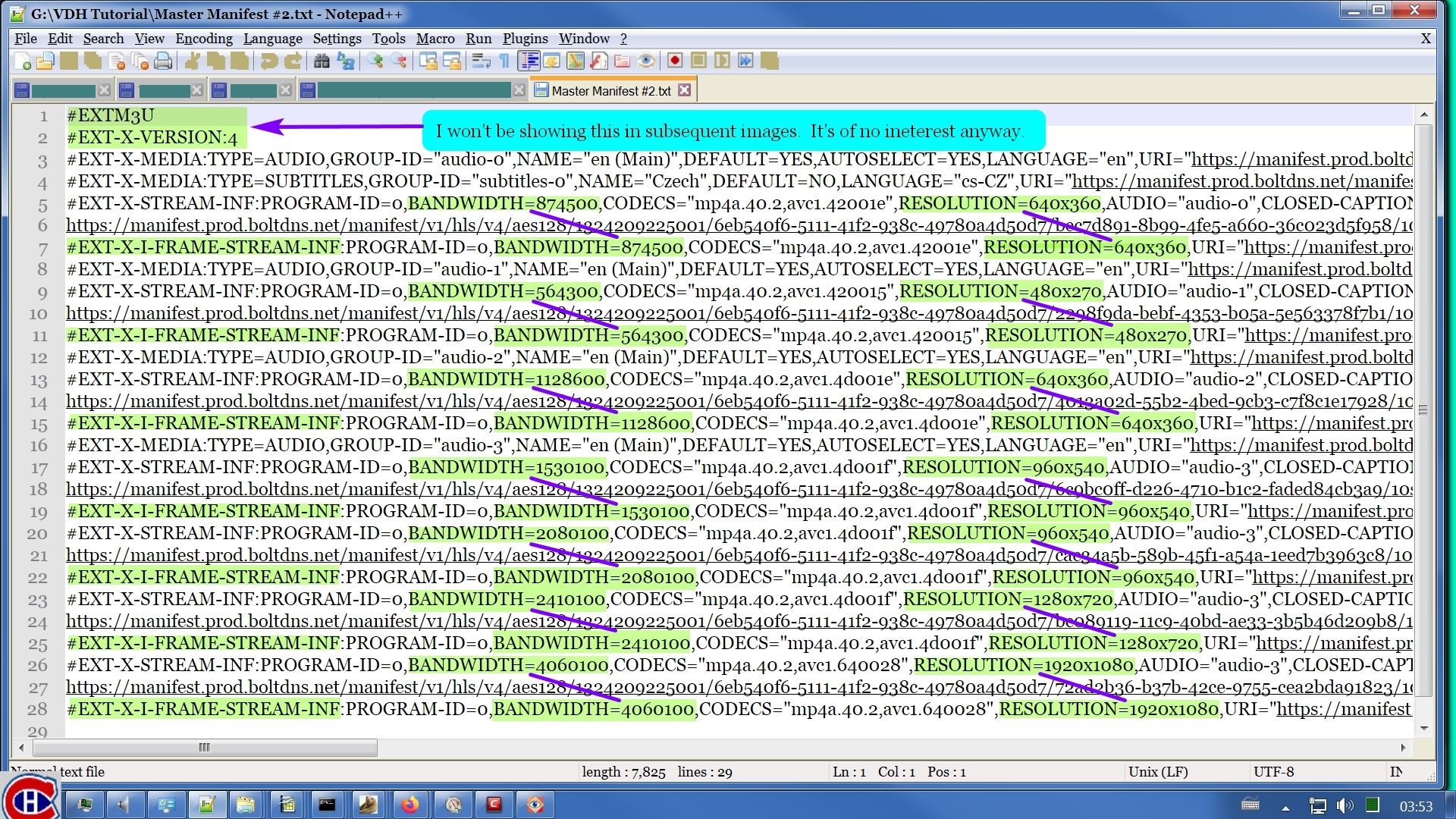

I'm not totally satisfied with my experiment above with color-coded text. So in this discussion, I will instead show screenshots of my text editor with annotations. This will probably be clearer.

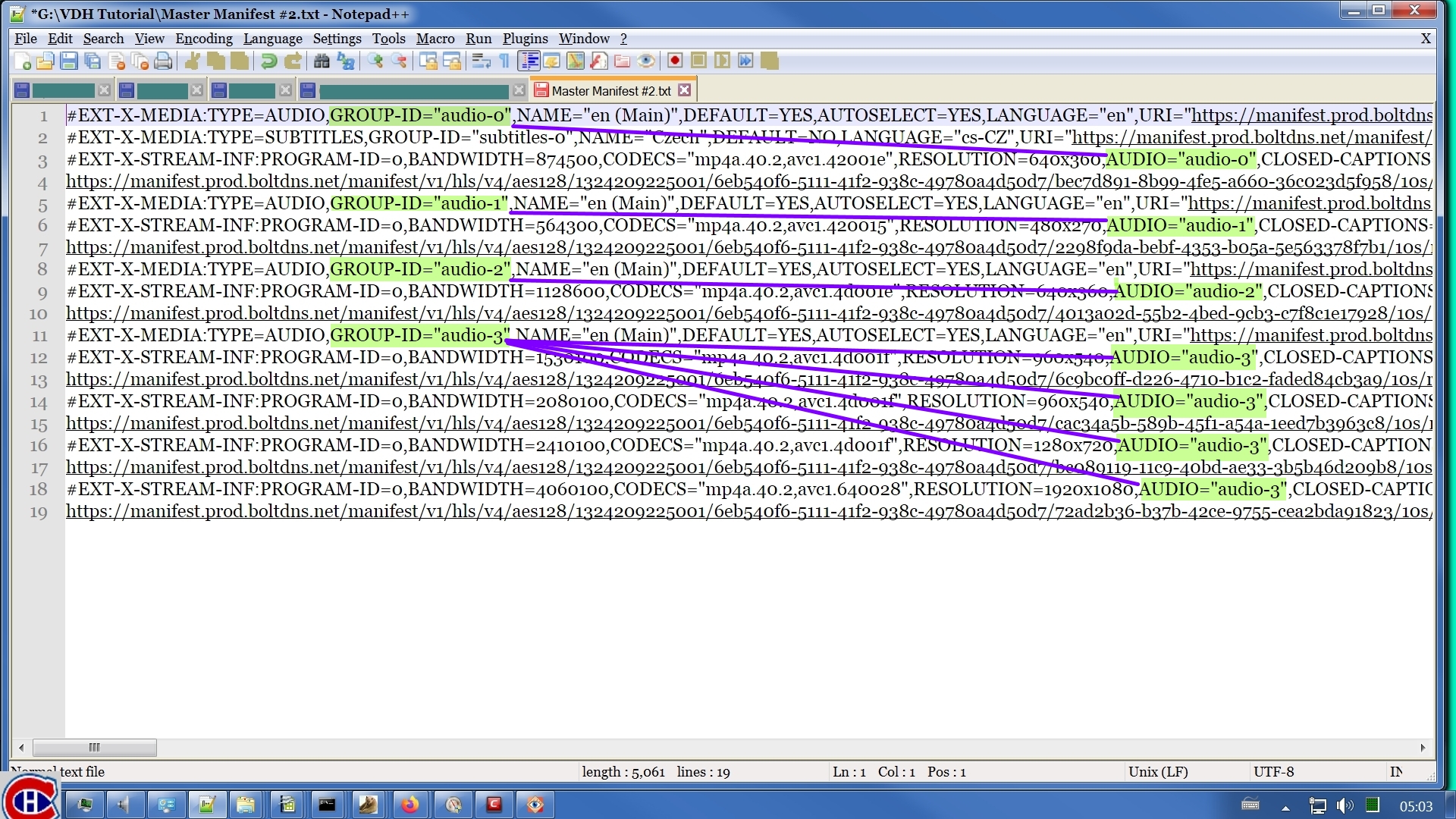

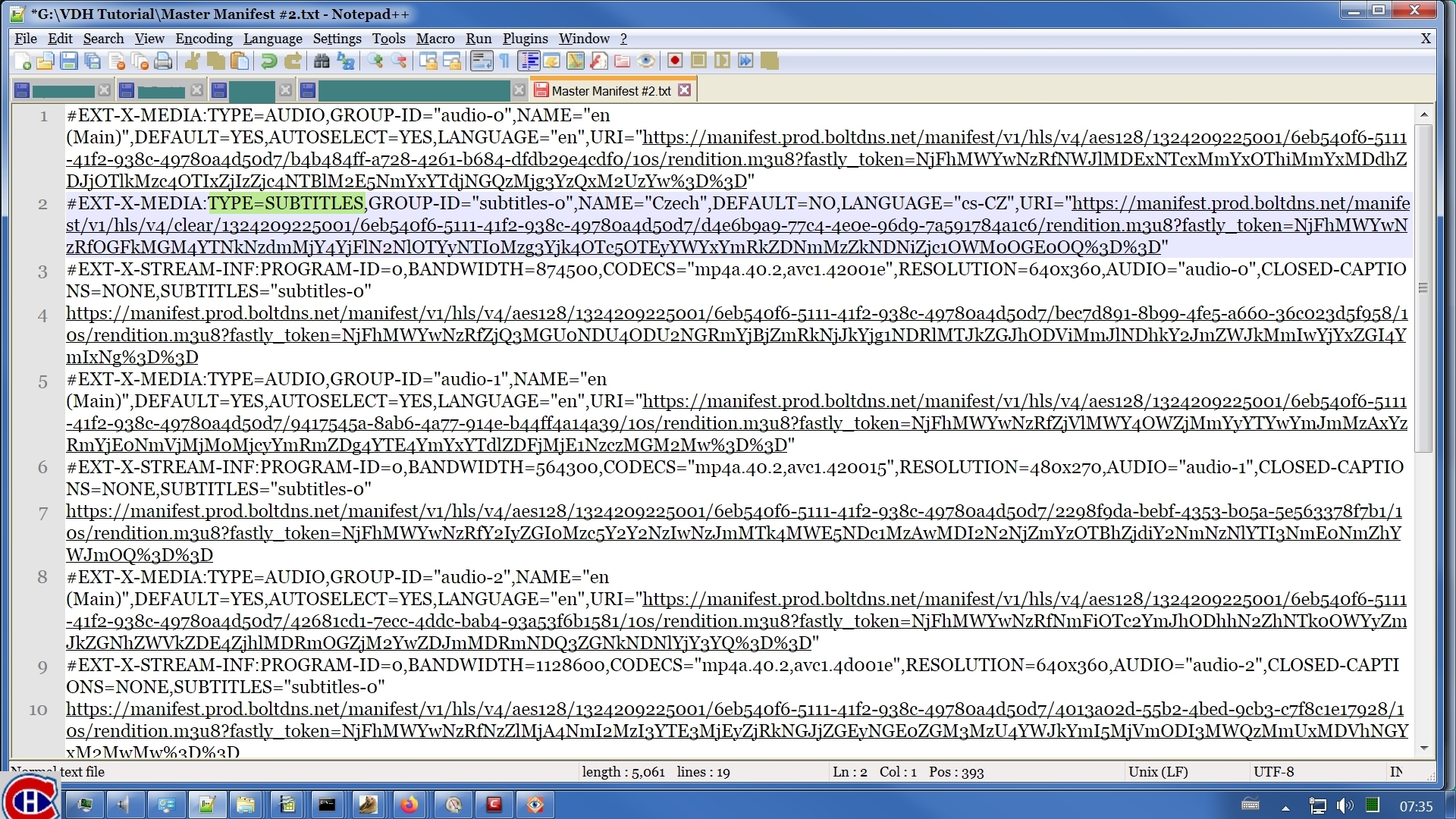

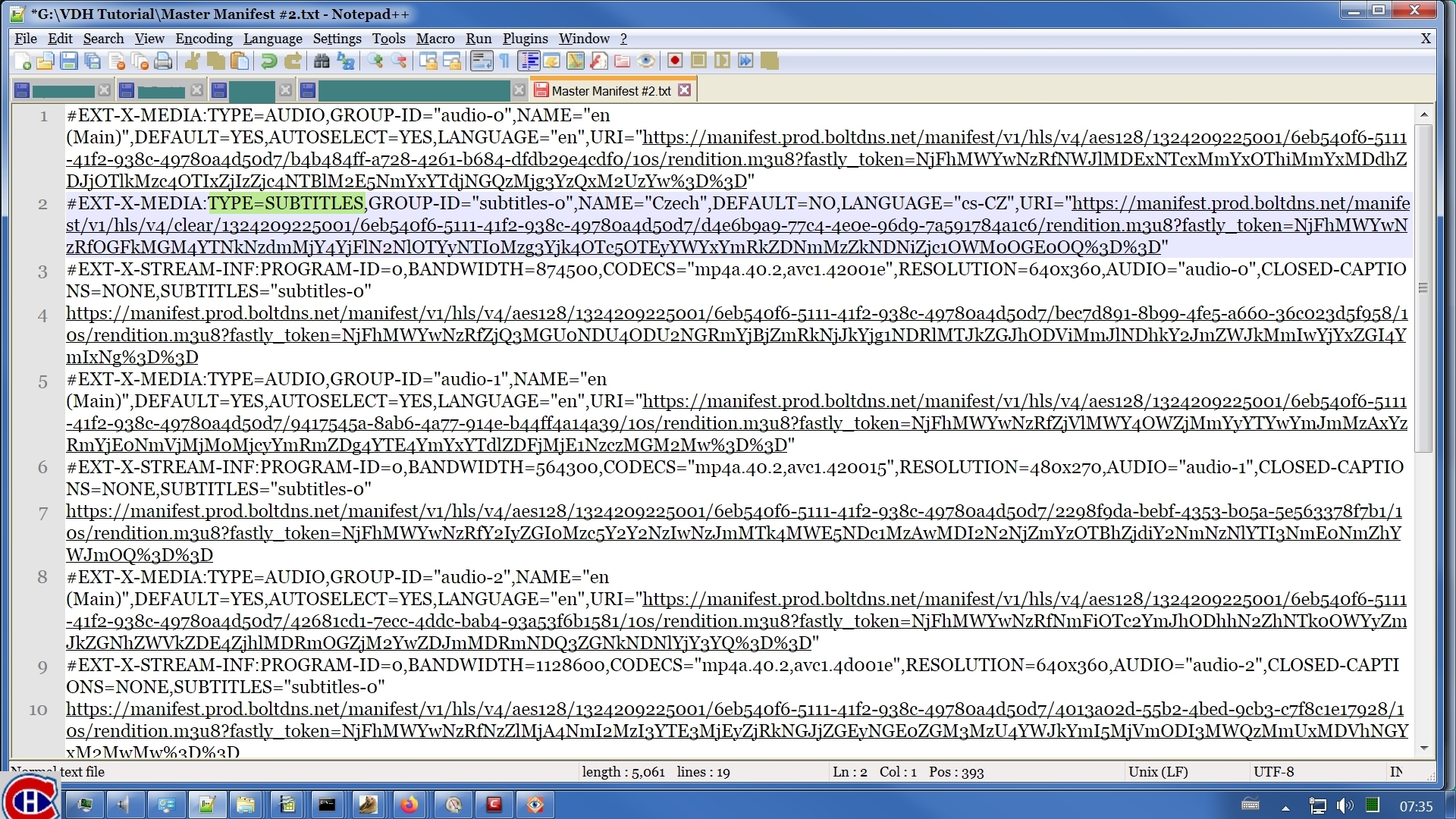

I got the master manifest the same way as detailed above. I've attached it as file Master Manifest #2. I must point out that in the images below I have turned off line wrapping in Notepad++. The URLs involved are ridiculously long, & showing the file with the lines wrapped makes it harder to see the important details among the mass of unimportant details. You can see this for yourself by downloading the attachment & pulling it up in Notepad or whatever you use instead of Notepad. I emphasize Notepad because you should absolutely not be looking at this in Word or Wordpad or anything like that. You want to be using a simple text editor, nothing fancier than that.

The structure of this manifest is a bit more elaborate than for the Twitter clip I used in my earlier example. There are more streams to choose from.

The first items I want to point out are the I-FRAME stream descriptions.

It looks like each I-FRAME stream description is a duplicate of the video stream description right above it. The resolution & bandwidth of each I-FRAME stream description matches its partner right above it. But, despite appearances, they're actually not duplicates. I-FRAMEs are used to speed up skipping around in a video while you're streaming it off a server. If you were to download an I-FRAME stream (I have done it just once), you would find that VLC wouldn't play it. I discovered that it would play in ffplay (something that comes with ffmpeg & ffprobe). It turns out that it is a stream consisting of a single frame of the video extracted at regular intervals. It's just video without audio. The one time I tried this, it showed me one frame of the full video every 2 seconds. Basically it was unwatchable. There is a link in the big opera thread to my source for this information. So I will remove the I-FRAME items from the following images here.
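Removing the I-FRAME items from a copy of the manifest can be done by hand in a text editor, or with a few lines of Python as sketched here. The sample manifest is hypothetical (the example.invalid URLs and the numbers are invented), and the function name without_iframe_streams is an assumption. The sketch relies on each I-FRAME description carrying its address on the same line as a URI="" parameter, so dropping that one line removes the whole item.

```python
def without_iframe_streams(manifest_text):
    """Return the manifest text with every I-FRAME stream description removed."""
    kept = [
        line
        for line in manifest_text.splitlines()
        if not line.strip().startswith("#EXT-X-I-FRAME-STREAM-INF")
    ]
    return "\n".join(kept)


# Hypothetical sample: two video streams, each followed by its I-FRAME partner.
SAMPLE = """#EXTM3U
#EXT-X-STREAM-INF:BANDWIDTH=2000000,RESOLUTION=1280x720,AUDIO="audio-0"
https://example.invalid/video-720.m3u8
#EXT-X-I-FRAME-STREAM-INF:BANDWIDTH=200000,RESOLUTION=1280x720,URI="https://example.invalid/iframe-720.m3u8"
#EXT-X-STREAM-INF:BANDWIDTH=5000000,RESOLUTION=1920x1080,AUDIO="audio-1"
https://example.invalid/video-1080.m3u8
#EXT-X-I-FRAME-STREAM-INF:BANDWIDTH=500000,RESOLUTION=1920x1080,URI="https://example.invalid/iframe-1080.m3u8"
"""

cleaned = without_iframe_streams(SAMPLE)
print(cleaned)
```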

Wild Willy

Nov 26, 2021, 10:55:43 AM

to Video DownloadHelper Q&A

Now, let's get down to the proper business of analyzing this manifest.

Before we go too deep into this analysis, I want to get an idea of what we're dealing with here. You can use ffprobe to greatly help in this task. I ran ffprobe (an example is above) using the URL of this master manifest as input. I've attached the results as file Astral Projection Manifest ffprobe. I'm not going to go into details on this file just yet. It will make more sense after you understand the discussion below. I will say that ffprobe is able to untangle the various relationships in a master manifest & display the attributes of the various streams. You can look at that file now but I recommend you wait until later. Just as a curiosity, I'll mention now that ffprobe had a problem with the subtitles stream manifest. I don't know why. It worked fine, something I'll get to.

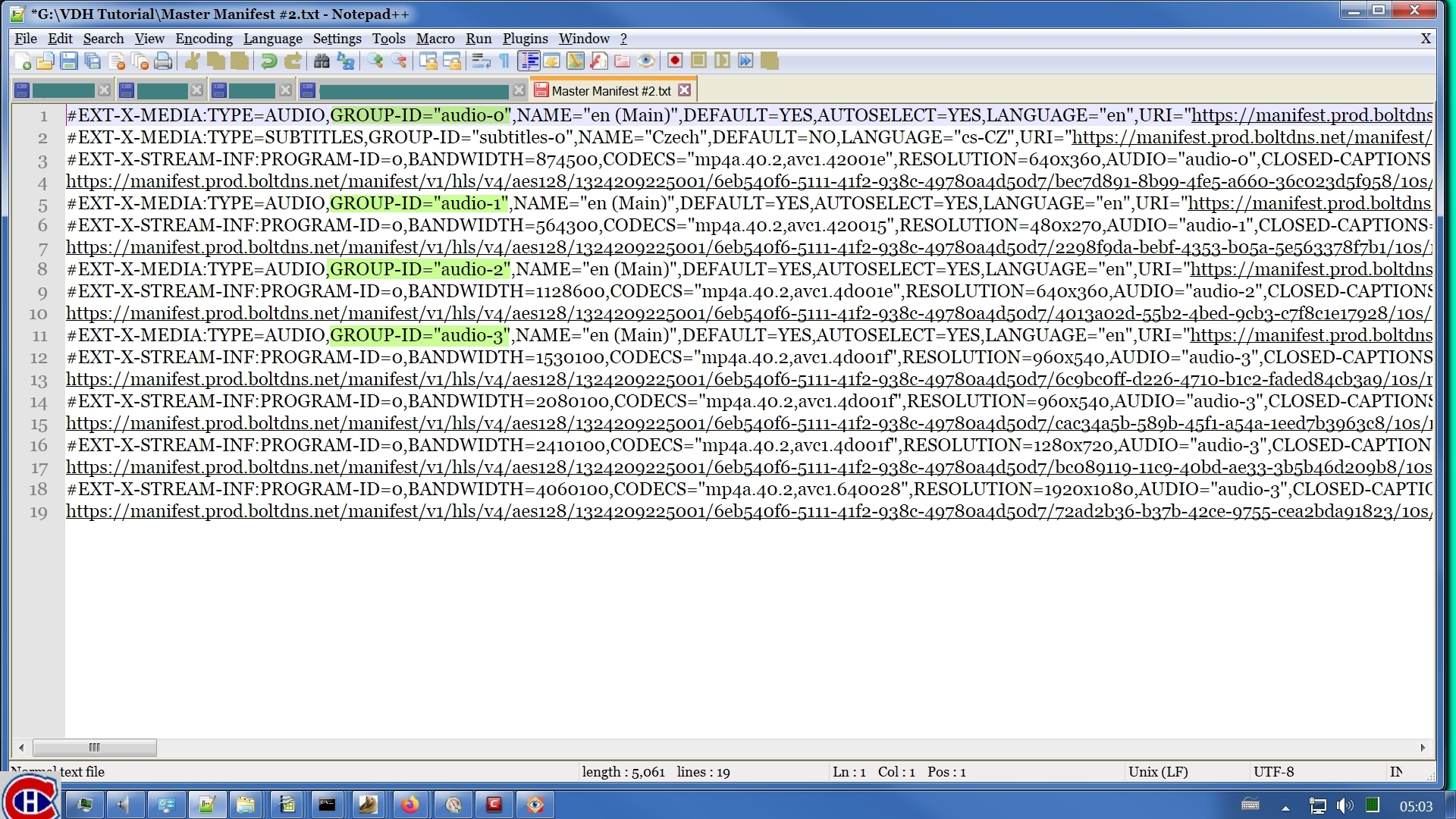

So. Back to our master manifest. The first thing I want to point out is the audio stream descriptions:

There are 4 of them. The ffprobe output tells me these are audio streams without video. You can guess this from the absence of video resolution information from the stream descriptions.

Their names are audio-0, audio-1, audio-2, audio-3, in order from top to bottom. These names can be anything at all. They could be Elina, Isabel, Michel, & Jérôme. The names are whatever the developers of the server web site choose. The names are just ways to refer back to those stream descriptions. There's nothing intrinsically important about the names. The important thing about them is that they exist.
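To see that the names really are just labels, here is a minimal Python sketch that pulls the parameters out of an audio stream description. The two sample lines are hypothetical (the NAME values and example.invalid URLs are invented), and the function name parse_attributes is an assumption. The pattern treats anything inside double quotation marks as one value, so commas inside a quoted value do not split it.

```python
import re

# A parameter is NAME=value, where the value is either "quoted" or runs to the next comma.
ATTRIBUTE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


def parse_attributes(tag_line):
    """Turn a line like #EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="audio-0" into a dictionary."""
    _, _, attribute_text = tag_line.partition(":")
    return {key: value.strip('"') for key, value in ATTRIBUTE.findall(attribute_text)}


# Hypothetical audio stream descriptions; the second uses a person's name as its label.
SAMPLE_AUDIO_LINES = [
    '#EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="audio-0",NAME="en (Main)",URI="https://example.invalid/a0.m3u8"',
    '#EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="Elina",NAME="en (Main)",URI="https://example.invalid/a1.m3u8"',
]

group_names = [parse_attributes(line)["GROUP-ID"] for line in SAMPLE_AUDIO_LINES]
print(group_names)
```

Both labels come out the same way, which is the point: the parser only cares that a label exists, not what it says.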

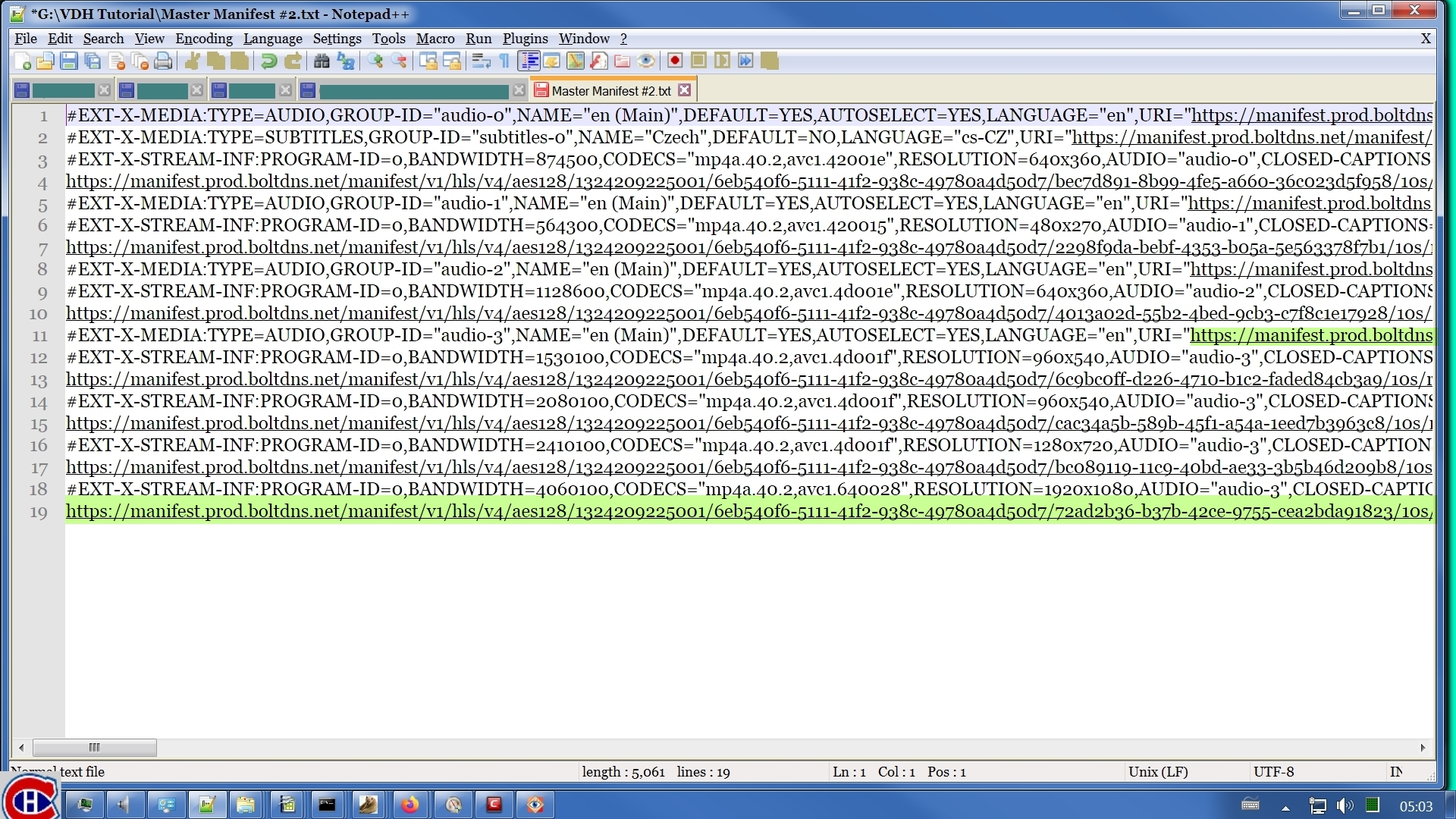

We also have 7 video stream descriptions:

These are video streams without audio. The ffprobe output says that definitively. But you can guess that without looking at the ffprobe output by the fact that there is video resolution information present in the stream descriptions. There is also a parameter named AUDIO present, but I'll get to that in a moment.

You'll notice that some of the resolutions appear more than once.

You can tell that they are different streams from their bandwidths. The stream with the higher bandwidth value is a higher quality stream. Presumably, the one with the higher bandwidth value uses up more of the capacity of your Internet connection when you stream it in the player on the web page. The higher bandwidth value will also result in a larger file when you download it.
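Telling same-resolution streams apart by bandwidth is simple enough to sketch in Python. The numbers below are hypothetical, not the values from this manifest, and the function name best_per_resolution is an assumption for the illustration.

```python
def best_per_resolution(streams):
    """For each resolution, keep the stream description with the highest bandwidth."""
    best = {}
    for stream in streams:
        held = best.get(stream["resolution"])
        if held is None or stream["bandwidth"] > held["bandwidth"]:
            best[stream["resolution"]] = stream
    return best


# Hypothetical values: 1920x1080 appears twice with different bandwidths.
SAMPLE_STREAMS = [
    {"resolution": "1920x1080", "bandwidth": 4500000},
    {"resolution": "1920x1080", "bandwidth": 6000000},
    {"resolution": "1280x720", "bandwidth": 3000000},
]

chosen = best_per_resolution(SAMPLE_STREAMS)
for resolution, stream in chosen.items():
    print(resolution, stream["bandwidth"])
```

Keeping the highest value is a choice made for this sketch. If file size matters more to you than quality, flip the comparison to keep the lowest.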

There is no similar way to differentiate the various audio stream descriptions. I can imagine that different audio streams could also be of different qualities. This might show up as different sampling rates or different bit rates. It might show up as mono vs stereo vs surround, even 5.1 surround vs 6.1 surround vs 7.1 surround vs 7.2 surround. The ffprobe output shows different bit rates for the audio streams. But the embedded player on the web site doesn't give a tool for selecting audio quality, only video resolution. That isn't an issue, as will gradually become clear as we go along here. In the end, it's the same video at all the resolutions so the audio stream would be the same no matter the video resolution. Or so you might think. As we progress here, you'll learn how to pick the right audio stream to download for the video stream you will download.

Wild Willy

Nov 26, 2021, 11:22:30 AM

to Video DownloadHelper Q&A

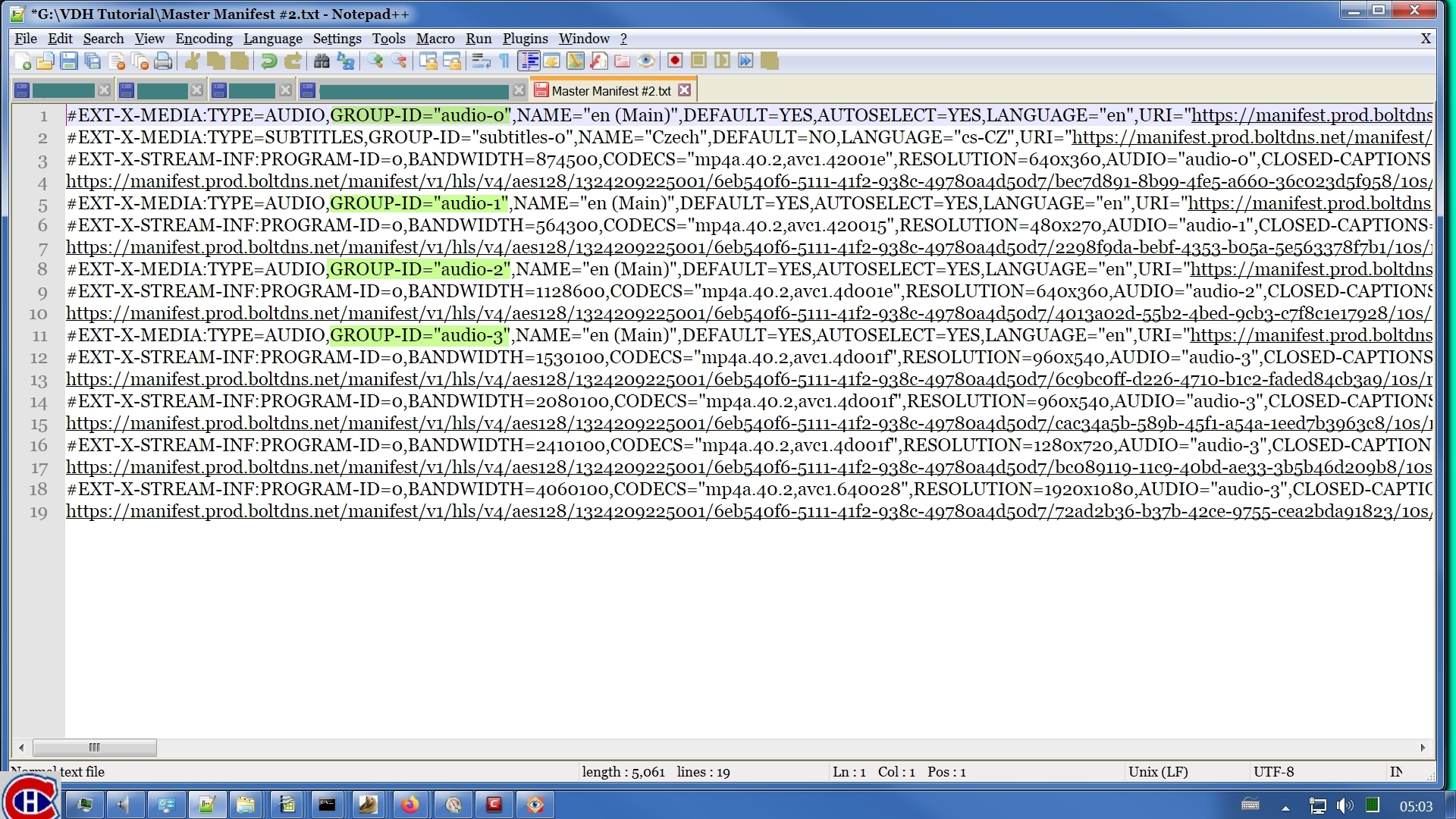

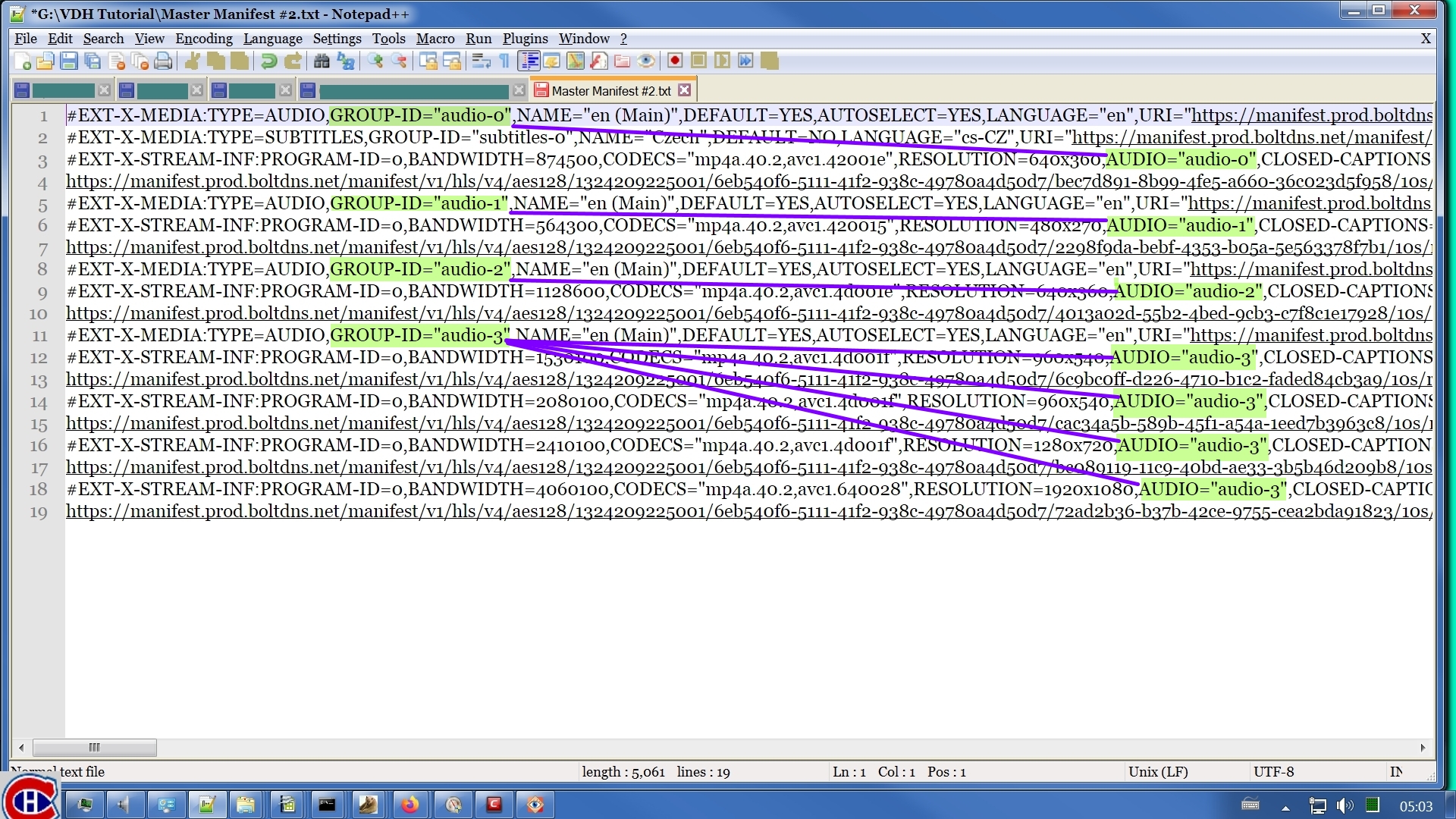

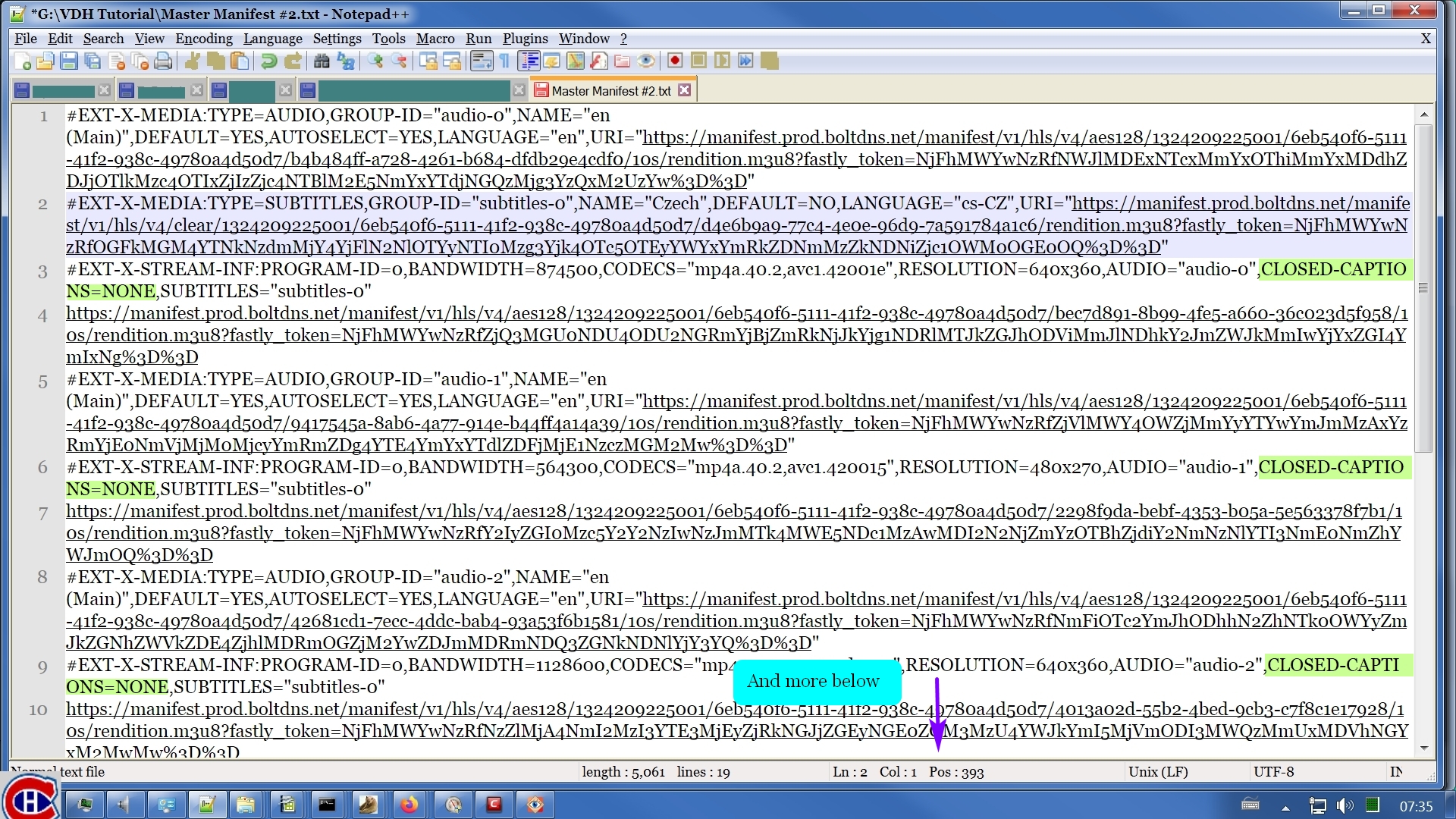

I've mentioned that the audio streams here have no video streams, & the video streams have no audio streams. But it would be silly if there were no way to pair up an audio stream with a video stream. That pairing information is present in this manifest.

We're finally getting to that naming thing I mentioned earlier. This image shows how the audio & video streams are related. The AUDIO parameter value on each video stream description matches the GROUP-ID parameter value on an earlier audio stream description. On an audio stream description, the parameter is named GROUP-ID. On a video stream description, the parameter is named AUDIO. Why can't they be the same? Beats me. I didn't design this syntax. We just have to put up with it. The important thing is the matching parameter values: audio-0, audio-1, audio-2, audio-3. The matching of the parameter values is what associates an audio stream with a video stream.

You can see that any given audio stream may have multiple video streams associated with it. The first 3 audio streams have only a single video stream each. But the fourth audio stream has 4 partner video streams.
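The pairing described above can be sketched in Python by collecting, for each GROUP-ID, the video streams whose AUDIO value matches it. The sample manifest is hypothetical: it has the same shape as the one discussed here (4 audio stream descriptions, 7 video stream descriptions) but the resolutions, bandwidths, and example.invalid URLs are invented. The function names are assumptions too.

```python
import re

ATTRIBUTE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


def parse_attributes(tag_line):
    """Turn the parameters after the first colon into a dictionary."""
    _, _, attribute_text = tag_line.partition(":")
    return {key: value.strip('"') for key, value in ATTRIBUTE.findall(attribute_text)}


def videos_by_audio_group(manifest_text):
    """Map each audio GROUP-ID to the resolutions of the video streams that name it."""
    groups = {}
    for line in manifest_text.splitlines():
        line = line.strip()
        if line.startswith("#EXT-X-MEDIA:"):
            attributes = parse_attributes(line)
            if attributes.get("TYPE") == "AUDIO":
                groups.setdefault(attributes["GROUP-ID"], [])
        elif line.startswith("#EXT-X-STREAM-INF:"):
            attributes = parse_attributes(line)
            # AUDIO on a video description points back at a GROUP-ID.
            groups.setdefault(attributes.get("AUDIO"), []).append(attributes.get("RESOLUTION"))
    return groups


# Hypothetical manifest: 4 audio descriptions, 7 video descriptions.
SAMPLE = """#EXTM3U
#EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="audio-0",NAME="en",URI="https://example.invalid/audio-0.m3u8"
#EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="audio-1",NAME="en",URI="https://example.invalid/audio-1.m3u8"
#EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="audio-2",NAME="en",URI="https://example.invalid/audio-2.m3u8"
#EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="audio-3",NAME="en",URI="https://example.invalid/audio-3.m3u8"
#EXT-X-STREAM-INF:BANDWIDTH=400000,RESOLUTION=480x270,AUDIO="audio-0"
https://example.invalid/video-a.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360,AUDIO="audio-1"
https://example.invalid/video-b.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=1500000,RESOLUTION=960x540,AUDIO="audio-2"
https://example.invalid/video-c.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=2500000,RESOLUTION=1280x720,AUDIO="audio-3"
https://example.invalid/video-d.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=3500000,RESOLUTION=1280x720,AUDIO="audio-3"
https://example.invalid/video-e.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=5000000,RESOLUTION=1920x1080,AUDIO="audio-3"
https://example.invalid/video-f.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=7000000,RESOLUTION=1920x1080,AUDIO="audio-3"
https://example.invalid/video-g.m3u8
"""

counts = {group: len(found) for group, found in videos_by_audio_group(SAMPLE).items()}
print(counts)
```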

Now that the structure is clear, we can choose which items to download.

I'm going to choose the highest video resolution, which means I will choose the audio stream associated with it.

I've highlighted the relevant URLs here. In the Twitter example upthread, the manifest contained only partial URLs & we had to insert https://etc. at the front of each partial URL that was in the manifest. Here, it's all done for us. So much simpler. Why don't all sites do it like this one? Might as well ask, "Why do I like opera but other people don't?" Like I said upthread, there's no international standard for this.

Notice that in an audio stream description, the URL is in a parameter of the form URI="". Not URL. URI. And there's double quotation marks around it. In a video stream description, the URL is on its own line, no parameter name with an equal sign in front of it, and no double quotation marks. Gee, isn't that consistent . . . NOT!!! Hey, they didn't ask me my opinion. It's dumb. I know it. You know it. We just have to live with it. Let's move on.
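To make the URI="" versus bare-line difference concrete, here is a rough Python sketch that pulls both kinds of URL out of a manifest & picks the highest resolution along with its partner audio stream. The manifest text, the example.invalid URLs, and the function names are invented for illustration. It also assumes every video stream description carries a RESOLUTION parameter and that its URL is on the very next line.

```python
import re

ATTR_RE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


def parse_attrs(line):
    """Turn the parameter list after the first ':' into a dict, dropping quotes."""
    _, _, attr_text = line.partition(":")
    return {key: value.strip('"') for key, value in ATTR_RE.findall(attr_text)}


def pick_highest_resolution(manifest_text):
    """Return (video_url, audio_url) for the video stream with the most pixels."""
    audio_urls = {}  # GROUP-ID -> URL taken from the URI="..." parameter
    variants = []    # (pixel count, video URL, AUDIO parameter value)
    lines = manifest_text.splitlines()
    for i, line in enumerate(lines):
        if line.startswith("#EXT-X-MEDIA:"):
            attrs = parse_attrs(line)
            if attrs.get("TYPE") == "AUDIO" and "URI" in attrs:
                audio_urls[attrs["GROUP-ID"]] = attrs["URI"]
        elif line.startswith("#EXT-X-STREAM-INF:"):
            attrs = parse_attrs(line)
            width, height = (int(n) for n in attrs["RESOLUTION"].split("x"))
            # The video URL is the following line: bare, no name, no quotes.
            variants.append((width * height, lines[i + 1].strip(), attrs.get("AUDIO")))
    _, video_url, audio_group = max(variants, key=lambda item: item[0])
    return video_url, audio_urls.get(audio_group)


# Hypothetical manifest, much shorter than the real one.
SAMPLE = """#EXTM3U
#EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="audio-0",NAME="en",URI="https://example.invalid/audio-0.m3u8"
#EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="audio-1",NAME="en",URI="https://example.invalid/audio-1.m3u8"
#EXT-X-STREAM-INF:BANDWIDTH=500000,RESOLUTION=320x180,AUDIO="audio-0"
https://example.invalid/video-180.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=3000000,RESOLUTION=1280x720,AUDIO="audio-1"
https://example.invalid/video-720.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=1500000,RESOLUTION=640x360,AUDIO="audio-1"
https://example.invalid/video-360.m3u8
"""

video_url, audio_url = pick_highest_resolution(SAMPLE)
print("video:", video_url)
print("audio:", audio_url)
```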

You'll note that in this image, my highlighting stops at the right edge. The URLs are really, really long, nearly 300 characters each. That's why I've turned off line wrapping in my text editor. It makes this easier to visualize what's going on here. But it also means you can't see the entirety of the URLs in the images. There's long chunks of URL off screen to the right. If you download the full manifest I've attached above, you'll be able to see the complete URLs.

At this point, it might be worth it for you to download & look at the ffprobe report I attached above. Look for how ffprobe has broken things down into Program 0, Program 1, Program 2, etc. Each Program consists of one audio stream & one video stream, paralleling the structure of the manifest as we've analyzed above. Note how ffprobe identifies each stream as Stream #0:0, Stream #0:1, Stream #0:2, etc. This is the ffmpeg way of identifying individual tracks of a stream. Note specifically how Stream #0:6 appears more than once, which is how it recognizes the audio stream "audio-3" & its 4 partner video streams. You can read the audio characteristics of each audio stream & see the differing bit rates, expressed as kb/s. You can also read the video characteristics, the resolution, frame rate, bit rate, & so on. Note how the bit rates shown in the ffprobe output match up with the BANDWIDTH values in the master manifest. Very handy tool, this ffprobe thing.
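If you want to check the Program grouping without reading the report by eye, ffprobe can also emit JSON. The sketch below is only a rough idea: it builds an ffprobe command using the -show_programs and -of json options, then looks through JSON of that shape for streams that belong to more than one Program. The JSON sample is invented, and I am assuming the program_id, streams, and index field names; check them against your own ffprobe output before relying on this.

```python
import json
from collections import defaultdict


def ffprobe_programs_command(manifest_url, ffprobe="ffprobe"):
    """Build (but do not run) an ffprobe command that reports Programs as JSON."""
    return [ffprobe, "-v", "error", "-show_programs", "-of", "json", manifest_url]


def shared_streams(ffprobe_json_text):
    """Return {stream index: [program ids]} for streams listed in several Programs."""
    data = json.loads(ffprobe_json_text)
    seen = defaultdict(list)
    for program in data.get("programs", []):
        for stream in program.get("streams", []):
            seen[stream["index"]].append(program["program_id"])
    return {index: ids for index, ids in seen.items() if len(ids) > 1}


# Hypothetical ffprobe output, trimmed down to the fields used above.
SAMPLE_JSON = """
{"programs": [
  {"program_id": 0, "streams": [{"index": 0, "codec_type": "audio"},
                                {"index": 1, "codec_type": "video"}]},
  {"program_id": 1, "streams": [{"index": 2, "codec_type": "audio"},
                                {"index": 3, "codec_type": "video"}]},
  {"program_id": 2, "streams": [{"index": 2, "codec_type": "audio"},
                                {"index": 4, "codec_type": "video"}]}
]}
"""

print(ffprobe_programs_command("https://example.invalid/master.m3u8"))
print(shared_streams(SAMPLE_JSON))
```

In the made-up sample, stream 2 plays the role that Stream #0:6 plays in the real report: one audio stream shared by several Programs.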

Wild Willy

Nov 26, 2021, 11:35:37 AM11/26/21

to Video DownloadHelper Q&A

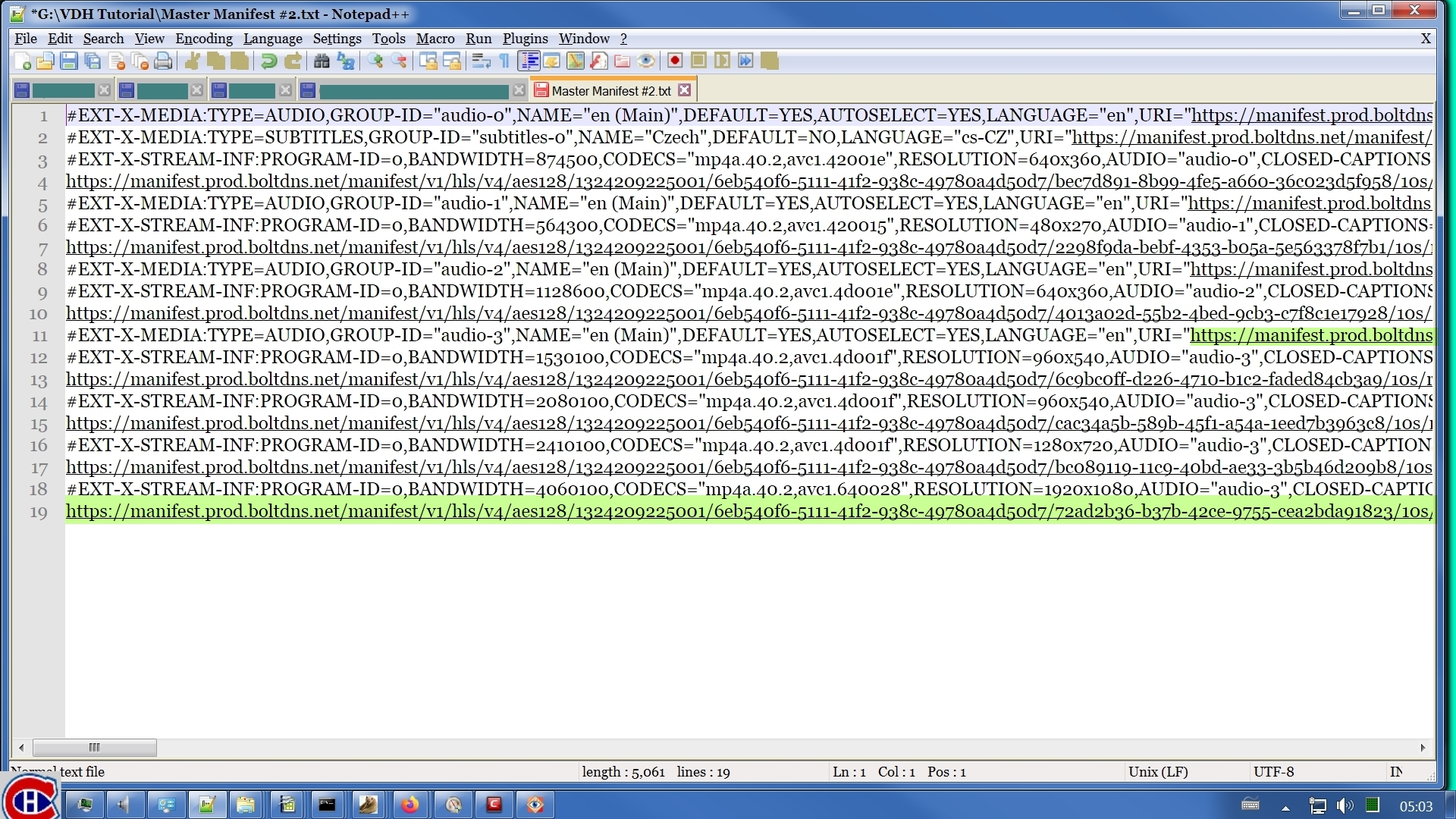

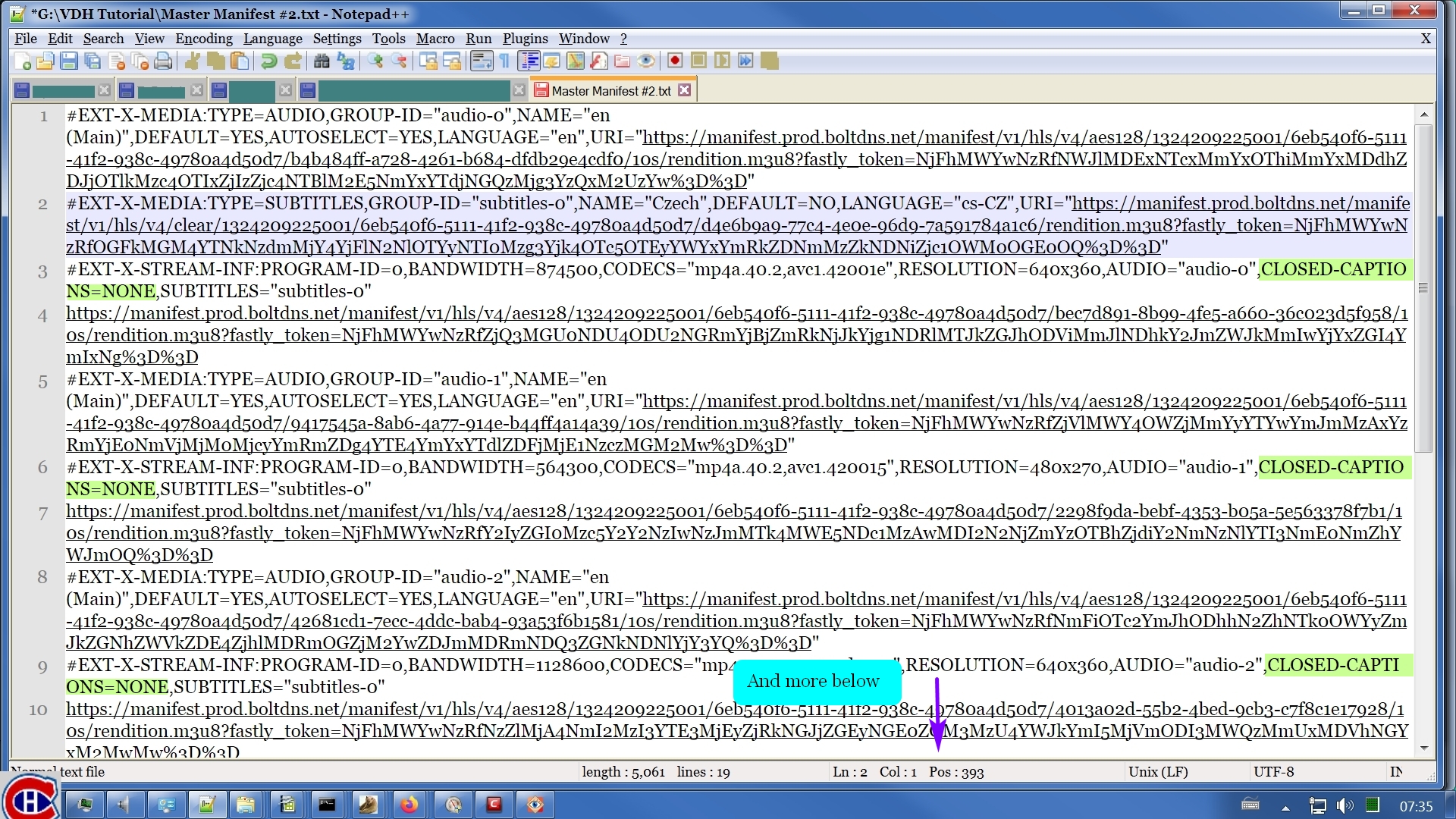

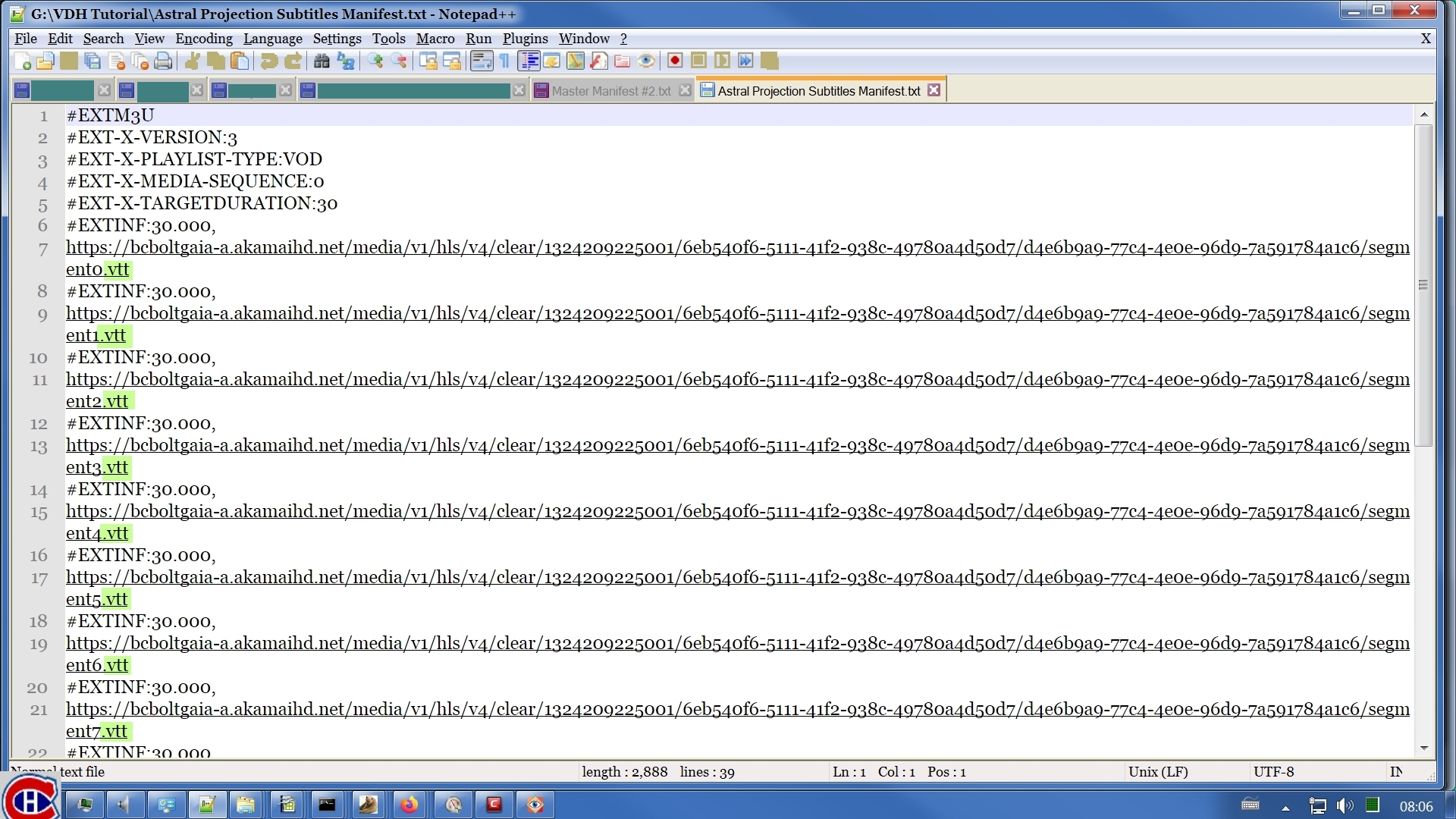

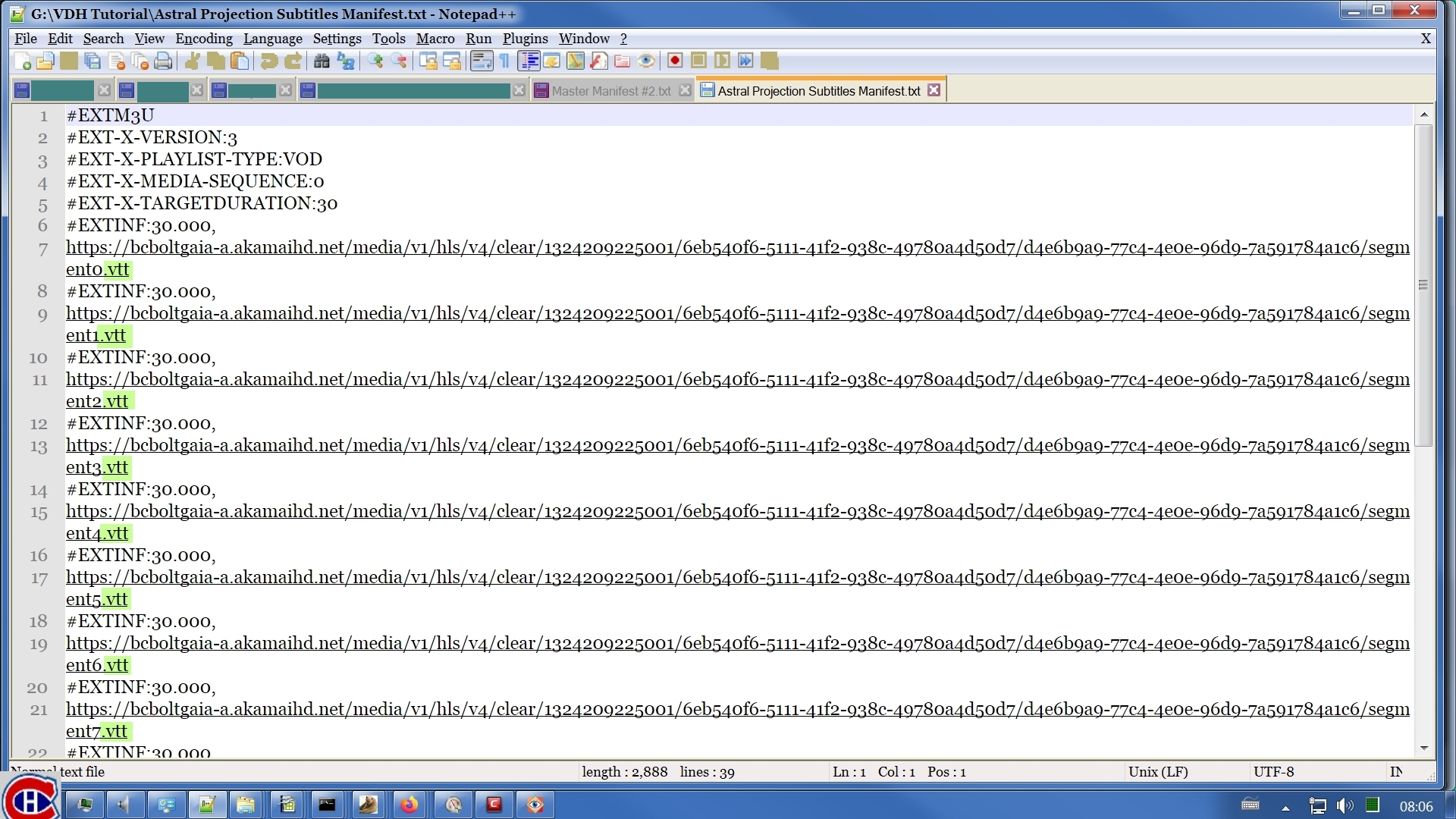

One last item in our manifest is the subtitles or captions. You'll see both words used. In fact, they both appear at various places in our master manifest. They are synonyms. In order to see how they fit in, I have turned line wrapping back on in my display of the master manifest. The relevant bits were scrolled off to the right when I turned line wrapping off, and I couldn't get all the necessary pieces to display in one screen by just scrolling right. So bear with the line wrapping for a moment.

There's just the one subtitles stream.

The web site developers have decided to name the subtitles stream subtitles-0. Also note that these are Czech subtitles. Maybe my build of ffprobe doesn't specifically include support for Czech language subtitles & that's why it couldn't deal with them. I'm just guessing.

Every video stream description apparently has no closed captions (if you can ignore the unfortunate line breaks).

Just as each video stream refers back to one of the audio streams, each video stream also refers back to the subtitles stream. In this case, they all refer back to the same subtitles stream, which is totally as expected.
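The subtitles linkage can be checked the same way as the audio linkage. Here is a small Python sketch, with an invented manifest and invented function names, that lists the subtitles groups and collects what the video stream descriptions say in their SUBTITLES and CLOSED-CAPTIONS parameters. The LANGUAGE value "cs" in the sample is my assumption of how Czech would be labelled; the real manifest may spell it differently.

```python
import re

ATTR_RE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


def parse_attrs(line):
    """Turn the parameter list after the first ':' into a dict, dropping quotes."""
    _, _, attr_text = line.partition(":")
    return {key: value.strip('"') for key, value in ATTR_RE.findall(attr_text)}


def subtitle_links(manifest_text):
    """Return (subtitle groups, SUBTITLES values used, CLOSED-CAPTIONS values used)."""
    groups = {}           # GROUP-ID -> LANGUAGE of each subtitles stream description
    subtitle_refs = set() # SUBTITLES values found on video stream descriptions
    caption_refs = set()  # CLOSED-CAPTIONS values found on video stream descriptions
    for line in manifest_text.splitlines():
        if line.startswith("#EXT-X-MEDIA:"):
            attrs = parse_attrs(line)
            if attrs.get("TYPE") == "SUBTITLES":
                groups[attrs["GROUP-ID"]] = attrs.get("LANGUAGE", "?")
        elif line.startswith("#EXT-X-STREAM-INF:"):
            attrs = parse_attrs(line)
            subtitle_refs.add(attrs.get("SUBTITLES"))
            caption_refs.add(attrs.get("CLOSED-CAPTIONS"))
    return groups, subtitle_refs, caption_refs


# Hypothetical manifest fragment.
SAMPLE = """#EXTM3U
#EXT-X-MEDIA:TYPE=SUBTITLES,GROUP-ID="subtitles-0",NAME="Czech",LANGUAGE="cs",URI="https://example.invalid/subs.m3u8"
#EXT-X-STREAM-INF:BANDWIDTH=500000,RESOLUTION=320x180,SUBTITLES="subtitles-0",CLOSED-CAPTIONS=NONE
https://example.invalid/v0.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=3000000,RESOLUTION=1280x720,SUBTITLES="subtitles-0",CLOSED-CAPTIONS=NONE
https://example.invalid/v1.m3u8
"""

groups, subtitle_refs, caption_refs = subtitle_links(SAMPLE)
print(groups, subtitle_refs, caption_refs)
```

If every video stream points at the same subtitles group, the second set has exactly one member, which is the situation described above.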

Wild Willy

Nov 26, 2021, 11:50:36 AM11/26/21

to Video DownloadHelper Q&A

The URL for the subtitles stream appears in the stream description here in the manifest. Let's go look at it. We need to find out what type of subtitles we've got here. Copy the URL out of the manifest & paste it into the address bar in the browser. This is the first screenload of what you'll get:

It turns out these subtitles are in WEBVTT format.
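If you save what the browser shows & want a quick sanity check, a WEBVTT file announces itself on its first line. The following Python sketch tests for that signature. The function name and the sample strings are made up, and it checks only the header, not whether the rest of the file is well formed.

```python
def looks_like_webvtt(text):
    """True if the text starts with the WEBVTT signature line."""
    text = text.lstrip("\ufeff")  # ignore an optional byte order mark
    if not text.startswith("WEBVTT"):
        return False
    rest = text[len("WEBVTT"):]
    # The signature must be followed by whitespace, a line break, or nothing.
    return rest == "" or rest[0] in " \t\r\n"


# Hypothetical samples: a tiny caption file and something that is not one.
print(looks_like_webvtt("WEBVTT\n\n00:00.000 --> 00:02.000\nAhoj\n"))
print(looks_like_webvtt("#EXTM3U\n#EXT-X-VERSION:4\n"))
```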

Now we've extracted everything we need to download these components with ffmpeg. Here are the commands I used. For a detailed breakdown of the parts of these commands, read the posts upthread dealing with the Twitter example.

"G:\ffmpeg\ffmpeg-2021-07-11-git-79ebdbb9b9-full_build\bin\ffmpeg.exe" -protocol_whitelist file,crypto,data,http,https,tls,tcp -hwaccel auto -i "https://manifest.prod.boltdns.net/manifest/v1/hls/v4/clear/1324209225001/6eb540f6-5111-41f2-938c-49780a4d50d7/d4e6b9a9-77c4-4e0e-96d9-7a591784a1c6/rendition.m3u8?fastly_token=NjFhMWYwNzRfOGFkMGM4YTNkNzdmMjY4YjFlN2NlOTYyNTI0Mzg3Yjk4OTc5OTEyYWYxYmRkZDNmMzZkNDNiZjc1OWM0OGE0OQDD" -codec: copy "Q:\VDH Testing\Astral Projection Video 20200504.vtt" 1>"Q:\VDH Testing\Get Example.Err" 2>"Q:\VDH Testing\Get Example.Log"

"G:\ffmpeg\ffmpeg-2021-07-11-git-79ebdbb9b9-full_build\bin\ffmpeg.exe" -protocol_whitelist file,crypto,data,http,https,tls,tcp -hwaccel auto -i "https://manifest.prod.boltdns.net/manifest/v1/hls/v4/aes128/1324209225001/6eb540f6-5111-41f2-938c-49780a4d50d7/d506cd77-473f-4fa9-9048-01d9824e11ed/10s/rendition.m3u8?fastly_token=NjFhMWYwNzRfOWQwYjhkN2YxYjU2NWVkZjYwYWFjYjQ0M2FjNDhiMGYxMzkzNjQwY2NlNmNkZDQyZDkwYmI2YzdjMTgxZmRhNwDD" -codec: copy "Q:\VDH Testing\Astral Projection Audio 20200504.mp4" 1>>"Q:\VDH Testing\Get Example.Err" 2>>"Q:\VDH Testing\Get Example.Log"

"G:\ffmpeg\ffmpeg-2021-07-11-git-79ebdbb9b9-full_build\bin\ffmpeg.exe" -protocol_whitelist file,crypto,data,http,https,tls,tcp -hwaccel auto -i "https://manifest.prod.boltdns.net/manifest/v1/hls/v4/aes128/1324209225001/6eb540f6-5111-41f2-938c-49780a4d50d7/72ad2b36-b37b-42ce-9755-cea2bda91823/10s/rendition.m3u8?fastly_token=NjFhMWYwNzRfZTQwNzRiNzY2ZGViNWU4MjBiMTBkOWZmMzJhMTU1NmIyM2EwMjEyNDgyMTBhNTQwOThlMWRmZGM0ZGExNTJiZADD" -codec: copy "Q:\VDH Testing\Astral Projection Video 20200504.mp4" 1>>"Q:\VDH Testing\Get Example.Err" 2>>"Q:\VDH Testing\Get Example.Log"

Each one of these 3 commands is a single line. Google has broken the lines & wrapped them to make them fit on the web page. But when I executed them, they were single long lines.
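One way to sidestep the line-wrapping problem is to hold the command as a list of separate arguments instead of one long line. The Python sketch below builds the same kind of command as the three above, using the same options. The URL and target file name in it are placeholders, not the real ones, and the function name is my own invention. It only builds & prints the list; it does not run ffmpeg.

```python
def ffmpeg_copy_command(source_url, target_file, ffmpeg_exe="ffmpeg"):
    """Build an ffmpeg argument list mirroring the commands shown above."""
    return [
        ffmpeg_exe,
        "-protocol_whitelist", "file,crypto,data,http,https,tls,tcp",
        "-hwaccel", "auto",
        "-i", source_url,
        "-codec:", "copy",  # copied as written in the commands above
        target_file,
    ]


# Placeholder URL and file name, for illustration only.
command = ffmpeg_copy_command(
    "https://example.invalid/rendition.m3u8?token=PLACEHOLDER",
    r"Q:\VDH Testing\Example Video.mp4",
)
for argument in command:
    print(argument)
```

Passing a list like this to Python's subprocess.run hands each argument to ffmpeg as-is, so long URLs & file names containing spaces need no extra quoting.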

I've attached the ffmpeg log file from these downloads.

Here are the Windows Properties of the audio & the video file I downloaded.

Note the asymmetry of what's reported for each file. The audio file has no video properties & the video file has no audio properties.

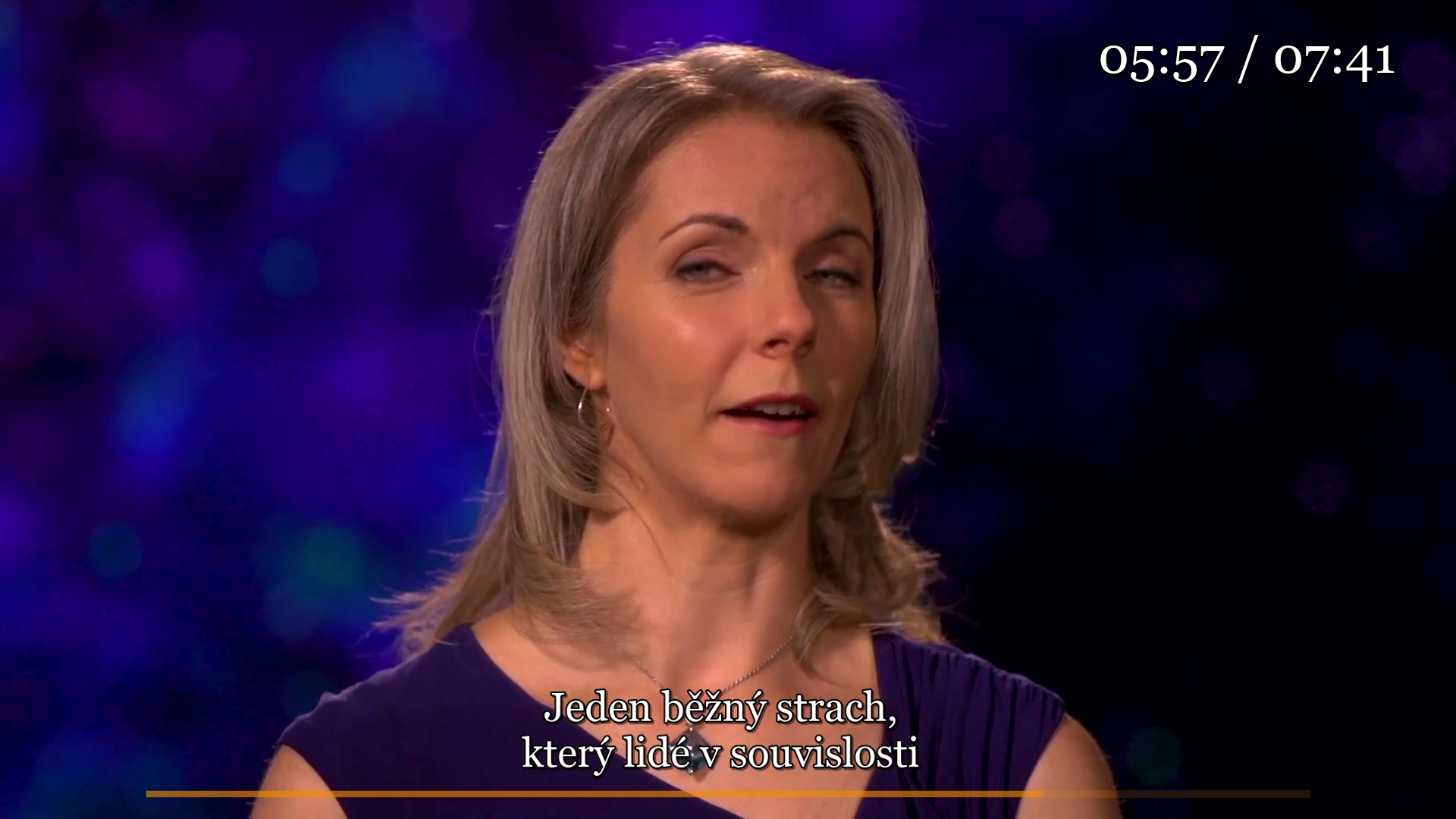

I played the 2 files synchronously in VLC.

As you can see, I've got video with Czech captions. This happened automatically because I chose the same target file name for the captions file & the video file, changing only their extensions, .vtt & .mp4, respectively. You'll have to trust me that I've also got audio (in English).
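The naming trick is easy to check in a script. This little Python sketch tests whether a video file & a captions file share the same folder and base name, differing only in extension. The function name is made up, and it says nothing about how VLC itself goes looking for captions; it only checks the naming pattern described above.

```python
from pathlib import PureWindowsPath


def same_base_name(video_file, captions_file):
    """True if both paths are identical once their extensions are removed."""
    video = PureWindowsPath(video_file).with_suffix("")
    captions = PureWindowsPath(captions_file).with_suffix("")
    return video == captions


print(same_base_name(
    r"Q:\VDH Testing\Astral Projection Video 20200504.mp4",
    r"Q:\VDH Testing\Astral Projection Video 20200504.vtt",
))
print(same_base_name(
    r"Q:\VDH Testing\Astral Projection Audio 20200504.mp4",
    r"Q:\VDH Testing\Astral Projection Video 20200504.vtt",
))
```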

Wild Willy

Dec 9, 2021, 8:50:30 PM12/9/21

to Video DownloadHelper Q&A

I've just encountered a rather curious case in connection with this thread:

https://groups.google.com/g/video-downloadhelper-q-and-a/c/PG5Nrok1_YI

I'm attaching the manifest from that one here. There's a number of interesting things going on in this manifest.

First, look at the very last line. It shows AES & the URL ends with .key. Usually, this indicates that we're looking at an encrypted stream. When I've hit things like this before, ffmpeg wouldn't download them. Despite that, I did manage to get ffmpeg to download this object, as I detail in the other thread.
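A script can flag this kind of line before you even try a download. The Python sketch below looks for key lines in a manifest & reports the METHOD and the key URL. The sample line is invented. I am also assuming the line is one of the two key tags the HLS format defines, EXT-X-KEY or EXT-X-SESSION-KEY, since which one the attached manifest uses isn't quoted here.

```python
import re

ATTR_RE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')
KEY_TAGS = ("#EXT-X-KEY:", "#EXT-X-SESSION-KEY:")


def parse_attrs(line):
    """Turn the parameter list after the first ':' into a dict, dropping quotes."""
    _, _, attr_text = line.partition(":")
    return {key: value.strip('"') for key, value in ATTR_RE.findall(attr_text)}


def encryption_keys(manifest_text):
    """Return a list of (METHOD, key URL) for every key line that isn't NONE."""
    keys = []
    for line in manifest_text.splitlines():
        if line.startswith(KEY_TAGS):
            attrs = parse_attrs(line)
            if attrs.get("METHOD", "NONE") != "NONE":
                keys.append((attrs["METHOD"], attrs.get("URI")))
    return keys


# Hypothetical manifest ending in a key line.
SAMPLE = """#EXTM3U
#EXT-X-STREAM-INF:BANDWIDTH=500000,RESOLUTION=320x180
https://example.invalid/v0.m3u8
#EXT-X-SESSION-KEY:METHOD=AES-128,URI="https://example.invalid/secret.key"
"""

print(encryption_keys(SAMPLE))
```

An empty list means no key line was found; a non-empty one tells you which encryption method the site declares and where the key lives.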

Now look at the audio stream descriptions. They are similar to the ones I talk about upthread here. But there's a catch. You'll notice that these audio streams do NOT include URLs. So we're not looking at one of those cases of separate video & audio. Each video stream here should include audio that does not need to be downloaded separately. Note the names in the GROUP-ID parameters. The 160, 256, & 320 look like bit rates, indicating different audio qualities. This appears to be borne out by my results in the other thread.
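Telling the two cases apart can be automated. As far as I understand the HLS specification (RFC 8216), an audio stream description without a URI parameter means the audio travels inside the video stream that refers to it. The Python sketch below labels each audio group accordingly. The manifest fragment, the GROUP-ID names, and the function name are invented for illustration.

```python
import re

ATTR_RE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


def parse_attrs(line):
    """Turn the parameter list after the first ':' into a dict, dropping quotes."""
    _, _, attr_text = line.partition(":")
    return {key: value.strip('"') for key, value in ATTR_RE.findall(attr_text)}


def audio_delivery(manifest_text):
    """Map each audio GROUP-ID to 'separate' (has a URI) or 'muxed' (no URI)."""
    delivery = {}
    for line in manifest_text.splitlines():
        if line.startswith("#EXT-X-MEDIA:"):
            attrs = parse_attrs(line)
            if attrs.get("TYPE") == "AUDIO":
                delivery[attrs["GROUP-ID"]] = "separate" if "URI" in attrs else "muxed"
    return delivery


# Hypothetical fragment: two groups without URLs, one with.
SAMPLE = """#EXTM3U
#EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="audio-160",NAME="English",DEFAULT=YES
#EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="audio-320",NAME="English",DEFAULT=YES
#EXT-X-MEDIA:TYPE=AUDIO,GROUP-ID="audio-x",NAME="English",URI="https://example.invalid/a.m3u8"
"""

print(audio_delivery(SAMPLE))
```

Groups reported as muxed need no separate audio download; groups reported as separate are the two-download case covered upthread.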

Look at each video stream description. Each one contains an AUDIO parameter that refers back to the GROUP-ID parameter in one of the audio stream descriptions, just like I discuss upthread. I find this a bit weird, since the audio stream descriptions don't actually describe audio streams; there's no URLs for the audio streams. Nevertheless, it looks like the higher video resolutions refer to an audio stream that is of a higher quality.

In this manifest, all the I-FRAME stream descriptions are in a block. This is unlike the example upthread in which the I-FRAME stream descriptions alternated with regular video stream descriptions. That's not really significant. It just shows that the structure of a manifest is flexible. Compare the BANDWIDTH value on each I-FRAME stream description with the BANDWIDTH value on each video stream description of the corresponding RESOLUTION. They don't match exactly, unlike in the sample manifest upthread. I didn't expect this. I guess they don't always have to match. Live & learn.
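The BANDWIDTH comparison is tedious by eye, so here is a Python sketch that lines the values up by RESOLUTION. It works whether the I-FRAME stream descriptions sit in one block or alternate with the regular ones. The sample manifest and its numbers are invented, not taken from the attached manifest, and the function name is my own.

```python
import re

ATTR_RE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')


def parse_attrs(line):
    """Turn the parameter list after the first ':' into a dict, dropping quotes."""
    _, _, attr_text = line.partition(":")
    return {key: value.strip('"') for key, value in ATTR_RE.findall(attr_text)}


def bandwidth_by_resolution(manifest_text):
    """Return {RESOLUTION: {'video': [BANDWIDTHs], 'iframe': [BANDWIDTHs]}}."""
    table = {}
    for line in manifest_text.splitlines():
        if line.startswith("#EXT-X-I-FRAME-STREAM-INF:"):
            kind = "iframe"
        elif line.startswith("#EXT-X-STREAM-INF:"):
            kind = "video"
        else:
            continue
        attrs = parse_attrs(line)
        row = table.setdefault(attrs.get("RESOLUTION", "?"), {"video": [], "iframe": []})
        row[kind].append(int(attrs["BANDWIDTH"]))
    return table


# Hypothetical manifest with the I-FRAME descriptions grouped in a block.
SAMPLE = """#EXTM3U
#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360
https://example.invalid/v360.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=2500000,RESOLUTION=1280x720
https://example.invalid/v720.m3u8
#EXT-X-I-FRAME-STREAM-INF:BANDWIDTH=90000,RESOLUTION=640x360,URI="https://example.invalid/i360.m3u8"
#EXT-X-I-FRAME-STREAM-INF:BANDWIDTH=300000,RESOLUTION=1280x720,URI="https://example.invalid/i720.m3u8"
"""

for resolution, row in bandwidth_by_resolution(SAMPLE).items():
    print(resolution, row)
```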
