"Proper Unicode Support" and VAST

Seth Berman

Hello All,

Happy New Year to everyone! At Instantiations, we're certainly looking forward to 2021.

First and foremost, the release of VAST Platform 2021 is on our minds. We're putting the finishing touches on it now and preparing to release it soon! After this release, one of the next important VAST additions is support for Unicode.

I recently hinted at our "Unicode Support" coming to VAST Platform 2022 in this post: https://groups.google.com/u/1/g/va-smalltalk/c/sG3x1rBBU-E.

Much exciting work has been done in this area in just the past couple of months, and I wanted to share some of it with you. We're also planning to hold a webinar later this year to demonstrate more formally what has been developed.

So how does one define "Unicode Support"?

This is an important question to ask because the answer can vary widely. It is not a binary choice between "having Unicode" and "not having Unicode". In fact, I liked the way Joachim framed it in the aforementioned post as "Proper Unicode Support". Thinking about it in terms of "proper" support provides a great frame of reference. (Some programming languages refer to this as "Unicode-correctness".)

To me, "Proper Unicode Support" means functionality integrated into the product such that many of the various concepts in the Unicode standard are available as first-class objects in VAST. I also think "proper" support within VAST means automatically handling many of the complex issues that occur when using Unicode. (Most languages force the user to deal with these issues.)

To meet the above criteria, we've been moving forward with an ambitious implementation that will provide a set of Unicode-related features that, to date, only languages like Swift, Raku (Perl 6), and Elixir can match.

What needed to change inside the VAST Platform?

Unicode Support is truly not a single feature. We've been working towards "Proper Unicode Support" in VAST for many years through the continuing development of the many prerequisites. It's this group of prerequisite features that come together to make Unicode work properly and holistically.

It's important to note that these new features are absolutely essential. After all, VAST was initially designed at a time when Unicode was just being standardized and almost everyone still operated using single-byte character set encodings.

Some of these foundational features included reorganizing, fixing, and improving our code page converter. We also had to add support for UTF-8 encoded filenames in our zip streams. Even the internals of our new OsProcess framework, both in the VM and in the image, were designed to use UTF-8 encoding by default. However, many more features beyond these are still required to complete the necessary foundation.

What are some of the technical considerations?

Many of you can attest (perhaps better than I can) to the complexities of digitally representing and transmitting the world's languages. These issues are not magically solved by putting some bytes into a Unicode string.

The concept of a "character" is itself complex when considered across the full spectrum of languages. Even with Unicode, users still face encoding issues, whether with data coming off the wire or from the filesystem. Endianness in some of the encoding forms (like UTF-16LE or UTF-16BE) can also become an issue.
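To make the endianness point concrete, here is a short sketch in Python (used only for illustration; none of this is VAST API). It encodes the same character as UTF-8, UTF-16LE and UTF-16BE, then shows that decoding little-endian bytes as big-endian does not fail; it silently yields a different character.

```python
# The same one-character string, encoded three ways.
text = "\u00c5"  # LATIN CAPITAL LETTER A WITH RING ABOVE

utf8 = text.encode("utf-8")         # b'\xc3\x85'
utf16le = text.encode("utf-16-le")  # b'\xc5\x00'
utf16be = text.encode("utf-16-be")  # b'\x00\xc5'

# Reading little-endian bytes as big-endian raises no error;
# it just produces a different code point (U+C500, a Hangul syllable).
misread = utf16le.decode("utf-16-be")

print(utf8, utf16le, utf16be)
print(hex(ord(misread)))  # 0xc500
```

Nothing in the bytes themselves says which byte order was used, which is why a byte order mark or out-of-band metadata is needed.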

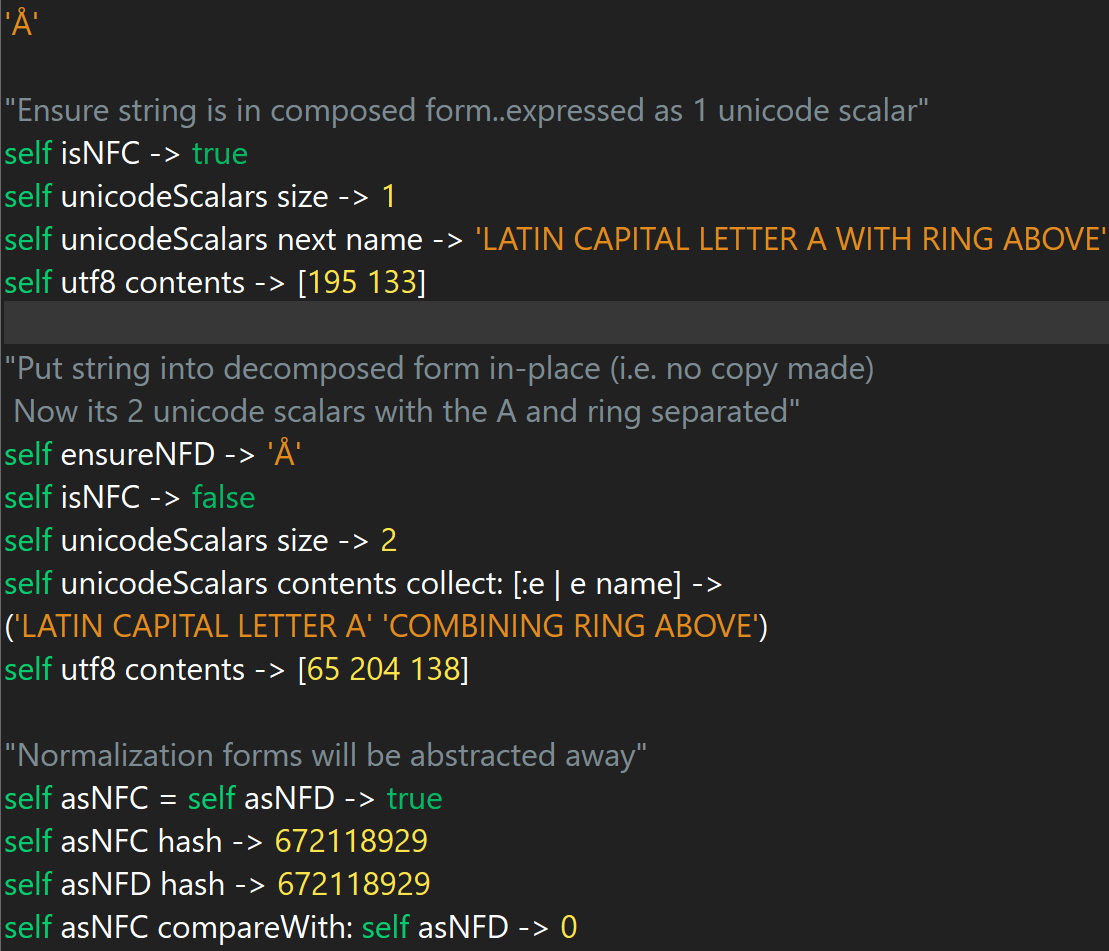

Normalization forms introduce new complexity, because many "user-perceived" characters have several different code point representations (for example, Å can be the single code point U+00C5, or the letter A followed by the combining ring above, U+030A; see the screenshot). As mentioned, there is ambiguity about what a "character" is and how you access one from a string. Even the Unicode standard does not use the term "character" consistently.
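The Å example can be reproduced with Python's standard `unicodedata` module (an illustration, not VAST code): the precomposed and decomposed forms compare unequal as raw code point sequences, and only compare equal once both are normalized to the same form.

```python
import unicodedata

precomposed = "\u00c5"  # Å as a single code point
decomposed = "A\u030a"  # 'A' + COMBINING RING ABOVE

# They render identically but are different code point sequences.
print(precomposed == decomposed)          # False
print(len(precomposed), len(decomposed))  # 1 2

# Normalizing both to the same form (NFC here) makes them compare equal.
nfc_a = unicodedata.normalize("NFC", precomposed)
nfc_b = unicodedata.normalize("NFC", decomposed)
print(nfc_a == nfc_b)                     # True

# NFD goes the other way: the precomposed form splits into 'A' + mark.
print(unicodedata.normalize("NFD", precomposed) == decomposed)  # True
```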

For further reading, there is an interesting history regarding ANSI, regional character encodings, and the Unicode standard. If you are interested, I recommend books such as O'Reilly's "Unicode Explained" and "Fonts and Encodings". Looking at how various generations of programming languages have approached the digital representation of language is also fascinating and enlightening.

What are some of the features being added to VAST?

VAST Platform (Current State)

The following is a very brief overview of the relevant abstractions in the existing VAST system.

- EsString

An EsString in VAST is an abstract class that expects its subclasses to be locale-sensitive collections of "characters", where each "character" has a fixed length.

- String

A String in VAST is a locale-sensitive byte string, which means each "character" has a fixed length of 1 byte, and it uses a locale for sorting strings/characters as well as for case conversion.

- DBString

There is also a DBString, which extends the fixed code-unit length to 2 bytes and is used for legacy double-byte code pages.

- Character

A Character is a locale-sensitive value holder that can represent the range 0 to 65535. It is the basic element of a String or DBString.

VAST Platform with Unicode Core (NEW -- Coming to VAST 2022)

We have developed three main abstractions to facilitate working with Unicode data; they are the Unicode counterparts to the locale-based String/Character. They are: UnicodeScalar, Grapheme, and UnicodeString.

- UnicodeScalar

A UnicodeScalar represents any Unicode code point except those in the surrogate range (U+D800 to U+DFFF), which is reserved for UTF-16 encoding.

Code points are the unique values that the Unicode consortium assigns to the Unicode code space. (The code space is the complete range of possible Unicode values.) Depending on your definition of what a character could be, a code point can represent a "character". There are many other classifications for code points as well.

Since Unicode scalars are a subset of code points, many languages consider a Unicode scalar to be the native "character", and accessing a string by an index value would result in a Unicode scalar.
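A minimal Python sketch of the distinction, assuming the standard definition of a Unicode scalar value (any code point from U+0000 to U+10FFFF except the surrogates U+D800 to U+DFFF). The helper `is_unicode_scalar` is hypothetical, not a VAST method; the last lines use Python's `unicodedata` to look up a few Character Database properties of the kind the post says UnicodeScalar can describe.

```python
import unicodedata

MAX_CODE_POINT = 0x10FFFF
SURROGATES = range(0xD800, 0xE000)  # reserved for UTF-16; not scalar values

def is_unicode_scalar(cp):
    """True if cp is a valid code point that is not a surrogate."""
    return 0 <= cp <= MAX_CODE_POINT and cp not in SURROGATES

print(is_unicode_scalar(0x41))     # True  ('A')
print(is_unicode_scalar(0xD800))   # False (high surrogate)
print(is_unicode_scalar(0x1F600))  # True  (outside the BMP, still a scalar)

# A Python str can hold a lone surrogate, but it cannot be encoded as UTF-8.
try:
    "\ud800".encode("utf-8")
except UnicodeEncodeError:
    print("lone surrogate rejected by the UTF-8 encoder")

# A few Unicode Character Database properties of individual scalars.
print(unicodedata.name("A"))               # LATIN CAPITAL LETTER A
print(unicodedata.category("\U0001F600"))  # So (Symbol, other)
print("A".isalpha(), "A".isupper())        # True True
```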

In VAST, our UnicodeScalar can describe many properties from the Unicode Character Database, such as a scalar's name, its case, and whether it is alphabetic or numeric. VAST Unicode "Views" (described later in this post) will make it easy to access Unicode scalars from Graphemes and UnicodeStrings.

- Grapheme

A Grapheme in VAST represents a user-perceived "character" and is the basic unit of a VAST UnicodeString.

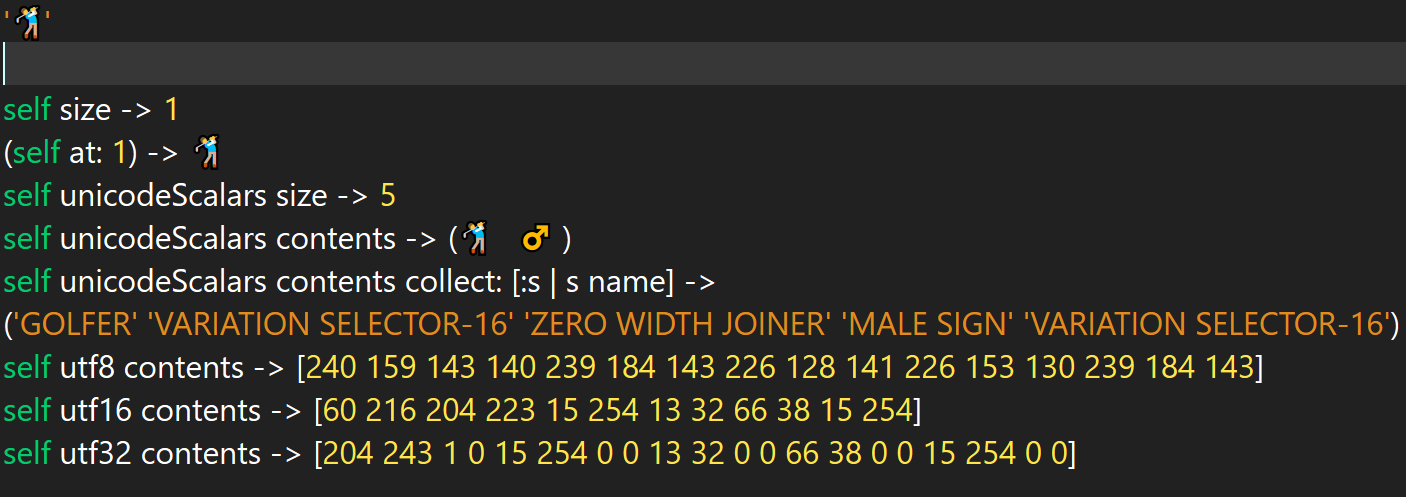

Only a few programming languages with Unicode support treat a "character" as the written expression of a character, the way a user would see it on screen, rather than as a digital code point. While the visual expression is called a glyph, the digital expression of this concept is called a "grapheme cluster".

In VAST, we call this a Grapheme. The Grapheme is logically composed of one or more UnicodeScalars. It is identified using extended grapheme cluster boundary algorithms from "Text Segmentation" in the Unicode Standard (https://unicode.org/reports/tr29/).
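Real extended grapheme cluster segmentation follows the full rule set in UAX #29 and is not part of Python's standard library. The sketch below is a deliberately simplified, hypothetical approximation (`simple_graphemes` is not VAST API): it only glues combining marks onto their base character and joins ZWJ sequences, which is enough to show how several scalars become one user-perceived character.

```python
import unicodedata

ZWJ = "\u200d"  # ZERO WIDTH JOINER, glues emoji into sequences

def is_extender(ch):
    # Combining marks (categories Mn, Mc, Me) attach to the preceding base
    # character instead of starting a new cluster. Variation selectors are Mn.
    return unicodedata.category(ch) in ("Mn", "Mc", "Me")

def simple_graphemes(text):
    """Greatly simplified grapheme clustering; NOT the full UAX #29 algorithm.

    Only handles a base character followed by combining marks, and
    sequences joined with ZWJ. Hangul jamo, CR LF, regional-indicator
    flags, emoji modifiers, etc. are not handled."""
    clusters = []
    join_next = False  # True right after a ZWJ
    for ch in text:
        if clusters and (join_next or ch == ZWJ or is_extender(ch)):
            clusters[-1] += ch
        else:
            clusters.append(ch)
        join_next = ch == ZWJ
    return clusters

word = "u\u0308ber"  # 'über' with a decomposed ü
family = "\U0001F468\u200d\U0001F469\u200d\U0001F467"  # man ZWJ woman ZWJ girl

print(len(word), len(simple_graphemes(word)))      # 5 4
print(len(family), len(simple_graphemes(family)))  # 5 1
```

Production code should rely on a complete UAX #29 implementation rather than hand-written rules like these.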

The VAST Grapheme abstracts the details of grouping enough Unicode scalars together to form what we would think of as a "character" on the screen. It also abstracts the details of normalization. Normalization is an issue because the same string or character can have multiple binary representations. Therefore, if this is left unhandled, operations like comparison and hashing will give incorrect results.

However, our Grapheme handles these details transparently. It detects and ensures a common normalized form for various operations where these differences would matter, so the user can focus on programming, not worrying about what normalization form a string is in.

The new VAST Grapheme is the best Unicode analog to the standard Smalltalk Character class. A "ü" always maps to one Grapheme, even though as we described, it may be logically composed of 1 or 2 Unicode scalars. We maintain the original form for various reasons and do not implicitly convert a Grapheme's internals from one encoded normalization form to another.
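One way to get correct comparison and hashing while still keeping the original form is to compare and hash a normalized key instead of the stored code points. The Python class below is a hypothetical sketch of that idea, not VAST's implementation; it uses `unicodedata.is_normalized` (Python 3.8+) as a cheap check before normalizing to NFC.

```python
import unicodedata

class NormalizingText:
    """Hypothetical value object: stores the original code points unchanged,
    but compares and hashes by NFC so equal-looking text behaves as equal."""

    def __init__(self, text):
        self.original = text  # never rewritten; the original form is preserved

    def _key(self):
        # Fast path: skip normalization if the text is already in NFC.
        if unicodedata.is_normalized("NFC", self.original):
            return self.original
        return unicodedata.normalize("NFC", self.original)

    def __eq__(self, other):
        if not isinstance(other, NormalizingText):
            return NotImplemented
        return self._key() == other._key()

    def __hash__(self):
        # Must hash the same key used by __eq__, or sets/dicts misbehave.
        return hash(self._key())

a = NormalizingText("\u00fc")   # ü, precomposed
b = NormalizingText("u\u0308")  # u + COMBINING DIAERESIS

print(a == b)                            # True
print(len({a, b}))                       # 1: same hash, same set entry
print(len(a.original), len(b.original))  # 1 2: originals untouched
```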

In VAST, our Grapheme is also able to describe many of the same properties from the Unicode Character Database that a UnicodeScalar can.

- UnicodeString

A UnicodeString in VAST is the Unicode analog to the standard String class and will be API compatible with it.

Our UnicodeString is logically a sequenceable collection of Graphemes and our UnicodeString is both mutable and growable. The standard String class is also mutable, but it cannot grow without making a copy of itself. This ability to grow and shrink is a natural consequence of how a container, with a contiguous and compact backing storage, would have to work given that each element is of variable-length in that backing storage. So given that we needed to support this behavior anyway in the backing storage, we decided it would be beneficial to reflect that ability in Smalltalk. As such, a UnicodeString is a subclass of AdditiveSequenceableCollection, similar to OrderedCollection.

Our UnicodeString is designed to be API compatible with EsString and subclasses, but it is not a subclass of EsString. There are a few reasons for this beyond just the ability to grow. EsString is locale-sensitive and is expected to have subclasses with fixed-length code units. Each one of those code units is expected to create a fully composed "character". (A Unicode string might not have fixed-length code units nor code units that always create a fully composed "character".)

Many APIs up and down the Collection hierarchy, which EsString is a part of, implicitly expect the elements to be self-contained and meaningful in isolation. In other words, they don't expect that grabbing the first "character" (string at: 1) requires collecting a variable number of elements to form it (as can be the case in Unicode). Or that copying from one index to another may copy from the middle of one "character" to the middle of another, giving nonsense as a result (also possible in Unicode). Or that reversing the "characters" in a string may give the wrong answer because the interpretation of what constitutes a character does not work in reverse, or works incorrectly (again, as can happen in Unicode).
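The reversing and copying hazards can be demonstrated in Python, whose strings index by code point. The `clusters` helper is a hypothetical simplification (it only attaches characters with a nonzero canonical combining class to the preceding character), but it shows why making the user-perceived character the element keeps these Collection operations meaningful.

```python
import unicodedata

def clusters(text):
    """Attach combining marks to the preceding base character.
    A simplification of grapheme clustering, enough for this example."""
    out = []
    for ch in text:
        if out and unicodedata.combining(ch):
            out[-1] += ch
        else:
            out.append(ch)
    return out

word = "Cafe\u0301"  # 'Café' with a decomposed é (e + COMBINING ACUTE ACCENT)

naive = word[::-1]  # reverses code points: the accent now has no base
by_cluster = "".join(reversed(clusters(word)))  # accent stays on its e

print(clusters(word))  # ['C', 'a', 'f', 'é'] (é still decomposed)
print(word[:4])        # 'Cafe': a code point slice silently drops the accent
```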

Because UnicodeString is using "Graphemes", it naturally works correctly within the context of the Collection hierarchy and works efficiently for a VAST user.

Other New Features

- Constant Time Indexing

In this case, constant-time indexing is the ability to select a "character" in a string with the time it takes being independent of the size of the string.

Constant-time indexing is a popular topic of discussion in relation to Unicode. A "character", as VAST defines it (a Grapheme), is variable-length, both in its number of code points and in the number of bytes in its encoded forms. Variable-length elements mean you can't do constant-time indexing without optimizations (which are discussed below and will be implemented).

The next logical question might be: "How important is constant-time indexing with strings?" Perhaps the better question is: "How important is constant-time random access of strings?" When developers interact with strings, how often do they jump from one element to another in random order? Unless they are building something like a text editor, this is much less likely. Streaming linearly through a string, making copies of strings/substrings, looking for the index of an element or substring, regex, etc. are the more likely scenarios. Most of these point to maintaining linear scanning performance, which is still difficult with variable-length elements, but completely possible. However, this needs to be addressed in VAST, because the current Smalltalk ReadStream and WriteStream implementations would cause quadratic behavior (i.e., poor performance), since they assume constant-time indexing.
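The quadratic behavior can be illustrated with a small Python model over UTF-8 bytes (the helpers `seq_len`, `at` and `stream` are hypothetical, and the sketch assumes valid UTF-8). Fetching element i by walking from the start costs O(i), so a loop that indexes every element in turn, as an index-based stream would, costs roughly n²/2 steps, while a stream that keeps its byte offset between calls is linear.

```python
def seq_len(lead):
    """Byte length of a UTF-8 sequence, from its lead byte (assumes valid UTF-8)."""
    if lead < 0x80:
        return 1
    if lead < 0xE0:
        return 2
    if lead < 0xF0:
        return 3
    return 4

def at(data, index):
    """Return (scalar, steps) for the scalar at a 0-based index.
    Without an index table we must walk from the start, so this is O(index)."""
    offset, steps = 0, 0
    for _ in range(index):
        offset += seq_len(data[offset])
        steps += 1
    n = seq_len(data[offset])
    return data[offset:offset + n].decode("utf-8"), steps + 1

def stream(data):
    """Yield scalars in order; the offset is kept between steps, so O(n) overall."""
    offset = 0
    while offset < len(data):
        n = seq_len(data[offset])
        yield data[offset:offset + n].decode("utf-8")
        offset += n

# 1000 scalars that take 1, 2, 3 and 4 bytes respectively: 'añ€😀' * 250
data = "a\u00f1\u20ac\U0001F600".encode("utf-8") * 250

# An index-driven loop: each at(i) rescans from the start.
steps = sum(at(data, i)[1] for i in range(1000))
print(steps)                         # 500500

# A cursor-based stream decodes each scalar exactly once.
print(sum(1 for _ in stream(data)))  # 1000
```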

Thankfully, we have solved most of these issues with "Unicode Views", which not only provide linear-time streaming but also give a richer "position" object that allows constant-time indexing back into the string. We've also updated ReadStream and WriteStream to offer a better path for UnicodeString, since the idea is that a UnicodeString would one day be a drop-in replacement for String.

In general, we don't feel maintaining constant-time indexing is critical. However, because the existing algorithms in this product expect strings to be constant-time indexed, we're going to do our best to maintain it. At the end of the day, we are more interested in correctness and in helping the user toward that end in what is a very complex area.

- Views (a concept borrowed from Swift)

A Unicode view in VAST is a readable, positionable, bidirectional stream that provides a particular interpretation of the elements in a UnicodeString. The different views are UnicodeScalarView, GraphemeView, Utf8View, Utf16View, and Utf32View.

As discussed, even the concept of how developers want to work with "characters" is not always consistent. We have chosen the basic accessible unit of the UnicodeString to be a Grapheme (backed by an extended grapheme cluster) and that should be thought of as VAST's Unicode "character". However, sometimes a user might want to view the UnicodeString as a collection of UnicodeScalars and be able to efficiently work with those.

Our UnicodeScalarView offers this and is available to both a Grapheme and UnicodeString. A user might want to get the UnicodeString, Grapheme or UnicodeScalar in terms of UTF-8, UTF-16, or UTF-32 encoded bytes, and our Utf8View, Utf16View and Utf32View offer that. And, of course, our UnicodeString has a Grapheme stream that gives linear performance over the contents of the UnicodeString and is the analog to asking a standard String for a #readStream.

All views keep bookmarks into the internal representation of a UnicodeString, so they can pick up exactly where they left off in a call such as #next. The position in the stream is expressed in the view's basic unit. For the GraphemeView, these are Grapheme-based positions. For a UnicodeScalarView, these are UnicodeScalar-based positions. For any of the UTF views, each position is a byte-based position.

Views will always have a consistent view of the UnicodeString, even if code is making modifications to the UnicodeString during the usage of the view. This will be discussed in more detail below, but in short, a view is augmented by our copy-on-write feature for UnicodeStrings.

Unlike our standard streams, views have the ability to get the previous element and stream backwards. This is really powerful, especially considering the complexity of the encoding under the hood.
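Bookmarks, unit-based positions and backward streaming can be sketched together in Python as a view over UTF-8 bytes. The class `Utf8ScalarView` and its methods are hypothetical and only model the behavior described for VAST views. Stepping backwards works because UTF-8 continuation bytes always have the bit pattern 10xxxxxx, so the start of the previous scalar can be found without scanning from the beginning.

```python
class Utf8ScalarView:
    """Hypothetical bidirectional view over UTF-8 bytes.

    The public position counts scalars; the view also bookmarks the byte
    offset, so next/previous never rescan from the start of the data."""

    def __init__(self, data):
        # Python bytes are immutable, so the view cannot see later edits;
        # this stands in for the copy-on-write sharing the post describes.
        self.data = bytes(data)
        self.offset = 0    # byte bookmark into self.data
        self.position = 0  # position in scalars

    def at_end(self):
        return self.offset >= len(self.data)

    def next(self):
        lead = self.data[self.offset]
        n = 1 if lead < 0x80 else 2 if lead < 0xE0 else 3 if lead < 0xF0 else 4
        ch = self.data[self.offset:self.offset + n].decode("utf-8")
        self.offset += n
        self.position += 1
        return ch

    def previous(self):
        if self.offset == 0:
            raise IndexError("already at the start")
        start = self.offset - 1
        # Continuation bytes look like 10xxxxxx; walk back to the lead byte.
        while self.data[start] & 0xC0 == 0x80:
            start -= 1
        ch = self.data[start:self.offset].decode("utf-8")
        self.offset = start
        self.position -= 1
        return ch

    def bookmark(self):
        return (self.position, self.offset)

    def restore(self, mark):
        # Constant time: a saved position already knows its byte offset.
        self.position, self.offset = mark

view = Utf8ScalarView("a\u00f1\u20ac\U0001F600".encode("utf-8"))  # 'añ€😀'
view.next()
view.next()
mark = view.bookmark()           # (2, 3): 2 scalars in, 3 bytes in
print(view.next(), view.next())  # € 😀
print(view.previous())           # 😀
view.restore(mark)
print(view.next())               # €
```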

Optimizations

- Copy-On-Write

This feature has already been implemented, and we're very excited about it. It offers the potential for huge memory savings. Every UnicodeString uses copy-on-write semantics, and this is implemented in the virtual machine. Copy-on-write allows multiple UnicodeStrings to share the same underlying storage until a write occurs. If a write is about to occur and the UnicodeString is sharing its storage, it first makes a copy of the storage and performs the write on that copy. At this point, that particular UnicodeString has its own unique storage, so it has no further need to make copies unless another UnicodeString starts sharing its storage. We have very fast write barriers in the VM that detect where writes occur and whether action needs to be taken.

Even if only a substring copy is being made, the storage can still be shared and we use special string slice objects to keep track of the offsets into the shared storage. But this all happens behind the scenes, so the user just works with UnicodeStrings and doesn't care if its internals reflect a slice into other shared storage. As an example of the kinds of savings we're talking about, if you made 1000 copies of a UnicodeString that was 100MB in size...it would cost you an additional 32KB of memory on 64-bit.
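The sharing scheme can be modelled in a few lines of Python. Everything here (`SharedStorage`, `CowString`, the `sharers` counter) is a hypothetical sketch of copy-on-write with slices, not how the VAST VM implements it: the VM uses write barriers, whereas this sketch simply checks before each write, and it never decrements the count when a sharer is garbage collected.

```python
class SharedStorage:
    """Backing buffer plus a count of how many strings currently share it."""
    def __init__(self, data):
        self.data = data
        self.sharers = 1

class CowString:
    """Hypothetical copy-on-write string: copies and substrings share storage
    (as a slice: offset + length) until one of them is written to."""

    def __init__(self, storage, start, length):
        self.storage, self.start, self.length = storage, start, length

    @classmethod
    def from_text(cls, text):
        raw = bytearray(text.encode("utf-8"))
        return cls(SharedStorage(raw), 0, len(raw))

    def copy(self):
        return self.slice(0, self.length)

    def slice(self, start, length):
        # No bytes are copied: the new string is a window into shared storage.
        self.storage.sharers += 1
        return CowString(self.storage, self.start + start, length)

    def _ensure_unique(self):
        # Copy only if someone else shares the storage, then stop sharing.
        if self.storage.sharers > 1:
            self.storage.sharers -= 1
            chunk = self.storage.data[self.start:self.start + self.length]
            self.storage, self.start = SharedStorage(bytearray(chunk)), 0

    def put_byte(self, index, value):
        # Byte-level write, kept simple for the sketch (fine for ASCII).
        self._ensure_unique()
        self.storage.data[self.start + index] = value

    def text(self):
        return bytes(self.storage.data[self.start:self.start + self.length]).decode("utf-8")

original = CowString.from_text("Smalltalk")
copies = [original.copy() for _ in range(1000)]
print(all(c.storage is original.storage for c in copies))  # True: one buffer

sub = original.slice(5, 4)          # 'talk', still sharing
sub.put_byte(0, ord("T"))           # first write triggers a private copy
print(sub.text(), original.text())  # Talk Smalltalk
```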

As hinted at earlier, views also use this mechanism when initialized on a UnicodeString. The UnicodeString is informed of this and marks its storage as shared. Now a consistent view of the original string can be guaranteed even if that string is later modified while it's being viewed.

- ASCII-Optimization

The reality is that many strings will be composed of only ASCII characters. Our internal storage is currently UTF-8, so if we can detect that all characters are in the ASCII range (ASCII is a subset of UTF-8), then we can mark the ASCII-flag in the object and begin to use byte-index optimized algorithms to achieve features like constant-time indexing.
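A minimal Python sketch of the ASCII fast path, assuming UTF-8 storage as in the post (the class `Utf8Text` is hypothetical). It relies on a property of UTF-8: bytes below 0x80 only ever encode ASCII characters, so if every byte is below 0x80, byte index i is character index i.

```python
class Utf8Text:
    """Hypothetical UTF-8 backed text with an 'is ASCII' flag computed once."""

    def __init__(self, text):
        self.data = text.encode("utf-8")
        # In UTF-8, bytes below 0x80 only ever encode ASCII characters.
        self.is_ascii = self.data.isascii()  # bytes.isascii(): Python 3.7+

    def at(self, index):
        if self.is_ascii:
            # One byte per character: direct O(1) byte indexing.
            return chr(self.data[index])
        # Otherwise fall back to a linear decode (O(n) in this sketch).
        return self.data.decode("utf-8")[index]

plain = Utf8Text("Smalltalk")
accented = Utf8Text("na\u00efve")  # 'naïve'
print(plain.is_ascii, plain.at(5))        # True t
print(accented.is_ascii, accented.at(2))  # False ï
```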

- Canonical Form

Currently, we use special algorithms to quickly determine whether a string and/or an argument string are in a common normalized form. If so, we don't need to perform any normalization to ensure certain operations are correct. In the future, we plan to do this check ahead of time and set a special flag indicating that the string is already in the form we need.

- Small Strings

We are looking at inlining up to 7 bytes of encoded Unicode directly into the slot that would otherwise reference a storage object. This is very similar to how a SmallInteger is not a real object, but a tagged value placed into an object slot. It would save memory for each small UnicodeString and give faster access to its contents.
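To illustrate the idea, here is a hypothetical 64-bit layout modelled in Python: the low 3 bits hold a tag, the next 5 bits the length, and the upper 56 bits the up-to-7 UTF-8 bytes. The tag value and bit layout are assumptions for this sketch, not VAST's actual encoding.

```python
TAG_BITS = 3
SMALL_TAG = 0b111  # hypothetical tag meaning "inline small string"
MAX_INLINE = 7     # 7 bytes = 56 bits, leaving 8 bits for tag + length

def pack_small(text):
    """Pack up to 7 UTF-8 bytes into one 64-bit word, or return None."""
    data = text.encode("utf-8")
    if len(data) > MAX_INLINE:
        return None  # too long: would need a real storage object
    payload = int.from_bytes(data, "little")
    word = (payload << 8) | (len(data) << TAG_BITS) | SMALL_TAG
    assert word < 2 ** 64  # always fits in one 64-bit slot
    return word

def unpack_small(word):
    assert word & 0b111 == SMALL_TAG
    length = (word >> TAG_BITS) & 0b11111  # bits 3..7
    payload = word >> 8                    # bits 8..63
    return payload.to_bytes(length, "little").decode("utf-8")

w = pack_small("a\u00f1\u20ac")  # 'añ€' is 1 + 2 + 3 = 6 UTF-8 bytes
print(unpack_small(w))           # añ€
print(pack_small("Smalltalk"))   # None: 9 bytes do not fit
```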

Beyond this, we have some follow-on work that we'll be integrating into VAST Platform 2022, namely support for literals, an upgraded Scintilla editor with UTF-8 encoding by default, File System APIs, and Windows Wide APIs.

I realize this was a huge amount of information to digest, so thank you for reading it. That said, there's more to come!

We look forward to showing our customers and the community these new features during a live webinar in the coming months!

-Seth

Gabriel Cotelli

Joachim Tuchel

Seth Berman

Philippe Marschall

Seth Berman

Greetings Philippe,

Thanks for these questions and good to hear from you.

UnicodeString

"Someone has been busy :-)"

Always:)

"There is risk and opportunity here"

Agreed. Given the current state of Unicode in the product, I concluded it was mostly opportunity.

"I assume #size would answer the number of graphemes."

Indeed, it does. And that size is cached, since it must be computed. A UnicodeString also has a capacity, or 'usable size'. This is mostly managed internally to give good performance to write APIs like #addAll:, #replaceFrom:to:with:, #at:put: and so on, given variable-length elements.

"As most external systems (XSD, RDMS, ...) will likely use code points or worse "Unicode code units" users will have to remember to use the correct selector."

Good points. Many tokenization algorithms might also be sensitive to this as well and make code point iteration the better choice.
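To make the mismatch concrete, this Python sketch (illustrative only; `sizes` is a hypothetical helper) measures one short string in the units different systems tend to count. A grapheme-based #size would match none of them for text like this.

```python
def sizes(text):
    """Length of the same text in the units different systems count."""
    return {
        "code points": len(text),  # Python's len() counts code points
        "UTF-16 code units": len(text.encode("utf-16-le")) // 2,
        "UTF-8 bytes": len(text.encode("utf-8")),
    }

sample = "e\u0301\U0001F600"  # é (e + combining acute) followed by 😀
print(sizes(sample))
# A user sees 2 characters; the counts are 3, 4 and 7 respectively.
```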

Views

"How are they different from encoding and decoding support? Is this orthogonal to encoding and decoding support?"

Decoding/encoding happens under the hood in most views. The internal representation of the actual data is UTF-8, and from there it must be transformed into a stream of graphemes, Unicode scalars, UTF-8 (easy), UTF-16, or UTF-32. We have other methods to convert various encoded forms to a UnicodeString, but views are not that method. Views can be treated as positionable read streams, so the #next/#atEnd APIs apply. What is a little different is that they are also bidirectional, so they can stream in reverse. Views can also be treated as a read-only collection interface, so #do:, #collect:, #select:, #inject:into: and so on are available. #size, #contents, and various ranged copies are all optimized in the VM. Due to copy-on-write, views remain consistent no matter what happens to the UnicodeString later. In that sense, views are immutable.

"Did you consider making strings immutable? If so, what were some of the considerations? Was there just too much code that expects strings to be mutable?"

One of the main objectives was to create a drop-in replacement for String/Character. In that respect, immutability could not practically be enforced. As mentioned, views can capture immutability and expose just the part of the collection interface that is appropriate for that constraint.

Additional Information

Certainly, there is a whole other list of challenges regarding coexistence with String and Character, but they are not as interesting to hear about, and my initial post in this thread was getting rather long. These challenges are not unique to us, and a lot of preparation for this task went into researching the lessons learned from various languages that have been augmented with Unicode, such as Delphi and Python 2. What we will not be doing is a Python 3-like transition where our existing String and Character suddenly become Unicode. That would be a disaster in VAST on so many levels.

I do not know if the choice of making the basic unit an extended grapheme cluster was bold or not. What bothered me most about any other representation was how the Collection APIs could just fall apart on you. It reminded me of what might happen if one viewed a collection of Integers as a bunch of indexable bytes. Sure, if all the integer values are < 256, this will work out seamlessly for everyone. Until it doesn't. So a 'byte' is probably not the appropriate way to canonically view a collection of Integers. Likewise, when working with the Collection API, it just did not seem appropriate to force users to work with only part of what they probably consider a character to be. That would certainly create a lot more work for them to use the Collection API appropriately and correctly. There will always be cases that call for a different representation of a UnicodeString, which is why we created performant views.

Many thanks for your questions, Philippe. I look forward to hopefully seeing you at a future ESUG event.

- Seth

Philippe Marschall


Seth Berman

"Since all the optimizations went into this I wonder if at one point users will start asking for CP-1252 views or similar for efficient access and then you'll have to maintain two encoding and decoding stacks."

- From my standpoint, if customers continue to invest in the product and ask for such things, then we will be happy to do it. Perhaps closer to your meaning, from an implementation point of view: I haven't talked much about the fact that the Unicode algorithms are implemented almost exclusively in Rust. Rust has genuinely nice native Unicode support, modelled appropriately for a systems-level programming language, and a super-trim runtime that we link in. We make use of various Rust 'crates' to get the functionality we need, and my sincere goal is to give back to that community, as we did with Dart. Regarding your comment, I would take a strong look at crates like 'encoding_rs' to provide that kind of functionality for our customers.

"I believe going against established consensus and conventional wisdom among 20+ year old programming languages is bold."

- That sounds ominous :) But I understand your meaning, and it is undeniably something that had to be thought about. We find ourselves in the unusual position of being a 20+ year old programming language that currently has near-zero formal support for Unicode and is only now implementing it. There is a whole era of languages that had to make those decisions long ago and chose things like UCS-2 as their internal representation, with indexing decisions based on that. As time went on and lessons were learned about the inadequacies of UCS-2, compatibility was likely a top goal, and UTF-16 entered the picture. Their indexing strategy was probably set in stone at that point. In the modern era, more recent languages like Rust, Go, Julia, and Swift (only as of version 5, due to Objective-C baggage) chose UTF-8 internal representations. Some that don't, like Dart, have their hands tied because of JavaScript. There is a growing departure from placing so much importance on constant-time indexing. We're seeing more complexity in the underlying structure of Unicode 'characters', like emoji, which undoubtedly puts more strain on code point based implementations. So, in general, I think consensus and wisdom in this area are still in motion, not established. But to be fair, you said established consensus/conventional wisdom among 20+ year old languages. To that I would say that those eras of languages are not our target for this new support. And I would wonder: if those languages had both the body of knowledge and the Unicode standard as they exist today, would they still make the same choices?

I think you are right: a grapheme-cluster based UnicodeString has the potential not to do what everybody expects, from either a functional or a performance point of view. We will work on optimizing the fast cases, but in the end, it's not a byte string. Mostly, though, I believe the trade-offs are favorable. We can abstract away normalization and character boundaries, which I believe will be an ultimate win in this environment. I certainly don't believe in one-size-fits-all approaches. For example, systems-level programming languages probably shouldn't use grapheme-cluster indexing by default. It's not that way in Rust, and I wouldn't expect it to be.

Certainly, given a project of this magnitude, quite a few of the decisions that have been discussed are going to need adjustment (or abandonment) along the way.

This is a good thought exercise, and I very much appreciate it, Philippe. I certainly respect your knowledge in this area and many others. I've tried to elaborate as much as possible, even though I know some things have been obvious to you. I hope you don't mind; I've done it for the benefit of the group, as I'm already being told people are getting a great deal out of this.

- Seth

Seth Berman

Richard Sargent

Adriaan van Os

'Smalltalk' asUnicodeString = 'Smalltalk' --> true

'Smalltalk' = 'Smalltalk' asUnicodeString --> false

'Smalltalk' asUnicodeString sameAs: 'Smalltalk' asUnicodeString --> true

'Smalltalk' asUnicodeString sameAs: 'Smalltalk' --> true

'Smalltalk' sameAs: 'Smalltalk' asUnicodeString --> true (!)

'Smalltalk' asUnicodeString first = 'Smalltalk' asUnicodeString first --> true

'Smalltalk' asUnicodeString first = 'Smalltalk' first --> true

'Smalltalk' first = 'Smalltalk' asUnicodeString first --> false

'Smalltalk' asUnicodeString first sameAs: 'Smalltalk' asUnicodeString first --> true

'Smalltalk' asUnicodeString first sameAs: 'Smalltalk' first --> true

'Smalltalk' first sameAs: 'Smalltalk' asUnicodeString first --> false

'Smalltalk' asUnicodeString includesSubstring: 'Smalltalk' asUnicodeString --> true

'Smalltalk' asUnicodeString includesSubstring: 'Smalltalk' --> true

'Smalltalk' includesSubstring: 'Smalltalk' asUnicodeString --> false
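One plausible reading of the asymmetric = results above is that the existing String/Character comparisons answer false for any argument of a different class, while the UnicodeString/Grapheme side converts its argument first. The Python model below assumes exactly that (all classes are hypothetical, not VAST code). Python's usual remedy is to return `NotImplemented` so the other operand gets a chance to answer; in Smalltalk, the analogous remedy would be some form of double dispatch.

```python
class LegacyString(str):
    """Equality that answers False for anything not of its own class."""
    def __eq__(self, other):
        if type(other) is not type(self):
            return False  # the other operand never gets a say
        return str.__eq__(self, other)
    __hash__ = str.__hash__

class FixedLegacyString(LegacyString):
    """Same, but defers to the other operand instead of answering False."""
    def __eq__(self, other):
        if type(other) is not type(self):
            return NotImplemented  # Python then tries other.__eq__(self)
        return str.__eq__(self, other)
    __hash__ = str.__hash__

class UniString:
    """Equality that converts plain/legacy strings before comparing."""
    def __init__(self, text):
        self.text = str(text)
    def __eq__(self, other):
        if isinstance(other, UniString):
            return self.text == other.text
        if isinstance(other, str):
            return self.text == str(other)
        return NotImplemented
    def __hash__(self):
        return hash(self.text)

print(UniString("Smalltalk") == LegacyString("Smalltalk"))       # True
print(LegacyString("Smalltalk") == UniString("Smalltalk"))       # False
print(FixedLegacyString("Smalltalk") == UniString("Smalltalk"))  # True
```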