Need help printing the exact Posit value


A Avinash

Mar 6, 2023, 1:34:37 AM

to Unum Computing

Hi,

I'm currently trying to compare the results of an algorithm using various datatypes. I'm using C++ and Stillwater Universal library for posits.

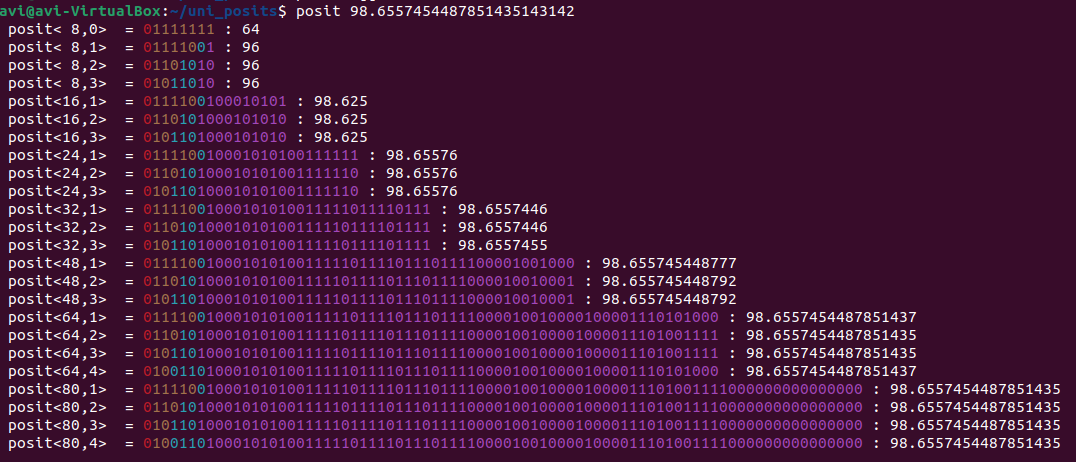

When I print the values, they show only 4 to 5 decimal digits, so I used the setprecision method with an argument of 25. The result doesn't seem to be correct, however, as the posit tool gives a different number.

Is there a way to print the exact value of the posit?

Thanks

A Avinash

A Avinash

Mar 6, 2023, 1:36:36 AM

to Unum Computing

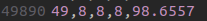

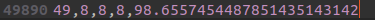

Before setprecision

Before setprecision After setprecision

After setprecision

A Avinash

Mar 6, 2023, 1:45:27 AM

to Unum Computing

The posit I used was <64,3>

Theodore Omtzigt

Mar 6, 2023, 9:52:52 AM

to Unum Computing

A Avinash:

We have not yet implemented Grisu3 for posits, so the current limit is governed by the standard library double conversion. This has been on the to-do list for years, but we haven't been able to find an open-source developer who has been able to implement it. There have been several tries, and the fixpnt will print to arbitrary precision, but it is way too slow. Would you be interested in implementing decimal conversion for posits?

John Gustafson

Mar 6, 2023, 5:22:04 PM

to Theodore Omtzigt, Unum Computing

There is a feature of posits that I hope someone will exploit in any formatting software: The need to express the power-of-ten exponent takes more space just as the decimal digits that are significant take less space, because of the tapered relative accuracy. The number of significand digits need not exceed the number needed to convert to any posit without ambiguity. This is a project almost worthy of a technical paper, if anyone wants to take it on. Let me clarify with some examples:

Suppose you are using 16-bit standard posits (2 for the exponent size). I think 10 characters may suffice for all values to be expressed in human-readable form. Which is pretty cool and very compact. Here's what I mean: maxPos is 72,057,594,037,927,936, but it only has one significant digit so you can write it

+7.~E+16

The eight characters express the sign, the decimal point (probably don't need that), the "~" to express that the notation is inexact and there are more digits after the one shown, the E or e symbol to demarcate the exponent, the sign of the exponent, and two decimals to express the exponent. The "~" is a unum computing concept; I prefer to write a raised ellipsis "⋯" instead after the last significant digit, but few people know how to get to that from a standard keyboard so "~" makes more sense.

When the number is in the "sweet spot" with more significant digits, you need as many as five decimals to express a 16-bit posit (and this is shown in the Posit Standard, along with the minimum for 32-bit and 64-bit posits). So the number just barely bigger than 1 is 1 + 1/2048 = 1.00048828125, but all you need is "1.0005" to express that unambiguously for 16-bit posits. So for that number, the format would be

+1.0005~

and there is no need for an exponent field. For a number like 5/4 = 1.25 that can be expressed exactly, you would format it as

+1.25

and not need the "~" at all; I assume the three unneeded spaces would be leading blanks, but they could also be trailing blanks.

The smallest 16-bit posit, minPos = 1/maxPos, is about 1.3878E-17, but again there is only one significant digit so

+1.~E-17

is sufficient to express the posit without ambiguity. You can go all the way to the posit for 999,424 with eight characters,

+999424.

and more than that is when you need to start using scientific notation. The next-larger posit is 1,003,520, which can still be written unambiguously with ten characters because there are fewer significant digits for that larger magnitude:

+1.004~E+6

What about when the exponent requires two decimals to express? By that point, near 1E10, the posits express values 9,663,676,416 and 10,737,418,240, so you only need three significant decimal digits to express the number unambiguously, and the formatting still fits a ten-character budget:

+1.07~E+10

While expressing the exact posit value may be useful in some situations, it is mostly misleading since it makes it look like there are more significant digits than there really are. A fundamental principle of unum computing is to express what you don't know about a number. If we could get people used to the "~" annotation, it would go a long way to avoid confusion about why 0.1 + 0.2 isn't exactly 0.3 in binary formats for real numbers.

I envision a compiler that gives an error warning if you try to compile

posit16 x; x = 0.1;

just as it would complain if you tried to assign 0.1 to an integer. At least, I hope it would complain and not just silently truncate the 0.1 value to 0. The compiler would accept, for example,

posit16 x; x = 0.10000~;

since the closest 16-bit posit to 0.1 is 0.100006103515625. Now you have a way of making explicit the inexactness of translating (most) decimals into binary.

John


MitchAlsup

Mar 6, 2023, 9:12:35 PM

to Unum Computing

Cute!

But you should allow:

posit16 x;

x = 0.1~;

to mean the closest representable number, without requiring trailing 0000s


Nathan Waivio

Mar 8, 2023, 7:20:42 PM

to Unum Computing

Hi All,

For my Haskell implementation of the 3.2 Standard I get:

```
ghci> t = 98.6557454487851435143412 :: Posit64
ghci> displayBinary t
"0b0101101000101010011111011110111011110000100100001000011101001111"
ghci> displayRational t
"444305978441107279 % 4503599627370496"
ghci> displayDecimal t
"98.6557454487851435143141998196369968354701995849609375"
ghci> t
98.65574544878514351
```

For the default case at the end, I just made up code like this for the standard pretty printed output:

```
-- decimal Precision
decimalPrec :: Int
decimalPrec = fromIntegral $ 2 * (nBytes @es) + 1
```

This sets the decimal precision, because the Standard didn't really have anything defined.

The `displayDecimal` version uses a Haskell library that will print the Rational number as a decimal until it detects a repetition of the rational decimal representation.

Nathan.
