Recommended stress time

Sergey Matveev

If I run stressapptest without any parameters, it finishes after 20 seconds.

But if I want to test a modern server, to make it very hot and hang it if it has defective main components such as the processor, memory, or buses, 20 seconds is not enough even to warm it up.

Of course 12 or more hours would be quite enough, I think, but that is expensive :-).

Can you recommend a time range long enough to really heat up a server, for example one with 2 Opteron processors and 16 GB of RAM, or one with 4 Opteron processors and 64 GB of RAM?

Thanks in advance!

--

Happy hacking, Sergey Matveev

FSF Associate member #5968 | FSFE Fellow #1390

Nick Sanders

so it's probably important to run longer than that, maybe 20 minutes total. Beyond that, it's a pretty straightforward tradeoff: spending more time lets you discover more errors.

For maximum stress, you can change the number of memory access threads with "-m X" and run CPU stress threads with "-C X". Depending on your compute-to-memory-bandwidth ratio, the exact ratio of CPU to memory threads may differ. "-W" may help your machine become hotter, although it's not effective across all CPUs.

You can try increasing the number of CPU threads and decreasing the number of memory threads until the memory bandwidth used drops off, or trying different configurations until you reach a maximum temperature. You can also modify os.h to use AdlerMemcpyAsm instead of AdlerMemcpyWarmC, and use the "-W" flag, if you have SSE2.

Thanks,

-Nick
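As a rough illustration of the tuning loop described above, the Python sketch below only builds stressapptest command lines that shift threads from memory copy ("-m") to CPU stress ("-C"), keeping "-W" on and using a 20-minute (1200 s) run. The helper names, the total of 24 threads, the step of 4, and the log file names are assumptions for illustration, not recommendations from the stressapptest authors. It prints the commands instead of running them.

```python
import shlex


def build_command(seconds, mem_threads, cpu_threads, warm_copy=True, logfile=None):
    """Return a stressapptest argv list for one thread configuration."""
    argv = ["stressapptest", "-s", str(seconds), "-m", str(mem_threads)]
    if cpu_threads > 0:
        # "-C X" starts X CPU stress threads; omit the flag when none are wanted.
        argv += ["-C", str(cpu_threads)]
    if warm_copy:
        # "-W" selects the more CPU-stressful memory copy.
        argv.append("-W")
    if logfile:
        argv += ["-l", logfile]
    return argv


def sweep(total_threads, seconds=1200, step=4):
    """Yield commands that trade memory threads for CPU threads, step by step."""
    for cpu_threads in range(0, total_threads, step):
        mem_threads = total_threads - cpu_threads
        # Hypothetical log file naming so each run can be compared afterwards.
        logfile = f"sat_m{mem_threads}_C{cpu_threads}.log"
        yield build_command(seconds, mem_threads, cpu_threads, logfile=logfile)


if __name__ == "__main__":
    # total_threads=24 is an assumed value; use your machine's CPU count.
    for argv in sweep(total_threads=24):
        print(shlex.join(argv))  # shlex.join requires Python 3.8+
```

Running each printed command in turn and noting the reported memory copy rate (or the machine's temperature) for each configuration gives the data needed to pick the hottest or highest-bandwidth setting.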

Yvan Tian

Nick Sanders

How can I tell when "the memory bandwidth used drops off"?

--

---

You received this message because you are subscribed to the Google Groups "stressapptest-discuss" group.

To unsubscribe from this group and stop receiving emails from it, send an email to stressapptest-di...@googlegroups.com.

For more options, visit https://groups.google.com/d/optout.

Yvan Tian

[root@localhost stressapptest_v18]# stressapptest -M 10636.94843 -m 48 -i 48 -c 48 -W --read-block-size 1024000 --write-block-size 1024000 --read-threshold 100000 --write-threshold 100000 --segment-size 10240000000 --blocks-per-segment 32 -s 900 -d /dev/sdb1 -l runlog

2018/11/09-23:55:46(CST) Log: Commandline - stressapptest -M 10636.94843 -m 48 -i 48 -c 48 -W --read-block-size 1024000 --write-block-size 1024000 --read-threshold 100000 --write-threshold 100000 --segment-size 10240000000 --blocks-per-segment 32 -s 900 -d /dev/sdb1 -l runlog

2018/11/09-23:55:46(CST) Stats: SAT revision 1.0.9_autoconf, 64 bit binary

2018/11/09-23:55:46(CST) Log: root @ localhost.localdomain on Fri Nov 9 18:45:35 CST 2018 from open source release

2018/11/09-23:55:46(CST) Log: 1 nodes, 24 cpus.

2018/11/09-23:55:46(CST) Log: Prefer plain malloc memory allocation.

2018/11/09-23:55:46(CST) Log: Using mmap() allocation at 0x7f70e0602000.

2018/11/09-23:55:46(CST) Stats: Starting SAT, 10636M, 900 seconds

2018/11/09-23:55:48(CST) Log: region number 17 exceeds region count 1

2018/11/09-23:55:48(CST) Log: Region mask: 0x1

2018/11/09-23:55:59(CST) Log: Seconds remaining: 890

2018/11/09-23:56:09(CST) Log: Seconds remaining: 880

2018/11/09-23:56:19(CST) Log: Seconds remaining: 870

2018/11/09-23:56:29(CST) Log: Seconds remaining: 860

2018/11/09-23:56:39(CST) Log: Seconds remaining: 850

2018/11/09-23:56:49(CST) Log: Seconds remaining: 840

2018/11/09-23:56:59(CST) Log: Seconds remaining: 830

2018/11/09-23:57:09(CST) Log: Seconds remaining: 820

2018/11/09-23:57:19(CST) Log: Seconds remaining: 810

2018/11/09-23:57:29(CST) Log: Seconds remaining: 800

2018/11/09-23:57:39(CST) Log: Seconds remaining: 790

2018/11/09-23:57:49(CST) Log: Seconds remaining: 780

2018/11/09-23:57:59(CST) Log: Seconds remaining: 770

2018/11/09-23:58:09(CST) Log: Seconds remaining: 760

2018/11/09-23:58:19(CST) Log: Seconds remaining: 750

2018/11/09-23:58:29(CST) Log: Seconds remaining: 740

2018/11/09-23:58:39(CST) Log: Seconds remaining: 730

2018/11/09-23:58:49(CST) Log: Seconds remaining: 720

2018/11/09-23:58:59(CST) Log: Seconds remaining: 710

2018/11/09-23:59:09(CST) Log: Seconds remaining: 700

2018/11/09-23:59:19(CST) Log: Seconds remaining: 690

2018/11/09-23:59:29(CST) Log: Seconds remaining: 680

2018/11/09-23:59:39(CST) Log: Seconds remaining: 670

2018/11/09-23:59:49(CST) Log: Seconds remaining: 660

2018/11/09-23:59:59(CST) Log: Seconds remaining: 650

2018/11/10-00:00:09(CST) Log: Seconds remaining: 640

2018/11/10-00:00:19(CST) Log: Seconds remaining: 630

2018/11/10-00:00:29(CST) Log: Seconds remaining: 620

2018/11/10-00:00:39(CST) Log: Seconds remaining: 610

2018/11/10-00:00:49(CST) Log: Seconds remaining: 600

2018/11/10-00:00:59(CST) Log: Seconds remaining: 590

2018/11/10-00:01:04(CST) Log: Read took 135524 us which is longer than threshold 100000 us on disk /dev/sdb1 (thread 145).

2018/11/10-00:01:04(CST) Log: Read took 169463 us which is longer than threshold 100000 us on disk /dev/sdb1 (thread 145).

2018/11/10-00:01:05(CST) Log: Read took 473145 us which is longer than threshold 100000 us on disk /dev/sdb1 (thread 145).

2018/11/10-00:01:05(CST) Log: Read took 144052 us which is longer than threshold 100000 us on disk /dev/sdb1 (thread 145).

2018/11/10-00:01:06(CST) Log: Read took 145652 us which is longer than threshold 100000 us on disk /dev/sdb1 (thread 145).

2018/11/10-00:01:07(CST) Log: Read took 118733 us which is longer than threshold 100000 us on disk /dev/sdb1 (thread 145).

2018/11/10-00:01:09(CST) Log: Seconds remaining: 580

2018/11/10-00:01:19(CST) Log: Seconds remaining: 570

2018/11/10-00:01:29(CST) Log: Seconds remaining: 560

2018/11/10-00:01:39(CST) Log: Seconds remaining: 550

2018/11/10-00:01:49(CST) Log: Seconds remaining: 540

2018/11/10-00:01:59(CST) Log: Seconds remaining: 530

2018/11/10-00:02:09(CST) Log: Seconds remaining: 520

2018/11/10-00:02:19(CST) Log: Seconds remaining: 510

2018/11/10-00:02:29(CST) Log: Seconds remaining: 500

2018/11/10-00:02:39(CST) Log: Seconds remaining: 490

2018/11/10-00:02:49(CST) Log: Seconds remaining: 480

2018/11/10-00:02:59(CST) Log: Seconds remaining: 470

2018/11/10-00:03:09(CST) Log: Seconds remaining: 460

2018/11/10-00:03:19(CST) Log: Seconds remaining: 450

2018/11/10-00:03:29(CST) Log: Seconds remaining: 440

2018/11/10-00:03:39(CST) Log: Seconds remaining: 430

2018/11/10-00:03:49(CST) Log: Seconds remaining: 420

2018/11/10-00:03:59(CST) Log: Seconds remaining: 410

2018/11/10-00:04:09(CST) Log: Seconds remaining: 400

2018/11/10-00:04:19(CST) Log: Seconds remaining: 390

2018/11/10-00:04:29(CST) Log: Seconds remaining: 380

2018/11/10-00:04:39(CST) Log: Seconds remaining: 370

2018/11/10-00:04:49(CST) Log: Seconds remaining: 360

2018/11/10-00:04:59(CST) Log: Seconds remaining: 350

2018/11/10-00:05:02(CST) Log: Read took 315416 us which is longer than threshold 100000 us on disk /dev/sdb1 (thread 145).

2018/11/10-00:05:09(CST) Log: Seconds remaining: 340

2018/11/10-00:05:19(CST) Log: Seconds remaining: 330

2018/11/10-00:05:29(CST) Log: Seconds remaining: 320

2018/11/10-00:05:39(CST) Log: Seconds remaining: 310

2018/11/10-00:05:49(CST) Log: Seconds remaining: 300

2018/11/10-00:05:49(CST) Log: Pausing worker threads in preparation for power spike (300 seconds remaining)

2018/11/10-00:05:59(CST) Log: Seconds remaining: 290

2018/11/10-00:06:04(CST) Log: Resuming worker threads to cause a power spike (285 seconds remaining)

2018/11/10-00:06:09(CST) Log: Seconds remaining: 280

2018/11/10-00:06:19(CST) Log: Seconds remaining: 270

2018/11/10-00:06:29(CST) Log: Seconds remaining: 260

2018/11/10-00:06:39(CST) Log: Seconds remaining: 250

2018/11/10-00:06:49(CST) Log: Seconds remaining: 240

2018/11/10-00:06:59(CST) Log: Seconds remaining: 230

2018/11/10-00:07:09(CST) Log: Seconds remaining: 220

2018/11/10-00:07:19(CST) Log: Seconds remaining: 210

2018/11/10-00:07:29(CST) Log: Seconds remaining: 200

2018/11/10-00:07:39(CST) Log: Seconds remaining: 190

2018/11/10-00:07:49(CST) Log: Seconds remaining: 180

2018/11/10-00:07:59(CST) Log: Seconds remaining: 170

2018/11/10-00:08:09(CST) Log: Seconds remaining: 160

2018/11/10-00:08:19(CST) Log: Seconds remaining: 150

2018/11/10-00:08:29(CST) Log: Seconds remaining: 140

2018/11/10-00:08:39(CST) Log: Seconds remaining: 130

2018/11/10-00:08:49(CST) Log: Seconds remaining: 120

2018/11/10-00:08:59(CST) Log: Seconds remaining: 110

2018/11/10-00:09:09(CST) Log: Seconds remaining: 100

2018/11/10-00:09:19(CST) Log: Seconds remaining: 90

2018/11/10-00:09:29(CST) Log: Seconds remaining: 80

2018/11/10-00:09:39(CST) Log: Seconds remaining: 70

2018/11/10-00:09:49(CST) Log: Seconds remaining: 60

2018/11/10-00:09:59(CST) Log: Seconds remaining: 50

2018/11/10-00:10:09(CST) Log: Seconds remaining: 40

2018/11/10-00:10:19(CST) Log: Seconds remaining: 30

2018/11/10-00:10:29(CST) Log: Seconds remaining: 20

2018/11/10-00:10:39(CST) Log: Seconds remaining: 10

2018/11/10-00:10:50(CST) Stats: Found 0 hardware incidents

2018/11/10-00:10:50(CST) Stats: Completed: 13841693.00M in 901.17s 15359.63MB/s, with 0 hardware incidents, 0 errors

2018/11/10-00:10:50(CST) Stats: Memory Copy: 5298400.00M at 5883.14MB/s

2018/11/10-00:10:50(CST) Stats: File Copy: 0.00M at 0.00MB/s

2018/11/10-00:10:50(CST) Stats: Net Copy: 0.00M at 0.00MB/s

2018/11/10-00:10:50(CST) Stats: Data Check: 3260596.00M at 3619.53MB/s

2018/11/10-00:10:50(CST) Stats: Invert Data: 5228520.00M at 5807.38MB/s

2018/11/10-00:10:50(CST) Stats: Disk: 54177.00M at 60.18MB/s

2018/11/10-00:10:50(CST)

2018/11/10-00:10:50(CST) Status: PASS - please verify no corrected errors

2018/11/10-00:10:50(CST)
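The "Read took ... longer than threshold" lines are easy to miss among the countdown messages. The following Python sketch, which assumes the log line wording shown in the run above, summarizes them per disk. The function name and the returned tuple layout are my own choices, not part of stressapptest.

```python
import re
from collections import defaultdict

# Matches lines such as:
# "... Log: Read took 135524 us which is longer than threshold 100000 us on disk /dev/sdb1 (thread 145)."
SLOW_READ = re.compile(
    r"Read took (\d+) us which is longer than threshold (\d+) us on disk (\S+)"
)


def summarize_slow_reads(lines):
    """Return {disk: (count, worst_us, threshold_us)} for slow-read log lines."""
    latencies = defaultdict(list)
    thresholds = {}
    for line in lines:
        match = SLOW_READ.search(line)
        if match:
            took_us = int(match.group(1))
            threshold_us = int(match.group(2))
            disk = match.group(3)
            latencies[disk].append(took_us)
            thresholds[disk] = threshold_us
    return {
        disk: (len(values), max(values), thresholds[disk])
        for disk, values in latencies.items()
    }


if __name__ == "__main__":
    # Three lines copied from the run above; in practice, iterate over the log file.
    sample = [
        "2018/11/10-00:01:04(CST) Log: Read took 135524 us which is longer than threshold 100000 us on disk /dev/sdb1 (thread 145).",
        "2018/11/10-00:01:05(CST) Log: Read took 473145 us which is longer than threshold 100000 us on disk /dev/sdb1 (thread 145).",
        "2018/11/10-00:05:02(CST) Log: Read took 315416 us which is longer than threshold 100000 us on disk /dev/sdb1 (thread 145).",
    ]
    print(summarize_slow_reads(sample))
    # prints {'/dev/sdb1': (3, 473145, 100000)}
```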

#2 1800s

[root@localhost stressapptest_v18]# stressapptest -M 10636.94843 -m 48 -i 48 -c 48 -W --read-block-size 1024000 --write-block-size 1024000 --read-threshold 100000 --write-threshold 100000 --segment-size 10240000000 --blocks-per-segment 32 -s 1800 -d /dev/sdb1 -l runlog

2018/11/10-01:08:30(CST) Log: Commandline - stressapptest -M 10636.94843 -m 48 -i 48 -c 48 -W --read-block-size 1024000 --write-block-size 1024000 --read-threshold 100000 --write-threshold 100000 --segment-size 10240000000 --blocks-per-segment 32 -s 1800 -d /dev/sdb1 -l runlog

2018/11/10-01:08:30(CST) Stats: SAT revision 1.0.9_autoconf, 64 bit binary

2018/11/10-01:08:30(CST) Log: root @ localhost.localdomain on Fri Nov 9 18:45:35 CST 2018 from open source release

2018/11/10-01:08:30(CST) Log: 1 nodes, 24 cpus.

2018/11/10-01:08:30(CST) Log: Prefer plain malloc memory allocation.

2018/11/10-01:08:30(CST) Log: Using mmap() allocation at 0x7f2bfd791000.

2018/11/10-01:08:30(CST) Stats: Starting SAT, 10636M, 1800 seconds

2018/11/10-01:08:32(CST) Log: region number 32 exceeds region count 1

2018/11/10-01:08:33(CST) Log: Region mask: 0x1

2018/11/10-01:08:43(CST) Log: Seconds remaining: 1790

2018/11/10-01:08:53(CST) Log: Seconds remaining: 1780

2018/11/10-01:09:03(CST) Log: Seconds remaining: 1770

2018/11/10-01:09:13(CST) Log: Seconds remaining: 1760

2018/11/10-01:09:23(CST) Log: Seconds remaining: 1750

2018/11/10-01:09:33(CST) Log: Seconds remaining: 1740

2018/11/10-01:09:43(CST) Log: Seconds remaining: 1730

2018/11/10-01:09:53(CST) Log: Seconds remaining: 1720

2018/11/10-01:10:03(CST) Log: Seconds remaining: 1710

2018/11/10-01:10:13(CST) Log: Seconds remaining: 1700

2018/11/10-01:10:23(CST) Log: Seconds remaining: 1690

2018/11/10-01:10:33(CST) Log: Seconds remaining: 1680

2018/11/10-01:10:43(CST) Log: Seconds remaining: 1670

2018/11/10-01:10:53(CST) Log: Seconds remaining: 1660

2018/11/10-01:11:03(CST) Log: Seconds remaining: 1650

2018/11/10-01:11:13(CST) Log: Seconds remaining: 1640

2018/11/10-01:11:23(CST) Log: Seconds remaining: 1630

2018/11/10-01:11:33(CST) Log: Seconds remaining: 1620

2018/11/10-01:11:43(CST) Log: Seconds remaining: 1610

2018/11/10-01:11:53(CST) Log: Seconds remaining: 1600

2018/11/10-01:12:03(CST) Log: Seconds remaining: 1590

2018/11/10-01:12:13(CST) Log: Seconds remaining: 1580

2018/11/10-01:12:23(CST) Log: Seconds remaining: 1570

2018/11/10-01:12:33(CST) Log: Seconds remaining: 1560

2018/11/10-01:12:43(CST) Log: Seconds remaining: 1550

2018/11/10-01:12:53(CST) Log: Seconds remaining: 1540

2018/11/10-01:13:03(CST) Log: Seconds remaining: 1530

2018/11/10-01:13:13(CST) Log: Seconds remaining: 1520

2018/11/10-01:13:23(CST) Log: Seconds remaining: 1510

2018/11/10-01:13:33(CST) Log: Seconds remaining: 1500

2018/11/10-01:13:43(CST) Log: Seconds remaining: 1490

2018/11/10-01:13:53(CST) Log: Seconds remaining: 1480

2018/11/10-01:14:03(CST) Log: Seconds remaining: 1470

2018/11/10-01:14:13(CST) Log: Seconds remaining: 1460

2018/11/10-01:14:23(CST) Log: Seconds remaining: 1450

2018/11/10-01:14:33(CST) Log: Seconds remaining: 1440

2018/11/10-01:14:43(CST) Log: Seconds remaining: 1430

2018/11/10-01:14:53(CST) Log: Seconds remaining: 1420

2018/11/10-01:15:03(CST) Log: Seconds remaining: 1410

2018/11/10-01:15:13(CST) Log: Seconds remaining: 1400

2018/11/10-01:15:23(CST) Log: Seconds remaining: 1390

2018/11/10-01:15:33(CST) Log: Seconds remaining: 1380

2018/11/10-01:15:43(CST) Log: Seconds remaining: 1370

2018/11/10-01:15:53(CST) Log: Seconds remaining: 1360

2018/11/10-01:16:03(CST) Log: Seconds remaining: 1350

2018/11/10-01:16:13(CST) Log: Seconds remaining: 1340

2018/11/10-01:16:23(CST) Log: Seconds remaining: 1330

2018/11/10-01:16:33(CST) Log: Seconds remaining: 1320

2018/11/10-01:16:43(CST) Log: Seconds remaining: 1310

2018/11/10-01:16:53(CST) Log: Seconds remaining: 1300

2018/11/10-01:17:03(CST) Log: Seconds remaining: 1290

2018/11/10-01:17:13(CST) Log: Seconds remaining: 1280

2018/11/10-01:17:23(CST) Log: Seconds remaining: 1270

2018/11/10-01:17:33(CST) Log: Seconds remaining: 1260

2018/11/10-01:17:43(CST) Log: Seconds remaining: 1250

2018/11/10-01:17:53(CST) Log: Seconds remaining: 1240

2018/11/10-01:18:03(CST) Log: Seconds remaining: 1230

2018/11/10-01:18:04(CST) Log: Read took 132598 us which is longer than threshold 100000 us on disk /dev/sdb1 (thread 145).

2018/11/10-01:18:04(CST) Log: Read took 135571 us which is longer than threshold 100000 us on disk /dev/sdb1 (thread 145).

2018/11/10-01:18:04(CST) Log: Read took 138136 us which is longer than threshold 100000 us on disk /dev/sdb1 (thread 145).

2018/11/10-01:18:13(CST) Log: Seconds remaining: 1220

2018/11/10-01:18:23(CST) Log: Seconds remaining: 1210

2018/11/10-01:18:33(CST) Log: Seconds remaining: 1200

2018/11/10-01:18:33(CST) Log: Pausing worker threads in preparation for power spike (1200 seconds remaining)

2018/11/10-01:18:43(CST) Log: Seconds remaining: 1190

2018/11/10-01:18:48(CST) Log: Resuming worker threads to cause a power spike (1185 seconds remaining)

2018/11/10-01:18:53(CST) Log: Seconds remaining: 1180

2018/11/10-01:19:03(CST) Log: Seconds remaining: 1170

2018/11/10-01:19:13(CST) Log: Seconds remaining: 1160

2018/11/10-01:19:23(CST) Log: Seconds remaining: 1150

2018/11/10-01:19:33(CST) Log: Seconds remaining: 1140

2018/11/10-01:19:43(CST) Log: Seconds remaining: 1130

2018/11/10-01:19:53(CST) Log: Seconds remaining: 1120

2018/11/10-01:20:03(CST) Log: Seconds remaining: 1110

2018/11/10-01:20:13(CST) Log: Seconds remaining: 1100

2018/11/10-01:20:23(CST) Log: Seconds remaining: 1090

2018/11/10-01:20:33(CST) Log: Seconds remaining: 1080

2018/11/10-01:20:43(CST) Log: Seconds remaining: 1070

2018/11/10-01:20:53(CST) Log: Seconds remaining: 1060

2018/11/10-01:21:03(CST) Log: Seconds remaining: 1050

2018/11/10-01:21:13(CST) Log: Seconds remaining: 1040

2018/11/10-01:21:23(CST) Log: Seconds remaining: 1030

2018/11/10-01:21:33(CST) Log: Seconds remaining: 1020

2018/11/10-01:21:43(CST) Log: Seconds remaining: 1010

2018/11/10-01:21:53(CST) Log: Seconds remaining: 1000

2018/11/10-01:22:03(CST) Log: Seconds remaining: 990

2018/11/10-01:22:13(CST) Log: Seconds remaining: 980

2018/11/10-01:22:23(CST) Log: Seconds remaining: 970

2018/11/10-01:22:33(CST) Log: Seconds remaining: 960

2018/11/10-01:22:43(CST) Log: Seconds remaining: 950

2018/11/10-01:22:53(CST) Log: Seconds remaining: 940

2018/11/10-01:23:03(CST) Log: Seconds remaining: 930

2018/11/10-01:23:13(CST) Log: Seconds remaining: 920

2018/11/10-01:23:23(CST) Log: Seconds remaining: 910

2018/11/10-01:23:33(CST) Log: Seconds remaining: 900

2018/11/10-01:23:43(CST) Log: Seconds remaining: 890

2018/11/10-01:23:53(CST) Log: Seconds remaining: 880

2018/11/10-01:24:03(CST) Log: Seconds remaining: 870

2018/11/10-01:24:13(CST) Log: Seconds remaining: 860

2018/11/10-01:24:23(CST) Log: Seconds remaining: 850

2018/11/10-01:24:33(CST) Log: Seconds remaining: 840

2018/11/10-01:24:43(CST) Log: Seconds remaining: 830

2018/11/10-01:24:53(CST) Log: Seconds remaining: 820

2018/11/10-01:25:03(CST) Log: Seconds remaining: 810

2018/11/10-01:25:13(CST) Log: Seconds remaining: 800

2018/11/10-01:25:23(CST) Log: Seconds remaining: 790

2018/11/10-01:25:33(CST) Log: Seconds remaining: 780

2018/11/10-01:25:43(CST) Log: Seconds remaining: 770

2018/11/10-01:25:53(CST) Log: Seconds remaining: 760

2018/11/10-01:26:03(CST) Log: Seconds remaining: 750

2018/11/10-01:26:13(CST) Log: Seconds remaining: 740

2018/11/10-01:26:23(CST) Log: Seconds remaining: 730

2018/11/10-01:26:33(CST) Log: Seconds remaining: 720

2018/11/10-01:26:43(CST) Log: Seconds remaining: 710

2018/11/10-01:26:53(CST) Log: Seconds remaining: 700

2018/11/10-01:27:03(CST) Log: Seconds remaining: 690

2018/11/10-01:27:13(CST) Log: Seconds remaining: 680

2018/11/10-01:27:23(CST) Log: Seconds remaining: 670

2018/11/10-01:27:33(CST) Log: Seconds remaining: 660

2018/11/10-01:27:43(CST) Log: Seconds remaining: 650

2018/11/10-01:27:53(CST) Log: Seconds remaining: 640

2018/11/10-01:28:03(CST) Log: Seconds remaining: 630

2018/11/10-01:28:13(CST) Log: Seconds remaining: 620

2018/11/10-01:28:23(CST) Log: Seconds remaining: 610

2018/11/10-01:28:33(CST) Log: Seconds remaining: 600

2018/11/10-01:28:33(CST) Log: Pausing worker threads in preparation for power spike (600 seconds remaining)

2018/11/10-01:28:43(CST) Log: Seconds remaining: 590

2018/11/10-01:28:48(CST) Log: Resuming worker threads to cause a power spike (585 seconds remaining)

2018/11/10-01:28:53(CST) Log: Seconds remaining: 580

2018/11/10-01:29:03(CST) Log: Seconds remaining: 570

2018/11/10-01:29:13(CST) Log: Seconds remaining: 560

2018/11/10-01:29:23(CST) Log: Seconds remaining: 550

2018/11/10-01:29:33(CST) Log: Seconds remaining: 540

2018/11/10-01:29:43(CST) Log: Seconds remaining: 530

2018/11/10-01:29:53(CST) Log: Seconds remaining: 520

2018/11/10-01:30:03(CST) Log: Seconds remaining: 510

2018/11/10-01:30:13(CST) Log: Seconds remaining: 500

2018/11/10-01:30:23(CST) Log: Seconds remaining: 490

2018/11/10-01:30:33(CST) Log: Seconds remaining: 480

2018/11/10-01:30:43(CST) Log: Seconds remaining: 470

2018/11/10-01:30:53(CST) Log: Seconds remaining: 460

2018/11/10-01:31:03(CST) Log: Seconds remaining: 450

2018/11/10-01:31:13(CST) Log: Seconds remaining: 440

2018/11/10-01:31:23(CST) Log: Seconds remaining: 430

2018/11/10-01:31:33(CST) Log: Seconds remaining: 420

2018/11/10-01:31:43(CST) Log: Seconds remaining: 410

2018/11/10-01:31:53(CST) Log: Seconds remaining: 400

2018/11/10-01:32:03(CST) Log: Seconds remaining: 390

2018/11/10-01:32:13(CST) Log: Seconds remaining: 380

2018/11/10-01:32:23(CST) Log: Seconds remaining: 370

2018/11/10-01:32:33(CST) Log: Seconds remaining: 360

2018/11/10-01:32:43(CST) Log: Seconds remaining: 350

2018/11/10-01:32:53(CST) Log: Seconds remaining: 340

2018/11/10-01:33:03(CST) Log: Seconds remaining: 330

2018/11/10-01:33:13(CST) Log: Seconds remaining: 320

2018/11/10-01:33:23(CST) Log: Seconds remaining: 310

2018/11/10-01:33:33(CST) Log: Seconds remaining: 300

2018/11/10-01:33:43(CST) Log: Seconds remaining: 290

2018/11/10-01:33:53(CST) Log: Seconds remaining: 280

2018/11/10-01:34:03(CST) Log: Seconds remaining: 270

2018/11/10-01:34:13(CST) Log: Seconds remaining: 260

2018/11/10-01:34:23(CST) Log: Seconds remaining: 250

2018/11/10-01:34:33(CST) Log: Seconds remaining: 240

2018/11/10-01:34:43(CST) Log: Seconds remaining: 230

2018/11/10-01:34:53(CST) Log: Seconds remaining: 220

2018/11/10-01:35:03(CST) Log: Seconds remaining: 210

2018/11/10-01:35:13(CST) Log: Seconds remaining: 200

2018/11/10-01:35:23(CST) Log: Seconds remaining: 190

2018/11/10-01:35:33(CST) Log: Seconds remaining: 180

2018/11/10-01:35:43(CST) Log: Seconds remaining: 170

2018/11/10-01:35:53(CST) Log: Seconds remaining: 160

2018/11/10-01:36:03(CST) Log: Seconds remaining: 150

2018/11/10-01:36:13(CST) Log: Seconds remaining: 140

2018/11/10-01:36:23(CST) Log: Seconds remaining: 130

2018/11/10-01:36:33(CST) Log: Seconds remaining: 120

2018/11/10-01:36:43(CST) Log: Seconds remaining: 110

2018/11/10-01:36:53(CST) Log: Seconds remaining: 100

2018/11/10-01:37:03(CST) Log: Seconds remaining: 90

2018/11/10-01:37:13(CST) Log: Seconds remaining: 80

2018/11/10-01:37:23(CST) Log: Seconds remaining: 70

2018/11/10-01:37:33(CST) Log: Seconds remaining: 60

2018/11/10-01:37:43(CST) Log: Seconds remaining: 50

2018/11/10-01:37:53(CST) Log: Seconds remaining: 40

2018/11/10-01:38:03(CST) Log: Seconds remaining: 30

2018/11/10-01:38:13(CST) Log: Seconds remaining: 20

2018/11/10-01:38:23(CST) Log: Seconds remaining: 10

2018/11/10-01:38:33(CST) Stats: Found 0 hardware incidents

2018/11/10-01:38:33(CST) Stats: Completed: 27711902.00M in 1800.06s 15395.02MB/s, with 0 hardware incidents, 0 errors

2018/11/10-01:38:33(CST) Stats: Memory Copy: 10569206.00M at 5872.68MB/s

2018/11/10-01:38:33(CST) Stats: File Copy: 0.00M at 0.00MB/s

2018/11/10-01:38:33(CST) Stats: Net Copy: 0.00M at 0.00MB/s

2018/11/10-01:38:33(CST) Stats: Data Check: 6533640.00M at 3629.85MB/s

2018/11/10-01:38:33(CST) Stats: Invert Data: 10500560.00M at 5835.30MB/s

2018/11/10-01:38:33(CST) Stats: Disk: 108494.00M at 60.29MB/s

2018/11/10-01:38:33(CST)

2018/11/10-01:38:33(CST) Status: PASS - please verify no corrected errors

2018/11/10-01:38:33(CST)
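One way to see whether the memory bandwidth "drops off" is to compare the per-workload rates in the final "Stats:" lines across runs. The Python sketch below assumes the log format shown in the two runs above. The function names and the 5% tolerance are illustrative assumptions, not values suggested by stressapptest.

```python
import re

# Matches lines such as "... Stats: Memory Copy: 5298400.00M at 5883.14MB/s".
# The "Completed:" summary line uses a different layout and is not matched.
STAT = re.compile(r"Stats: ([A-Za-z ]+): ([\d.]+)M at ([\d.]+)MB/s")


def parse_rates(lines):
    """Map each workload label (e.g. 'Memory Copy') to its reported MB/s."""
    rates = {}
    for line in lines:
        match = STAT.search(line)
        if match:
            rates[match.group(1)] = float(match.group(3))
    return rates


def relative_change(baseline, candidate):
    """Fractional change of candidate relative to baseline."""
    return (candidate - baseline) / baseline


def dropped_off(baseline, candidate, tolerance=0.05):
    """True if candidate is more than `tolerance` (assumed 5%) below baseline."""
    return relative_change(baseline, candidate) < -tolerance


if __name__ == "__main__":
    run_900s = ["2018/11/10-00:10:50(CST) Stats: Memory Copy: 5298400.00M at 5883.14MB/s"]
    run_1800s = ["2018/11/10-01:38:33(CST) Stats: Memory Copy: 10569206.00M at 5872.68MB/s"]
    before = parse_rates(run_900s)["Memory Copy"]
    after = parse_rates(run_1800s)["Memory Copy"]
    print(f"{relative_change(before, after):+.2%}", dropped_off(before, after))
```

For the two runs above, the Memory Copy rate changes by roughly 0.2%, which is expected because only the duration differs. A drop-off would show up when comparing runs that use different "-m" and "-C" thread counts.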

Yvan Tian

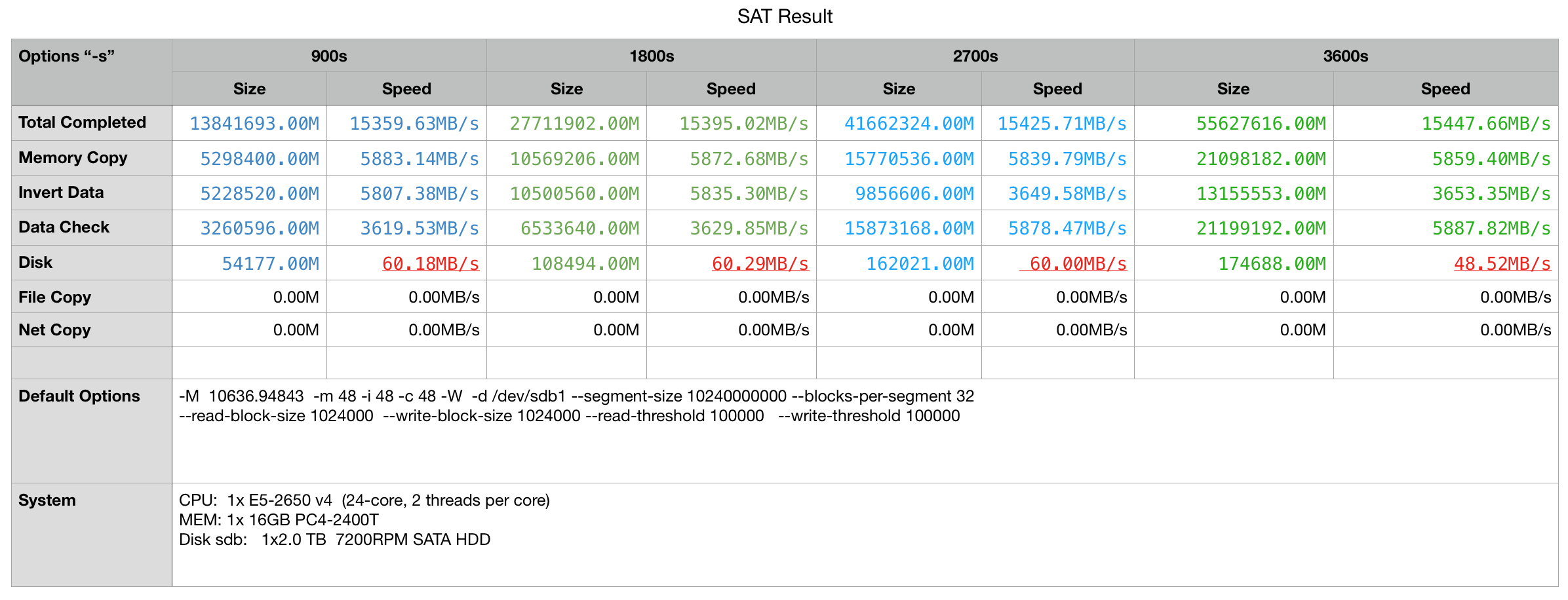

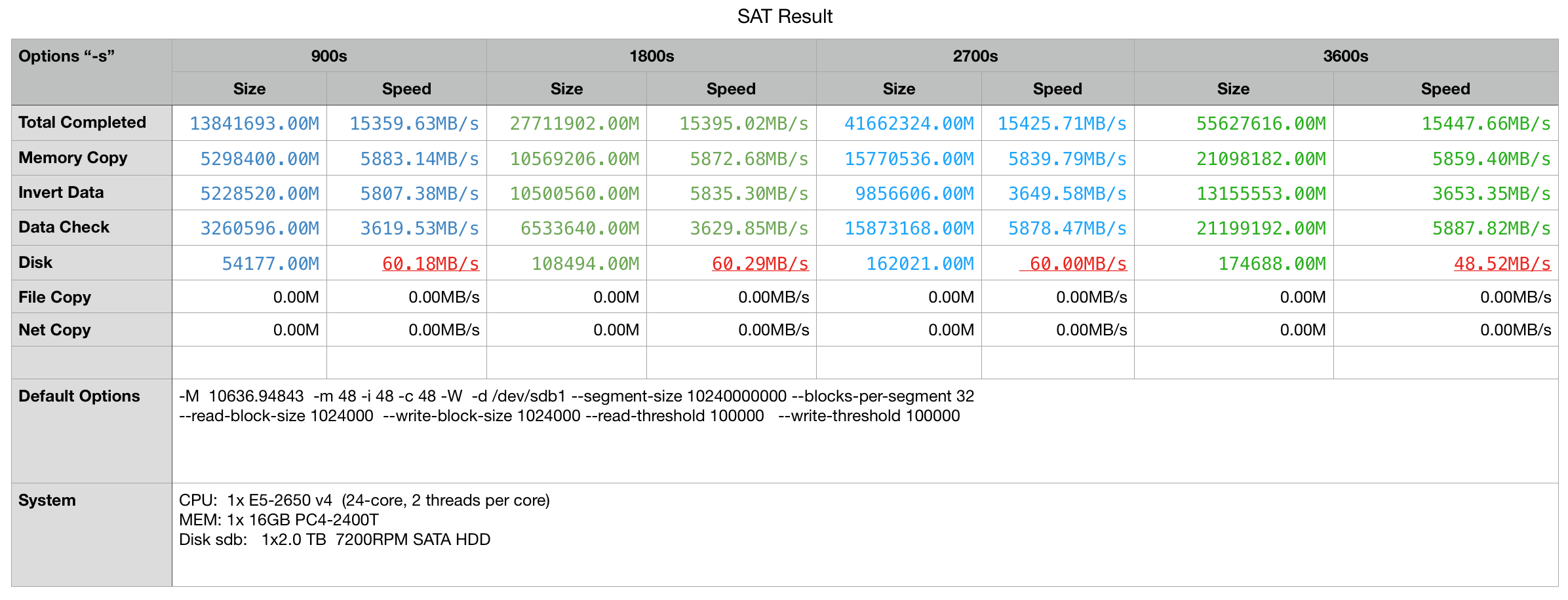

I ran some other tests to compare against each other:

Nick Sanders

Nick Sanders

Yvan Tian

Nick Sanders

As I understand it, the system as described, with 16 DIMMs of memory, should be allocated more reading threads, since its bandwidth is much greater than when configured with 1 DIMM, or there must be more threads suspended as a result of bandwidth contention.

Yvan Tian

On Tuesday, November 13, 2018 at 1:11:13 AM UTC+8, Nick wrote: