Feedback and questions about Soli Sandbox development

Golan Levin

Aug 3, 2020, 2:24:55 AM

to soli-sandbox-community

Hello,

I am a professor of Art at Carnegie Mellon University. My assistant and I have been experimenting for the past couple of days with Soli for the purposes of making expressive interactive artworks. It has been fun, and you can see some of our early prototypes here: https://groups.google.com/d/msg/soli-sandbox-community/D5RWxWXtFbk/lxlswZ4XDAAJ.

We have some observations and requests about Soli development, hopefully easily addressable by someone from the Soli/ATAP team.

Thanks so much,

Golan Levin

Observations from studying the Soli data streams:

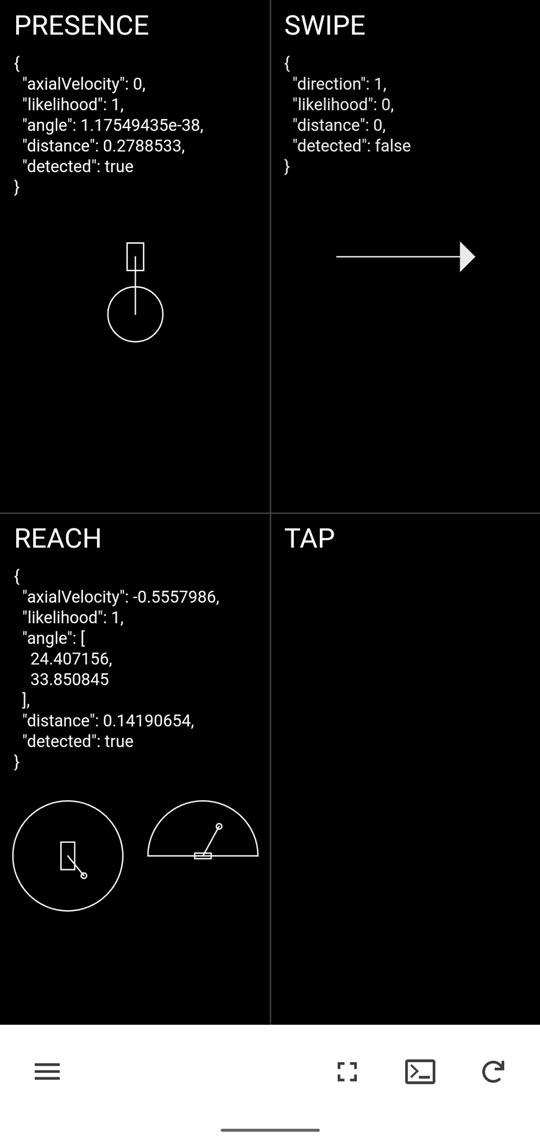

We built a "Dashboard" in order to view the signals coming from the Soli sensor.

This app is located at: https://soli-dashboard.glitch.me/

1. Lag, latency, and responsiveness:

- Soli events are received with an update rate of ~3 Hz. So it’s challenging to produce smooth animation that is coupled to Soli events. Is there any chance this could be improved to 10-30Hz?

- Swipe gestures are detected after a lag of ~300-400 milliseconds. Is this due to the slow update rate, or is this the amount of time required for the ML to decide a gesture has occurred?

- The Reach event can only be detected for some 3-5 seconds, after which it stops detecting no matter where your hand is, and you have to pull your hand back and reach again. Could this be made to not shut off?
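One common workaround for a sparse event stream is to decouple the animation from the events: store the most recent reading as a target, and on every animation frame move the displayed value part of the way toward it. The sketch below assumes readings arrive at roughly 3 Hz and frames at about 60 fps; the class name `EasedValue` and the `tauMs` parameter are hypothetical and are not part of the Soli Sandbox API.

```typescript
// Hypothetical helper (not part of the Soli Sandbox API): eases a displayed
// value toward the most recent sensor reading, so animation can run at the
// display rate even when readings arrive only a few times per second.
class EasedValue {
  private current: number;
  private target: number;
  // Time constant in milliseconds. Smaller values follow new readings more
  // tightly; larger values glide more smoothly but lag more.
  private readonly tauMs: number;

  constructor(initial: number, tauMs: number) {
    this.current = initial;
    this.target = initial;
    this.tauMs = tauMs;
  }

  // Call whenever a new (sparse) reading arrives, e.g. about 3 times a second.
  setTarget(value: number): void {
    this.target = value;
  }

  // Call once per animation frame with the time since the previous frame.
  // Exponential smoothing makes the motion independent of the frame rate.
  update(dtMs: number): number {
    const k = 1 - Math.exp(-dtMs / this.tauMs);
    this.current += (this.target - this.current) * k;
    return this.current;
  }
}

// Usage: one reading moves the target from 0 to 1, then 20 frames at 60 fps
// (about 333 ms, one interval at ~3 Hz) elapse before the next reading.
const eased = new EasedValue(0, 100);
eased.setTarget(1);
let shown = 0;
for (let frame = 0; frame < 20; frame++) {
  shown = eased.update(1000 / 60);
}
```

With `tauMs = 100`, the displayed value covers about 96% of the jump (1 - exp(-333/100)) before the next reading would arrive, so the motion looks continuous while still lagging each reading slightly. The smoothing cannot recover information the 3 Hz stream never delivered; it only hides the steps.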

2. "Non-updated" data fields (are these bugs?):

The following data fields appear not to be updated, even though they are exposed by the API. Could this be fixed? This data could be useful for new interactions.

- For the Presence event, axialVelocity is always 0;

- For the Presence event, likelihood is always 1;

- For the Presence event, angle is always 1.17e-38 (FLT_MIN, the smallest positive normalized single-precision float, bit pattern 0x00800000);

- For the Swipe event, distance and likelihood are always 0.
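The value reported for the Presence angle can be checked against the single-precision (IEEE 754 binary32) bit patterns with a `DataView`: `0x00800000` is the smallest positive normalized float (FLT_MIN, 2^-126, about 1.17549e-38), while `0x80800000` has the sign bit set and decodes to its negative. The helper names, and the idea of treating this value as a "no data" sentinel, are illustrative assumptions rather than anything documented for the Soli Sandbox.

```typescript
// Decode a 32-bit IEEE 754 single-precision bit pattern into its value.
function floatFromBits(bits: number): number {
  const view = new DataView(new ArrayBuffer(4));
  view.setUint32(0, bits >>> 0); // DataView defaults to big-endian
  return view.getFloat32(0);
}

// Encode a number as single precision and return its 32-bit pattern.
function bitsFromFloat(value: number): number {
  const view = new DataView(new ArrayBuffer(4));
  view.setFloat32(0, value);
  return view.getUint32(0);
}

// 0x00800000: sign 0, exponent field 1, mantissa 0, i.e. 2^-126 (FLT_MIN).
const FLT_MIN = floatFromBits(0x00800000);
// 0x80800000: the same pattern with the sign bit set, i.e. -FLT_MIN.
const NEG_FLT_MIN = floatFromBits(0x80800000);

// Illustrative check for a field stuck at this value: rounds the incoming
// number to single precision before comparing it against FLT_MIN.
function isFltMinSentinel(x: number): boolean {
  return Math.fround(x) === FLT_MIN;
}
```

A field that always equals FLT_MIN most likely means "never written" rather than a real measurement, so filtering it out with a check like `isFltMinSentinel` keeps it from leaking into visuals.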

3. Accuracy:

- Data from the Presence gesture's distance field is very noisy (which is understandable, but makes it challenging to use as a continuous controller for something). Are there any tips to improve this?

General Experiences Developing with the Four Soli Gestures.

- "Reach": this gesture sometimes works and sometimes doesn’t. It seems that the Reach event is only generated when the hand approaches the phone from some distance away, and only for a few more seconds while the hand is above the phone... but then it stops detecting. That's a problem. We think it would be much better if there were an event that constantly reports the angles and distance while the hand is above the phone, perhaps something like a “hover” event.

- "Tap": Unfortunately, we could not get this gesture to work at all. No matter how we tried, neither my assistant nor I could get it to work even a single time, on either of our two Pixel 4 phones, in different locations, etc. We tried tapping from all angles and distances, at all speeds, with hands or other objects, and nothing worked.

Reliability is an Issue.

The sporadic reliability of the Reach event is pretty frustrating. Currently, it seems like the expected use case is to activate the phone when the user is trying to pick it up. For such a use case, even if it sometimes doesn’t work, it’s not a big deal. But if I’m making an interactive game or experience based on the event and it only works from time to time, then it’s basically unusable.

Delay and Latency.

Overall we think “swipe” is the most robust and usable gesture (we will probably base more demos on this event). However, it also feels delayed, with a lag of perhaps 200-300ms. I imagine this is because the gesture is only classified once the whole movement finishes. Currently, it seems like the expected use case is to let the user switch songs, in which case such a delay would be acceptable. But again, if I’m basing an interactive experience on it, it just doesn't feel very responsive. I am sorry to say that, for things like games, I could achieve the same Swipe interaction with much less latency by doing frame-differencing or optical flow using the front-facing selfie camera.
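For comparison, here is a rough sketch of the camera-based frame-differencing alternative: threshold the per-pixel difference between consecutive grayscale frames, track the horizontal centroid of the changed pixels, and report a swipe as soon as that centroid has moved far enough. The frame format, thresholds, and names (`Frame`, `motionCentroidX`, `SwipeDetector`) are hypothetical choices for illustration. A practical detector would also need to handle camera noise, lighting changes, and mirroring of the selfie image.

```typescript
// Hypothetical frame format: grayscale pixels (0-255), row-major order.
type Frame = { width: number; height: number; pixels: Uint8Array };

// Mean x coordinate of pixels that changed by more than `threshold`
// between two equally sized frames, or null if too few pixels changed.
function motionCentroidX(prev: Frame, curr: Frame, threshold = 30, minPixels = 50): number | null {
  let sumX = 0;
  let count = 0;
  for (let y = 0; y < curr.height; y++) {
    for (let x = 0; x < curr.width; x++) {
      const i = y * curr.width + x;
      if (Math.abs(curr.pixels[i] - prev.pixels[i]) > threshold) {
        sumX += x;
        count++;
      }
    }
  }
  return count >= minPixels ? sumX / count : null;
}

// Tracks the motion centroid across frames and reports a swipe once it
// has travelled `minTravel` (a fraction of the frame width) in one direction.
class SwipeDetector {
  private startX: number | null = null;
  private readonly width: number;
  private readonly minTravel: number;

  constructor(width: number, minTravel = 0.4) {
    this.width = width;
    this.minTravel = minTravel;
  }

  // Feed one centroid per frame; returns "left", "right", or null.
  push(centroidX: number | null): "left" | "right" | null {
    if (centroidX === null) {
      this.startX = null; // motion stopped: start over
      return null;
    }
    if (this.startX === null) {
      this.startX = centroidX; // first frame of a new motion
      return null;
    }
    const travel = (centroidX - this.startX) / this.width;
    if (Math.abs(travel) >= this.minTravel) {
      this.startX = null; // fire once, then re-arm
      return travel > 0 ? "right" : "left";
    }
    return null;
  }
}
```

Because the detector fires as soon as the centroid has covered `minTravel` of the frame, it can report a swipe before the hand finishes moving, which is where the latency advantage over classifying the completed gesture comes from. Capturing the camera frames themselves (for example with `getUserMedia` and a canvas) is not shown.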

Thoughts on the Soli Sandbox API.

The Soli Sandbox acts like a “keyboard replacement”, categorizing user motions into 4 bins and then “pressing the button for you”. This makes development very easy (it is possible to prototype Soli apps without actually having a Soli device, just by using a keyboard proxy). But it also profoundly diminishes the scope of the kinds of interactions which can be imagined and developed with this remarkable sensor. The API is biased towards a kind of design that conceives of interaction as "triggers" (as for a record player), and not as continuous controllers (as for a musical instrument).
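The keyboard-proxy workflow described in this paragraph can be sketched as a small router that lets the same handlers receive events either from the sensor or from the keyboard. The event shape, key bindings, and class name are hypothetical and are not the Soli Sandbox's actual event format. Every input here is a discrete trigger; nothing in this structure carries a continuous value, which is the limitation the paragraph points out.

```typescript
// Hypothetical event shape for prototyping; field names are illustrative
// and are not taken from the Soli Sandbox API.
type GestureName = "swipe" | "tap" | "reach" | "presence";
type GestureEvent = { type: GestureName; direction?: "left" | "right" };
type Handler = (e: GestureEvent) => void;

// Keyboard proxy: each key stands in for one discrete gesture "button".
const keyMap: Record<string, GestureEvent> = {
  ArrowLeft: { type: "swipe", direction: "left" },
  ArrowRight: { type: "swipe", direction: "right" },
  t: { type: "tap" },
  r: { type: "reach" },
  p: { type: "presence" },
};

// Routes events to handlers regardless of whether they came from the
// sensor or from the keyboard, so app logic can be written once.
class GestureRouter {
  private handlers = new Map<GestureName, Handler[]>();

  on(type: GestureName, handler: Handler): void {
    const list = this.handlers.get(type) ?? [];
    list.push(handler);
    this.handlers.set(type, list);
  }

  dispatch(e: GestureEvent): void {
    for (const h of this.handlers.get(e.type) ?? []) h(e);
  }

  // Translate a key name (e.g. the `key` of a KeyboardEvent) into a
  // gesture; returns false if the key is not mapped.
  dispatchKey(key: string): boolean {
    const e = keyMap[key];
    if (e === undefined) return false;
    this.dispatch(e);
    return true;
  }
}
```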

A Request: Raw Sensor Data

When I first heard that Soli was now available in Pixel 4, I recalled with excitement how Theo Watson was tinkering with the Soli back in 2016, and how he made the "World's Tiniest Violin" project, which used the Soli sensor to detect a remarkably tiny and subtle movement. More recently, we have been experimenting in my lab creating ML programs for the Walabot rangefinder, a $100 radar device that produces detailed radar imagery at 100Hz. So we know what kinds of subtle interactions could be captured and detected with near-field radar.

There has been a major transformation in the landscape of interaction design since 2016. Over the last four years, it has become possible for artists and designers to create and train their own Machine Learning classifiers and regressors, using friendly tools like Google Teachable Machine and ml5.js. So while it's terrific that the Soli Sandbox uses p5.js, I think that the design of the API still misses the mark a bit in understanding its audience. Nowadays, even freshman Art and Design students (like mine) are creating and training their own ML models.

We want continuous input! We would like to get the Soli sensor's raw data feed in real time, so that we have not only a "keyboard proxy" but also a "mouse proxy". We would like more continuous data fields, similar to the azimuth and elevation angles in “Reach” mode, but generated constantly instead of only when the user makes a reaching motion. We can train our own gesture recognizers/regressors!
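To illustrate the kind of "train your own" model this request has in mind, here is a minimal k-nearest-neighbour regressor: record a few feature vectors with target values, then map new inputs to a continuous output by averaging the targets of the closest examples. This is a generic sketch; the feature vectors are hypothetical, since the Sandbox does not expose raw sensor data, and a real project would more likely use an existing library such as ml5.js.

```typescript
// One labelled training example: a feature vector and a continuous target.
type Example = { features: number[]; target: number };

// Squared Euclidean distance between two equal-length feature vectors.
function squaredDistance(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("feature length mismatch");
  let s = 0;
  for (let i = 0; i < a.length; i++) {
    const d = a[i] - b[i];
    s += d * d;
  }
  return s;
}

// Minimal k-nearest-neighbour regressor (illustrative, not optimized).
class KnnRegressor {
  private examples: Example[] = [];
  private readonly k: number;

  constructor(k = 3) {
    this.k = k;
  }

  // Record one labelled example, e.g. while the user holds a pose that
  // should map to a particular slider value.
  addExample(features: number[], target: number): void {
    this.examples.push({ features, target });
  }

  // Predict a continuous output as the mean target of the k nearest examples.
  predict(features: number[]): number {
    if (this.examples.length === 0) throw new Error("no training examples");
    const nearest = this.examples
      .map((ex) => ({ ex, d: squaredDistance(ex.features, features) }))
      .sort((a, b) => a.d - b.d)
      .slice(0, this.k);
    return nearest.reduce((sum, n) => sum + n.ex.target, 0) / nearest.length;
  }
}
```

The output is a number rather than a discrete label, which is what makes this usable as a continuous controller; it only works, however, if the input stream itself is continuous and frequent.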

Summary

We know what the Soli sensor is capable of. We are excited to experiment with it, and we want to help Google succeed with radar-enabled products. We strongly encourage you to provide access to additional real-time, high-frame-rate, continuous data streams from the sensor.

golan...@gmail.com

Aug 7, 2020, 3:05:04 PM

to soli-sandbox-community

Nazia Ahmed

Aug 7, 2020, 6:41:25 PM

to soli-sandbo...@googlegroups.com

Thank you, Golan, for your questions. Below are our answers to your in-depth analysis. Let us know if you have any follow-ups, and we’re excited to see what else you create with the Sandbox!

1. Lag, latency, and responsiveness:

Gestures on Soli Sandbox are currently optimized for mobile phone experiences. As you pointed out, Soli technology can be applied to a wide range of applications and use cases, and we are super excited about its possibilities.

Unfortunately, the update rate setting is locked.

Swipe gestures are registered after the complete motion, and there is a slight computational delay as well.

The Reach timeout is by design. The Reach gesture in its current state was designed to detect someone picking up the phone, and we are unable to disable this behavior.

2. "Non-updated data fields"

These are reserved fields and are not usable in the Sandbox at this time.

3. Accuracy

We think adding a smoothing filter (e.g., a moving average or a Kalman filter) may help. The code we shared for hand tracking has a smoothing feature built in, so please take a look and let us know if that helps.
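As a starting point, here are minimal versions of the two filters suggested: a moving average over the last few readings, and a one-dimensional Kalman filter that assumes the hand's distance changes slowly between readings (a random-walk model). The class names and the tuning parameters (`size`, `q`, `r`) are illustrative assumptions that would need tuning against real Presence data; this is not the smoothing code from the hand-tracking example mentioned in the reply.

```typescript
// Moving average over the last `size` readings.
class MovingAverage {
  private readonly size: number;
  private buf: number[] = [];
  private sum = 0;

  constructor(size: number) {
    this.size = size;
  }

  push(x: number): number {
    this.buf.push(x);
    this.sum += x;
    if (this.buf.length > this.size) {
      this.sum -= this.buf.shift()!; // drop the oldest reading
    }
    return this.sum / this.buf.length;
  }
}

// Scalar Kalman filter with a random-walk model: the true value drifts with
// variance q per step, and each reading adds independent noise of variance r.
class ScalarKalman {
  private readonly q: number; // process variance
  private readonly r: number; // measurement variance
  private x: number | null = null; // current estimate
  private p = 0; // variance of the current estimate

  constructor(q: number, r: number) {
    this.q = q;
    this.r = r;
  }

  push(z: number): number {
    if (this.x === null) {
      this.x = z; // initialise from the first reading
      this.p = this.r;
      return z;
    }
    const prior = this.x;
    const predictedP = this.p + this.q; // predict: uncertainty grows
    const gain = predictedP / (predictedP + this.r); // Kalman gain
    const estimate = prior + gain * (z - prior); // correct toward the reading
    this.x = estimate;
    this.p = (1 - gain) * predictedP;
    return estimate;
  }
}
```

Both filters trade responsiveness for smoothness: a longer window, or a smaller ratio of `q` to `r`, gives a steadier signal but more lag. With only about 3 readings per second, even a short window adds noticeable delay, so it is worth starting with small values.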

General Experiences Developing with the Four Soli Gestures.

"Reach": The best way to reliably trigger this event is to reach as though you are going to pick up the phone. We designed this gesture to sense a person's intent to interact with the device in order to speed up the face recognition camera, which is why you have to reach as if you're going to pick up the phone. Your description of a hover event is interesting. That kind of gesture may be better suited for gaming interactions like those in your team’s prototypes.

“Tap”: We would recommend trying a range of different speeds and scales of the gesture to practice triggering it reliably.

Reliability

Yes, your understanding of the expected use case is correct. While developing some of our game experiences, we’ve altered game mechanics to fit within these parameters. Check out the Pokémon Wave Hello experience to see some of it in action.

Delay and Latency

Swipe gestures are registered after the complete motion, and there is a slight computational delay as well.

There are advantages and disadvantages of any technology, but here are some of the reasons why we’re excited about using Soli for such use cases:

- Understands movement from a wider angle and at different scales (from presence to gestures).

- Always sensing, detecting movement at low power.

- Detects objects and motion through various materials for seamless interaction.

- Soli is not a camera and doesn’t capture any visual images.

If you are interested in learning more about our vision for Soli, please check out our website or this article we wrote for the Google Design blog.

Raw sensor data

We were very excited by the ideas that our developers came up with using the alpha kit back then, but at this time we are not exposing any of the lower-level data.

In a future state, a "dev kit" would be great to release, but we do not have any public information about it as of now.

Again, thank you so much for your contribution, and please feel free to submit your experiments for the team to check out here.

On Sunday, August 2, 2020 at 11:24:55 PM UTC-7, Golan Levin wrote:
