Local (Rundeck) node breaks in specific projects

Dan Landerman

Dan L

rac...@rundeck.com

Hi Dan,

I reproduced your issue, and it seems related to your node tags (is your localhost tagged as “NonProd”?). Anyway, here is a simplified ACL to test (check the application context block); feel free to modify it:

```yaml
description: project context.
context:
  project: ProjectEXAMPLE
for:
  resource:
    - equals:
        kind: 'node'
      allow: [read]
    - equals:
        kind: 'job'
      allow: [read]
    - equals:
        kind: 'event'
      allow: [read]
  adhoc:
    - deny: '*'
  job:
    - equals:
        group: NonProd
      allow: [run,read]
  node:
    - equals:
        nodename: 'localhost'
      allow: [read,run]
by:
  group: mygroup

---

description: app context.
context:
  application: 'rundeck'
for:
  project:
    - match:
        name: ProjectEXAMPLE
      allow: [read]
  storage:
    - match:
        path: 'keys/.*'
      allow: [read]
by:
  group: mygroup
```

Hope it helps!

Dan L

SysadminX

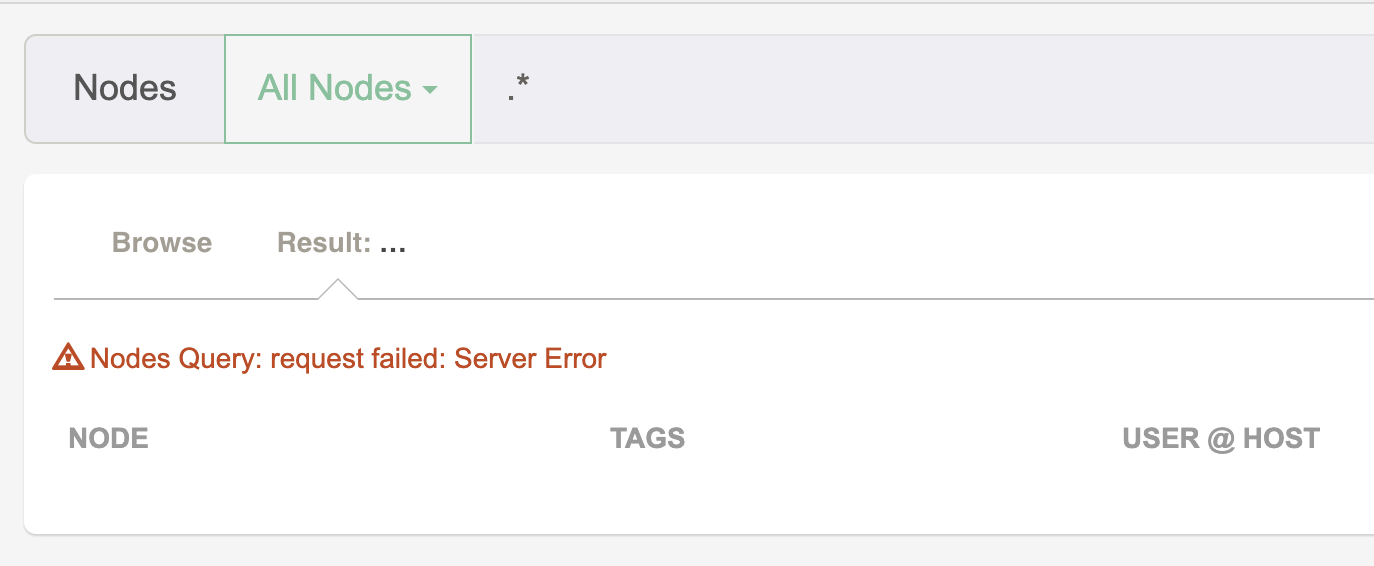

This does not work (the Rundeck server is inaccessible):

rac...@rundeck.com

Hi SysadminX,

With the following ACL, it’s possible (tested on 3.4.0). The ACL is focused on the “user” group (you can use `username: myuser` instead of the `group` statement).

```yaml
description: project context.
context:
  project: ProjectEXAMPLE
for:
  resource:
    - equals:
        kind: 'node'
      allow: [read]
    - equals:
        kind: 'job'
      allow: [read]
    - equals:
        kind: 'event'
      allow: [read]
  adhoc:
    - allow: '*'
  job:
    - equals:
        name: JobONE
      allow: [run,read]
  node:
    - equals:
        tags: 'db'
      allow: [read,run]
    - equals:
        nodename: 'localhost'
      allow: [read,run]
by:
  group: user

---

description: app context.
context:
  application: 'rundeck'
for:
  project:
    - match:
        name: ProjectEXAMPLE
      allow: [read]
  storage:
    - match:
        path: 'keys/.*'
      allow: [read]
by:
  group: user
```
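As noted above, the policy can also target a single user instead of the `user` group. A sketch of the `by:` section for that case, where `myuser` is just the placeholder name from the reply; the same change would go at the end of both documents (the project context and the application context):

```yaml
# Scope the policy to one user instead of a group.
# Use this in place of "group: user" in BOTH documents of the policy.
by:
  username: myuser
```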

When the job is executed with the `.*` node filter, the user can only run it on the nodes tagged `db` and on localhost (the Rundeck server).

Hope it helps!

SysadminX

It seems that the problem is using `contains:` with `tags:`. If I use `equals:` with `tags:` instead (as you suggested), the ACLs work as expected. I see this issue on GitHub, which appears to report this changed behavior as a bug. Should `contains:` no longer be used with `tags:`, or is this indeed a bug?
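For comparison with the `equals:` rules used elsewhere in this thread, the `contains:` form being described would look roughly like the fragment below. This is a reconstruction, not the original file: the attached file1.aclpolicy is not reproduced here, so the tag value `db` is assumed from the other examples.

```yaml
# Fragment of the "for:" section (reconstructed, tag value assumed).
node:
  # Reported to misbehave: contains: used with tags:
  - contains:
      tags: 'db'
    allow: [read,run]
```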

file1.aclpolicy:

rac...@rundeck.com

Hi,

Yes, the bug is related to the `contains` statement. If you put those rules in two separate files, Rundeck evaluates them one by one and keeps the same behavior as:

```yaml
node:
  - equals:
      tags: 'db'
    allow: [read,run]
  - equals:
      rundeck_server: 'true'
    allow: [read,run]
```

So I think the best approach is to put those rules in the same file.

Regards!

SysadminX

rac...@rundeck.com

Hi SysadminX,

I tested again with your ACL and it works as expected (I only changed the project name, group name, job name, and node tag name).

```yaml
description: project context.
context:
  project: ProductionPROJECT
for:
  resource:
    - equals:
        kind: 'node'
      allow: [read]
    - equals:
        kind: 'job'
      allow: [read]
    - equals:
        kind: 'event'
      allow: [read]
  adhoc:
    - allow: '*'
  job:
    - equals:
        name: HelloWorld
      allow: [run,read]
  node:
    - equals:
        tags: 'db'
      allow: [read,run]
    - equals:
        rundeck_server: 'true'
      allow: [read,run]
by:
  group: user

---

description: app context.
context:
  application: 'rundeck'
for:
  project:
    - match:
        name: ProductionPROJECT
      allow: [read]
  storage:
    - match:
        path: 'keys/.*'
      allow: [read]
by:
  group: user
```

Make sure that no other ACL interferes with this one. Also, take a look at this.

Regards!

SysadminX

group2.aclpolicy:

rac...@rundeck.com

In that case, the best approach is to define separate user profiles for what you want to run. If a user belongs to both groups, they will see all the rules defined for both. There is currently no ACL granularity level that defines which specific nodes a specific job can run on (the user can see all nodes defined in both ACL rules, and all jobs too). Another approach is to pass node filters via options, or to call the jobs from RD-CLI inside an inline script depending on the `${job.username}` variable.
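A minimal sketch of the "node filter via option" approach, written as a Rundeck job definition in YAML. The job name `HelloWorld` is taken from the ACL above; the option name `nodes`, its default value, and the `hostname` command are hypothetical placeholders, and the exact job format may need adjusting for your Rundeck version. This only changes which nodes the job targets; it is not an access control, so the ACL rules still decide which nodes the user may actually run on.

```yaml
# Hypothetical job definition: the node filter comes from a job option,
# so each run can target a different set of nodes.
- name: HelloWorld
  description: "Runs on the nodes selected by the 'nodes' option"
  nodefilters:
    filter: ${option.nodes}      # option value substituted at run time
  options:
    - name: nodes
      description: "Node filter to use, for example 'tags: db'"
      value: 'tags: db'          # placeholder default value
      required: true
  sequence:
    keepgoing: false
    strategy: node-first
    commands:
      - exec: hostname           # placeholder command
```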

Greetings!