Query: Policy/actor network update

15 views

Skip to first unread message

Shanu kumar

Sep 19, 2022, 10:27:49 AM9/19/22

to Reinforcement Learning Mailing List

Hello everyone,

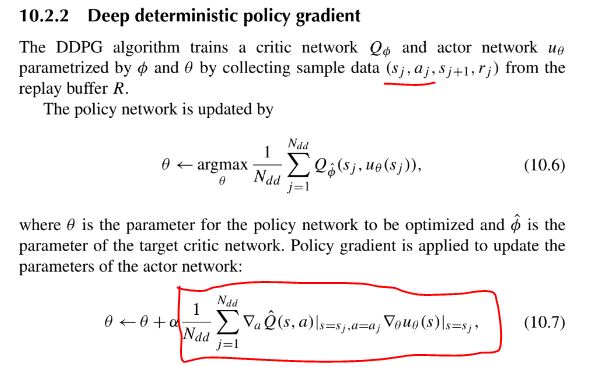

I have recently started working in RL domain and currently working on a sequential recommender system. One equation that I accidently landed to is highlighted below. As I understand this part comes from model based RL.

Generally we take State from the buffer but get action using the current policy instead of taking that directly from replay buffer. I applied this(only the highlighted part to update actor , critic's update is as instructed in DDPG paper, along with priority experience buffer) into my problem anyways and it improved the performance significantly.

I am trying to understand by following above equation:

1) How the performance is improving in terms of sample efficiency(ran it multiple times) and precision(better than model free approach) as this equation can make updates biased and in turn affect critic's performance. Current actor can produce better action for the same state?

2) What kind of problems this is actually be applicable to?

3) Is it learning the local trajectory with priority than the global model?

4) Is it related to MODEL VALUE BASED POLICY GRADIENT or guided policy search ?

If you could guide me to any resource that could help me understand that would be great. Appreciate you help. Thank you in advance !

Best regards,

Shanu

Reply all

Reply to author

Forward

0 new messages