Network module

Tadeusz Pycio

May 22, 2021, 12:58:00 PM

to retro-comp

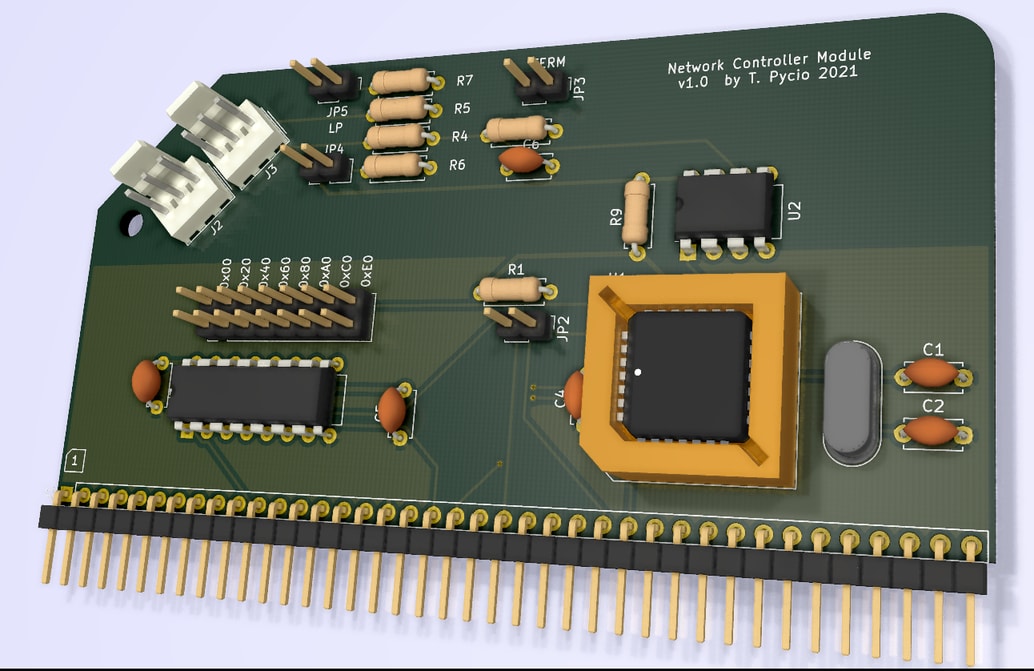

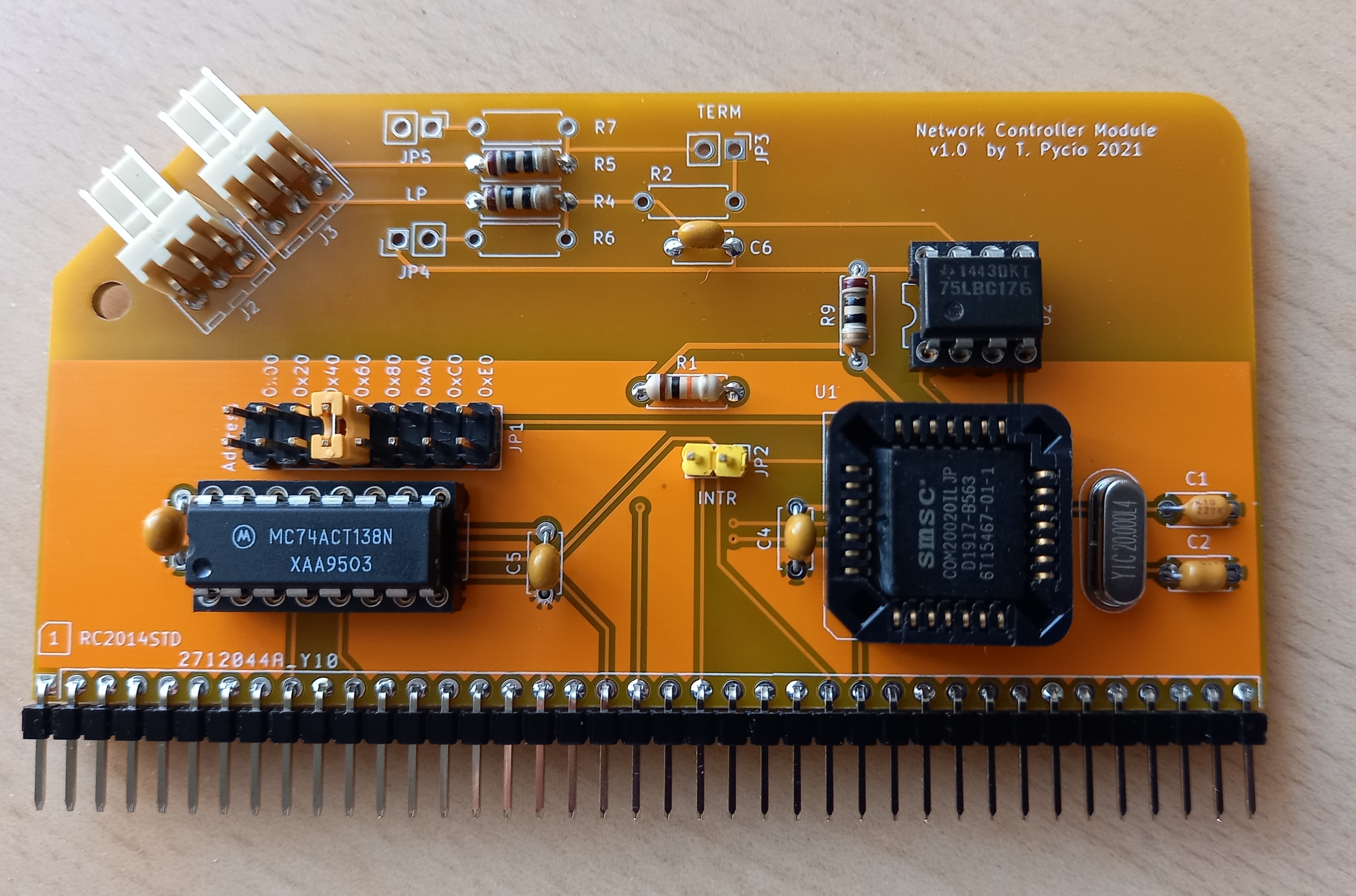

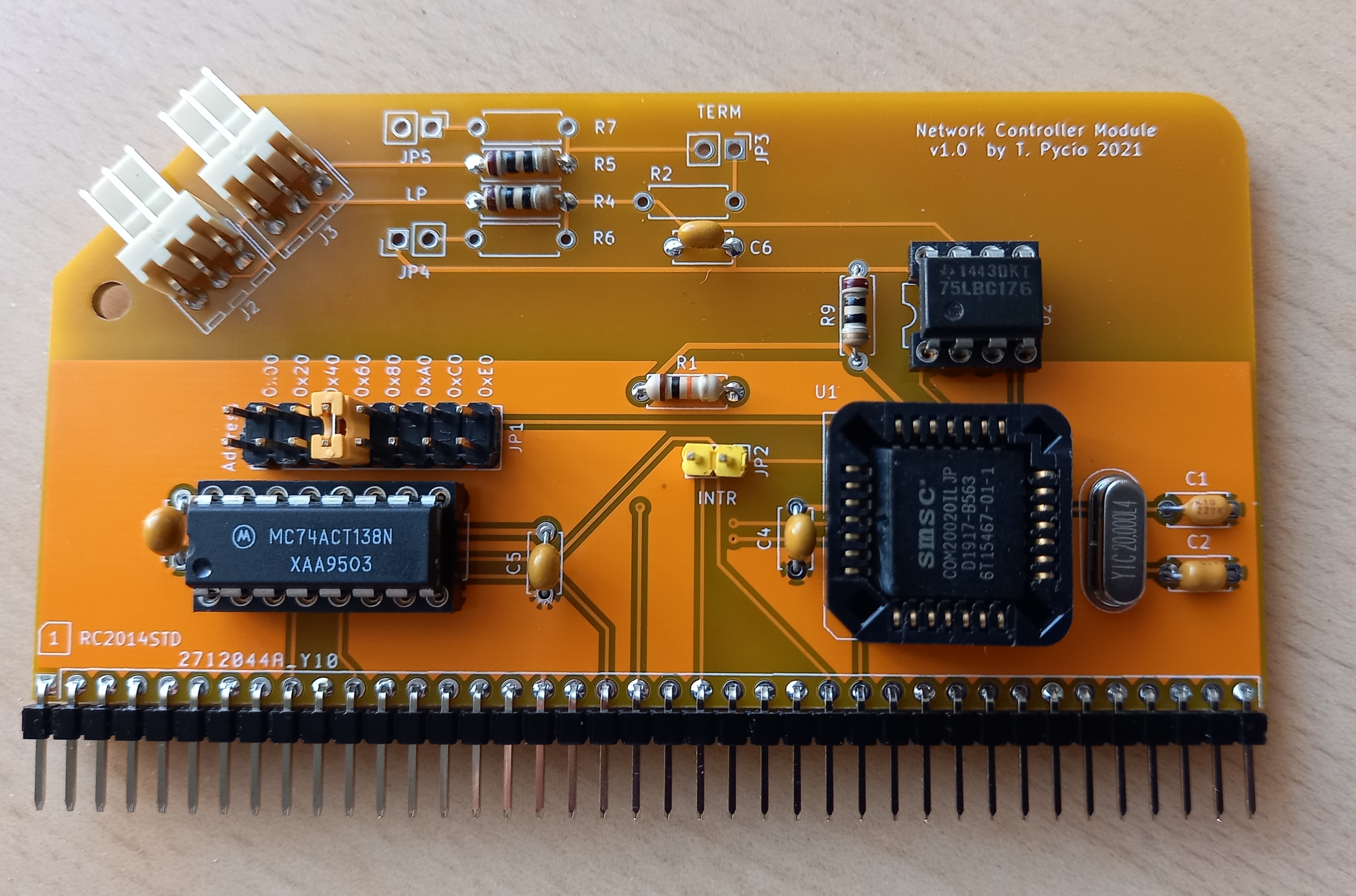

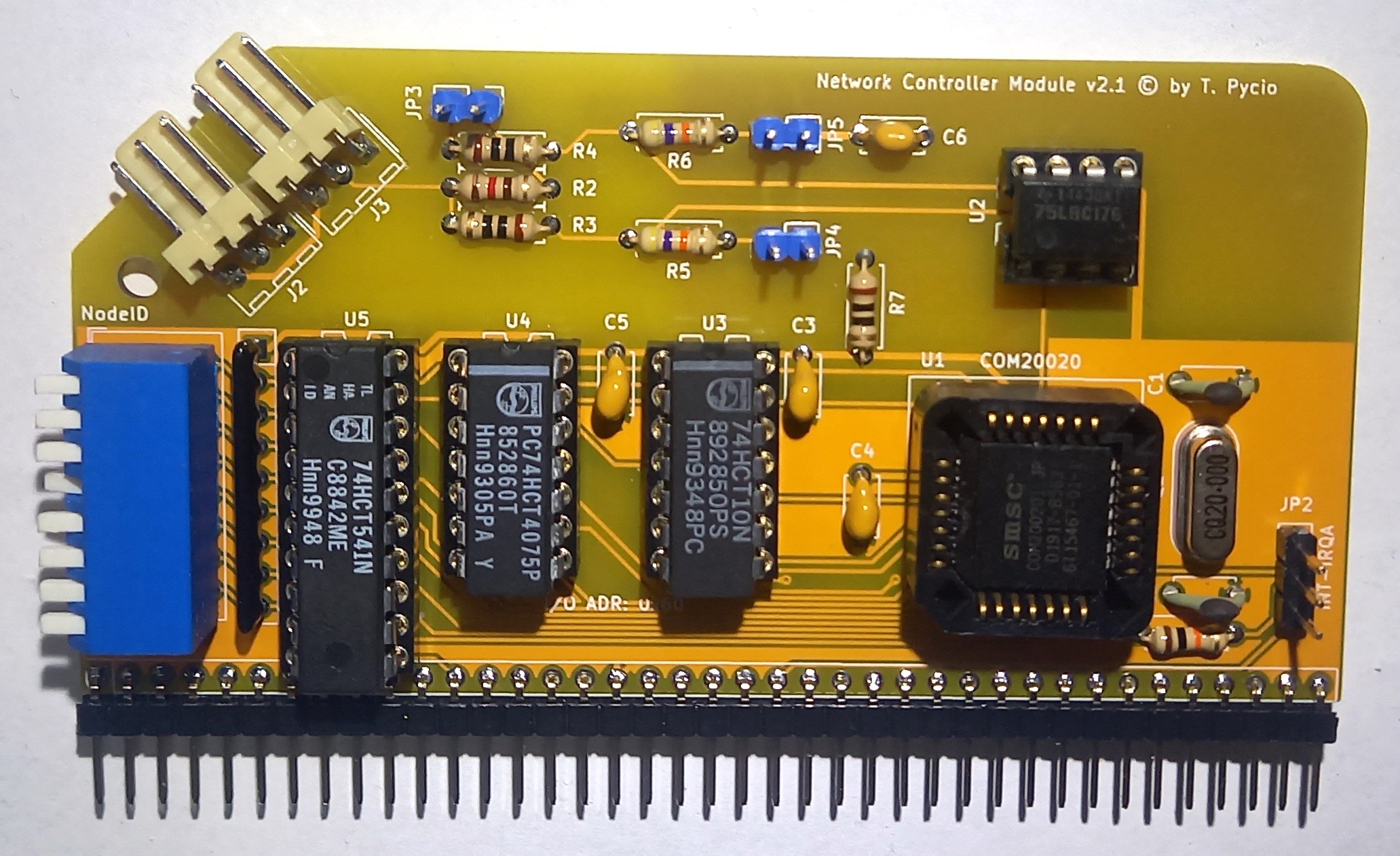

I am planning to send the LAN module shown here to PCB production soon.

The module is to be used for communication between RC2014 computers; transfers to or from a PC would require additional hardware.

The project is based on the ARCNET controller, and the physical layer is based on the RS485 driver. Theoretically 2.5-5 Mbps transfers can be achieved, depending on the chip used. Practice will show how it works, although the 2kB dual-port RAM in the IC gives hope for success.

Michael Gray

May 22, 2021, 1:45:16 PM

to retro-comp

That’s exciting. I’d love to hear more about it (both the hardware and the software stack)! I’ve always been “aware of” ARCNET, but never did see it in enough detail way back when.

Michael

Kurt Pieper

May 22, 2021, 3:51:42 PM

to retro-comp

Good idea.

This reminds me of my time at a Novell distributor around 1983-1988.

CP/M on ARCNET, now that is something!

Kurt

Douglas Miller

May 22, 2021, 5:12:32 PM

to retro-comp

Not to throw water on the fire, but I wonder how useful an obscure/industrial network will be. There are TCP/IP protocol chips that will interface into a modern network and talk to modern PCs (witness: MT011 WizNET board for RC2014).

That being said, we can look into adding CP/NET support for this NIC. https://github.com/durgadas311/cpnet-z80. In this case, all servers will need to also have an ARCNET chip/network i/f.

Tadeusz Pycio

May 22, 2021, 5:38:23 PM

to retro-comp

Hi Douglas,

You are absolutely right. In this case, the main goal was to use a simpler protocol without an intermediary layer between the network and the Z80, as Ethernet, and TCP/IP in particular, would be difficult to implement and inefficient. I assumed that this "network" would be able to provide storage to computers without it, a simple multi-processor implementation... I did not even envisage implementing CP/NET, maybe in the distant future. Also, I do not plan to try to adapt this network to work with modern computers at the moment, although it is possible with little effort. I have several different RC2014 computers, let them talk to each other.

Douglas Miller

May 22, 2021, 6:15:45 PM

to retro-comp

Understood, but also keep in mind that the WizNET controller presents a very simple interface to the Z80, and does all the TCP/IP protocol itself. So it is basically the same simplicity as the ARCNET controller, except that the network it connects to is able to connect to all modern computers.

Tadeusz Pycio

May 22, 2021, 7:19:56 PM

to retro-comp

Hi Douglas,

It is true. The W5500 module has an entire IP stack; the problem is the lack of an efficient SPI chip adapted to the Z80. The only available (although no longer produced) chip that performs this function is the TP3456, which reaches a maximum of 4MHz; the TTL implementation in the MT011 module runs at 20MHz. TCP/IP is data hungry. Check the COM20020 datasheet and you will understand why I chose this path. It is a more advanced serial port with a large buffer and support for data packets that specify the sender, recipient... What more is needed? :D

Douglas Miller

May 22, 2021, 7:35:21 PM

to retro-comp

I have no interest in arguing, but will just say that the W5500 is capable of 80MHz. The limiting factor is how fast the Zx80 can do I/O. For a given platform, both are capable of the same transfer rates (assuming an improved MT011, which is certainly possible and already on TODO lists) based on Zx80 I/O instructions. The only real difference between the two is the network: W5500 modules support industry standard Ethernet, while ARCNET has a much more limited audience. The W5500 supports 100Mbps on the wire, while this ARCNET module supports only 5Mbps.
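
A back-of-the-envelope sketch of that ceiling, for illustration only. The 7.3728 MHz clock and the absence of wait states are assumptions, not figures from this thread; 21 T-states is the cost of one repeating `INIR` iteration on a Z80.

```python
# Rough upper bound on block-I/O throughput for a Z80 using INIR.
# Assumptions (not from the thread): 7.3728 MHz CPU clock, no wait states.

INIR_TSTATES_PER_BYTE = 21   # Z80 INIR: 21 T-states per repeating iteration
CPU_CLOCK_HZ = 7_372_800     # assumed clock; adjust for your own board


def max_io_rate(clock_hz: int, tstates_per_byte: int) -> tuple[float, float]:
    """Return (bytes per second, megabits per second) for a tight I/O loop."""
    bytes_per_s = clock_hz / tstates_per_byte
    return bytes_per_s, bytes_per_s * 8 / 1_000_000


bytes_per_s, mbit_per_s = max_io_rate(CPU_CLOCK_HZ, INIR_TSTATES_PER_BYTE)
print(f"{bytes_per_s:,.0f} B/s, about {mbit_per_s:.2f} Mbit/s")
```

Under those assumptions the CPU tops out near 2.8 Mbit/s, which is below the wire speed of either controller, so the choice of chip would not change the achievable rate much.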

Tadeusz Pycio

May 22, 2021, 7:58:49 PM

to retro-comp

Hi Douglas,

You got me wrong. I'm not going to argue about which solution is better: Ethernet is the standard, Arcnet is prehistory. There is no discussion here. I just decided that a historical protocol is the best fit for a historical processor; we will not be able to overcome the limitations imposed by the Z80 itself. That is why I chose this standard (which I had not thought about for 20 years). The module shown here assumes that this network will be limited to my desk, and I don't think anyone else would want to go that route. Another curiosity. :D

Douglas Miller

May 22, 2021, 8:08:47 PM

to retro-comp

Right, the novelty of resurrecting an old network. If you decide you want CP/NET, we can help with that. I would just need whatever you learn from working with the COM20020/1 as to what it needs for initialization and general use. Or, I can help you make your own SNIOS. You'll also need MP/M code to run as a server, which is something I have not done for 40 years but others have done more recently - or else a modern PC interface.

Tadeusz Pycio

May 22, 2021, 8:45:15 PM

to retro-comp

Hi Douglas,

Thank you for the offer of help, it will certainly be useful as I master the basics of data transfer with this IC. Our fun is bringing forgotten solutions, processors, ICs,... back to life :D

John Lodden

May 22, 2021, 8:47:28 PM

to retro-comp

TurboDOS from Software 2000 (a CP/M and MP/M compatible OS) has support for ARCnet on Z80 and 8086 based systems with built-in networking.

Both FileServer and client side TurboDOS networks.

-jrl

Douglas Miller

May 22, 2021, 8:52:14 PM

to retro-comp

Using the COM20020/1 controller chip? Or implementing ARCNET on legacy hardware?

John Lodden

May 22, 2021, 8:58:23 PM

to retro-comp

TurboDOS has drivers for the COM XXXX series of chips. The proposed RC2014 module looks like almost a clone of the EarthNET ARCnet S100 card and the card my company made back in the late 80s.

-jrl

Tadeusz Pycio

Jun 14, 2021, 1:04:43 PM

to retro-comp

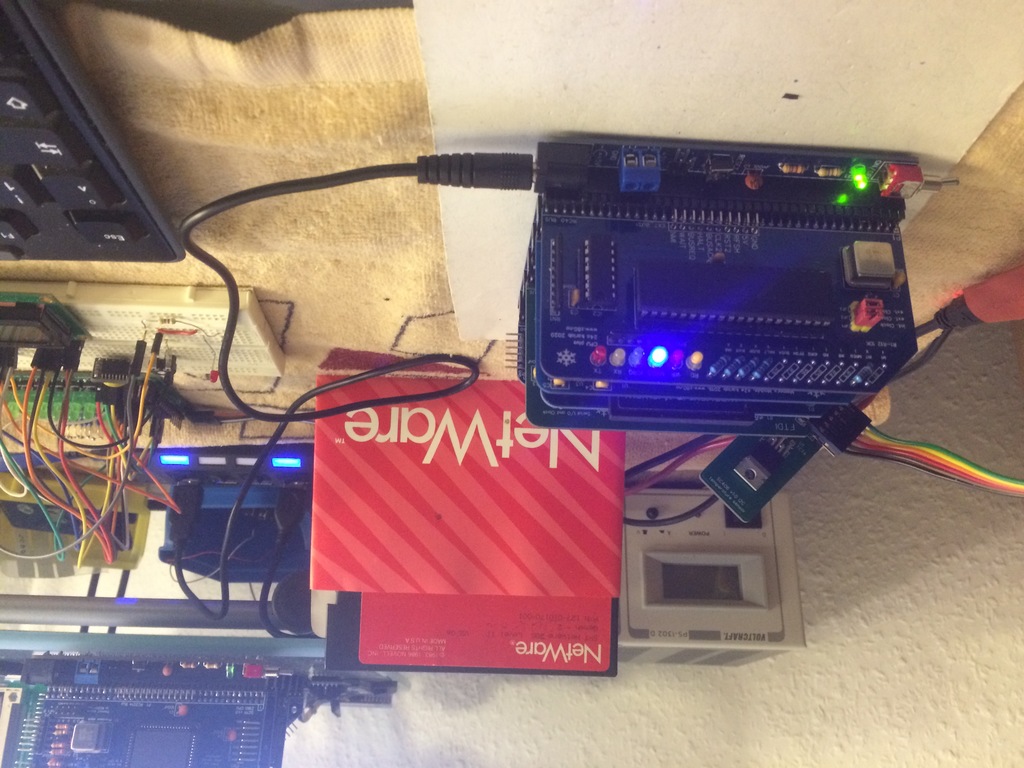

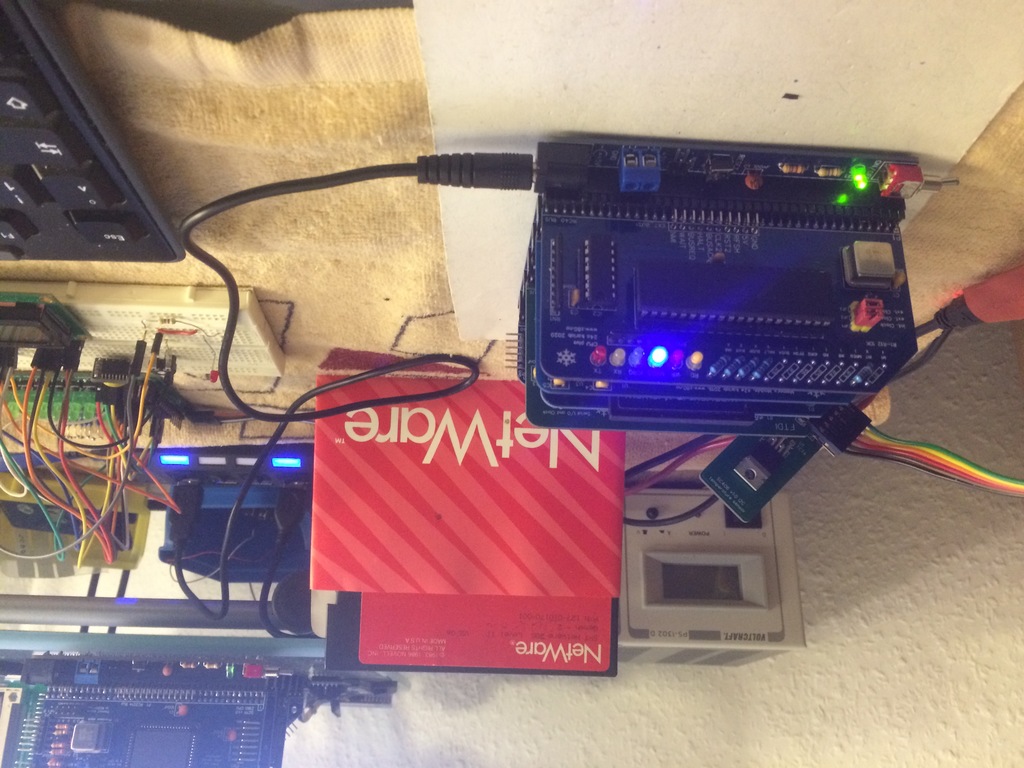

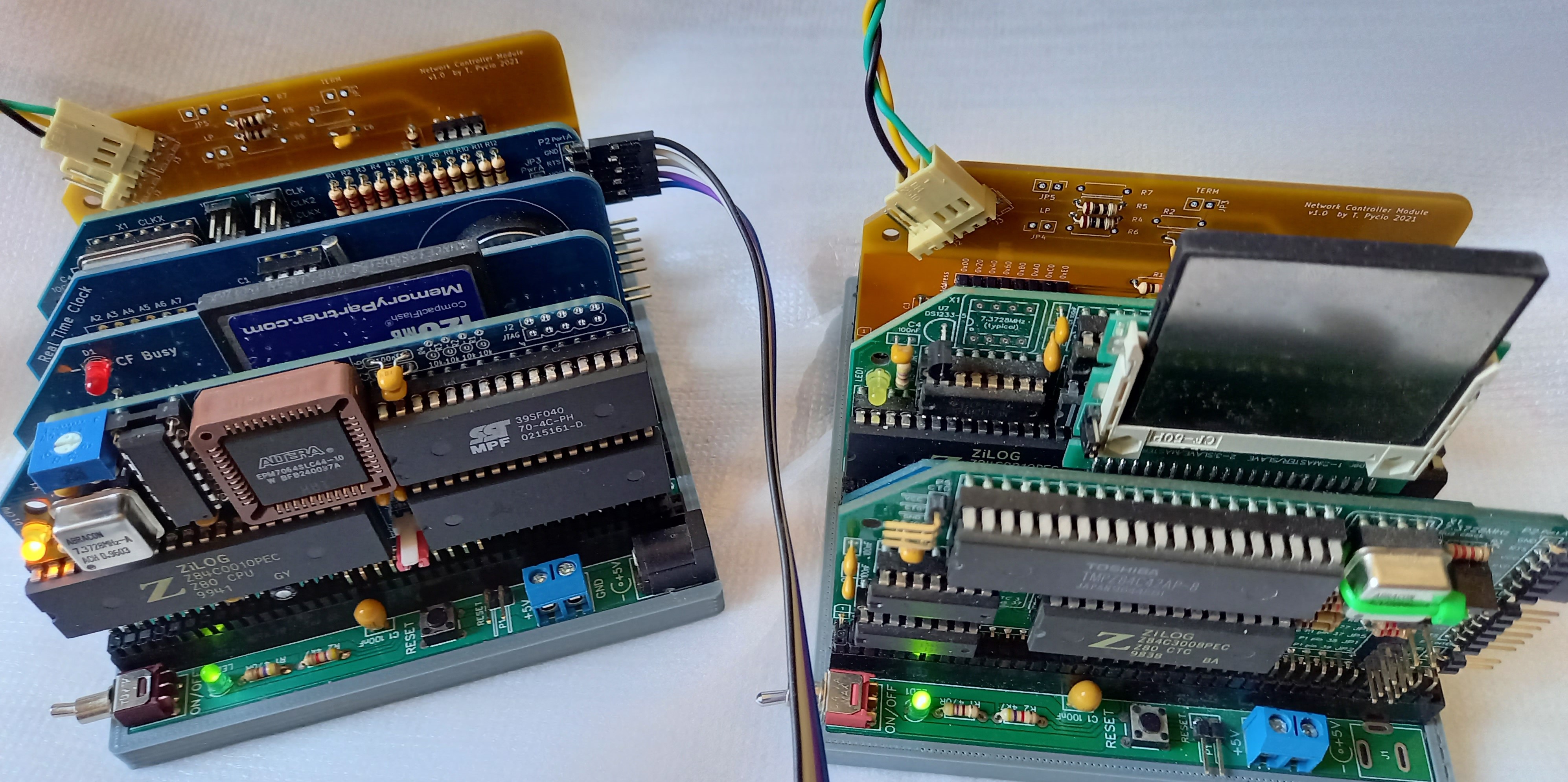

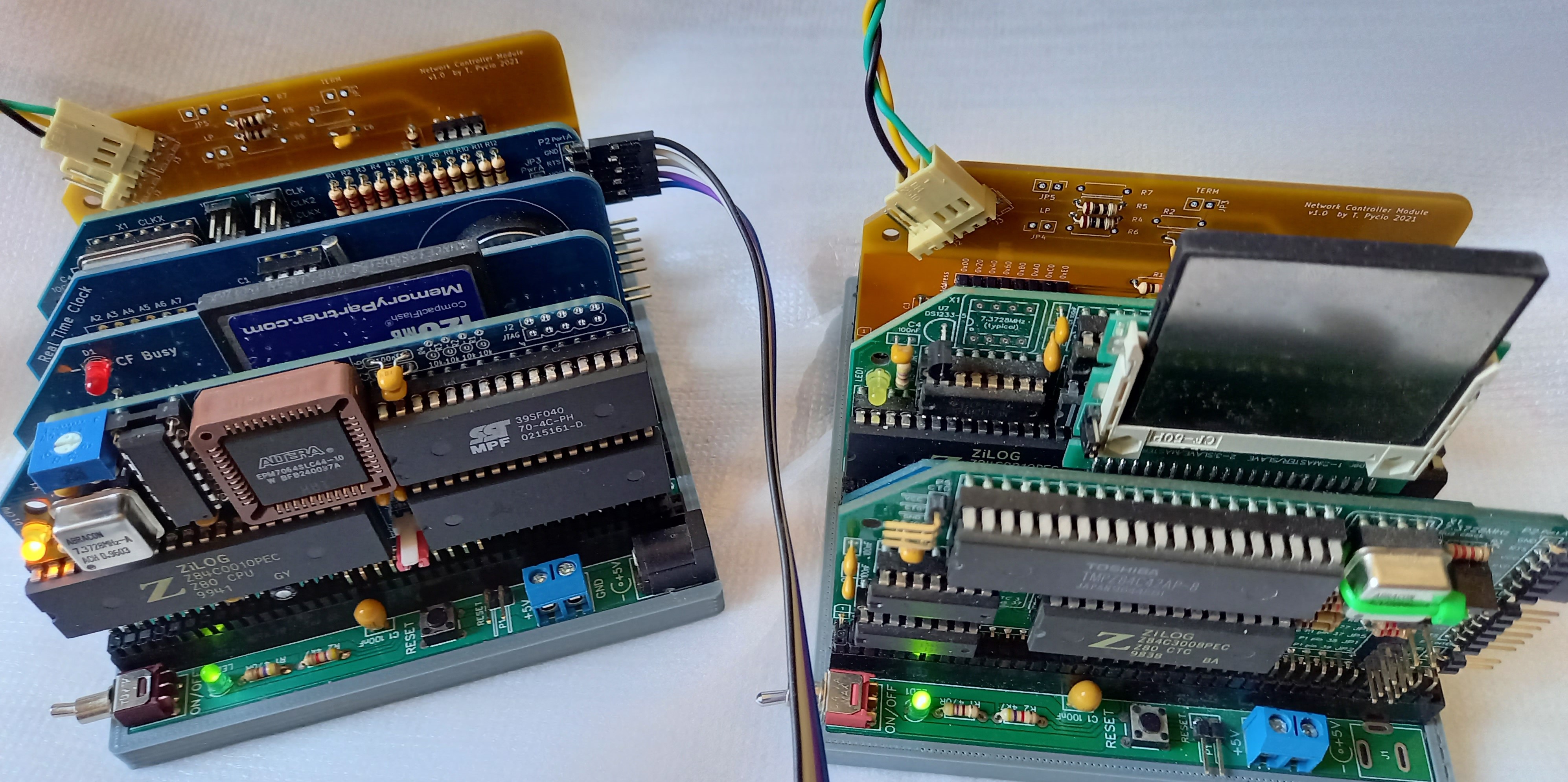

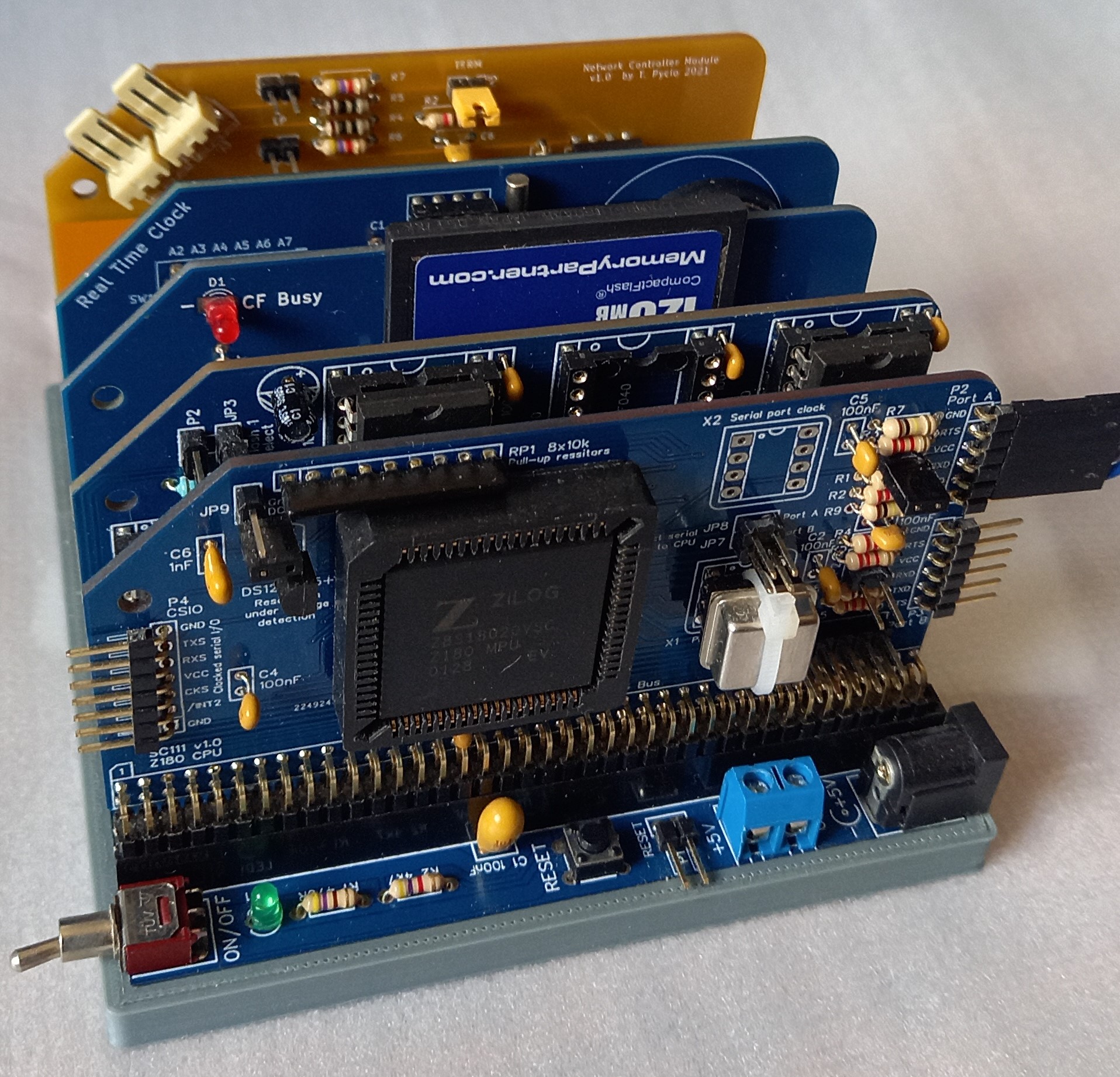

First prototype ready for testing.

Tadeusz Pycio

Jun 19, 2021, 7:52:06 AM

to retro-comp

My first network on Z80 ;-) It doesn't do much at the moment: it configures a unique Node ID and can send the token. Data transmission still has a long way to go. I can already see that an interrupt controller will be useful. For now I will use separate Z80 CTC modules, but the next iteration of this network module will include one.

Tadeusz Pycio

Sep 4, 2021, 10:18:33 AM

to retro-comp

Has any of you tried to write and run MP/M II on RC2014? Maybe you know a good starting point for me to take on this challenge (here I am thinking of the Z180, it seems the best candidate with the required peripherals). The closest thing I've found to an implementation is an MP/M project for the P112; maybe I missed something and someone has another suggestion. I would be grateful for a hint.

My intention is to run CP/NET, so there is a lot of work ahead of me here, and knowing a good path would help me a lot. Work on the requester CP/NOS 1.2 is coming to an end and I would like to tackle the other side.

Alan Cox

Sep 4, 2021, 9:09:31 PM

to Tadeusz Pycio, retro-comp

On Sat, 4 Sept 2021 at 15:18, Tadeusz Pycio <ta...@wp.pl> wrote:

Has any of you tried to write and run MP/M II on RC2014? Maybe you know a good starting point for me to take on this challenge (here I am thinking of the Z180, it seems the best candidate with the required peripherals). The closest thing I've found to an implementation is an MP/M project for the P112; maybe I missed something and someone has another suggestion. I would be grateful for a hint.

I played with it a bit - the biggest problem seems to be that you can't use ROMWBW underneath it because ROMWBW doesn't allow you to do disk I/O to arbitrary bank/address pairs and also doesn't let you hook the tail of the interrupt handler so you need to run native drivers.

The SC126 and similar are IMHO ideal for it though because the SPI will work with a W5500 Ethernet chip quite nicely providing you either don't use an SD card or add the extra glue to make it work with two devices. (You need reset as well but you can steal \RESET from various locations on the board and bus)

Alan

Tadeusz Pycio

Sep 5, 2021, 7:17:22 AM

to retro-comp

Hi Alan,

Did you use DRI sources and write XIOS yourself? Yes, I realize that I won't be able to use ROMWBW, although I will use it to boot MP/M. From the available descriptions of the P112 experience I conclude that the overhead of the OS itself is high and high performance of the storage subsystem is required, so I'm only considering CF Storage.

I know you are following a different path of implementing a physical network interface; in my case SPI will not matter much. My candidate for use as an MP/M based CP/NET server is this kit, as it has all the components required for its implementation.

Douglas Miller

Sep 8, 2021, 9:08:26 AM

to retro-comp

The issue for I/O and MP/M is that most hardware uses polled I/O and must actually use the CPU (software) to do the I/O transfers. This works fine for single-user/single-process systems, but is abysmal for multi-user/multi-process. Ideally, you'd have DMA, with completion interrupts, for I/O. I'm sure CF cards are much faster than any legacy storage I/O, but I don't believe it's actually instantaneous - plus you still consume CPU to do the actual data transfer.

Tadeusz Pycio

Sep 8, 2021, 11:24:10 AM

to retro-comp

Hi Douglas,

That's true, I will have a problem with the storage because using it in interrupt and DMA mode would require creating a completely new module. Maybe sometime in the future, certainly not now. I would like to make it to the 40th anniversary of MP/M II and CP/NET with what I have, but time will tell. The other I/O components will be in the interrupt chain (tick, serial console, network card). For now, I have decided to go the P112 route, adapting it to the realities of the RC2014 using the kit presented earlier as the example.

Alan Cox

Sep 8, 2021, 2:13:47 PM

to Douglas Miller, retro-comp

On Wed, 8 Sept 2021 at 14:08, Douglas Miller <durga...@gmail.com> wrote:

The issue for I/O and MP/M is that most hardware uses polled I/O and must actually use the CPU (software) to do the I/O transfers. This works fine for single-user/single-process systems, but is abysmal for multi-user/multi-process. Ideally, you'd have DMA, with completion interrupts, for I/O. I'm sure CF cards are much faster than any legacy storage I/O, but I don't believe it's actually instantaneous - plus you still consume CPU to do the actual data transfer.

People assume this but it turns out that

1. The MP/M file I/O subsystem is entirely single threaded

2. The MP/M and CP/M algorithms used don't scale to high speed large disks so you are totally CPU bound anyway

3. The memory bandwidth is totally saturated so even if you used DMA there is no CPU memory bandwidth unless you are going to run interleaved banked memory and cross bar switches

4. The CF adapter and even modern spinny rust have so much cache and speed that they are basically memory. In fact the CF transfers without DMA are the same as 1 wait state RAM

5. The I/O completion time is so fast that an IRQ actually occurs in fewer clocks than it's useful to do other work

6. Read performance is very very latency sensitive.

This was true by the late CP/M era (and is why CP/M 3 not only supports big sectors but knows how to do multi sector I/O requests direct into user memory), but even then was true to the point that you didn't cache because it was a loss.

In the PC world PC/IX used the BIOS disk drivers. People wrote fancy interrupt driven ones and got no performance change either.

The performance difference between the CF adapter and the 82C55 interface for raw copies is about a factor of 4-5. The actual result seen under CP/M is almost the same. Fuzix tries quite hard to do direct copies and efficient disk I/O algorithms. DMA to the CF adapter does give me a speed up - but in most cases not a huge one because the raw block transfer is now faster but it's totally memory bandwidth and CPU constrained, and there is no CPU bandwidth when the DMA engine is servicing the CF card.

The old mainframe world was different because the memory bandwidth of the system was higher than the CPU could consume and you had lots of work.

Alan

Douglas Miller

Sep 8, 2021, 4:38:10 PM

to retro-comp

Keep in mind that I/O throughput is only one dimension, and for a CP/NET server especially you need to take into account other factors. The right answer is not always what gives the fastest, or "fast enough", disk I/O. I'm not sure how much of the heavy lifting your network module does w.r.t. network protocol, but you need to consider the consequences of having the network neglected for the duration of disk I/O. The reason one might use DMA and interrupts here is to allow the CPU to keep the network from getting clogged up. Perhaps it is moot for your situation, but it is something that a network server in general needs to consider.

Tadeusz Pycio

Sep 23, 2021, 2:19:03 PM

to retro-comp

Hi All,

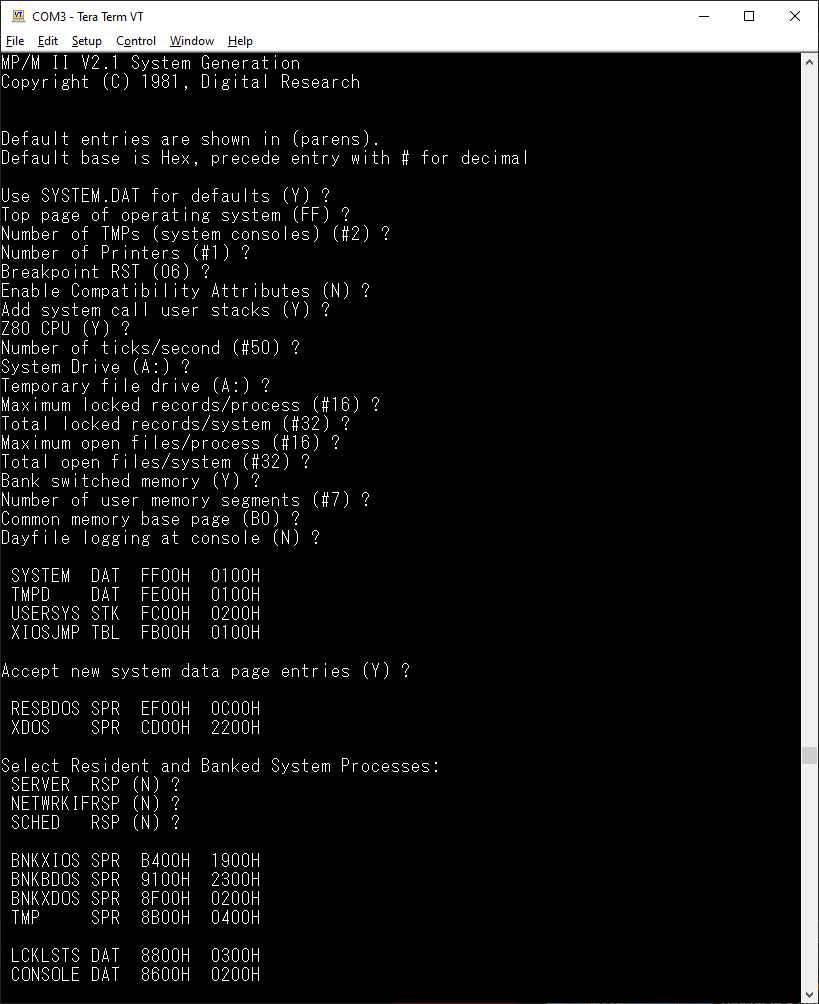

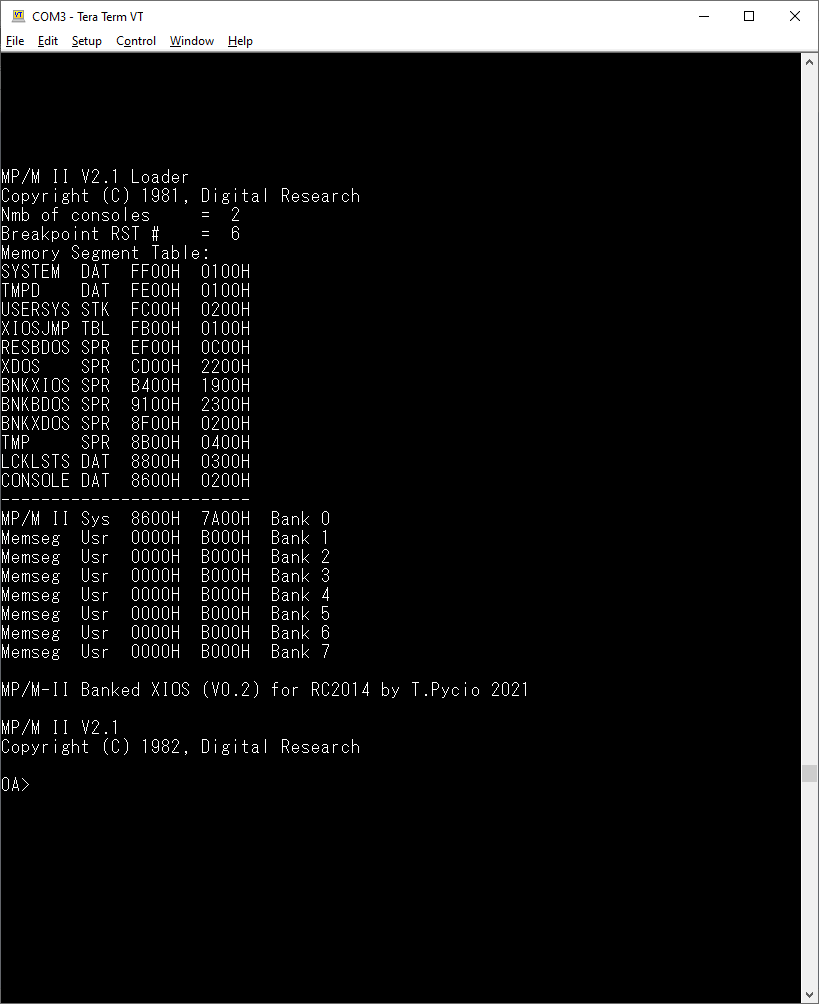

The CP/NET server is slowly being born. I'm currently at the early beta stage of MP/M, and I have a lot of tweaking ahead of me before I move on to adding the as yet untested NETWRKIF.SPR. For now, MP/M runs from the CP/M 2.2 environment; I'll leave direct boot from disk for dessert. The system is based on the DRI manuals, as the sources I found proved more challenging to understand than writing XIOS from scratch.

Tadeusz Pycio

Sep 28, 2021, 11:13:10 AM

to retro-comp

Hi All,

Another puzzle piece included. The ROM loader looks to be working. The routines in there initialize the hardware, check the COM20020 chip version, set the NodeID and wait for the server to be present (testing the appearance of the NodeID=01 token) and then load the CP/Net OS. Currently supports XR88C681 and Z80-SIO serial ports.
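
The loader sequence described above can be sketched roughly as follows. This is only a simulation of the order of steps: `FakeArcnetController` and all of its methods are hypothetical stand-ins, not the COM20020 register interface or the actual ROM code.

```python
class FakeArcnetController:
    """Hypothetical stand-in for the network chip (not the real register map)."""

    def __init__(self, server_appears_after: int):
        self.node_id = None
        self._polls_left = server_appears_after

    def chip_version(self) -> str:
        return "COM20020 (simulated)"

    def set_node_id(self, node_id: int) -> None:
        self.node_id = node_id

    def server_token_seen(self, server_id: int) -> bool:
        # Pretend the server's node ID shows up after a few polls.
        self._polls_left -= 1
        return self._polls_left <= 0


def boot(ctrl, my_id: int, server_id: int = 1, max_polls: int = 100) -> list[str]:
    """Run the loader steps in order and return a log of what happened."""
    log = [f"chip: {ctrl.chip_version()}"]
    ctrl.set_node_id(my_id)
    log.append(f"node id set to {my_id}")
    for poll in range(1, max_polls + 1):
        if ctrl.server_token_seen(server_id):
            log.append(f"server {server_id} present after {poll} polls")
            break
    else:
        raise TimeoutError("server never appeared on the network")
    log.append("load CP/NET OS")
    return log


for line in boot(FakeArcnetController(server_appears_after=3), my_id=2):
    print(line)
```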

Douglas Miller

Sep 28, 2021, 11:59:02 AM

to retro-comp

Very nice.

Tadeusz Pycio

Sep 28, 2021, 1:30:02 PM

to retro-comp

Hi Douglas,

For now it just looks nice; only the environment it will run on is built. The requester has only CPBIOS running and the server only MP/M; I still have to write CPNIOS for the requester and NETWRKIF for the server. It will be nice only when I can see the prompt on the client and run a program from the server disk. The work is progressing slowly because I have to modify the client and server sources at the same time. Such are the charms of working on a computer network; you know something about it.

Douglas Miller

Sep 28, 2021, 4:56:00 PM

to retro-comp

I believe, back in 1983, I used a pair of clients (requesters) and direct client-to-client messages to test out the SNIOS (NDOS functions 66, 67 or direct SNIOS calls), then I had a known-good set of routines to use in the NETWRKIF (that network included printer server nodes, so those could be used to test CP/NET clients as well). These days, I'd probably use a simulator and have a "cheater" server for testing purposes until the clients worked, then add a simulated server.

Doing both in tandem, on real hardware, is certainly more difficult.

Douglas Miller

Sep 28, 2021, 4:59:50 PM

to retro-comp

Oh, one note: you seem to be using Node ID 01 as the server... if this number is the CP/NET node ID you might consider using 00 as the server - CP/NET commands assume node 00 when not specified, so it's less typing.

Tadeusz Pycio

Sep 28, 2021, 6:25:39 PM

to retro-comp

You're right. The node naming can be misleading. I use (CP/Net NodeID)=(Arcnet NodeID)-1, because Arcnet NodeID=0 is used for broadcast and cannot be used to specify a device.
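
A minimal sketch of that mapping, for illustration; the function names are made up here and do not come from the actual loader or SNIOS code.

```python
ARCNET_BROADCAST_ID = 0  # ARCNET reserves node ID 0 for broadcast


def arcnet_to_cpnet(arcnet_id: int) -> int:
    """Map an ARCNET node ID (1..255) to a CP/NET node ID (0..254)."""
    if not 1 <= arcnet_id <= 255:
        raise ValueError("ARCNET node IDs for devices run from 1 to 255")
    return arcnet_id - 1


def cpnet_to_arcnet(cpnet_id: int) -> int:
    """Map a CP/NET node ID (0..254) back to an ARCNET node ID (1..255)."""
    if not 0 <= cpnet_id <= 254:
        raise ValueError("CP/NET node ID out of range for this mapping")
    return cpnet_id + 1


# The server sits at ARCNET node 1, so CP/NET commands see it as node 0.
print(arcnet_to_cpnet(1), cpnet_to_arcnet(0))
```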

Tadeusz Pycio

Oct 16, 2021, 6:53:00 PM

to retro-comp

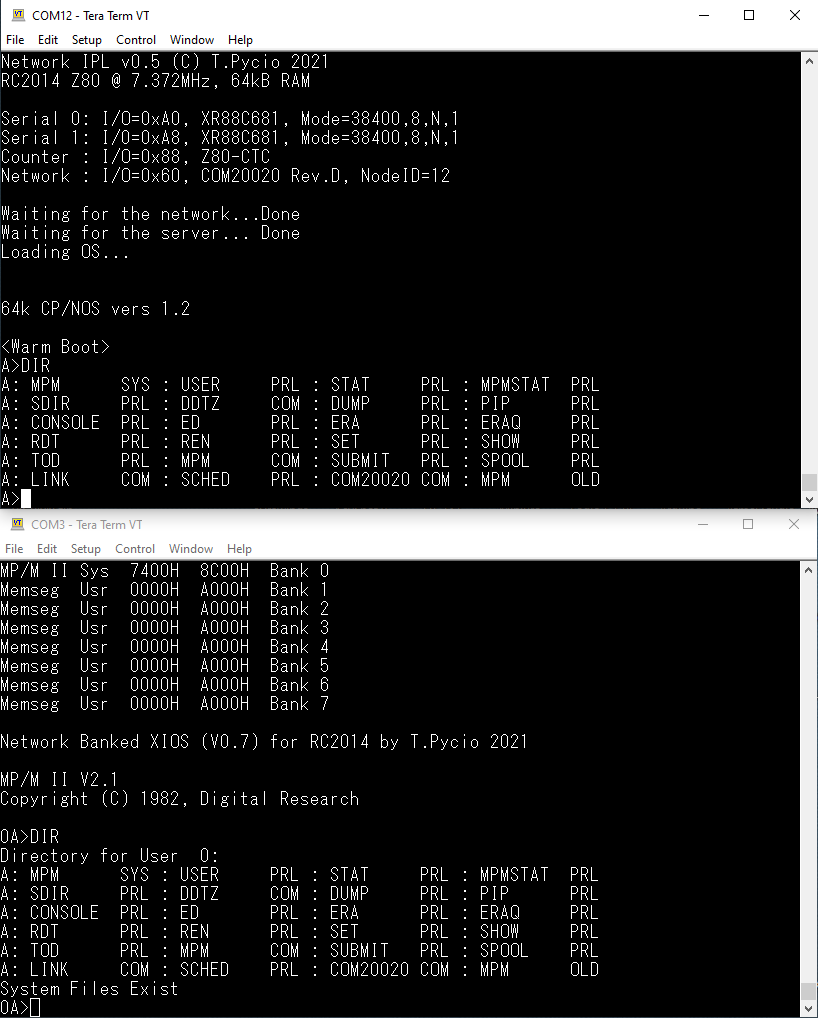

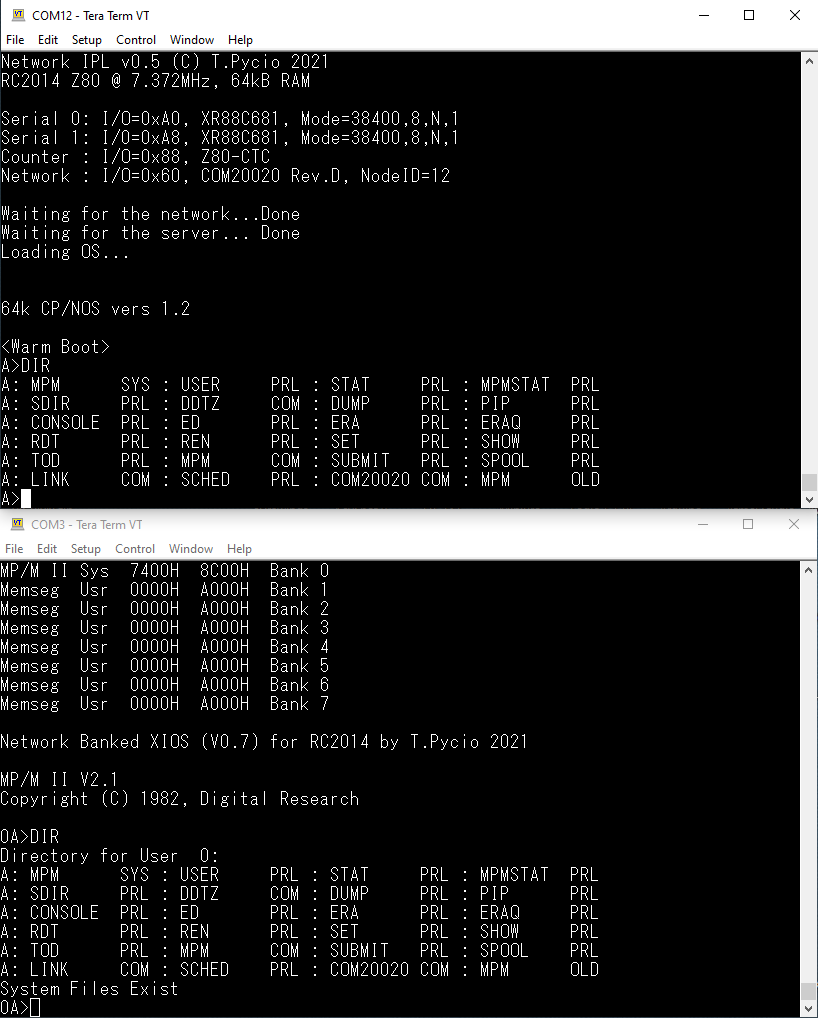

The CP/NET network is up and running! Once I have completed testing with one client and have succeeded with several, I will begin the second phase: optimization.

rwd...@gmail.com

Oct 17, 2021, 3:39:34 AM

to retro-comp

I would like to draw your attention to the MP/M support in the PLI-80 (v1.4) compiler, should you wish to do any program development.

Richard

Tadeusz Pycio

Oct 17, 2021, 6:12:39 AM

to retro-comp

Conclusions:

- Requester does not require interrupts, the sending and receiving process is single-threaded and takes place in a polling loop.

- Server MP/M is a more complicated process: receiving is done on an interrupt that sets a receive flag, which wakes up the receiving process. I use two pages of the hardware Arcnet controller so I can receive two frames. The modified SERVER.RSP uses one output queue, and the transmit process can be paused until the previous frame is sent (via polling). I don't know whether I need a semaphore in this case to resolve possible contention for the transmitter. With a single queue, this probably shouldn't happen. I need to read more.

- CF is efficient; there is no need to hook it up to the interrupt system, polling is enough.

- When a single requester is attached, the network file system is not much slower than directly attached storage.
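
The server-side receive path from the list above (interrupt stores a frame and sets a flag, a process then drains up to two controller pages) might be modelled like this. It is a toy simulation in Python, not MP/M code; the class and method names are invented for the sketch.

```python
from collections import deque


class TwoPageReceiver:
    """Toy model: two controller pages filled by an 'interrupt', drained by a process."""

    PAGES = 2

    def __init__(self):
        self.pages = [None] * self.PAGES  # frames still sitting in controller RAM
        self.rx_flag = False              # set by the ISR, cleared by the process
        self.refused = 0                  # frames that found no free page

    def on_interrupt(self, frame: bytes) -> bool:
        """ISR side: park the frame in a free page and raise the receive flag."""
        for i, held in enumerate(self.pages):
            if held is None:
                self.pages[i] = frame
                self.rx_flag = True
                return True
        self.refused += 1                 # both pages busy: the network would stall
        return False

    def receive_process(self, inbox: deque) -> int:
        """Process side: copy every held frame into system RAM and free the pages."""
        moved = 0
        for i, held in enumerate(self.pages):
            if held is not None:
                inbox.append(held)
                self.pages[i] = None
                moved += 1
        self.rx_flag = False
        return moved


rx, inbox = TwoPageReceiver(), deque()
for frame in (b"A", b"B", b"C"):          # third frame arrives before any drain
    rx.on_interrupt(frame)
rx.receive_process(inbox)
print(list(inbox), rx.refused)
```

The third frame is refused in this run, which is the situation that draining the pages promptly is meant to avoid.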

Douglas Miller

Oct 17, 2021, 9:38:40 AM

to retro-comp

Excellent progress.

As I understand that network controller chip, there are a limited number of pages (buffers) available for receive and transmit. If you plan to support an arbitrary number of requesters, you'll want to offload received messages promptly from the chip into MP/M RAM, otherwise the network itself will stall. The net effect may be the same (requesters don't get their responses), but I think it is better to have things waiting in MP/M on semaphores rather than relying on the network chips to stall traffic. This is the general philosophy for modern network stacks, from what I've seen. The CP/NET server does have the concept of a maximum number of requesters (that can be logged in), so it's pretty easy to keep things under control that way as well - although having more physical requester nodes than the maximum allowed to log in can create more network stress if those "odd man out" requesters keep trying to log in.

I'm not sure what your other plans are for the MP/M machine, but I would suspect you want to avoid running applications there, except for a process to use for administrative purposes. Of course, all these guidelines I'm remembering are from 1983 when a Z80 ran at 4MHz, but a fast processor isn't a blank check to make the code less performant (unless you live on the East side of Lake Washington).

Tadeusz Pycio

Oct 20, 2021, 3:23:45 AM

to retro-comp

Hi Douglas,

Thanks. I have an idea that would solve the problem of PC - Arcnet CP/Net connection in the form of a bridge built on the RC2014 computer or a standalone design using e.g. AVR. You can use currently existing server software on PC for communication. My only concern is that the serial transmission may be too slow. This will probably be my next challenge after I deal with the current one.

Tadeusz Pycio

Oct 20, 2021, 4:34:34 PM

to retro-comp

Douglas Miller

Oct 23, 2021, 3:56:31 PM

to retro-comp

Looking good!

Congrats at arriving in the "Network Age"! ;-)

Douglas Miller

Oct 23, 2021, 4:07:59 PM

to retro-comp

Speaking of an Arcnet-to-PC bridge, one possibility is to add a WizNET module to the server (e.g. MT011 https://github.com/markt4311/MT011) and connect to the PC (or any modern network) via Ethernet. It's a bit heavy-weight (code is simple, though), but does give you the best speed and ease of connectivity. We've got CP/NET code examples for accessing the WizNET buried in https://github.com/durgadas311/cpnet-z80.git, if you are interested in that route. The PC side just uses standard TCP/IP sockets (there's also a JAVA CP/NET server in that repo).

Douglas Miller

Oct 23, 2021, 4:09:36 PM

to retro-comp

Oh, and there's also a JAVA Serial server in that repo, although you've already mentioned the down-side of using serial.

Tadeusz Pycio

Oct 23, 2021, 4:25:08 PM

to retro-comp

Speaking of an Arcnet-to-PC bridge, one possibility is to add a WizNET module to the server

I'm afraid I'm not up to such a challenge; using two network interfaces under MP/M may be too difficult. A multithreaded bridge would have to be written, and modifying SERVER.RSP would require disassembly, as I haven't found its source code. Interesting, maybe I'll try it when I have more free time. I guess the only simple solution is to build such a bridge on some modern microcontroller with dedicated software.

Douglas Miller

Oct 23, 2021, 4:36:37 PM

to retro-comp

SERVER.RSP is pretty simple, I'll disassemble it since no one seems to have the source code.

I'm not sure the bridge code is even as complex (which is simple) as SERVER.RSP, you basically don't have to un-pack the CP/NET messages at all, you just forward them to the PC (and the reverse for responses). It does require a modicum of MP/M programming, but mostly just working off examples already available, I think.
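
To illustrate forwarding without unpacking: a bridge only has to find message boundaries. The sketch below assumes the usual five-byte CP/NET header (FMT, DID, SID, FNC, SIZ, with SIZ holding the data length minus one) and a plain byte stream on the PC side; the wire format actually used by the WizNET code in cpnet-z80 may differ, and the function names are invented here.

```python
HEADER_LEN = 5  # FMT, DID, SID, FNC, SIZ (assumed CP/NET header layout)


def split_messages(stream: bytes) -> tuple[list[bytes], bytes]:
    """Cut a byte stream into whole CP/NET messages; return (messages, leftover)."""
    messages = []
    pos = 0
    while len(stream) - pos >= HEADER_LEN:
        siz = stream[pos + 4]
        total = HEADER_LEN + siz + 1      # SIZ is the data length minus one
        if len(stream) - pos < total:
            break                         # message not complete yet
        messages.append(stream[pos:pos + total])
        pos += total
    return messages, stream[pos:]


def bridge(stream: bytes, forward) -> bytes:
    """Pass every complete message to forward() untouched; keep the remainder."""
    messages, leftover = split_messages(stream)
    for message in messages:
        forward(message)                  # payload is never inspected
    return leftover


sent = []
rest = bridge(bytes([0, 0, 2, 15, 1, 0xAA, 0xBB, 1, 2]), sent.append)
print(len(sent), len(rest))
```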

But, it is a measure of extra work, and for now you should thoroughly enjoy what you've accomplished!

Tadeusz Pycio

Oct 23, 2021, 4:39:59 PM

to retro-comp

I've thought about it for a while and it might work after all. I'll add an MT011 to the next PCB production order. I don't need packet analysis for the bridge, only translation. I accept the challenge ;-)

Douglas Miller

Oct 24, 2021, 2:43:41 PM

to retro-comp

I've got an initial disassembly of SERVER.RSP. It is stored at https://github.com/durgadas311/cpnet-z80/blob/master/dist/mpm/server.asm

Note, this file is in "unix" mode - LF-only line endings. It does assemble/link (RMAC/LINK) back into an RSP that is identical to the original.

Tadeusz Pycio

Oct 24, 2021, 4:39:38 PM

to retro-comp

You've gone fast! I took a step back. I found a bug that causes MP/M to hang (sic!) when the requester uses the disk intensively. I will, however, implement a semaphore for the sending process.

Douglas Miller

Oct 24, 2021, 8:16:30 PM

to retro-comp

Had a thought, not sure how you've designed your NetWrkIF module but something to consider is that the DRI "reference" implementation that they provided revolves around an RS232 "star" topology network, and there it makes sense to have a separate NetWrkIF process for each serial port (requester). But, in the case of bus topologies, like ArcNet or Ethernet, you really only want/need one process per adapter - so one process on the server - receiving and sending messages. Basically, the idea is to have one NetWrkIF process per *network interface*, and for ArcNet you have only one interface. You still want one SERVER process per requester. You have to come up with a way for distributing incoming messages to the correct SERVER process, based on the source node ID. You could probably make do with a single message queue for outgoing (response) messages, although I'm not sure that the default SERVER.RSP will do that. Both NetWrkIF and SERVER share the "server config table", which includes all the information about logged in requesters, their associated SERVER process, and any free logins/processes available. You might have to do more of the login procedure in NetWrkIF rather than SERVER.

Anyway, some design considerations.
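
A rough sketch of the distribution step, assuming the usual five-byte CP/NET header (FMT, DID, SID, FNC, SIZ). The `Dispatcher` class is purely illustrative; it is not how NETWRKIF or the server config table is actually laid out.

```python
from collections import deque
from typing import NamedTuple


class CpnetHeader(NamedTuple):
    fmt: int  # format code
    did: int  # destination node ID
    sid: int  # source node ID
    fnc: int  # function code
    siz: int  # data length minus one


def parse_header(msg: bytes) -> CpnetHeader:
    if len(msg) < 5:
        raise ValueError("message shorter than a CP/NET header")
    return CpnetHeader(*msg[:5])


class Dispatcher:
    """One receive path for the interface, one queue per requester (by SID)."""

    def __init__(self, max_requesters: int):
        self.max_requesters = max_requesters
        self.queues: dict[int, deque] = {}

    def dispatch(self, msg: bytes) -> bool:
        hdr = parse_header(msg)
        inbox = self.queues.get(hdr.sid)
        if inbox is None:
            if len(self.queues) >= self.max_requesters:
                return False              # no free slot for a new requester
            inbox = self.queues[hdr.sid] = deque()
        inbox.append(msg)
        return True


d = Dispatcher(max_requesters=2)
for sid in (5, 7, 5, 9):
    print(sid, d.dispatch(bytes([0, 0, sid, 15, 0, 0])))
```

In this run the fourth message (from node 9) is turned away because both slots are taken, which mirrors the "maximum number of requesters" limit mentioned earlier in the thread.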

Tadeusz Pycio

Oct 25, 2021, 2:09:31 AM

to retro-comp

Yes, you are right, I believe SERVER.RSP was designed for slow serial transmissions and that is why each requester has its own separate process and queues. In my implementation I followed the ULCIF.ASM example, where each requester has its own process and input queue, and one common output queue. SERVER.RSP was modified as described in application note #01. I suspect that the change to one process and one queue for input and output operations for all requesters involves a more serious modification to the SERVER.RSP module. Currently I'm trying to find the reason for the server's behavior, because the same application, which uses the disk intensively, works fine on the MP/M console. Maybe I made a mistake somewhere.

Douglas Miller

Oct 25, 2021, 12:02:41 PM

to retro-comp

Ah, I hadn't looked at ULCIF.ASM before, that's what I was envisioning. Although, I wonder if you really need/want two processes (in and out) or just one. Make sure that the in and out processes mutex properly, so that only one can access the hardware at the same time. If the hardware access is quick enough (I think it should be), it may make sense to just use DI/EI around the critical access to the ArcNet chip to prevent from being preempted.
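
The mutual-exclusion idea can be shown with two threads standing in for the in and out processes. This is a Python analogy only; on MP/M the same role would be played by a mutex queue or a short DI/EI section, and the names below are invented.

```python
import threading


class SharedController:
    """Stand-in for the ArcNet chip: only one process may touch it at a time."""

    def __init__(self):
        self._lock = threading.Lock()
        self.log = []

    def access(self, who: str, steps: int) -> None:
        with self._lock:                  # critical section around the hardware
            for step in range(steps):
                self.log.append((who, step))


def run_both(steps: int = 1000) -> list:
    ctrl = SharedController()
    threads = [
        threading.Thread(target=ctrl.access, args=(name, steps))
        for name in ("rx", "tx")
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return ctrl.log


log = run_both()
# Each process's accesses form one uninterrupted run in the log.
print(log[0][0], log[-1][0])
```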

Douglas Miller

Oct 27, 2021, 7:34:55 PM

to retro-comp

I found a bug in SERVER.RSP, although I'm not sure whether you'd ever hit it.

During CP/NET LOGIN, if the maximum number of requesters is logged in (this one is MAX+1), then the error path through the login code will leave interrupts disabled. I don't know how long before MP/M forces interrupts back on, if at all, or whether this could cause a hang.

This would only be a problem if you were operating at/near the maximum number of requesters (and might be attempting to exceed it).
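
As an illustration of this class of bug (not the actual SERVER.RSP code), here is a sketch in which every exit from the critical section, including the "table full" error path, re-enables interrupts. The `Cpu` class and `login` function are hypothetical.

```python
from contextlib import contextmanager


class Cpu:
    """Hypothetical CPU model with a single interrupt-enable flag."""

    def __init__(self):
        self.interrupts_enabled = True


@contextmanager
def interrupts_disabled(cpu: Cpu):
    cpu.interrupts_enabled = False        # DI
    try:
        yield
    finally:
        cpu.interrupts_enabled = True     # EI on every exit path


def login(cpu: Cpu, logged_in: set, requester: int, max_requesters: int) -> bool:
    with interrupts_disabled(cpu):
        if requester in logged_in:
            return True
        if len(logged_in) >= max_requesters:
            return False                  # error path: interrupts still restored
        logged_in.add(requester)
        return True


cpu, table = Cpu(), set()
results = [login(cpu, table, node, max_requesters=2) for node in (1, 2, 3)]
print(results, cpu.interrupts_enabled)
```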

Tadeusz Pycio

Oct 28, 2021, 3:11:01 AM

to retro-comp

I am impressed with the speed at which you analyze this code. Interesting: I thought that checking LOGIN and LOGOFF in the netwrkif module was needed only for my own purposes, and it turns out that is not the only reason. My work is progressing slowly as I can only devote an hour a day; hopefully the weekend will let me work more efficiently. I found another puzzle: using a mutex for the output process gives unexpected results with READ SEQUENTIAL messages. I'm still hoping I'm the one making the mistake, and not fighting a hidden SERVER.RSP bug.

Douglas Miller

Oct 28, 2021, 7:30:09 AM

to retro-comp

Correct, in your single-process (actually two) NetWrkIF case, the NetWrkIF needs to essentially handle the login because it must choose a server queue/process before it can distribute the message. It doesn't technically login the requester, but it does locate a free spot in the login table so that it can distribute the message. I've not thoroughly analyzed this code path to ensure there are no issues, but I generally trust that DRI thought this case through. There could be some race conditions, though.

Douglas Miller

Oct 30, 2021, 8:58:17 PM

to retro-comp

FYI, in case it is of interest, I have started working on CP/NET MP/M server files for a modular system. The goal is to abstract the network away from the NETWRKIF and SERVER modules, and optimize them for the "multi-drop" or "bus" topology, like WizNET Ethernet or Arcnet. I have not tested these, nor integrated them into the normal CP/NET builds yet, but am getting ready to test them. I'll need to dig my SC203 from storage and get MP/M running on it, if not already available. This system has an MT011 with WizNET and SDCard attached, so won't work with CF-based systems. Worst case, I'll have to write a BIOS/XIOS, but I'd rather not. This version of NETWRKIF uses a single process to avoid mutex issues with the hardware, and messages are spawned off to server processes for each requester.

Anyway, code may be viewed at https://github.com/durgadas311/cpnet-z80/tree/mpm-dev/mpm. I chose to go the banked/resident way, so there are two halves of each NETWRKIF and SERVER. Plus the NIOS for WizNET (linked with banked NETWRKIF). Theoretically, an NIOS could be written for the Arcnet controller as well. It may be beneficial to add the (client) SNIOS for Arcnet as well, allowing the build of packages for that.

Tadeusz Pycio

Oct 31, 2021, 3:45:44 AM10/31/21

to retro-comp

This is great news! You have taken an interesting route with the banked network modules. If you need the MP/M sources, please send me an e-mail and I'll make what I have available; I haven't made them public yet as they are not ready for that. It seems to me that most of your work will go into writing the SD support, as the rest is already done. You will have to begin with the MP/M loader, which needs a simplified CP/M BIOS, so you have to write that code.

Tadeusz Pycio

Nov 15, 2021, 6:25:37 PM11/15/21

to retro-comp

I have probably solved the problem of the MP/M system randomly crashing under a heavy load of disk operations from CP/NET requesters. The cause was the use of the interrupt system, which the OS disables quite often, and this probably caused the conflict. I now poll for incoming messages instead. I will be observing system performance after this change.
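The polling approach can be sketched roughly as follows. This is a Python illustration only, not the actual Z80 code: `FakeNic`, `rx_ready`, `read_message`, and `poll_once` are hypothetical names, and the queue stands in for the controller's receive buffer.

```python
from collections import deque


class FakeNic:
    """Hypothetical stand-in for the network controller's receive side."""

    def __init__(self):
        self.rx = deque()

    def rx_ready(self) -> bool:
        # On real hardware this would be a read of a status register.
        return bool(self.rx)

    def read_message(self) -> bytes:
        return self.rx.popleft()


def poll_once(nic: FakeNic, handle) -> int:
    """Check the receive flag and drain any pending messages.

    Called periodically instead of from an interrupt handler, so it
    never runs while the OS has interrupts disabled.
    Returns the number of messages handled.
    """
    handled = 0
    while nic.rx_ready():
        handle(nic.read_message())
        handled += 1
    return handled
```

The trade-off is latency: a message waits until the next poll, but nothing depends on interrupts being enabled at the moment it arrives.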

Douglas Miller

Dec 3, 2021, 6:29:51 PM12/3/21

to retro-comp

FYI, I have a running MP/M CP/NET Server based on a new design. I am running on Heathkit H89 simulators, with Z180 CPU and WizNET network adapters. This design uses one process for network receive, N processes for requester-servers, and uses a mutex to avoid collisions in the NIOS. It reduces the number of processes and message queues used. The NIOS module (similar, but not identical, to SNIOS) is the only thing that should require changing for different network adapters. I'm in the process of merging it into the mainstream cpnet-z80 project, but for now the files are on the branch at https://github.com/durgadas311/cpnet-z80/tree/mpm-dev/mpm. Only the files resntsrv.asm, bnkntsrv.asm, ntwrkrcv.asm, servers.asm, nios.asm are used (as implied by the Makefile) to produce resident and banked RSP modules ("NETSERVR"). This RSP starts up in a holding mode to allow for configuration of the network chip after boot. When SRVSTART.COM is run, it releases the RSP, which then completes network initialization and is ready for requesters. I'm working on adding the ability to shut down the RSP (and network) - with restart - as well.
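The process layout described here (one receiver, N requester-servers, a mutex around the NIOS) can be modeled loosely in Python. This is only a sketch under stated assumptions: threads stand in for MP/M processes, `queue.Queue` for MP/M message queues, a `threading.Lock` for the mutex, and upper-casing the payload stands in for servicing a request. None of the names come from the cpnet-z80 sources.

```python
import queue
import threading


def run_server(incoming, requester_ids):
    """Route each (requester, payload) message to that requester's server."""
    nios_lock = threading.Lock()  # stands in for the NIOS mutex
    sent = []                     # stands in for the network wire
    inboxes = {r: queue.Queue() for r in requester_ids}

    def nios_send(message):
        # Only one process may touch the network hardware at a time.
        with nios_lock:
            sent.append(message)

    def server(inbox):
        # One of these runs per requester.
        while True:
            message = inbox.get()
            if message is None:  # shutdown marker
                break
            requester, payload = message
            nios_send((requester, payload.upper()))

    def receiver():
        # Single receive process: distribute messages, then stop the servers.
        for requester, payload in incoming:
            inboxes[requester].put((requester, payload))
        for inbox in inboxes.values():
            inbox.put(None)

    workers = [threading.Thread(target=server, args=(inboxes[r],)) for r in requester_ids]
    for worker in workers:
        worker.start()
    rx = threading.Thread(target=receiver)
    rx.start()
    rx.join()
    for worker in workers:
        worker.join()
    return sorted(sent)
```

Replies from different requesters may interleave in any order, which is why the sketch sorts them before returning; within one requester the order is preserved by its queue.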

My next step will be to get the MP/M running on an RC2014 and test the server code on a "real" WizNET.

Tadeusz: If you like, I can merge your SNIOS into the cpnet-z80 project, and look into creating an NIOS. This might provide some handy packaging options as the build creates all the CP/NET 1.2 "distro" files, as well as (soon) MP/M Server files. It also may open the door to a CP/M 3 CP/NET client, as I have that working - provided the server supports CP/M 3 requests (NETSERVR does not... yet).

Tadeusz Pycio

Dec 3, 2021, 6:54:58 PM12/3/21

to retro-comp

Hi Douglas,

I see your work is progressing quickly; I haven't done anything lately because I haven't had time for it. Is holding the network process at startup motivated by the desire to make it universal? I initialized the network card through SystemInit in the XIOS module. I definitely need to look into what you did.

Douglas Miller

Dec 3, 2021, 8:00:04 PM12/3/21

to retro-comp

The WizNET (ethernet) module requires initialization, in this case from NVRAM. Rather than put a lot of code (required to initialize it) into the RSP, I opted for using an existing COM file and thought it might be a useful feature to not start CP/NET immediately - but maybe not. It's easy enough to by-pass. I did not put the network code in the XIOS as I wanted MP/M to be independent of the CP/NET Server application. So, I modeled it more like CP/NET clients with an interchangeable NIOS.

That does make device polling more complicated, although currently I just delay and check in the NetServr code (similar to ULCI code). My XIOS allows for external RSPs to plug-in to the poll mechanism, so I've thought about adding that later. I take the general stance that running a CP/NET Server generally excludes other uses for MP/M, which is supported by the large amount of resources consumed by the CP/NET Server. So, I wanted the MP/M implementation to not be dependent on CP/NET.

In my case, the WizNET module is on the same SPI adapter as the SDCard (disk), and so I use MXDisk to mutex the network code as well (must prevent a disk operation from being interrupted and dispatching to a network operation, and vice versa). This is not required if the network and disk hardware are independent, so it is a compile option. Although I don't think it particularly hurts anything. Polling (XIOS poll-device) is also an option.

I'm currently running with max requesters set to 7. For the WizNET, this is a hardware limit since it has a total of 8 sockets (and I reserve one to receive messages after the maximum requesters have logged in). Because the RSP is split into resident/banked parts, the number of requesters supported has a minimal effect on common memory (only process descriptors).

The server currently requires login, but I'm considering an option to compile-out that requirement.

Another thing I'm looking into is support for restricting access to certain drives. Right now, there's nothing to prevent a client from mapping to MP/M drive A: and wiping out everything.

Douglas Miller

Dec 19, 2021, 4:10:20 PM12/19/21

to retro-comp

I've made some progress on my MP/M Server, latest updates are in the github repo (https://github.com/durgadas311/cpnet-z80). This includes a way to prevent CP/NET clients from writing to server drive A: (system drive), so that a client can no longer wipe out the MP/M installation. This does require that the XIOS check certain bits in the process descriptor, and return an error from the WRITE routine if the process has protected A:.
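The kind of check this implies in the XIOS WRITE path might look like the following Python sketch. The flag bit `PROTECT_A`, the return codes, and the function signature are all assumptions for illustration; the actual bit assignment in the process descriptor is not given here.

```python
PROTECT_A = 0x01   # hypothetical flag bit in the process descriptor
DRIVE_A = 0        # drive numbering assumed: 0 = A:
WRITE_OK = 0       # assumed "success" return code
WRITE_ERROR = 1    # assumed "error" return code


def xios_write(pd_flags: int, drive: int, do_write) -> int:
    """Refuse writes to A: when the calling process has it protected."""
    if drive == DRIVE_A and (pd_flags & PROTECT_A):
        return WRITE_ERROR  # the sector is never written
    do_write()
    return WRITE_OK
```

Only the combination of drive A: and the flag being set is rejected; the same process can still write to other drives, and unflagged processes can still write to A:.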

It still does not use device polling, but that requires non-standard interfaces in the XIOS to add hooks to the device poll table/routine. I suppose it could do some sort of daisy-chain modification of the XIOS JMP table to insert itself in the call (should work on any XIOS), but I'm not yet keen on that idea.
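The daisy-chain idea can be illustrated in Python as replacing one entry of a jump table with a wrapper that handles its own case and otherwise falls through to the original entry. The table layout, the `POLLDEVICE` index, the device number, and the return values below are hypothetical and only serve the example.

```python
POLLDEVICE = 0   # hypothetical index of the poll entry in the table
NET_DEVICE = 9   # hypothetical poll-device number claimed by the server


def chain_entry(jmp_table, index, handler):
    """Insert handler in front of the existing table entry.

    handler returns None for calls it does not own, in which case the
    previous entry is called, so the original XIOS behavior is kept.
    Returns the previous entry so the hook could be removed later.
    """
    previous = jmp_table[index]

    def chained(*args):
        result = handler(*args)
        return previous(*args) if result is None else result

    jmp_table[index] = chained
    return previous


def net_poll(device):
    # Claim only the network device; everything else falls through.
    return 0xFF if device == NET_DEVICE else None
```

Because the wrapper keeps a reference to whatever was in the table before, the approach does not depend on any particular XIOS, at the cost of modifying the table at run time.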

I also modified the SRVSTAT.COM utility there, so that it can be run on the server (MP/M) as well as the clients. This is a convenient way to check on the status of the server.

This has been tested on a simulator, but not yet run on a real RC2014. Also, I have not looked into adding an NIOS.ASM for the ArcNET chip yet.

Douglas Miller

Dec 26, 2021, 10:17:43 AM12/26/21

to retro-comp

I've been able to test my MP/M server on real hardware now (at least MP/M is on hardware; requesters are simulated on a Linux PC). Things seem to be working well. The drive protection needed to be reworked, and no longer relies on XIOS changes. I added a utility to change the R/O protection on-the-fly.

I do see some choppy performance when running a long PIP on one requester and performing DIR commands on another. But this is probably to be expected in my setup, where both the disk and network share the same adapter (SPI) and so must be mutexed. That does put a dent in concurrency.

I also cleaned up the mutexing and allowed for utilities to also use the mutex, so that configuration/diagnostic utilities may be run while the server is running.

Tadeusz: if you would like to try this, share your SNIOS/network code and I can put that into an NIOS and build a copy for your system.

Tadeusz Pycio

Dec 16, 2022, 6:31:54 PM12/16/22

to retro-comp

I have finally worked out the modules for CP/NOS, which will read the NodeID from hardware. For CP/NET running under CP/M I'm thinking of a configuration file that contains it. The second option is the previously presented I2C module. This is slowly starting to look like a complete system.

Kurt Pieper

Dec 17, 2022, 3:04:37 AM12/17/22

to retro-comp

Hello Tadeusz

that's something again. Congratulations on this partial success of the CP/NOS.

Top left are 6 pins to connect FTD232?

That would be great.

I already backed up 90% of my CPM/Programs on a Linux machine.

My Kaypro 4/84 has been upgraded with a Mean Well power supply and two Gotek drives. Floppy disks or misalignment of the Epson drives are no longer a problem for me. There is no wiring diagram for the California DC power supplies. I'm not talking about the defective accountants.

That's not the case with the Kaypro power supplies. Try to get a reference diskette for setting up the Epson drives.

With your module I could access the Linux computer (Pi 400) directly from the RC2014, develop programs further on the RC2014, and send them back to the Linux computer. Programs for my Kaypro are written to the stick, or the Kaypro fetches the data from the Pi 400 via CP/NET over RS232.

Hello Tadeusz, I am a hobby user. I've read and tried a lot over the years, with good success. At the moment I'm trying to improve my basic knowledge of electrical engineering. Here in Europe, Prof. Dr. Manfred Hild has published a YouTube course. Count me in. Excellently done. I enjoy it.

I wish you a nice time and stay healthy.

Kurt
