TESTERS NEEDED! Amazon S3 File Store Support


Dan Huby

Aug 17, 2019, 2:21:57 PM

to ResourceSpace

Hi all,

Steve Bowman has kindly contributed S3 storage functionality and we're looking for assistance testing this before merging into the trunk. We're targeting 9.2 for this, which means all tests/fixes need to be in place by the 9.2 Release Candidate branch, which all being well will be the end of February 2020.

To try out this functionality, check out:

Please post any feedback to this thread so Steve can respond and we all have visibility.

Many thanks,

Dan Huby

ResourceSpace Project Lead

Steve

Aug 19, 2019, 12:15:11 PM

to ResourceSpace

You can find instructions on setting up AWS and ResourceSpace to use AWS S3 storage in the ../documentation folder of the branch referenced above.

Thanks, Steve.

Brian Irwin

Oct 5, 2019, 1:05:15 PM

to ResourceSpace

Hello, Steve.

This is great news! Thank you very much for your contribution!

I have been using S3 as a filestore for ResourceSpace for several years by mounting S3 buckets directly to the file system (s3fs, riofs, and goofys).

There has been a significant performance hit with this method, since current RS expects locally attached storage. Issues include heavy API calls and long render times for assets on view.php (the $storageurl parameter helps with speed here).

I'm looking forward to testing it out!

How is the performance so far?

Since I already have all of my assets in an S3 bucket, can I just add that to the config and be good to go? Or do I need to do a sync process?

Thanks in advance for any insight!

Best Regards,

Brian

Steve

Oct 10, 2019, 9:17:30 AM

to ResourceSpace

Brian- Thanks for agreeing to test the new AWS S3 code in RS. I did try using s3fs initially, but as you experienced, it had significant performance issues. This new code branch uses the AWS PHP API for direct calls. So far, performance seems quite good in my testing, including over a 50 Mbps up/down fiber connection. The original files in S3 are stored in the same structure as in the traditional local filestore, except that the leading path up to and including ../filestore is omitted from the object names. More information on configuration is available in the PDF document at https://svn.resourcespace.com/svn/rs/branches/sbowman/20190807_svnsbowman_aws_s3_storage/documentation/. If your S3 files have the same object name structure, it should work. When uploading a file or using the ../pages/tools/filestore_to_s3.php script, a placeholder file is created in the local filestore to resolve a few issues with the existing code. That may be an issue for you; you will need to try it out and see.
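To make the naming rule above concrete, here is a minimal sketch of how a local original-file path maps to an S3 object name when everything up to and including the filestore folder is dropped. The function name `filestore_path_to_s3_key` is hypothetical (it is not part of the branch), and the example folder layout is illustrative only, not necessarily ResourceSpace's exact scheme.

```php
<?php
/**
 * Hypothetical helper (not part of the S3 branch): derive an S3 object name
 * from a local original-file path by dropping the leading path up to and
 * including the filestore directory.
 */
function filestore_path_to_s3_key(string $localPath, string $storagedir): string
{
    // Normalise Windows separators so both styles behave the same way.
    $path = str_replace('\\', '/', $localPath);
    $root = rtrim(str_replace('\\', '/', $storagedir), '/') . '/';

    if (strpos($path, $root) !== 0) {
        throw new InvalidArgumentException("Path is not inside the filestore: $localPath");
    }

    // Everything after "<storagedir>/" becomes the object name.
    return substr($path, strlen($root));
}

// Illustrative path only.
echo filestore_path_to_s3_key(
    '/var/www/app/filestore/1/2/3_abc123/123_def456.jpg',
    '/var/www/app/filestore'
), PHP_EOL; // 1/2/3_abc123/123_def456.jpg
```

If existing bucket objects already follow this relative layout, they should line up with what the plugin expects; if they carry an extra prefix, that prefix would need stripping first.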

Please let me know any issues you run into with the new code so I can get it ready for Dan to add to the trunk. Would like to see it get incorporated into v9.2 early next year.

Thanks, Steve.

Abdulaziz Hamdan

Oct 30, 2019, 6:03:07 PM

to ResourceSpace

Hello, Steve.

Thank you for the contribution. I've been wandering around for a week trying to integrate S3 as the default storage, with no luck (no errors, but it's still not storing on S3 or locally). I thought I was getting close, but I kept getting more confused about what I might have missed, because there's no documentation on it.

Will follow up with your suggestions, test it out and will provide feedback on it.

Thanks.

Regards,

Abdulaziz


Troy Yeager

Dec 17, 2019, 4:02:23 PM

to ResourceSpace

Looking forward to this fantastic inclusion into ResourceSpace...

Questions: Will this work with non-Amazon object storage (e.g. Ceph), and will there be a way to have it store data across multiple buckets?

Thanks,

Troy

Steve

Dec 18, 2019, 8:28:59 AM

to ResourceSpace

Troy- You should be able to use this with non-AWS S3-compatible storage by setting the correct endpoint URL. It would be very helpful if you could test this branch to make sure all is working ok.

Thanks, Steve.

Troy Yeager

Dec 18, 2019, 9:06:13 AM

to resour...@googlegroups.com

Thanks Steve! I’ll give it a go.


--

ResourceSpace: Open Source Digital Asset Management

http://www.resourcespace.com


Dan Huby

Dec 18, 2019, 11:54:08 AM

to ResourceSpace

On Wednesday, 30 October 2019 22:03:07 UTC, Abdulaziz Hamdan wrote:

> ... i kept getting more confused of what i might have missed because there's no documentation on it.

Steve said above it was in the ../documentation folder of the branch. Specifically:

Troy Yeager

Dec 18, 2019, 2:08:48 PM

to ResourceSpace

I don't see any references to setting the endpoint URL anywhere. I assume it would be set in the config.php file. Maybe something like...

$aws_endpoint_URL = '[ENTER URL HERE]';

Thanks,

Troy

Steve

Dec 18, 2019, 2:25:18 PM

to ResourceSpace

It is not a current setting in the existing RS branch code. You would need to add it in the ../include/aws_sdk.php file. You should be able to add a custom S3 endpoint by adding:

'endpoint' => 'https://your.endpoint.url:port',

to the $s3Client setup in the aws_sdk.php file just below line 32: 'region' => $aws_region,

I have not tested this, but it should work. You might also have to adjust a few other S3 parameters for the specific S3-compatible service.
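As an untested sketch of the endpoint change described above, the helper below builds the options array that would be passed to `new Aws\S3\S3Client(...)`. The function name `s3_client_options` is hypothetical, and it assumes the branch's `aws_sdk.php` still adds its own `credentials` entry. `endpoint` and `use_path_style_endpoint` are real AWS SDK for PHP v3 client options; whether a given S3-compatible service needs path-style addressing is an assumption to check against that service's documentation.

```php
<?php
/**
 * Hypothetical helper: build the options array for new Aws\S3\S3Client(...),
 * optionally pointing it at an S3-compatible endpoint instead of AWS.
 */
function s3_client_options(string $region, ?string $endpoint = null, bool $pathStyle = true): array
{
    $options = [
        'version' => 'latest',
        'region'  => $region, // the SDK still requires a region for non-AWS endpoints
    ];

    if ($endpoint !== null && $endpoint !== '') {
        // The SDK expects a full URI, so add a scheme if one was left off.
        if (!preg_match('#^https?://#i', $endpoint)) {
            $endpoint = 'https://' . $endpoint;
        }
        $options['endpoint'] = $endpoint;

        // Many S3-compatible stores expect path-style requests
        // (https://host/bucket/key) rather than virtual-hosted-style
        // requests (https://bucket.host/key).
        $options['use_path_style_endpoint'] = $pathStyle;
    }

    return $options;
}

print_r(s3_client_options('us-east-1', 'ceph.example.com:7480'));
```

With the SDK installed, the result would be merged with the existing credentials, e.g. `new Aws\S3\S3Client(s3_client_options($aws_region, $aws_endpoint_URL) + ['credentials' => ...])`, where `$aws_endpoint_URL` is the hypothetical setting Troy suggested.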

Note that the CloudWatch functions will likely not work, unless your S3 storage device also supports that service.

Hope it works ok for you.

Steve

David Bitton

Feb 7, 2020, 10:58:32 AM

to ResourceSpace

Is there support for using an existing bucket? It sure would be great to drop RS on top of an existing bucket and slurp the images into RS.

Steve

Feb 10, 2020, 7:18:26 PM

to ResourceSpace

Not currently, but you could modify the S3 enabling code to parse through the existing files using static sync.

Steve.

Steve

Mar 4, 2020, 4:14:59 PM

to ResourceSpace

Any feedback on the S3 code?

Abdulaziz Hamdan

Oct 9, 2020, 6:21:27 AM

to ResourceSpace

Hi Steve,

I would like to update you on my experience so far with ResourceSpace and AWS S3.

Since downloading the version through SVN in March 2020, I have tested AWS S3 storage and uploading, and everything is working, thanks to you. (I haven't checked whether there are any updates to your AWS code, as I am used to Git and not very familiar with SVN.)

However, as feedback, I came across a few errors, although I have yet to use RS to its full extent. The errors are:

1. After some time, uploads stopped succeeding; I was only able to upload again after making the bucket publicly accessible (re-applying non-public access is fine now). I'm not sure what happened previously.

2. One day, I got a 500 Internal Server Error. Upon checking the error, it was:

After that, retrying the upload gave the same error. Only after truncating the database tables (`resource`, `resource_alt_files`, `resource_custom_access`, `resource_data`, `resource_dimensions`, `resource_keyword`, `resource_log`, `resource_node`, `resource_related`) and clearing out my bucket could I upload successfully again. I'm not exactly sure what the issue was.

3. Also when uploading, I sometimes get a "duplicate chunk[2] of file" error, although when testing on another PC the error does not happen, so I am not sure what happened.


4. Resources uploaded to S3 are not accompanied by their previews, so videos, documents, and PDFs have no preview fetched from S3. Even when an item is uploaded successfully and can be accessed (opened and downloaded), its preview image is missing in S3. I realized this upon deleting a resource: the preview images had been generated locally, but not on S3.

So, as you can see, JPG previews are generated locally for all of them, but they're not uploaded to S3 as an attached item.

-End of report-

For reference, my PC setup is:

- Windows 10 Pro (build 19041.508)
- Wamp 3.23 64-bit, with PHP 7.3 and MySQL 5.7
- Docker (2.4.0.0 - 48506 - stable) running a Linux container, with the following configs:

Dockerfile:

```dockerfile
FROM alpine:3.11
VOLUME /etc/nginx/ssl
EXPOSE 80 443
ARG VERSION

RUN apk update && \
    apk add openssl unzip nginx bash ca-certificates s6 curl ssmtp mailx php7 php7-phar php7-curl \
    php7-fpm php7-json php7-zlib php7-xml php7-dom php7-ctype php7-opcache php7-zip php7-iconv \
    php7-pdo php7-pdo_mysql php7-pdo_sqlite php7-pdo_pgsql php7-mbstring php7-session php7-bcmath \
    php7-gd php7-mcrypt php7-openssl php7-sockets php7-posix php7-ldap php7-simplexml php7-fileinfo \
    php7-mysqli php7-dev php7-intl imagemagick ffmpeg ghostscript exiftool subversion mysql-client && \
    rm -rf /var/cache/apk/* && \
    rm -rf /var/www/localhost && \
    rm -f /etc/php7/php-fpm.d/www.conf

RUN apk upgrade

# ADD . /var/www/app
ADD docker/ /
RUN chmod +x /usr/local/bin/entrypoint.sh
COPY ./ /var/www/app
VOLUME /var/www/app
COPY custom-php.ini $PHP_INI_DIR/conf.d/
VOLUME /var/www/app/filestore
RUN rm -rf /var/www/app/docker && echo $VERSION > /version.txt

ENTRYPOINT ["/usr/local/bin/entrypoint.sh"]
CMD []
```

My RS configs:

```php
$mysql_server = 'host.docker.internal';
$mysql_db = 'resourcespace';
$mysql_username = 'root';
$mysql_password = '';
$mysql_bin_path = '/usr/bin';
$baseurl = 'http://localhost:8001';
$imagemagick_path = '/usr/bin';
$ghostscript_path = '/usr/bin';
$exiftool_path = '/usr/bin';
$antiword_path = '/usr/bin';
$ffmpeg_path = '/usr/bin';
$config_windows = '';
$defaultlanguage = 'en-US';
$email_notify = '[private]';
$email_from = '[private]';
$debug_log = true;
$debug_log_location = dirname(__FILE__)."/../filestore/logs.txt";

# Secure keys
$spider_password = '[private]';
$scramble_key = '[private]';
$api_scramble_key = '[private]';

#SMTP settings
$use_smtp = true;
$use_phpmailer = true;
$smtp_secure = 'tls';
$smtp_host = 'email-smtp.us-east-1.amazonaws.com';
$smtp_port = 587;
$smtp_auth = true;
$smtp_username = '[private]';
$smtp_password = '[private]';

$homeanim_folder = 'filestore/system/slideshow_0e307dcc49b9eeb';
$merge_filename_with_title = true;
$merge_filename_with_title_default = 'replace';

/*
New Installation Defaults
-------------------------
The following configuration options are set for new installations only.
This provides a mechanism for enabling new features for new installations without affecting existing installations (as would occur with changes to config.default.php)
*/

// Set imagemagick default for new installs to expect the newer version with the sRGB bug fixed.
$imagemagick_colorspace = "sRGB";
$contact_link=false;
$slideshow_big=true;
$home_slideshow_width=1920;
$home_slideshow_height=1080;
$themes_simple_view=true;
$themes_category_split_pages=true;
$theme_category_levels=8;
$stemming=true;
$case_insensitive_username=true;
$user_pref_user_management_notifications=true;
$themes_show_background_image = true;
$use_zip_extension=true;
$collection_download=true;
$ffmpeg_preview_force = true;
$ffmpeg_preview_extension = 'mp4';
$ffmpeg_preview_options = '-f mp4 -ar 22050 -b 650k -ab 32k -ac 1';
$daterange_search = true;
$upload_then_edit = false; //on false, filename title replace feature success
$search_filter_nodes = true;
$defaultlanguage="en"; # default language, uses ISO 639-1 language codes ( en, es etc.)
$disable_languages=true;
$purge_temp_folder_age=90;
$syncdir=str_replace("include","",dirname(__FILE__))."filestore"; # The sync folder
$storagedir = str_replace("include","",dirname(__FILE__))."filestore"; // Filestore location, such as "/var/www/resourcespace/filestore".
$originals_separate_storage = false;
$purge_temp_folder_age = '';
$exiftool_write = true;
$exiftool_write_metadata = true;
$replace_resource_preserve_option = false;
$replace_resource_preserve_default = false;
$replace_batch_existing = false;
$custompermshowfile = false;

$aws_s3 = true; // Enable AWS S3 original file storage?
//$aws_bucket = ''; // replaced at the top to group it with the DB configs
$aws_bucket = '[private]';
$aws_region = 'ap-southeast-1'; // AWS region the S3 bucket is located in.
$aws_storage_class = 'STANDARD'; // AWS S3 storage class.
$aws_tmp_purge = 5; // Time in minutes to purge the AWS tmp folder.
```

RS Check page result:

ResourceSpace version SVN Trunk

MySQL version 5.7.23 (client-encoding: latin1) OK

PHP version 7.3.22 (config: /etc/php7/php.ini) OK

PHP large file support (64 bit platform)? OK

PHP.INI value for 'memory_limit' 200M OK

PHP.INI value for 'post_max_size' 100M OK

PHP.INI value for 'upload_max_filesize' 100M OK

Is the PHP timezone the same as the one MySQL uses? FAIL: PHP timezone is "Asia/Kuala_Lumpur" and MySQL timezone is "Malay Peninsula Standard Time"

PHP GD version bundled (2.1.0 compatible) OK

PHP EXIF extension FAIL

PHP ZIP extension OK

Installed PHP extensions Core PDO Phar Reflection SPL SimpleXML Zend OPcache bcmath cgi-fcgi ctype curl date dom fileinfo filter gd hash iconv intl json ldap libxml mbstring mcrypt mysqli mysqlnd openssl pcre pdo_mysql pdo_pgsql pdo_sqlite posix readline session sockets standard xml zip zlib

Write access to /var/www/app/filestore OK

Write access to filestore/system/slideshow_0e307dcc49b9eeb FAIL: filestore/system/slideshow_0e307dcc49b9eeb not writable. Open permissions to enable home animation cropping feature in the transform plugin.

Blocked browsing of 'filestore' directory OK

Amazon Web Services (AWS) Simple Storage Service (S3) Based Original Filestore Bucket: [private]

Bucket located in region: ap-southeast-1

Bucket owner: [private]

Owner ID: [private] OK

ImageMagick Version: ImageMagick 7.0.9-7 Q16 x86_64 2019-12-03 https://imagemagick.org OK

FFmpeg ffmpeg version 4.2.4 Copyright (c) 2000-2020 the FFmpeg developers OK

Ghostscript GPL Ghostscript 9.50 (2019-10-15) OK

ExifTool 11.79 OK

Last scheduled task execution (days) Never WARNING

AWS config page result:

Use AWS S3 object-based original file filestore? Yes

AWS key pair (key / secret) set? Yes / Yes

Using original file separated filestore ($originals_separate_storage = true)? No

ResourceSpace parameters check ($exiftool_write = true, $exiftool_write_option = true, $force_exiftool_write_metadata = true, $replace_resource_preserve_option = false, $replace_resource_preserve_default = false, $replace_batch_existing = false, and $custompermshowfile = false)? FAIL

Storage directory set ($storagedir)? /var/www/app/filestore OK

Purge temp folder age ($purge_temp_folder_age): days OK

S3 bucket accessible? [private] OK

Bucket located in region: ap-southeast-1 OK

Bucket owner: [private] OK

Owner ID: 01c89c2e594053df77b416af9903639cdb611ddf919041937a4a0d79b0abb1e1

S3 bucket storage class: Standard Storage

Relevance matching will not be effective and periodic e-mail reports will not be sent. Ensure batch/cron.php is executed at least once daily via a cron job or similar.
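Going only by the wording of the "ResourceSpace parameters check" line above, the check appears to expect the seven settings it names. The posted config sets `$exiftool_write_metadata`, but not `$exiftool_write_option` or `$force_exiftool_write_metadata`, which may be why that check reports FAIL. A config.php fragment matching the listed values is sketched below; it is inferred from the check's label, not from its source code, and is untested.

```php
// Values as named in the "ResourceSpace parameters check" line of the AWS config page.
// Inferred from that line's text only; not verified against the check's code.
$exiftool_write                    = true;
$exiftool_write_option             = true;
$force_exiftool_write_metadata     = true;
$replace_resource_preserve_option  = false;
$replace_resource_preserve_default = false;
$replace_batch_existing            = false;
$custompermshowfile                = false;
```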

=========End of references==============

That is all I have to report. I apologize for the late report; I planned to submit it earlier, but got busy with personal matters due to COVID-19, as well as other projects.

Do let me know if you need anything further, have any suggestions, or if anything is unclear.

Thank you.

Regards,

Abdulaziz

Steve

Oct 15, 2020, 1:26:54 PM

to ResourceSpace

Thanks for the feedback. I did create a new S3 branch at http://svn.resourcespace.com/svn/rs/branches/sbowman/20201015_sbowman_aws_s3_2c/. Suggest using this branch with updated code. This new code contains options for using S3 providers other than AWS, among a few other enhancements. Recreating previews is not currently working, but should be fixed shortly. Also working on a local S3 download cache for popular files to reduce downloading from S3. Modified the S3 Installation Check page and added a new S3 Dashboard.

Only the original files are stored in S3; the previews are stored locally for performance. If you delete a resource, the local previews and the original file in S3 are deleted.

Let me know what you think.

Thanks, Steve.

Abdulaziz Hamdan

Oct 22, 2020, 2:42:01 PM

to ResourceSpace

Hi Steve, thanks for getting back.

Thank you.

By the way, regarding the errors I reported above, I found some hints as to why they might have appeared.

1. This was due to a large (15 MB) image file that did not upload successfully to S3, yet its path was registered in RS, so the resource was looking for it in S3 (an empty value).

3. I suspect this was due to a PHP limitation (though no error mentioned that).

In any case, I shall clone the branch you mentioned, have a look at it, and update you.

Actually, on my site I intend to use S3 as a full replacement for local storage, because I don't want to depend on the limited local storage I have for the site. What would you advise for fully replacing the filestore with S3, so that I would be uploading, storing, and processing directly with S3?

Please advise.

Thank you.

Abdulaziz Hamdan

Nov 2, 2020, 11:36:40 PM

to ResourceSpace

Hi Steve,

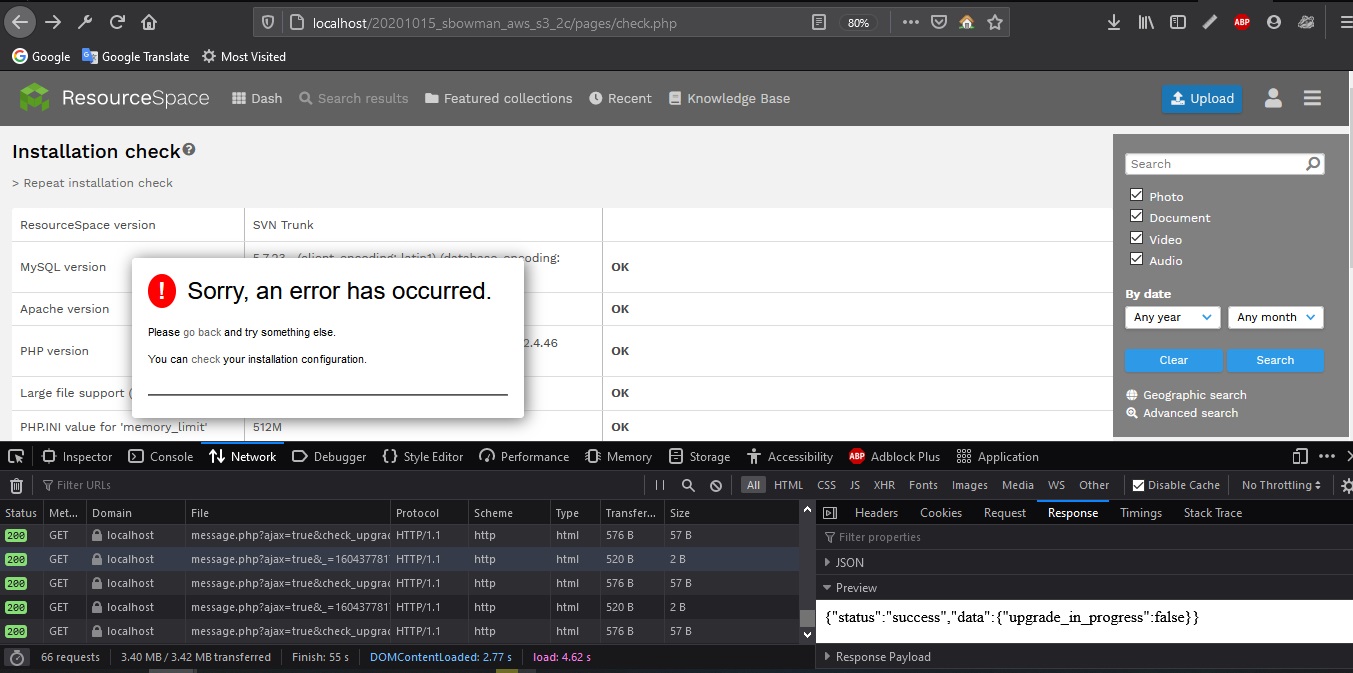

I just checked the branch you mentioned. As soon as I did a git svn clone as a new project and ran it, the installation check gave me an error:

I added the following so it would show me the error, but nothing is coming up.

```php
ini_set('display_errors', 1);
ini_set('display_startup_errors', 1);
error_reporting(E_ALL);
```

I am using WAMP 64-bit: Apache 2.4.4, PHP 7.3, MySQL 5.7.

Anyway, I proceeded to add the AWS S3 settings to config.php (as suggested by the documentation before), but it is not behaving the same as the previous branch, where the menu would show AWS S3 Configuration. I also checked the branch for changes and noticed there is a new branch (http://svn.resourcespace.com/svn/rs/branches/sbowman/20201026_sbowman_s3_3/). Is this a newer branch I can update to, or is it a branch for something else?

Please advise.

Thank you.


Tom

Nov 17, 2020, 1:23:26 AM

to ResourceSpace

Hey there,

We're more than happy to try and help test. Just to confirm, is this the correct branch to test on?

Also, any idea roughly when this will be in the main release?

Many thanks!

Steve

Nov 19, 2020, 9:36:09 AM

to ResourceSpace

Tom/Abdulaziz- Sorry for the slow response. The latest branch to use is http://svn.resourcespace.com/svn/rs/branches/sbowman/20201026_sbowman_s3_3/. I made some improvements over the previous S3 branches. I'm not sure when this can be added to the trunk and a future release; that is waiting on Dan. I will have an overview document, along with a more detailed description document, ready hopefully later today. This branch is working for me, but I would appreciate any feedback so that any important issues can be resolved and we can ask Dan to add it to the trunk. Let me know what you think.

Thanks, Steve

Steve

Nov 19, 2020, 9:38:12 AM

to ResourceSpace

You could turn off LPR and HPR preview generation. These sizes get large and are often unnecessary to have.

Steve

Nov 19, 2020, 6:38:02 PM

to ResourceSpace

The latest revision (R16840) contains the attached branch information file and the latest S3 storage integration into ResourceSpace. The PDF document contains a lot of configuration information and details on the S3 integration workflow. I hope it helps in your testing.

Thanks, Steve

Steve

Nov 23, 2020, 12:37:48 PM

to ResourceSpace

The SVN branch https://svn.resourcespace.com/svn/rs/branches/sbowman/20201026_sbowman_s3_3/ contains the latest S3 object-based storage integration. The PDF at the branch root contains an overview, setup, and how the integration works.

Steve

Andrew McCullough

Jan 16, 2021, 12:08:24 PM

to ResourceSpace

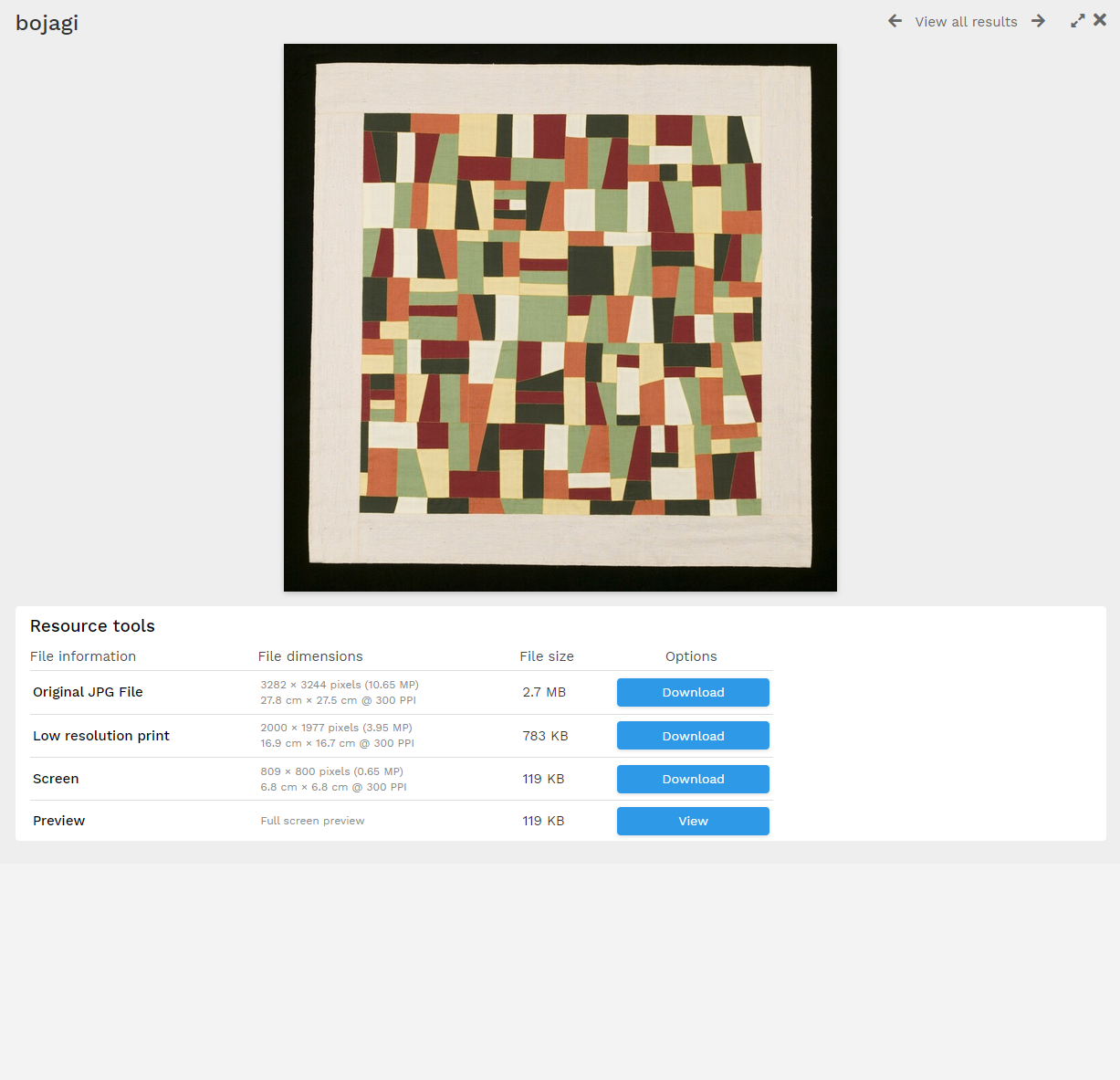

This is great and super timely for us (as we are moving a bunch of stuff over to AWS). I tested the S3 connection and so far it is working super well with uploads, download, and all the rest. A strange thing about the ResourceSpace installation from the branch though is that my resource view pages stop rendering after the download region:

Not sure why this is the case and can't find anything in the logs, user is super admin, etc. etc.

Sorry, this probably isn't a very helpful contribution but I thought I would put it out there in case anyone else is encountering the same...

Andrew

Brian Gollands

Jul 26, 2021, 6:35:59 PM

to ResourceSpace

I'm new to RS but have a working installation up. What I really need for our institution is to use it with S3 storage. Before attempting this, I want to confirm that I'm on the right track:

1. I gather that, even with the new RS 9.6, Steve's way is the only way to enable S3 support. Thus, rather than install the latest distro from RS, I need to install the one from the following? https://svn.resourcespace.com/svn/rs/branches/sbowman/20201026_sbowman_s3_3/

2. What version of RS is this branch based on?

Thanks!

Brian

Steve

Jul 27, 2021, 9:45:13 AM

to ResourceSpace

Brian- The attached ZIP file contains the latest S3 storage plugin that works with ResourceSpace v9.6. I suggest using this plugin, rather than the older branch that is not up to date. Place the plugin in the ResourceSpace ../plugins folder with the default plugins.

Thanks, Steve.

Steve

Jul 27, 2021, 10:41:49 AM

to ResourceSpace

The plugin is now available in GitHub: https://github.com/sdbowman/ResourceSpace-S3-Storage-Plugin.

Brian Gollands

Jul 27, 2021, 5:49:58 PM

to ResourceSpace

Steve --

Wow! Christmas came early! Thanks very much, Steve!

Brian

Andrew McCullough

Jul 27, 2021, 8:25:40 PM

to ResourceSpace

Agreed with Brian, thank you for your work on this--it is so great!

Steve

Jul 28, 2021, 6:21:08 PM

to ResourceSpace

Glad it is working ok.

Steve

Jul 29, 2021, 12:37:09 PM

to ResourceSpace

Updated ../hooks/upload_plupload.php to resolve issue with the Upload Log displaying twice. Also added installation instructions.

Andrew McCullough

Aug 1, 2021, 1:17:11 PM

to ResourceSpace

A couple of notes for those coming after.

I managed to get the filestore transfer tool working. A few things:

- Variables in the migration script have names like $s3_bucket. In the configuration file, these variables have been renamed (e.g. to $s3_storage_bucket), so you will need to change the names in the migration script for it to work.

- I had trouble with file extensions on original files. Images with .jpeg extensions resulted in the wrong file path and were therefore not found during the upload. Apparently this is one of the limitations of the get_resource_path() API function? So, in order to pass an explicit file extension to get_resource_path(), I performed a get_resource_data() on each $ref (the same change has to be made in step 2 and step 3):

```php
// Build an array of the resource's original file and alternative files to upload to an S3 bucket.
$ref_original[0]['ref'] = 0;
$ref_original[0]['file_extension'] = get_resource_data($ref)['file_extension']; #2021-07-31 ADDED file extension for original resource

// array_merge() takes the arrays as separate arguments. Writing
// array_merge($ref_original + $alt_files) uses the + (union) operator first,
// which silently drops $alt_files[0] when $alt_files is a zero-indexed list,
// because both arrays then have a key 0.
$ref_files = array_merge($ref_original, $alt_files);

// Loop through the resource's original files and upload them to S3.
foreach($ref_files as $file)
{
    // Set up the AWS SDK S3 putObject parameters.
    if($file['ref'] == 0)
    {
        $s3filepath = get_resource_path($ref, true, '', false, $file['file_extension']); #2021-07-31 ADDED FILE EXTENSION to FIX FILE COULD NOT BE FOUND ERRORs
        $file_output = 'Uploading original file (';
        $file_info = 'Original file: ';
    }
    else
    {
        $s3filepath = get_resource_path($ref, true, '', false, $file['file_extension'], true, 1, false, '', $file['ref']);
        $file_output = 'Uploading alternative file (';
        $file_info = 'Original alternative file #' . $file['ref'] . ': ';
    }

    // ... rest of the loop body (the putObject call etc.) continues unchanged from the original script ...
}
```

The script did not seem to work with any alternative files, which really didn't matter with my implementation, but if alternative files are important to you then it might be something you want to look into.

A final note. Each time I ran the script, it started uploading files from the beginning. I think the intention is that the script will skip resources that already exist in the S3 bucket, but that wasn't the case for some reason. No matter, but something to think about. I ended up hard-coding starting ref numbers on subsequent runs to avoid repeat uploads while I was debugging.
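One way a resumable run could work, sketched under assumptions: before uploading, filter out refs whose object already exists in the bucket. The helper `refs_still_to_upload` and the key layout in the example are hypothetical; in the real script the existence check could wrap the AWS SDK for PHP's `S3Client::doesObjectExist($bucket, $key)` (one HEAD request per object), and the key function could wrap get_resource_path(). An in-memory list stands in for the bucket so the sketch runs without the SDK.

```php
<?php
/**
 * Hypothetical helper (not part of the plugin): keep only the refs whose
 * original file is not yet in the bucket, so a re-run resumes instead of
 * starting again from the first resource.
 *
 * @param int[]    $refs         Resource refs to consider, in upload order.
 * @param callable $keyForRef    Maps a ref to its S3 object key.
 * @param callable $objectExists Returns true if the given key is already stored.
 * @return int[]                 Refs that still need uploading.
 */
function refs_still_to_upload(array $refs, callable $keyForRef, callable $objectExists): array
{
    $pending = [];
    foreach ($refs as $ref) {
        if (!$objectExists($keyForRef($ref))) {
            $pending[] = $ref;
        }
    }
    return $pending;
}

// Stand-in for the bucket: pretend refs 1 and 3 were uploaded on an earlier run.
$uploaded = ['originals/1.jpg' => true, 'originals/3.jpg' => true];

$pending = refs_still_to_upload(
    [1, 2, 3, 4],
    function (int $ref): string {
        return 'originals/' . $ref . '.jpg'; // illustrative key layout only
    },
    function (string $key) use ($uploaded): bool {
        return isset($uploaded[$key]);
    }
);

echo implode(', ', $pending), PHP_EOL; // 2, 4
```

Checking existence per object trades extra requests for safety; for very large filestores, listing the bucket once and building the lookup array from that listing would likely be cheaper.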

I hope this helps someone in the future!

Best,

Andrew

Andrew McCullough

Aug 1, 2021, 1:31:46 PM

to ResourceSpace

Also, if you have a big filestore, don't forget to increase your $php_time_limit in config. I just set mine to 0 (no limit) and will change it back when I'm all done.

Andrew McCullough

Aug 2, 2021, 7:24:57 PM

to ResourceSpace

Another note to add here. I transferred images to S3, and then moved my installation and previews to an EC2 instance on Amazon. One thing that caught me during that process: after I loaded the database and files onto EC2, I had a persistent mkdir() permissions issue. It turns out that the config_json field of the s3_storage row in the plugins table stores the filestore location, which was being used to supply the $storagedir variable and was throwing the error. So, if you are moving your installation, you will want to go into the database and edit this manually.
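For that manual edit, a minimal sketch, assuming the row can be read with something like `SELECT config_json FROM plugins WHERE name = 's3_storage'` (the `name` column is an assumption) and that config_json is a plain JSON object. The helper `replace_path_in_config_json` is hypothetical; it rewrites any string value equal to the old filestore path, or beneath it, without needing to know the plugin's actual key names. The result would then be written back with a matching UPDATE.

```php
<?php
/**
 * Hypothetical helper: rebase a filestore path stored in a plugin's
 * config_json after moving an installation. Any string value equal to
 * $oldPath, or starting with "$oldPath/", is moved onto $newPath.
 */
function replace_path_in_config_json(string $json, string $oldPath, string $newPath): string
{
    $config = json_decode($json, true);
    if (!is_array($config)) {
        throw new InvalidArgumentException('config_json does not decode to a JSON object or array');
    }

    $oldPath = rtrim($oldPath, '/');
    $newPath = rtrim($newPath, '/');

    array_walk_recursive($config, function (&$value) use ($oldPath, $newPath) {
        if (!is_string($value)) {
            return; // leave booleans, numbers, etc. untouched
        }
        if ($value === $oldPath || strpos($value, $oldPath . '/') === 0) {
            // Keep any sub-folder that followed the old prefix.
            $value = $newPath . substr($value, strlen($oldPath));
        }
    });

    return json_encode($config, JSON_UNESCAPED_SLASHES);
}

// Illustrative input; the real key names in s3_storage's config_json may differ.
$before = '{"storage_dir":"/home/old/filestore","bucket":"my-bucket"}';
echo replace_path_in_config_json($before, '/home/old/filestore', '/var/www/resourcespace/filestore'), PHP_EOL;
// {"storage_dir":"/var/www/resourcespace/filestore","bucket":"my-bucket"}
```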
