bitmap index slowing down replication of a large repository


Matthias Sohn

Sep 7, 2021, 9:03:23 AM

to Repo and Gerrit Discussion

For some months we have been observing sporadic performance issues (a few times per week)

when replicating a large repository to another datacenter (Germany to Netherlands) using the replication plugin.

This server still runs gerrit 2.16 (jgit 5.1.15) and it will be upgraded to gerrit 3.2 (jgit 5.12.0) soon.

Here are the statistics for this repository:

$ git-sizer

Processing blobs: 2516889

Processing trees: 11785880

Processing commits: 1622835

Matching commits to trees: 1622835

Processing annotated tags: 5938

Processing references: 1062290

| Name | Value | Level of concern |

| ---------------------------- | --------- | ------------------------------ |

| Overall repository size | | |

| * Commits | | |

| * Count | 1.62 M | *** |

| * Total size | 791 MiB | *** |

| * Trees | | |

| * Count | 11.8 M | ******* |

| * Total size | 14.5 GiB | ******* |

| * Total tree entries | 399 M | ******* |

| * Blobs | | |

| * Count | 2.52 M | * |

| * Total size | 321 GiB | !!!!!!!!!!!!!!!!!!!!!!!!!!!!!! |

| * References | | |

| * Count | 1.06 M | !!!!!!!!!!!!!!!!!!!!!!!!!!!!!! |

| | | |

| Biggest objects | | |

| * Trees | | |

| * Maximum entries [1] | 5.57 k | ***** |

| * Blobs | | |

| * Maximum size [2] | 19.8 MiB | ** |

| | | |

| Biggest checkouts | | |

| * Number of directories [3] | 64.1 k | !!!!!!!!!!!!!!!!!!!!!!!!!!!!!! |

| * Maximum path depth [4] | 21 | ** |

| * Maximum path length [5] | 206 B | ** |

| * Number of files [3] | 241 k | **** |

| * Total size of files [6] | 3.25 GiB | *** |

ref statistics:

866k refs/changes

197k refs/cache-automerge

6k refs/tags

215 refs/heads
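For anyone who wants to produce the same kind of per-namespace ref count for their own repository, here is a minimal sketch. It is not the command used to get the numbers above; it assumes `git` is on the PATH and simply groups the output of `git for-each-ref` by the first two path components. The function names are made up for illustration.

```python
import subprocess
from collections import Counter


def ref_namespace(refname: str) -> str:
    """Return the first two path components, e.g. 'refs/changes'."""
    return "/".join(refname.split("/")[:2])


def count_namespaces(refnames):
    """Count refs per namespace, most common first."""
    return Counter(ref_namespace(r) for r in refnames if r).most_common()


def list_refs(git_dir: str):
    """List all ref names of a repository via `git for-each-ref`."""
    out = subprocess.run(
        ["git", "--git-dir", git_dir, "for-each-ref", "--format=%(refname)"],
        check=True,
        capture_output=True,
        text=True,
    ).stdout
    return out.splitlines()


def print_ref_statistics(git_dir: str) -> None:
    """Print one line per namespace: count, then namespace."""
    for namespace, count in count_namespaces(list_refs(git_dir)):
        print(f"{count:>8} {namespace}")
```

Calling `print_ref_statistics("/path/to/repo.git")` (the path is a placeholder) prints one line per namespace.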


Most of the time the replication delay for this repository was between 5 and 10 minutes. Sporadically (a few times a week)

a replication task ran for 2-3 hours. During that time other replication tasks for the same repository kept being rescheduled.

This isn't acceptable for the project team since they need prompt results from their CI jobs fetching from the Gerrit replica.

Sometimes restarting the replication plugin could work around the long-running outlier task, sometimes not.

We created thread dumps for such a long runner and found that it's busy inflating the bitmap index all the time

(all thread dumps looked like this):

"ReplicateTo-k8s-repo_name-2" #1559 prio=5 os_prio=0 cpu=174628860.00 [reset 174628860.00] ms elapsed=445547.09 [reset 445547.09] s allocated=30884509752584 B (28.09 TB) [reset 30884509752584 B (28.09 TB)] defined_classes=21

io= file i/o: 17880575361/19907555 B, net i/o: 31360313041/443577391 B, files opened:-1555, socks opened:1715 [reset file i/o: 17880575361/19907555 B, net i/o: 31360313041/443577391 B, files opened:-1555, socks opened:1715 ]

tid=0x00007f42729d7000 nid=0xfbed / 64493 pthread-id=139913916573440 runnable [_thread_blocked (_call_back), stack(0x00007f403f3be000,0x00007f403f4bf000)] [0x00007f403f4bc000] top_bci: 262

java.lang.Thread.State: RUNNABLE

at org.eclipse.jgit.internal.storage.file.InflatingBitSet.contains(I)Z(InflatingBitSet.java:107)

at org.eclipse.jgit.internal.storage.file.BitmapIndexImpl$ComboBitset.contains(I)Z(BitmapIndexImpl.java:182)

at org.eclipse.jgit.internal.storage.file.BitmapIndexImpl$CompressedBitmapBuilder.contains(Lorg/eclipse/jgit/lib/AnyObjectId;)Z(BitmapIndexImpl.java:218)

at org.eclipse.jgit.revwalk.BitmapWalker.findObjects(Ljava/lang/Iterable;Lorg/eclipse/jgit/lib/BitmapIndex$BitmapBuilder;Z)Lorg/eclipse/jgit/lib/BitmapIndex$BitmapBuilder;(BitmapWalker.java:152)

at org.eclipse.jgit.internal.storage.pack.PackWriter.findObjectsToPackUsingBitmaps(Lorg/eclipse/jgit/revwalk/BitmapWalker;Ljava/util/Set;Ljava/util/Set;)V(PackWriter.java:2000)

at org.eclipse.jgit.internal.storage.pack.PackWriter.findObjectsToPack(Lorg/eclipse/jgit/lib/ProgressMonitor;Lorg/eclipse/jgit/revwalk/ObjectWalk;Ljava/util/Set;Ljava/util/Set;Ljava/util/Set;)V(PackWriter.java:1795)

at org.eclipse.jgit.internal.storage.pack.PackWriter.preparePack(Lorg/eclipse/jgit/lib/ProgressMonitor;Lorg/eclipse/jgit/revwalk/ObjectWalk;Ljava/util/Set;Ljava/util/Set;Ljava/util/Set;)V(PackWriter.java:914)

at org.eclipse.jgit.internal.storage.pack.PackWriter.preparePack(Lorg/eclipse/jgit/lib/ProgressMonitor;Ljava/util/Set;Ljava/util/Set;Ljava/util/Set;Ljava/util/Set;)V(PackWriter.java:864)

at org.eclipse.jgit.internal.storage.pack.PackWriter.preparePack(Lorg/eclipse/jgit/lib/ProgressMonitor;Ljava/util/Set;Ljava/util/Set;)V(PackWriter.java:786)

at org.eclipse.jgit.transport.BasePackPushConnection.writePack(Ljava/util/Map;Lorg/eclipse/jgit/lib/ProgressMonitor;)V(BasePackPushConnection.java:356)

at org.eclipse.jgit.transport.BasePackPushConnection.doPush(Lorg/eclipse/jgit/lib/ProgressMonitor;Ljava/util/Map;Ljava/io/OutputStream;)V(BasePackPushConnection.java:219)

at org.eclipse.jgit.transport.TransportHttp$SmartHttpPushConnection.doPush(Lorg/eclipse/jgit/lib/ProgressMonitor;Ljava/util/Map;Ljava/io/OutputStream;)V(TransportHttp.java:1109)

at org.eclipse.jgit.transport.BasePackPushConnection.push(Lorg/eclipse/jgit/lib/ProgressMonitor;Ljava/util/Map;Ljava/io/OutputStream;)V(BasePackPushConnection.java:170)

at org.eclipse.jgit.transport.PushProcess.execute(Lorg/eclipse/jgit/lib/ProgressMonitor;)Lorg/eclipse/jgit/transport/PushResult;(PushProcess.java:172)

at org.eclipse.jgit.transport.Transport.push(Lorg/eclipse/jgit/lib/ProgressMonitor;Ljava/util/Collection;Ljava/io/OutputStream;)Lorg/eclipse/jgit/transport/PushResult;(Transport.java:1346)

at org.eclipse.jgit.transport.Transport.push(Lorg/eclipse/jgit/lib/ProgressMonitor;Ljava/util/Collection;)Lorg/eclipse/jgit/transport/PushResult;(Transport.java:1392)

at com.googlesource.gerrit.plugins.replication.PushOne.pushVia(Lorg/eclipse/jgit/transport/Transport;)Lorg/eclipse/jgit/transport/PushResult;(PushOne.java:503)

at com.googlesource.gerrit.plugins.replication.PushOne.runImpl()V(PushOne.java:474)

at com.googlesource.gerrit.plugins.replication.PushOne.runPushOperation()V(PushOne.java:361)

at com.googlesource.gerrit.plugins.replication.PushOne.access$000(Lcom/googlesource/gerrit/plugins/replication/PushOne;)V(PushOne.java:86)

at com.googlesource.gerrit.plugins.replication.PushOne$1.call()Ljava/lang/Void;(PushOne.java:319)

at com.googlesource.gerrit.plugins.replication.PushOne$1.call()Ljava/lang/Object;(PushOne.java:316)

at com.google.gerrit.server.util.RequestScopePropagator.lambda$cleanup$1(Ljava/util/concurrent/Callable;)Ljava/lang/Object;(RequestScopePropagator.java:212)

at com.google.gerrit.server.util.RequestScopePropagator$$Lambda$334/2085504562.call()Ljava/lang/Object;(Unknown Source)

at com.google.gerrit.server.util.RequestScopePropagator.lambda$context$0(Lcom/google/gerrit/server/util/RequestContext;Ljava/util/concurrent/Callable;)Ljava/lang/Object;(RequestScopePropagator.java:191)

at com.google.gerrit.server.util.RequestScopePropagator$$Lambda$335/1244911544.call()Ljava/lang/Object;(Unknown Source)

at com.google.gerrit.server.git.PerThreadRequestScope$Propagator.lambda$scope$0(Lcom/google/gerrit/server/git/PerThreadRequestScope$Context;Ljava/util/concurrent/Callable;)Ljava/lang/Object;(PerThreadRequestScope.java:73)

at com.google.gerrit.server.git.PerThreadRequestScope$Propagator$$Lambda$336/1812496673.call()Ljava/lang/Object;(Unknown Source)

at com.googlesource.gerrit.plugins.replication.PushOne.run()V(PushOne.java:323)

at com.google.gerrit.server.logging.LoggingContextAwareRunnable.run()V(LoggingContextAwareRunnable.java:72)

at java.util.concurrent.Executors$RunnableAdapter.call()Ljava/lang/Object;(Executors.java:511)

at java.util.concurrent.FutureTask.run()V(FutureTask.java:266)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(Ljava/util/concurrent/ScheduledThreadPoolExecutor$ScheduledFutureTask;)V(ScheduledThreadPoolExecutor.java:180)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run()V(ScheduledThreadPoolExecutor.java:293)

at com.google.gerrit.server.git.WorkQueue$Task.run()V(WorkQueue.java:646)

at java.util.concurrent.ThreadPoolExecutor.runWorker(Ljava/util/concurrent/ThreadPoolExecutor$Worker;)V(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run()V(ThreadPoolExecutor.java:624)

at java.lang.Thread.run()V(Thread.java:836)
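When comparing many thread dumps, it can help to extract only the topmost frame of each thread. The following is a rough sketch under the assumption that each thread starts with a line beginning with the quoted thread name and that frames start with `at `, as in the dump above. The helper names are hypothetical and other JVMs may format dumps differently.

```python
import re

# A thread header line starts with the thread name in double quotes.
HEADER = re.compile(r'^"(?P<name>[^"]+)"')


def top_frames(dump_text: str) -> dict:
    """Map each thread name to its topmost stack frame (None if no frames)."""
    result = {}
    current = None
    for raw in dump_text.splitlines():
        line = raw.strip()
        match = HEADER.match(line)
        if match:
            current = match.group("name")
            result[current] = None
        elif current is not None and line.startswith("at ") and result[current] is None:
            # Only the first "at ..." line after a header is the top frame.
            result[current] = line[3:]
    return result


def threads_stuck_in(dump_text: str, needle: str) -> list:
    """Return names of threads whose top frame contains `needle`."""
    return [name for name, frame in top_frames(dump_text).items()
            if frame and needle in frame]
```

Running `threads_stuck_in(dump, "InflatingBitSet.contains")` over successive dumps shows whether the same replication thread is in that method every time.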


This led us to the idea to try without a bitmap index, and to our surprise this reduced the replication delay to 20-90 seconds!

The slowest tasks now take 2.5 minutes only. No more outliers taking more than 2 hours.
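The post does not say how the bitmap index was removed. To check whether a repository currently has one, a small sketch like the following can help. It assumes the usual on-disk layout where a bitmap is stored as `pack-<id>.bitmap` next to its `pack-<id>.pack` under `objects/pack`; the function name is made up for illustration.

```python
from pathlib import Path


def packs_with_bitmaps(git_dir: str) -> dict:
    """Map each pack file name to True if a sibling .bitmap file exists."""
    pack_dir = Path(git_dir) / "objects" / "pack"
    return {
        pack.name: pack.with_suffix(".bitmap").exists()
        for pack in sorted(pack_dir.glob("pack-*.pack"))
    }
```

With C git, the documented `repack.writeBitmaps` setting controls whether `git repack` writes a bitmap index; whether that is what was used here is not stated in the thread.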

It looks like using the bitmap index for pushing from this large repository slows down upload-pack considerably.

Has anybody else observed similar behavior?

So far we have run git gc using git 2.26.2.

We plan to try again with a bitmap index generated by jgit gc.

There are quite a number of changes affecting the bitmap implementation in jgit between 5.1.15 and 5.12.0,

hence we will also try with gerrit 3.2.

-Matthias

Matthias Sohn

Sep 13, 2021, 12:01:38 PM

to Repo and Gerrit Discussion

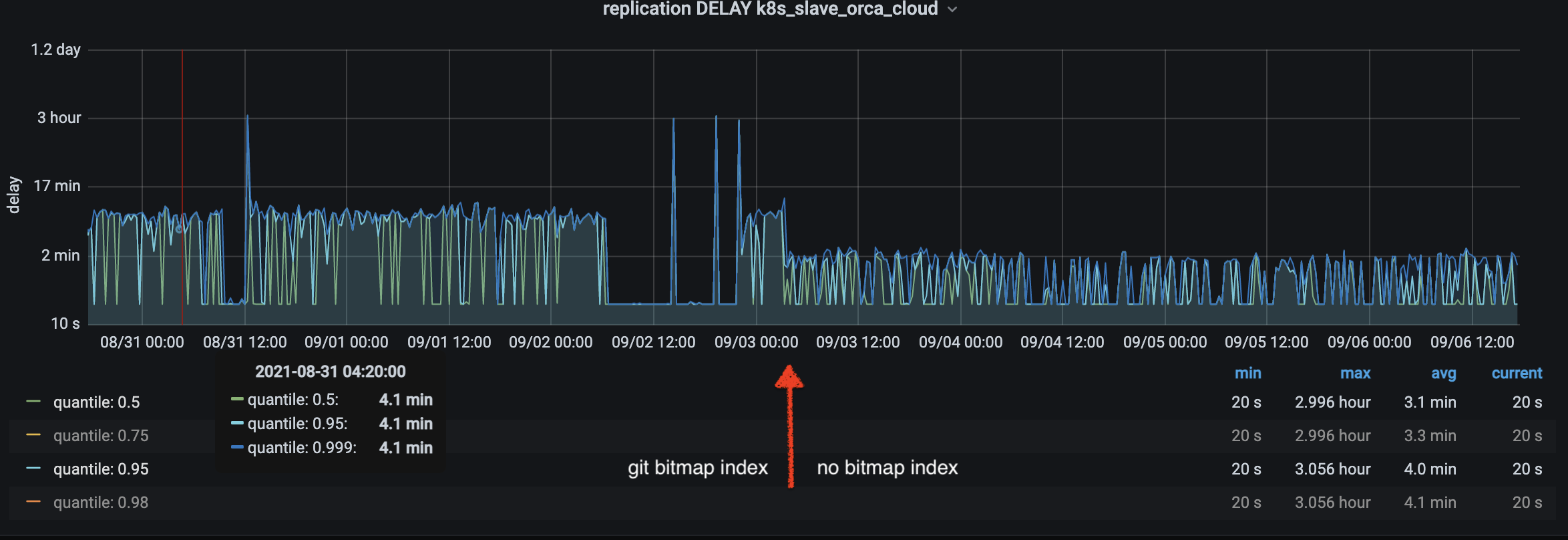

Here is the replication delay with git bitmap index (left of the red arrow) and without bitmap index (right of the arrow).

Note that the vertical axis has a logarithmic scale.

> It looks like using the bitmap index for pushing from this large repository slows down upload-pack considerably.

> Did anybody else observe similar behavior ?

> So far we run git gc using git 2.26.2.

> We plan to try again with bitmap index generated by jgit gc.

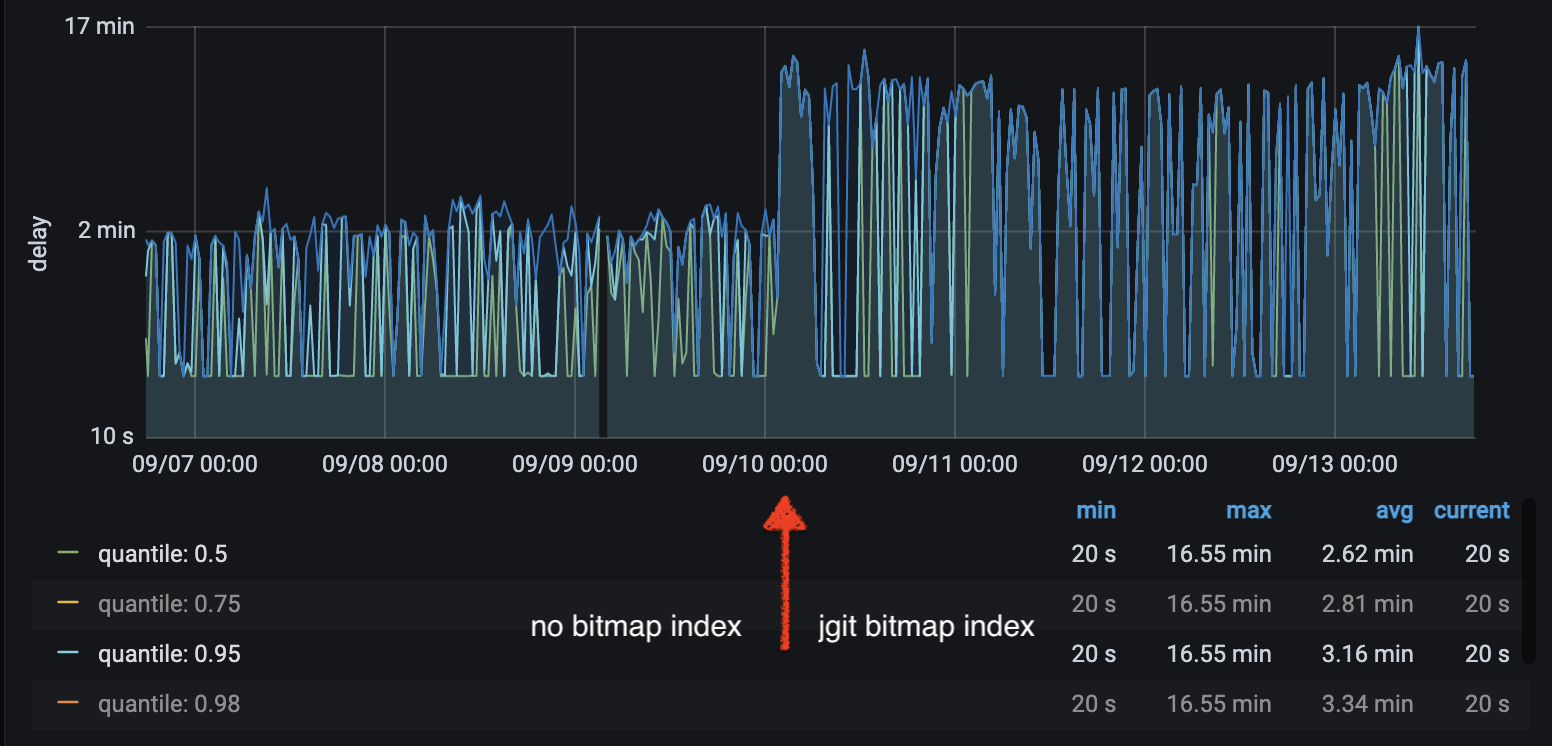

We also tried with bitmap index created by jgit gc (using jgit 5.12.0 to gc):

again the replication delay is lower without bitmap index (left of the red arrow)

compared to the delay with bitmap index created by jgit gc (right of the red arrow).

With jgit generated bitmap index we didn't observe the 2.5 hour spikes we sporadically saw with git bitmap index.

Han-Wen Nienhuys

Sep 13, 2021, 12:43:47 PM

to Matthias Sohn, Repo and Gerrit Discussion

On Mon, Sep 13, 2021 at 6:01 PM Matthias Sohn <matthi...@gmail.com> wrote:

> On Tue, Sep 7, 2021 at 3:03 PM Matthias Sohn <matthi...@gmail.com> wrote:

> > For some months we were observing sporadic performance issues (few times per week)

> > with replicating a large repository to another datacenter (Germany to Netherlands) using the replication plugin.

> > This server still runs gerrit 2.16 (jgit 5.1.15) and it will be upgraded to gerrit 3.2 (jgit 5.12.0) soon.

> > Most of the time the replication delay for this repository was between 5-10 minutes. Sporadically (few times a week)

> > a replication task ran for 2-3 hours. During that time other replication tasks for the same repository kept being rescheduled.

> > This isn't acceptable for the project team since they need prompt results from their CI jobs fetching from the Gerrit replica.

> > Sometimes restarting the replication plugin could workaround the long running outlier task, sometimes not.

> > We created thread dumps for such a long runner and found that it's busy inflating the bitmap index all the time

> > (all thread dumps looked like this) :

> > This led us to the idea to try without a bitmap index and to our surprise this reduced the replication delay to 20-90 seconds !

> > The slowest tasks now take 2.5 minutes only. No more outliers taking more than 2 hours.

> Here is the replication delay with git bitmap index (left of the red arrow) and without bitmap index (right of the arrow).

> Note that the vertical axis has a logarithmic scale.

> > It looks like using the bitmap index for pushing from this large repository slows down upload-pack considerably.

> > Did anybody else observe similar behavior ?

I asked around internally to our JGit experts. Here are comments:

- push is using the JGit client. That hasn't had much love; we don't use it at all inside Google (we use pull replication internally).

- there is a plan to have negotiation on push, but likely wouldn't affect this problem, which seems more basic.

- one theory: it's trying to replicate refs/changes/*. Since those are in the GC_REST pack, bitmaps won't work for them, so all work trying to look for them is wasted.

- upgrading JGit might help; this is code from ~2018 ?
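To illustrate the theory about refs/changes/* in the list above, here is a toy model of a bitmap-assisted reachability walk. It is only a sketch and not JGit's implementation: "bitmaps" are plain Python sets, and all names are invented. The point is that a tip with a precomputed bitmap is resolved in one step, while a tip without one has to be walked commit by commit until a bitmapped commit is reached.

```python
def walk_with_bitmaps(start, parents, bitmaps):
    """Collect everything reachable from `start`.

    parents: commit -> list of parent commits
    bitmaps: commit -> precomputed frozenset of commits reachable from it
             (including the commit itself)
    Returns (reachable set, number of commits visited one by one).
    """
    reachable = set()
    visited_individually = 0
    stack = [start]
    while stack:
        commit = stack.pop()
        if commit in reachable:
            continue
        if commit in bitmaps:
            # Covered by a bitmap: take the whole set at once, no further walk.
            reachable |= bitmaps[commit]
            continue
        # No bitmap for this commit: record it and keep walking its parents.
        reachable.add(commit)
        visited_individually += 1
        stack.extend(parents.get(commit, []))
    return reachable, visited_individually
```

In this model, a branch tip that has a bitmap costs no individual visits, whereas a change ref stacked on top of it costs one visit per commit without a bitmap. How much extra work the real BitmapWalker does in that situation is exactly the open question in this thread.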

Han-Wen Nienhuys - Google Munich

I work 80%. Don't expect answers from me on Fridays.

--

Google Germany GmbH, Erika-Mann-Strasse 33, 80636 Munich

Registergericht und -nummer: Hamburg, HRB 86891

Sitz der Gesellschaft: Hamburg

Geschäftsführer: Paul Manicle, Halimah DeLaine Prado

Martin Fick

Sep 13, 2021, 2:35:23 PM

to Han-Wen Nienhuys, Matthias Sohn, Repo and Gerrit Discussion

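On 2021-09-13 10:43, 'Han-Wen Nienhuys' via Repo and Gerrit Discussion wrote:

> - push is using the JGit client. That hasn't had much love; we don't

> use it all inside Google (we use pull replication internally).

> - there is a plan to have negotiation on push, but likely wouldn't

> affect this problem, which seems more basic.

I would love to hear more about what these plans are and what this might

mean! Is this potentially trying to introduce git protocol 2 to push?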

> - one theory: it's trying to replicate refs/changes/*. Since those are

> in the GC_REST pack, bitmaps won't work for them,

> so all work trying to look for them is wasted.

Interesting thought. Does your git gc'ed pack have changes in it?

Another somewhat related thought: do you have any sort of

ACLs set up on your replication (i.e. an auth group)? If so, could that

somehow make objects in the bitmap not visible to the destination and

thus similarly cause a lot of wasted work with the bitmaps?

-Martin

--

The Qualcomm Innovation Center, Inc. is a member of Code

Aurora Forum, hosted by The Linux Foundation


Han-Wen Nienhuys

Sep 14, 2021, 5:08:42 AM

to Martin Fick, Matthias Sohn, Repo and Gerrit Discussion

On Mon, Sep 13, 2021 at 8:35 PM Martin Fick <mf...@codeaurora.org> wrote:

> > - push is using the JGit client. That hasn't had much love; we don't

> > use it all inside Google (we use pull replication internally).

> > - there is a plan to have negotiation on push, but likely wouldn't

> > affect this problem, which seems more basic.

>

> I would love to hear more about what these plans and what this might

> mean! Is this potentially trying to introduce git protocol 2 to push?

It's exactly that. See


https://public-inbox.org/git/cover.1620162764....@google.com/

We've been testing this internally, and have seen large decreases in

the amount of data sent.

The primary use-case is with large repositories, where history moves

quickly, so uploads against old versions of the target branch run a

risk of picking a bad common ancestor.

Matthias Sohn

Sep 14, 2021, 5:44:36 AM

to Han-Wen Nienhuys, Repo and Gerrit Discussion

On Mon, Sep 13, 2021 at 6:43 PM Han-Wen Nienhuys <han...@google.com> wrote:

> On Mon, Sep 13, 2021 at 6:01 PM Matthias Sohn <matthi...@gmail.com> wrote:

> > On Tue, Sep 7, 2021 at 3:03 PM Matthias Sohn <matthi...@gmail.com> wrote:

> > > For some months we were observing sporadic performance issues (few times per week)

> > > with replicating a large repository to another datacenter (Germany to Netherlands) using the replication plugin.

> > > This server still runs gerrit 2.16 (jgit 5.1.15) and it will be upgraded to gerrit 3.2 (jgit 5.12.0) soon.

> > > Most of the time the replication delay for this repository was between 5-10 minutes. Sporadically (few times a week)

> > > a replication task ran for 2-3 hours. During that time other replication tasks for the same repository kept being rescheduled.

> > > This isn't acceptable for the project team since they need prompt results from their CI jobs fetching from the Gerrit replica.

> > > Sometimes restarting the replication plugin could workaround the long running outlier task, sometimes not.

> > > We created thread dumps for such a long runner and found that it's busy inflating the bitmap index all the time

> > > (all thread dumps looked like this) :

> > > This led us to the idea to try without a bitmap index and to our surprise this reduced the replication delay to 20-90 seconds !

> > > The slowest tasks now take 2.5 minutes only. No more outliers taking more than 2 hours.

> > Here is the replication delay with git bitmap index (left of the red arrow) and without bitmap index (right of the arrow).

> > Note that the vertical axis has a logarithmic scale.

> > > It looks like using the bitmap index for pushing from this large repository slows down upload-pack considerably.

> > > Did anybody else observe similar behavior ?

> I asked around internally to our JGit experts. Here are comments:

> - push is using the JGit client. That hasn't had much love; we don't use it all inside Google (we use pull replication internally).

> - there is a plan to have negotiation on push, but likely wouldn't affect this problem, which seems more basic.

that sounds like an awesome plan

> - one theory: it's trying to replicate refs/changes/*. Since those are in the GC_REST pack, bitmaps won't work for them, so all work trying to look for them is wasted.

yes, most of the time it's trying to replicate refs/changes/* since a lot of developers work on that large repo and

keep pushing new changes/patchsets all the time

> - upgrading JGit might help; this is code from ~2018 ?

we will upgrade this server to Gerrit 3.2 soon

Saša Živkov

Sep 14, 2021, 8:23:16 AM

to Matthias Sohn, Repo and Gerrit Discussion

Today we got a 5 hour spike when using the jgit generated bitmap index. Therefore, switching to jgit+bitmaps didn't

improve anything regarding the push performance.

> There are quite a number of changes affecting bitmap implementation in jgit between 5.1.15 and 5.12.0

> hence we will also try with gerrit 3.2.

> -Matthias
