When adding a model to a repository, the order of the statements is lost

lee

Apr 1, 2021, 4:29:54 PM

to RDF4J Users

Hi Devs,

I have an RDF model created like this:

ModelFactory modelFactory = new LinkedHashModelFactory();

Model model = modelFactory.createEmptyModel();

It has several hundred statements, and these statements have a particular order. When I add this model to the repository, i.e.,

public static int commitModelToTripleStore(Model model, String endpointUrl) {
    Repository repo = new SPARQLRepository(endpointUrl);
    repo.init();
    // try-with-resources closes the connection even if getConnection() or add() fails
    try (RepositoryConnection con = repo.getConnection()) {
        con.add(model);
    } catch (RepositoryException e) {
        // errors are silently ignored here
    }
    return model.size();
}

The order of the statements is lost, and it is hard for me to tell in which order the statements are added to the backend database. Could someone please let me know how to keep the order when adding these statements?

Many thanks!

Jeen Broekstra

Apr 1, 2021, 6:47:37 PM

to RDF4J Users

Hi Lee,

RDF models are defined as sets of statements, in other words: unordered collections. There is no way to preserve order of statements in the database simply because that is not part of the RDF model.

Can you clarify why you want this and more generally what you're trying to do? There may be other ways to achieve what you need.

Cheers,

Jeen

PS I realize it's somewhat confusing that the LinkedHashModel object itself does preserve order, but this is done purely as a convenience for working with small amounts of data in code. Conceptually, it's still a Set (just one with predictable iteration order).
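The distinction between iteration order and set membership can be illustrated with plain Java collections. The sketch below is only an analogy: it assumes that LinkedHashModel relates to an RDF graph roughly the way java.util.LinkedHashSet relates to a mathematical set. The class name SetOrderDemo and the placeholder statement strings are made up for illustration and are not part of RDF4J.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.Set;

// Analogy only: plain strings stand in for RDF statements.
final class SetOrderDemo {

    // Builds a set that remembers insertion order.
    static Set<String> orderedSet(String... statements) {
        Set<String> set = new LinkedHashSet<>();
        for (String s : statements) {
            set.add(s);
        }
        return set;
    }

    public static void main(String[] args) {
        Set<String> a = orderedSet("s p o1", "s p o2", "s p o3");
        Set<String> b = orderedSet("s p o3", "s p o1", "s p o2");

        // Each object iterates in its own insertion order...
        System.out.println(new ArrayList<>(a));
        System.out.println(new ArrayList<>(b));

        // ...yet the two are equal as sets, because order is not part of the data.
        System.out.println(a.equals(b));
    }
}
```

Once such a set is handed to something that only promises set semantics, such as a triple store, the insertion order is no longer something a reader of the data can rely on.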

-- You received this message because you are subscribed to the Google Groups "RDF4J Users" group. To unsubscribe from this group and stop receiving emails from it, send an email to rdf4j-users...@googlegroups.com. To view this discussion on the web visit https://groups.google.com/d/msgid/rdf4j-users/1e960d84-6c56-4cba-be6a-904f2b006d9dn%40googlegroups.com.

lee

Apr 1, 2021, 7:59:00 PM

to RDF4J Users

Hi Jeen,

What you have said here is very very helpful! The name LinkedHashModel does give me the impression that somehow the order will be preserved. But thanks to your explanation, now I understand it much better.

What I want to accomplish is to have RDF lists in a named graph. It seems we can use RDF collections to do this, for example with RDFCollections.asRDF(). However, RDFCollections uses blank nodes to create RDF lists, which is totally fine except that when I want to delete this named graph, I get an exception saying the delete cannot be completed because the graph has blank nodes inside it. This is not feasible for us, since our use cases require us to create and delete named graphs containing RDF lists over and over. So I started to look into whether I can use RDF collections to create RDF lists without blank nodes, i.e., everything inside the RDF list would have a URI, but I had no luck finding that. That is when I started to think about preserving the order on my own, which of course does not work too well since the graph is a *set* of triples.
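One way to picture a list in which every cell has a URI is to mint an IRI for each list cell by hand. The following is a minimal sketch in plain Java that emits N-Triples lines instead of using the RDF4J API. The class IriList, the method toNTriples, and the "<base>/node<i>" naming scheme are hypothetical choices made for this illustration, not an RDF4J feature mentioned in this thread.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: encodes an ordered list as rdf:first / rdf:rest triples
// whose list cells are IRIs rather than blank nodes.
final class IriList {
    private static final String RDF = "http://www.w3.org/1999/02/22-rdf-syntax-ns#";

    // Returns N-Triples lines for an RDF list whose cells are named
    // <listBase>/node0, <listBase>/node1, ... (an assumed naming scheme).
    // An empty input yields no lines, since the empty list is just rdf:nil.
    static List<String> toNTriples(String listBase, List<String> memberIris) {
        List<String> lines = new ArrayList<>();
        for (int i = 0; i < memberIris.size(); i++) {
            String cell = "<" + listBase + "/node" + i + ">";
            // The last cell points at rdf:nil to terminate the list.
            String rest = (i + 1 < memberIris.size())
                    ? "<" + listBase + "/node" + (i + 1) + ">"
                    : "<" + RDF + "nil>";
            lines.add(cell + " <" + RDF + "first> <" + memberIris.get(i) + "> .");
            lines.add(cell + " <" + RDF + "rest> " + rest + " .");
        }
        return lines;
    }

    public static void main(String[] args) {
        List<String> members = Arrays.asList(
                "http://example.org/attribute1",
                "http://example.org/attribute2");
        for (String line : toNTriples("http://example.org/list1", members)) {
            System.out.println(line);
        }
    }
}
```

Because every term in the output is an IRI, these triples can be named explicitly in an update, at the cost of having to keep the minted cell IRIs unique per list.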

Hope I am making sense... if you could give me some directions, that will be super helpful!!

thanks!

Lee.

Jeen Broekstra

Apr 1, 2021, 8:03:20 PM

to RDF4J Users

On Fri, 2 Apr 2021, at 10:59, lee wrote:

Hi Jeen, What you have said here is very very helpful! The name LinkedHashModel does give me the impression that somehow the order will be preserved. But thanks to your explanation, now I understand it much better. What I want to accomplish is to have RDF Lists in a named graph. Seems like we can use RDF collections to do this, for example, RDFCollections.asRDF(). However, RDFCollections are using blank nodes to create RDF lists, which is totally fine except that when I want to delete this named graph, it gives me an exception saying the delete cannot be accomplished since the graph has blank nodes inside it.

That sounds odd. Can you describe in detail what you're doing (what your data looks like, and how you're trying to delete the named graph)? Because I can't immediately think of a situation where a named graph delete would fail because of the presence of blank nodes...

Cheers,

Jeen

lee

Apr 1, 2021, 8:27:05 PM

to RDF4J Users

Thanks Jeen!

My named graph has lots of "normal" triples, but it also has RDF lists that look like this:

ex:entity ex:hasAttribute _:n1 .

_:n1 rdf:type rdf:List .

_:n1 rdf:first ex:attribute1 .

_:n1 rdf:rest _:n2 .

_:n2 rdf:first ex:attribute2 .

_:n2 rdf:rest rdf:nil .

When we query this named graph, we would like ex:attribute1, ex:attribute2, ... to keep this order when they show up in the query result.
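To make concrete how the order is carried by such a list, here is a small sketch in plain Java that follows rdf:first and rdf:rest from the head cell until rdf:nil. It assumes the triples have already been loaded into two maps keyed by list cell; the class ListWalker and that map-based representation are hypothetical and only serve the illustration.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical helper: recovers list order by walking the rdf:rest chain.
final class ListWalker {
    static final String NIL = "rdf:nil";

    // first: cell -> value of rdf:first, rest: cell -> value of rdf:rest.
    static List<String> members(String head, Map<String, String> first, Map<String, String> rest) {
        List<String> ordered = new ArrayList<>();
        Set<String> seen = new HashSet<>();
        String cell = head;
        while (cell != null && !NIL.equals(cell)) {
            // Guard against malformed, cyclic lists.
            if (!seen.add(cell)) {
                throw new IllegalStateException("Cycle in list at " + cell);
            }
            ordered.add(first.get(cell));
            cell = rest.get(cell);
        }
        return ordered;
    }

    public static void main(String[] args) {
        Map<String, String> first = Map.of("_:n1", "ex:attribute1", "_:n2", "ex:attribute2");
        Map<String, String> rest = Map.of("_:n1", "_:n2", "_:n2", NIL);
        System.out.println(members("_:n1", first, rest));
    }
}
```

The order comes entirely from the chain of rdf:rest links, so it survives even though the store returns the individual triples in no particular order.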

I will now go back to the RDF Collection idea since it looks like that is the only way to keep the ordering of the attributes. This will inevitably use blank nodes, and when we encounter the delete exception again we will for sure ask for help. I will use this same thread when I capture that error message.

Thank you Jeen! And if you know of any other way we can do this (keep the ordering), do let us know.

lee

Apr 1, 2021, 11:11:37 PM

to RDF4J Users

Hi Jeen,

Hope this will be helpful for you to see the problem -

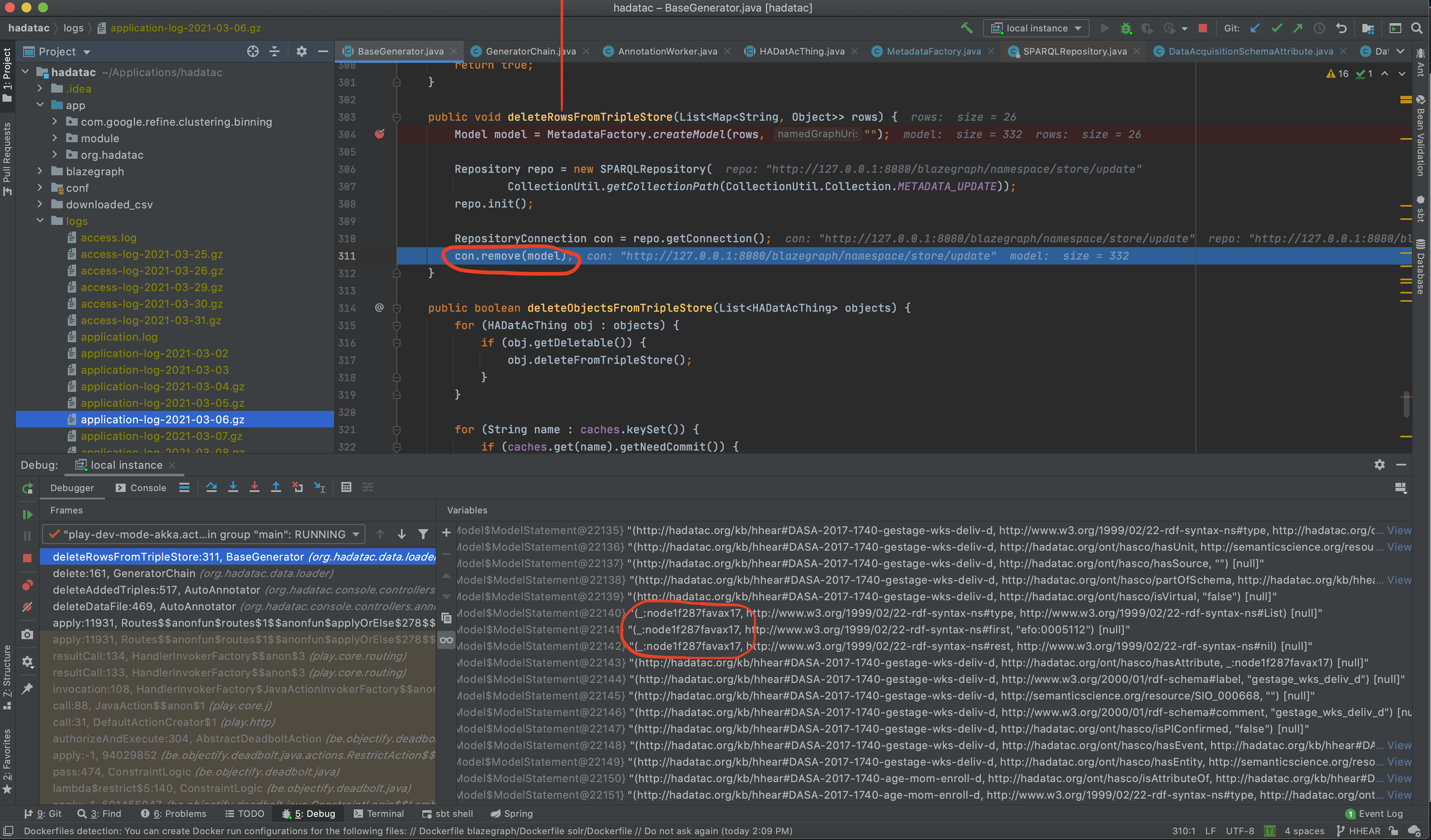

Inside this deleteRowsFromTripleStore() method, I created a Model, which is shown in the debug window; you can see it has a few blank nodes. I then use RepositoryConnection.remove(model) to get rid of the statements in the model. It throws an exception like this (after the picture):

Error in DASchemaAttrGenerator: error executing transaction

org.eclipse.rdf4j.repository.RepositoryException: error executing transaction

at org.eclipse.rdf4j.repository.sparql.SPARQLConnection.commit(SPARQLConnection.java:401)

at org.eclipse.rdf4j.repository.base.AbstractRepositoryConnection.conditionalCommit(AbstractRepositoryConnection.java:332)

at org.eclipse.rdf4j.repository.sparql.SPARQLConnection.remove(SPARQLConnection.java:673)

at org.hadatac.data.loader.BaseGenerator.deleteRowsFromTripleStore(BaseGenerator.java:311). see above picture, the RED circle on line 311

at org.hadatac.data.loader.GeneratorChain.delete(GeneratorChain.java:161)

at org.hadatac.console.controllers.annotator.AutoAnnotator.deleteAddedTriples(AutoAnnotator.java:517)

at org.hadatac.console.controllers.annotator.AutoAnnotator.deleteDataFile(AutoAnnotator.java:469)

at router.Routes$$anonfun$routes$1$$anonfun$applyOrElse$278$$anonfun$apply$278.apply(Routes.scala:11931)

at router.Routes$$anonfun$routes$1$$anonfun$applyOrElse$278$$anonfun$apply$278.apply(Routes.scala:11931)

at play.core.routing.HandlerInvokerFactory$$anon$3.resultCall(HandlerInvoker.scala:134)

at play.core.routing.HandlerInvokerFactory$$anon$3.resultCall(HandlerInvoker.scala:133)

at play.core.routing.HandlerInvokerFactory$JavaActionInvokerFactory$$anon$8$$anon$2$$anon$1.invocation(HandlerInvoker.scala:108)

at play.core.j.JavaAction$$anon$1.call(JavaAction.scala:88)

at play.http.DefaultActionCreator$1.call(DefaultActionCreator.java:31)

at be.objectify.deadbolt.java.actions.AbstractDeadboltAction.authorizeAndExecute(AbstractDeadboltAction.java:304)

at be.objectify.deadbolt.java.ConstraintLogic.pass(ConstraintLogic.java:474)

at be.objectify.deadbolt.java.ConstraintLogic.lambda$restrict$5(ConstraintLogic.java:140)

at java.util.concurrent.CompletableFuture.uniComposeStage(CompletableFuture.java:995)

at java.util.concurrent.CompletableFuture.thenCompose(CompletableFuture.java:2137)

at java.util.concurrent.CompletableFuture.thenCompose(CompletableFuture.java:110)

at be.objectify.deadbolt.java.ConstraintLogic.restrict(ConstraintLogic.java:128)

at be.objectify.deadbolt.java.actions.RestrictAction.applyRestriction(RestrictAction.java:75)

at be.objectify.deadbolt.java.actions.AbstractRestrictiveAction.lambda$null$1(AbstractRestrictiveAction.java:59)

at java.util.Optional.orElseGet(Optional.java:267)

at be.objectify.deadbolt.java.actions.AbstractRestrictiveAction.lambda$execute$2(AbstractRestrictiveAction.java:59)

at java.util.concurrent.CompletableFuture.uniComposeStage(CompletableFuture.java:995)

at java.util.concurrent.CompletableFuture.thenCompose(CompletableFuture.java:2137)

at java.util.concurrent.CompletableFuture.thenCompose(CompletableFuture.java:110)

at be.objectify.deadbolt.java.actions.AbstractRestrictiveAction.execute(AbstractRestrictiveAction.java:58)

at be.objectify.deadbolt.java.actions.AbstractDeadboltAction.call(AbstractDeadboltAction.java:141)

at play.core.j.JavaAction$$anonfun$9.apply(JavaAction.scala:138)

at play.core.j.JavaAction$$anonfun$9.apply(JavaAction.scala:138)

at scala.concurrent.impl.Future$PromiseCompletingRunnable.liftedTree1$1(Future.scala:24)

at scala.concurrent.impl.Future$PromiseCompletingRunnable.run(Future.scala:24)

at play.core.j.HttpExecutionContext$$anon$2.run(HttpExecutionContext.scala:56)

at play.api.libs.streams.Execution$trampoline$.execute(Execution.scala:70)

at play.core.j.HttpExecutionContext.execute(HttpExecutionContext.scala:48)

at scala.concurrent.impl.Future$.apply(Future.scala:31)

at scala.concurrent.Future$.apply(Future.scala:494)

at play.core.j.JavaAction.apply(JavaAction.scala:138)

at play.api.mvc.Action$$anonfun$apply$2.apply(Action.scala:96)

at play.api.mvc.Action$$anonfun$apply$2.apply(Action.scala:89)

at play.api.libs.streams.StrictAccumulator$$anonfun$mapFuture$2$$anonfun$1.apply(Accumulator.scala:174)

at play.api.libs.streams.StrictAccumulator$$anonfun$mapFuture$2$$anonfun$1.apply(Accumulator.scala:174)

at scala.util.Try$.apply(Try.scala:192)

at play.api.libs.streams.StrictAccumulator$$anonfun$mapFuture$2.apply(Accumulator.scala:174)

at play.api.libs.streams.StrictAccumulator$$anonfun$mapFuture$2.apply(Accumulator.scala:170)

at scala.Function1$$anonfun$andThen$1.apply(Function1.scala:52)

at scala.Function1$$anonfun$andThen$1.apply(Function1.scala:52)

at scala.Function1$$anonfun$andThen$1.apply(Function1.scala:52)

at play.api.libs.streams.StrictAccumulator.run(Accumulator.scala:207)

at play.api.libs.streams.FlattenedAccumulator$$anonfun$run$2.apply(Accumulator.scala:231)

at play.api.libs.streams.FlattenedAccumulator$$anonfun$run$2.apply(Accumulator.scala:231)

at scala.concurrent.Future$$anonfun$flatMap$1.apply(Future.scala:253)

at scala.concurrent.Future$$anonfun$flatMap$1.apply(Future.scala:251)

at scala.concurrent.impl.CallbackRunnable.run(Promise.scala:36)

at akka.dispatch.BatchingExecutor$AbstractBatch.processBatch(BatchingExecutor.scala:55)

at akka.dispatch.BatchingExecutor$BlockableBatch$$anonfun$run$1.apply$mcV$sp(BatchingExecutor.scala:91)

at akka.dispatch.BatchingExecutor$BlockableBatch$$anonfun$run$1.apply(BatchingExecutor.scala:91)

at akka.dispatch.BatchingExecutor$BlockableBatch$$anonfun$run$1.apply(BatchingExecutor.scala:91)

at scala.concurrent.BlockContext$.withBlockContext(BlockContext.scala:72)

at akka.dispatch.BatchingExecutor$BlockableBatch.run(BatchingExecutor.scala:90)

at akka.dispatch.TaskInvocation.run(AbstractDispatcher.scala:40)

at akka.dispatch.ForkJoinExecutorConfigurator$AkkaForkJoinTask.exec(ForkJoinExecutorConfigurator.scala:43)

at akka.dispatch.forkjoin.ForkJoinTask.doExec(ForkJoinTask.java:260)

at akka.dispatch.forkjoin.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:1339)

at akka.dispatch.forkjoin.ForkJoinPool.runWorker(ForkJoinPool.java:1979)

at akka.dispatch.forkjoin.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:107)

Caused by: org.eclipse.rdf4j.query.UpdateExecutionException: SPARQL-UPDATE: updateStr=DELETE DATA

{

<http://hadatac.org/kb/hhear#DASA-2016-1431-5-CHEARPID> <http://hadatac.org/ont/hasco/isAttributeOf> <http://hadatac.org/kb/hhear#DASO-2016-1431-5-child> .

<http://hadatac.org/kb/hhear#DASA-2016-1431-5-CHEARPID> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://hadatac.org/ont/hasco/DASchemaAttribute> .

.

.. lots of statements here...

<http://hadatac.org/kb/hhear#DASA-2016-1431-5-ZBFA> <http://hadatac.org/ont/hasco/hasEvent> <http://hadatac.org/kb/hhear#DASO-2016-1431-5-visit2> .

<http://hadatac.org/kb/hhear#DASA-2016-1431-5-ZBFA> <http://hadatac.org/ont/hasco/hasEntity> ""^^<http://www.w3.org/2001/XMLSchema#string> .

<http://hadatac.org/kb/hhear#DASA-2016-1431-5-ZBFA> <http://www.w3.org/ns/prov#wasDerivedFrom> <http://hadatac.org/kb/hhear#DASA-2016-1431-5-CBMI> .

<http://hadatac.org/kb/hhear#DASA-2016-1431-5-ZBFA> <http://www.w3.org/ns/prov#wasDerivedFrom> <http://hadatac.org/kb/hhear#DASA-2016-1431-5-cd-age> .

};

java.util.concurrent.ExecutionException: org.openrdf.query.MalformedQueryException: Blank nodes are not permitted in DELETE DATA

at java.base/java.util.concurrent.FutureTask.report(FutureTask.java:122)

at java.base/java.util.concurrent.FutureTask.get(FutureTask.java:191)

at com.bigdata.rdf.sail.webapp.BigdataServlet.submitApiTask(BigdataServlet.java:281)

at com.bigdata.rdf.sail.webapp.QueryServlet.doSparqlUpdate(QueryServlet.java:448)

at com.bigdata.rdf.sail.webapp.QueryServlet.doPost(QueryServlet.java:229)

at com.bigdata.rdf.sail.webapp.RESTServlet.doPost(RESTServlet.java:269)

at com.bigdata.rdf.sail.webapp.MultiTenancyServlet.doPost(MultiTenancyServlet.java:170)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:707)

at javax.servlet.http.HttpServlet.service(HttpServlet.java:790)

at org.eclipse.jetty.servlet.ServletHolder.handle(ServletHolder.java:865)

at org.eclipse.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1655)

at org.eclipse.jetty.websocket.server.WebSocketUpgradeFilter.doFilter(WebSocketUpgradeFilter.java:215)

at org.eclipse.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1642)

at org.eclipse.jetty.servlet.ServletHandler.doHandle(ServletHandler.java:533)

at org.eclipse.jetty.server.handler.ScopedHandler.handle(ScopedHandler.java:146)

at org.eclipse.jetty.security.SecurityHandler.handle(SecurityHandler.java:548)

at org.eclipse.jetty.server.handler.HandlerWrapper.handle(HandlerWrapper.java:132)

at org.eclipse.jetty.server.handler.ScopedHandler.nextHandle(ScopedHandler.java:257)

at org.eclipse.jetty.server.session.SessionHandler.doHandle(SessionHandler.java:1595)

at org.eclipse.jetty.server.handler.ScopedHandler.nextHandle(ScopedHandler.java:255)

at org.eclipse.jetty.server.handler.ContextHandler.doHandle(ContextHandler.java:1340)

at org.eclipse.jetty.server.handler.ScopedHandler.nextScope(ScopedHandler.java:203)

at org.eclipse.jetty.servlet.ServletHandler.doScope(ServletHandler.java:473)

at org.eclipse.jetty.server.session.SessionHandler.doScope(SessionHandler.java:1564)

at org.eclipse.jetty.server.handler.ScopedHandler.nextScope(ScopedHandler.java:201)

at org.eclipse.jetty.server.handler.ContextHandler.doScope(ContextHandler.java:1242)

at org.eclipse.jetty.server.handler.ScopedHandler.handle(ScopedHandler.java:144)

at org.eclipse.jetty.server.handler.ContextHandlerCollection.handle(ContextHandlerCollection.java:220)

at org.eclipse.jetty.server.handler.HandlerCollection.handle(HandlerCollection.java:126)

at org.eclipse.jetty.server.handler.HandlerWrapper.handle(HandlerWrapper.java:132)

at org.eclipse.jetty.server.Server.handle(Server.java:503)

at org.eclipse.jetty.server.HttpChannel.handle(HttpChannel.java:364)

at org.eclipse.jetty.server.HttpConnection.onFillable(HttpConnection.java:260)

at org.eclipse.jetty.io.AbstractConnection$ReadCallback.succeeded(AbstractConnection.java:305)

at org.eclipse.jetty.io.FillInterest.fillable(FillInterest.java:103)

at org.eclipse.jetty.io.ChannelEndPoint$2.run(ChannelEndPoint.java:118)

at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.runTask(EatWhatYouKill.java:333)

at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.doProduce(EatWhatYouKill.java:310)

at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.tryProduce(EatWhatYouKill.java:168)

at org.eclipse.jetty.util.thread.strategy.EatWhatYouKill.run(EatWhatYouKill.java:126)

at org.eclipse.jetty.util.thread.ReservedThreadExecutor$ReservedThread.run(ReservedThreadExecutor.java:366)

at org.eclipse.jetty.util.thread.QueuedThreadPool.runJob(QueuedThreadPool.java:765)

at org.eclipse.jetty.util.thread.QueuedThreadPool$2.run(QueuedThreadPool.java:683)

at java.base/java.lang.Thread.run(Thread.java:834)

Caused by: org.openrdf.query.MalformedQueryException: Blank nodes are not permitted in DELETE DATA

at com.bigdata.rdf.sail.sparql.Bigdata2ASTSPARQLParser.parseUpdate2(Bigdata2ASTSPARQLParser.java:309)

at com.bigdata.rdf.sail.webapp.QueryServlet$SparqlUpdateTask.call(QueryServlet.java:521)

at com.bigdata.rdf.sail.webapp.QueryServlet$SparqlUpdateTask.call(QueryServlet.java:460)

at com.bigdata.rdf.task.ApiTaskForIndexManager.call(ApiTaskForIndexManager.java:68)

at java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628)

... 1 more

Caused by: com.bigdata.rdf.sail.sparql.ast.VisitorException: Blank nodes are not permitted in DELETE DATA

at com.bigdata.rdf.sail.sparql.UpdateExprBuilder.doUnparsedQuadsDataBlock(UpdateExprBuilder.java:786)

at com.bigdata.rdf.sail.sparql.UpdateExprBuilder.visit(UpdateExprBuilder.java:180)

at com.bigdata.rdf.sail.sparql.UpdateExprBuilder.visit(UpdateExprBuilder.java:118)

at com.bigdata.rdf.sail.sparql.ast.ASTDeleteData.jjtAccept(ASTDeleteData.java:23)

at com.bigdata.rdf.sail.sparql.Bigdata2ASTSPARQLParser.parseUpdate2(Bigdata2ASTSPARQLParser.java:289)

... 7 more

at org.eclipse.rdf4j.repository.sparql.query.SPARQLUpdate.execute(SPARQLUpdate.java:53)

at org.eclipse.rdf4j.repository.sparql.SPARQLConnection.commit(SPARQLConnection.java:399)

... 67 more

Caused by: org.eclipse.rdf4j.repository.RepositoryException: SPARQL-UPDATE: updateStr=DELETE DATA

Please kindly let me know which part could be the problem...

Thank you!

Lee

Jeen Broekstra

Apr 2, 2021, 4:27:56 AM

to RDF4J Users

Ah, thanks. There's a couple of things going on here.

First of all: the reason you are getting the error is that you are communicating with a remote SPARQL endpoint (rather than directly using an RDF4J store, either locally or remote). The RDF4J API is more advanced than the SPARQL protocol: it has ways to handle communication about blank nodes, but unfortunately those don't always work when communicating with a (non-RDF4J) SPARQL endpoint.

The SPARQLRepository that you are using to access your Blazegraph store works by translating API operations into SPARQL queries and updates. But SPARQL has no way to address blank nodes directly, which means that the blank nodes you have in your Model object will not correspond to the blank nodes in the Blazegraph store. The error you are seeing is caused by a bug in RDF4J's SPARQLRepository: it converts the remove operation into a SPARQL DELETE DATA update, but still uses blank node identifiers, which isn't allowed in the SPARQL standard. I'll take a closer look at this and log it as a bug. But let me stress that even if this bug wasn't there, this probably still wouldn't quite do what you want.
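The rule behind the error message, that blank nodes are not permitted in DELETE DATA, can be sketched as a client-side guard. The plain Java below assumes statements are available as subject/predicate/object strings in N-Triples syntax and that a leading "_:" marks a blank node. DeleteDataBuilder and its methods are hypothetical names for this illustration; this is not how RDF4J builds its updates.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical guard: refuses to put blank nodes into a DELETE DATA update.
final class DeleteDataBuilder {

    // A term is treated as a blank node if it uses the _:label syntax.
    static boolean isBlankNode(String term) {
        return term.startsWith("_:");
    }

    // Each element of triples is {subject, predicate, object} in N-Triples syntax.
    static String build(List<String[]> triples) {
        StringBuilder sb = new StringBuilder("DELETE DATA {\n");
        for (String[] t : triples) {
            if (isBlankNode(t[0]) || isBlankNode(t[2])) {
                throw new IllegalArgumentException(
                        "Blank nodes are not permitted in DELETE DATA: " + String.join(" ", t));
            }
            sb.append("  ").append(String.join(" ", t)).append(" .\n");
        }
        return sb.append("}").toString();
    }

    public static void main(String[] args) {
        List<String[]> triples = new ArrayList<>();
        triples.add(new String[] {
                "<http://example.org/entity>",
                "<http://example.org/hasAttribute>",
                "<http://example.org/attribute1>" });
        System.out.println(build(triples));
    }
}
```

Even with such a guard in place, the underlying problem remains: a blank node label in a local Model has no agreed meaning on the remote endpoint, so there is nothing valid to send for those statements.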

In your original mail, you mentioned using named graphs, but I don't see any evidence of you actually using that in your code. Yet this might be the best way forward, as a workaround.

If you can use a unique named graph identifier for each collection that you want to add or remove, things immediately become a lot simpler. For example, to add a new collection, insert it into named graph http://example.org/collection1:

// create a named graph IRI for this collection

IRI collection1 = iri("http://example.org/collection1");

// add my model containing the RDF Collection to this named graph in Blazegraph

con.add(model, collection1);

and when you want to remove this collection, instead of doing

con.remove(model);

just do:

con.clear(collection1);

The reason this works is that the clear operation translates to a different SPARQL command. You're no longer saying "remove all these statements with these blank nodes", you're just saying "remove everything in this named graph".
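The message does not name the SPARQL command involved. A graph-level update such as CLEAR GRAPH from SPARQL 1.1 Update is a natural candidate, and the sketch below assumes that form; the exact string RDF4J sends is not confirmed here, and ClearGraphUpdate is a hypothetical name used only for illustration.

```java
// Hypothetical helper: builds a graph-level update that names no statements.
final class ClearGraphUpdate {

    // Returns a CLEAR GRAPH update for the given named graph IRI.
    static String forGraph(String graphIri) {
        // Reject characters that would break out of the <...> IRI reference.
        if (graphIri.indexOf('<') >= 0 || graphIri.indexOf('>') >= 0 || graphIri.indexOf(' ') >= 0) {
            throw new IllegalArgumentException("Not a usable IRI: " + graphIri);
        }
        return "CLEAR GRAPH <" + graphIri + ">";
    }

    public static void main(String[] args) {
        System.out.println(forGraph("http://example.org/collection1"));
    }
}
```

The update refers only to the graph IRI, so it never has to mention a blank node, whatever the graph contains.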

Cheers,

Jeen

lee

Apr 2, 2021, 8:55:50 AM

to RDF4J Users

Thank you very much Jeen! This answered lots of our questions. The way we create named graphs is as follows:

model.add(sub, pred, obj, (Resource)namedGraph);

So we are adding statements (sub, pred, obj) one by one, with a namedGraph IRI. Would this be OK too? Or do we have to follow your example to create named graphs?

We will discuss more about what you have said in our group meeting and will come back for more questions if needed.

Thanks!

Lee

Jeen Broekstra

Apr 2, 2021, 5:59:38 PM

to RDF4J Users

On Fri, 2 Apr 2021, at 23:55, lee wrote:

Thank you very much Jeen! this answered lots of our questions. the way we create named graphs is as follows: model.add(sub, pred, obj, (Resource)namedGraph); so we are adding statement (sub, pred, obj) one by one, with a namedGraph IRI. Would this way be OKAY too? or, do we have to follow your example to create named graphs?

Yes, that would be fine too.
