Google has reportedly created the first generalized artificial intelligence, “competing” with the human mind


DeepMind, a Google-owned company specializing in artificial intelligence, has just unveiled its new AI, named “Gato”. Unlike “classic” AIs, which specialize in a single task, Gato can perform more than 600 tasks, often much better than humans. A controversy has broken out over whether it is really the first “artificial general intelligence” (AGI), and experts remain skeptical of DeepMind’s announcement.

Artificial intelligence has transformed many disciplines for the better. Highly specialized neural networks now produce results far beyond human capabilities in many areas.

One of the great challenges in AI is building a system with artificial general intelligence (AGI), also called strong artificial intelligence. Such a system must be able to understand and master any task a human being could perform. It would therefore be able to compete with human intelligence, and perhaps even develop a certain degree of consciousness. Earlier this year, Google unveiled an AI capable of coding like an average programmer. Recently, in this AI race, DeepMind announced the creation of Gato, an artificial intelligence presented as the world’s first AGI. The results are published on arXiv.

An unprecedented generalist agent model

A single AI system capable of solving many tasks is nothing new. For example, Google recently started using a system for its search engine called the “Multitask Unified Model”, or MUM, which can handle text, images and video to perform tasks ranging from cross-language searches and spelling variations of a word to matching search queries with relevant images.

Google Senior Vice President Prabhakar Raghavan gave an impressive example of MUM in action, using the mock search query: “I hiked Mount Adams and now want to hike Mount Fuji next fall, what should I do differently to prepare?” MUM enabled Google Search to show the differences and similarities between Mount Adams and Mount Fuji. It also surfaced articles about the equipment needed to climb the latter. Nothing too impressive, you might say. What is genuinely new with Gato is the diversity of the tasks it tackles and the way a single system is trained to handle all of them.

Gato’s guiding design principle is to train on the widest possible variety of relevant data, spanning modalities such as images, text, proprioception, joint torques, button presses, and other discrete and continuous observations and actions.

To process this multimodal data, the researchers encode it into a flat sequence of “tokens”. These tokens represent the data in a form Gato can work with, allowing the system, for example, to figure out which combinations of words in a sentence make grammatical sense. The sequences are then batched and processed by a transformer, a type of neural network typically used in language processing.
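To make the idea of a flat token sequence more concrete, here is a minimal Python sketch, not DeepMind’s code. It assumes a scheme modeled on the paper’s description: text ids occupy the range 0 to 31,999 (the paper reportedly uses a SentencePiece vocabulary of 32,000 subwords, replaced here by a hypothetical four-word vocabulary `TOY_TEXT_VOCAB`), discrete values such as button presses are kept as small integers, and continuous values such as joint torques are mu-law compressed, cut into 1,024 bins and shifted past the text ids. The constants `MU` and `M`, the clipping step and all function names are illustrative assumptions, and images are left out.

```python
import math

TEXT_VOCAB_SIZE = 32_000  # assumption: text tokens occupy ids 0..31999
NUM_BINS = 1024           # assumption: continuous values are binned into 1024 buckets
MU, M = 100.0, 256.0      # assumption: mu-law companding parameters

# Hypothetical toy vocabulary standing in for a real subword tokenizer.
TOY_TEXT_VOCAB = {"move": 0, "the": 1, "red": 2, "block": 3}


def tokenize_text(text):
    """Map each known word to its integer id (a real system would use subwords)."""
    return [TOY_TEXT_VOCAB[word] for word in text.lower().split()]


def tokenize_discrete(values):
    """Discrete values such as button presses are already small integers."""
    tokens = [int(v) for v in values]
    assert all(0 <= t < NUM_BINS for t in tokens), "discrete values assumed in [0, 1024)"
    return tokens


def mu_law(x):
    """Compress a real value toward [-1, 1], giving small magnitudes more resolution."""
    return math.copysign(math.log(abs(x) * MU + 1.0) / math.log(M * MU + 1.0), x)


def tokenize_continuous(values):
    """Mu-law encode, clip to [-1, 1], bin into NUM_BINS buckets, shift past text ids."""
    tokens = []
    for x in values:
        y = min(max(mu_law(x), -1.0), 1.0)
        bin_index = min(int((y + 1.0) / 2.0 * NUM_BINS), NUM_BINS - 1)
        tokens.append(TEXT_VOCAB_SIZE + bin_index)
    return tokens


def flatten_episode(instruction, buttons, joint_torques):
    """Concatenate every modality into one flat sequence of integer tokens."""
    return (tokenize_text(instruction)
            + tokenize_discrete(buttons)
            + tokenize_continuous(joint_torques))


sequence = flatten_episode("move the red block", buttons=[3, 0], joint_torques=[0.0, -2.5, 10.0])
print(sequence)
```

Because every modality ends up as plain integers, one sequence model can consume an instruction, button presses and torques in a single stream.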

Unlike traditional approaches, in which a separate network is trained for each task, the same network, with the same weights, is used for all the different tasks. In a neural network, each connection between neurons carries a weight that sets how much importance a given input receives. In simple terms, the weights determine how incoming information is combined to compute an output.
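The role of weights, and what it means to share them, can be illustrated with a deliberately tiny layer of two neurons. This is a hypothetical toy, not Gato’s architecture: `SHARED_WEIGHTS`, `SHARED_BIASES` and the input vectors are made-up numbers. Each neuron multiplies every input by its weight, adds the results and a bias, then squashes the sum with `tanh`. A traditional setup would train a separate matrix like this for each task, whereas here the same one processes inputs from both tasks.

```python
import math

# Hypothetical toy parameters: ONE weight matrix and bias vector, reused for every task.
SHARED_WEIGHTS = [
    [0.5, -0.2, 0.1],   # weights of neuron 0 (one per input)
    [-0.3, 0.8, 0.4],   # weights of neuron 1
]
SHARED_BIASES = [0.0, 0.1]


def neuron(inputs, weights, bias):
    """A single neuron: scale each input by its weight, sum, add the bias, squash."""
    return math.tanh(sum(w * x for w, x in zip(weights, inputs)) + bias)


def shared_layer(inputs):
    """Apply the same weights whatever task the inputs come from."""
    return [neuron(inputs, w, b) for w, b in zip(SHARED_WEIGHTS, SHARED_BIASES)]


# Inputs from two different (hypothetical) tasks, already turned into numbers.
text_features = [1.0, 0.0, 0.5]
robot_features = [0.2, -0.7, 0.9]

print(shared_layer(text_features))
print(shared_layer(robot_features))
```

A larger weight makes the corresponding input count for more in the neuron’s output; training consists of adjusting these numbers, and in Gato’s case a single set of them must serve every task at once.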

With this representation, Gato can be trained and sampled like a standard large-scale language model, on a large number of datasets including agents’ experience in simulated and real environments, as well as a variety of natural language and image datasets. At run time, Gato relies on the context it is given to determine the form and content of the tokens it samples, and hence of its responses.
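Sampling “like a language model” means generating one token at a time, each conditioned on what came before. The sketch below assumes a hypothetical bigram table `NEXT_TOKEN_PROBS` in place of the transformer, so each step only looks at the last token, whereas Gato attends to its whole context; the task-marker tokens `<text>` and `<robot>` are likewise invented for illustration. It shows how the prompt alone steers both the form (words or actions) and the content of the output.

```python
import random

# Hypothetical toy "model": for each previous token, the probabilities of the next one.
NEXT_TOKEN_PROBS = {
    "<text>": {"hello": 0.5, "hi": 0.5},
    "hello": {"world": 1.0},
    "hi": {"there": 1.0},
    "world": {"<end>": 1.0},
    "there": {"<end>": 1.0},
    "<robot>": {"move_left": 0.5, "move_right": 0.5},
    "move_left": {"grip": 1.0},
    "move_right": {"grip": 1.0},
    "grip": {"<end>": 1.0},
}


def sample(prompt, max_new_tokens=5, seed=0):
    """Autoregressively extend the prompt, one sampled token at a time."""
    rng = random.Random(seed)
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        # Unknown contexts simply end the sequence in this toy model.
        probs = NEXT_TOKEN_PROBS.get(tokens[-1], {"<end>": 1.0})
        next_token = rng.choices(list(probs), weights=list(probs.values()))[0]
        tokens.append(next_token)
        if next_token == "<end>":
            break
    return tokens


print(sample(["<text>"]))
print(sample(["<robot>"]))
```

Changing only the prompt from `<text>` to `<robot>` switches the output from words to actions, with no change to the model itself.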

The results are quite uneven. When it comes to dialogue, Gato falls far short of the prowess of GPT-3, OpenAI’s text generation model. It can give wrong answers during conversations: for example, it answers that Marseille is the capital of France. The authors point out that this could probably be improved with further scaling. Nevertheless, it proved extremely capable in other areas. Its designers report that Gato reaches at least half of the expert score on 450 of the 604 tasks listed in the research paper.

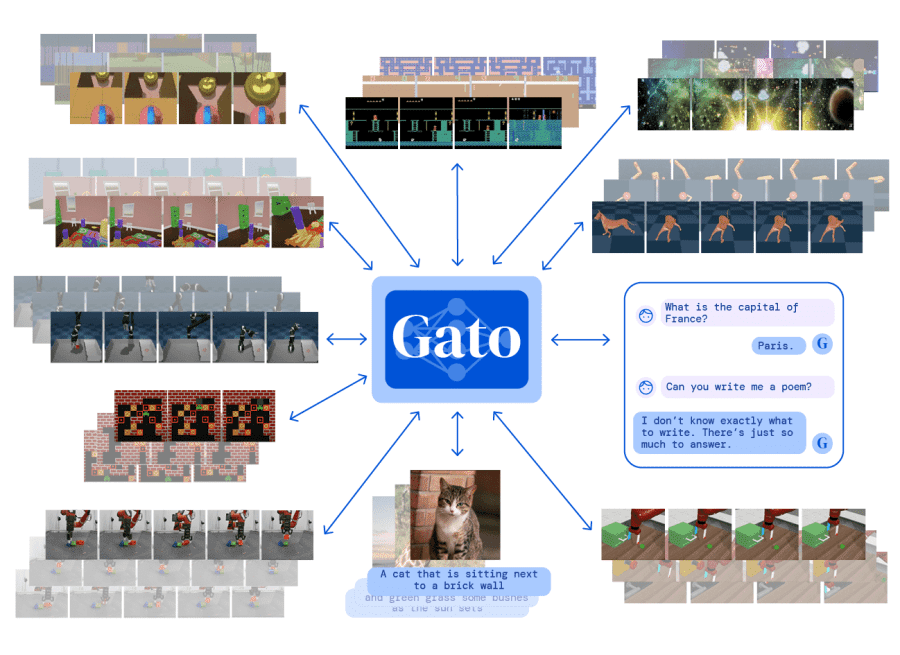

Examples of the tasks performed by Gato, as sequences of tokens. © S. Reed et al., 2022.

“The Game is Over”, really?

Some AI researchers see AGI as a potential existential catastrophe for humans: in the worst-case scenario, a “superintelligent” system surpassing human intelligence would replace humanity on Earth. Other experts believe we will not see such AGIs emerge in our lifetime. This is the pessimistic view Tristan Greene argued in an editorial on TheNextWeb. He explains that it is easy to mistake Gato for a real AGI; the difference, however, is that a general intelligence could learn to do new things without prior training.

The response to this article was not long in coming. On Twitter, Nando de Freitas, a researcher at DeepMind and professor of machine learning at the University of Oxford, declared that “The Game is Over” in the long quest for artificial general intelligence. He added: “It’s about making these models bigger, safer, more computationally efficient, faster to sample, with smarter memory, more modalities, innovative data, online/offline… It is by solving these challenges that we will obtain AGI.”

Nevertheless, the authors urge caution about the development of such agents: “Although generalist agents are still an emerging field of research, their potential impact on society calls for a thorough interdisciplinary analysis of their risks and benefits. […] Generalist agent harm mitigation tools are relatively underdeveloped and require further research before these agents are deployed.”

Moreover, generalist agents, capable of performing actions in the physical world, pose new challenges requiring new mitigation strategies. For example, physical embodiment could lead users to anthropomorphize the agent, leading to misplaced trust in the case of a faulty system.

Beyond the risk of AGI turning harmful to humanity, no data currently shows that such systems can produce solid results consistently. This is partly because human problems are often difficult, do not always have a single solution, and cannot always be trained for in advance.

Despite Nando de Freitas’ response, Tristan Greene stands by his view just as bluntly on TheNextWeb: “It’s nothing short of miraculous to see a machine pull off feats of misdirection and conjuring à la Copperfield, especially when you realize that said machine is no smarter than a toaster (and obviously dumber than the dumbest mouse).”

Whether or not we agree with these statements, and however optimistic we may be about the development of AGI, scaling up such intelligences to the point of competing with the human mind still seems far from complete, and the controversy far from settled.