Looking to contribute discrete choice models (mixed logit and others)

Joseph F Wyer

Feb 11, 2016, 3:49:23 PM

to pystatsmodels

Hi Everyone,

I am a colleague of Chad Fulton's at Oregon. I have a fair amount of experience extending existing mixed logit code, and developing my own, estimated via simulated maximum likelihood in Matlab. I have some experience in Python but want to make a full transition away from Matlab for my day-to-day work.

Things I would like to contribute, if they are still open:

- Add mixed logit and other discrete choice models estimated by simulation, like the models covered by Train (2003), Discrete Choice Methods with Simulation (http://eml.berkeley.edu/books/choice2.html).

- After some poking around, I found binary logit and probit but no ordered logit or probit. Are these still open?

My goal is to transition away from Matlab and gain experience developing Python code. If there is documentation, or there are specific modules where you think I could make contributions in this area, let me know.

Also, I'm considering writing a proposal for Google Summer of Code. Would any of these topics make a good Google Summer of Code project?

Thanks,

Joe Wyer

josef...@gmail.com

Feb 11, 2016, 4:22:08 PM

to pystatsmodels

This is a very good topic if you want to continue working on half-finished code.

I was looking at it today because of a Stack Overflow question. We had a previous GSOC project on this, but the student largely ran out of time for family reasons.

Here is the PR rebased almost two years ago.

It contains discrete choice models: CLogit (McFadden-style conditional logit) and a mixed logit based on Halton sequence integration.

I have draft code for ordered logit and probit that seems to work fine, and a draft version of ConditionalLogit (Chamberlain's estimator for panel data with the fixed effects removed). Currently only one event per group is supported, i.e. it is a multinomial clogit with a varying number of choices.

For reference (I didn't remember where I put this), it looks like there is no PR for it yet.

If you are relatively new to Python, being able to start from an existing version might make getting started easier.

I need to look at these things again to see how they can be organized so that they are easier to work on.

Josef

Joseph F Wyer

Feb 11, 2016, 4:56:22 PM

to pystatsmodels

Hi Josef,

Great! I don't think I have a problem hooking into half-finished code, especially since I'm relatively new. Thanks for providing links to PRs to look at.

I'll dig into learning more about the package and those PRs this month.

josef...@gmail.com

Feb 11, 2016, 10:21:20 PM

to pystatsmodels

Please reply inline or at the bottom, as is customary on most Python-related mailing lists and newsgroups. It's much easier to maintain context.

I was a bit rushed when I wrote my initial reply.

The multinomial models and discrete choice area is pretty large, and we have several models in various stages of completion and "messiness".

So a bit more background information:

If you are interested in discrete choice, then ConditionalLogit might not be so interesting, so I'll skip it.

OrderedChoice is a relatively straightforward example of a maximum likelihood model. It inherits from `GenericLikelihoodModel`, which is our main MLE framework for rapid development or prototyping because it does a lot automatically.

It might be the easiest way to get started with the general pattern of model and results classes that we use.
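For readers new to the pattern, here is a minimal sketch of the kind of log-likelihood an ordered logit model supplies when it subclasses a generic MLE class. It is plain NumPy, not the code from Josef's PR; the function names `logistic_cdf` and `ordered_logit_loglike` are hypothetical, and the parameterization (increasing cutpoints, categories coded 0..K-1) is an assumption.

```python
import numpy as np


def logistic_cdf(z):
    # Logistic CDF written via tanh: stays finite for large |z| and maps
    # -inf/+inf to exactly 0/1.
    return 0.5 * (1.0 + np.tanh(0.5 * z))


def ordered_logit_loglike(beta, cutpoints, exog, endog):
    """Ordered logit log-likelihood (hypothetical helper, not statsmodels API).

    endog takes integer values 0, ..., K-1 and cutpoints is an increasing
    array of length K-1, so that
        P(y = k) = F(c_k - x'beta) - F(c_{k-1} - x'beta)
    with c_{-1} = -inf and c_{K-1} = +inf.
    """
    endog = np.asarray(endog, dtype=int)
    xb = exog @ beta
    # Pad the cutpoints so every category has a lower and an upper bound.
    thresholds = np.concatenate(([-np.inf], cutpoints, [np.inf]))
    upper = logistic_cdf(thresholds[endog + 1] - xb)
    lower = logistic_cdf(thresholds[endog] - xb)
    return np.sum(np.log(upper - lower))
```

In a `GenericLikelihoodModel` subclass, `beta` and `cutpoints` would be unpacked from a single `params` vector; a common choice is to parameterize the cutpoints as a first cutpoint plus exponentiated increments so they stay ordered during optimization.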

CLogit is the basic discrete choice model. It's a bit more complicated because it combines two cases: identical regressors with parameters that differ across choices, and varying regressors with identical parameters. AFAIR, it is mostly finished and would need a review for usability and a check of test coverage.
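To make the two cases concrete, here is a small sketch (an assumed data layout, not the CLogit code in the PR) that builds utilities from alternative-varying regressors `z` with a common coefficient vector `gamma`, plus individual-specific regressors `x` with one coefficient column per alternative. The base alternative's column is fixed at zero for identification.

```python
import numpy as np


def clogit_probs(gamma, beta, z, x):
    """Conditional logit choice probabilities combining both regressor types.

    gamma : (k_z,) coefficients shared by all alternatives
    beta  : (k_x, J) alternative-specific coefficients; column 0 is the base
            alternative and must be zero for identification
    z     : (n, J, k_z) regressors that vary across alternatives
    x     : (n, k_x) regressors that are identical across alternatives
    """
    if not np.allclose(beta[:, 0], 0.0):
        raise ValueError("coefficients of the base alternative must be zero")
    utility = z @ gamma + x @ beta  # shape (n, J)
    # Subtracting the row maximum leaves probabilities unchanged but avoids overflow.
    utility = utility - utility.max(axis=1, keepdims=True)
    expu = np.exp(utility)
    return expu / expu.sum(axis=1, keepdims=True)
```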

Mixed logit (MXLogit) is a mixture model (IIRC) that uses quasi-Monte Carlo (Halton sequence) integration over the unobserved heterogeneity. It is missing unit tests, and I think I never reviewed the details.
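As a rough illustration of Halton-sequence integration in the spirit of Train (2003), here is a sketch of the simulated choice probability for one decision maker with independent normal random coefficients. It is not the MXLogit code; the helper names, the normal mixing distribution and the fixed prime bases are assumptions. Practical implementations often also discard the first draws and keep the draws fixed across optimizer iterations.

```python
from statistics import NormalDist

import numpy as np

PRIMES = (2, 3, 5, 7, 11, 13, 17, 19, 23, 29)  # one base per random coefficient


def halton(n, base):
    """First n points of the 1-D Halton sequence (index 0 skipped, so all in (0, 1))."""
    seq = np.zeros(n)
    for i in range(n):
        f, k, value = 1.0, i + 1, 0.0
        while k > 0:  # radical inverse of k in the given base
            f /= base
            value += f * (k % base)
            k //= base
        seq[i] = value
    return seq


def mixed_logit_sim_prob(mean, sd, X, chosen, n_draws=200):
    """Simulated probability that alternative `chosen` is picked.

    X : (J, k) attributes of the J alternatives for one decision maker;
    coefficient d is N(mean[d], sd[d]**2), independently across d (assumption).
    """
    mean = np.asarray(mean, dtype=float)
    sd = np.asarray(sd, dtype=float)
    k = mean.size
    if k > len(PRIMES):
        raise ValueError("this sketch supports at most 10 random coefficients")
    # One Halton dimension per coefficient, each with its own prime base.
    u = np.column_stack([halton(n_draws, PRIMES[d]) for d in range(k)])
    eta = np.vectorize(NormalDist().inv_cdf)(u)  # standard normal draws (R, k)
    betas = mean + sd * eta                      # simulated coefficients (R, k)
    utility = betas @ X.T                        # (R, J)
    utility = utility - utility.max(axis=1, keepdims=True)
    expu = np.exp(utility)
    probs = expu / expu.sum(axis=1, keepdims=True)
    # Average the logit probabilities over the draws.
    return probs[:, chosen].mean()
```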

There was also a draft version of nested logit, as a discrete choice random utility model, but I don't see it in the old PR right now.

That was mainly modeled after the nested transport problem in Greene. (A long time ago, I spent some time figuring out the parameter restrictions that come from optimal choice in a random utility model.) My initial draft versions were a bit complicated because I allowed for general tree structures (no fixed number of levels).
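For reference, a two-level nested logit with nest parameters λ_m (the textbook form, e.g. Train, ch. 4) can be sketched as below. Within nest m, P(i | m) uses utilities scaled by 1/λ_m; the nest's inclusive value IV_m is the log-sum of those scaled utilities, and P(m) is proportional to exp(λ_m · IV_m). The usual condition for consistency with utility maximization for all values of the explanatory variables is 0 < λ_m ≤ 1, and with all λ_m = 1 the model collapses to the plain multinomial logit. This is not the draft code mentioned above, and it handles only two levels rather than general trees.

```python
import numpy as np


def nested_logit_probs(V, nests, lambdas):
    """Two-level nested logit choice probabilities.

    V       : (J,) representative utilities
    nests   : list of index arrays, one per nest, partitioning 0..J-1
    lambdas : (M,) nest (log-sum) parameters
    """
    V = np.asarray(V, dtype=float)
    lambdas = np.asarray(lambdas, dtype=float)
    probs = np.empty_like(V)
    inclusive = np.empty(len(nests))
    for m, idx in enumerate(nests):
        scaled = V[idx] / lambdas[m]
        top = scaled.max()
        # Inclusive value (log-sum) of nest m, computed stably.
        inclusive[m] = top + np.log(np.sum(np.exp(scaled - top)))
        # Conditional probabilities P(i | m) within the nest.
        probs[idx] = np.exp(scaled - inclusive[m])
    # Marginal nest probabilities P(m), proportional to exp(lambda_m * IV_m).
    nest_util = lambdas * inclusive
    nest_prob = np.exp(nest_util - nest_util.max())
    nest_prob /= nest_prob.sum()
    for m, idx in enumerate(nests):
        probs[idx] *= nest_prob[m]
    return probs
```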

I will have to do another rebase of the discrete choice PR, and I might put it in the sandbox so it's easier to get started. (I have some suspected fixes that I wasn't sure about; they need to be merged before the rebase.)

In terms of getting started (based on how I work), I would recommend:

- User interface: try to play with it and, especially coming from the outside, check what works well, what is clumsy to use, and what results look weird.

- Unit tests: check and add verified test cases, especially for results that look "weird" and where tests are missing.

- Missing functionality: especially coming from another package, does the code have the required functionality and results? Add them if necessary and possible, or open an issue for future work and extension.

I would recommend starting out with something easy to figure out the pattern, and then moving to the models that you are interested in and familiar with from Matlab.

If you have questions or comments about specific models, it is also useful to add them to a GitHub issue, because that's easier to keep track of than the mailing list.

About GSOC: the topic would fit very well. I had to reply "we don't have it" for CLogit twice recently on GitHub.

If you are graduating soon, then you need to check the Google information for eligibility.

Josef

josef...@gmail.com

Feb 11, 2016, 10:44:43 PM

to pystatsmodels

I forgot to mention:

From the statistics side we will get MixedGLM, which includes mixed logit or probit for panel data with random coefficients or a random intercept. We had a GSOC project on it last year, and we should get something usable in the near future. It is based on approximate numerical integration.
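One way to carry out "approximate numerical integration" for a random-intercept logit is Gauss-Hermite quadrature. The sketch below is an assumption for illustration, not the MixedGLM code (which may use a different approximation); it uses NumPy's `hermgauss` nodes and weights and a normal random intercept per group, and the function name is hypothetical.

```python
import numpy as np
from numpy.polynomial.hermite import hermgauss


def random_intercept_logit_loglike(beta, sigma, X, y, groups, n_nodes=20):
    """Marginal log-likelihood of a logit with a N(0, sigma**2) intercept per group.

    The random intercept is integrated out with Gauss-Hermite quadrature:
    E[f(u)] ~= sum_q w_q * f(sqrt(2) * sigma * x_q) / sqrt(pi).
    """
    nodes, weights = hermgauss(n_nodes)
    u = np.sqrt(2.0) * sigma * nodes  # random-intercept values at the nodes
    xb = X @ beta
    loglike = 0.0
    for g in np.unique(groups):
        mask = groups == g
        eta = xb[mask][:, None] + u[None, :]  # (n_g, n_nodes)
        p = 1.0 / (1.0 + np.exp(-eta))
        # Conditional likelihood of the whole group at each node.
        lik = np.prod(np.where(y[mask][:, None] == 1, p, 1.0 - p), axis=0)
        loglike += np.log(np.sum(weights * lik) / np.sqrt(np.pi))
    return loglike
```

With `sigma = 0` the quadrature weights sum to sqrt(pi), so the result reduces to the ordinary pooled logit log-likelihood, which is a convenient sanity check.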

Josef

Joseph F Wyer

Mar 7, 2016, 4:37:06 PM

to pystatsmodels

So I've forked statsmodels and am looking at the dcm_rebased branch. I'm not exactly clear on how the two PRs you mentioned earlier, 1605 and 2600, relate to the dcm_rebased branch.

I saw you posted a summary of open issues here: https://github.com/statsmodels/statsmodels/issues/2382

This looks like it was about a year ago. Have there been any big changes since then or can I consider this a current "wishlist" for mxlogit?

You recommended looking at the ordered choice code to get a feel for how to use the `GenericLikelihoodModel` class. I am not finding any ordered model code in the statsmodels/discrete/ folder on the dcm_rebased branch.

My ideas for moving forward are to:

- Look at and play with the ordered model to get a feel for the statsmodels classes used.

- Take a close look at the clogit and mxlogit code and see where it stands and how it functions.

Thanks for any pointers on these questions.

josef...@gmail.com

Mar 7, 2016, 4:59:00 PM

to pystatsmodels

Brief answer; I'm busy with other things right now.

Ordered logit and probit weren't part of the dcm GSOC; that's more recent code in my PR.

It's not directly linked to the dcm code, but it's in the same category of extensions to multinomial logit.

Essentially nothing happened with dcm since the rebase.

I reused the Halton sequence mixing code for an experimental generic mixed model, with Poisson as the example, but not for anything multinomial.

Josef

josef...@gmail.com

Mar 7, 2016, 5:33:43 PM

to pystatsmodels

I just remembered:

issue #2382 has a back link from

where I tried to fix the first point.

I didn't merge it because I fixed this by analogy to other models without checking the references for mixed logit or dcm.

I think something like that needs to be changed in the rebased branch.

Josef

Timothy Brathwaite

Mar 14, 2016, 10:42:03 PM

to pystatsmodels

Hi all,

My name is Timothy Brathwaite, and I'm a PhD student at UC Berkeley working in the area of discrete choice with Prof. Joan Walker.

I don't have any experience developing software professionally or contributing to large open-source projects such as statsmodels. However, I have implemented a number of discrete choice models, including the conditional logit model, in Python. I developed a fully functioning module that has worked very well for me and others in my research group, and I just published it to PyPI today:

I haven't seen any conditional logit implementation in statsmodels yet, especially none that accounts for choice sets that vary across observations and that allows coefficients to be constrained across a subset of alternatives (in addition to the usual choices of having a single coefficient across all alternatives or a different coefficient for each alternative).
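Choice sets that vary across observations are commonly handled by storing the data in "long" format, with one row per observation and available alternative, and normalizing utilities within each observation. The sketch below illustrates that idea with NumPy group operations; it is not pylogit's implementation, and the names are hypothetical.

```python
import numpy as np


def long_format_probs(design, beta, obs_ids):
    """Conditional logit probabilities for data in long format.

    design  : (N_rows, k) one row per (observation, available alternative)
    obs_ids : (N_rows,) observation id of each row; observations may have
              different numbers of available alternatives
    """
    utility = design @ beta
    _, inv = np.unique(obs_ids, return_inverse=True)
    # Subtract the per-observation maximum utility for numerical stability.
    max_u = np.full(inv.max() + 1, -np.inf)
    np.maximum.at(max_u, inv, utility)
    expu = np.exp(utility - max_u[inv])
    # Sum exp(utility) within each observation's own choice set.
    denom = np.bincount(inv, weights=expu)
    return expu / denom[inv]
```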

I think that the correct place for such a package is really within statsmodels as opposed to being a standalone package, and I would like to contribute if possible.

Would anyone here be willing to take a look at my project (there are examples on the github page) and provide some direction on how best to go about contributing?

Thanks for reading,

Timothy

josef...@gmail.com

Mar 15, 2016, 1:24:05 AM

to pystatsmodels

Hi Timothy,

Thanks, it's great to see someone else working in this area. With some effort it should be possible to get this area well covered in statsmodels.

Did you compare your implementation with the ConditionalLogit in the former GSOC PR?

I only had time to partially skim your package, so I'll leave most comments until after I have looked at it a bit more carefully.

In terms of implementation the main difference is that we separate out the results into Results classes and don't store the estimation results as attributes of the model instance. Also, most of our results in statsmodels are calculated lazily on demand, while AFAICS you calculate all results immediately.

Overall, AFAICS, you are not subclassing any of our models or results, which requires code duplication from our perspective. However, there might be some parts where statsmodels still needs refactoring and part of your code might be closer to a future statsmodels than the current one. (Just a vague impression right now, especially for the usage of scipy minimize.)
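A minimal sketch of the separation described above: the model object holds the data and the likelihood, `fit` returns a separate results object, and derived statistics are computed only when first accessed and then cached. It uses `functools.cached_property` as a stand-in (statsmodels has its own caching decorators), and the toy binary-logit class names are hypothetical.

```python
from functools import cached_property

import numpy as np


class ToyLogit:
    """Model: stores data and evaluates the likelihood; no estimates stored."""

    def __init__(self, endog, exog):
        self.endog = np.asarray(endog, dtype=float)
        self.exog = np.asarray(exog, dtype=float)

    def loglike(self, params):
        xb = self.exog @ params
        # log P(y) = y * xb - log(1 + exp(xb))
        return np.sum(self.endog * xb - np.logaddexp(0.0, xb))

    def score(self, params):
        p = 1.0 / (1.0 + np.exp(-(self.exog @ params)))
        return self.exog.T @ (self.endog - p)

    def hessian(self, params):
        p = 1.0 / (1.0 + np.exp(-(self.exog @ params)))
        return -(self.exog * (p * (1.0 - p))[:, None]).T @ self.exog

    def fit(self, maxiter=100, tol=1e-10):
        params = np.zeros(self.exog.shape[1])
        for _ in range(maxiter):  # Newton-Raphson
            step = np.linalg.solve(self.hessian(params), self.score(params))
            params = params - step
            if np.max(np.abs(step)) < tol:
                break
        # Estimates live in a separate results object, not on the model.
        return ToyLogitResults(self, params)


class ToyLogitResults:
    """Results: holds params; other statistics are computed lazily and cached."""

    def __init__(self, model, params):
        self.model = model
        self.params = params

    @cached_property
    def llf(self):
        return self.model.loglike(self.params)

    @cached_property
    def bse(self):
        cov = np.linalg.inv(-self.model.hessian(self.params))
        return np.sqrt(np.diag(cov))
```

Nothing beyond `params` is computed in `fit`; `llf` and `bse` are evaluated on first access, which keeps fitting cheap when only some statistics are needed.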

The notebooks look good, but I didn't have time to check the details yet.

Josef

josef...@gmail.com

Mar 15, 2016, 12:56:15 PM

to pystatsmodels

Some questions on the model definition, about explanatory variables that show up for only some alternatives:

I don't remember having ever looked at those.

AFAICS, in the actual design matrix used in the model the choice specific variable is filled up with zeros for all choices where the variable doesn't apply.

In terms of computation this is then not different from any other variable with alternative specific values, is it?
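A small sketch of the zero-filling described above, for a hypothetical three-alternative choice in which `wait` only enters the utility of alternative 2. Because the zero entries contribute nothing to the other alternatives' utilities, the probabilities come from the same softmax as for any alternative-varying variable, and the `wait` coefficient leaves the odds between alternatives 0 and 1 unchanged. Variable names and layout are assumptions for illustration.

```python
import numpy as np


def build_design(cost, wait):
    """Design array of shape (n, J=3, k=4) for a hypothetical 3-alternative choice.

    Columns: ASC_1, ASC_2, cost (generic coefficient), wait (alternative 2 only).
    cost : (n, 3) cost of each alternative; wait : (n,) wait time of alternative 2
    """
    n = cost.shape[0]
    design = np.zeros((n, 3, 4))
    design[:, 1, 0] = 1.0     # ASC for alternative 1 (alternative 0 is the base)
    design[:, 2, 1] = 1.0     # ASC for alternative 2
    design[:, :, 2] = cost    # cost varies over all alternatives
    design[:, 2, 3] = wait    # wait enters alternative 2 only; zeros elsewhere
    return design


def choice_probs(design, beta):
    utility = design @ beta   # (n, J)
    utility = utility - utility.max(axis=1, keepdims=True)
    expu = np.exp(utility)
    return expu / expu.sum(axis=1, keepdims=True)
```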

In terms of theory: are there any problems with identification of the corresponding parameters, or problems from the filling with zeros?

My guess is that it's identified through within-alternative variation and requires an alternative-specific intercept to be present.

Is there a reference for this case? I haven't looked at this literature in a while.

Aside: We follow the numpy docstring standard like most packages in this part of scientific python.

The core part looks very good so far, especially having analytical derivatives and Hessian.
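For the conditional logit with alternative-varying attributes z_ij, the analytical derivatives have a compact form: the score is Σ_i (z_{i,chosen} − Σ_j p_ij z_ij) and the Hessian is −Σ_i Σ_j p_ij (z_ij − z̄_i)(z_ij − z̄_i)', where z̄_i is the probability-weighted mean of the attributes. The sketch below is a plain NumPy illustration, not pylogit's code; comparing such hand-coded derivatives against central finite differences is a cheap way to validate them.

```python
import numpy as np


def clogit_loglike_grad_hess(beta, Z, choice):
    """Log-likelihood, analytic score and Hessian of a conditional logit.

    Z : (n, J, k) alternative attributes; choice : (n,) chosen alternative index.
    """
    u = Z @ beta
    u = u - u.max(axis=1, keepdims=True)   # stability shift, probabilities unchanged
    p = np.exp(u)
    p /= p.sum(axis=1, keepdims=True)
    rows = np.arange(Z.shape[0])
    loglike = np.sum(np.log(p[rows, choice]))
    zbar = np.einsum("nj,njk->nk", p, Z)   # probability-weighted mean attributes
    grad = np.sum(Z[rows, choice] - zbar, axis=0)
    dev = Z - zbar[:, None, :]
    hess = -np.einsum("nj,njk,njl->kl", p, dev, dev)
    return loglike, grad, hess
```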

For discussing details, it would be better to move to an issue on GitHub.

Josef

josef...@gmail.com

Mar 15, 2016, 1:19:41 PM

to pystatsmodels

Another general question:

What are your extension plans for this? Which models do you think follow the same pattern?

I'm asking mainly because I saw the more general pattern with using a base class and `utility_transform` to specify some model specific features.

Josef

Timothy Brathwaite

Mar 15, 2016, 9:57:23 PM

to pystatsmodels

Hey Josef, thanks for taking a look!

You're right: I do calculate all results immediately, and I'm not yet subclassing any of your models or results. This was just to reduce the number of dependencies needed. I haven't actually looked under the hood at your results or model classes yet, beyond what was needed to use the statsmodels summary tables. I'm happy to look at whether I could easily subclass your existing models or results to reduce code duplication as much as possible.

In terms of choice-specific variables, filling the design matrix with zeros was done specifically to make the computation the same regardless of whether a variable shows up in the utility equation for a given alternative. As for identification, as long as there is variation across alternatives, the parameter should be identified. I think that this variation is sufficient for identification and that an alternative-specific intercept isn't necessary. My usual go-to reference for issues of identification is Ken Train's Discrete Choice Methods with Simulation, section 2.5 (Chapter 2, Properties of Discrete Choice Models). He doesn't discuss choice-specific variables per se, though.

I literally just found out about the numpy docstring standard when trying to register pylogit on PyPI. I wish I had known about it sooner! I'll get to reformatting my docstrings next week (I have a wedding to go to this weekend).

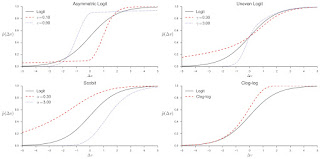

As far as extensions go, I have four other models already coded and working with pylogit on my own computer. Two of them are related to known models: they are multinomial generalizations of the clog-log model and the scobit model ("Scobit: An Alternative Estimator to Probit and Logit"). The other two are new generalizations of the conditional logit model that I developed in my research. I'm currently writing an article on these four models, so I decided to hold off on including them in the first pylogit release. In terms of differences from the standard logit model, here's a picture of what they look like in the binary case. They are all asymmetric choice models (in terms of the shape of their probability curves), and they [often] have extra parameters to be estimated that determine the precise shape of the curve. In this sense they are very similar to the "Generalized Logistic Model" described by Stukel.

--Timothy

josef...@gmail.com

Mar 15, 2016, 10:41:36 PM

to pystatsmodels

On Tue, Mar 15, 2016 at 9:57 PM, Timothy Brathwaite <timoth...@gmail.com> wrote:

> In terms of the choice specific variables, filling the design matrix with zeros was done specifically to make computation the same regardless of whether or not a variable shows up in the utility equation for a given alternative or not. As for identification, as long as there is alternative variation, the parameter should be identified. I think that alternative variation is sufficient for identification and that an alternative specific intercept isn't necessary. My usual go to for issues of identification are: Ken Train's Discrete Choice Methods with Simulation, section 2.5 (Chapter 2. Properties of Discrete Choice Models). He doesn't discuss choice specific variables per se though.

I was thinking that the slope estimate might be biased without an alternative-specific intercept. That's based on an analogy with a regression in which an explanatory variable is truncated at zero and the values of the dependent variable at those zeros are not on the regression line.

It's just visual intuition, I haven't seen any math for it.

> As far as extensions go, I have four other models already coded and working with pylogit on my own computer. Two of these models are related to known models. They are multinomial generalizations of the clog-log model and the scobit model ("Scobit: An Alternative Estimator to Probit and Logit"). The other two models are new generalizations of the conditional logit model that I developed in my research. I'm currently writing an article on these four models now, so I decided to hold off on including them in the first pylogit release. In terms of differences from the standard logit model here's a picture of what they look like in the binary case. They are all asymmetric choice models (in terms of the shape of their probability curve, and they [often] have an extra number of parameters to be estimated in order to determine the precise shape of the curve). In this sense they are very similar to the "Generalized Logistic Model" described by Stukel.

That sounds interesting.

If you know generalized linear models (GLM), then this corresponds to using different link functions. However, the standard implementation, as in statsmodels GLM, does not allow for extra parameters in the link function itself.

In terms of implementation, your helper functions for `utility_transforms` and their derivatives might then be similar to what we have in the Link classes. (I never looked at the details of the GLM version of multinomial models; we don't have those in GLM.)
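For the binary case, the two known asymmetric models mentioned above can be written as inverse links. The sketch below uses the scobit form P = 1 − (1 + exp(xβ))^(−α) from Nagler (1994), which reduces to the logit at α = 1, and the complementary log-log form P = 1 − exp(−exp(xβ)). It only illustrates the binary building blocks, not Timothy's multinomial generalizations, and the function names are hypothetical. The scobit shape parameter α is the kind of extra link parameter that a standard GLM link does not estimate.

```python
import numpy as np


def scobit_prob(xb, alpha):
    """Scobit P(y=1) = 1 - (1 + exp(xb))**(-alpha); alpha = 1 gives the logit."""
    # log(1 + exp(xb)) via logaddexp and 1 - exp(-a) via -expm1(-a), for stability.
    return -np.expm1(-alpha * np.logaddexp(0.0, xb))


def cloglog_prob(xb):
    """Complementary log-log P(y=1) = 1 - exp(-exp(xb))."""
    return -np.expm1(-np.exp(xb))
```

Unlike the logit, neither curve satisfies P(xβ) + P(−xβ) = 1 in general, which is the asymmetry Timothy describes.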

Josef

Timothy Brathwaite

Mar 16, 2016, 6:07:44 PM

to pystatsmodels

Oh, got it. I think you're totally right about the coefficients being biased if you leave out the alternative-specific intercept and there's no theoretical reason to think the mean of the alternative-specific error terms is zero. This is just based on my experience with linear regression. In this case, I think you'd be able to identify a parameter for the choice-specific variable, but the identified coefficient wouldn't be very useful.

And you're spot on: in my mind, I think of these logit-type models as really being an exercise in using different link functions. Claudia Czado at the Technical University of Munich has done a lot of work on parametrized link functions for the binary case, but I've seen only two papers using parametric link functions for multinomial models (one of them not even making the link, no pun intended, with GLMs, so it was mostly by accident). Thanks for the hint about the Link classes; I'll take a look at what you guys are using and see if there's any similarity that can result in code sharing.

-Timothy
