back to the tiered memory

Anton Gavriliuk

Sep 27, 2022, 5:23:58 AM

to pmem

Hi all

I have a box with 2 x 8280L sockets, 768 GB DRAM and 6144 GB PMEM, running RHEL 8.6 with the latest available mainline (ml) kernel, 5.19.11-1.el8.elrepo.x86_64.

When setting up tiered memory, I got the following warning:

[root@memverge ~]# daxctl reconfigure-device --mode=system-ram all

dax1.0:

WARNING: detected a race while onlining memory

Some memory may not be in the expected zone. It is

recommended to disable any other onlining mechanisms,

and retry. If onlining is to be left to other agents,

use the --no-online option to suppress this warning

dax1.0: 2 memory sections already online

dax0.0:

WARNING: detected a race while onlining memory

Some memory may not be in the expected zone. It is

recommended to disable any other onlining mechanisms,

and retry. If onlining is to be left to other agents,

use the --no-online option to suppress this warning

dax0.0: 1 memory section already online

[root@memverge ~]# cat /sys/devices/system/memory/auto_online_blocks

offline

[root@memverge ~]#

The Optane system-ram devices are added to the existing NUMA nodes instead of creating additional CPU-free NUMA nodes.

[root@memverge ~]# daxctl list

[

{

"chardev":"dax1.0",

"size":3183575302144,

"target_node":1,

"align":2097152,

"mode":"system-ram",

"movable":true

},

{

"chardev":"dax0.0",

"size":3183575302144,

"target_node":0,

"align":2097152,

"mode":"system-ram",

"movable":true

}

]

[root@memverge ~]#

[root@memverge ~]# numactl -H

available: 2 nodes (0-1)

node 0 cpus: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27

node 0 size: 3419704 MB

node 0 free: 3139765 MB

node 1 cpus: 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55

node 1 size: 3420152 MB

node 1 free: 3141870 MB

node distances:

node 0 1

0: 10 21

1: 21 10

[root@memverge ~]# lsmem

RANGE SIZE STATE REMOVABLE BLOCK

0x0000000000000000-0x000000607fffffff 386G online yes 0-192

0x0000006c80000000-0x000003b17fffffff 3.3T online yes 217-1890

0x000003bd80000000-0x000006a1ffffffff 2.9T online yes 1915-3395

Memory block size: 2G

Total online memory: 6.6T

Total offline memory: 0B

[root@memverge ~]# free -h

total used free shared buff/cache available

Mem: 6.5Ti 544Gi 6.0Ti 13Mi 711Mi 5.8Ti

Swap: 4.0Gi 0B 4.0Gi

[root@memverge ~]#

Any ideas why the Optane system-ram devices are added to the existing NUMA nodes instead of creating their own CPU-free NUMA nodes?

Anton

Jeff Moyer

Sep 27, 2022, 7:17:54 PM

to Anton Gavriliuk, pmem

Anton Gavriliuk <antos...@gmail.com> writes:

> Hi all

>

> I have 2 x 8280L sockets box with 768 GB DRAM and 6144 GB PMEM running

> under RHEL 8.6 with latest available ml kernel 5.19.11-1.el8.elrepo.x86_64

>

> When I setting up tiered memory, I got next warning

>

> [root@memverge ~]# daxctl reconfigure-device --mode=system-ram all

> dax1.0:

> WARNING: detected a race while onlining memory

> Some memory may not be in the expected zone. It is

> recommended to disable any other onlining mechanisms,

> and retry. If onlining is to be left to other agents,

> use the --no-online option to suppress this warning

> dax1.0: 2 memory sections already online

> dax0.0:

> WARNING: detected a race while onlining memory

> Some memory may not be in the expected zone. It is

> recommended to disable any other onlining mechanisms,

> and retry. If onlining is to be left to other agents,

> use the --no-online option to suppress this warning

> dax0.0: 1 memory section already online

>

> [root@memverge ~]# cat /sys/devices/system/memory/auto_online_blocks

> offline

> [root@memverge ~]#

Did you try the --no-online option to daxctl reconfigure-device?

-Jeff

Vishal Verma

Sep 27, 2022, 8:29:40 PM

to Jeff Moyer, Anton Gavriliuk, pmem

Actually I'm not sure --no-online will help here.

The target_node being '0' and '1' seems problematic. Normally the NFIT table specifies a new memory-only node for NVDIMMs, and it seems like the platform firmware might not be setting that up correctly.

There's some discussion here[1] which can help you test if this is happening.

Thanks,

Vishal
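One way to spot the symptom described above is to compare each device's target_node from `daxctl list` with the nodes that `numactl -H` reports as having CPUs. The following is only a minimal sketch in Python: the helper names (`nodes_with_cpus`, `shared_target_nodes`) are made up for illustration, the sample data is abbreviated from the outputs posted in this thread, and it does not inspect the NFIT itself.

```python
import json
import re

def nodes_with_cpus(numactl_text):
    """Return the set of NUMA node ids that list at least one CPU."""
    nodes = set()
    for line in numactl_text.splitlines():
        m = re.match(r"node (\d+) cpus:(.*)", line.strip())
        if m and m.group(2).split():
            nodes.add(int(m.group(1)))
    return nodes

def shared_target_nodes(daxctl_json, numactl_text):
    """Return chardev names whose target_node is a CPU-bearing node."""
    cpu_nodes = nodes_with_cpus(numactl_text)
    return [d["chardev"] for d in json.loads(daxctl_json)
            if d.get("target_node") in cpu_nodes]

# Sample data abbreviated from the outputs earlier in the thread
# (CPU lists shortened, unrelated JSON fields dropped).
DAXCTL = ('[{"chardev":"dax1.0","target_node":1,"mode":"system-ram"},'
          '{"chardev":"dax0.0","target_node":0,"mode":"system-ram"}]')
NUMACTL = """available: 2 nodes (0-1)
node 0 cpus: 0 1 2 3
node 0 size: 3419704 MB
node 1 cpus: 28 29 30 31
node 1 size: 3420152 MB
"""

# Both devices target nodes that already have CPUs.
print(shared_target_nodes(DAXCTL, NUMACTL))  # ['dax1.0', 'dax0.0']
```

An empty list would suggest that every dax device targets its own memory-only node.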

Anton Gavriliuk

Sep 28, 2022, 10:21:35 AM

to Jeff Moyer, pmem

> Did you try the --no-online option to daxctl reconfigure-device?

There is no WARNING anymore, but I still have only the two existing NUMA nodes.

[root@memverge ~]# numactl -H

available: 2 nodes (0-1)

node 0 cpus: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27

node 0 size: 386616 MB

node 0 free: 154045 MB

node 1 cpus: 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55

node 1 size: 387064 MB

node 1 free: 154526 MB

node distances:

node 0 1

0: 10 21

1: 21 10

[root@memverge ~]# daxctl reconfigure-device --mode=system-ram --no-online all

[

{

"chardev":"dax0.0",

"size":3183575302144,

"target_node":0,

"align":2097152,

"mode":"system-ram"

}

]

reconfigured 2 devices

[root@memverge ~]# numactl -H

available: 2 nodes (0-1)

node 0 cpus: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27

node 0 size: 3419704 MB

node 0 free: 3139635 MB

node 1 cpus: 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55

node 1 size: 3420152 MB

node 1 free: 3139265 MB

node distances:

node 0 1

0: 10 21

1: 21 10

[root@memverge ~]#

Anton

On Wed, Sep 28, 2022 at 02:17, Jeff Moyer <jmo...@redhat.com> wrote:

Anton Gavriliuk

Sep 28, 2022, 3:00:29 PM

to Vishal Verma, Jeff Moyer, pmem

Thank you Vishal

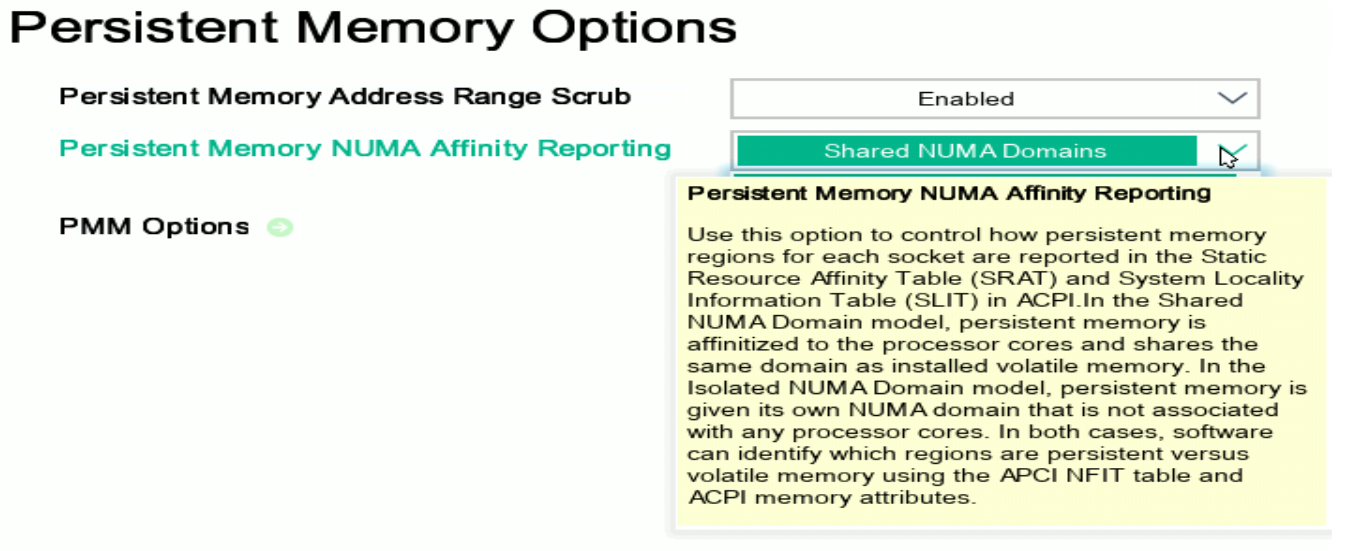

In the BIOS settings I found the tunable and changed it from "Shared NUMA Domains" to "Isolated NUMA Domains".

Everything is fine now.

[root@memverge anton]# numactl -H

available: 4 nodes (0-3)

node 0 cpus: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27

node 0 size: 386648 MB

node 0 free: 107487 MB

node 1 cpus: 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55

node 1 size: 387031 MB

node 1 free: 106451 MB

node 2 cpus:

node 2 size: 3033088 MB

node 2 free: 3033088 MB

node 3 cpus:

node 3 size: 3033088 MB

node 3 free: 3033088 MB

node distances:

node 0 1 2 3

0: 10 21 17 28

1: 21 10 28 17

2: 17 28 10 28

3: 28 17 28 10

[root@memverge anton]#

So now I have a tiered-memory system even without CXL, and I'm going to play with it!

Anton

On Wed, Sep 28, 2022 at 03:29, Vishal Verma <stella...@gmail.com> wrote:
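To see which DRAM node each new CPU-free node sits behind, the distance matrix from `numactl -H` can be read programmatically. This is a rough Python sketch, not a tool from the thread: the function names are hypothetical, the CPU lists in the sample are shortened, and it assumes node ids are contiguous and start at 0 so that a node id can be used as a column index.

```python
import re

# Abbreviated from the 4-node `numactl -H` output above (CPU lists shortened).
NUMACTL = """available: 4 nodes (0-3)
node 0 cpus: 0 1 2 3
node 1 cpus: 28 29 30 31
node 2 cpus:
node 3 cpus:
node distances:
node 0 1 2 3
0: 10 21 17 28
1: 21 10 28 17
2: 17 28 10 28
3: 28 17 28 10
"""

def parse_numactl(text):
    """Return (cpu_nodes, distances) parsed from `numactl -H` text."""
    cpu_nodes, distances = set(), {}
    for line in text.splitlines():
        line = line.strip()
        m = re.match(r"node (\d+) cpus:(.*)", line)
        if m:
            if m.group(2).split():
                cpu_nodes.add(int(m.group(1)))
            continue
        m = re.match(r"(\d+):\s+(.*)", line)
        if m:
            distances[int(m.group(1))] = [int(x) for x in m.group(2).split()]
    return cpu_nodes, distances

def nearest_cpu_node(text):
    """Map each CPU-free node to the CPU-bearing node with the smallest distance."""
    cpu_nodes, distances = parse_numactl(text)
    result = {}
    for node, row in distances.items():
        if node not in cpu_nodes:
            # Assumption: column index equals node id.
            result[node] = min(cpu_nodes, key=lambda c: row[c])
    return result

print(nearest_cpu_node(NUMACTL))  # {2: 0, 3: 1}
```

With the distances posted above, node 2 is closest to node 0 and node 3 is closest to node 1, which matches one PMEM node per socket.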

Vishal Verma

Sep 28, 2022, 3:18:21 PM

to Anton Gavriliuk, Jeff Moyer, pmem

Cheers! I wasn't aware this was a tunable - makes sense. What system is this?

Thanks

Vishal

Anton Gavriliuk

Sep 28, 2022, 3:47:48 PM

to Vishal Verma, Jeff Moyer, pmem

This is an HPE DL380g10 box. Quite old, but still a VERY good 2 socket box.

First I ran Intel's mlc to check latencies across the NUMA nodes:

[root@memverge Linux]# ./mlc --latency_matrix

Intel(R) Memory Latency Checker - v3.9a

Command line parameters: --latency_matrix

Using buffer size of 2000.000MiB

Measuring idle latencies (in ns)...

Numa node

Numa node 0 1 2 3

0 82.5 148.1 172.8 238.1

1 147.9 82.5 237.8 173.0

[root@memverge Linux]#

So for local/remote Optane we have roughly 173/238 ns, not bad. It would be interesting to see these numbers on a physical CXL.mem setup.

Anyway, thanks to Optane, we can already use a CXL.mem-style setup without actual CXL hardware.

Anton

On Wed, Sep 28, 2022 at 22:18, Vishal Verma <stella...@gmail.com> wrote:
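For a quick sense of scale, the mlc numbers above can be expressed as multiples of local DRAM latency. The snippet below is just an illustrative Python calculation on the figures posted in this thread; the function name is hypothetical, and it assumes that nodes 0 and 1 are DRAM and nodes 2 and 3 are the Optane nodes, as in the `numactl -H` output above.

```python
# Idle latencies in ns, copied from the mlc --latency_matrix output above.
# Rows are the CPU (initiator) nodes, columns are the memory nodes 0..3.
LATENCY_NS = {
    0: [82.5, 148.1, 172.8, 238.1],
    1: [147.9, 82.5, 237.8, 173.0],
}

def slowdown_vs_local_dram(matrix):
    """Return {cpu_node: [ratio per memory node]} relative to local DRAM."""
    ratios = {}
    for cpu_node, row in matrix.items():
        local_dram = row[cpu_node]  # assumes node N's own DRAM is column N
        ratios[cpu_node] = [round(ns / local_dram, 2) for ns in row]
    return ratios

for cpu_node, row in slowdown_vs_local_dram(LATENCY_NS).items():
    print(f"from node {cpu_node}:", " ".join(f"{r:.2f}x" for r in row))
```

On these numbers, local Optane comes out at roughly 2.1x local DRAM idle latency and remote Optane at roughly 2.9x.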
