"Global" Rotation Offset

fxframes

fxframes

johanne...@formann.de

I would try to adjust that (either in hardware or in the OpenPnP settings).

fxframes

johanne...@formann.de

Does the problem persist, if you disable bottom vision?

fxframes

fxframes

Mike Menci

fxframes

Mike Menci

On 2 Jun 2021, at 19.39, fxframes <mp5...@gmail.com> wrote:

Thanks for the suggestion.

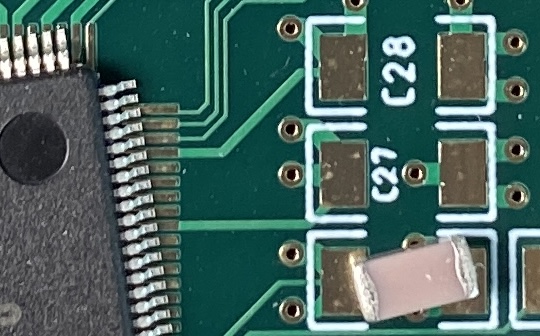

From the images below it does seem to turn a bit more than 90º.

Is this a case for adjusting steps/mm?

Start: <Screenshot 2021-06-02 at 18.34.54.png>

End: <Screenshot 2021-06-02 at 18.35.29.png>

--

You received this message because you are subscribed to the Google Groups "OpenPnP" group.

To unsubscribe from this group and stop receiving emails from it, send an email to openpnp+u...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/openpnp/50bc1d31-954f-4f0d-ae1d-600c6530c369n%40googlegroups.com.


bert shivaan


bert shivaan


fxframes

fxframes

johanne...@formann.de

bert shivaan


fxframes

Mike Menci

On 2 Jun 2021, at 23.34, fxframes <mp5...@gmail.com> wrote:

Interesting... I’ll take a look tomorrow. Thanks.


ma...@makr.zone

Hi fxframes

Some thoughts:

- If you use pre-rotate, then moderately wrong steps/degree should automatically be compensated. So during these tests, do not use pre-rotate. But during production, do use it (it is way better).

- If it is a steps/degree issue, then different placement angles should result in different angle offsets. Parts at 0° should show no offset, parts at 90° should show some offset, and parts at 180° double that. If this does not happen, then it is not a steps/degree issue.

- If it is a steps/degree issue, then OpenPnP is not the place to fix it. Check your controller settings instead. The images you posted earlier would suggest that.

- If you have checked all that and still see the angular offset, then look at the fiducial PCB orientation. Double-check that the E-CAD import file for your fiducial locations contains the right coordinates (you would not be the first user to mistakenly import an older revision of a design ;-)

- The bottom camera has both a Position Rotation and an Image Transforms Rotation (on two separate tabs). Make sure the Position Rotation is zero. (Frankly, I don't know if a non-zero Position Rotation can be made to work correctly at all, or if this field should rather be removed.)

- If all this does not help, then yes, send the log and machine.xml.
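Mark's second point can be checked numerically. A minimal sketch, assuming you have measured the angular placement error (in degrees) of parts placed at a few different job rotations: it fits offset = slope * angle + bias by least squares. A clearly non-zero slope points at steps/degree scaling; a bias with no slope points elsewhere (fiducials, feeder rotation, camera rotation). The function names and the 0.5° tolerance are hypothetical, chosen only for illustration.

```python
def fit_offset_model(samples):
    """Least-squares fit of offset = slope * placement_angle + bias.

    samples: list of (placement_angle_deg, observed_offset_deg) pairs.
    Returns (slope, bias); slope is the fractional over/under-rotation.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two placement angles")
    mean_a = sum(a for a, _ in samples) / n
    mean_o = sum(o for _, o in samples) / n
    var_a = sum((a - mean_a) ** 2 for a, _ in samples)
    if var_a == 0:
        raise ValueError("placement angles must differ")
    cov = sum((a - mean_a) * (o - mean_o) for a, o in samples)
    slope = cov / var_a
    bias = mean_o - slope * mean_a
    return slope, bias


def classify(samples, tolerance_deg=0.5):
    """Hypothetical triage: 'scaling', 'constant' or 'ok'."""
    slope, bias = fit_offset_model(samples)
    # Offset the slope alone would cause at the largest tested angle.
    span = max(abs(a) for a, _ in samples)
    if abs(slope) * span > tolerance_deg:
        return "scaling"   # offset grows with angle: suspect steps/degree
    if abs(bias) > tolerance_deg:
        return "constant"  # same offset at every angle: look elsewhere
    return "ok"
```

Offsets of 0°, 2° and 4° at 0°, 90° and 180° classify as "scaling" (about 2.2 % over-rotation), while a steady 5° at every angle classifies as "constant".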


fxframes

fxframes

Please check out the video below. The first cap is set to 0º rotation in the job and the second one to 180º, but both rotate ~180º before arriving at the bottom camera.

fxframes


fxframes

ma...@makr.zone

> I've disabled pre-rotation BUT the parts still rotate on their way to the bottom camera?

> ...but both rotate ~180º before arriving at the bottom camera.

The feeder itself and the part inside the tape can also each have a rotation, so this is normal. The difference is that the part must be visible at 0° in the camera when it is aligned. The rotation 0° means: "I see the part in the same orientation as when I look at the footprint as drawn in the E-CAD library".

Conversely, with pre-rotate: "I see the part as it will be placed on the PCB on the machine". So it will already have the rotation of the design plus the rotation of the PCB itself. The advantage of pre-rotate is that any inaccuracies through the rotation (including runout, backlash etc.) are already compensated out. This is important for large parts, where a few degrees of offset result in relatively large pad offsets due to leverage.
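To put numbers on the leverage argument: a sketch, assuming the part rotates about its center, that computes how far a pad at a given distance from the center moves for a given angle error (the chord of the arc). The function name is hypothetical.

```python
import math

def pad_offset_mm(distance_from_center_mm, angle_error_deg):
    """Displacement (chord length) of a pad that sits distance_from_center_mm
    from the part's rotation center when the part is off by angle_error_deg."""
    theta = math.radians(angle_error_deg)
    return 2 * distance_from_center_mm * math.sin(theta / 2)
```

For example, a pad 10 mm from the center of a large part moves about 0.35 mm for a 2° error, while on a small part with pads 0.5 mm from the center the same error moves it by less than 0.02 mm.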

_Mark


fxframes

tony...@att.net

ma...@makr.zone

Good catch Tony! I'll fix it.

@fxframes, in the meantime you can set it manually. I would be surprised if this is related to the problem here, though...

https://github.com/openpnp/openpnp/wiki/GcodeDriver%3A-Command-Reference#home_command

_Mark


fxframes

» The feeder itself and the part inside the tape can also each have a rotation, so this is normal. The difference is that the part must be visible at 0° in the camera when it is aligned. The rotation 0° means: "I see the part in the same orientation as when I look at the footprint as drawn in the E-CAD library”.

ma...@makr.zone

> If I’m understanding this correctly Mark, should the part be correctly aligned before it leaves the bottom camera and is moved over to the pcb to be placed?

Well, not really. When pre-rotate is disabled, what you see is what OpenPnP initially thinks is zero degrees. So what you effectively see is the pick angle error.

In your images it is huge, and both the part and the nozzle angle (visible as the crosshairs) look strange.

Something is really wrong here.

- Check the angle(s) of the feeders/rotation in tape.

- Check if the rotation axis turns the right way around (Issues & Solutions should have told you to use one of the Flip options to mirror the image, but if you have a mirror in your camera view path, then that advice is false).

- Check the pulley on the rotation axis. When I assembled my Liteplacer I forgot to fasten the pulley screw there and "I almost bit the table edge" (to translate a Swiss-German idiom verbatim) trying to find that bug. :-}
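A sketch of the direction check in the second bullet, under the assumption that a mirrored view reverses the apparent sense of rotation (a reflection always does). Whether your bottom camera image is mirrored relative to the top-down machine view depends on the camera mounting, any mirror in the path, and the Flip settings; the `view_is_mirrored` flag and both function names are hypothetical.

```python
import math

def wrap_deg(angle):
    """Wrap an angle into the range (-180, 180]."""
    a = math.fmod(angle, 360.0)
    if a <= -180.0:
        a += 360.0
    elif a > 180.0:
        a -= 360.0
    return a

def rotation_direction_ok(commanded_delta_deg, observed_delta_deg, view_is_mirrored):
    """True if the rotation seen in the camera matches the commanded direction.

    A mirrored view reverses the apparent sense of rotation, so the observed
    sign is flipped before comparing. commanded_delta_deg must be non-zero.
    """
    observed = wrap_deg(observed_delta_deg)
    if view_is_mirrored:
        observed = -observed
    return (commanded_delta_deg > 0) == (observed > 0)
```

Jog by a small positive angle, read the apparent change off the camera image, and compare; a `False` result with the correct mirroring flag suggests the axis direction (or the Flip setting) is wrong.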

_Mark


fxframes

ma...@makr.zone

The Strip-Feeder calculates the feeder rotation by looking at the sprocket holes. The Rotation in Tape is on top of that. Looking at your photos, your parts may well be 180° turned.
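A simplified model of why the hole positions matter so much, assuming the tape direction is taken from the line between a reference hole and a last hole (an illustration only, not the ReferenceStripFeeder source). Swapping the holes flips the result by 180°, and a small lateral mismatch between configured and real hole positions tilts it. The function name is hypothetical.

```python
import math

def tape_angle_deg(ref_hole, last_hole):
    """Direction of the tape in degrees, from the reference hole to the last
    hole. Holes are (x, y) machine coordinates in mm."""
    dx = last_hole[0] - ref_hole[0]
    dy = last_hole[1] - ref_hole[1]
    return math.degrees(math.atan2(dy, dx))
```

Over a 100 mm strip, a 2 mm mismatch at one end already tilts the computed direction by about 1.15°; with the holes entered in the opposite order, the result is off by 180°.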

Now that I look at your feeder image: there is only one hole highlighted in red!

It is possible that all this comes from faulty Strip Feeder sprocket hole recognition! Yes, that could finally explain why both the part and the nozzle are rotated so oddly.

Are you sure the feeder sprocket hole recognition pipeline works well? After all, these seem to be transparent or black plastic tapes, which are very difficult!!

I never managed to create a reliable pipeline with these on my PushPullFeeder:

I only recently developed a new vision stage that can do it reliably, but that is not yet ready for multi-hole recognition.

https://github.com/openpnp/openpnp/pull/1179#issuecomment-823295084

_Mark


fxframes

» Now that I look at your feeder image: there is only one hole highlighted in red!

dennis bonotto

> On Jun 3, 2021, at 21:01, fxframes <mp5...@gmail.com> wrote:

>

> This is the pipeline I’m using, even if it’s wrong in finding just one hole, hopefully it contains some ideas to help with clear tapes.

>

> <Screen Recording 2021-06-03 at 20.55.51.mp4>

ma...@makr.zone

I'm no expert on the strip feeder; I always thought it needs multiple holes. But maybe that's only for Auto Setup.

The last thing that comes to mind is that the strip feeder will do very crazy things when it is not set up exactly right, for instance when the reference/last holes do not match reality. The strip feeder only tries to correct the tape "course"; it cannot correct its initial position.

See this animation:

https://makr.zone/strip-feeder-crazy.gif

So if you perhaps shifted your home coordinate, and all your feeder holes are off by a certain distance, then the strange pick angle could happen.

But then again, alignment should fix this. Gotta go to bed...

_Mark

fxframes

ma...@makr.zone

Thanks for reporting this back. This was one heck of an odyssey! ;-)

> Mark, just regarding the strip feeder pipeline, if you ever figure out whether it needs to find multiple holes even in manual setup mode, would you please let me know?

Yeah, I had a look. It does not need more than one hole in update mode; multiple holes are only needed in Auto Setup.

_Mark


ma...@makr.zone

Oh, and don't forget to re-enable pre-rotate. Like I said, it gives better results.


fxframes

Clemens Koller

On 02/06/2021 21.41, fxframes wrote:

> Well I'm not sure now because it seems that on the LitePlacer the 2.25 gear reduction on the rotation axis dictates that the correct number here is 160 (360º/2.25) which is what it is right now.

> Jason posted this here some time ago. For the record, I did play a little bit with it but not much changed. I'm not sure if this can or even should be set somewhere else.

The scaling differs significantly between these modes. I am currently kind of happy with the radius mode $aam=3.

With some effort studying the *urgh* documentation, I came to the conclusion that it makes sense to set $ara to 57.2957795deg, which corresponds to 1 rad, i.e. 360deg / (2*PI). Then the Travel per Revolution $4tr is 160 because of the 2.25 gear ratio from the stepper to the nozzle axis (360deg / 2.25 = 160deg).
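The two numbers Clemens derives, plus a correction for the jog test Mike suggested, as a small sketch. It assumes $4tr is the nozzle rotation in degrees per stepper revolution and that any over- or under-rotation is a pure scaling error; the function names are hypothetical, and measuring over several full turns gives a better estimate than a single 90° move.

```python
import math

def travel_per_rev_deg(gear_ratio):
    """Nozzle degrees per stepper revolution (the value for $4tr)."""
    return 360.0 / gear_ratio

def degrees_per_radian():
    """360 / (2*pi), the value Clemens uses for $ara."""
    return 360.0 / (2 * math.pi)

def corrected_travel_per_rev(current_tr, commanded_deg, measured_deg):
    """Rescale $4tr from a jog test, assuming a pure scaling error.

    If the nozzle turns further than commanded, each stepper revolution really
    moves it more than current_tr degrees, so $4tr must grow by the same ratio.
    """
    if commanded_deg == 0:
        raise ValueError("commanded rotation must be non-zero")
    return current_tr * measured_deg / commanded_deg
```

If a commanded 90° turn measures 92°, the sketch suggests raising $4tr from 160 to about 163.56; if it measures exactly 90°, it stays at 160 and the problem lies elsewhere.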

Here are my A-Axis parameters for TinyG in "radius mode":

$4ma=3

$4sa=0.9

$4tr=160

$4mi=8

$4po=0

$4pm=2

$aam=3

$avm=100000

$afr=100000

$atn=-1"

$atm=-1"

$ajm=57600

$ajh=57600

$ajd=0.05

$ara=57.2957795

$asn=0

$asx=0

$asv=21600

$alv=21600

$alb=5

$azb=2

Maybe somebody can post the parameters for linear mode - is anybody using it?

As mentioned some time ago, I strongly recommend avoiding setting $ parameters from within OpenPnP: TinyG cannot apply them internally as fast as it accepts them, and OpenPnP has no way of knowing that it is pushing TinyG beyond its limits!!!

Greets,

Clemens

On 02/06/2021 21.41, fxframes wrote:

> Well I'm not sure now because it seems that on the LitePlacer the 2.25 gear reduction on the rotation axis dictates that the correct number here is 160 (360º/2.25) which is what it is right now.

> Jason posted this here some time ago. For the record, I did play a little bit with it but not much changed. I'm not sure if this can or even should be set somewhere else.

>

> I agree that OpenPnP seems to be turning more/less than it should, what's baffling me is that it seems to add/subtract a number of degrees to all placements, regardless of nozzle tip or vision settings.

> In the images in my first post there are 4 different parts which were placed using 3 different nozzles and of course used their own individual vision pipelines.

> On Wednesday, June 2, 2021 at 8:06:20 PM UTC+1 mike....@gmail.com wrote:

>

> Correct - what is your current value?

> Mike

>

>> On 2 Jun 2021, at 19.39, fxframes <mp5...@gmail.com> wrote:

>>

>> Thanks for the suggestion.

>> From the images below it does seem to turn a bit more than 90º.

>> Would it be the case of adjusting steps/mm?

>>

>> Start.

>>

>> <Screenshot 2021-06-02 at 18.34.54.png>

>>

>>

>> End

>>

>> <Screenshot 2021-06-02 at 18.35.29.png>

>>

>> On Wednesday, June 2, 2021 at 6:07:31 PM UTC+1 mike....@gmail.com wrote:

>>

>> Try this: JOG the nozzle rotation 9 times by 10 deg on top of the up-looking camera. Does your part turn 90 deg on the nozzle?

>>

>> On Wednesday, 2 June 2021 at 19:04:35 UTC+2 mp5...@gmail.com wrote:

>>

>> My bad, after taking the bottom camera down I understood that the "skew" on the image is a consequence of me trying to manually adjust the Bottom camera rotation...

>> So I'm back to square one.

>>

>> On Wednesday, June 2, 2021 at 4:51:46 PM UTC+1 fxframes wrote:

>>

>> Correct, I redid the bottom camera calibration and rotation for all nozzle tips and the problem persists.

>> However, even though all nozzle tips calibrated successfully and the result shown for the bottom camera is around 1.7º,

>>

>>

>> when aligning the nozzle between placements I noticed how the bottom camera "square" is skewed by a similar angle (close to 5º) to the placement error as you can see below.

>> Shouldn't the nozzle tip calibration have caught that?

>>

>>

>>

>> On Wednesday, June 2, 2021 at 3:46:13 PM UTC+1 johanne...@formann.de wrote:

>>

>> Just to be sure: you tried to rotate the bottom vision camera (SW or HW), and that didn't change the behavior?

>> Does the problem persist, if you disable bottom vision?

>>

>> mp5...@gmail.com schrieb am Mittwoch, 2. Juni 2021 um 16:14:45 UTC+2:

>>

>> Thanks, I did it again just in case, but that doesn't seem to be what's causing this. Just ran those parts again:

>>

>>

>> On Wednesday, June 2, 2021 at 2:33:39 PM UTC+1 johanne...@formann.de wrote:

>>

>> Is your bottom vision camera rotated?

>> Would try to adjust (either in hardware or in the openpnp settings) that.

>>

>> mp5...@gmail.com schrieb am Mittwoch, 2. Juni 2021 um 15:24:54 UTC+2:

>>

>> Just adding something, my PCB is detected through fiducials at a slight angle, but again much smaller than the angle at which the parts are being placed.

>>

>> On Wednesday, June 2, 2021 at 2:22:52 PM UTC+1 fxframes wrote:

>>

>> Hello Everyone,

>>

>> I'm having trouble with a rotation offset that seems to be applied to all the parts on this one job. I have tried turning "Rotate parts prior to vision?" on and off, to no avail.

>>

>> I've also checked that bottom vision is recognising the parts well, and these are three very different packages as you can see in the images below.

>>

>> This did not seem to affect a previous, different job.

>>

>> I did a visual check on steps/mm ($4tr=160, TinyG) and it seems to be about right (made a pen mark on the rotation axis) and anyway the offset is much larger.

>>

>> Any tips?

>>

>> Thanks!

>>

>>

>> IMG_1901.jpg


Clemens Koller

I forgot to mention that I've set up my A-axis differently from the default LitePlacer+TinyG. The A-axis stepper is mounted facing upwards, not downwards, to get more Z travel range. In any case, take care that the direction of rotation is correct. When I press the right "C-Axis" button, my A-axis turns negatively (regular view onto the PCB), i.e. clockwise, the same direction as shown on the button image.

You can change the rotation direction of the stepper simply by inverting the current through one winding (swapping its leads) or, of course, in the TinyG configuration.

Just in case you want to check the precision/repeatability of a rotational axis, you can glue a little mirror on the axis and use a laser pointer to magnify the positional error by the distance to a wall. It still sticks on my nozzle axis.
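The laser-and-mirror trick magnifies small angle errors because the reflected beam turns by twice the mirror angle. A sketch, assuming the beam initially hits the wall at a right angle; the function names are hypothetical.

```python
import math

def spot_shift_mm(wall_distance_mm, axis_error_deg):
    """Spot displacement on the wall when the mirror (axis) turns by
    axis_error_deg; the reflected beam turns by twice that angle."""
    return wall_distance_mm * math.tan(math.radians(2 * axis_error_deg))

def axis_error_deg(wall_distance_mm, spot_shift):
    """Inverse: axis angle error from a measured spot shift (same units)."""
    return math.degrees(math.atan2(spot_shift, wall_distance_mm)) / 2
```

With the wall 2 m away, a 0.1° error at the nozzle moves the spot by about 7 mm, which is easy to see by eye.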

Clemens

Clemens Koller

On 03/06/2021 22.00, fxframes wrote:

> This is the pipeline I’m using, even if it’s wrong in finding just one hole, hopefully it contains some ideas to help with clear tapes.

The DetectCirclesHough is supposed to detect all circles in the image (unless masked).

My thoughts are:

Do not use the MaskCircle d=200, as you cannot really see how robust the circle detection is. I recommend using a MaskRectangle if necessary.

Do not use BlurGaussian at all (I tend to say: generally in OpenPnP); use BlurMedian 5 because of its edge and round corner preserving behaviour.

(In OpenPnP, the only reason I use BlurGaussian followed by a Threshold operation is to do some lazy erosion/dilation.)

Do not use DetectEdgesCanny to prepare an image for DetectCirclesHough, as DetectCirclesHough (unfortunately?) already has a Canny edge detector built in. If you use DetectEdgesCanny before DetectCirclesHough, the Hough operator ends up working on edges of edges (= two edges), which leads to positional jitter.
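The "edges of edges" effect can be seen even in one dimension. This sketch uses an absolute forward difference as a stand-in edge detector (it is not OpenCV's Canny or OpenPnP's DetectEdgesCanny stage): applied once to a step, it gives one response; applied again to its own output, it gives two responses straddling the original edge, i.e. the doubled outline a Hough circle detector would then see. The function names are hypothetical.

```python
def gradient_magnitude(signal):
    """Absolute forward difference, a 1-D stand-in for an edge detector."""
    return [abs(b - a) for a, b in zip(signal, signal[1:])]

def edge_positions(response, threshold):
    """Indices where the edge response exceeds the threshold."""
    return [i for i, v in enumerate(response) if v > threshold]

step = [0, 0, 0, 0, 10, 10, 10, 10]
once = gradient_magnitude(step)    # one edge, between samples 3 and 4
twice = gradient_magnitude(once)   # "edges of the edge": two responses
```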

I strongly suggest replacing OpenPnP's default image pipelines with that in mind.

Attached is my image pipeline for regular 1608M resistors in a white tape on a black background.

Since all my tapes are aligned horizontally quite close to each other, I am using MaskRectangle accordingly. But this is optional.

Greets,

Clemens

fxframes

My A axis is set to linear; here are the parameters.

[4ma] m4 map to axis 3 [0=X,1=Y,2=Z...]

[4sa] m4 step angle 0.900 deg

[4tr] m4 travel per revolution 160.0000 mm

[4mi] m4 microsteps 8 [1,2,4,8]

[4po] m4 polarity 0 [0=normal,1=reverse]

[4pm] m4 power management 2 [0=disabled,1=always on,2=in cycle,3=when moving]

[aam] a axis mode 1 [standard]

[avm] a velocity maximum 50000 deg/min

[afr] a feedrate maximum 200000 deg/min

[atn] a travel minimum 0.000 deg

[atm] a travel maximum 600.000 deg

[ajd] a junction deviation 0.1000 deg (larger is faster)

[ara] a radius value 5.3052 deg

[asn] a switch min 0 [0=off,1=homing,2=limit,3=limit+homing]

[asx] a switch max 0 [0=off,1=homing,2=limit,3=limit+homing]

[asv] a search velocity 2000 deg/min

[alv] a latch velocity 2000 deg/min

[alb] a latch backoff 5.000 deg

[azb] a zero backoff 2.000 deg

>

> Hi!

fxframes


On 5 Jun 2021, at 20:51, Clemens Koller <cleme...@gmx.net> wrote:

Hi!

This doesn't look okay or robust in my opinion.


<imgpipeline-R1608M-Hough.xml>

ma...@makr.zone

> Do not use BlurGaussian at all (I tend to say: generally in OpenPnP) - use BlurMedian 5 because of it's edge and round corner preserving behaviour.

If you say it this generally, I disagree ;-)

BlurMedian should only be used with essentially binary (black and white/very high contrast) images, to erode away insignificant specks, typically after a thresholding/channel masking/edge detection operation has taken place.

On color/gray-scale images with soft gradients, BlurMedian loses location information, i.e. it unpredictably "shifts around" the image by as much as its kernel size. An unevenly lighted gradient (e.g. a rounded edge on a paper sprocket hole, a ball on a BGA, or a bevel on a pin lighted slightly from the side) may appear to shift to one side. As you cannot choose the percentile (it always takes the fiftieth percentile, a.k.a. the median), it effectively creates an artificial edge at an unpredictable gradient level.

https://makr.zone/blur-median.gif

Source:

https://www.tutorialspoint.com/opencv/opencv_median_blur.htm

See how the rucksack is "growing" into the back of the boy, how the top is "lifted", how the boy's face seems to be "pushed in", and how the balloon seen closest to him seemingly shifts position down/left.

Of course the effect is exaggerated here, but you get the idea.

- Use BlurGaussian before a thresholding/channel masking/edge detection operation.

- Use BlurMedian after a thresholding/channel masking/edge detection operation.
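A 1-D sketch of the ordering Mark proposes (blur, then threshold, then median to clean up specks), with 3-tap kernels chosen only for illustration; Clemens disputes this rule below. A 1-D example cannot show the location shift Mark describes, since a median filter leaves a monotonic 1-D ramp unchanged; that effect needs 2-D structure. It only shows the median removing an isolated speck after thresholding without moving the real edge. All names are hypothetical.

```python
def _clamped(signal, i):
    """Sample access with edge replication at both ends."""
    return signal[min(max(i, 0), len(signal) - 1)]

def gaussian_blur3(signal):
    """3-tap binomial (approximately Gaussian) blur, weights 1-2-1."""
    return [(_clamped(signal, i - 1) + 2 * signal[i] + _clamped(signal, i + 1)) / 4
            for i in range(len(signal))]

def threshold(signal, level):
    """Binarize: 1 where the value reaches the level, else 0."""
    return [1 if v >= level else 0 for v in signal]

def median_blur3(signal):
    """3-tap median; removes isolated one-sample specks."""
    return [sorted((_clamped(signal, i - 1), signal[i], _clamped(signal, i + 1)))[1]
            for i in range(len(signal))]

# Dark region with a bright spike at index 3, real edge at index 6.
raw = [0.0, 0.1, 0.0, 0.8, 0.0, 0.1, 0.9, 1.0, 0.9, 1.0]
cleaned = median_blur3(threshold(gaussian_blur3(raw), 0.5))
```

Thresholding `raw` directly keeps the 0.8 spike as a one-sample speck, and `median_blur3` removes it while the edge at index 6 stays put; in this example the Gaussian blur alone already suppresses the spike before thresholding.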

_Mark

Clemens Koller

On 07/06/2021 17.34, ma...@makr.zone wrote:

> Am 05.06.2021 um 21:51 schrieb Clemens Koller:

>> Do not use BlurGaussian at all (I tend to say: generally in OpenPnP) - use BlurMedian 5 because of it's edge and round corner preserving behaviour.

>

>

> BlurMedian should only be used with essentially binary (black and white/very high contrast) images, to erode away insignificant specks typically after a thresholding/channel masking/edge detection operation has taken place.

I disagree, too, if you say "only". ;-)

There is a quite good explanation here: https://en.wikipedia.org/wiki/Median_filter#Edge_preservation_properties

An important part is also: "For small to moderate levels of Gaussian noise..." which I assume is the usual case in OpenPnP's camera images.

I agree with you that a BlurMedian (as well as a BlurGauss+Threshold) on a binary image can be (mis-)used as an erosion/dilation operation.

However, I would not advise doing that. If you get a lot of undesired specks (i.e. after applying a threshold), your preprocessing is likely not very robust.

> On color/gray-scale images with soft gradients, BlurMedian loses location information, i.e. it unpredictably "shifts around" the image by as much as its kernel size.

And a BlurGaussian + Threshold operation also displaces edges by an uneven amount, depending on the blur radius and the threshold value.

> An unevenly lighted gradient - e.g. a rounded edge on a paper sprocket hole, a ball on a BGA or a bevel on a pin lighted slightly from the side - may appear to shift to one side. As you cannot determine the percentile (it always takes the fiftieth percentile, a.k.a. the median) it effectively creates an artificial edge at an unpredictable gradient level.

This is true. I was, however, not talking about edge displacement/shifting (losing spatial information), which can be a problem in some cases. I was talking about edge preserving behaviour (maintaining contrast information, which is what Canny chews on). Edge displacement is very often not an issue in OpenPnP's use cases when the filter operation is sufficiently isotropic.

> If there is a rule:

>

> 2. Use BlurMedian after a thresholding/channel masking/edge detection operation.

I don't think these rules are helpful or valid in a general sense. Of course it all depends on the final goal of the image operations.

I would vote for implementing one of the advanced bilateral filters to improve robustness in this case.
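For reference, a minimal 1-D sketch of the classic bilateral filter: each neighbour is weighted both by its distance (sigma_space) and by how close its value is to the center value (sigma_range), so noise is averaged while samples across a strong edge contribute almost nothing. OpenCV provides a 2-D version as `bilateralFilter`; whether or how an OpenPnP pipeline stage would expose it is not covered here, and the parameter defaults below are arbitrary.

```python
import math

def bilateral_1d(signal, radius=2, sigma_space=1.0, sigma_range=1.0):
    """Edge-preserving smoothing of a 1-D signal (bilateral filter)."""
    n = len(signal)
    out = []
    for i, center in enumerate(signal):
        total = 0.0
        weight_sum = 0.0
        # Only neighbours inside the signal are used (no padding).
        for j in range(max(0, i - radius), min(n, i + radius + 1)):
            spatial = math.exp(-((j - i) ** 2) / (2 * sigma_space ** 2))
            similarity = math.exp(-((signal[j] - center) ** 2) / (2 * sigma_range ** 2))
            w = spatial * similarity
            total += w * signal[j]
            weight_sum += w
        out.append(total / weight_sum)
    return out
```

On a clean 0-to-10 step the output is practically identical to the input, while small fluctuations around 1.0 are smoothed toward their local mean.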

It's a pity that I don't have more time to dig deeper into the code, here. :-(

Clemens

ma...@makr.zone

> I was however not talking about edge displacement / shifting (losing spatial information), which can be a problem in some cases. I was talking about edge preserving behaviour (maintaining contrast information...

OK, I understand. For filters in photography, you're absolutely right. Sorry, I was talking about performing machine vision not about erm... beautifying photos ;-) I guess you agree, for machine vision, it is 100% about spatial information.

> ... which is what Canny chews on

Canny specifically should be preceded by a Gaussian filter:

https://en.wikipedia.org/wiki/Canny_edge_detector#Gaussian_filter

https://docs.opencv.org/master/da/d5c/tutorial_canny_detector.html

If you want to improve on it, you'd need a special replacement for the Gaussian filter "in order to reach high accuracy of detection of the real edge":

https://en.wikipedia.org/wiki/Canny_edge_detector#Replace_Gaussian_filter

Edges are always fickle, lots of tuning needed. The most robust solution is not to detect edges in the first place but instead work probabilistically. Like with template image matching.
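A sketch of the template-matching idea in 1-D, using zero-mean normalized cross-correlation (the score OpenCV's `TM_CCOEFF_NORMED` mode computes). Strictly speaking this is a correlation score rather than a probabilistic model, but it illustrates matching a whole pattern instead of hunting for edges, and it is unaffected by brightness offset and gain. The function names are hypothetical.

```python
import math

def ncc(a, b):
    """Zero-mean normalized cross-correlation of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    da = [x - ma for x in a]
    db = [y - mb for y in b]
    num = sum(x * y for x, y in zip(da, db))
    den = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return num / den if den else 0.0

def match_template(signal, template):
    """Return (best_offset, score) of the template within the signal."""
    m = len(template)
    scores = [ncc(signal[i:i + m], template) for i in range(len(signal) - m + 1)]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores[best]
```

A brighter, higher-contrast copy of the same signal (every value doubled plus a constant) yields the same best offset, which is the 1-D analogue of not caring about changing ambient light.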

... or with my circular symmetry stage, see the "Example Images" section here:

https://github.com/openpnp/openpnp/pull/1179

I have since tested it on the machine. It nails everything: zero detection failures so far (other than the ones sitting behind the keyboard). Sprocket holes in tapes of all colors, including transparent ones, on all backgrounds, nozzle tips, (bad) fiducials. Even completely out of focus with barely any contrast. It doesn't care a bit about changing ambient light.

One original pipeline, zero setup: all it requires is the expected diameter. Once the camera units per pixel are known, it's a no-brainer. Everybody can use a caliper on a nozzle tip or read the datasheet; no pipeline editing skills required. OpenPnP provides the diameter dynamically to the pipeline from easy-to-set GUI settings or existing data (like footprints, if available).
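The "no-brainer" conversion from a measured diameter to pixels, as a sketch. The search window with its ±20 % tolerance is a hypothetical illustration of how a known diameter could bound a detector, not a description of how Mark's stage works; both function names are hypothetical.

```python
def expected_diameter_px(diameter_mm, units_per_pixel_mm):
    """Convert a physical diameter to pixels using the camera units per pixel."""
    if units_per_pixel_mm <= 0:
        raise ValueError("units per pixel must be positive")
    return diameter_mm / units_per_pixel_mm

def search_range_px(diameter_mm, units_per_pixel_mm, tolerance=0.2):
    """Hypothetical min/max diameter window around the expected value."""
    d = expected_diameter_px(diameter_mm, units_per_pixel_mm)
    return d * (1 - tolerance), d * (1 + tolerance)
```

A 1.5 mm hole seen at 0.025 mm per pixel is expected at 60 px, so such a window would span 48 to 72 px.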

In the meantime I gave it sub-pixel accuracy. Working on multi-target detection now...

Sorry about the blab, I'm just really happy how this turned out. 8-D

_Mark

P.S. It took me a while to come back to the idea...

https://groups.google.com/g/openpnp/c/0-S2DMXe3t0/m/0FCu8kTzBQAJ

Clemens Koller

On 07/06/2021 21.55, ma...@makr.zone wrote:

> > I was however not talking about edge displacement / shifting (losing spatial information), which can be a problem in some cases. I was talking about edge preserving behaviour (maintaining contrast information...

> OK, I understand. For filters in photography, you're absolutely right. Sorry, I was talking about performing machine vision not about erm... beautifying photos ;-) I guess you agree, for machine vision, it is 100% about spatial information.

> > ... which is what Canny chews on

> Canny specifically should be prepended by a Gaussian filter:

> https://en.wikipedia.org/wiki/Canny_edge_detector#Gaussian_filter

This (what is written there) is true for the general case. It would not be a good idea to suggest the Median Filter *generally*, as it will fail badly when you have to deal with salt and pepper noise, for example. But this is usually/very likely *not* the case in OpenPnP, where people are using normal CMOS (CCD) cameras, which have a relatively uniform Gaussian (thermal) noise distribution (AWGN+x). In this case, the Median Filter will simply perform better, as I've tried to explain already. That's why I included the Wikipedia link to the Median Filter last time. Maybe you didn't notice:

"For small to moderate levels of Gaussian noise, the median filter is demonstrably better than Gaussian blur at removing noise whilst preserving edges for a given, fixed window size."

I worked for several years at a company where we had to deal with edge detection of all kinds of solar cells and ceramic patches with sub-pixel precision, up to the point where we started to use telecentric optics and shorter wavelengths to achieve higher precision. I am not talking about general approaches. Machine Vision only. 8-)

> If you want to improve on it, you'd need a special replacement for the Gaussian filter "in order to reach high accuracy of detection of the real edge":

>

> Edges are always fickle, lots of tuning needed. The most robust solution is not to detect edges in the first place but instead work probabilistically. Like with template image matching.

Well... it all depends on the final goal and on how wisely the intermediate processing steps are tailored to achieve it. Adaptive filtering is only as good as the model of your imaging channel. It is likely not a big deal to do better than the "blind" approach (which usually does not take the image content into account to adjust the filters), which is more or less what the current default image pipelines in OpenPnP use.

> ... or with my circular symmetry stage, see the "Example Images" section here:

> I since tested it on the machine. It nails everything, zero detection fails so far (that were not sitting behind the keyboard). Sprocket holes in tapes of all colors/transparent on all backgrounds, nozzle tips, (bad) fiducials. Even completely out of focus with barely any contrast. Doesn't care a bit about changing ambient light.

>

> One original pipeline, zero setup: All it requires is the expected diameter. Once the camera units per pixel are known, it's a no-brainer. Everybody can use a caliper on a nozzle tip or read the datasheet, no pipeline editing skills required. OpenPnP provides the diameter dynamically to the pipeline from easy to set GUI settings or existing data (like footprints if available).

>

> In the meantime I gave it sub-pixel accuracy. Working on multi-target-detection now...

>

> Sorry about the blab, I'm just really happy how this turned out. 8-D

Otherwise, as I wrote some weeks ago, it would be fun to bring my code based on https://users.fmrib.ox.ac.uk/~steve/susan/ back to life. But it is highly optimized C++ code with lookup tables and pointer arithmetic, because we had to go for max. throughput... probably not so easy to port to the Java world. The math behind SUSAN is IMO very interesting and can be tailored (adaptively) to all kinds of feature extraction stages (adaptive denoising, edge detection, corner detection, even anisotropic ones). I think I've read that the patent has expired in the meantime... There are several implementations out in the wild. I am not sure whether something has already ended up in OpenCV or not. YMMV.
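Clemens' optimized C++ code is not part of the thread. To illustrate the SUSAN principle he refers to, here is a minimal, unoptimized pure-Python sketch of the published SUSAN edge response (Smith & Brady): for each pixel (the "nucleus"), smoothly count how many pixels inside a small circular mask have a similar brightness (the USAN area), and report an edge where that area drops below a geometric threshold. The function name `susan_edges`, the brightness threshold `t=27` and the mask radius 3 are illustrative assumptions; border pixels are simply left at 0.

```python
import math

def susan_edges(img, t=27.0, radius=3):
    """Minimal SUSAN-style edge response (after Smith & Brady), unoptimized.

    img is a list of rows of grey values; returns a same-sized list of
    responses (0.0 = no edge). Border pixels are left at 0.0.
    """
    h, w = len(img), len(img[0])
    # circular mask of pixel offsets around the nucleus (29 pixels for radius 3)
    mask = [(dx, dy)
            for dy in range(-radius, radius + 1)
            for dx in range(-radius, radius + 1)
            if dx * dx + dy * dy <= radius * radius]
    n_max = len(mask)
    g = 0.75 * n_max  # geometric threshold for edges (corners would use n_max / 2)
    out = [[0.0] * w for _ in range(h)]
    for y in range(radius, h - radius):
        for x in range(radius, w - radius):
            i0 = img[y][x]
            # USAN area: smooth count of mask pixels with brightness similar to the nucleus
            n = sum(math.exp(-((img[y + dy][x + dx] - i0) / t) ** 6)
                    for dx, dy in mask)
            out[y][x] = g - n if n < g else 0.0
    return out

# usage: a vertical step edge between x = 9 and x = 10
step = [[0 if x < 10 else 100 for x in range(20)] for _ in range(11)]
resp = susan_edges(step)
print(resp[5][5], resp[5][9])  # 0.0 in the flat area, 3.75 right at the step
```

The exponential is evaluated per pixel here for clarity; a production version would precompute it in a lookup table, the kind of optimization Clemens mentions.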

Clemens

ma...@makr.zone

Hi Clemens,

To explain why I bother: I was originally responding to this:

> Do not use BlurGaussian at all (I tend to say: generally in OpenPnP) - use BlurMedian 5 because of its edge and round corner preserving behaviour.

All I was saying is that this is not true in its general and absolute form. I would still argue it is more often wrong than true.

And I started to care because this has the potential to mislead users.

> "For small to moderate levels of Gaussian noise, the median filter is demonstrably better than Gaussian blur at removing noise whilst preserving edges for a given, fixed window size."

Well, I still believe this sentence applies to photography. Immediately before the sentence you cited, it says:

"Edges are of critical importance to the visual appearance of images, for example."

https://en.wikipedia.org/wiki/Median_filter#Edge_preservation_properties

It does preserve an edge, yes, but not necessarily at the right place, as I demonstrated with the boy+balloons image:

https://makr.zone/blur-median.gif

Like I said, the median blur is fine if the image at hand is already very high contrast, ideally already binary. If there are no relevant smooth gradients or artifacts involved in or around the edge, then OK.

If in doubt, use Gaussian. Gaussian better preserves spatial information, at least above the channel (integral) resolution and noise level. Hence it is benign for noise and other artifact reduction. Most common cameras use MJPEG or other compression methods that produce artifacts. These look nice to our brains but are bad for machine vision. Compression often involves an underlying 8x8-pixel block size. Gaussian will typically restore a weaker, but more likely correct, edge signal out of that (probabilistically speaking).
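The spatial-information argument can be checked on a toy 1D signal. This is a minimal pure-Python sketch, not OpenPnP's BlurMedian/BlurGaussian stages; the function names, sigma=1 and the test signals are assumptions for illustration. A 5-tap median filter completely removes a 2-pixel-wide bright line (features narrower than half the window are lost) while leaving a clean binary step untouched, whereas a normalized, symmetric Gaussian kernel only spreads the line out and keeps its centroid where it was.

```python
import math
from statistics import median

def median_filter_1d(signal, window=5):
    """Sliding median with border replication; window must be odd."""
    half = window // 2
    n = len(signal)
    out = []
    for i in range(n):
        # clamp indices so border samples are repeated
        neigh = [signal[min(max(j, 0), n - 1)] for j in range(i - half, i + half + 1)]
        out.append(median(neigh))
    return out

def gaussian_blur_1d(signal, sigma=1.0):
    """Convolution with a normalized, symmetric Gaussian kernel (zero padding)."""
    radius = int(math.ceil(3 * sigma))
    kernel = [math.exp(-(k * k) / (2 * sigma * sigma)) for k in range(-radius, radius + 1)]
    total = sum(kernel)
    kernel = [v / total for v in kernel]
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for k, wgt in enumerate(kernel):
            j = i + k - radius
            if 0 <= j < n:
                acc += wgt * signal[j]
        out.append(acc)
    return out

def centroid(signal):
    """Intensity-weighted mean position."""
    total = sum(signal)
    return sum(i * v for i, v in enumerate(signal)) / total

line = [0.0] * 20
line[9] = line[10] = 1.0            # 2-pixel-wide bright line
step = [0.0] * 10 + [1.0] * 10      # clean binary step edge

print(max(median_filter_1d(line, 5)))               # 0.0: the line is gone
print(round(centroid(gaussian_blur_1d(line)), 6))   # 9.5: centre unchanged
print(median_filter_1d(step, 5) == step)            # True: a clean step survives
```

In 2D the same mechanism clips corners and thin structures, which is where a median-filtered edge can end up in the wrong place.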

> I am looking forward to testing this and reading the code when I set up the next PCB on the machine.

You can already do that, if you want. It's already in newer OpenPnP 2.0 versions (not yet with sub-pixel accuracy). The pipelines are posted in the PR. Just paste them and try. The first version of the code is also linked:

https://github.com/openpnp/openpnp/pull/1179

_Mark

fxframes

fxframes

ma...@makr.zone

Hi fxframes

This is not yet supported in the current stage; it can only detect one hole. But I'm in the process of testing this right now. ;-)

Coming soon!

_Mark


fxframes

ma...@makr.zone

Ah yes, if you're only using it for the feed vision and not for Auto Setup, then it should work.

But the PR is already done:

https://github.com/openpnp/openpnp/pull/1217

Please update your OpenPnP 2.0 version.

You could help me by testing the pipelines as proposed in the PR. ;-)

They should work out of the box; the goal is no tuning with any tape color or material, any background color or material, transparent tapes etc. Also for Auto Setup.

But be mindful that you need quite accurate Units per Pixel set on the camera, and your feeders must be close to the camera focal plane in Z.

If it does not work as is with your feeders, please send the input images (insert an ImageWriteDebug stage right after ImageCapture). Appreciate it!

_Mark

fxframes

fxframes

fxframes

fxframes

On 16 Jun 2021, at 10:30, fxframes <mp5...@gmail.com> wrote:

Hello Mark, this doesn't seem quite right yet. Out of the box and running Auto Setup, it gives me:

<Screenshot 2021-06-16 at 10.20.31.png>

This is what the pipeline looks like. You can see the hole in the center isn't "found".

<Screenshot 2021-06-16 at 10.21.27.png>

<Screenshot 2021-06-16 at 10.21.50.png>

Also, when maxTargetCount=1 it finds the wrong hole.

<Screenshot 2021-06-16 at 10.24.19.png>

On Wednesday, June 16, 2021 at 9:50:41 AM UTC+1 fxframes wrote:

Thanks Mark, I will test this and report back.

Ah yes, if you're only using it for the feed vision and not for Auto Setup, then it should work.


--

You received this message because you are subscribed to a topic in the Google Groups "OpenPnP" group.

To unsubscribe from this topic, visit https://groups.google.com/d/topic/openpnp/HPZlD0ohRcE/unsubscribe.

To unsubscribe from this group and all its topics, send an email to openpnp+u...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/openpnp/68efad37-1568-4469-b839-e9db6ce48ad7n%40googlegroups.com.


ma...@makr.zone

Hi fxframes

thanks for testing!

The DetectCircularSymmetry stage has a search range (the maxDistance property) that limits the search to this maximum distance from the expected position, which is usually the camera center (but it can be overridden by the vision operation, such as in nozzle tip calibration and the associated camera calibration).

The search range controls both the scope and the computational cost of the stage. Remember: I had to develop it in Java, and Java is clearly not a good match for this low-level pixel crunching. Conclusion: there is no need for a mask.
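To make the role of maxDistance concrete, here is a hypothetical pure-Python sketch, not the actual DetectCircularSymmetry code (whose scoring may well differ). Every candidate center within `max_distance` pixels of the expected position is scored by how circularly symmetric its neighborhood looks, here assumed to be the overall pixel variance divided by the mean variance within concentric one-pixel rings. The names `symmetry_score`, `find_circle` and `make_disk_image` are illustrative. The number of candidates grows with the square of `max_distance`, which is why limiting the range also limits the computation.

```python
import math

def symmetry_score(img, cx, cy, radius):
    """Hypothetical score: total variance / mean within-ring variance around (cx, cy)."""
    rings, values = {}, []
    for y in range(cy - radius, cy + radius + 1):
        for x in range(cx - radius, cx + radius + 1):
            if not (0 <= y < len(img) and 0 <= x < len(img[0])):
                continue
            r = round(math.hypot(x - cx, y - cy))  # ring index
            if r > radius:
                continue
            rings.setdefault(r, []).append(img[y][x])
            values.append(img[y][x])

    def var(vs):
        m = sum(vs) / len(vs)
        return sum((v - m) ** 2 for v in vs) / len(vs)

    within = sum(var(vs) for vs in rings.values()) / len(rings)
    return var(values) / (within + 1e-9)  # uniform rings -> very high score

def find_circle(img, expected, max_distance, radius):
    """Search only candidate centres within max_distance (pixels) of expected."""
    ex, ey = expected
    best, best_score = None, -1.0
    d = int(max_distance)
    for cy in range(ey - d, ey + d + 1):
        for cx in range(ex - d, ex + d + 1):
            if math.hypot(cx - ex, cy - ey) > max_distance:
                continue  # outside the search range
            s = symmetry_score(img, cx, cy, radius)
            if s > best_score:
                best, best_score = (cx, cy), s
    return best

def make_disk_image(size, cx, cy, r):
    # bright background (1.0) with a dark disk (0.0), e.g. a sprocket hole
    return [[0.0 if round(math.hypot(x - cx, y - cy)) <= r else 1.0
             for x in range(size)] for y in range(size)]

img = make_disk_image(41, 22, 18, 5)
print(find_circle(img, expected=(20, 20), max_distance=5, radius=8))  # (22, 18)
```

With `max_distance=1` the same hole (about 2.8 px from the expected position) is out of range, and the sketch returns the best candidate inside the range instead, i.e. a wrong position.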

But both the expected position and the search range are only parametrized by OpenPnP inside the actual vision function of the specific (feeder) operation. They differ between Auto Setup (the range goes to the camera edge) and the feed operation (the range is only half a sprocket pitch).

In the Editor, the search range is the same as in Auto Setup, i.e. to the camera edge; therefore, the number and selection of holes detected might be misleading.

You can make the search range visible by enabling the diagnostics switch. The difference then becomes visible in the result images with the overlaid heat map, see the ImageWriteDebug images below.

The important question for me is this: Does it work when used in normal operation, i.e. with Auto Setup and with feed operations?

For a feed operation (positional calibration):

In Auto Setup / Editor (note: my camera view is too narrow at the moment, because I raised the table by 20mm but not yet the camera, so the Auto Setup range is a bit narrow):

_Mark


ma...@makr.zone

fxframes

ma...@makr.zone

Could you take this pipeline (if you haven't already):

https://github.com/openpnp/openpnp/wiki/DetectCircularSymmetry#referencestripfeeder

Then enable the first ImageWriteDebug stage and send me the images?

They are found here:

$HOME/.openpnp2/org.openpnp.vision.pipeline.stages.ImageWriteDebug/

Don't forget to disable it again; it creates a ton of images in Auto Setup.

_Mark


ma...@makr.zone

Got the images. Can you please post your down-looking camera's

Units per Pixel?

Thanks,

Mark


fxframes

ma...@makr.zone

Yes, very difficult.

These are the gotchas I see:

- Your light diffuser has a hole in the middle, where the camera looks through. The clear tape therefore has no reflection there. Having a co-axial light (a half-way mirror in front of the camera, bouncing the light) would help (I have the same problem on mine).

- The feeder holder has a strong layer pattern that is seen through the clear tape, because of point 1. The layer pattern disrupts circular symmetry outside the hole. You may have to reduce subSampling down from 8, so it is not fooled by interference effects. The stage might then be slower.

- The feeder holder has an outcropping that keeps the tape in. This outcropping (or its shadow) goes right to the sprocket hole edge. It therefore breaks the circular symmetry around the hole. Ideally, you would reduce the outcropping for the next feeders you print; it seems less would do.

- You can try to work around that by reducing the outerMargin to 0.1, so the ring margin will not be cut so much. However, this may not work (i.e. you'll have to experiment with other values) due to the next point.

- In your video it seems the Units per Pixel are not accurate for the tape surface. I guess it is higher in Z than the PCB surface, i.e. it appears larger. You can see how the machine moves much farther than where you clicked. And you can see how four ticks on the cross hairs do not align with the sprocket hole pitch (4mm).

- I tried reconstructing the Units per Pixel and got ~0.0206mm/pixel. I guess your calibrated value is significantly higher, if I got that right ;-).

- Once I apply the right Units per Pixel and reduce outerMargin to 0.1, I get a detection on the image that seems to be the one that fails in the video: strip_7555303524774678967.png
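Reconstructing Units per Pixel from an image works because the EIA 481 sprocket hole pitch is a known 4 mm. A minimal sketch, assuming you already have the pixel coordinates of several consecutive hole centers along the tape (the function name and the sample coordinates are hypothetical): divide the known physical span by the measured pixel span.

```python
import math

def units_per_pixel_from_holes(centers_px, pitch_mm=4.0):
    """Estimate mm/pixel from consecutive sprocket hole centres (first to last).

    Assumes the centres are consecutive holes (none skipped) on a straight tape.
    """
    if len(centers_px) < 2:
        raise ValueError("need at least two hole centres")
    (x0, y0), (x1, y1) = centers_px[0], centers_px[-1]
    span_px = math.hypot(x1 - x0, y1 - y0)
    return pitch_mm * (len(centers_px) - 1) / span_px

# hypothetical measurement: three holes about 194 px apart along X
holes = [(126.0, 240.0), (320.2, 240.0), (514.4, 240.0)]
print(round(units_per_pixel_from_holes(holes), 4))  # 0.0206
```

Using the first and last hole spreads the detection error over the longest span; a least-squares fit over all holes would be a more robust variant.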

Regarding the feeder surface Z:

@tonyluken has introduced "3D" Units per Pixel. Unfortunately, it is not yet applied to feeder Z. Once this is available, it will be possible to compensate:

https://github.com/openpnp/openpnp/pull/1112

However, until then you should have the feeder tape surfaces very close in Z to the PCB surface, i.e. where you calibrated your Units per Pixel. Everything must ideally be on the same Z plane.

Otherwise, you will likely always have some problems, because these feeders' vision works with well-known absolute geometry from the EIA 481 standard.
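Why the tape surface should sit near the calibration plane follows from a simple pinhole camera model: the size of one pixel on the object scales linearly with its distance from the camera's projection center. A minimal sketch with hypothetical numbers (the 60 mm camera distance and the 6 mm Z offset are not from the thread, and this is not how the "3D" Units per Pixel feature is implemented):

```python
def upp_at_offset(upp_ref, distance_ref_mm, dz_mm):
    """Pinhole model: mm/pixel on a surface dz_mm closer to the camera than the
    reference (calibration) plane, which is distance_ref_mm from the camera."""
    distance = distance_ref_mm - dz_mm
    if distance <= 0:
        raise ValueError("surface would be at or above the camera")
    return upp_ref * distance / distance_ref_mm

# hypothetical: calibrated 0.0230 mm/px at the PCB, camera 60 mm above it,
# tape surface 6 mm higher than the PCB
print(round(upp_at_offset(0.0230, 60.0, 6.0), 5))  # about 0.0207
```

A surface 10% closer to the camera therefore appears 10% larger, so the 4 mm sprocket pitch no longer matches what the vision expects.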

For the ReferenceStripFeeder, the issue is mostly with Auto Setup (I guess that's why you didn't use it even before trying this new stage). There is some tolerance in the code, and maybe you can get it working by playing with innerMargin/outerMargin.

For the routine feed vision, the camera will be centered on the sprocket hole, and Units per Pixel will not be so important (maybe for 0402 or 0201 parts, where the hole offset detected with the wrong Units per Pixel might start to matter, but I doubt it).
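The reason is that the error in a vision offset is the pixel offset multiplied by the Units per Pixel error, so it shrinks as the camera gets centered on the hole. A small sketch with hypothetical values (0.0230 mm/px configured vs. 0.0206 mm/px true; the offsets are illustrative):

```python
def offset_error_mm(offset_px, upp_configured, upp_true):
    """Position error caused by converting a pixel offset with the wrong mm/pixel."""
    return abs(offset_px) * abs(upp_configured - upp_true)

# small offsets (camera centred on the hole, feed) vs. large ones (Auto Setup)
for offset_px in (5, 20, 200):
    print(offset_px, round(offset_error_mm(offset_px, 0.0230, 0.0206), 3))
# roughly 0.012, 0.048 and 0.48 mm
```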

Conclusion: The reason it failed can be well explained (so far), and most of these issues will create similar problems with other stages.

I'm afraid the new stage cannot perform miracles ;-)

_Mark


fxframes


fxframes


ma...@makr.zone

Glad it works out.

> One final note, did you get one of those coax LEDs for yourself? There doesn’t seem to be a lot of them around. This one seems like it could work.

If you want even lighting in the full camera view plus high Z clearance, co-axial lighting becomes problematic, because the half-mirror needs to reach the edge of the reflected light cone (or pyramid?). The mirror glass will need to be large and reach far away from the lens front, which reduces Z clearance. You will likely need a longer focal length (which in itself is good, but requires buying a new lens) and a higher camera mounting point (which is difficult on an existing machine design).
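The focal length vs. mounting height trade-off can be estimated with the pinhole approximation (valid when the working distance is much larger than the focal length): the field of view width is roughly W = d * s / f for sensor width s, so keeping the same W with a longer focal length f requires a proportionally larger distance d. The 4.8 mm sensor width and the 40 mm field of view below are hypothetical values, not taken from the thread.

```python
def working_distance_mm(fov_mm, focal_mm, sensor_mm):
    """Pinhole approximation: distance needed to see fov_mm across with this lens/sensor."""
    return fov_mm * focal_mm / sensor_mm

SENSOR_MM = 4.8  # hypothetical sensor width
FOV_MM = 40.0    # hypothetical desired field of view
for f in (6.2, 8.0, 12.0):
    print(f, round(working_distance_mm(FOV_MM, f, SENSOR_MM), 1))
```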

Therefore, I was thinking about creating a hybrid design, with only the center part (where the camera needs to peek through the diffuser) being half-mirrored and the rest conventional. You could use one of those very thin microscope cover glasses that are available in optical quality. LEDs would point up from a ring towards the diffuser, and towards the mirror from a small "side-car" PCB angled at 90°.

But so far I have only designed a very basic "light cone" in OpenSCAD (6.2mm lens):

Another design is not co-axial but has a diffuser that leaves only a tiny gap (hope you get the two pictures):

_Mark
