Can Bottom Vision be configured for two different cameras?

ByronDong

mark maker

Hi Byron,

The idea has been discussed before, but unfortunately nobody has implemented it yet. 😎

https://groups.google.com/g/openpnp/c/O-J2KPbHCE4/m/gvNPefiEAQAJ

My old answer is still almost the same:

> Does OpenPNP support the use of Multiple Up Cameras?

No, the current implementation does not support the use of multiple cameras. But yes, OpenPnP does allow you to define multiple Up Cameras, and it has the architecture to support this, with relatively few things missing and (more importantly) nothing standing in the way. It always amazes me how great Jason's underlying architecture is!

Quasi-parallel vision, i.e. dedicating one camera to each nozzle but still performing the alignments one after the other, could probably be done in a few lines of code, the GUI to associate a nozzle with a camera being the hardest part ;-). The gain is reduced motion time to position each nozzle, plus some avoided settle times. This is probably only worth it if you do the vision at retracted nozzle height, so no Safe Z up/down motion is needed (EDIT: or with dedicated one-per-nozzle Z axes). Doing it at a focal plane other than the PCB plane introduces some parallax problems that we luckily have already solved (Marek has this on his machine, and his testing helped me develop a solution) :-)

https://makr.zone/improved-runout-compensation-and-bottom-camera-calibration/346/

However, true ganged-up bottom vision, i.e. doing it at the very same time, would be a much taller order, with much more to reprogram in OpenPnP. Iterative/multi-pass bottom vision would either only work for rotation (and only if you have non-shared C axes) or spoil most of the time gained. You would have to calibrate the camera (pixel) centers and/or provide a way to mechanically adjust the cameras to the nozzles in X/Y and/or plane. Again, this only makes sense if done at retracted nozzle height (EDIT: or with dedicated one-per-nozzle Z axes).

One important thing to keep in mind is the added CPU load of running so many quality USB cameras.

https://makr.zone/camera-fps-cpu-load-and-lighting-exposure/519/

_Mark

--

You received this message because you are subscribed to the Google Groups "OpenPnP" group.

To unsubscribe from this group and stop receiving emails from it, send an email to openpnp+u...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/openpnp/b2ece242-8f4a-4aa0-9b0d-3d627704e3c1n%40googlegroups.com.

ByronDong

mark maker

Hi Byron,

The recommended camera is an ELP 720p USB camera. It has proven to be the best choice again and again. You can connect two via a hub to the same USB port.

https://github.com/openpnp/openpnp/wiki/Build-FAQ#what-should-i-build

720p is enough resolution for both the top and bottom camera.

A good image quality (low compression) and full manual settings are more important than more resolution, and the ELP cameras have both.

The ELP 1080p camera can also be used, alternatively in 720p mode, where it has double the frame rate (60 fps). This is what I use. But because this doubles the bandwidth, you need a dedicated USB root hub/computer port for each camera, i.e. you cannot connect both cameras via the same hub!
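The bandwidth argument is simple arithmetic: the raw pixel rate is width × height × frames per second, so running the same 1280×720 image at 60 instead of 30 fps doubles it, and cameras sharing one hub add up. The sketch below is a hypothetical helper that assumes 2 bytes per pixel (YUYV 4:2:2) as an uncompressed reference; real MJPEG traffic is lower and camera-dependent, so the absolute numbers only illustrate the scaling.

```java
/**
 * Hypothetical helper for comparing raw (uncompressed) video data rates.
 * Assumes a fixed number of bytes per pixel (e.g. 2 for YUYV 4:2:2);
 * MJPEG streams are smaller, but scale the same way with fps and resolution.
 */
final class VideoBandwidth {
    private VideoBandwidth() {}

    /** Raw data rate in bits per second for one camera mode. */
    static long rawBitsPerSecond(int width, int height, int fps, int bytesPerPixel) {
        return (long) width * height * fps * bytesPerPixel * 8L;
    }

    /** Combined rate of several cameras sharing one hub / root port. */
    static long totalBitsPerSecond(long... perCameraRates) {
        long total = 0;
        for (long rate : perCameraRates) {
            total += rate;
        }
        return total;
    }

    public static void main(String[] args) {
        long at30 = rawBitsPerSecond(1280, 720, 30, 2);
        long at60 = rawBitsPerSecond(1280, 720, 60, 2);
        System.out.println("720p @ 30 fps: " + at30 / 1_000_000 + " Mbit/s raw"); // 442
        System.out.println("720p @ 60 fps: " + at60 / 1_000_000 + " Mbit/s raw"); // 884
        System.out.println("2 x 720p @ 60 fps on one hub: "
                + totalBitsPerSecond(at60, at60) / 1_000_000 + " Mbit/s raw");    // 1769
    }
}
```

For comparison, USB 2.0 High Speed signals at 480 Mbit/s, so in this raw reference even a single 720p stream at 30 fps nearly fills a port, which is why higher frame rates on such cameras rely on MJPEG compression.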

More resolution is counter-productive: you need too much processing power for computer vision, without any better results. Note that the few computer vision applications that really count (fiducials, sprocket holes etc.) achieve sub-pixel accuracy nowadays, so you get a positional accuracy many times finer than the nominal pixel resolution! (It became a bit of an obsession when I made the DetectCircularSymmetry stage, where I measured 4-8 times the pixel resolution on my machine.)
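Why a feature can be located more finely than one pixel can be illustrated with the simplest sub-pixel technique, an intensity-weighted centroid. This is a generic illustration only, not the algorithm used by the DetectCircularSymmetry stage; the class name and the threshold-as-background convention are assumptions.

```java
/**
 * Minimal sub-pixel localization by intensity-weighted centroid
 * (illustration only; not OpenPnP's DetectCircularSymmetry algorithm).
 */
final class SubPixelCentroid {
    private SubPixelCentroid() {}

    /**
     * Returns {x, y} in pixel units. The image is indexed [row][column], i.e. [y][x].
     * The threshold is subtracted as background; pixels at or below it are ignored.
     */
    static double[] centroid(int[][] image, int threshold) {
        double sum = 0, sumX = 0, sumY = 0;
        for (int y = 0; y < image.length; y++) {
            for (int x = 0; x < image[y].length; x++) {
                int weight = image[y][x] - threshold;
                if (weight > 0) {
                    sum += weight;
                    sumX += (double) weight * x;
                    sumY += (double) weight * y;
                }
            }
        }
        if (sum == 0) {
            throw new IllegalArgumentException("no pixels above threshold");
        }
        return new double[] {sumX / sum, sumY / sum};
    }
}
```

A blob covering pixels 1 and 2 equally yields x = 1.5, a position between two pixel centers; with many pixels contributing, noise averages out and the estimate becomes much finer than the pixel grid.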

Higher resolution needs more bandwidth, otherwise the image will just be compressed more, and compression artifacts worsen computer vision accuracy. Compression is optimized for human viewers (perceptual coding), so even if modern codecs can compress more, this might be detrimental to positional accuracy.

You also need more light for effectively higher resolution, unless the sensor is larger. But a larger sensor means a clunkier lens and less depth of field, which is bad, at least for the top camera.

There are also many cameras out there that fake high resolution and/or, because the lens optical quality, lens speed etc. do not match the resolution, have to "pretty up" the image with algorithms, which makes computer vision inaccurate. Not being able to switch off denoising, sharpening and other "pretty" algorithms is bad.

Also be mindful that low fps is bad, so never trade lower fps for more resolution. And low fps can be caused by insufficient light.

Jarosław Karwik

mark maker

Hi Jarosław,

Thanks for the offer, this is great!

These are some thoughts...

- All uses of the bottom camera that I am aware of are looking at a nozzle (with or without a part on it).

- So I suggest we add a drop-down on the nozzle to define which bottom camera should be used.

- We add a nozzle parameter to the already existing VisionUtils.getBottomVisionCamera() function.

- All callers (currently 17) must then pass the nozzle.

- We may need to check whether some code bypasses the VisionUtils.getBottomVisionCamera() function, and route it through that function.

- We probably need to introduce a "nozzle order" field that orders the nozzles in a way that allows aligned vision.

- To explain: in the case of a four-nozzle machine that has the nozzles in a rectangular configuration but "only" two cameras, the nozzles need to go through the bottom vision steps in aligned pairs. Note that the cameras could be aligned in X or in Y, for various reasons.

- Make the notion of "default nozzle" aware of the camera that addresses it. Say a user presses "move nozzle to camera" (e.g. through drag-jogging in the bottom camera view) without saying which nozzle (i.e. the selected tool is not a nozzle); it should respect the camera-to-nozzle assignment.

- See MachineControlsPanel.getSelectedNozzle().

- See Head.getDefaultNozzle(), which should probably get an overload that takes a camera as a parameter, and all callers need to be checked as to whether they are in the context of a particular camera.

- Double-check that Issues & Solutions calibrates all bottom cameras (should already be the case).

- Make Issues & Solutions use a "default nozzle" as described above (currently not the case).
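The core of the proposal (a per-nozzle bottom camera assignment, a nozzle-aware getBottomVisionCamera(), and a camera-aware getDefaultNozzle() overload) can be sketched with minimal stand-in classes. These are hypothetical and far simpler than OpenPnP's real Camera, Nozzle, Head and VisionUtils types; falling back to the first bottom camera for unassigned nozzles is an assumption about how today's single-camera behaviour might be kept.

```java
import java.util.List;
import java.util.Objects;

/** Hypothetical stand-in for a camera; OpenPnP's real Camera class is far richer. */
final class Camera {
    final String name;

    Camera(String name) {
        this.name = name;
    }
}

/** Hypothetical stand-in for a nozzle, carrying the proposed bottom camera drop-down value. */
final class Nozzle {
    final String name;
    /** Assigned bottom camera, or null if the drop-down was left empty. */
    Camera bottomCamera;

    Nozzle(String name, Camera bottomCamera) {
        this.name = name;
        this.bottomCamera = bottomCamera;
    }
}

/** Hypothetical stand-in for a head with an ordered list of nozzles. */
final class Head {
    final List<Nozzle> nozzles;

    Head(List<Nozzle> nozzles) {
        this.nozzles = nozzles;
    }

    /** Camera-agnostic default: simply the first nozzle. */
    Nozzle getDefaultNozzle() {
        return nozzles.get(0);
    }

    /** Proposed overload: prefer a nozzle assigned to the given camera, e.g. when
     *  drag-jogging in that camera's view while no nozzle is selected. */
    Nozzle getDefaultNozzle(Camera camera) {
        for (Nozzle nozzle : nozzles) {
            if (camera != null && nozzle.bottomCamera == camera) {
                return nozzle;
            }
        }
        return getDefaultNozzle();
    }
}

/** Sketch of a nozzle-aware variant of VisionUtils.getBottomVisionCamera(). */
final class BottomCameraSelection {
    private BottomCameraSelection() {}

    static Camera getBottomVisionCamera(Nozzle nozzle, List<Camera> bottomCameras) {
        Objects.requireNonNull(nozzle, "callers must now pass the nozzle");
        if (nozzle.bottomCamera != null) {
            return nozzle.bottomCamera;
        }
        // Unassigned nozzle: assume the single-camera behaviour (first bottom camera).
        if (bottomCameras.isEmpty()) {
            throw new IllegalStateException("no bottom camera configured");
        }
        return bottomCameras.get(0);
    }
}
```

Keeping the selection in one function is what makes the "all callers must pass the nozzle" step mechanical: every code path that needs a bottom camera gets the same answer for the same nozzle.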

_Mark

Jarosław Karwik

mark maker

Ah, I understand, you would forfeit "ganged-up" operation.

I guess this could be added on top: because we have the nozzle (point 3 in my list), we can also get the part that is currently on the nozzle. And this part can then override the standard affinity.

However, I would not add it inside the pipeline, but outside, in the vision settings, so users can change it without needing special pipeline editing skills.

Furthermore, this is also technically more straightforward, as the "camera" is passed into the pipeline as a property and is queried by various stages for dimensions, Units per Pixel etc. Such properties should not suddenly be changed by the pipeline.

https://github.com/search?q=org%3Aopenpnp+pipeline.setProperty%28+camera&type=code

https://github.com/search?q=org%3Aopenpnp+pipeline.getProperty%28+camera+%29&type=code

This way the pipeline also remains neutral, i.e. it can be exchanged between users/OpenPnP installations.

_Mark

Well, what you recommend is selecting the camera by nozzle. This may work; it is a relatively nice and simple concept.

It's just that I usually misuse my nozzles: to avoid nozzle changes, I use a "mid" size for both small components (like small transistors) and large components (like SO16 to SO24). That is why my original idea was to associate the camera with the component, not the nozzle. This, however, would be more complicated, as it introduces more settings, a nozzle/camera matrix etc. Hence the idea to add camera selection in the pipeline.

On Thursday, 23 June 2022 at 12:29:07 UTC+2, ma...@makr.zone wrote:

On 23.06.22 08:53, Jarosław Karwik wrote:

I once made such a change (on my private branch), but as I sold the machine it was made for, I never published it.

ByronDong

Jarosław Karwik

mark maker

Yes that sounds even better.

Jarosław Karwik

mark maker

> And using different cameras mean that these settings might be a little different.

I see two use cases (so far):

1. Use multiple equal cameras for some sort of "ganged-up" vision, i.e. avoid moves between nozzles (or at least make these moves very small and fast).

2. Use multiple different cameras with different properties, such as lens focal length, e.g. for small and large parts.

In (1) the vision settings should be equal and not contain a camera selection. Instead, the camera should be taken from the nozzle.

In (2) the camera must actually be selected by properties like the package size.

As you know, in the new vision settings system there is this "inheritance" in place, so if no "override" vision settings are assigned to a Part or Package, the next level is inherited.

Part <-- Package <-- Default.

It would be relatively easy to make the selection of "Default" subject to filter properties of the Part/Package.

Part <-- Package <-- Small Default <-- Large Default

Then the camera can be assigned to the Vision Settings. It can then still be overridden on certain Parts or Packages.

Even a mix of (1) and (2) is possible that way, using three cameras. Only the "Large Default" would assign a large-view camera. The "Small Default" would not, and instead the nozzle assignment would be effective.
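The proposed chain (Part, then Package, then the first default whose size filter matches, with the camera taken from the settings if set and from the nozzle otherwise) can be sketched as follows. All names are hypothetical, and the "package size" filter is assumed to be the largest package dimension in mm.

```java
import java.util.List;

/** Hypothetical stand-in for a vision settings entry; not OpenPnP's real class. */
final class VisionSettingsSketch {
    final String name;
    /** Camera chosen by these settings, or null to fall back to the nozzle's camera. */
    final String cameraName;
    /** Filter used only when picking among defaults: largest package dimension (mm) covered. */
    final double maxPackageSizeMm;

    VisionSettingsSketch(String name, String cameraName, double maxPackageSizeMm) {
        this.name = name;
        this.cameraName = cameraName;
        this.maxPackageSizeMm = maxPackageSizeMm;
    }
}

final class VisionSettingsResolver {
    private VisionSettingsResolver() {}

    /** Part <-- Package <-- first matching default (e.g. Small Default, then Large Default). */
    static VisionSettingsSketch resolve(VisionSettingsSketch partOverride,
                                        VisionSettingsSketch packageOverride,
                                        double packageSizeMm,
                                        List<VisionSettingsSketch> orderedDefaults) {
        if (partOverride != null) {
            return partOverride;
        }
        if (packageOverride != null) {
            return packageOverride;
        }
        for (VisionSettingsSketch candidate : orderedDefaults) {
            if (packageSizeMm <= candidate.maxPackageSizeMm) {
                return candidate;
            }
        }
        throw new IllegalStateException("no default vision settings cover " + packageSizeMm + " mm");
    }

    /** Use case (2) if the settings name a camera, use case (1) (nozzle assignment) otherwise. */
    static String resolveCamera(VisionSettingsSketch settings, String nozzleCameraName) {
        return settings.cameraName != null ? settings.cameraName : nozzleCameraName;
    }
}
```

For example, with a "Small Default" that names no camera and a "Large Default" assigned to a "Large View" camera, a small package keeps the nozzle's camera while a large one switches to the large-view camera, which is the three-camera mix described above.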

_Mark

Jarosław Karwik

mark maker

Hi Jarosław

Just to make it clear, it would not be true parallel ganged-up vision. The vision would still be performed sequentially, but if multiple nozzles are exactly aligned with multiple cameras, and bottom vision is done at nozzle balance Z (not PCB Z), and if the JobProcessor is slightly updated to allow custom sorting of the nozzles, then it would still be much faster, because there would be no move between the alignment steps of the nozzles (or only a tiny adjustment move if the nozzles are not perfectly aligned). With adaptive Camera Settle, the time between camera shots would be virtually zero! So almost "ganged-up".
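The custom nozzle sorting can be thought of as grouping nozzles by the head position at which each sits over its assigned camera; nozzles in the same group are shot back-to-back with no (or only a tiny) move. This greedy sketch uses hypothetical names and assumes nozzle offsets and camera locations are known in machine coordinates (mm).

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch: order nozzles so that those which can sit over their assigned
 *  bottom cameras at (almost) the same head position are aligned back-to-back. */
final class AlignedNozzleOrder {
    private AlignedNozzleOrder() {}

    /** Nozzle offset from the head reference, plus the location of its assigned camera (mm). */
    static final class NozzleCamera {
        final String nozzle;
        final double offsetX, offsetY, cameraX, cameraY;

        NozzleCamera(String nozzle, double offsetX, double offsetY, double cameraX, double cameraY) {
            this.nozzle = nozzle;
            this.offsetX = offsetX;
            this.offsetY = offsetY;
            this.cameraX = cameraX;
            this.cameraY = cameraY;
        }

        /** Head position that puts this nozzle exactly over its camera. */
        double headX() { return cameraX - offsetX; }
        double headY() { return cameraY - offsetY; }
    }

    /** Greedy grouping: each nozzle joins the first group whose head position is within
     *  toleranceMm; residual differences inside a group are the "tiny adjustment moves". */
    static List<List<String>> order(List<NozzleCamera> nozzles, double toleranceMm) {
        List<List<NozzleCamera>> groups = new ArrayList<>();
        for (NozzleCamera n : nozzles) {
            List<NozzleCamera> target = null;
            for (List<NozzleCamera> group : groups) {
                NozzleCamera first = group.get(0);
                if (Math.abs(first.headX() - n.headX()) <= toleranceMm
                        && Math.abs(first.headY() - n.headY()) <= toleranceMm) {
                    target = group;
                    break;
                }
            }
            if (target == null) {
                target = new ArrayList<>();
                groups.add(target);
            }
            target.add(n);
        }
        List<List<String>> result = new ArrayList<>();
        for (List<NozzleCamera> group : groups) {
            List<String> names = new ArrayList<>();
            for (NozzleCamera n : group) {
                names.add(n.nozzle);
            }
            result.add(names);
        }
        return result;
    }
}
```

For a four-nozzle rectangle with two cameras spaced at the nozzle pitch in X, this yields two pairs, matching the "aligned pairs" example from the earlier list.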

I've laid it all out in the post of 23 June 2022, 12:29:07 UTC+2 (in this discussion). If somebody builds that machine and is ready to do some thorough testing, I will implement it (the offer stands for the next two months or so; implementing might take some weeks).

As for parts that are too large: I'm still planning to do a multi-shot bottom vision extension (where the part corners are centered above the camera and aligned between multiple shots). Usually, you only have very few large parts (typically one large MCU), and you can afford for them to take a bit longer. Just make sure that you can move even the largest part sideways to the corners, at any rotation, and still not bump into something.
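The geometry behind centering each corner above the camera is a rotation plus an offset. This hypothetical sketch assumes the part is nominally centered on the nozzle tip and rotated about the nozzle axis; it also shows the clearance implied by "at any rotation": the nozzle moves by up to half the package diagonal, while the part itself can reach a full diagonal away from the camera axis.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of multi-shot corner geometry; not OpenPnP's implementation. */
final class MultiShotCorners {
    private MultiShotCorners() {}

    /** Nozzle XY positions (mm), one per corner, that center each corner of a
     *  w x h package (rotated by rotationDeg) above a camera at (cameraX, cameraY). */
    static List<double[]> nozzlePositions(double cameraX, double cameraY,
                                          double w, double h, double rotationDeg) {
        double a = Math.toRadians(rotationDeg);
        double cos = Math.cos(a), sin = Math.sin(a);
        double[][] corners = {{-w / 2, -h / 2}, {w / 2, -h / 2}, {w / 2, h / 2}, {-w / 2, h / 2}};
        List<double[]> positions = new ArrayList<>();
        for (double[] c : corners) {
            // Rotate the corner offset with the part, then shift the nozzle the opposite way.
            double rx = c[0] * cos - c[1] * sin;
            double ry = c[0] * sin + c[1] * cos;
            positions.add(new double[] {cameraX - rx, cameraY - ry});
        }
        return positions;
    }

    /** Largest nozzle excursion from the camera axis: half the package diagonal. */
    static double nozzleExcursion(double w, double h) {
        return Math.hypot(w, h) / 2;
    }

    /** Radius around the camera axis that the part can sweep over all corners and rotations. */
    static double sweptRadius(double w, double h) {
        return Math.hypot(w, h);
    }
}
```

For a 6 × 8 mm package, the nozzle moves up to 5 mm off the camera axis, but the free space around the camera should cover a 10 mm radius.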

_Mark

bert shivaan

mark maker

In the proposed first approach it would just have to move 1mm between shots. But that's still much better than the full move between nozzles. And I expect users could come up with a clever adjustable camera holder, so we can do better than 1mm.

In a later revision, I'm sure we could just crop the camera images to make the imperfect nozzle center become the pixel center (half-way adjust per camera). No more move required.
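The cropping idea amounts to choosing the largest crop rectangle centered on the calibrated nozzle position in each camera's image. A hypothetical sketch, assuming that nozzle pixel position is already known per camera and using integer pixels for simplicity:

```java
/** Hypothetical sketch: crop a camera image so that a given pixel (e.g. the calibrated
 *  nozzle position) becomes the center of the cropped image. Integer pixels for simplicity. */
final class CenteringCrop {
    final int x, y, width, height;

    private CenteringCrop(int x, int y, int width, int height) {
        this.x = x;
        this.y = y;
        this.width = width;
        this.height = height;
    }

    /** Largest crop of an imageWidth x imageHeight image centered on (centerX, centerY). */
    static CenteringCrop around(int imageWidth, int imageHeight, int centerX, int centerY) {
        if (centerX < 0 || centerX > imageWidth || centerY < 0 || centerY > imageHeight) {
            throw new IllegalArgumentException("center lies outside the image");
        }
        // Keep the same distance on both sides of the center, limited by the nearer edge.
        int halfWidth = Math.min(centerX, imageWidth - centerX);
        int halfHeight = Math.min(centerY, imageHeight - centerY);
        return new CenteringCrop(centerX - halfWidth, centerY - halfHeight,
                2 * halfWidth, 2 * halfHeight);
    }
}
```

The cost is field of view: each axis loses twice the nozzle's offset from the image center, so a mechanically well-adjusted camera holder still pays off.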

_Mark

bert shivaan

mark maker

I'm afraid it would require almost all the PnP functionality to be conclusive, I mean more conclusive than simulation.

_Mark

bert shivaan

Jarosław Karwik

mark maker

Hi Jarosław,

I'm currently implementing multi-shot bottom vision. It's a larger problem than I thought, but I'm getting there.

So maybe your use case for using two cameras (one small, one large) is going to be obsolete?

Naturally, multi-shot is going to be slower, but for typical projects, where you only have one or two large ICs, this will be insignificant. And I'm making sure it'll be as efficient as possible.

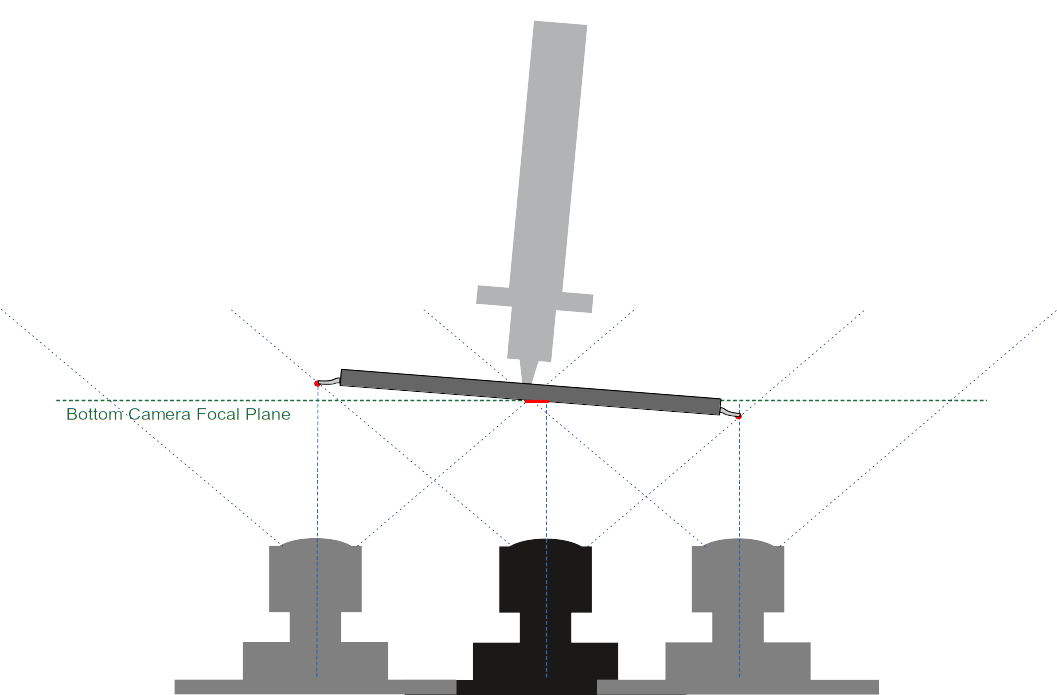

Multi-shot can also improve the precision, because it captures the package corners in the center of the camera, with no parallax. The illustration shows one example where this could matter: a typical wide-angle camera and a large part that is not held precisely planar (exaggerated).

Having said that, in the multi-shot feature I'm also making sure that nothing will be in the way of having multiple cameras in the future.

_Mark

Jarosław Karwik

Jim Freeman

mark maker

Hi Jim

> Will the 4 corner points be made available for post-processing?

What do you mean by post-processing?

> Also, do you think this will be more accurate than processing a single image? If the machine (mine, for instance) has a 50 micron error in positioning, then each corner measurement will have that on top of the accuracy of finding the corner.

If these errors are essentially random, then yes, you can expect an improvement, simply by the laws of probability (confidence interval). The more corners you probe, the more accurate the overall result. This will obviously only reduce the bottom vision error, not the placement error...

In my implementation, you will be able to tell it to probe extra corners to improve accuracy (at the cost of some extra bottom vision time).

If the errors are not random, then chances are you can reduce them by backlash compensation (or by fixing some underlying problem).
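Combining several corner measurements can be illustrated with a standard least-squares rigid fit (2D Procrustes/Kabsch): the rotation and translation that best map the nominal package corners onto the measured ones. With independent random errors, fitting more points averages them out (the standard error of a mean shrinks roughly with 1/√n). This generic technique is chosen for illustration only; it is not necessarily how the multi-shot implementation combines its shots, and the class name is hypothetical.

```java
/** Least-squares 2D rigid fit (rotation + translation), as in Procrustes/Kabsch alignment. */
final class RigidFit2D {
    final double angleDeg;
    final double dx;
    final double dy;

    private RigidFit2D(double angleDeg, double dx, double dy) {
        this.angleDeg = angleDeg;
        this.dx = dx;
        this.dy = dy;
    }

    /** nominal[i] and measured[i] are {x, y} of the same corner; needs at least 2 pairs. */
    static RigidFit2D fit(double[][] nominal, double[][] measured) {
        int n = nominal.length;
        if (n < 2 || measured.length != n) {
            throw new IllegalArgumentException("need at least 2 matching point pairs");
        }
        double nx = 0, ny = 0, mx = 0, my = 0;
        for (int i = 0; i < n; i++) {
            nx += nominal[i][0];
            ny += nominal[i][1];
            mx += measured[i][0];
            my += measured[i][1];
        }
        nx /= n;
        ny /= n;
        mx /= n;
        my /= n;
        // Accumulate dot and cross products of the centered point pairs.
        double dot = 0, cross = 0;
        for (int i = 0; i < n; i++) {
            double ax = nominal[i][0] - nx, ay = nominal[i][1] - ny;
            double bx = measured[i][0] - mx, by = measured[i][1] - my;
            dot += ax * bx + ay * by;
            cross += ax * by - ay * bx;
        }
        // The rotation maximizing the sum of b . (R a) is atan2(cross, dot).
        double theta = Math.atan2(cross, dot);
        double c = Math.cos(theta), s = Math.sin(theta);
        // Translation that maps the rotated nominal centroid onto the measured centroid.
        double tx = mx - (c * nx - s * ny);
        double ty = my - (s * nx + c * ny);
        return new RigidFit2D(Math.toDegrees(theta), tx, ty);
    }
}
```

Probing extra corners simply adds rows to both arrays; systematic errors (such as backlash) shift all measurements alike and are not averaged away.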

_Mark

vespaman

When this thread started, I instantly got interested, for my CHM-T48VB. I measured that it will fit if only the bare sensors, with M12 optics, are used on a flex cable.

I am currently doing a hardware design on an i.MX 8M Plus with two MIPI cameras like this, so there are some synergies. But there can only be two MIPI cameras on each i.MX 8M Plus. Maybe a third/fourth camera can interface through USB 3. Dreaming on, this could then be a vision/sensor hub that presents images over Ethernet to OpenPnP. Or maybe it could even host OpenPnP itself and make use of the AI/ML neural core, but that might be too much work for those little ARM cores.

mark maker

I was thinking a bit more...

One problem is that you'd need to shoot the parts at balanced Z height, otherwise you still need to move Z, which would probably spoil most of the gain.

But by nailing Z, you cannot account for different part heights, and the undersides of tall parts will be blurred. You could still use it for small passives (which are typically the most numerous), but probably not in general. 🙁

Ideally, you would use a quadro-head: two pairs of nozzles with shared axes. The pairs could be side by side (one row) or in two rows, one pair behind the other, but with the two rows further apart than the nozzles, to make space for the cameras.

The cameras would be aligned with one nozzle of each pair, either skipping one if in a row, or being aligned with one of the two rows.

Either way, both nozzles could independently move their Z at the same time, and we would get all the accuracy benefits of a focal plane at the same Z as the PCB. When they move to the second set of shots, there is time to move X and the two Z axes at the same time.

This would really rock. 😎

_Mark

bert shivaan

mark maker

> if the nozzles are too close to see with 2 cameras, can we use a pic of both of them

It is the cameras' PCBs/housings etc. that are colliding, not their view areas.

Having a large camera view cover multiple nozzles is certainly possible, as some Neoden machines show. But you probably need a high-res camera in still-shot mode, a long focal length, and consequently a large camera distance, so the parallax errors remain reasonable.

And this will very likely only work for small parts (which could still be very useful, as these are typically the most numerous).

But the required changes to the code would be very profound; they would reach deep into the Job Planner and Job Processor. Unlikely to happen soon 😬.

_Mark

bing luo

The two cameras are connected through a hub and transmitted over one USB cable. They are recognized as two independent video devices, and both cameras work at the same time.

The binocular camera is driver-free (no driver is required), as it conforms to the standard UVC protocol. This facilitates secondary development; it is versatile and provides a programming interface for settings (brightness, contrast, saturation, hue, sharpness, white balance, exposure, gain).

DirectShow, OpenCV and other software can be used for development on Windows, and V4L2 on Linux.

Users can use the MJPEG format to output dual video at any resolution, up to a maximum of 2560 × 720 (2 × 1280 × 720).
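For the combined 2560 × 720 output mode, splitting each frame into the two camera images is straightforward, e.g. with `BufferedImage.getSubimage()` from the Java standard library. This sketch assumes the two views are delivered side by side in one frame; which half belongs to which camera, and whether OpenPnP can consume such a combined stream directly, are assumptions not covered above.

```java
import java.awt.image.BufferedImage;

/** Hypothetical helper: split a side-by-side dual-camera frame into its two halves. */
final class SideBySideSplitter {
    private SideBySideSplitter() {}

    /** Returns {left, right}; e.g. two 1280 x 720 views from one 2560 x 720 frame. */
    static BufferedImage[] split(BufferedImage frame) {
        if (frame.getWidth() % 2 != 0) {
            throw new IllegalArgumentException("frame width must be even");
        }
        int half = frame.getWidth() / 2;
        int height = frame.getHeight();
        return new BufferedImage[] {
            frame.getSubimage(0, 0, half, height),    // assumed: first camera
            frame.getSubimage(half, 0, half, height)  // assumed: second camera
        };
    }
}
```

`getSubimage()` shares pixel data with the original frame, so copy the halves if the frame buffer is reused for the next capture.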

Blowtorch

mark maker

Good point. But I'd prefer an automatic optimization rather than a static assignment. I guess that can easily be achieved.

_Mark
