Santa Claus

a. Execution for the first time b. Execution for the second time

Fig 1. Time consumed by DGEMM of OpenBLAS v0.2.10 pre3

Fig 2. Time consumed by DGEMM of MKL 11.1 update 1

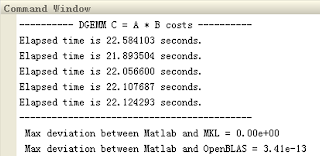

Fig 3. Time consumed by expression of "C = A * B" in Matlab R2012a

Figure 3 indicates that the results computed by both MKL and OpenBLAS agree well with those returned by Matlab. However, as shown in Figure 1, OpenBLAS is the slowest of the three, and its timings are also unsteady.
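For anyone who wants to reproduce this kind of comparison, here is a minimal timing sketch. It is not the test program used for the figures. The names `naive_matmul` and `time_calls` are made up for illustration, and the pure-Python product is only a stand-in so that the snippet runs without any BLAS installed. The idea is to discard a warm-up call and report the minimum, median and maximum over several runs, so that a slow first execution or unsteady timings show up clearly.

```python
import statistics
import time


def naive_matmul(a, b):
    """Stand-in for DGEMM: plain product of two matrices given as lists of rows."""
    cols_b = list(zip(*b))  # columns of b, so each entry is a row-by-column dot product
    return [[sum(x * y for x, y in zip(row, col)) for col in cols_b] for row in a]


def time_calls(func, repeats=5, warmup=1):
    """Call func() `warmup` times untimed, then time it `repeats` times.

    Returns the minimum, median and maximum wall-clock time in seconds.
    """
    for _ in range(warmup):
        func()  # absorbs one-off costs such as thread start-up or cold caches
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        func()
        samples.append(time.perf_counter() - start)
    return {
        "min": min(samples),
        "median": statistics.median(samples),
        "max": max(samples),
    }


if __name__ == "__main__":
    n = 60  # kept small because the stand-in is pure Python
    a = [[float(i + j) for j in range(n)] for i in range(n)]
    b = [[float(i - j) for j in range(n)] for i in range(n)]
    stats = time_calls(lambda: naive_matmul(a, b))
    print("min %.4f s, median %.4f s, max %.4f s"
          % (stats["min"], stats["median"], stats["max"]))
```

To time a real library, the lambda would wrap the actual DGEMM call instead of `naive_matmul`. A large gap between the minimum and the maximum is a hint that something other than the kernel itself (threading, CPU frequency, other processes) is affecting the measurement.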

When the binaries "libopenblas.dll" and "libopenblas.lib" that I built myself are replaced by those from the earlier release OpenBLAS-v0.2.8-x86-Win.zip, OpenBLAS speeds up significantly and takes roughly half the time of its competitors, as shown in Figure 4. This is a definitely, positively, absolutely great boost to the computing speed of BLAS!!! (Sorry for my excitement.)

Fig 4. Time consumed by DGEMM of OpenBLAS v0.2.8

Finally, would you be kind enough to answer the following questions:

1) Why does OpenBLAS v0.2.8 outperform OpenBLAS v0.2.10 pre3 by so much?

2) Have I missed or mistaken something in the build process?

Thanks in advance for your replies.

Best regards,

Wenkai Zhao

Werner Saar

> Dear all,
>
> Many thanks to the instructions suggested in
> <https://github.com/xianyi/OpenBLAS/wiki/How-to-use-OpenBLAS-in-Microsoft-Visual-Studio>,
> I have successfully built the binary "libopenblas.dll" as well as
> "libopenblas.lib" of openblas-v0.2.10-pre3-src.tar.gz
>
> MKL 11.1 update 1 (with static link libraries) and Matlab R2012a (with C =
> A * B) respectively, the corresponding results (in terms of computing
> speed) are presented below,
>
> <https://lh4.googleusercontent.com/-g3pOcabl_O8/U7Jz8Nyt-GI/AAAAAAAAAB8/wP5CE6mIjXs/s1600/OpenBLAS+v0.2.10+pre3_2.png>


I need some more information.

What is your processor, and how did you build OpenBLAS? Did you build a 32-bit or a 64-bit binary?

Best regards

Werner

Santa Claus

Zhang Xianyi

--

You received this message because you are subscribed to the Google Groups "OpenBLAS-users" group.


Santa Claus

José Luis García Pallero

> Hi Xianyi,
>
> So pleased to see your reply!
>
> OpenBLAS actually beats both MKL and Matlab in terms of speed when BLAS
> routines are called.
>
> However, the LAPACK subroutines in OpenBLAS do not perform as well as the
> BLAS subroutines. To execute DGEEV of LAPACK on a 500-by-500 real matrix,
> OpenBLAS took 1.11 sec, while MKL and Matlab took 0.74 sec and 0.61 sec
> respectively.
>
> In my view, if the BLAS routines have been accelerated significantly, the
> LAPACK routines will be accelerated accordingly, won't they?

That is not entirely accurate. The reference LAPACK is not parallelized, so its parallel performance relies entirely on BLAS. Obviously, the faster BLAS is, the faster LAPACK is too, but it does not follow that if one version of OpenBLAS is 1.2x faster than another, LAPACK has to be 1.2x faster as well. The LAPACK routines in MKL are probably parallelized one level above BLAS, so they perform better than the reference LAPACK + BLAS.
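A rough way to see why the speedup does not carry over one to one is an Amdahl-style estimate. The sketch below assumes that a fraction of the LAPACK routine's run time is spent inside BLAS calls and that only this part gets faster. The function name `overall_speedup` and the fractions used are purely illustrative and have not been measured for DGEEV.

```python
def overall_speedup(blas_fraction, blas_speedup):
    """Estimate the speedup of a routine when only its BLAS part gets faster.

    blas_fraction: share of the original run time spent in BLAS calls (0..1).
    blas_speedup:  factor by which those BLAS calls become faster (> 0).
    """
    if not 0.0 <= blas_fraction <= 1.0:
        raise ValueError("blas_fraction must be between 0 and 1")
    if blas_speedup <= 0.0:
        raise ValueError("blas_speedup must be positive")
    # The non-BLAS part keeps its time; the BLAS part shrinks by blas_speedup.
    return 1.0 / ((1.0 - blas_fraction) + blas_fraction / blas_speedup)


if __name__ == "__main__":
    # Hypothetical fractions, for illustration only.
    for fraction in (1.0, 0.8, 0.5):
        print("BLAS share %.0f%%: overall speedup %.3fx"
              % (100 * fraction, overall_speedup(fraction, 1.2)))
```

Only when all of the time is spent in BLAS does a 1.2x faster BLAS give a 1.2x faster routine. With a smaller BLAS share, the gain shrinks accordingly.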

Best regards

*****************************************

José Luis García Pallero

jgpa...@gmail.com

(o<

/ / \

V_/_

Use Debian GNU/Linux and enjoy!

*****************************************

Santa Claus

On Friday, July 4, 2014 at 4:22:23 PM UTC+8, José Luis García Pallero wrote: