Behavior Camera + V4 Imaging Synchronization


Miguel Carvalho

Nov 27, 2020, 3:43:50 AM

to Miniscope

Hello everyone,

New V4 miniscope user here. We have recently received our new V4 miniscopes and we've been looking into how to set up experiments with synchronized imaging and behavior tracking.

Our first approach was to use the latest version of the DAQ software to start both the behavior camera and the scope. The result is imaging and behavior videos that aren't exactly synchronized, most likely due to slight fluctuations in the frame rate of each. Regardless of this, and as suggested in a previous discussion here, the timestamp files can be used to match each scope frame with the closest behavior camera frame.
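A minimal sketch of that nearest-timestamp matching, assuming both timestamp files have already been loaded as sorted, non-empty lists of milliseconds measured from the same start point. The function name and the sample values are made up for illustration and are not part of the Miniscope software.

```python
from bisect import bisect_left


def nearest_frame_indices(scope_ms, behav_ms):
    """For each scope timestamp, return the index of the closest behavior timestamp.

    Assumes both lists are sorted, non-empty, and share a common time origin.
    """
    matches = []
    for t in scope_ms:
        i = bisect_left(behav_ms, t)
        if i == 0:
            matches.append(0)
        elif i == len(behav_ms):
            matches.append(len(behav_ms) - 1)
        else:
            # Pick whichever neighbor is closer in time (ties go to the earlier frame).
            before, after = behav_ms[i - 1], behav_ms[i]
            matches.append(i if (after - t) < (t - before) else i - 1)
    return matches


# Hypothetical timestamps: a steady 20 FPS scope and a jittery ~30 FPS camera.
scope_ms = [0, 50, 100, 150, 200]
behav_ms = [3, 36, 70, 101, 135, 168, 199]
pairs = nearest_frame_indices(scope_ms, behav_ms)
```

With this approach the two streams do not need the same number of frames; some behavior frames are simply never selected.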

We are wondering if more experienced users have found a more straightforward way to synchronize both imaging and behavior streams. For instance, does having the miniscope DAQ trigger frame acquisition in the behavior camera render better synchronization? Is having both behavior camera and scope controlled via Bonsai also a good alternative?

Thanks in advance for any input.

Sylar Grayson

Nov 27, 2020, 12:06:30 PM

to Miniscope

Hi!

Have you checked the delta T between the two systems?

Here is my method, but it is mainly focusing on postprocessing:

I try to measure the delta T between the camera recording system and the scope recording system before I start my behavior experiment. The delta T could be a function or a constant. After registration, it seems to work a little better. However, I am still looking forward to solving this problem at the hardware level.
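One possible reading of "a function or a constant" is a linear model, camera_time = slope * scope_time + intercept, fitted to a few events that both systems can see. The sketch below is only an illustration under that assumption; the paired event times are invented, and how such events would be produced (for example a light flash visible to both devices) is hypothetical and not described in this thread.

```python
def fit_linear_offset(scope_t, camera_t):
    """Least-squares fit of camera_t = slope * scope_t + intercept.

    A slope of exactly 1 means a constant delta T (the intercept);
    a slope different from 1 means the delta T drifts over time.
    """
    n = len(scope_t)
    mean_x = sum(scope_t) / n
    mean_y = sum(camera_t) / n
    sxx = sum((x - mean_x) ** 2 for x in scope_t)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(scope_t, camera_t))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def scope_to_camera_time(t, slope, intercept):
    """Map a scope timestamp onto the camera's clock using the fitted model."""
    return slope * t + intercept


# Hypothetical paired event times in ms, as seen by each system.
scope_events = [0, 1000, 2000, 3000]
camera_events = [12, 1013, 2014, 3015]
slope, intercept = fit_linear_offset(scope_events, camera_events)
```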

Hope you might find it useful.

Kaii

miguelmv...@gmail.com

Nov 27, 2020, 1:06:05 PM

to Sylar Grayson, Miniscope

Hi,

The delta T between the two systems isn’t constant since frame rate varies differently in each one of them. As a result, after recording the number of scope and behavior camera frames is slightly different.

Would be great to have a solution that would circumvent this problem and render the exact same number of frames. Is this a known issue among other users?


Matthias Klumpp

Nov 27, 2020, 2:24:22 PM

to miguelmv...@gmail.com, Sylar Grayson, Miniscope

Hi there!

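On Fri, Nov 27, 2020 at 19:06, <miguelmv...@gmail.com> wrote:

> [...]

> The delta T between the two systems isn’t constant since frame rate varies differently in each one of them. As a result, after recording the number of scope and behavior camera frames is slightly different.

It's probably overkill for your case, but also a pretty great opportunity to point at my Syntalos project which was specifically designed to solve synchronization as well as automation issues for electrophysiology and Miniscope experiments. ;-)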

To make use of it, you would simply create a Miniscope and a camera node, as well as a video recorder node for each of them within a Syntalos board, and the synchronization would be handled automatically. We actually tested this and there is no significant (above one frame) delay between a video source and the Miniscope - if there was one, however, Syntalos would at least keep the offset constant.

Usually, these sync problems are way more severe when electrophysiological data is recorded, as that data has a much higher temporal resolution than Miniscope data, which is easier to synchronize.

Potential issues with Syntalos are that it will only run on Linux (that's a design decision, but it may run, less well, on Microsoft's Linux compatibility layer, the Windows Subsystem for Linux), and that you currently will have to build the project from source. Also, the documentation is a bit lacking.

I am currently working on the last two aspects, and hopefully in the end you will be able to install this project with just a few clicks and find a lot more documentation online.

The source code and more info on this project are available here: https://github.com/bothlab/syntalos (and our department is using it a lot for new experiments).

We don't support the Miniscope-based behavior camera hardware yet, but it's almost guaranteed that it will be added (generic webcams and a few types of industrial cameras will work though).

> Would be great to have a solution that would circumvent this problem and render the exact same number of frames. Is this a known issue among other users?

Just a shot in the dark here: The video files themselves do not contain accurate timestamps (as in: synchronized timestamps), but rather count frames and have a known, fixed framerate (instead of a variable one). Calculating a timestamp from the frame number and framerate will be very inaccurate (this can be fixed for some video file formats, but usually isn't worth the effort for our DAQ cases). Therefore, the official Miniscope DAQ software (as well as any other DAQ tool that I know of) will create some file which maps frame numbers to actual timestamps, in the case of the Miniscope DAQ stored as CSV alongside the video file. When reading the data, you'd have to take the timestamps from these CSV files, not from the video files. Then the times should be accurate-ish. Are you doing that?
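To illustrate the difference, here is a small sketch that reads timestamps from a CSV and compares them with times computed from the frame number and a fixed framerate. The column names ("Frame Number", "Time Stamp (ms)", "Buffer Index") and the sample rows are assumptions made for this example, so please check them against the header of your own timestamp file.

```python
import csv
import io

# Invented sample data; frame 3 arrives late, as if one frame had been dropped.
SAMPLE_CSV = """Frame Number,Time Stamp (ms),Buffer Index
0,0,0
1,51,0
2,99,0
3,201,0
4,250,0
"""


def read_timestamps_ms(csv_file, column="Time Stamp (ms)"):
    """Return the recorded timestamp of every frame, in file order."""
    reader = csv.DictReader(csv_file)
    return [float(row[column]) for row in reader]


def nominal_timestamps_ms(n_frames, fps):
    """Timestamps you would get from frame number and a fixed framerate."""
    return [1000.0 * i / fps for i in range(n_frames)]


recorded = read_timestamps_ms(io.StringIO(SAMPLE_CSV))
nominal = nominal_timestamps_ms(len(recorded), fps=20)
errors = [r - n for r, n in zip(recorded, nominal)]
```

In this made-up example the computed times are off by about one frame period from frame 3 onwards, and that error would persist for the rest of the recording. For a real recording, pass an open file instead of the `io.StringIO` object.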

I am sorry if you are already using things that way and this is a very dumb suggestion, but it's better to ask :-)

Another solution to the sync problem I have seen people using is to make the DAQ tool output UNIX timestamps and compare those with another video-recording application that also creates absolute UNIX timestamps for each frame. That's also a very viable way to solve this issue.

How much does your framerate vary? For my V4 Miniscopes, it's usually not a large amount (1-3 frames max), but I do see the framerate fluctuate wildly (10-20 frames range) as soon as there is a connectivity issue and the signal isn't handled properly (which is usually some cable issue, or a soldering problem). Depending on how bad it is, that's maybe worth a look independently of the sync problem.

Good luck with your experiments and with solving this problem!

Cheers,

Matthias


Daniel Aharoni

Nov 30, 2020, 5:12:47 PM

to Miniscope

I will just reiterate part of what Matthias said:

The new Miniscope Software generates a .csv timestamp file within each folder that holds recording data. This file gives the acquisition time, in milliseconds, at which each frame (or chunk of data) was received by the software. These timestamps share a common start point across all recorded data types, so you can use them to sync your data.

I also want to point out that you should never use just the expected FPS and the frame number to calculate the timing of each frame. While the V4 Miniscope FPS is very stable, frames can occasionally be dropped. In addition, the FPS of most USB behavioral cameras is far from stable.
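A rough way to spot dropped frames in a timestamp list is to look for inter-frame intervals that are much longer than the nominal frame period. The sketch below assumes timestamps in milliseconds; the function name `find_gaps`, the 1.5x tolerance, and the sample values are arbitrary choices for illustration.

```python
def find_gaps(timestamps_ms, fps, tolerance=1.5):
    """Return (frame_index, estimated_missing_frames) for every long interval.

    An interval counts as a gap when it exceeds `tolerance` nominal periods.
    The missing-frame count is only an estimate based on the nominal period.
    """
    period = 1000.0 / fps
    gaps = []
    for i in range(1, len(timestamps_ms)):
        dt = timestamps_ms[i] - timestamps_ms[i - 1]
        if dt > tolerance * period:
            gaps.append((i, round(dt / period) - 1))
    return gaps


# Hypothetical 20 FPS recording with one and then two frames missing.
timestamps = [0, 50, 100, 200, 250, 400]
gaps = find_gaps(timestamps, fps=20)
```

For a camera with an unstable FPS, a long interval may simply be jitter rather than a lost frame, so the estimate should be treated with care.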

Hope this helps.

Miguel Carvalho

Nov 30, 2020, 5:35:35 PM

to Daniel Aharoni, Miniscope

Hi Matthias and Daniel,

Thank you for your answers. I share your opinion that using the frame rate and frame number to calculate the timing wouldn’t be a good solution, but as Daniel mentions, the .csv files are a good option for syncing the data. I am starting to suspect this might be a connection problem with the behaviour camera, so I’ll look into this next.


Simon Fisher

Dec 2, 2020, 12:11:35 AM

to Miniscope

Jumping into this conversation with some similar general questions...

I would be keen to know how people handle synchronisation of data between three systems: miniscope Ca2+ signals, video recordings, and also events from a behaving animal (e.g. touchscreen or med-associates chamber)?

Assuming the video camera used is integrated into the miniscope acquisition software, then sync between those two would be sorted via the CSV timestamp file, as outlined in posts above. If I also have a behavioral system generating events with its own timestamp process, and I triggered the start of miniscope recording from this behavioral system (which would presumably set a timestamp event in the miniscope CSV file?), then can I just use both timestamp files from the individual systems to link behavior events and miniscope signals? By that I mean: if a behavior event occurs 10s into the behavior timestamp file, then I can index 10s into the miniscope CSV file and find the closest Ca2+ imaging frame, and video camera frame. Essentially, is it OK practice to trust different clocks like this?

If using a different video camera that isn't integrated into the miniscope acquisition software (e.g. one from FLIR), then I guess that'd need to be triggered from the behavior system too, and then ideally it can record absolute timestamps to use for lookups. Or is there a better way here?

Matthias - Your Syntalos solution looks very nice, although probably too much overhead for my needs, and the need for Linux may present some challenges. That said, it would be interesting to check out and try. One quick question: is it possible to trigger the start of a 'flow' (e.g. start miniscope and video recording) from an external system (e.g. med-associates box)? Maybe using a connected Arduino with the Firmata protocol and associated module in Syntalos?

Thanks all

Daniel Aharoni

Dec 2, 2020, 5:40:32 PM

to Miniscope

Hi,

> Assuming the video camera used is integrated into the miniscope acquisition software, then sync between those two would be sorted via the CSV timestamp file, as outlined in posts above. If I also have a behavioral system generating events with its own timestamp process, and I triggered the start of miniscope recording from this behavioral system (which would presumably set a timestamp event in the miniscope CSV file?), then can I just use both timestamp files from the individual systems to link behavior events and miniscope signals? By that I mean: if a behavior event occurs 10s into the behavior timestamp file, then I can index 10s into the miniscope CSV file and find the closest Ca2+ imaging frame, and video camera frame. Essentially, is it OK practice to trust different clocks like this?

Yes, this sounds fine. To sync separate acquisition systems you just need a single common time point. If you trigger the Miniscope recording using an external trigger and log that trigger, you can use this information to sync other devices. You can also use the Sync Out signal from the Miniscope DAQ, which toggles a digital signal (3.3V high) on each acquired Miniscope frame. This Sync Out signal only outputs during Miniscope recording.
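As a sketch of the single-common-time-point idea: if the behavior system logs the moment it sent the start trigger, any behavior event can be shifted onto the Miniscope's time axis and matched to the closest frame. The names below (`trigger_ms`, `event_to_frame`) and all values are hypothetical. The sketch also assumes that Miniscope timestamps start at 0 ms at the trigger and that the two clocks do not drift noticeably over the session.

```python
from bisect import bisect_left


def closest_frame(frame_ms, t_ms):
    """Index of the frame whose timestamp is closest to t_ms (frame_ms sorted, non-empty)."""
    i = bisect_left(frame_ms, t_ms)
    if i == 0:
        return 0
    if i == len(frame_ms):
        return len(frame_ms) - 1
    before, after = frame_ms[i - 1], frame_ms[i]
    return i if (after - t_ms) < (t_ms - before) else i - 1


def event_to_frame(event_ms, trigger_ms, frame_ms):
    """Map an event on the behavior clock to the closest Miniscope frame.

    trigger_ms is the time, on the behavior clock, at which the recording
    was triggered; it serves as the common time point for both systems.
    """
    return closest_frame(frame_ms, event_ms - trigger_ms)


# Hypothetical session: 20 s of frames at 20 FPS, triggered 2.5 s into the behavior log.
frame_ms = list(range(0, 20000, 50))
frame_index = event_to_frame(event_ms=10000, trigger_ms=2500, frame_ms=frame_ms)
```

The same lookup can be run against the behavior camera's timestamp list to find the matching video frame.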

> If using a different video camera that isn't integrated into the miniscope acquisition software (e.g. one from FLIR), then I guess that'd need to be triggered from the behavior system too, and then ideally it can record absolute timestamps to use for lookups. Or is there a better way here?

I think you would use a similar setup to the one discussed above. With a bit of coding work you could also just add FLIR acquisition to the Miniscope Software.

Matthias Klumpp

Dec 2, 2020, 8:07:35 PM

to Simon Fisher, Miniscope

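On Wed, Dec 2, 2020 at 06:11, Simon Fisher <s.d.f...@gmail.com> wrote:

> [...]

> I would be keen to know how people handle synchronisation of data between three systems: miniscope Ca2+ signals, video recordings, and also events from a behaving animal (e.g. touchscreen or med-associates chamber)?

> [...]

That's exactly one of the things we are using Syntalos for (another experiment also uses actuators triggered by certain animal behavior, and yet another one triggers camera recordings if the animal is at a certain position).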

> Matthias - Your Syntalos solution looks very nice, although probably too much overhead for my needs, and the need for linux may present some challenges.

I don't think it's actually that much overhead - except for the work currently needed to compile it, of course ;-) (which should also be straightforward, but if you have never compiled a CMake/C++ application, this might be challenging without instructions - it's certainly doable though, and I can also help with that).

> That said, it would be interesting to check out and try. One quick question: is it possible to trigger the start of a 'flow' (e.g. start miniscope and video recording) from an external system (e.g. med-associates box)? Maybe using a connected Arduino with the Firmata protocol and associated module in Syntalos?

You can start a board via an external, local trigger using D-Bus, but that isn't very well supported yet (it's a hidden function primarily used for automated testing). Depending on what you want to do, it may not even be needed though. Syntalos speaks Firmata over serial interfaces natively, so simple controls should work via its Firmata module. That can be used to enable/disable recordings or cameras, or to trigger pretty much any side effect that one could need. The Firmata support is usually coupled with Syntalos' Python scripting interface, which permits you to run arbitrary Python functions based on incoming events (and also trigger stuff within Syntalos).

So, depending on your setup, the software might already work well or may need some tweaks (I am very curious about use cases other than ours though).

One important thing to note is that Syntalos, unlike a tool like Bonsai or LabVIEW, is not a visual programming language. It's a framework in which other tools can run as modules and interchange data efficiently (and most importantly, share timestamps as much as possible).

Btw, the Miniscope DAQ board having a hardware timing output is pretty neat - in an ideal world, all of our measurement devices would have a clock master out and a clock slave in port, so you could select one master hardware clock to precisely synchronize all devices in an experiment. But I've only seen these things in physics labs so far, and no neuroscience hardware seems to have this unless it's built in-house with this specifically addressed.

With our "low" sampling rates (compared to electrophysiology), the software sync (even the one the official Miniscope DAQ software does) should be good enough though :-)

Cheers,

Matthias


Miguel Carvalho

Dec 3, 2020, 6:19:20 AM

to Miniscope

Hi all,

One more question, this time regarding the Sync Out port. For the behavioral synchronization we have been discussing, I have tried having the miniscope DAQ trigger frame acquisition on our imaging source camera. However, with this method, the camera appears to acquire only half as many frames as the miniscope. Any advice?

Daniel Aharoni

Dec 3, 2020, 12:08:16 PM

to Miniscope

Hi,

The Miniscope DAQ's Sync Output toggles its output for each acquired Miniscope frame. Likely your other imaging source camera gets triggered only on the rise of the signal and not on both the rise and fall, so it captures one frame for every two Miniscope frames.
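A toy simulation of a toggling sync line shows why a rising-edge trigger sees only half of the frames. It assumes the line starts low before the first frame, which is an assumption made for this sketch rather than something stated here; the function names are made up.

```python
def sync_out_levels(n_frames, initial_level=0):
    """Logic level of the sync line after each acquired frame (toggles once per frame)."""
    level = initial_level
    levels = []
    for _ in range(n_frames):
        level ^= 1  # flip 0 -> 1 or 1 -> 0
        levels.append(level)
    return levels


def count_edges(levels, initial_level=0):
    """Count rising and falling edges in a sequence of logic levels."""
    rising = falling = 0
    prev = initial_level
    for level in levels:
        if level > prev:
            rising += 1
        elif level < prev:
            falling += 1
        prev = level
    return rising, falling


rising, falling = count_edges(sync_out_levels(100))
```

Here 100 Miniscope frames produce 50 rising and 50 falling edges, so a camera that triggers on rising edges only would record 50 frames, while one that triggers on both edges would record all 100. By the same logic, a 20 FPS recording corresponds to a 10 Hz square wave on the sync line.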

zahra rezaei

Dec 10, 2021, 9:32:23 PM

to Miniscope

Hi Daniel,

I have a behavioral camera which is triggered by the Miniscope and generates its own timestamps. To compare the timestamps generated by the Miniscope and the behavioral camera for synchronization purposes, I need a common time point, as you mentioned earlier in this thread. I have the starting time of my behavioral camera. I wonder how I can log the starting time of the Miniscope, too, for later comparison.

Thank you.

Daniel Aharoni

Dec 17, 2021, 5:08:38 PM

to Miniscope

Hi, I am not sure I fully understand your setup. How does the Miniscope trigger your behavioral camera (as I am assuming the behavioral camera is controlled and acquired by different software)?

The Miniscope software will log the start time of the Miniscope Software recording along with individual timestamps relative to this start time. Maybe that is enough?
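If the logged start time is available as an absolute date and time, the relative timestamps can be turned into absolute UNIX times and compared directly with another system that records UNIX timestamps. The sketch below assumes you have already read the start time from wherever your software version stores it and parsed it into a timezone-aware `datetime`; the start time and timestamps used here are invented.

```python
from datetime import datetime, timezone


def to_unix_ms(start_time, relative_ms):
    """Convert timestamps relative to start_time into UNIX time in milliseconds.

    start_time must be timezone-aware, otherwise Python interprets it as local time.
    """
    start_ms = start_time.timestamp() * 1000.0
    return [start_ms + t for t in relative_ms]


# Hypothetical recording start and the first three relative frame timestamps.
start = datetime(2021, 12, 10, 21, 30, 0, tzinfo=timezone.utc)
scope_unix_ms = to_unix_ms(start, [0.0, 50.0, 100.0])
```

The result is only as accurate as the logged start time, and comparing it with timestamps from another computer additionally requires both system clocks to be synchronized.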

Jon

Oct 24, 2023, 1:42:20 AM

to Miniscope

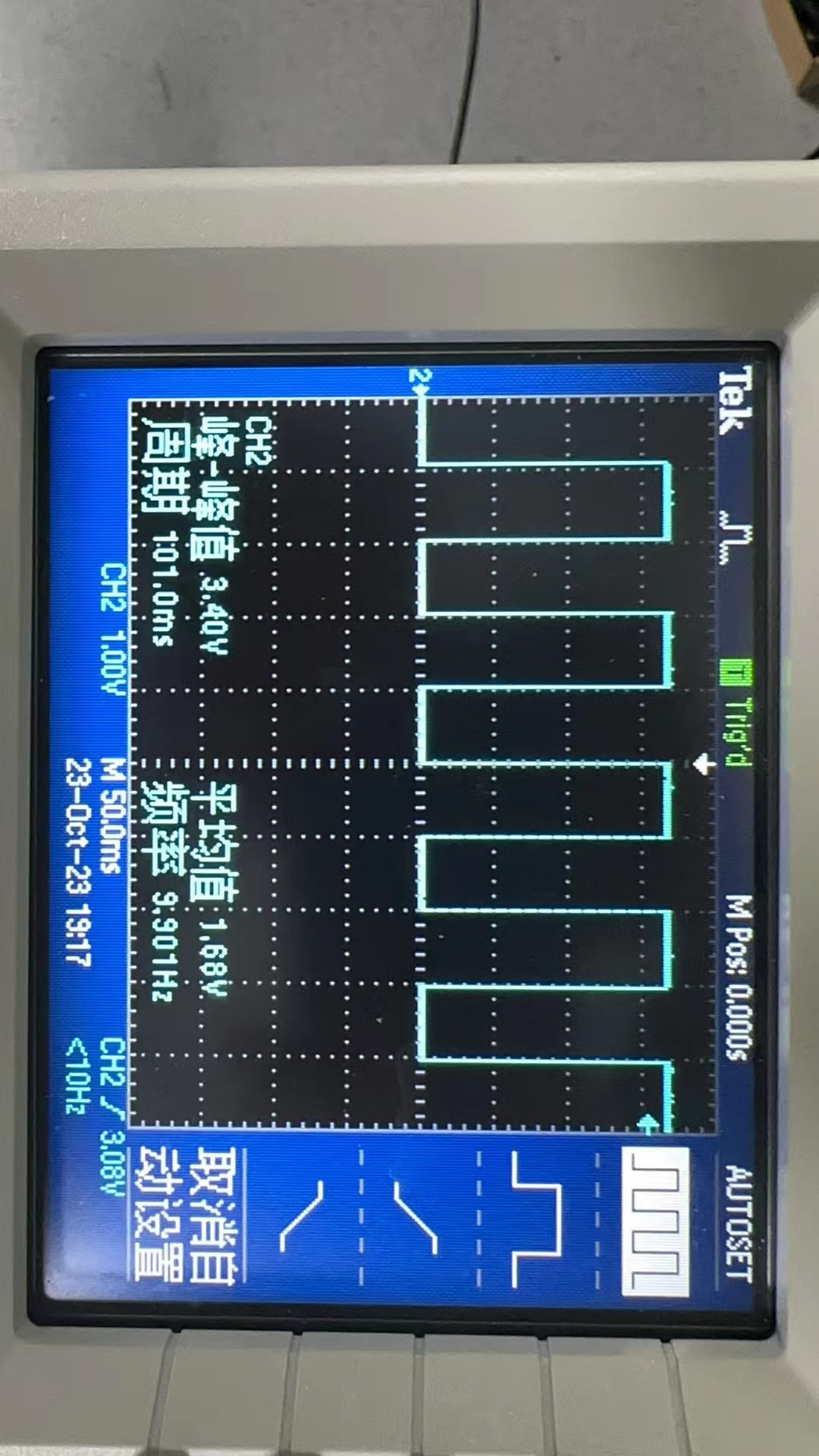

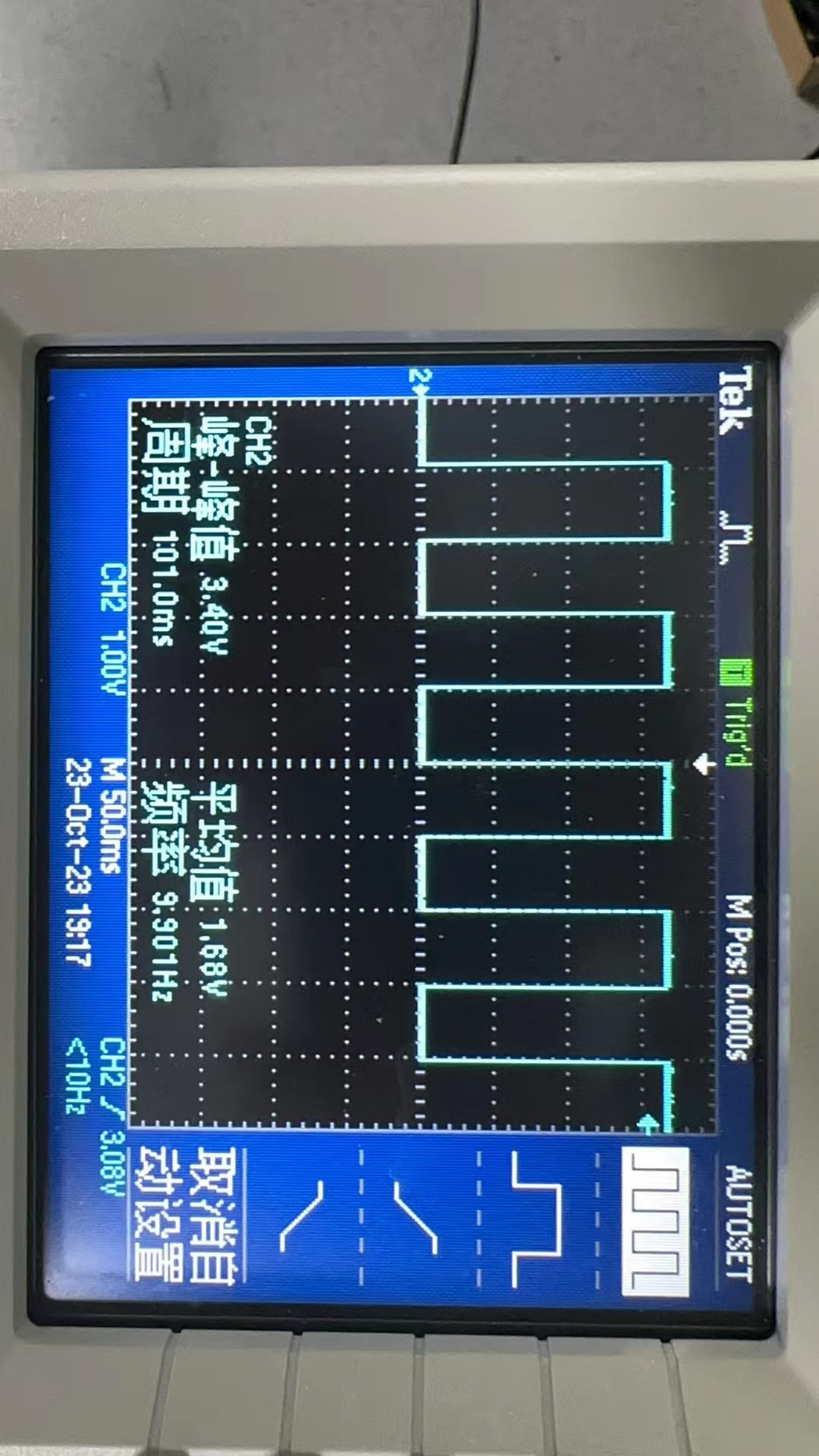

Hi Daniel,

I have some issues with the Sync Output port.

1. Does the Sync Output only work once you start recording? As soon as I plug the SMA into this port, the signal goes high, so on which edge is the frame acquired?

2. I set the FPS to 20, and the frequency of the Sync Output is 10 Hz.

Ruoyu

Oct 24, 2023, 5:05:35 AM

to Miniscope

Hi Daniel,

I want to synchronize my V4 Miniscope and behavioral camera to an external visual stimulus (an OpenGL script presented on an LED arena). Is there any way to have the DAQ-QT software store the relative UNIX timestamps in the .csv file during my recording?

Thank you!

Ruoyu

JIAXIN FU

Oct 30, 2023, 1:56:59 PM

to Miniscope

Hi there,

Is anyone here familiar with Syntalos? I'm just setting up the v4 miniscope and I'm trying to synchronise data from the behavioural webcam and the miniscope. I'm running Syntalos on the Windows Subsystem for Linux and have run into issues with the audio and camera connections. Although my computer can play audio through the speaker and the webcam works in the Windows Camera app, the camera selection is greyed out in the General Camera module and the audio module reports 'fail to connect'. How could I solve these problems so that I can start using the software?

Any other suggestions on ways to synchronise data, either through software or hardware, would be greatly appreciated. Thanks!
