gradient does not decrease


Xiaofu Wang

Apr 5, 2021, 12:20:00 PM

to Manopt

I am trying to reproduce the experiment from the attached paper using a product manifold of the complex circle and complex Euclidean manifolds. However, the gradient norm (gradnorm) reported by the solver does not decrease monotonically, even though the cost function decreases. This has confused me for several months.

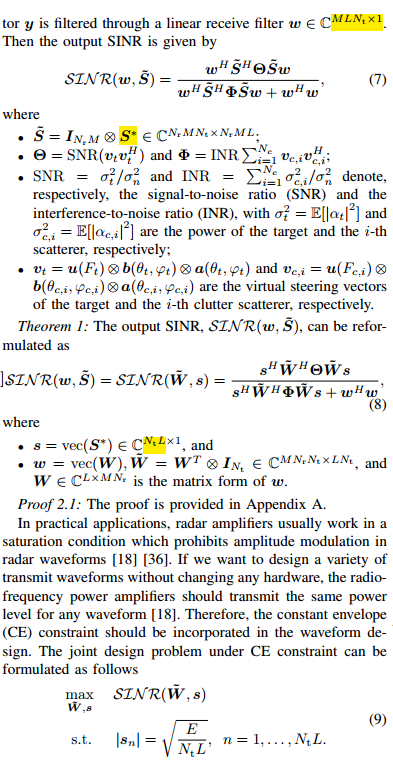

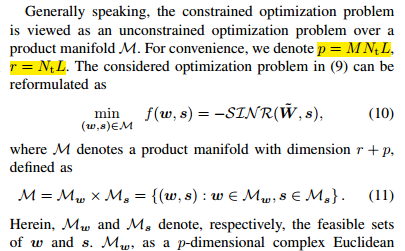

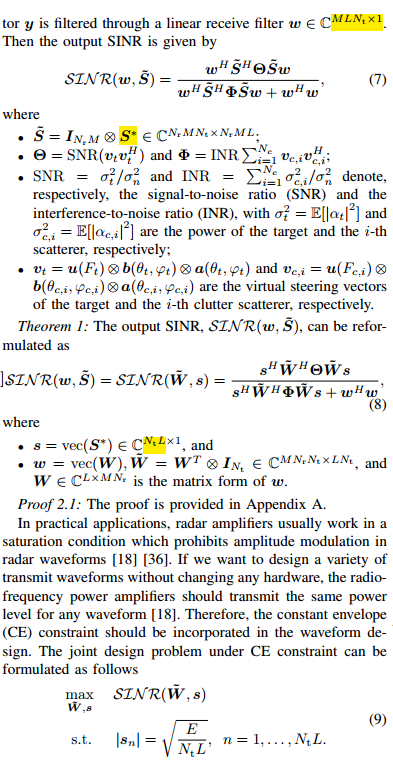

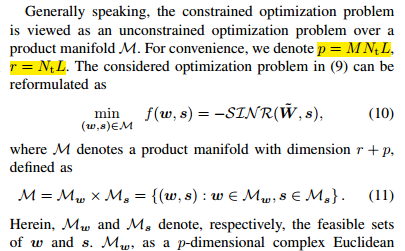

The problem is formulated as:

Could you please help me check my work? My MATLAB code and the paper are attached.

Thanks a lot.

function [X, Xcost, info] = STAPWaveformDesign()

%%

Nt = 4; Nr = 4; % transmit and receive antennas

M = 16; L = 16; % CPI pulses number; code length

Fr = 1000; F0 = 1e9; B = 1e6; % PRF; carrier frequency; bandwidth

H = 9000; Vp = 150; % height and velocity of the platform

Range = 12728; ThetaT = 0; % range and azimuth of the target

PhiT = asin(H/Range); Vt = 45; % elevation and speed of target

Nc = 361; % clutter patches

PhiC = PhiT; % elevation of clutter, equals to target

ThetaC = linspace(0,360,361)/180*pi; % azimuth of each clutter patch

SNR = 0; INR = 30; % signal-to-noise ratio, interference-to-noise ratio (dB)

%%

c = 3e8; lamda = c / F0; % speed of light, wavelength

dt = lamda * 2; dr = lamda / 2; % transmit and receive antenna spacing

ut = (0:(Nt-1)).'*dt; ur = (0:(Nr-1)).'*dr; % positions of transmit and receive antenna

% =========================== target===================

Ft = 2 * Vt / (lamda * Fr); % doppler of target

uFt = exp(1i*2*pi*(0:(M-1)).'*Ft); % temporal steer vector

at = exp(1i*(2*pi/lamda)*ut*cos(PhiT)*sin(ThetaT)); % transmit steer vector

bt = exp(1i*(2*pi/lamda)*ur*cos(PhiT)*sin(ThetaT)); % receiver steer vector

vt = kron(kron(uFt,bt),at); % virtual steer vector

THETA = 10^(SNR/10)*(vt*vt');

% ======================== interference ====================

temp_vc = zeros(M*Nr*Nt,M*Nr*Nt);

for ii = 1:Nc

Fc_i = 2*Vp*cos(PhiC)*sin(ThetaC(ii))/(lamda*Fr);

uFc_i = exp(1i*2*pi*(0:(M-1)).'*Fc_i); % temporal steer vector

ac_i = exp(1i*(2*pi/lamda)*ut*cos(PhiC)*sin(ThetaC(ii))); % transmit steer vector

bc_i = exp(1i*(2*pi/lamda)*ur*cos(PhiC)*sin(ThetaC(ii))); % receiver steer vector

vc_i = kron(kron(uFc_i, bc_i), ac_i); % virtual steer vector

temp_vc = temp_vc + vc_i*vc_i';

end

PHI = 10^(INR/10)*temp_vc;

% =======================================================================

r = Nt*L; % dimension of signal

p = M*Nr*L; % dimension of filter

tuple.w = euclideancomplexfactory(p,1); % complex Euclidean space (filter w)

tuple.s = complexcirclefactory(r,1); % complex circle product

Manifold = productmanifold(tuple); % product manifold

problem.M = Manifold; %

problem.cost = @cost; % cost function

problem.egrad = @grad; % gradient

options.maxiter = 100;

options.beta_type = 'P-R';

options.minstepsize = 1e-6;

options.linesearch = @linesearch;

% checkgradient(problem);

[X, Xcost, info] = conjugategradient(problem,[],options);

% [X, Xcost, info] = steepestdescent(problem,[],options);

figure(1)

subplot(1,2,1)

plot([info.gradnorm],'-*')

ylabel('norm of grad')

xlabel('iter num')

title('curve of gradient')

subplot(1,2,2)

plot(10*log10(abs(-[info.cost])))

ylabel('cost function')

xlabel('iter num')

title('curve of cost function')

%% ==================== functions =====================

function f = cost(X) % equation(8)

w = X.w; s = X.s;

W = reshape(w,[L,M*Nr]);

Wwave = kron(W.', eye(Nt)); % W~

f1 = s'*Wwave'*THETA*Wwave*s; %

f2 = s'*Wwave'*PHI*Wwave*s + w'*w; %

f = - real(f1 / f2);

end

function g = grad(X) % Euclidean gradient

w = X.w; s = X.s;

W = reshape(w,[L,M*Nr]);

Wwave = kron(W.', eye(Nt)); % W~

Sstar = reshape(s,[Nt, L]); % S*

Swave = kron(eye(Nr*M),Sstar); % S~

P = Swave'*THETA*Swave; Q = Swave'*PHI*Swave;

k = w'*Q*w + w'*w;

R = Wwave'*THETA*Wwave; U = Wwave'*PHI*Wwave;

belta = s'*U*s + w'*w;

egradw = -(2/k)*P*w + (2/k^2)*w'*P*w*(Q*w+w); % equation(22)

egrads = -(2/belta)*R*s + 2/(belta^2)*s'*R*s*(U*s); % equation(23)

egrad.w = egradw;

egrad.s = egrads;

g = egrad; % problem.egrad expects the Euclidean gradient; Manopt applies egrad2rgrad itself

end

end

Bamdev Mishra

Apr 6, 2021, 2:21:11 AM

to Xiaofu Wang, Manopt

Hello Xiaofu,

Thanks for the interest in Manopt.

Verifying someone's reimplementation of another author's work is painful, to say the least. Also, I am not sure whether the problem lies in how Manopt is used or in the implementation of the algorithm itself. Can you simplify the problem and ask a question that relates specifically to Manopt? That will help resolve the issue properly and quickly.

Regards,

Bamdev


Xiaofu Wang

Apr 6, 2021, 3:26:06 AM

to Manopt

Thanks very much for your reply.

I solved the problem above using Manopt with a product manifold. I am trying to minimize a cost function of two variables. However, the gradient norm does not decrease steadily while the cost function decreases. The gradients are computed correctly. I am wondering: does the gradient norm always decrease steadily?

Xiaofu.

Nicolas Boumal

Apr 6, 2021, 3:31:43 AM

to Manopt

Hello,

Indeed, while the cost function value decreases monotonically with most algorithms (steepest descent, conjugate gradients, trust-regions all enforce this to a large extent), the gradient norm may not decrease monotonically.
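A toy illustration of this point, unrelated to the STAP cost in the question: fixed-step gradient descent on the one-dimensional function f(x) = x^4/4 - x^2/2, started close to the stationary point x = 0. The function, starting point, and step size are assumptions chosen only to make the effect visible. The cost decreases at every iteration, while the gradient norm first grows (as the iterate leaves the flat region near x = 0) and only then shrinks as it approaches the minimizer x = 1.

```matlab
% Fixed-step gradient descent on f(x) = x^4/4 - x^2/2 (illustrative choice).
f  = @(x) x.^4/4 - x.^2/2;   % cost
df = @(x) x.^3 - x;          % derivative, i.e. the gradient in 1-D

x = 0.1;                     % start near the stationary point x = 0
step = 0.5;                  % fixed step size (assumed, small enough here)
niter = 20;

costs = zeros(1, niter+1);
gradnorms = zeros(1, niter+1);
for k = 1:niter+1
    costs(k) = f(x);             % record cost at the current iterate
    gradnorms(k) = abs(df(x));   % record gradient norm at the current iterate
    x = x - step*df(x);          % gradient descent update
end

% Print the first few iterations: cost goes down, gradient norm goes up first.
for k = 1:8
    fprintf('iter %2d   cost %+.6f   gradnorm %.6f\n', ...
            k-1, costs(k), gradnorms(k));
end
```

So a rising gradient norm over some iterations is not, by itself, a sign of a bug. What would normally be expected is that it becomes small eventually.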

However, we usually see the gradient norm decrease after a while (not necessarily monotonically, but "over all"). With your code, that seems not to be the case, which I admit is a bit strange. I had a quick look, and the checkgradient tests pass. Also, I thought perhaps the norm of X.w grows to infinity, but that's not the case (it stabilizes around 36 in my run). By the way, to check this I used:

options.statsfun = statsfunhelper('w_norm', @(X) norm(X.w, 'fro'));

plot([info.w_norm], '-*');
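For context, a minimal sketch of how such a statsfun hooks into a solver call. It assumes Manopt is on the MATLAB path and uses a hypothetical toy cost on the same kind of product manifold; the targets `a` and `b`, the sizes, and the cost itself are made up for illustration and are not the cost from the question.

```matlab
% Toy problem on (complex Euclidean) x (complex circle); requires Manopt.
p = 6; r = 4;                           % illustrative sizes
a = (1:p).' + 1i*(p:-1:1).';            % hypothetical target for w
b = exp(1i*(1:r).');                    % hypothetical unit-modulus target for s

tuple.w = euclideancomplexfactory(p, 1);
tuple.s = complexcirclefactory(r);
problem.M = productmanifold(tuple);

% Cost and Euclidean gradient (Manopt converts it to the Riemannian one).
problem.cost  = @(X) norm(X.w - a)^2 + norm(X.s - b)^2;
problem.egrad = @(X) struct('w', 2*(X.w - a), 's', 2*(X.s - b));

options.maxiter = 50;
options.verbosity = 0;
% Record the norm of the w component at every iteration.
options.statsfun = statsfunhelper('w_norm', @(X) norm(X.w, 'fro'));

[X, Xcost, info] = conjugategradient(problem, [], options);

% The recorded values appear as an extra field of the info struct array.
figure;
subplot(1,2,1); semilogy([info.gradnorm], '-*'); xlabel('iter num'); ylabel('norm of grad');
subplot(1,2,2); plot([info.w_norm], '-*');       xlabel('iter num'); ylabel('norm of w');
```

Plotting the gradient norm on a logarithmic axis, as in the left panel, tends to make an overall downward trend (or the lack of one) easier to see than a linear plot.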

Moreover, trustregions also doesn't find points with small gradient after a while.

So, I don't quite know what's going on here, but it seems that this cost function is challenging for some reason. Are we sure it is differentiable? Bounded below? Perhaps it's necessary to run many, many more iterations to see it converge?
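A quick numerical probe of those questions, on a small made-up instance with the same algebraic form as the posted cost (sizes and data are assumptions, not the STAP setup). If THETA and PHI are positive semidefinite, as they appear to be in the posted code, then the denominator is at least w'*w, so the quotient is smooth away from w = 0, and the cost lies between -lambda_max(THETA)*norm(s)^2 and 0. The cost is also unchanged when w is rescaled, since numerator and denominator are both quadratic in w.

```matlab
% Toy instance with the same structure as the cost in the question.
Nt = 2; L = 3; MNr = 4;                        % illustrative sizes (MNr = M*Nr)
n = MNr*Nt;                                    % size of THETA and PHI
v = exp(1i*(1:n).');
THETA = v*v';                                  % rank-one positive semidefinite
A = magic(n) + 1i*eye(n);
PHI = A*A';                                    % positive semidefinite
w = cos((1:L*MNr).') + 1i*sin(2*(1:L*MNr).');  % some nonzero filter vector
s = exp(1i*((1:Nt*L).').^2);                   % unit-modulus waveform entries

% y(w, s) = kron(W.', eye(Nt))*s, as in the posted cost function.
y = @(w, s) kron(reshape(w, [L, MNr]).', eye(Nt))*s;
cost = @(w, s) -real(y(w, s)'*THETA*y(w, s)) / ...
               (real(y(w, s)'*PHI*y(w, s)) + real(w'*w));

f_w  = cost(w, s);
f_5w = cost(5*w, s);                           % same point, filter rescaled by 5
lower_bound = -norm(v)^2 * Nt*L;               % -lambda_max(THETA) * norm(s)^2

fprintf('cost(w, s)  = %.6e\n', f_w);
fprintf('cost(5w, s) = %.6e\n', f_5w);
fprintf('lower bound = %.6e\n', lower_bound);
```

The scale invariance means the cost is flat along the direction of w. Whether that has anything to do with the gradient norm stalling in the original experiment is not established here.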

Best,

Nicolas

Xiaofu Wang

Apr 6, 2021, 3:54:36 AM

to Manopt

Dear Nicolas,

Thanks for your reply.

In the paper I want to reproduce, the experimental results show that the gradient norm decreases for the steepest descent, conjugate gradient, and trust-region methods. It's quite strange.

Best,

Xiaofu

Bamdev Mishra

Apr 6, 2021, 4:05:47 AM

to Xiaofu Wang, Manopt

It is strange indeed.

Have you tried contacting the authors? They probably have the codes already.

Regards,


Xiaofu Wang

Apr 6, 2021, 4:27:50 AM

to Manopt

Yes, I have contacted them, but they do not seem willing to release their code.

Best,

Xiaofu
