Re: [MAGIC-list] Choice of the reference model [was: AIXI limitations]

Laurent

For the record, my first email was:

Up to now, here are my main concerns with AIXI:

- Reference Turing machine
  - There is currently no best choice, but it seems intuitive that small TMs are better than any random TM.

- Reinforcement Learning
  - RL as a utility function is very convenient for simplicity, but prone to self-delusion. Knowledge-seeking is better, but it prefers true random number generators (not generated by itself). I'm currently thinking about a utility function that is like knowledge-seeking but, roughly, does not like things that are too complex, or more precisely, it doesn't like things that are predictably random.

- Incomputability

- Time dimension not taken into account. That is currently the most important problem. If we can solve that in an optimal way, we will have real AGI. I'm also working on things in this corner, but right now I'd prefer to keep my ideas to myself :)

- Things I don't understand yet...

Regarding limitations of AIXI, I'd like to second Laurent's point that the completely free choice of reference machine is a bit unsatisfying.

If I haven't missed anything, this means, among other things, that if we have two strings:

a = 111

b = 100110101010010111010101010101001111010101

we cannot objectively say that a is simpler than b, since according to some obscure languages (reference machines) b will actually be simpler than a.

Exactly.

But intuitively, a being simpler than b makes a lot more sense. Why?

In most programming languages, a would be far simpler than b, although both may turn out to be coded like "print 'aaa'"...

Now consider:

a = 111

b = 10101010

Which is simpler?

The question is more difficult, but it still seems plausible that a is simpler.

Even harder:

a = 111111...

b = 101010...

a still looks simpler, but if I had to bet my arm on it, I wouldn't risk it for less than ten million euros (not sure I would even risk it, my arm is quite useful).
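Kolmogorov complexity itself is incomputable, so the comparisons above cannot be run directly. As a rough illustration only, the sketch below assumes compressed length as a stand-in for description length and treats two Python standard-library compressors, zlib and lzma, as two different "reference machines". The helper names `compressed_len` and `random_bits` are hypothetical.

```python
import lzma
import random
import zlib


def compressed_len(data: bytes, method: str) -> int:
    """Compressed size in bytes under one of two stdlib compressors.

    Each compressor stands in for a (weak, non-universal) "reference
    machine"; the numbers only mean something relative to that choice.
    """
    if method == "zlib":
        return len(zlib.compress(data, 9))
    if method == "lzma":
        return len(lzma.compress(data))
    raise ValueError(f"unknown method: {method}")


def random_bits(n: int, seed: int = 0) -> bytes:
    """Seeded pseudo-random string of '0'/'1' characters."""
    rng = random.Random(seed)
    return "".join(rng.choice("01") for _ in range(n)).encode()


short_a = b"111"
short_b = b"100110101010010111010101010101001111010101"
long_ones = b"1" * 100_000   # finite stand-in for a = 111111...
long_alt = b"10" * 50_000    # finite stand-in for b = 101010...
long_rand = random_bits(100_000)

for name, s in [("short a", short_a), ("short b", short_b),
                ("111...", long_ones), ("1010...", long_alt),
                ("random", long_rand)]:
    print(f"{name:>8}: len={len(s):6d} "
          f"zlib={compressed_len(s, 'zlib'):6d} "
          f"lzma={compressed_len(s, 'lzma'):6d}")
```

For the short strings, each compressor's fixed format overhead is larger than the strings themselves, so whatever ranking comes out is dominated by the choice of "machine", which is the additive-constant problem in miniature. For the long periodic strings, both compressors shrink 111... and 1010... to a tiny fraction of their length, but nothing in this sketch says which of the two is objectively simpler.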

As Laurent already said, a shorter reference machine makes more sense intuitively. But appealing to intuition here is clearly not much better than motivating a cognitive architecture intuitively: it works in practice, but is mathematically unsatisfying. Also, can one really say that one reference machine is shorter/simpler than another? Doesn't that also depend on the language in which one describes the reference machines?

*If* we choose a "reference class of models" (e.g., Turing machines), then we can choose the smallest reference machine.

I thought about another option though, just a while back:

Instead (but still considering the TMs class), why not choose the reference UTM that orders TMs in the exact same order as the "natural" order, which is to grow TMs depending on the number of states, of transitions, and some lexicographical order?

(my first problem then was that there were too many TMs of the same complexity)
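To get a feel for how many machines share the same "size" in the natural order above, here is a sketch assuming one common convention (the one used for Busy Beaver counts): n ordinary states plus a separate halting state, k tape symbols, and head moves L/R only. Each of the n·k table entries picks a symbol to write, a move and a next state, giving (2k(n+1))^(nk) machines. The function names and the lexicographic tie-break are illustrative choices, not a canonical ordering.

```python
from itertools import product

MOVES = ("L", "R")
HALT = -1  # hypothetical marker for the extra halting state


def count_machines(n_states: int, n_symbols: int) -> int:
    """(2k(n+1))^(nk): each of the n*k table entries picks write, move, next state."""
    per_entry = n_symbols * len(MOVES) * (n_states + 1)
    return per_entry ** (n_states * n_symbols)


def enumerate_machines(n_states: int, n_symbols: int):
    """Yield every transition table of the given size in lexicographic order."""
    keys = [(q, s) for q in range(n_states) for s in range(n_symbols)]
    actions = list(product(range(n_symbols), MOVES, [*range(n_states), HALT]))
    for combo in product(actions, repeat=len(keys)):
        yield dict(zip(keys, combo))


def natural_order(max_states: int, n_symbols: int = 2):
    """Machines ordered by number of states first, then lexicographically."""
    for n in range(1, max_states + 1):
        yield from enumerate_machines(n, n_symbols)


for n in range(1, 4):
    print(n, "states:", count_machines(n, 2), "machines")
```

With 2 symbols this gives 64 one-state machines, 20,736 two-state machines and 16,777,216 three-state machines, so within each size class the ordering still has to come from some further, essentially arbitrary convention such as the lexicographic one used here.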

Now, there still also remains the problem of the reference class of models. Why should we go for TMs? Why not something else? How to decide?

TMs have a nice, very simple formulation given our human knowledge. In the end, does it all boil down to our own world? Should we choose the simplest formulation given the axioms of our world? Is that even possible?

This bothers me a lot. Does anyone know of any attempts to resolve this issue? It must at least have been attempted, I feel.

The idea was to simulate TMs on other TMs, and do that in a loop, to try to find some fixed point, or something along those lines, but that did not work.

Also, Hutter has some discussions on these matters in his 2005 book and in:

"A Philosophical Treatise of Universal Induction"

http://www.hutter1.net/official/bib.htm#uiphil

As a last resort, we can settle for a consensus on an "intuitive" best choice, if we can prove that finding the best model is not feasible. But if we can avoid that, I'd be happier.

Laurent

Tom Everitt

As Laurent already said, a shorter reference machine makes more sense intuitively. But appealing to intuition here is clearly not much better than motivating a cognitive architecture intuitively: it works in practice, but is mathematically unsatisfying. Also, can one really say that one reference machine is shorter/simpler than another? Doesn't that also depend on the language in which one describes the reference machines?

Yes absolutely.

*If* we choose a "reference class of models" (e.g., Turing machines), then we can choose the smallest reference machine.

I thought about another option though, just a while back:

Instead (but still considering the TMs class), why not choose the reference UTM that orders TMs in the exact same order as the "natural" order, which is to grow TMs depending on the number of states, of transitions, and some lexicographical order?

(my first problem then was that there were too many TMs of the same complexity)

Now, there still also remains the problem of the reference class of models. Why should we go for TMs? Why not something else? How to decide?

TMs have a nice, very simple formulation given our human knowledge. In the end, does it all boil down to our own world? Should we choose the simplest formulation given the axioms of our world? Is that even possible?

This is good news. I hadn't actually realized that the structure of the TM is objective, and that some structures are arguably simpler than others. The smallest UTM found seems to have 22 states, but it doesn't seem to be proven minimal. http://en.wikipedia.org/wiki/Universal_Turing_machine#Smallest_machines

Hutter pointed me to a failed attempt by Müller, IIRC. I don't have the exact reference on this computer.

This bothers me a lot. Does anyone know of any attempts to resolve this issue? It must at least have been attempted, I feel.

The idea was to simulate TMs on other TMs, and do that in a loop, to try to find some fixed point, or something along those lines, but that did not work.

This is probably the article you mean http://arxiv.org/abs/cs/0608095. It sounds interesting; I will definitely read it. Unfortunately, it seems that they conclude it is not really possible to find a simplest, universal computer:

"Moreover, we show that the reason for failure has a clear and interesting physical interpretation, suggesting that every other conceivable attempt to get rid of those additive constants must fail in principle, too."

Also, Hutter has some discussions on these matters in his 2005 book and in:

"A Philosophical Treatise of Universal Induction"

http://www.hutter1.net/official/bib.htm#uiphil

Looks interesting, too.

As a last resort, we can settle for a consensus on an "intuitive" best choice, if we can prove that finding the best model is not feasible. But if we can avoid that, I'd be happier.

But I'm definitely going to read Müller's argument that this whole question is "hopeless"; if he's convincing, I guess we'll have to resort to that intuitive best choice of yours.

Tom

Laurent

Now, there still also remains the problem of the reference class of models. Why should we go for TMs? Why not something else? How to decide?

TMs have a nice, very simple formulation given our human knowledge. In the end, does it all boil down to our own world? Should we choose the simplest formulation given the axioms of our world? Is that even possible?

This is good news. I hadn't actually realized that the structure of the TM is objective, and that some structures are arguably simpler than others. The smallest UTM found seems to have 22 states, but it doesn't seem to be proven minimal. http://en.wikipedia.org/wiki/Universal_Turing_machine#Smallest_machines

Wolfram's 2,3 TM is even smaller, but the computation model is a bit limited, it seems.

Hutter pointed me to a failed attempt by Müller, IIRC. I don't have the exact reference on this computer.

This bothers me a lot. Does anyone know of any attempts to resolve this issue? It must at least have been attempted, I feel.

The idea was to simulate TMs on other TMs, and do that in a loop, to try to find some fixed point, or something along those lines, but that did not work.

This is probably the article you mean http://arxiv.org/abs/cs/0608095. It sounds interesting; I will definitely read it.

This is exactly the one, I'm impressed you found it!

Unfortunately it seems that they conclude it is not really possible to find a simplest, universal computer:

"Moreover, we show that the reason for failure has a clear and interesting physical interpretation, suggesting that every other conceivable attempt to get rid of those additive constants must fail in principle, too."

I would not be so categorical myself, but who knows.

Yes, as I see it now, there are two "possible" paths to finding the ultimate reference machine. One is to try to order the UTMs "mathematically", but perhaps in a different way than Müller tried. The other, more philosophical approach would be to find some structure for "Turing-complete computing structures" in general, and apply a similar argument to the one you gave above on Turing machines.

As a last resort, we can settle for a consensus on an "intuitive" best choice, if we can prove that finding the best model is not feasible. But if we can avoid that, I'd be happier.

Yes. Actually, I'd very much like a computation model that has many symmetries. I'd call that the "spherical model of computation". Now that we have the name, we just need to define the model, that should be easy ;)

Laurent

Tom Everitt

Hehehe, I hope you're right :) Seriously though, I totally agree that the philosophical solution would be the nicest by far. The ultimate insight on what computation is... I wonder if it's possible. I'll let you know if I get anywhere near an idea on what that would look like, and I don't think I need to tell you that I'm all ears if you come up with something :)

Laurent

Hehehe, I hope you're right :) Seriously though, I totally agree that the philosophical solution would be the nicest by far. The ultimate insight on what computation is... I wonder if it's possible.

Maybe the smallest possible Turing-complete (save for infinite memory) computers in our universe?

The problem is that they don't look so "simple"... But maybe that's because of our biased macro-view human perspective.

Laurent

Abram Demski

I think we can say that, in principle, the best UTM would be the one which best predicts the environment of the agent. In other words, the UTM with the most built-in knowledge. The update process is a descent towards minimal error; in other words, inductive learning can be seen as a search for a better prior. The posterior of a Bayesian update can always be treated as a new prior for the next Bayesian update.

The update to a universal distribution remains universal, so we can even regard the result as a new universal Turing machine, although it is not explicitly represented as such.
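The point that a posterior can serve as the next prior can be checked exactly on a small example. The sketch below swaps the (incomputable) universal mixture for a hypothetical finite class of Bernoulli sources with a hand-picked prior; only the Bayesian bookkeeping is meant to carry over.

```python
from fractions import Fraction

# Hypothetical finite model class: i.i.d. Bernoulli(theta) sources.
# The fair coin gets half of the prior mass; the others share the rest.
PRIOR = {
    Fraction(1, 2): Fraction(1, 2),
    Fraction(1, 4): Fraction(1, 8),
    Fraction(3, 4): Fraction(1, 8),
    Fraction(1, 8): Fraction(1, 8),
    Fraction(7, 8): Fraction(1, 8),
}


def likelihood(theta: Fraction, bits: str) -> Fraction:
    """Probability that Bernoulli(theta) emits exactly this bit string."""
    ones = bits.count("1")
    zeros = len(bits) - ones
    return theta ** ones * (1 - theta) ** zeros


def update(prior: dict, bits: str) -> dict:
    """Bayes' rule over the finite class; the posterior is again a prior."""
    joint = {theta: w * likelihood(theta, bits) for theta, w in prior.items()}
    total = sum(joint.values())
    return {theta: j / total for theta, j in joint.items()}


def prob_next_one(belief: dict) -> Fraction:
    """Mixture probability that the next bit is 1."""
    return sum(w * theta for theta, w in belief.items())


# Two updates, feeding the first posterior back in as the new prior...
stepwise = update(update(PRIOR, "1101"), "111")
# ...versus a single update on all of the data at once.
batch = update(PRIOR, "1101111")
print(stepwise == batch, prob_next_one(PRIOR), float(prob_next_one(batch)))
```

Because Bernoulli likelihoods multiply, updating on "1101" and then on "111" gives exactly the same weights as updating once on "1101111", and no model with non-zero likelihood is ever thrown away, only down-weighted.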

So, I strongly sympathize with the idea that there's no single best prior. However, that doesn't mean we can't find nice desirable properties in the directions you guys are discussing. The equivalence class "Turing Complete" is a very weak one, which doesn't require very many properties to be preserved. Different models of computation can change the complexity classes of specific problems, for example.

One property I mentioned to Tom is the ratio of always-meaningful programs (total functions) to sometimes-meaningless ones (partial functions). I.e., we ask how easy it is to get into an infinite loop which produces no output. This number has been called "Omega".
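A crude, finite analogue of this number can be computed by brute force. The sketch below assumes a busy-beaver-style machine format (an extra halting state, moves L/R) and simply counts which 1-state, 2-symbol machines halt on a blank tape within a step budget. Chaitin's Omega proper weights prefix-free programs by 2^-|p| rather than averaging uniformly over a fixed class, so this is only an illustration; `run` and `halting_fraction` are hypothetical names.

```python
from itertools import product

HALT = -1  # hypothetical marker for the extra halting state


def run(machine: dict, max_steps: int) -> bool:
    """Simulate on an all-0 tape; return True if it halts within max_steps."""
    tape = {}
    head, state = 0, 0
    for _ in range(max_steps):
        write, move, nxt = machine[(state, tape.get(head, 0))]
        tape[head] = write
        head += 1 if move == "R" else -1
        if nxt == HALT:
            return True
        state = nxt
    return False


def halting_fraction(n_states: int, max_steps: int) -> float:
    """Fraction of all n-state, 2-symbol machines that halt within max_steps."""
    keys = [(q, s) for q in range(n_states) for s in (0, 1)]
    actions = list(product((0, 1), ("L", "R"), [*range(n_states), HALT]))
    halted = total = 0
    for combo in product(actions, repeat=len(keys)):
        total += 1
        halted += run(dict(zip(keys, combo)), max_steps)
    return halted / total


print(halting_fraction(1, max_steps=100))
```

With one state, a machine either halts on its very first step or walks off forever over blank cells, so exactly half of the 64 machines halt. For larger classes the step budget only gives a lower bound on the halting fraction, since a machine still running at the budget might halt later; that gap is where the incomputability of Omega shows up.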

It's not possible to have a language which is always-meaningful while being Turing complete, so we can work at this from "both directions"... augmenting always-meaningful languages to increase expressiveness, and partially restricting Turing-complete languages to be more meaningful. However, there is no perfect middle point; we can only get closer from each direction.

--Abram

--

Abram Demski

http://lo-tho.blogspot.com/

http://groups.google.com/group/one-logic
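Abram's "always-meaningful" side can be illustrated with a LOOP-style language (in the spirit of Meyer and Ritchie's LOOP programs): a loop's iteration count is fixed when the loop is entered, so every program halts, and the language computes exactly the primitive recursive functions, hence it is not Turing complete. The tuple-based instruction encoding below is a hypothetical choice for this sketch.

```python
def run_loop(program: list, registers: dict) -> dict:
    """Run a LOOP-style program on a copy of `registers` and return it."""
    regs = dict(registers)
    _execute(program, regs)
    return regs


def _execute(program: list, regs: dict) -> None:
    for instr in program:
        op = instr[0]
        if op == "inc":                      # ("inc", r): r := r + 1
            regs[instr[1]] = regs.get(instr[1], 0) + 1
        elif op == "clr":                    # ("clr", r): r := 0
            regs[instr[1]] = 0
        elif op == "copy":                   # ("copy", dst, src): dst := src
            regs[instr[1]] = regs.get(instr[2], 0)
        elif op == "loop":                   # ("loop", r, body)
            # The iteration count is read once, before the body runs, so
            # the body cannot extend its own loop: every program halts.
            count = regs.get(instr[1], 0)
            for _ in range(count):
                _execute(instr[2], regs)
        else:
            raise ValueError(f"unknown instruction: {op!r}")


ADD = [("copy", "z", "x"), ("loop", "y", [("inc", "z")])]
MUL = [("clr", "z"), ("loop", "x", [("loop", "y", [("inc", "z")])])]
# Tries to run "forever" by growing its own counter, but still halts.
SELF_EXTEND = [("loop", "x", [("inc", "x")])]

print(run_loop(ADD, {"x": 3, "y": 4})["z"],
      run_loop(MUL, {"x": 3, "y": 4})["z"],
      run_loop(SELF_EXTEND, {"x": 3})["x"])
```

Allowing a while-style loop whose condition is re-checked on every iteration restores Turing completeness, and with it the possibility of never halting, which is the trade-off described above.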

Laurent

FYI, Tim Tyler is also interested in this question.

He's just been invited.

I think we can say that, in principle, the best UTM would be the one which best predicts the environment of the agent. In other words, the UTM with the most built-in knowledge. The update process is a descent towards minimal error; in other words, inductive learning can be seen as a search for a better prior. The posterior of a Bayesian update can always be treated as a new prior for the next Bayesian update.

The update to a universal distribution remains universal, so we can even regard the result as a new universal Turing machine, although it is not explicitly represented as such.

You're right that if we go down the road of quantum physics, we should simply put in as much knowledge as possible, to tailor the agent as much as possible toward the real world.

Let's assume for a moment that we don't have knowledge about the real world.

The question of the ultimate prior is then: Is there a UTM that is better than any other?

I.e.: Is there a universal notion of simplicity?

This looks somewhat equivalent to: "Is the definition of Turing machines simple because they are tailored to our own world, or is there an elegant mathematical truth behind that?"

Laurent

Tim Tyler

I also think it is not a huge deal. The "just use FORTRAN-77" approach is reasonable. If you want to give your machine a head start in the real world, you tell it all the things you know, e.g. audio/video codecs.

--

__________
|im |yler http://timtyler.org/ t...@tt1lock.org Remove lock to reply.

Tom Everitt

Hi, yes - I do have a page about the issue: http://matchingpennies.com/the_one_true_razor/

I also think it is not a huge deal. The "just use FORTRAN-77" approach is reasonable. If you want to give your machine a head start in the real world, you tell it all the things you know, e.g. audio/video codecs.

If we have

a = 101,

b = 101101001000001010100101111111111010

do you not share the intuition that a is, in some sense, objectively simpler than b?

Another thing is that priors do matter, since your first prior will always affect your later posteriors... And choosing it so that it fits the world well is not much of a solution either, since we want a general solution, no? But I do agree with Abram's point that it makes sense to look for relatively "meaningful" languages.

Maybe the smallest possible Turing-complete (save for infinite memory) computers in our universe?

The problem is that they don't look so "simple"... But maybe that's because of our biased macro-view human perspective.

Tim Tyler

> So a question to you "no one true razor/prior" proponents:
>
> If we have
>
> a = 101,
> b = 101101001000001010100101111111111010
>
> do you not share the intuition that a is, in some sense, objectively simpler than b?

If a = 01010010101010010011010110101101011

...and...

b = 101101001000001010100101111111111010

...then that intuition is not so clear.

Tom Everitt

If the choice of reference machine can be made completely arbitrarily, then under some choices a will be simpler than b, and under other choices b will be simpler than a, no matter what a and b happen to be (as long as they are distinct finite strings).

So saying that there is no "right" choice of reference machine (or class of reference machines) must imply that a = 1 is not (cannot be!) objectively simpler than b = 10101100111111100100100000110101.

Do you see my point?
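The argument can be written with the standard invariance theorem. This is a sketch for plain complexity C, allowing the empty program ε; the tailored machine V_b is hypothetical notation introduced here.

```latex
% Invariance theorem (plain complexity C): for universal U, V there is a
% constant c_{U,V}, independent of x, such that
C_U(x) \le C_V(x) + c_{U,V} \qquad \text{for all strings } x.

% Given distinct finite strings a \ne b, build a tailored machine V_b
% from a universal U:
V_b(\epsilon) = b, \qquad V_b(0p) = U(p).

% V_b is still universal, since C_{V_b}(x) \le C_U(x) + 1, and yet
C_{V_b}(b) = 0 \;<\; 1 \le C_{V_b}(a).
```

Swapping the roles of a and b gives another universal machine that ranks them the other way; invariance only bounds how far such rankings can differ by a constant c_{U,V}, it does not fix the ranking of any particular finite pair.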

Wén Shào


Tom Everitt

They start out promising:

"The complexity C(x) is invariant only up to a constant depending on the reference function phi_0. Thus, one may object, for every string x there is an additively optimal recursive function psi_0 such that C_psi_0(x) = 0 [psi_0 assigns complexity 0 to x]. So how can one claim that C(x) is an objective notion?"

They then set about giving a "mathematically clean solution" by defining some equivalence classes, claiming these equivalence classes had a single smallest element. This is all well and good, but the problem is that, according to their definition of equivalence classes, all universal Turing machines end up in the smallest equivalence class. And, clearly, for every string x there is a universal Turing machine assigning complexity 0 to x. (Just take any universal Turing machine, and redefine it so it prints x on no input.)

So in my opinion their argument is not making C(x) any more objective.
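The redefinition trick can be played out on a toy. The sketch assumes a hypothetical, deliberately tiny (and not universal) reference machine U0 with two program forms, literal and repeat, for which shortest descriptions can be computed exactly, and then builds the tailored machine that prints a chosen string x* on the empty program. The strings are Tom's a = 101 and b = 1011...010 from earlier in the thread.

```python
def run_u0(prog: str):
    """Toy reference machine U0 (hypothetical, not Turing-complete).

    "L<s>"      outputs the literal string s
    "R<n>,<s>"  outputs s repeated n times (n written in decimal)
    anything else, including the empty program, gives no output (None)
    """
    if prog.startswith("L"):
        return prog[1:]
    if prog.startswith("R") and "," in prog:
        count, pattern = prog[1:].split(",", 1)
        if count and all(c in "0123456789" for c in count):
            return pattern * int(count)
    return None


def shortest_u0(x: str) -> int:
    """Exact length of the shortest U0 program printing a non-empty x."""
    best = 1 + len(x)                          # "L" + x
    for d in range(1, len(x) + 1):             # "R<n>,<pattern>", |pattern| = d
        n, rem = divmod(len(x), d)
        if rem == 0 and x[:d] * n == x:
            best = min(best, 1 + len(str(n)) + 1 + d)
    return best


def make_tailored(x_star: str):
    """U_{x*}: prints x* on the empty program, otherwise behaves like U0."""
    def run(prog: str):
        return x_star if prog == "" else run_u0(prog)
    return run


def shortest_tailored(x: str, x_star: str) -> int:
    """Shortest program length for x on U_{x*} (exact, by construction)."""
    return 0 if x == x_star else shortest_u0(x)


a = "101"
b = "101101001000001010100101111111111010"
print(shortest_u0(a), shortest_u0(b))                    # U0 ranks a first
print(shortest_tailored(a, b), shortest_tailored(b, b))  # U_b ranks b first
```

U0 prefers a, while U_b, which differs from U0 only on the empty program, prefers b. With a genuine universal machine the same redefinition changes every other complexity by at most a constant, which is consistent with Tom's point that the equivalence-class construction does not rule it out.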

Any thoughts?

PS. If anyone wants to see the argument for themselves but doesn't have the book at hand, I can just scan the page (it's only one page, so it's no trouble). Just let me know.

Wén Shào

--

Before posting, please read this: https://groups.google.com/forum/#!topic/magic-list/_nC7PGmCAE4

To post to this group, send email to magic...@googlegroups.com

To unsubscribe from this group, send email to

magic-list+...@googlegroups.com

For more options, visit this group at

http://groups.google.com/group/magic-list?hl=en?hl=en

Laurent

It's strange this solution is rarely quoted, though I suppose Marcus (for example) is well aware of it?

I'll need to have a closer look sometime soon.

Laurent

Wén Shào


Laurent

Hi Tom and Laurent,

I agree with Tom; their solution is not quite satisfying. I believe Marcus is well aware of this, and I personally don't think Marcus accepts their argument either, since whenever he mentions (has to mention) this issue in his papers, he uses a "universally agreed-upon enumeration" to "get rid of" the arbitrariness.

Thanks Wén, good to know.

As far as I know, there isn't a completely satisfying solution yet (I don't believe any of my colleagues knows one). Nevertheless, the arbitrariness in choosing the universal Turing machine only matters when we examine the complexity of a particular object, which we seldom do.

The reference UTM is also of importance when considering small strings, which we often do.

One could, however, argue that our experience in the world (genetic and cultural) has made "our reference machine" less arbitrary, and that it would go similarly for an AGI more than 20 years old.

Laurent

Sun Yi

Hello Guys,

I just finished reading Shane’s paper on the negative results on making Solomonoff induction practical, and a question popped up:

What is the precise linkage between the theory of Solomonoff induction and the reality?

Take probability theory for example: the only linkage between the theory of probability and reality is ‘the fundamental interpretative hypothesis’, which states ‘that events with zero or low probability are unlikely to occur’ (Shafer and Vovk, ‘Probability and finance: It’s only a game’, p. 5). This makes clear that conclusions drawn from probabilistic inference about reality make sense only if we accept this hypothesis, and, as an indirect consequence, it provides a theoretical basis for the generalization results in the statistical learning context. (E.g., A is better than B on a set of i.i.d. examples => A is likely to be better than B on all cases, otherwise something with small probability will happen.)
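One standard way the "low-probability events do not happen" hypothesis becomes a generalization statement in the i.i.d. case is Hoeffding's inequality. The sketch assumes losses in [0, 1], so the per-example difference between B's and A's losses lies in [-1, 1]; the function names are hypothetical.

```python
import math


def hoeffding_bound(n: int, t: float) -> float:
    """Hoeffding bound P(mean(d) - E[d] >= t) <= exp(-n t^2 / 2).

    d_i = loss_B(i) - loss_A(i) is the per-example loss difference of two
    predictors on i.i.d. examples. With losses in [0, 1], each d_i lies in
    [-1, 1], so the general exp(-2 n t^2 / (hi - lo)^2) becomes exp(-n t^2 / 2).
    """
    return math.exp(-n * t * t / 2)


def examples_needed(t: float, delta: float) -> int:
    """An n large enough that hoeffding_bound(n, t) <= delta."""
    return math.ceil(2 * math.log(1 / delta) / (t * t))


n = examples_needed(0.1, 0.01)
print(n, hoeffding_bound(n, 0.1))
```

Read this way: if A's average loss beats B's by at least t on n i.i.d. examples, then either A really is better in expectation or an event of probability at most exp(-n t^2 / 2) has occurred. For t = 0.1 and delta = 0.01 this asks for 922 examples. Nothing comparable follows for a single, non-i.i.d. sequence, which is the situation discussed in the next paragraph.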

When looking at the theory of Solomonoff induction (or its RL extension AIXI), I found that such a clearly stated linkage is somehow missing. Shane’s negative results show that we cannot say much mathematically about the performance of a complex predictor. However, in the original paper, it is suggested that the creation of AGI would be more or less an experimental science. But I also found this point problematic. One of the reasons is that almost every experimental study requires the comparison between two agents A and B. And if A and B are complex algorithmic objects, and if we adopt Shane’s definition that a predictor is universal if it ‘eventually’ correctly predicts a sequence, then there is practically no way to draw any conclusion by looking at the performance of the agents on finite data. As a result, to make an experimental study useful, we have to answer precisely the following question:

What is the fundamental hypothesis we must accept to say that ‘Predictor A is better than B on the given set of finite strings => A is likely to be better than B on all sequences’?

In other words, we must state very clearly under what hypothesis we should believe that the experimental result will generalize, before we start to do any experiment. I don’t have an answer to this problem, yet I think the answer is necessary if we want to make the universal theory practically relevant.

Regards,

Sun Yi
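Sun Yi's concern can be made concrete with two hypothetical predictors on the periodic sequence 0101...: A is wrong on its first 100 predictions and perfect afterwards, so it is "eventually correct"; B is perfect for 1000 steps and wrong forever after, so it is not. All names and thresholds are illustrative.

```python
def alternating(history: str) -> str:
    """Perfect predictor for the hypothetical target sequence 0101..."""
    return "0" if len(history) % 2 == 0 else "1"


def flip(bit: str) -> str:
    return "1" if bit == "0" else "0"


def late_starter(threshold: int):
    """Wrong on every step before `threshold`, then perfect forever."""
    def predict(history: str) -> str:
        guess = alternating(history)
        return flip(guess) if len(history) < threshold else guess
    return predict


def early_quitter(threshold: int):
    """Perfect before `threshold`, then wrong forever (never eventually correct)."""
    def predict(history: str) -> str:
        guess = alternating(history)
        return guess if len(history) < threshold else flip(guess)
    return predict


def errors(predict, sequence: str) -> int:
    """Number of wrong next-bit predictions along `sequence`."""
    return sum(predict(sequence[:t]) != sequence[t] for t in range(len(sequence)))


A = late_starter(100)      # eventually correct: 100 errors in total
B = early_quitter(1000)    # not eventually correct: infinitely many errors
short_test = "01" * 500    # 1000 bits
long_test = "01" * 2500    # 5000 bits
print(errors(A, short_test), errors(B, short_test))
print(errors(A, long_test), errors(B, long_test))
```

On a 1000-bit test B looks strictly better (0 errors against 100); on a 5000-bit test the ranking flips (100 against 4000). Since both thresholds can be pushed arbitrarily far, no fixed test length settles the comparison without some additional hypothesis about the sequences.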

--

Laurent

That's a very interesting question you raise.

Hello Guys,

I just finished reading Shane’s paper on the negative results on making Solomonoff induction practical,

Btw, note the negative result holds for agents too, not only for prediction:

http://www.hutter1.net/official/bib.htm#asyoptag

(And I will discuss Shane's results in another thread.)

and a question popped up:

What is the precise linkage between the theory of Solomonoff induction and the reality?

You may also want to read "A philosophical treatise of Universal induction":

http://www.hutter1.net/official/bib.htm#uiphil

It does not make a direct, irrefutable link with the real world, but it shows how Solomonoff's Induction solves some long-standing philosophical problems about induction, like the ravens paradox.

Take probability theory for example: the only linkage between the theory of probability and reality is ‘the fundamental interpretative hypothesis’, which states ‘that events with zero or low probability are unlikely to occur’ (Shafer and Vovk, ‘Probability and finance: It’s only a game’, p. 5). This makes clear that conclusions drawn from probabilistic inference about reality make sense only if we accept this hypothesis, and, as an indirect consequence, it provides a theoretical basis for the generalization results in the statistical learning context. (E.g., A is better than B on a set of i.i.d. examples => A is likely to be better than B on all cases, otherwise something with small probability will happen.)

If you go this far, you can also question the validity of mathematical axioms, and Leo Pape would probably love to discuss that with you ;)

However, regarding probability theory, I thought that (IIRC) Cox's axioms were pretty solid, i.e. don't they (Cox, Jaynes and others; see Jaynes 2003) show that probability theory is properly entailed (within some parameter set) by desirable principles, and that if those principles are not followed, we can get into trouble?

When looking at the theory of Solomonoff induction (or its RL extension AIXI), I found that such a clearly stated linkage is somehow missing. Shane’s negative results show that we cannot say much mathematically about the performance of a complex predictor. However, in the original paper, it is suggested that the creation of AGI would be more or less an experimental science.

(Shane, if you're reading, is that the reason why you went to neuroscience?)

Personally, I don't think there is a need to consider AGI only as an experimental science.

There are still many questions to answer. Maybe performance is not the right criterion, or maybe it is but we need a different framework where we have different properties.

It is also clear that computable agents will perform much worse than AIXI in the real world (if it makes sense to consider AIXI in the real world).

Legg's results are a start, but I believe this is far from the end of the story. We need more results, in different situations, etc.

But I also found this point problematic. One of the reasons is that almost every experimental study requires the comparison between two agents A and B. And if A and B are complex algorithmic objects, and if we adopt Shane’s definition that a predictor is universal if it ‘eventually’ correctly predicts a sequence, then there is practically no way to draw any conclusion by looking at the performance of the agents on finite data. As a result, to make an experimental study useful, we have to answer precisely the following question:

What is the fundamental hypothesis we must accept to say that ‘Predictor A is better than B on the given set of finite strings => A is likely to be better than B on all sequences’?

Why couldn't we compare agents theoretically?

Also, infinite sequences are of interest, even if we end up saying, for example, that some agent cannot learn any infinite sequence for some reason.

So an agent can perform very poorly on some sequences/strings, yet perform well on some others.

A computable agent cannot perform well against some kinds of adversarial environments? Well, maybe these kinds of environments are too specific (maybe we can measure this specificity with the mutual information of the agent and the environment: if they are too much alike, the agent might perform poorly), but the agent can still perform very well in highly complex environments that are not adversarial.
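The kind of adversarial environment meant here can be built by the standard diagonal construction: run the predictor on the history so far and emit the opposite bit. The sketch assumes a deterministic predictor that returns "0" or "1"; `majority` and `repeat_last` are hypothetical example predictors.

```python
def adversarial_sequence(predict, length: int) -> str:
    """Build the sequence bit by bit, always emitting the bit `predict` does not expect."""
    seq = ""
    for _ in range(length):
        seq += "1" if predict(seq) == "0" else "0"
    return seq


def errors(predict, sequence: str) -> int:
    """Number of wrong next-bit predictions along `sequence`."""
    return sum(predict(sequence[:t]) != sequence[t] for t in range(len(sequence)))


def majority(history: str) -> str:
    """Example predictor: guess the more frequent bit seen so far."""
    return "1" if history.count("1") > history.count("0") else "0"


def repeat_last(history: str) -> str:
    """Example predictor: guess that the last bit repeats."""
    return history[-1] if history else "0"


for p in (majority, repeat_last):
    s = adversarial_sequence(p, 200)
    print(p.__name__, errors(p, s), "errors out of", len(s))
```

The sequence is computable whenever the predictor is, and the predictor is wrong at every step. Note how the environment is literally built out of the agent, which fits the intuition that such environments share a great deal of information with the agent.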

In other words, we must state very clearly under what hypothesis we should believe that the experimental result will generalize, before we start to do any experiment. I don’t have an answer to this problem, yet I think the answer is necessary if we want to make the universal theory practically relevant.

Peter Sunehag is trying to axiomatize rational RL: http://www.hutter1.net/official/bib.htm#aixiaxiom

That's a nice start.

What would be marvelous is a set of hypotheses that entails the AGI theory (potentially based on Solomonoff Induction), along with proofs that outside these boundaries, some good properties cannot hold.

One such result is Hutter's "only agents based on Bayes mixtures can be Pareto optimal":

Hutter's 2005 book, and, I think, http://www.hutter1.net/ai/optisp.htm

Laurent

Rohrer, Brandon R

Abram Demski


Abram Demski

Laurent

> It is also clear that computable agents will perform much worse than AIXI in the real world (if it makes sense to consider AIXI in the real world).

I'll try to contradict it, and you can tell me if I'm wrong.

My understanding is that AIXI is provably optimal (given that we can agree on the Turing machine used to express plans, accept the specific formulation of the Solomonoff predictor as optimal, and agree on a horizon) across all possible RL problems. But the real world, by which I assume you mean the physical world, will not present a uniform sampling of all possible RL problems to an agent. In fact, it is quite possible that the real world will only present an infinitesimal subset of all possible RL problems to the agent. In this case, an agent may be able to far outperform AIXI on physical-world RL tasks, yet still be considered "general" in the sense that it can do anything that we ourselves are likely to do or need done.

Your argument has some appeal.

So, yes, in a sense, we might do better than AIXI in our real world, that's right.

If you consider one single task, like adding 32-bit numbers, then certainly we can do better than AIXI, since we might even be able to prove that we have the best algorithm. But that is limited to very simple tasks, and the world is much more complex.

And:

- Today, I tend to think that our world often exposes us to (some) simple "sub-environments" (all goes as expected, everything is predictable), and seldom to more complex sub-environments. This distribution reflects Occam's razor, except that instead of considering a diversity of environments, we consider one single environment composed of multiple sub-environments. You seem to be referring to that too, if I'm not mistaken.

- Our real world looks Turing-complete (save for some huuuge memory bound), since we can create computers. Thus virtually all environments can be expressed in this framework, except maybe the veeery complex ones, but note that the prior of these environments is small.

So it's probably not infinitesimal.

Your argument might be better in a simpler environment than the real world.

- AIXI does not need the world to be a composition of environments. It works great in any environment. For Solomonoff Induction, a computable agent can only save a finite number of prediction mistakes in any environment.

(This is not completely true for AIXI, see http://www.springerlink.com/content/p2780778k054411x/ and http://www.hutter1.net/official/bib.htm#asyoptag but that problem may arise in computable agents as well, as it is a problem of exploration, not of exploitation)

I.e., even if you choose the environment, and the best agent for that environment, Solomonoff Induction will learn to predict like that agent with fewer errors than the size of the smallest program that computes the environment. (For the real world, "all we need" for the smallest program are the laws of physics and the initial state at the Big Bang, for example. Much less is required in fact, as many things do not directly depend on distant stars.)

- AIXI discards all environments that are not consistent with its experience. So it (probably) suffices for AIXI to throw one single die to "infer" many things about classical mechanics, and maybe quantum dynamics and Newton's laws of gravitation. With a few more throws it could generalize the laws very quickly. It does not need to observe the sand and the stars to infer that the world might be composed of particles (or that it leads to a good model). Learning is amazingly fast.

The knowledge it gains about the world grows so quickly that even if you add some additional initial knowledge to a computable agent, AIXI should catch up in no time.

- last but not least, I was considering *real-time* real-world agents, those which can only do a very limited number of computations per interaction step, whereas AIXI can do an infinite number of computations in the same time. For such real agents, learning can only be extremely slow, since this requires time.
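In the deterministic case, the "discard inconsistent environments" behaviour and a mistake bound tied to program length can be sketched with a Bayes mixture over a hypothetical class of periodic bit patterns, which stand in for programs, each with prior weight 4^-length. This is a halving / weighted-majority argument, not Solomonoff induction itself: every mistake means at least half of the surviving weight voted wrong and is removed, while the true pattern's weight 4^-l is never removed, so the number of mistakes stays below 2l.

```python
from fractions import Fraction
from itertools import product


def periodic_hypotheses(max_len: int) -> dict:
    """Every bit pattern of length 1..max_len, weighted 4**(-length).

    There are 2**l patterns of length l, so the total weight is
    sum_l 2**(-l) < 1: longer "programs" get exponentially less prior mass.
    """
    return {
        "".join(bits): Fraction(1, 4 ** length)
        for length in range(1, max_len + 1)
        for bits in product("01", repeat=length)
    }


def predict_next(weights: dict, t: int) -> str:
    """Weighted-majority vote of the surviving hypotheses for bit t."""
    ones = sum(w for p, w in weights.items() if p[t % len(p)] == "1")
    zeros = sum(w for p, w in weights.items() if p[t % len(p)] == "0")
    return "1" if ones > zeros else "0"


def count_mistakes(sequence: str, max_len: int) -> int:
    weights = periodic_hypotheses(max_len)
    mistakes = 0
    for t, bit in enumerate(sequence):
        if predict_next(weights, t) != bit:
            mistakes += 1
        # Deterministic hypotheses: discard every one that contradicts the data.
        weights = {p: w for p, w in weights.items() if p[t % len(p)] == bit}
    return mistakes


true_pattern = "011"
data = true_pattern * 100
print(count_mistakes(data, max_len=6), "mistakes; bound:", 2 * len(true_pattern))
```

Because every hypothesis here is deterministic, one contradicting bit removes it outright; a class that also contained noisy (stochastic) models would only down-weight those.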

So I still think that AIXI would be vastly more intelligent in the real world than the real agents we will be able to create.

Is this an accurate answer?

Laurent

Sun Yi

Hello,

My name is SUN Yi; Yi is the first name :)

From my point of view:

- There is no problem with the linkage between ideal Solomonoff induction and reality, except that it is not computable :)

If we make the assumption that the environment is computable (which is a religious thing, since we cannot mathematically reason about the computability of the world in which we live; accept it or not...), then we can make precise predictions with bounded regret.

- My problem is with the computable agent. Shane’s result shows that even if we make the assumption that the world is simple in the Kolmogorov sense, we still cannot guarantee the performance of the agent. Moreover, beyond certain point we cannot even mathematically reason about the computable agent.

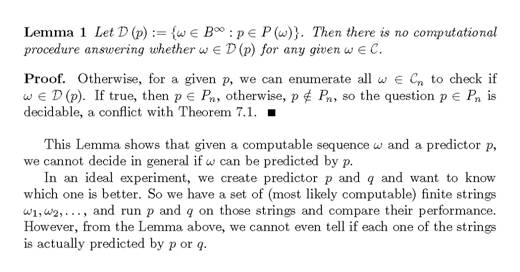

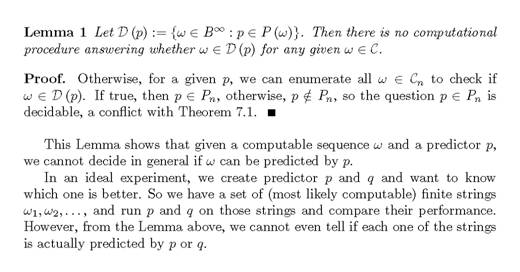

- As for the comparison of two agents, I have the intuition that it is not decidable (no proof yet). Anyway, I wrote the following Lemma, which basically restates Shane’s result. However, it shows that deciding whether a sequence is predicted by a given predictor is very hard.

Regards,

Sun Yi

From: magic...@googlegroups.com [mailto:magic...@googlegroups.com] On Behalf Of Laurent

Sent: Tuesday, August 23, 2011 21:51

To: magic...@googlegroups.com

--

Rohrer, Brandon R


Abram Demski

Laurent,

Thanks for your response. I've added a couple of notes below.

____________________

- Our real world looks Turing-complete (save for some huuuge memory bound), since we can create computers. Thus virtually all environments can be expressed in this framework, except the veeery complex ones maybe, but note that the prior of these environments is small. So it's probably not infinitesimal.

Your argument might be better in a simpler environment than the real world

>>True. If you meant to include the computers we create when you cited the "real world" then my comment isn't relevant.

- AIXI discards all environments that are not consistent with its experience. So it (probably) suffices for AIXI to throw one single die to "infer" many things about classical mechanics, and maybe quantum dynamics and Newton's laws of gravitation. With a few more throws it could generalize the laws very quickly. It does not need to observe the sand and the stars to infer that the world might be composed of particles (or that it leads to a good model). Learning is amazingly fast.

The knowledge it gains about the world grows so quickly that even if you add some additional initial knowledge to a computable agent, AIXI should catch up in no time.

>>You seem to have an intuition about how an AIXI would perform in certain situations. I don't have this, or I should say, my intuition is different. But since my intuition is based on no empirical evidence, I don't give it much weight. My impression was that AIXI never discards an environment, but just considers them less likely when they are contradicted. The notion that a single observation could be used to definitively rule out certain possibilities seems strange to me. Why would that be so? Does Solomonoff Induction make no allowance for inaccuracies in observations? If so, that seems like another major barrier to implementing a variant of AIXI.

>>It would be great to be able to make such a comparison in practice.

- last but not least, I was considering *real-time* real-world agents, those which can only do a very limited number of computations per interaction step, whereas AIXI can do an infinite number of computations in the same time. For such real agents, learning can only be extremely slow, since this requires time.

>>Hardly a fair comparison! :)

So I still think that AIXI would be vastly more intelligent in the real world than the real agents we will be able to create.

Brandon

--

Before posting, please read this: https://groups.google.com/forum/#!topic/magic-list/_nC7PGmCAE4

To post to this group, send email to magic...@googlegroups.com

To unsubscribe from this group, send email to

magic-list+...@googlegroups.com

For more options, visit this group at

http://groups.google.com/group/magic-list?hl=en?hl=en

Laurent

Brandon,

One point below.

On Wed, Aug 24, 2011 at 2:24 AM, Rohrer, Brandon R <brr...@sandia.gov> wrote:

Laurent,

Thanks for your response. I've added a couple of notes below.

____________________

- Our real world looks Turing-complete (save for some huuuge memory bound), since we can create computers. Thus virtually all environments can be expressed in this framework, except the veeery complex ones maybe, but note that the prior of these environments is small. So it's probably not infinitesimal.

Your argument might be better in a simpler environment than the real world

>>True. If you meant to include the computers we create when you cited the "real world" then my comment isn't relevant.

- AIXI discards all environments that are not consistent with its experience. So it (probably) suffices for AIXI to throw one single die to "infer" many things about classical mechanics, and maybe quantum dynamics and Newton's laws of gravitation. With a few more throws it could generalize the laws very quickly. It does not need to observe the sand and the stars to infer that the world might be composed of particles (or that it leads to a good model). Learning is amazingly fast.

The knowledge it gains about the world grows so quickly that even if you add some additional initial knowledge to a computable agent, AIXI should catch up in no time.

>>You seem to have an intuition about how an AIXI would perform in certain situations. I don't have this, or I should say, my intuition is different. But since my intuition is based on no empirical evidence, I don't give it much weight. My impression was that AIXI never discards an environment, but just considers them less likely when they are contradicted. The notion that a single observation could be used to definitively rule out certain possibilities seems strange to me. Why would that be so? Does Solomonoff Induction make no allowance for inaccuracies in observations? If so, that seems like another major barrier to implementing a variant of AIXI.

My guess is that this confusion comes from the existence of two different formulations of Solomonoff induction. The simpler formulation is a mixture model of all computable deterministic models. In this case, an individual model makes hard predictions about the future, and we can simply discard it if it turns out to be wrong. Bad models will initially be eliminated very, very quickly.

The mixture model can also be formulated as a combination of all computable probability distributions. In this case, models make soft predictions, so we are adjusting their relative probabilities rather than discarding them. (I should provide a reference for the equivalence, but I'm not sure where it is proven...)

Brandon, when I say "discard an environment", I'm talking about an environment as a program p, so that on input y_{1:t} (the history of the agent's actions from time 1 to t), p(y_{1:t}) produces the output x_{1:t} (observations of the agent).

If the output produced by this program is different from the true output as received by the agent, then this particular environment/program is no longer consistent and gets discarded.

However, it is easy to construct a new program p' based on p that remains consistent with the history. In general, though, p' is more complex than p.

This means that as the agent interacts with the environment, there is *always* an infinite variety of environments that are consistent with the history, and there are always environments that predict whatever you want (i.e. any future is *possible*). However, Occam's razor makes some futures less and less likely, making the program representing the true environment more and more likely.
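As a rough illustration of the "discard" formulation Laurent describes, here is a minimal Python sketch. It assumes a tiny, hypothetical hypothesis class instead of all programs of a universal machine: each "program" is a bit pattern repeated forever, its length stands in for the program length, and its prior weight is 2^-length. Actions are ignored for simplicity. Programs that contradict the history are dropped and the survivors are renormalized.

```python
from itertools import product
from typing import Dict, List, Tuple

# Hypothetical stand-in for programs: a bit pattern p repeated forever.
# len(p) plays the role of the program length, with prior weight 2^-len(p).
Program = Tuple[int, ...]

def enumerate_programs(max_len: int) -> List[Program]:
    return [bits for n in range(1, max_len + 1) for bits in product((0, 1), repeat=n)]

def output(p: Program, t: int) -> int:
    """Observation x_t produced by program p at time t (actions are ignored)."""
    return p[t % len(p)]

def posterior(history: List[int], max_len: int = 8) -> Dict[Program, float]:
    # Discard every program that contradicts the history, then renormalize.
    weights = {
        p: 2.0 ** -len(p)
        for p in enumerate_programs(max_len)
        if all(output(p, t) == x for t, x in enumerate(history))
    }
    total = sum(weights.values())
    return {p: w / total for p, w in weights.items()}

def prob_next_is_one(history: List[int], max_len: int = 8) -> float:
    t = len(history)
    return sum(w for p, w in posterior(history, max_len).items() if output(p, t) == 1)

history = [0, 1, 0, 1, 0, 1]
post = posterior(history)
print(max(post, key=post.get), prob_next_is_one(history))
```

After observing 0, 1, 0, 1, 0, 1, the short program (0, 1) carries most of the weight, yet longer programs such as (0, 1, 0, 1, 0, 1, 1) remain consistent and predict a 1 next. Any continuation stays possible; the length-based prior just makes the complex ones unlikely (here the probability of a 1 comes out at 1/23).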

Laurent

Tom Everitt

My guess is that this confusion comes from the existence of two different formulations of Solomonoff induction. The simpler formulation is a mixture model of all computable deterministic models. In this case, an individual model makes hard predictions about the future, and we can simply discard it if it turns out to be wrong. Bad models will initially be eliminated very, very quickly.

The mixture model can also be formulated as a combination of all computable probability distributions. In this case, models make soft predictions, so we are adjusting their relative probabilities rather than discarding them. (I should provide a reference for the equivalence, but I'm not sure where it is proven...)

Are they really equivalent as induction principles?

From Li & Vitanyi, p. 273, they are equivalent in the sense that:

"there is a constant c such that for every string x, log(1/m(x)) will differ at most c from C(x) "

So they do have a close connection. But does that mean they will perform equally well (or badly) in probabilistic worlds?

Consider the world that outputs 1 with 80% probability. Since it is still pretty random, I imagine you will not manage much compression with a Turing machine, and since it is not possible to express probabilities with TMs, will it even predict that the next input will be 1 with higher probability than 0? Not sure.

The measure m on the other hand should soon converge on the (computable) distribution 80-20, and make adequate predictions.

At least this is the way I've always thought about it, but I have no proof this is right in any sense.
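Tom's 80/20 example can be sketched on the "soft prediction" side with a Bayes mixture over a finite grid of Bernoulli models. This is a minimal Python sketch under stated assumptions: the grid θ ∈ {0.1, ..., 0.9} and the uniform prior are hypothetical choices standing in for the class of all computable distributions (this is not the universal measure m), and a fixed four-ones-then-one-zero pattern replaces a random 80/20 source so the run is reproducible.

```python
import math
from typing import Dict, List

def posterior(bits: List[int], prior: Dict[float, float]) -> Dict[float, float]:
    """Posterior over Bernoulli(theta) models after observing bits (log space for stability)."""
    ones = sum(bits)
    zeros = len(bits) - ones
    log_w = {
        th: math.log(p) + ones * math.log(th) + zeros * math.log(1 - th)
        for th, p in prior.items()
    }
    top = max(log_w.values())
    unnorm = {th: math.exp(lw - top) for th, lw in log_w.items()}
    z = sum(unnorm.values())
    return {th: u / z for th, u in unnorm.items()}

def prob_next_one(bits: List[int], prior: Dict[float, float]) -> float:
    """Mixture prediction: P(next = 1) = sum over theta of w(theta | bits) * theta."""
    return sum(th * w for th, w in posterior(bits, prior).items())

# Hypothetical model class and prior (assumptions, not the universal measure m).
THETAS = [i / 10 for i in range(1, 10)]
UNIFORM = {th: 1 / len(THETAS) for th in THETAS}

data = [1, 1, 1, 1, 0] * 20  # 80 ones, 20 zeros
post = posterior(data, UNIFORM)
print(max(post, key=post.get), round(prob_next_one(data, UNIFORM), 3))
```

With 80 ones and 20 zeros, the posterior concentrates on θ = 0.8 and the predicted probability of a 1 comes out close to 0.8, which is the behaviour Tom expects from m.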

Abram Demski


Eray Ozkural

- There is no problem about the linkage between the ideal Solomonoff induction and the reality, except it is not computable : )

If we make the assumption that the environment is computable (which is a religious thing since we cannot mathematically reason about the computability of the world in which we live, accept it or not...), then we can make precise predictions with bounded regret.

Yet, this is a misunderstanding. There is no assumption that the world itself is a computation.

On the other hand, very rigorous mathematical results show that QM and GR are formulated precisely in computable mathematics (which is something else to say).

What Sol. induction assumes is that the probability distribution we're working with is computable, which is something else entirely (much weaker than already proven results mentioned above) and not religious at all.

Best,

--

http://groups.yahoo.com/group/ai-philosophy

Sun Yi

Hello,

The assumption that the environment is computable or sampled from a computable distribution is not verifiable by any agent inside that environment. In that sense accepting the computable assumption or refuting it are equally unfounded.

Of course this does not mean that we cannot design some environment for the agent that is totally under our control.

Regards,

Sun Yi

From: magic...@googlegroups.com [mailto:magic...@googlegroups.com] On Behalf Of Eray Ozkural

Sent: Wednesday, August 24, 2011 23:29

To: magic...@googlegroups.com

Subject: Re: [MAGIC-list] Link between AGI theory and reality

On Wed, Aug 24, 2011 at 12:11 PM, Sun Yi <y...@idsia.ch> wrote:

--

Abram Demski

Eray Ozkural

Laurent

From my point of view:

- There is no problem about the linkage between the ideal Solomonoff induction and the reality, except it is not computable : )

If we make the assumption that the environment is computable (which is a religious thing since we cannot mathematically reason about the computability of the world in which we live, accept it or not...), then we can make precise predictions with bounded regret.

- My problem is with the computable agent. Shane’s result shows that even if we make the assumption that the world is simple in the Kolmogorov sense, we still cannot guarantee the performance of the agent. Moreover, beyond a certain point we cannot even mathematically reason about the computable agent.

I think this statement goes way beyond Shane's results. What he says, IIRC, is that you can't find it, except by mere chance, but that does not mean you cannot say anything about such an agent.

And as I said before, optimality is only one criterion.

- As for the comparison of two agents, I have the intuition that it is not decidable (no proof yet).

Maybe this is true in general when choosing two particular agents, but maybe we can define classes of agents that can be compared.

Anyway, I wrote the following Lemma, which basically restates Shane’s result. However, it shows that deciding whether a sequence is predicted by a given predictor is very hard.

Let me first restate the lemma, to be sure I understand correctly:

p \in P(w) means that p is a predictor of w.

D(p) is the set of sequences that p learns to predict correctly (i.e. there is some time t after which p makes no mistake on w).

You're saying that we cannot decide, for a given p and a given w, if p learns to predict w correctly?

While this is probably true in general (Rice's theorem, pigeonhole argument) when considering p as any program and w as any (even computable) sequence (this is similar to testing the semantic equality of two given programs, and so to finding the smallest equivalent program), it is not true in particular cases.

Just as you can find the smallest canonical finite state automaton, for example, you can choose p and w in the regular language class, and there I think you can always decide whether p predicts w.

I mean, it's not because you cannot say something in general that you can't say something on more specific classes.
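Laurent's point about restricted classes can be made concrete under one set of assumptions: the predictor p is a finite-state machine that emits a predicted bit in each state and moves on the observed bit, and w is ultimately periodic (a finite prefix followed by a cycle repeated forever). The pair (predictor state, position in w) then takes finitely many values, so the joint run must eventually repeat, and p learns w exactly when no mistake occurs inside that repeating loop. The following Python sketch, with hypothetical function and parameter names, decides w ∈ D(p) this way.

```python
from typing import Dict, List, Tuple

def learns_to_predict(
    start: int,
    delta: Dict[Tuple[int, int], int],
    output: Dict[int, int],
    prefix: List[int],
    cycle: List[int],
) -> bool:
    """Decide whether a finite-state predictor eventually stops making mistakes
    on the ultimately periodic sequence prefix + cycle + cycle + ..."""
    if not cycle:
        raise ValueError("cycle must be non-empty")
    n = len(prefix)
    seen: Dict[Tuple[int, int], int] = {}  # joint state -> first time it occurred
    mistakes: List[bool] = []
    q, t = start, 0
    while True:
        # Position in w: inside the prefix, or wrapped around the cycle.
        pos = t if t < n else n + (t - n) % len(cycle)
        key = (q, pos)
        if key in seen:
            # From here on the run repeats steps seen[key] .. t-1 forever.
            return not any(mistakes[seen[key]:t])
        seen[key] = t
        actual = prefix[pos] if pos < n else cycle[pos - n]
        mistakes.append(output[q] != actual)
        q = delta[(q, actual)]  # the machine moves on the observed bit
        t += 1

# Hypothetical example predictors; the state is simply the last bit seen.
LAST_BIT = {(s, b): b for s in (0, 1) for b in (0, 1)}
REPEAT = {0: 0, 1: 1}  # predict the last bit again
FLIP = {0: 1, 1: 0}    # predict the opposite of the last bit

print(learns_to_predict(0, LAST_BIT, REPEAT, [1, 0], [0]))  # w = 1, 0, 0, 0, ...
print(learns_to_predict(0, LAST_BIT, REPEAT, [], [0, 1]))   # w = 0, 1, 0, 1, ...
print(learns_to_predict(1, LAST_BIT, FLIP, [], [0, 1]))     # w = 0, 1, 0, 1, ...
```

The "repeat the last bit" predictor learns 1, 0, 0, 0, ... but is wrong at every step after the first on 0, 1, 0, 1, ..., while the "flip the last bit" predictor learns the alternating sequence.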

Am I missing something?

Laurent

Laurent

>>It would be great to be able to make such a comparison in practice.

So I still think that AIXI would be vastly more intelligent in the real world than the real agents we will be able to create.

http://www.hutter1.net/official/bib.htm#aixictwx

Note that this is an *extreme approximation* of AIXI; the latter has infinitely more computational power.

Recently I stumbled upon a comparison with traditional RL, and it showed that AIXI was much better, but I can't remember where I saw it...

Anyway, just to give you a sense, a "fairer" comparison with an approximation of AIXI would be to run Q-learning on an 80286 and MC-AIXI on a grid of fast computers. You can probably guess the result if they are allocated the same time ;)

Laurent

Wén Shào

Vaibhav Gavane

In the book, the constant c is actually never "fixed". For two complexities C_1 and C_2 to be equivalent it is sufficient that there exist *some* constant c such that for all x |C_1(x) - C_2(x)| <= c. Then, all universal Turing machines are indeed in the smallest equivalence class.

I think what the authors really mean by "clean solution" is "justification".

Vaibhav

On 10/24/11, Wén Shào <90b5...@gmail.com> wrote:

> Hi Tom,
>
> Sorry to bring this old thread up. You said that, by the argument made in LV08, all universal Turing machines will end up in the smallest equivalence class. I'm not so sure about this: once you have fixed a constant c, why do all universal Turing machines necessarily end up in the smallest equivalence class?
>
> Let's put aside whether this argument solves the arbitrariness in choosing the reference machine; the mathematical solution, i.e. the whole idea of equivalence classes and the partial order on this set, is well defined, isn't it? Moreover, to me it's not the case that all universal Turing machines will end up in the smallest equivalence class.
>
> Cheers,
>
> Wén
>

> On Mon, Aug 22, 2011 at 11:33 PM, Tom Everitt <tom4e...@gmail.com> wrote:

>

>> Hi again, I finally had time to read Li & Vitanyi a bit more closely.
>>
>> They start out promising:
>>
>> "The complexity C(x) is invariant only up to a constant depending on the reference function *phi_0*. Thus, one may object, for *every* string *x* there is an additively optimal recursive function *psi_0* such that C_psi_0(x) = 0 [psi_0 assigns complexity 0 to x]. So how can one claim that C(x) is an objective notion?"
>>
>> They then set about giving a "mathematically clean solution" by defining some equivalence classes, claiming these equivalence classes had a single smallest element. This is all well, but the problem is that according to their definition of equivalence classes, all universal Turing machines end up in the smallest equivalence class. And, clearly, for every string *x* there is a universal Turing machine assigning complexity 0 to *x*. (Just take any universal Turing machine, and redefine it so it prints *x* on no input.)
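Tom's construction ("redefine it so it prints x on no input") can be tried on a toy scale. In this Python sketch the base language is hypothetical and deliberately not universal: a program '0'+s prints s literally, and '1'+n prints '01' repeated int(n, 2) times. The rigged machine prints a chosen x on the empty program and otherwise runs the base machine on the program minus a leading 1 flag, so it assigns x complexity 0 while charging every other string at most one extra bit. Because the toy language is not universal, the sketch only shows this one direction of the invariance bound, not the reverse constant.

```python
from itertools import product
from typing import Callable, Iterator, Optional

Machine = Callable[[str], Optional[str]]

def base_machine(p: str) -> Optional[str]:
    """Hypothetical toy description language (NOT universal).
    '0'+s outputs s literally; '1'+n outputs '01' repeated int(n, 2) times."""
    if p.startswith("0"):
        return p[1:]
    if p.startswith("1") and len(p) > 1:
        return "01" * int(p[1:], 2)
    return None  # empty program or bare "1": no output

def rigged(base: Machine, x: str) -> Machine:
    """Print x on the empty program; otherwise run base on p with its leading '1' flag removed."""
    def machine(p: str) -> Optional[str]:
        if p == "":
            return x
        if p[0] == "1":
            return base(p[1:])
        return None
    return machine

def programs(max_len: int) -> Iterator[str]:
    """All bit strings of length 0..max_len, shortest first."""
    for n in range(max_len + 1):
        for bits in product("01", repeat=n):
            yield "".join(bits)

def complexity(m: Machine, y: str, max_len: int = 10) -> Optional[int]:
    """Length of the shortest program (up to max_len) on which m outputs y."""
    for p in programs(max_len):
        if m(p) == y:
            return len(p)
    return None

rig = rigged(base_machine, "0110")
print(complexity(base_machine, "0110"), complexity(rig, "0110"))
print(complexity(base_machine, "010101"), complexity(rig, "010101"))
```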

Wén Shào

Tom Everitt

I'm glad you brought this one up again.

This is what confused me: from the book it looks like, even within an equivalence class, the "constant" c can vary depending on the complexities you're looking at, and there is no fixed c for an equivalence class. How does this help to justify invariance?

Exactly, it doesn't seem to justify invariance at all. I'm not sure what you mean by "c varies with the complexities"; c varies only with psi and phi (and is really a constant, at least with respect to x). Even so, I definitely agree with you that it's quite unsatisfying.

On the other hand, if we force a fixed c on equivalence relations, then we won't end up with all UTM being in one equivalence class and the partial ordering is still well defined. There might be some other problems with it, but it makes more sense than having multiple c's? Or not? Not quite sure, just some random thoughts.

Keeping c fixed will remove some of the other UTM's from the phi_0 equivalence class, yes. Yet which UTMs it would remove would depend on phi_0 (and phi_0 is more or less arbitrarily chosen) - so in terms of objectiveness I think we would be back to square one.

I've noticed that right after they have a long discussion about the objectiveness of the numbering of the recursive functions, I'm not sure if it's meant to support this objectiveness argument or if the point is something else. Either way, after reading it quickly I don't see how it could help them.

Regards

Tom

Vaibhav Gavane

equivalence relation at all. I can see that reflexivity and symmetry

are satisfied, but I can't see how to improve the upper bound of 2c in

the case of transitivity.
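The 2c bound Vaibhav mentions is just the triangle inequality, which also shows why the "some constant c" version of the relation from the book is transitive while a fixed-c version need not be (the functions on the second line are toy examples with c > 0, not actual complexities):

```latex
\begin{aligned}
|C_1(x) - C_3(x)| &\le |C_1(x) - C_2(x)| + |C_2(x) - C_3(x)| \le c + c = 2c \quad \text{for all } x, \\
\text{fixed } c:&\quad C_1(x) = |x|,\; C_2(x) = |x| + c,\; C_3(x) = |x| + 2c \;\Rightarrow\; C_1 \sim C_2,\ C_2 \sim C_3,\ C_1 \not\sim C_3, \\
\text{some } c:&\quad |C_1 - C_2| \le c_{12},\ |C_2 - C_3| \le c_{23} \;\Rightarrow\; |C_1 - C_3| \le c_{12} + c_{23}.
\end{aligned}
```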

Vaibhav

Tom Everitt

Tom

Eray Ozkural

Tom Everitt

In practical work, the invariance theorem is mostly irrelevant, since the constants involved are quite high. A lot of problems have complexity beneath those c's, so what's the point?

The point is purely theoretical I suppose. It would feel nice with an objective measure of complexity. In fact, this seems to be the main advertising point of Kolmogorov Complexity: that it entails that complexity is a property of the object rather than the language/subject. But never trust a salesman, right? :)

(Of course, it still works for infinite sequences.)

Solomonoff argues that subjectivity is a desired feature of algorithmic probability,

How could subjectivity possibly be a desirable property?

so according to him, at least, searching for an objective measure, the ultimate language, is moot. Memory brings subjectivity, obviously, so in practice we actually use very complex machines, not minimal ones.

Philosophically, I think this has been solved partially in […] and more completely in my proposal in 2007: […]

So, the idea is that you can use our universe as the universal machine. That's the most low-level machine imaginable, so it's the most neutral model, free of any bias whatsoever.

Okay, so given that the choice of reference machine is necessarily subjective, this would probably be the _most_ objective we can get. But it really wouldn't be objective.

Theoretically, I think you could use a universal quantum computer: […]

This would really mean that Deutsch solved that problem in 1985. The caveat I made was a slight but important detail: ultimately, it is the correct Grand Unified Theory that is going to provide the universal model of computation. So it could be some string theory contraption, or a RUCA, or whatever you like. The point is that such machines necessarily contain no information about particular world-states, only universal information.

One of the most desirable features of an objective AIT would be that it would make induction objective - so once we would have found this GUT, no one could ever question it (given he had the same data). Now, since we can't find an objective basis of induction, we have to rely on a questionable GUT, which in turn means that once we've found the semi-objective GUT-reference machine, it will also be forever questionable. Are you with me?

So we will never have a solid foundation of science *crying*. (This may be pushing my argument a bit far, I'm not sure. Let's hope.)

This is probably only philosophically annoying, but still. It serves to show why I find it incomprehensible to desire a subjective AIT.

Tom

Eray Ozkural

On Mon, Oct 24, 2011 at 12:37 PM, Eray Ozkural <exama...@gmail.com> wrote:

Tom Everitt

On Mon, Oct 24, 2011 at 5:37 PM, Tom Everitt <tom4e...@gmail.com> wrote:

How could subjectivity possibly be a desirable property?

Well, it's another way of saying that a well-educated man has a better perspective. :)

Hehehe, I know, Solomonoff could definitely have used a proper logic education ;)

Okay, so given that the choice of reference machine is necessarily subjective, this would probably be the _most_ objective we can get. But it really wouldn't be objective.

It would because from a properly positivist viewpoint, "other possible universes with different physical law" are metaphysical fantasies only. If you try to answer the following question you can see that for yourself: in what sense would it not be objective?

Okay, it might appear like that from a positivist standpoint, but I'm far from convinced that the positivists are right, even though their views are appealing in many ways (I don't think I'm very up to date on positivism, though; we basically just discussed Carnap and the Vienna Circle in philosophy class).

Anyway, it seems problematic to disqualify metaphysical possibility. For example, the foundation of AIXI is "the cybernetic model", with some agent interacting with some "world". This world is essentially any metaphysically possible world, and we make no reference to, and have no need of, the contingencies of the natural sciences to do this. The result is a nice theory of intelligence with minimal requirements as to what world it is put in, and nice theoretical insights into intelligence.

To do something similar while only allowing nomologically possible worlds would be quite hard, no? Especially since we don't even know the laws of the universe; in contrast, we do know what's metaphysically possible.

So that's why metaphysical possibility is relevant, and a universe-based prior is non-objective.

I'm sure you have a bunch of counter arguments. Bring them on :)

Abram Demski


Eray Ozkural

On Mon, Oct 24, 2011 at 4:54 PM, Eray Ozkural <exama...@gmail.com> wrote:

Tom Everitt

Abram:

I agree to some extent - it is comforting that just starting with any universal prior, we will eventually converge to the true hypothesis. However, since we will always be stuck in finite time, we will always have the case:

Prior1 predicts TOE1 with high probability, and (absurd) Prior2 predicts (absurd) TOE2 with high probability, and we have no means to say who's right, apart from some apparently ill-founded intuition.

And this will always be the case, forever (until we reach time=infinity).

Thus my obsession with an objective prior. Having one seems to be the only way out of this problem.

Of course, in practice, this is never a problem. We seem to have our built-in, fairly good, reference machine, and it's working out well enough. But I maintain that it's not philosophically satisfying. (Well, in a sense. I suppose it would also be of some value to understand why we can never achieve an objective science, and be able to move on with good conscience.)
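The "eventually converge, but disagree in finite time" situation Tom describes can be illustrated on a small scale. The following Python sketch does not use universal priors: it assumes a hypothetical grid of Bernoulli models and two hypothetical priors, one uniform and one putting 99% of its mass on θ = 0.1, both fed the same 80/20 pattern. The gap between their predictions is large after a few bits and vanishes after a few hundred; making the second prior more extreme would stretch out how long the disagreement lasts.

```python
import math
from typing import Dict, List

THETAS = [i / 10 for i in range(1, 10)]  # hypothetical Bernoulli model grid

def predictive(bits: List[int], prior: Dict[float, float]) -> float:
    """P(next bit = 1) under a Bayes mixture of Bernoulli(theta) models."""
    ones = sum(bits)
    zeros = len(bits) - ones
    log_w = {th: math.log(prior[th]) + ones * math.log(th) + zeros * math.log(1 - th)
             for th in THETAS}
    top = max(log_w.values())
    w = {th: math.exp(lw - top) for th, lw in log_w.items()}
    return sum(th * wi for th, wi in w.items()) / sum(w.values())

PRIOR_1 = {th: 1 / len(THETAS) for th in THETAS}
# Hypothetical "absurd" prior: 99% of the mass on theta = 0.1, the rest spread evenly.
PRIOR_2 = {th: 0.99 if th == 0.1 else 0.01 / (len(THETAS) - 1) for th in THETAS}

DATA = [1, 1, 1, 1, 0] * 100  # 80/20 pattern, 500 bits

def gap(n: int) -> float:
    """Disagreement between the two priors' predictions after the first n bits."""
    return abs(predictive(DATA[:n], PRIOR_1) - predictive(DATA[:n], PRIOR_2))

for n in (5, 50, 500):
    print(n, gap(n))
```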

Eray

I need to think about your points for a bit before I reply. Let me just make the one remark below, though :)

On Mon, Oct 24, 2011 at 8:22 PM, Tom Everitt <tom4e...@gmail.com> wrote:

Well, it's another way of saying that a well-educated man has a better perspective. :)

Hehehe, I know, Solomonoff could definitely have used a proper logic education ;)

That's not correct, because it's obvious from his writing that he does understand the incompleteness theorems in logic very well. Somebody who understands meta-mathematics is likely to understand predicate calculus, etc. very well. He also worked with Carnap, who knew a bit of logic :) What do you base this on? We positivists are all logic freaks :)

Irony :D (I have great respect for Sol., actually; perhaps it doesn't always show.) But the extra information you provided for your argument was interesting; I didn't know he worked with Carnap, for instance.

Tom Everitt

However, note that the extension does not really increase the predictive accuracy or the applicability of the method in any way. In particular, it does not create a set of more interesting problems of AI, since RL-problems are already among the kinds of problems that an AI system must be able to solve. It's just another kind of optimization problem. Although, obviously, it's a *general* optimization problem, and it is AI-complete, since we can cast any computational problem (P and NP problems etc.) and prediction/probability problems as RL-problems. But you don't have to. They can still be solved with a general problem solver that uses Sol. induction. So, I don't think that agent models are special in any way. They are not the foundation of intelligence. They are, as you say, part of cybernetic animal models, i.e. animats, that *use* intelligence. They are applications. It's artificial life, rather than intelligence. Intelligence is prediction. Nothing less, nothing more. Of course, I think behaviorism is a philosophical farce, that's why I badly want to avoid behaviorist sounding definitions. But that just makes my conviction stronger :)

Okay, I get the point about artificial life contra intelligence. But my argument works for Sol. induction/prediction as well.

In order to know that the prediction works in this world - before knowing anything about this world - we need to figure out exactly what worlds are possible. And this is essentially all the metaphysically possible worlds!

Note, though, that by worlds I mean programs, and in particular their output. So the world with ghosts is essentially the same world as this world, since they have the same output. (It is only slightly less likely.)

From Wikipedia:

"Positivism is a philosophical approach.... [in which] ....sense experiences and their logical and mathematical treatment are the exclusive source of all worthwhile information."

Would this mean that I cannot a priori deduce what worlds I could possibly encounter? Strictly speaking, that would be doing math that's not the treatment of some sense datum. But that must be permissible, no?

In the final analysis, Solomonoff induction works because it is a *complete* formalization of the scientific process, and has been shown to apply to any problem in the observable universe. It is based on a *correct philosophy of science*. That's what's important. What else could be significant here? If this is based on scientific process, talk of metaphysics is completely irrelevant.

I agree that's pretty grand - it's a pretty good summation of why I like Sol. induction. Even so, I would have wished that the ultimate theory of science would have dealt with the problem I mentioned to Abram in my previous mail:

The interpretation of data should not be completely subjective. Consider this:

We are in a world where we have made output 1 a hundred times and received positive feedback, and made output 0 a hundred times and received negative feedback.

Now, since we can't say which prior is the best, we cannot say that in order to maximize reward for the next output, we should choose 1.

This is highly unintuitive. Perhaps it really is this way, but it's not very satisfying (to my uneducated mind, at least :) ).
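Tom's example can be reproduced with a toy Bayesian agent. This is a minimal sketch, not AIXI itself: it assumes only two deterministic world hypotheses, a hypothetical `stable` world and a grue-like `switch` world that agrees with all 200 observed rounds and reverses afterwards (both names and the prior weights are invented for illustration). Because both hypotheses reproduce the history perfectly, their posterior odds equal their prior odds, so the predicted reward for output 1 is fixed entirely by the prior.

```python
from fractions import Fraction

# Observed history as (output, feedback) pairs: 100 rounds of output 1 with
# positive feedback, then 100 rounds of output 0 with negative feedback.
HISTORY = [(1, +1)] * 100 + [(0, -1)] * 100

def stable(t, output):
    """Hypothetical world: output 1 is always rewarded, output 0 always punished."""
    return +1 if output == 1 else -1

def switch(t, output):
    """Hypothetical grue-like world: same as `stable` for 200 steps, reversed afterwards."""
    feedback = +1 if output == 1 else -1
    return feedback if t < 200 else -feedback

def prob_output1_rewarded(priors):
    """Posterior probability that output 1 is rewarded at the next step.

    `priors` maps each deterministic hypothesis to its prior weight. A hypothesis
    keeps its weight if it reproduces the whole history and drops to 0 otherwise.
    """
    posterior = {}
    for world, weight in priors.items():
        fits = all(world(t, out) == fb for t, (out, fb) in enumerate(HISTORY))
        posterior[world] = Fraction(weight) if fits else Fraction(0)
    total = sum(posterior.values())
    next_t = len(HISTORY)
    return sum(w for world, w in posterior.items() if world(next_t, 1) == +1) / total

print(prob_output1_rewarded({stable: Fraction(3, 4), switch: Fraction(1, 4)}))  # 3/4
print(prob_output1_rewarded({stable: Fraction(1, 4), switch: Fraction(3, 4)}))  # 1/4
```

The data are identical in both calls; only the prior weights differ, and they alone decide whether choosing 1 looks like the better bet.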

Tom

Eray Ozkural

On Mon, Oct 24, 2011 at 8:24 PM, Eray Ozkural <exama...@gmail.com> wrote:

However, note that the extension does not really increase the predictive accuracy or the applicability of the method in any way. In particular, it does not create a set of more interesting AI problems, since RL problems are already among the kinds of problems that an AI system must be able to solve. It's just another kind of optimization problem. Obviously, it's a *general* optimization problem, and it is AI-complete, since we can cast any computational problem (P and NP problems, etc.) and any prediction/probability problem as an RL problem. But you don't have to. They can still be solved with a general problem solver that uses Sol. induction. So I don't think that agent models are special in any way. They are not the foundation of intelligence. They are, as you say, part of cybernetic animal models, i.e. animats, that *use* intelligence. They are applications. It's artificial life, rather than intelligence. Intelligence is prediction. Nothing less, nothing more. Of course, I think behaviorism is a philosophical farce; that's why I badly want to avoid behaviorist-sounding definitions. But that just makes my conviction stronger :)


Abram Demski

--Abram

--

Before posting, please read this: https://groups.google.com/forum/#!topic/magic-list/_nC7PGmCAE4

To post to this group, send email to magic...@googlegroups.com

To unsubscribe from this group, send email to

magic-list+...@googlegroups.com

For more options, visit this group at

http://groups.google.com/group/magic-list?hl=en?hl=en

Eray Ozkural

Eray,

Tom is not saying anything about induction not working, or Laplace's rule failing. He is only pointing out that we need to choose a prior to determine how much evidence it takes to converge to correct predictions, and there will always be a different universal prior that we could have chosen which would tell us "not yet!" and find some different alternative more probable.

In other words, there may always be some rogue scientists who have examined the same evidence as everyone else but have sufficiently different taste when it comes to the "elegance" of a theory that they prefer a different explanation and make different predictions. Furthermore, these rogue scientists may be correct. (The best we can do is judge their theories with our own prior, but we have no claim to "the best prior".)
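Abram's point can be made quantitative with elementary Bayes. By the invariance theorem, switching between universal reference machines U and V changes description lengths by at most a machine-dependent constant c (K_U(x) <= K_V(x) + c), so a rival prior can penalize a hypothesis by up to roughly a factor of 2^c. The sketch below assumes, for illustration only, independent observations that each favour hypothesis A over its rival B by a fixed likelihood ratio; the function name and parameters are hypothetical. It counts how many such observations it takes for A to overtake B from given prior odds.

```python
from fractions import Fraction

def observations_needed(prior_odds_against, likelihood_ratio):
    """Number of independent observations, each `likelihood_ratio` times more
    probable under hypothesis A than under rival B, needed before A's posterior
    strictly exceeds B's, given prior odds `prior_odds_against` = P(B) / P(A)."""
    if likelihood_ratio <= 1:
        # If the data never discriminate (ratio 1), no amount of it helps.
        raise ValueError("observations must favour A (likelihood_ratio > 1)")
    odds = Fraction(prior_odds_against)   # current odds P(B | data) / P(A | data)
    ratio = Fraction(likelihood_ratio)
    n = 0
    while odds >= 1:                      # B is still at least as probable as A
        odds /= ratio                     # Bayes: each observation divides the odds
        n += 1
    return n

for c in (0, 10, 20):
    # A rival prior that gives A c fewer bits of weight: odds 2**c against A.
    print(c, observations_needed(2 ** c, 2))
```

With a likelihood ratio of 2 per observation the count grows as c + 1, so the "not yet!" period is set by the prior. In the grue-style cases discussed in this thread the rival hypotheses agree on everything observed so far, the ratio is 1, and the sketch refuses the case outright.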

Eray Ozkural

Eray Ozkural

Abram Demski


Tom Everitt

On Tue, Oct 25, 2011 at 7:45 PM, Abram Demski <abram...@gmail.com> wrote:

Eray,

Tom is not saying anything about induction not working, or Laplace's rule failing. He is only pointing out that we need to choose a prior to determine how much evidence it takes to converge to correct predictions, and there will always be a different universal prior that we could have chosen which would tell us "not yet!" and find some different alternative more probable.

Then, that's a moot point, because no universal prior tells you how close you are to the real solution. They can never do that.

It is actually not entirely moot (I wish it was).

Consider the case where what we've seen is

s = 111111111111111111111111111111111111111111

It seems obvious that, given the data we've seen, the best prediction of the next bit is 1.

But if we don't have an objective prior, we have no means to say that 1 is the best prediction, because some priors will say that 0 is the most probable continuation. (Even a measure on priors would do; then we could say that most priors predict 1.)

(This is really just Goodman's "grue" example in an AIT context.)
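The same point for sequence prediction, as a minimal sketch: Laplace's rule of succession next to a two-hypothesis mixture in which each hypothesis gets prior weight 2^-length. The code lengths passed in are invented stand-ins for program lengths on two different reference machines, and the hypothetical `grue` hypothesis places its switch exactly at the current length of the data, i.e. it is constructed with the data in hand.

```python
from fractions import Fraction

def laplace_next_is_one(s):
    """Laplace's rule of succession: P(next bit = 1) = (#ones + 1) / (n + 2)."""
    return Fraction(s.count("1") + 1, len(s) + 2)

def mixture_next_is_one(s, code_lengths):
    """P(next bit = 1) under a two-hypothesis mixture with prior weight 2**-length.

    `code_lengths` maps "ones" and "grue" to hypothetical program lengths in bits,
    standing in for what two different reference machines might assign.
    """
    n = len(s)
    hypotheses = {
        "ones": lambda t: "1",                    # 1 forever
        "grue": lambda t: "1" if t < n else "0",  # 1 up to the data seen so far, then 0
    }
    weights = {}
    for name, bit_at in hypotheses.items():
        consistent = all(bit_at(t) == b for t, b in enumerate(s))
        weights[name] = Fraction(1, 2 ** code_lengths[name]) if consistent else Fraction(0)
    total = sum(weights.values())
    return sum(w for name, w in weights.items() if hypotheses[name](n) == "1") / total

s = "1" * 40                                            # illustrative length only
print(laplace_next_is_one(s))                           # 41/42
print(mixture_next_is_one(s, {"ones": 5, "grue": 12}))  # 128/129
print(mixture_next_is_one(s, {"ones": 12, "grue": 5}))  # 1/129
```

Both hypotheses fit every observed bit, so the only thing separating a confident 1 from a confident 0 is which machine made which program shorter.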

So what I'm after is not really a clue as to how close we are to the true model; it's a deep fact that we can never have that.

What I'm after is an objective way to interpret data! Because intuitively, 1 is the objectively best prediction of the continuation of s above. But as of now, AIT does not provide any support for this intuition (maybe rightly so).

(In practice, I agree, this is not a problem. This is an entirely theoretical issue.)

Eray Ozkural

Eray,

I agree, it's not going to be much of a concern in practice. Indeed, it seems like we would have to know the data ahead of time in order to specifically go and construct priors that would significantly disagree after more than 1,000 observed bits. The concern is purely theoretical.

The question arises: what would we even do with such a "best UTM" if we had it? Presumably it would have to have some fairly special powers, enough for us to prefer it over other UTMs. It is very similar to trying to design the best programming language. In fact, it's the same problem.

Perhaps there is a very real sense in which some languages are more powerful, i.e., it's easier to write an interpreter in them for any other language you like. (Lisp would do well by that metric, for example.) This pushes down the constant penalties for getting to other languages. However, it *must* push something up when it pushes something down, because we only have finite probability mass to shove around. So, taking this to its conclusion, we would get a programming language in which it is easy to write programming languages, but very difficult to write anything else...
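The "finite probability mass" remark corresponds to the Kraft inequality: a binary prefix-free code (such as the set of programs of a prefix universal machine) with codeword lengths l_i exists if and only if the sum of 2^-l_i is at most 1. The sketch below treats the lengths as hypothetical costs, in bits, of reaching different languages from one reference machine; the specific numbers are invented. It shows that once the budget is used up, making one codeword shorter forces another to become longer.

```python
from fractions import Fraction

def kraft_sum(lengths):
    """Sum of 2**-l over codeword lengths l, in exact arithmetic."""
    return sum(Fraction(1, 2 ** l) for l in lengths)

def prefix_code_exists(lengths):
    # Kraft's inequality: a binary prefix-free code with these lengths
    # exists if and only if the sum is at most 1.
    return kraft_sum(lengths) <= 1

budget_full = [1, 2, 3, 3]  # hypothetical costs in bits; the sum is exactly 1
shortened = [1, 2, 2, 3]    # make the third language 1 bit cheaper...
rebalanced = [2, 2, 2, 3]   # ...and pay for it by lengthening the first

for lengths in (budget_full, shortened, rebalanced):
    print(lengths, kraft_sum(lengths), prefix_code_exists(lengths))
# [1, 2, 3, 3] 1 True
# [1, 2, 2, 3] 9/8 False
# [2, 2, 2, 3] 7/8 True
```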

Eray Ozkural

Tom Everitt

Well, you know what I will say: there should be a minimal-energy-consuming universal quantum computer, which you can use for these purposes. If that's not good enough, I don't know what is :)

I think that should solve the problem of subjectivity once and for all. And if you use that, you will, in fact, have 1. Don't worry about that :)

Okay, if that's objective, you win. It fits poorly with my intuition of objectivity, though :)

Eray Ozkural

Tom Everitt

Sure, give me till tomorrow to formulate it!

Tom Everitt


Eray Ozkural

To backtrack a bit, I believe our differences stem from fundamentally different core philosophies. Following is an attempt to spell them both out:

Either one can view mathematics/logic as something given a priori, by which we try to figure out the world we happen to live in. In this view the laws of the world are mere accidents; there is nothing objective about them. (I believe this is the view the cybernetic model tries to capture.)

The alternative is to take the world as the base: we learn about it through induction and learn to generalize more and more, and this process leads to mathematics. This model fits well with how human knowledge has evolved through the ages, I suppose.

The latter view, together with the assumption that mathematics has no "transcendent existence" (is confined to our universe), entails a position much like yours.

(Is this a fair description? I'd be grateful for improvements on these.)

I think I subscribe to the first view, not because I can disprove the positivist position, but because the first (I don't know what to call it) feels slightly more elegant. So perhaps this is a genuine conflict of priors :) (I don't think so, though; we would probably converge if we discussed it long enough.)

Tom Everitt

No, the cybernetic view is just applied control theory, as used in the construction of artificial animals and the like. It's basically agent models. It does not make a philosophical commitment in itself. What do you mean by the cybernetic model?

I didn't mean it forced any philosophical commitments, only that it's natural to come up with a model like that if you believe you are a logically thinking subject, one day presented with some arbitrary world. Even so, I think you may be right, it probably fits well with other ways of thinking as well.

Nowadays, I think one of the most popular philosophies among hard-core scientists is instrumentalism, as it's smart enough not to make dubious metaphysical posits. Just to give a flavor of modern philosophy of science, as opposed to Plato's false theory.

Okay, this is an interesting view, thanks for reminding me about it.

It depends on whether you think mathematics has a privileged status. But why should it? From a proper scientific point of view, as an anthropologist would say: at some point we invented numbers to make better calculations, and this linguistic and mental invention increased our intelligence. Do you really disagree with the scientific fact that mathematics itself evolved in human culture?

Well, yes, as I say, this empiricist view fits well with how we came to use numbers. But this does not mean that numbers do not exist; it may be that we discovered them, much like we discover things about the universe.