Statistical Process Control

Ryan Latta

Jan 23, 2019, 11:50:55 AM

to Lonely Coaches Sodality

Hey everyone!

I've been quietly watching this group for some time and thought I'd throw a topic out for discussion. An interesting set of unrelated events has nudged me toward learning about statistical process control (SPC). I'm fascinated by the approach and the tooling around it, especially since it's partially built in to everyone's favorite tool (Jira).

I've been thinking about how this technique could be used with teams to help them think more about what is happening internally to them as they attempt to improve the way they work. I'm also interested in how this technique could be used with managers and leaders to help them see how they may go about making things better for the team. I think there is more potential than that, but these are the first two things I'm interested in exploring.

I'm also aware that SPC rests on a notion of stability, and that not all software work will fit into something that can be stable. For the cases where it can or does, how would this technique apply? And can it help us see that we truly are in a more complex space, giving us data to support the idea that our approach and thinking need to change?

Anyway, I'd love to hear more about what your experience is with this technique or approach.

Ryan Latta

Jan 23, 2019, 11:51:01 AM

to Lonely Coaches Sodality

Hey Everyone,

I've watched this group for a long time, and I thought I'd bring up a topic for discussion. Through a series of somewhat unrelated events I've been learning about Statistical Process Control. I'm still new to the technique and how to apply it. I'm looking for experiences that you all have and feedback as to where my head is at with this technique.

In a nutshell, this technique can show process stability and highlight trends and special causes. If you've seen the Control Chart in Jira, it's very similar.
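To make "special causes" concrete, here is a minimal sketch of one common SPC chart, the individuals (XmR) chart, applied to cycle times. The data and the function names `xmr_limits` and `special_causes` are made up for illustration, and clipping the lower limit at zero is my own assumption for cycle times. This is not how Jira computes its Control Chart; it is only one way the idea could be tried by hand.

```python
from statistics import mean

def xmr_limits(values):
    """Return (center, lower, upper) natural process limits for an XmR chart."""
    if len(values) < 2:
        raise ValueError("need at least two observations")
    center = mean(values)
    # Moving range: absolute difference between consecutive observations.
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    mr_bar = mean(moving_ranges)
    # 2.66 is the standard XmR scaling constant (3 / d2, with d2 = 1.128).
    upper = center + 2.66 * mr_bar
    # Assumption: cycle times cannot be negative, so clip the lower limit at 0.
    lower = max(0.0, center - 2.66 * mr_bar)
    return center, lower, upper

def special_causes(values):
    """Return (index, value) pairs that fall outside the process limits."""
    _, lower, upper = xmr_limits(values)
    return [(i, v) for i, v in enumerate(values) if v < lower or v > upper]

# Hypothetical cycle times in days, in order of completion.
cycle_times = [3, 5, 4, 6, 4, 5, 3, 4, 21, 5, 4, 6]

center, lower, upper = xmr_limits(cycle_times)
print(f"center={center:.2f} lower={lower:.2f} upper={upper:.2f}")
print(special_causes(cycle_times))  # the 21-day item at index 8 is flagged
```

Points inside the limits would be treated as routine variation of the process; the flagged point is the kind of one-off worth a conversation rather than a process change.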

For teams, I think this could be used in their improvement efforts. They could see this information and steer their efforts toward recovering from special causes or toward systemic improvement, while also seeing the impact of their changes on the process.

For leaders and managers, I'd consider the same use, but with data drawn from a larger process. It would provide data and evidence about the impact of their nudges, policies, and changes on the larger system, while also highlighting the impact of one-off cases and how they differ.

I realize that this technique will not be useful for all teams in all cases. I think this technique will also help point out when that is true, which would help reinforce that we need to think about using different techniques and approaches than before because we are in an unstable system.

Anyway, those are my thoughts, but I'd love to hear how anyone has used this technique before, and any feedback about my interpretation of things.

Thanks,

Ryan

Mark Levison

Jan 23, 2019, 3:43:45 PM

to lonely-coaches-sodality

Ryan, welcome. Replying from my phone at a client site. Please forgive brevity and errors.

If a team initiated that, I would cheer and watch what they learn. It could be very valuable.

If it came from the outside, I would worry that the team would learn to perform to the measure.

Assuming this is owned by the team and not being used to control them, look into the work of Troy Magennis; his site is Focused Objective. Also try Daniel Vacanti, who has a book in this area.

I have a few posts on the subject: https://agilepainrelief.com/notesfromatooluser/2016/09/measurement-for-scrum-what-are-appropriate-measures.html#.XEihmsmvDgA (start with this one and dig from there). Caveat: I'm not actually a stats guy; Troy is.

Cheers

Mark

--

---

You received this message because you are subscribed to the Google Groups "Lonely Coaches Sodality" group.

To unsubscribe from this group and stop receiving emails from it, send an email to lonely-coaches-so...@googlegroups.com.

For more options, visit https://groups.google.com/d/optout.

Liz Keogh

Jan 23, 2019, 4:10:23 PM

to lonely-coac...@googlegroups.com

Hi Ryan,

If you're doing something new, you'll get more variance than if you're doing something repetitive (like building the same car over and over again). So if you minimize variance, you'll end up minimizing innovation.

There's also the J-curve to consider; performance drops when people are learning new things, but learning new things is good, and we don't want to stifle that either.

The control aspect might be useful for a helpdesk or something like that, but I wouldn't want to see a development team ruled by it. Fine to measure though, as long as the measurements are treated with curiosity instead of judgement.

Cheers,

Liz.

Pierre Neis

Jan 24, 2019, 12:55:18 AM

to lonely-coac...@googlegroups.com

Hi

The rule is quite simple:

- statistical refers to systems thinking, i.e. lean: you try to predict the variability in a dedicated system

- probabilistic refers to sense-making systems, or agile, where we try to discover patterns or trends, e.g. analysing a huge collection of data to understand emerging behaviours in a system.

Hope it helps… not sure ;0)

Pierre

Jon Kern

Jan 25, 2019, 12:07:03 PM

to lonely-coac...@googlegroups.com

Hi Ryan,

As Mark said, Daniel Vacanti’s book is great on this entire topic. (Actionable Agile Metrics for Predictability: An Introduction)

While what Liz points out might lead one to think “we are not like manufacturing,” I think we can certainly borrow from that discipline.

My son works in a prototyping (not production) machining center, trying to help drive his team to be lean and agile, collecting data just like Daniel talks about…

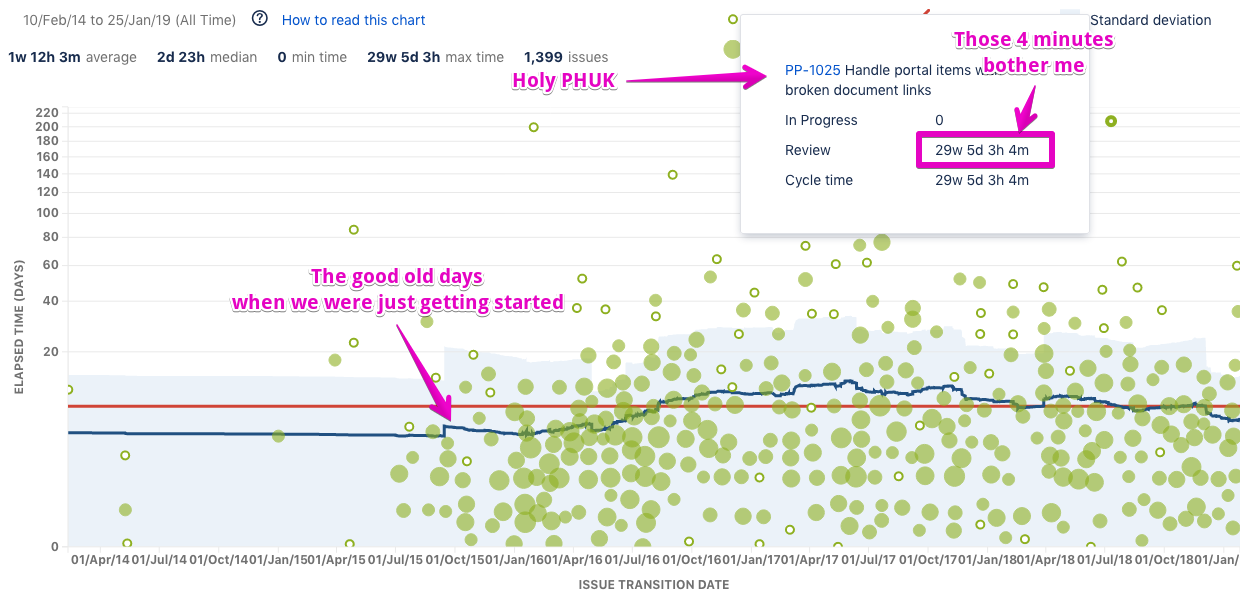

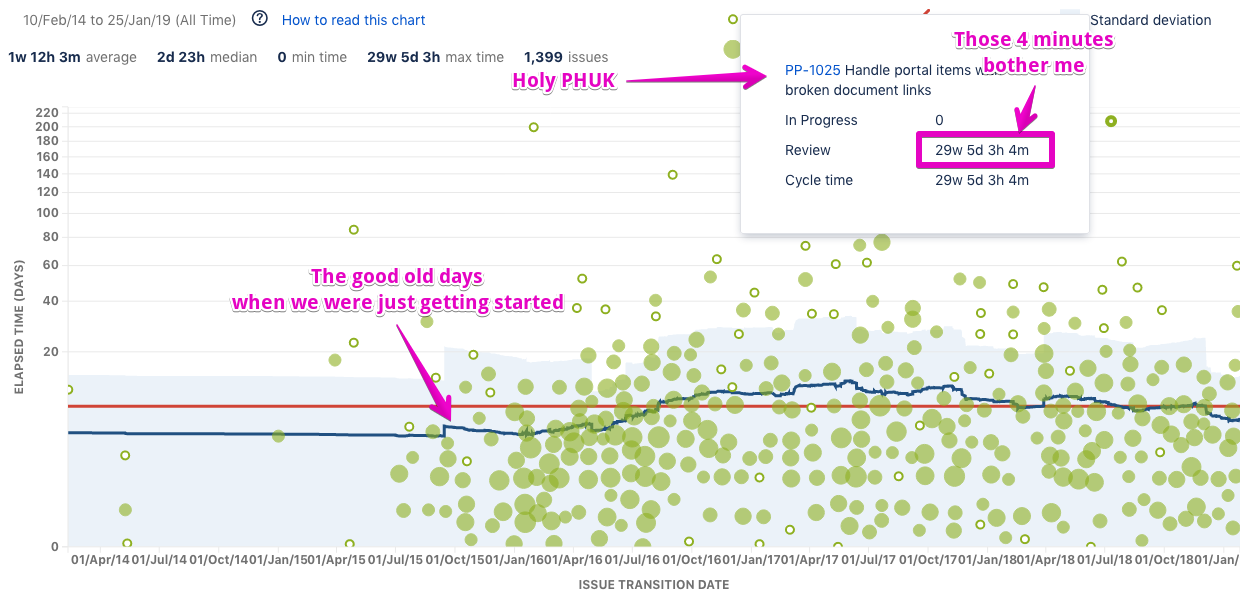

Every now and then (just did it this week) I love to toy around with trying to improve the JIRA control chart just for fun, as it already collects the data with no extra effort.

Well, I do this really because I am trying to nudge ourselves to the nirvana of single piece flow.

But of course, as soon as I look at the chart, I want to improve.

Which draws me to outliers… things on the Kanban board with extreme age. One culprit is a task for a very part-time contractor to do some general work to help improve some things…

So that reminds me that I made a shitty non-deterministic task. And to make it worse, I left it on the Board for weeks, making my control chart look like crap.

Oh, and another issue blocked by another team has been on the board for weeks… oops. I suck.

So my behavior the other day was to go clean out my bad issues and get them back into the backlog so my data looks better. I mean so my Board looks better and is more reflective of reality.

So in some sense, that probably was the right thing to do with those issues. So it can drive better behavior.

Now you've gone and made me look… and cringe. Gonna go out and drink now.

Damn you, Ryan!

Jon Kern

blog: http://technicaldebt.com

twitter: http://twitter.com/JonKernPA

github: https://github.com/JonKernPA
