I've just been notified about an exciting and important algorithm for procedural generation: the "wave function collapse algorithm", described by Marian Kleineberg in 2019. I want to talk about it here, from the viewpoint of natural language generation. It's a kind of a greedy algorithm, and its an entropy-minimizing algorithm, but what makes it different is that it is explicitly working with "jigsaw-puzzle pieces", and it also has a twist, a trick: its selecting what slot to fill, rather than what to fill in the slot.

Re-read that last sentence, because it's the key innovation that excites me. Everyone who has attempted to do procedural animation has encountered and struggled with the issue of combinatoric explosion. I struggle with it here, in the opencog/generate code base. The existing prototype there uses an odometer-based approach to enumerate all possibilities. Since the jigsaw pieces potentially combine in a recursive fashion, the odometer will enumerate a countable infinite of possibilities. Mathematically interesting, but not very practical for AI/AGI applications.

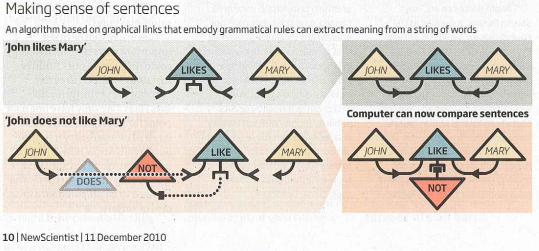

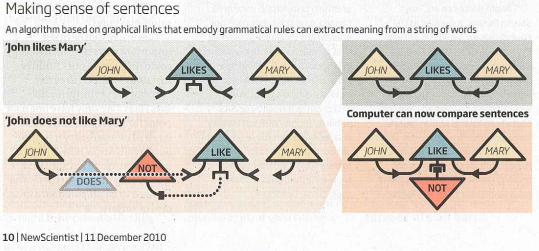

Some background. The prior blog post: "Everything is a Network", argues that all AGI and knowledge representation and reasoning systems can be understood in terms of jigsaw puzzle pieces. Thus, algorithms for working with the same become important.

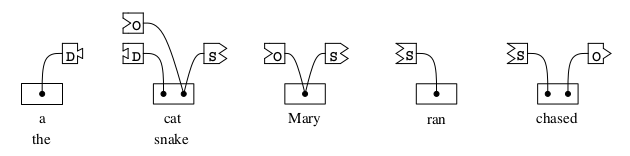

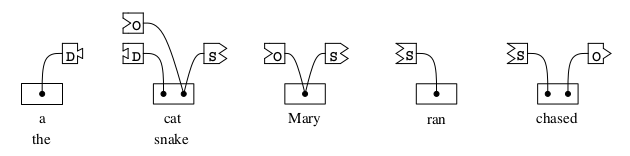

The jigsaw puzzle piece paradigm figures prominently in the theory of Link Grammar (LG). Each natural language (English, Russian, etc.) is represented in terms of a dictionary (lexis) of pieces, each with one word written on it. Parsing a sentence is then converted into the problem of finding appropriate pieces, and assembling them, without gaps or mismatches. The result is a dependency grammar parse (which can be converted to an head phrase structure grammar (HPSG) parse, if you wish.)

Curiously, while many systems distinguish strongly between parsing and generation, this is not the case for the jigsaw assembly paradigm. So, for example, given a fixed sentence, say, "This is a test", one first finds all jigsaw pieces (aka disjuncts) for each word, and then selects among them to find which ones fit. For example, the parse

+----->WV----->+---Os---+

+-->Wd---+-Ss--+ +--Ds-+

| | | | |

LEFT-WALL this.p is.v a test.n

is assembled from five pieces:

LEFT-WALL: Wd+ & WV+;

this: Wd- & Ss+;

is: Ss- & WV-;

a: Ds+;

test: Ds- & Os-;

The plus and minus signs represent the linkage direction (think tab and slot, in a puzzle-piece), while the letters represent the link type (they represent different shapes for the connectors; they are type-theoretical types.) Snap them all together, and bingo! A parse! Some more examples:

Now, lets consider natural language generation. In this case, one needs to merely replace specific locations in a sentence with wild-cards. Rather than working with only those puzzle pieces corresponding to a particular word at that location, one can work with any puzzle pieces that might possible fit in that location. As long as the pieces snap together, one gets a grammatically valid sentence. To find out what sentence one got, one need only read the word off of the piece that was selected to fit. Viola! Parsing and generation are the same thing in the puzzle-piece paradigm!

This is easy to say, and only a little harder to do. However, if one really wants to generate any sentence, one promptly runs into a commonplace issue: combinatorial explosion. The number of possible choices is vast: far too vast to be usable in most practical applications. And so, with that, we've laid the groundwork for this blog post. Sorry for the long detour.

The "wave function collapse algo", which I would rather call the "entropy collapse algo", might work like so. It starts with Kleineberg's core idea, and limits it to a one-dimensional grid -- a sentence, with grid locations for each word. Part of the generation task is to say "I want a sentence with words A,B,C in it, and I want that sentence to be no more than 20 words long".

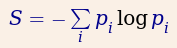

Given a partially generated sentence string, it selects the next word location to fill in. The magic of the algo is in selecting this slot. It computes the entropy for each slot, then picks the one with the lowest entropy. Explicitly, this is

S = - sum_i p_i log p_i

where p_i is the probability of word-disjunct-pair i and -log p_i is the LG cost. In LG, a "word-disjunct pair" is a "jigsaw puzzle piece". The "cost" is something that LG uses to assign priority by mimicking entropy. The sum_i is a sum over all word-disjunct pairs that meet the current boundary condition, i.e. can connect to the neighboring connectors.

Given all possible word locations in the sentence, the algo selects one location, by being greedy: it picks the one with the smallest entropy. Having selected a location to fill in, the "entropy collapse algo" then selects something (some word) for that slot, and then repeats.

I like this algo because it explicitly avoids a combinatoric explosion, and it also explicitly selects the lowest-cost sentences from the get-go. It can generate more than one sentence, by back-tracking, and doing so will generating sentences in cost-increasing order, more or less. This makes it very nice for practical applications.

This sounds like a great way to generate sentences, until you start thinking about how to write the code, at which point, things get a little dicey. In LG, the constraints are non-local, unlike blocks-world, where all constraints are nearest-neighbor constraints. Also, unlike blocks-world, selecting a word can result in the creation of new spaces ("dark energy" for physics aficionados) -- for example, given the partial sentence

+-->Wd--+--S--

| |

LEFT-WALL this

it can be extended as

+-->Wd--+--S--+--O--

| | |

LEFT-WALL this is

or as

+-->Wd--+--S--------+--O--

| | +--Em--+

| | | |

LEFT-WALL this * is

where the star is a new empty slot, which can be filled in with some emphasizing adverb: e.g. "this really is ..." or "this surely is ..." -- so unlike blocks-world, the size of the LG universe can grow.

There are some other subtle points. Suppose I want to generate sentences with specific words in them, but I don't know the word order, yet. The words might be "Ben" "eats" and "pizza" but of course, one could generate: "the pizza was eaten by Ben" or "we saw Ben eating a pizza" or "he was eating a pizza, when we last saw Ben". Worse: we need lexical functions to provide the alternatives eats-eating-eaten. So there's lots of interesting stuff to explore.

None-the-less, the core idea, of assembling the jigsaw-puzzle pieces in such a way that one is working with the minimum-entropy locations first, is a fantastic idea. (Painfully obvious, in retrospect: when one assembles a real-life jigsaw puzzle, one does exactly this: one starts with edges, cause there aren't very many of them. Then one organizes by color, and does the ones with the smallest number of choices, first. And so on. In physics, there is a related idea, called "diffusion limited aggregation".)