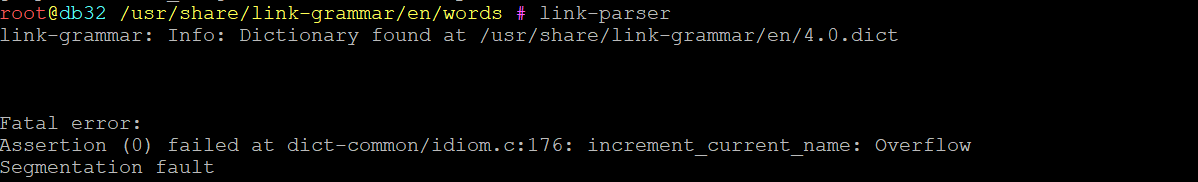

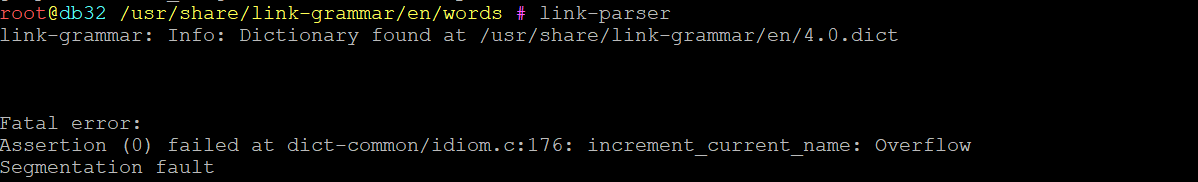

Loading big dictionary => crash.

Pierre

Apr 18, 2021, 11:33:26 AM

to link-grammar

Hello

Loading a homemade dictionary overflows once it has more than a few hundred thousand entries.

I need 150,000,000 entries.

My server has 250 GB of RAM, and link-grammar was using only 30 GB when it crashed.

Any chance of getting that fixed?

Linas Vepstas

Apr 18, 2021, 12:58:52 PM

to link-grammar

Fascinating!

I can't imagine what you are doing!

Best bet is to open a bug report on GitHub; we can have detailed discussions there. Fixing it needs an example dictionary that triggers the problem.

Those sizes are ... tough. I think it would almost surely be better to use the sqlite3 storage format for the dictionary rather than the ASCII file. This is because the ASCII file forces *all* of the dictionary to be loaded into RAM. The sqlite3 format loads only the part that is needed for the current parse (and then clears RAM after it's done), so it's much more scalable.

Thinking about it now, it's clear that your graphs will never fit into RAM (or, at best, just barely fit) if you use the plain-file format.
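To make the memory argument concrete, here is a minimal Python sketch of on-demand lookup from an SQLite file. It is only an illustration of the idea, not link-grammar's actual code: the table `entries(word, expression)`, the sample rows, and the helper names are all hypothetical, and the real sqlite3 dictionary schema may look different.

```python
import sqlite3


def build_demo_db(path=":memory:"):
    """Create a tiny stand-in dictionary (hypothetical schema, not link-grammar's)."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE entries (word TEXT NOT NULL, expression TEXT NOT NULL)"
    )
    # The index is what keeps a single-word lookup cheap on a huge table.
    conn.execute("CREATE INDEX idx_entries_word ON entries(word)")
    conn.executemany(
        "INSERT INTO entries (word, expression) VALUES (?, ?)",
        [
            ("the", "D+"),
            ("cat", "D- & S+"),
            ("sleeps", "S-"),
        ],
    )
    conn.commit()
    return conn


def lookup(conn, word):
    """Fetch only the rows for one word; the rest of the table stays on disk."""
    rows = conn.execute(
        "SELECT expression FROM entries WHERE word = ?", (word,)
    )
    return [row[0] for row in rows]


def expressions_for_sentence(conn, sentence):
    """Load just the entries needed for the words in the current sentence."""
    return {word: lookup(conn, word) for word in sentence.split()}
```

With a layout like this, memory use follows the number of words in the sentence being parsed rather than the total number of dictionary entries, which is the property that matters at 150,000,000 entries.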

--linas

--

Patrick: Are they laughing at us?

Sponge Bob: No, Patrick, they are laughing next to us.
