Darren Wallace

Apr 17, 2014, 8:35:12 AM

to learnin...@googlegroups.com

Hi all,

I'm trying to get my head around how we may go about reporting at scale, thinking about both the MongoDB layer and creating some custom Backbone views. To do that I need a db containing a serious number of statements. I'd prefer to use representative data rather than spamming the API with any old tosh.

Is there a sample db I could use? Can anyone recommend a method of creating a record store of sufficient scale to properly get to grips with reporting? What approaches are the rest of you guys taking?

Thanks in advance for any advice or suggestions

Russell Duhon

Apr 17, 2014, 12:36:58 PM

to Darren Wallace, learnin...@googlegroups.com

Hi Darren,

For the foreseeable future you're unlikely to see highly heterogeneous data at scale, so I'd come up with a basic pattern representative of some typical interaction you're likely to see (in many deployments, that's still data from rapid-authored packages) and then replicate that heavily for a lot of different actors in a number of different permutations. I'd also toss in a much smaller number of significantly different statements for overlapping actors, so that you're ready to deal with that when reporting.

How much scale you want to prepare for depends on who's involved, but a company with many employees, or one with a lot of training per person, can easily hit millions of statements per month.
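A minimal Python sketch of the approach described above: one basic course pattern replicated across many actors in different permutations, plus a small slice of deliberately different statements for some of the same actors. The verb sequence, the `example.com` actor and activity IDs, the 90-day window and the 5% outlier rate are all illustrative assumptions, not anything taken from a real deployment.

```python
import random
import uuid
from datetime import datetime, timedelta, timezone

VERB_BASE = "http://adlnet.gov/expapi/verbs/"
# Assumed verb sequence for a typical rapid-authored course (illustrative only).
COURSE_PATTERN = ["launched", "attempted", "answered", "completed"]
# Assumed verbs for the small, deliberately different slice of data.
OUTLIER_VERBS = ["experienced", "shared", "commented"]


def make_statement(rng, actor_no, verb, activity, when):
    """Build one xAPI-shaped statement dict for a hypothetical actor/activity."""
    return {
        "id": str(uuid.UUID(int=rng.getrandbits(128), version=4)),
        "actor": {
            "name": f"learner{actor_no}",
            "mbox": f"mailto:learner{actor_no}@example.com",
        },
        "verb": {"id": VERB_BASE + verb, "display": {"en-US": verb}},
        "object": {
            "objectType": "Activity",
            "id": f"http://example.com/activities/{activity}",
        },
        "timestamp": when.isoformat(),
    }


def generate(n_actors, n_courses, outlier_fraction=0.05, seed=0):
    """Replicate the course pattern per actor, then add a few outliers."""
    rng = random.Random(seed)  # seeded so a data set can be regenerated
    start = datetime(2014, 1, 1, tzinfo=timezone.utc)
    statements = []
    for actor_no in range(n_actors):
        # Each actor takes a random subset of the courses, in random order.
        taken = rng.sample(range(n_courses), rng.randint(1, n_courses))
        for course in taken:
            when = start + timedelta(minutes=rng.randrange(60 * 24 * 90))
            # Some actors drop out partway through the pattern.
            steps = COURSE_PATTERN[: rng.randint(1, len(COURSE_PATTERN))]
            for verb in steps:
                statements.append(
                    make_statement(rng, actor_no, verb, f"course-{course}", when)
                )
                when += timedelta(minutes=rng.randint(1, 20))
        # A small share of the same actors also produce very different data.
        if rng.random() < outlier_fraction:
            when = start + timedelta(minutes=rng.randrange(60 * 24 * 90))
            for _ in range(rng.randint(1, 3)):
                verb = rng.choice(OUTLIER_VERBS)
                statements.append(
                    make_statement(rng, actor_no, verb, f"forum-thread-{actor_no}", when)
                )
                when += timedelta(minutes=rng.randint(1, 60))
    return statements
```

Calling `generate(10000, 5)` would give a few tens of thousands of statements; scaling the actor count is the simplest way to push towards the volumes mentioned above.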

Russell Duhon

CTO, Saltbox

Makers of WaxLRS

mrdownes

Apr 17, 2014, 12:52:37 PM

to Darren Wallace, learnin...@googlegroups.com

This might still count as "any old tosh" but you could write a simple app that gets statements from the public LRS at tincanapi.com and then fires them directly at learning locker.

Actually, that could be an interesting tool for performance testing.
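A rough Python sketch of such a replay tool, under stated assumptions. It relies on the xAPI statements resource returning a JSON object with a `statements` list and a `more` link for the next page. The xAPI version header value, the decision to drop `stored` and `authority` before re-posting, and the helper names are all assumptions made for illustration. The copy loop takes its fetch and post functions as arguments so it can be tried without touching a real LRS.

```python
import base64
import json
import urllib.request
from urllib.parse import urljoin

XAPI_VERSION = "1.0.1"  # assumption: adjust to what both LRSs accept


def auth_headers(user, password):
    """HTTP Basic auth plus the xAPI version header."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return {
        "Authorization": f"Basic {token}",
        "X-Experience-API-Version": XAPI_VERSION,
        "Content-Type": "application/json",
    }


def http_get_json(url, headers):
    """Fetch one page of statements from the source LRS."""
    req = urllib.request.Request(url, headers=headers)
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def http_post_json(url, headers, payload):
    """Post a batch of statements to the target LRS."""
    data = json.dumps(payload).encode()
    req = urllib.request.Request(url, data=data, headers=headers, method="POST")
    with urllib.request.urlopen(req) as resp:
        return resp.status


def replay(source_url, get_page, post_batch, max_statements=1000):
    """Copy up to max_statements from source to target, following `more`."""
    copied = 0
    url = source_url
    while url and copied < max_statements:
        page = get_page(url)
        # Assumption: let the target LRS set its own stored/authority values.
        batch = [
            {k: v for k, v in s.items() if k not in ("stored", "authority")}
            for s in page.get("statements", [])
        ]
        batch = batch[: max_statements - copied]
        if batch:
            post_batch(batch)
            copied += len(batch)
        more = page.get("more")
        # `more` may be a relative link, so resolve it against the current URL.
        url = urljoin(url, more) if more else None
    return copied
```

To wire it up against real endpoints you would pass something like `lambda u: http_get_json(u, auth_headers(src_user, src_pass))` and `lambda b: http_post_json(target_url, auth_headers(dst_user, dst_pass), b)`, where the URLs and credentials are whatever your two LRSs use. Timing the `post_batch` calls would be one way to turn this into the performance test suggested above.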

Andrew

Darren Wallace

Apr 23, 2014, 6:23:38 AM

to learnin...@googlegroups.com, Darren Wallace

Thanks both. I suppose that's one of the questions I have, Russell: how heterogeneous, or varied, is xAPI data in "real world" applications, or at least in the implementations you've seen? Our first task will be to transition from recording semi-formal, SCORM-type course interactions to including some more fluid interactions. For us at least, there's the need to provide some continuity and parity with our current SCO reporting, which is why the TinCanApi.com stuff seemed a little too scattered for our purposes.

I have thought about mining the TinCanApi.com statements, Andrew. I suppose I'm just unsure about how representative they really are. Maybe I can filter for a specific subset of the data. I think I need to take another look at that data set.

Thanks for the suggestions. Any more ideas gratefully received, and I'll keep you posted if I make any progress.

Ben Betts

Apr 24, 2014, 3:12:48 AM

to Darren Wallace

In our experience thus far, Darren, we've seen a very wide variation in the quality and normalisation of data. The spec is really open, which is great, but also provides a whole range of challenges. Perhaps check out the CMI-5 schema in development for a look at a group tackling this issue from a formal e-learning point of view. Personally I think we're going to have to publish our schemas and adopt other industry standards along the way to help normalise data between distributed applications. As it is now, it gets messy when you cease to control each activity provider.

Ben

Darren Wallace

Jul 8, 2014, 7:14:50 AM

to learnin...@googlegroups.com, darren....@team.cdsm.co.uk

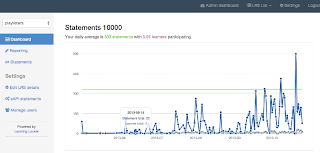

So I've made some progress here. I've taken a sample set of 10,000 learning events recorded in our learning platform, associated each event with a random persona generated from http://randomuser.me and run a script to push each event as a statement to LL. So I now have a nice fat data set to start reporting on. Sweet.

My problem is that the statements are all shown as happening at the same time, making the dashboard and report views really boring. I included a variable timestamp parameter with each statement, which has been stored, but it's clearly not the field that's used to generate the reports.

So my question is - which date field on the statement is being used to generate the dashboard and report views? Also, could you point me at the code for these views please? I'm going to try some jiggery pokery in the db to get some more interesting dashboard views.

Here's a sample statement. You can see the candidate date fields are statement.timestamp, statement.stored or the updated_at and created_at fields.

    {
        _id: ObjectId("53bbbcc12956b0828d006aec"),
        lrs: { _id: "53bbb94e2956b0828d006aeb", name: "mls_xapi_fiddle" },
        statement: {
            timestamp: "2013-05-02T00:59:50.737Z",
            actor: { name: "tinybird585", mbox: "mailto:yolanda...@example.com" },
            object: { objectType: "Activity" },
            verb: { },
            result: { score: "" },
            authority: { name: "", mbox: "mailto:bl...@blah.co.uk", objectType: "Agent" },
            id: "050e0c4e-364d-4331-88bf-6929c00b5a2f",
            stored: "2014-07-08T09:41:21.552500+00:00"
        },
        updated_at: ISODate("2014-07-08T09:41:21.553Z"),
        created_at: ISODate("2014-07-08T09:41:21.553Z")
    }
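To illustrate why the choice of date field matters for the graphs, here is a small Python sketch that buckets statements per day by each candidate field. Only the first document's dates come from the sample above; the other two are made up for illustration, and this is not Learning Locker's actual reporting code.

```python
from collections import Counter
from datetime import datetime


def parse_iso(value):
    """Parse an ISO 8601 string; a trailing 'Z' is rewritten as '+00:00'."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def per_day(docs, field):
    """Count statements per calendar day using statement[field]."""
    return Counter(
        parse_iso(doc["statement"][field]).date().isoformat() for doc in docs
    )


docs = [
    # Dates taken from the sample statement above.
    {"statement": {"timestamp": "2013-05-02T00:59:50.737Z",
                   "stored": "2014-07-08T09:41:21.552500+00:00"}},
    # Hypothetical documents from the same bulk import.
    {"statement": {"timestamp": "2013-05-03T10:15:00.000Z",
                   "stored": "2014-07-08T09:41:22.100000+00:00"}},
    {"statement": {"timestamp": "2013-06-11T16:40:12.250Z",
                   "stored": "2014-07-08T09:41:23.900000+00:00"}},
]

by_stored = per_day(docs, "stored")        # everything lands on the import day
by_timestamp = per_day(docs, "timestamp")  # spread over the original event days
```

After a bulk import, any view keyed on `stored`, `created_at` or `updated_at` collapses into a single spike, while one keyed on `timestamp` keeps the original spread of events.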

dave...@ht2.co.uk

Jul 9, 2014, 11:24:05 AM

to learnin...@googlegroups.com, darren....@team.cdsm.co.uk

The latest code (in master) now uses timestamp to generate graphs. After upgrading, you will need to run the custom artisan command "php artisan timestamp:convert". I would back up your data before doing this.

Cheers.

Darren Wallace

Jul 10, 2014, 10:10:21 AM

to learnin...@googlegroups.com, darren....@team.cdsm.co.uk, dave...@ht2.co.uk

That's awesome. I'll give it a go.

Darren Wallace

Jul 10, 2014, 11:43:35 AM

to learnin...@googlegroups.com, darren....@team.cdsm.co.uk, dave...@ht2.co.uk

David Tosh

Jul 10, 2014, 12:57:09 PM

to learnin...@googlegroups.com, darren....@team.cdsm.co.uk, dave...@ht2.co.uk

No problem, glad it worked. If you find any issues or bugs, the best place to report them is our issue tracker on GitHub: https://github.com/LearningLocker/learninglocker/issues?state=open

Cheers.