Lasinfo ERROR: 'end-of-file' after 165191041 of 194351196 points

BMK

Feb 14, 2023, 5:36:48 AM

to LAStools - efficient tools for LiDAR processing

Hello all!

I'm writing a Python script that works with point clouds. Currently, I'm trying to load a 4 GB point cloud file in Python; however, laspy refuses to import it, stating:

ValueError: buffer size must be a multiple of element size

Closer investigation of this error suggests that something is amiss with the file's header. This is where LAStools comes in: running "lasinfo -i my_las_file.las" yields:

ERROR: 'end-of-file' after 165191041 of 194351196 points for 'my_las_file.las'

WARNING: real number of point records (165191041) is different from header entry (194351196).

WARNING: for return 1 real number of points by return (165191041) is different from header entry (194351196).

WARNING: for return 1 real number of points by return (165191041) is different from header entry (194351196).

My conclusion: the header states that there are more points in the file than the tool is able to read. So I ran "lasinfo -i my_las_file.las -repair" to fix it. This was successful, and the file now loads correctly in laspy.
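The mismatch lasinfo reports can be reproduced with only the standard library: read the point count from the header and compare it with how many whole point records the file size can actually hold. This is a minimal sketch, assuming the LAS 1.0-1.3 public header layout (offset to point data at byte 96, point record length at byte 105, legacy point count at byte 107) and no data stored after the point records; the names `check_point_count` and `check_las_file` are hypothetical. If the trailing bytes do not form a whole record, a reader that hands the remaining bytes to NumPy could plausibly raise the "buffer size must be a multiple of element size" error quoted above, but that is a guess the thread does not confirm.

```python
import os
import struct
from typing import NamedTuple


class PointCountCheck(NamedTuple):
    header_count: int      # legacy "number of point records" field in the header
    complete_records: int  # whole point records that actually fit in the file
    leftover_bytes: int    # trailing bytes too short to form one more record


def check_point_count(header: bytes, file_size: int) -> PointCountCheck:
    """Compare the header's point count with what the file size can hold.

    Assumes the LAS 1.0-1.3 public header layout and no data stored after
    the point records (e.g. no extended VLRs or waveform data).
    """
    if header[:4] != b"LASF":
        raise ValueError("missing 'LASF' file signature")
    (offset_to_points,) = struct.unpack_from("<I", header, 96)
    (record_length,) = struct.unpack_from("<H", header, 105)
    (header_count,) = struct.unpack_from("<I", header, 107)
    complete, leftover = divmod(file_size - offset_to_points, record_length)
    return PointCountCheck(header_count, complete, leftover)


def check_las_file(path: str) -> PointCountCheck:
    """Read the first 227 header bytes of `path` and run check_point_count."""
    with open(path, "rb") as f:
        header = f.read(227)
    return check_point_count(header, os.path.getsize(path))
```

Calling `check_las_file("my_las_file.las")` on a truncated file would show `complete_records` smaller than `header_count`; a header-only repair such as `-repair` makes the two agree by lowering the header count, not by restoring the missing records.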

This all sounds good, but here is the strange thing: I have included two attachments showing the 'unrepaired' and the 'repaired' point cloud as displayed in CloudCompare.

But if all points between 165191041 and 194351196 don't exist, why am I able to load and visualize them properly in CloudCompare without any issues whatsoever?

Both laspy and LAStools indicate that approximately 30,000,000 of the points are corrupt, unreadable or missing, but CloudCompare is able to find and extract these 30,000,000 records perfectly, with finely detailed information on them. So surely they must exist somewhere in the file?

While I am able to load the repaired point cloud file in Python, these missing squares of points cause large inaccuracies in my algorithm. I need every point to be there. Does anyone know how I can solve this?

Jorge Delgado García

Feb 14, 2023, 6:03:35 AM

to last...@googlegroups.com

Could you include the LAZ files? Have you tried optimising your files using LASoptimize?

Jorge

--

Download LAStools at

https://rapidlasso.de

Manage your settings at

https://groups.google.com/g/lastools/membership

---

You received this message because you are subscribed to the Google Groups "LAStools - efficient tools for LiDAR processing" group.

To unsubscribe from this group and stop receiving emails from it, send an email to lastools+u...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/lastools/ece43f5f-4c66-4661-b83a-9ddc68ee2124n%40googlegroups.com.

Michał Kuś

Feb 14, 2023, 6:09:25 AM

to last...@googlegroups.com

Hi,

With a file this big, I would be concerned about memory, especially since LAStools are mainly 32-bit applications.

Some Python interpreters are also still 32-bit.

This 'end-of-file' might actually refer to the end of a memory buffer.
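Whether the Python side is 32-bit is easy to check: `struct.calcsize("P")` is the size of a C pointer in bytes, so it is 4 on a 32-bit build and 8 on a 64-bit build. A minimal sketch (the function name `interpreter_bits` is hypothetical):

```python
import struct
import sys


def interpreter_bits() -> int:
    """Return 32 or 64 depending on how this Python interpreter was built."""
    # "P" is a C void pointer: 4 bytes on 32-bit builds, 8 bytes on 64-bit builds.
    return struct.calcsize("P") * 8


if __name__ == "__main__":
    bits = interpreter_bits()
    print(f"Python {sys.version.split()[0]} is a {bits}-bit build")
    if bits == 32:
        print("A 32-bit process has at most 4 GiB of address space (often less), "
              "so a multi-GB point cloud cannot be held in memory in one piece.")
```

`sys.maxsize > 2**32` gives the same answer and is a common one-line alternative.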

My regards

Michał Kuś

BMK

Feb 14, 2023, 6:58:31 AM

to LAStools - efficient tools for LiDAR processing

The .las file that I'm using can be downloaded here: https://mega.nz/file/kFMRDaBT#5AliGHvJO8a_Y0zboK563muM5NCEf4cKG_5B0KmTToY

Optimization using "lasoptimize -i my_las_file.las -cpu64" yields a very similar error:

ERROR: 'end-of-file' after 165191041 of 194351196 points for 'my_las_file.las'

WARNING: number of points 165191041 in file does not match counter 194351196 in LAS header

WARNING: written 165191041 points but expected 194351196 points

WARNING: written 165191041 points but expected 194351196 points

It does produce an output file, which shows the exact same gaps in the point cloud as the repaired file.

All things considered, I'm starting to think that a memory issue is indeed fairly likely, and the future files I intend to work with are much bigger than 4 GB, so that's definitely something I'll want to tackle either way.

Is there any workaround for this? Ideally I'd like the process to be entirely automated from start to finish, so maybe I need to look into a method for loading the point cloud section by section, or something of the sort?
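laspy 2.x already supports section-by-section reading: `laspy.open(path)` returns a reader whose `chunk_iterator(n)` yields the points n at a time, so only one chunk is in memory at once. To show what such a reader does underneath, here is a standard-library-only sketch, assuming the LAS 1.0-1.3 header layout (scale factors at byte 131, offsets at byte 155) and nothing stored after the point records; `read_header`, `decode_xyz` and `iter_xyz_chunks` are hypothetical names. It decodes only X, Y and Z, and it stops at the last complete record, so on a truncated file it returns whatever is present; it cannot bring back points that are not in the file.

```python
import struct
from typing import BinaryIO, Iterator, List, Sequence, Tuple

# In every LAS point format, the first 12 bytes of a record are X, Y, Z
# as little-endian signed 32-bit integers.
XYZ = struct.Struct("<iii")
Point = Tuple[float, float, float]


def read_header(f: BinaryIO) -> Tuple[int, int, Tuple[float, ...], Tuple[float, ...]]:
    """Return (offset_to_points, record_length, scale, offset); LAS 1.0-1.3 layout."""
    f.seek(0)
    header = f.read(227)
    (offset_to_points,) = struct.unpack_from("<I", header, 96)
    (record_length,) = struct.unpack_from("<H", header, 105)
    scale = struct.unpack_from("<3d", header, 131)
    offset = struct.unpack_from("<3d", header, 155)
    return offset_to_points, record_length, scale, offset


def decode_xyz(data: bytes, record_length: int,
               scale: Sequence[float], offset: Sequence[float]) -> List[Point]:
    """Turn every complete record in `data` into real-world (x, y, z)."""
    points = []
    for start in range(0, len(data) - record_length + 1, record_length):
        ix, iy, iz = XYZ.unpack_from(data, start)
        points.append((ix * scale[0] + offset[0],
                       iy * scale[1] + offset[1],
                       iz * scale[2] + offset[2]))
    return points


def iter_xyz_chunks(f: BinaryIO, points_per_chunk: int = 1_000_000) -> Iterator[List[Point]]:
    """Yield the file's points in lists of at most `points_per_chunk`."""
    offset_to_points, record_length, scale, offset = read_header(f)
    f.seek(offset_to_points)
    while True:
        data = f.read(points_per_chunk * record_length)
        if len(data) < record_length:
            return  # end of file, or only a partial (truncated) record left
        yield decode_xyz(data, record_length, scale, offset)
```

To use it, open the file in binary mode (`open("my_las_file.las", "rb")`) and loop over `iter_xyz_chunks(f)`, processing each list of up to one million points before asking for the next. Pure-Python decoding is slow for hundreds of millions of points, so in practice laspy's chunked reader (which decodes with NumPy) is the more realistic choice.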

On Tuesday, 14 February 2023 at 12:09:25 UTC+1, Michał Kuś wrote:

Stephen Ferrell

Feb 14, 2023, 10:20:39 AM

to last...@googlegroups.com

Correct me if I'm wrong, but you should be using lasoptimize64.exe for files that are 4GB and larger.

-- The quality of your thoughts will determine the quality of your life.

-- Never trust the wisdom of someone who pretends for a living.

Jorge Delgado García

Feb 14, 2023, 3:10:25 PM

to last...@googlegroups.com

Thank you very much for sending the file; the level of detail is truly incredible.

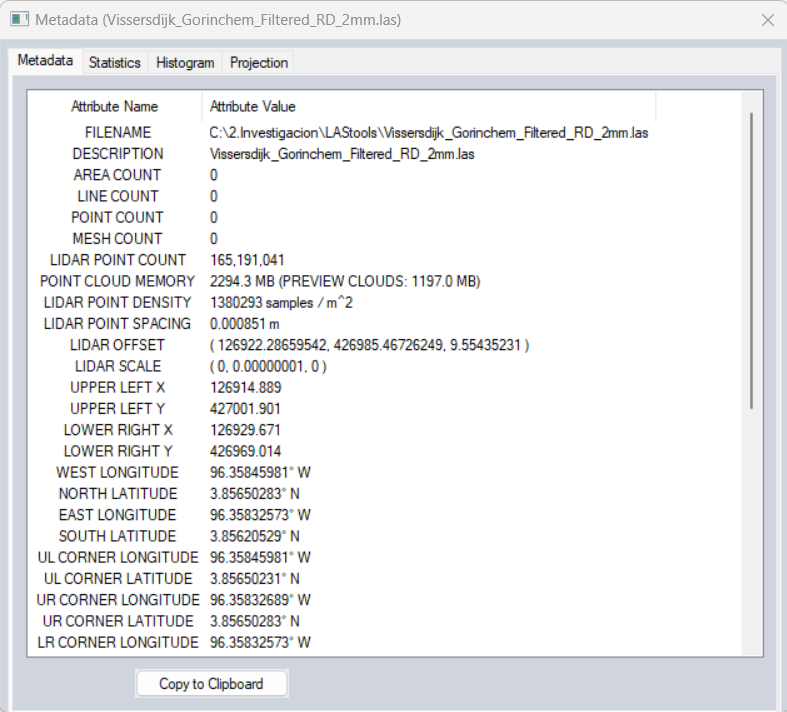

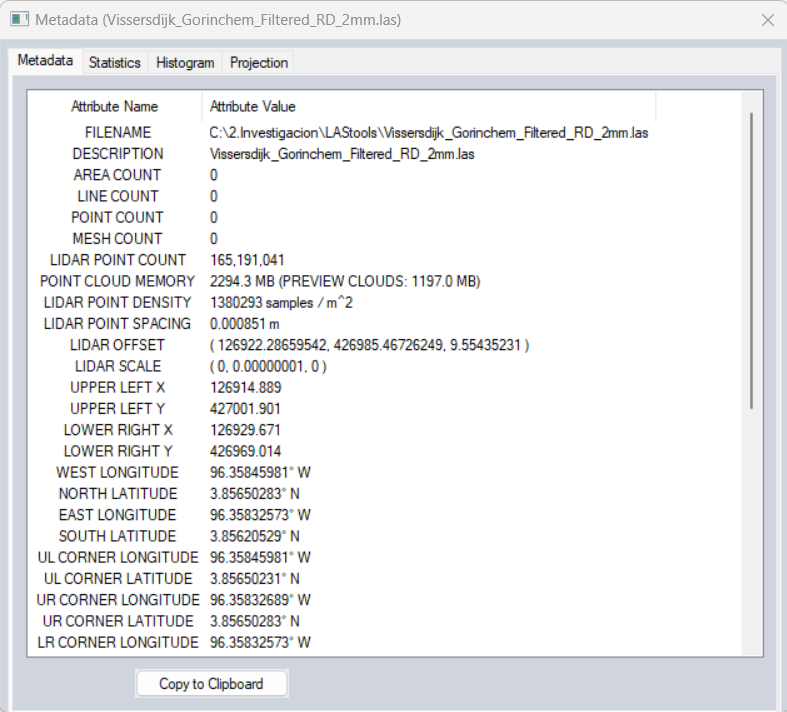

I have been analyzing the file, and it is actually not a problem with LAStools but with the file itself. The LAS format is very robust: its header records the number of points that should appear in the file (194351196), and that number does not match the number you actually have (165191041). LAStools is also very robust and warns you about this problem; I got the same error when trying to open the file in other programs (CloudCompare v2.13 and Global Mapper). I also tried exporting the file to TXT to see whether it was a write problem, but the points indeed do not seem to exist. In addition, if you use LASinfo you can see that the xmin, ymin, zmin, xmax, ymax and zmax values don't look very logical either.

I think the only solution is to have the export redone in Reality Capture and to verify that the file is correct (e.g. with LASinfo). Beyond that, I have two additional recommendations: first, use LASoptimize (in response to another comment: with the -cpu64 option you are actually running the 64-bit version) to adjust the scales, offsets, etc.; and second, of course, store everything as LAZ to reduce storage.

Best regards, and if you have any further questions, feel free to raise them; it's an interesting topic. The LAS format is used more and more in all kinds of programs, but unfortunately many programs do not set the format parameters very well (although here I do not know what the problem might have been).

Jorge

Jochen Rapidlasso

Feb 14, 2023, 3:41:03 PM

to LAStools - efficient tools for LiDAR processing

Hi @all and thanks for the analysis.

I think you are right, Jorge: it is not a LAS problem.

To me it looks like a Windows file system problem:

You may be using FAT32 storage somewhere in your workflow. There, the file size is limited to 32 bits (4,294,967,295 bytes), which is exactly the size of your file.

Your header says the file contains 194,351,196 points with a point size of 26 bytes.

So the file size should be 5,053,131,096 bytes + header size.
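The arithmetic can be checked in a few lines. The 227-byte offset to the point data is an assumption (a plain LAS 1.2 header with no VLRs); the thread does not state it. Under that assumption, cutting the file at the FAT32 limit leaves exactly 165,191,041 complete 26-byte records plus 2 stray bytes, which matches the count lasinfo reported; in fact any offset from 204 to 229 bytes gives the same count.

```python
FAT32_MAX_FILE_SIZE = 2**32 - 1  # largest file FAT32 can store: 4,294,967,295 bytes

header_points = 194_351_196  # point count from the LAS header
record_length = 26           # bytes per point record, from the LAS header
offset_to_points = 227       # ASSUMPTION: plain LAS 1.2 header, no VLRs

# Size the file should have if every point in the header were present.
expected_size = offset_to_points + header_points * record_length

# How many whole records survive if the file is cut at the FAT32 limit.
points_that_fit, leftover_bytes = divmod(FAT32_MAX_FILE_SIZE - offset_to_points,
                                         record_length)

print(f"expected file size: {expected_size:,} bytes")
print(f"complete points below the FAT32 limit: {points_that_fit:,} (+{leftover_bytes} bytes)")
print(f"points lost: {header_points - points_that_fit:,}")
```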

I think your file ends just at point 165,191,041, and it will be hard for any serious piece of software to read beyond that :)

Best regards,

Jochen @rapidlasso

Jochen Rapidlasso

Feb 14, 2023, 4:00:59 PM

to LAStools - efficient tools for LiDAR processing

Another interesting point about this file:

I was wondering about these weird scale factors and the max(i32) values in the data range.

I expected a possible range violation on the values, because the min/max integer values were at the absolute limits of the 32-bit signed integer range.

scale factor x y z: 0.000000003444887 0.000000007661791 0.00000000384642

offset x y z: 126922.28659541991 426985.46726249403 9.554352312348783

min x y z: 126914.88875778105 426969.01369117445 1.29422929789871

max x y z: 126929.68443305876 427001.92083381361 17.814475326798856

reporting minimum and maximum for all LAS point record entries ...

ERROR: 'end-of-file' after 165191041 of 194351196 points for 'Vissersdijk_Gorinchem_Filtered_RD_2mm.las'

X -2147482401 2143696231

Y -2147483647 2144940197

Z -2147483213 2147483647

A closer look shows that the generating software simply extends the integer data range to the maximum and calculates the scale for every dimension in reverse.

So you get maximum resolution, but with a maximally blown-up dataset.

Compressing this file to LAZ gives a compression factor of only around 2.
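Working backwards from the header values above: each offset appears to be the midpoint of that axis's min and max, and each scale factor is approximately the axis range divided by 2^32, i.e. the range is stretched over every available 32-bit integer step. The sketch below reproduces that; the midpoint/2^32 rule is inferred from the printed numbers, not documented by the generating software, and `spread_over_int32` and `steps_needed` are hypothetical names. For comparison, it also counts how many integer steps a conventional decimal scale of 0.001 (1 mm) would need: only tens of thousands per axis here, far inside the 32-bit range.

```python
import math

# Min/max per axis, copied from the lasinfo output above.
BOUNDS = {
    "x": (126914.88875778105, 126929.68443305876),
    "y": (426969.01369117445, 427001.92083381361),
    "z": (1.29422929789871, 17.814475326798856),
}


def spread_over_int32(vmin: float, vmax: float):
    """Scale/offset that stretch [vmin, vmax] across the whole 32-bit integer range.

    The offset is the midpoint; the scale is the range divided by 2**32 steps.
    """
    return (vmax - vmin) / 2**32, (vmin + vmax) / 2


def steps_needed(vmin: float, vmax: float, scale: float) -> int:
    """Number of integer steps needed to cover [vmin, vmax] at a fixed scale."""
    return math.ceil((vmax - vmin) / scale)


for axis, (lo, hi) in BOUNDS.items():
    scale, offset = spread_over_int32(lo, hi)
    print(f"{axis}: implied scale {scale:.9e}, offset {offset:.8f}, "
          f"steps at 0.001: {steps_needed(lo, hi, 0.001):,}")
```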

Jochen @rapidlasso

Jorge Delgado García

Feb 14, 2023, 4:09:35 PM

to last...@googlegroups.com

That's what I meant by problems with the LAS parameter settings in certain programs. I encounter this very often, and LASinfo and LASoptimize are two great allies for dealing with it.

The scale factors applied are also strange, although logically they could follow from the min/max XYZ values that were chosen.

Jorge

BMK

Feb 16, 2023, 9:11:47 AM

to LAStools - efficient tools for LiDAR processing

Thank you all very much for your replies!

Firstly, to answer some questions on the coordinates: The x, y and z values are based on the Dutch national coordinate system, GPS-tagged true to reality.

Sadly, I am not entirely familiar with how the cloud or its parameters were generated, as this was done externally by another company. A lot of details regarding point cloud generation are still new to me. These are very good tips, though: I'll look into reformatting and/or compressing the file before I continue with it!

Just a quick additional question, if anyone is willing: if the scale factors and data ranges of this file stand out as strange, what ranges do they normally lie in (more or less)? I'm asking so that in the future I might be able to identify unusual values myself, should something like this come up again.

Regarding the issue itself: using your insights, I did some more digging. I checked the device I currently use to work on this point cloud and found that it uses NTFS storage, so that couldn't be the problem. However, after asking around, it turns out there was an older device on which the point cloud was briefly stored. We no longer have access to this device, so I cannot check its storage type, but it is very likely that it used a FAT32 file system.

I have e-mailed the company that created the point cloud for us and asked them to send it to us again. Some quick checks on this 'new' file using both cloudComPy and laspy immediately confirmed that it has 194,351,196 points, and its size on disk is 5 GB. Loading and manipulating the point cloud in LAStools and Python works flawlessly. Whether it truly was the old computer or another process that cut the file off at the 32-bit limit, I cannot know for certain, but as it turns out, this was indeed the source of the problem.

Truth be told, I wasn't even familiar with the FAT32 file system, and would never have found this out by myself. Though it caused quite a headache, I'm glad I ran into this problem, because this knowledge will surely remain useful in the future. Thank you again for your help!

On Tuesday, 14 February 2023 at 22:09:35 UTC+1, Jorge Delgado García wrote:
