"Gogo protobuf looking for new ownership"

Antoine Pelisse

May 20, 2020, 11:35:30 AM

to K8s API Machinery SIG, Clayton Coleman

https://github.com/gogo/protobuf/issues/691

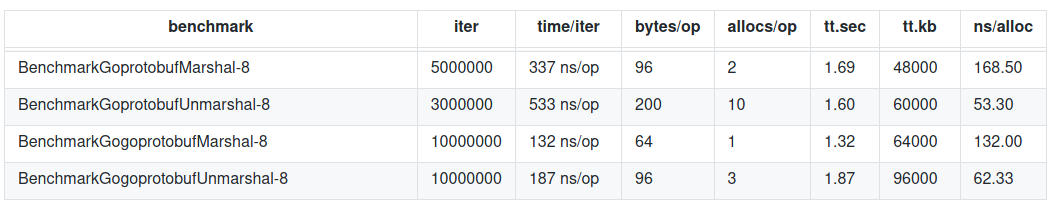

It looks like the life of gogo/protobuf is endangered and we heavily depend on it, without knowing if the golang/protobuf alternative is good enough. Last time I micro-benchmarked the two, there was a massive gap in favor of gogo/protobuf.

We need to be careful and run real-life benchmarks with golang/protobuf to see if this would cause performance degradation sooner rather than later. +Clayton Coleman do you know other reasons than performance why golang/protobuf wouldn't work? I remember that we have the encapsulation in "Unknown" that uses manually written serialization code, not sure if that'd work with golang/protobuf.

That "deprecation notice" has already had consequences: a dependency upgrade took more effort than we expected. https://github.com/kubernetes/kubernetes/pull/90582

I'm not sure if this is a topic we should bring to architecture since it seems broader than api-machinery.

Thanks,

Antoine

Clayton Coleman

May 20, 2020, 5:25:07 PM

to Antoine Pelisse, K8s API Machinery SIG

> https://github.com/gogo/protobuf/issues/691

> It looks like the life of gogo/protobuf is endangered and we heavily depend on it, without knowing if the golang/protobuf alternative is good enough. Last time I micro-benchmarked the two, there was a massive gap in favor of gogo/protobuf.

> We need to be careful and run real-life benchmarks with golang/protobuf to see if this would cause performance degradation sooner rather than later. +Clayton Coleman do you know other reasons than performance why golang/protobuf wouldn't work? I remember that we have the encapsulation in "Unknown" that uses manually written serialization code, not sure if that'd work with golang/protobuf.

I’m not sure if go proto can work with our innate types and preserve the same serialization. Maybe proto 3 (with default fields) would be sufficient for the bulk of cases, but more than a very few serialization exceptions would be super expensive.

This definitely needs time and attention. Fortunately we generate our proto, so we can explore other serialization.

Joe Tsai

May 20, 2020, 8:51:45 PM

to K8s API Machinery SIG

Hi Clayton,

My name is Joe and I'm one of the maintainers of the golang/protobuf implementation.

> Maybe proto 3 (with default fields) would be sufficient for the bulk of cases

Can you clarify what you mean by "proto3 with default fields"? Did you mean the ability to preserve presence for certain fields (e.g., control whether the field type is string versus *string)?

JT

Clayton Coleman

May 20, 2020, 9:04:15 PM

to Joe Tsai, K8s API Machinery SIG

Right - we picked gogo early on because we wanted to generate IDL from existing structs (to preserve behavior) and for performance on allocations (which pointers destroy). This was before proto3 support was finalized, so we did a proto schema and explicitly avoided pointer use except on a few fields (over time we’ve added more).
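A minimal sketch of the allocation concern, using only the Go standard library. The types and names here (`valueSpec`, `pointerSpec`, `fillValue`, `fillPointer`, `allocsPerFill`) are hypothetical and are not Kubernetes or generated protobuf types; `testing.AllocsPerRun` simply reports the average number of heap allocations per call.

```go
package main

import (
	"fmt"
	"testing"
)

// valueSpec uses value primitives, the style described above (hypothetical type).
type valueSpec struct {
	Replicas int32
	Name     string
}

// pointerSpec imitates generated code in which every scalar field is a
// pointer so that "unset" can be represented (hypothetical type).
type pointerSpec struct {
	Replicas *int32
	Name     *string
}

// Package-level sinks make the results escape, so the compiler cannot
// optimize the work away.
var (
	sinkValue   valueSpec
	sinkPointer pointerSpec
)

func fillValue() {
	sinkValue = valueSpec{Replicas: 3, Name: "web"}
}

func fillPointer() {
	replicas := int32(3)
	name := "web"
	// Each pointed-to scalar escapes and becomes its own heap object.
	sinkPointer = pointerSpec{Replicas: &replicas, Name: &name}
}

// allocsPerFill reports the average number of heap allocations per call.
func allocsPerFill(fill func()) float64 {
	return testing.AllocsPerRun(1000, fill)
}

func main() {
	fmt.Println("value fields:  ", allocsPerFill(fillValue))
	fmt.Println("pointer fields:", allocsPerFill(fillPointer))
}
```

This only measures populating a struct; real decode paths have other costs, so it illustrates the direction of the effect rather than its size.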

So if via proto3 we can have idl that generates go structs that use value primitives (our current structs) we can bypass that problem. The more specialized use cases we have where we want to maintain compatibility will be their own problems, but are more scoped.

--

You received this message because you are subscribed to the Google Groups "K8s API Machinery SIG" group.

To unsubscribe from this group and stop receiving emails from it, send an email to kubernetes-sig-api-m...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/kubernetes-sig-api-machinery/e485a8ad-327f-4065-bbed-c97f3b686512%40googlegroups.com.

Joe Tsai

May 20, 2020, 9:15:27 PM

to K8s API Machinery SIG

An upcoming feature to proto3 is the ability to explicitly specify presence.

Today in proto3, if you were to specify "int32 field_foo = 1;" it would be generated as a Go struct field of type int32. For the rare cases where you need to preserve presence, you would be able to explicitly specify the "optional" keyword such that "optional int32 field_foo = 1;" would be generated as a Go struct field of type *int32 (just like proto2).
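The difference shows up in the shape of the Go struct. The sketch below hand-writes two structs that imitate the two cases described above; `FooImplicit`, `FooExplicit`, and `describe` are illustrative names and this is not actual generator output.

```go
package main

import "fmt"

// FooImplicit imitates `int32 field_foo = 1;` in proto3: a plain value,
// so an unset field and an explicit 0 look identical.
type FooImplicit struct {
	FieldFoo int32
}

// FooExplicit imitates `optional int32 field_foo = 1;`: a pointer,
// so nil means "unset" and a pointer to 0 means "set to zero".
type FooExplicit struct {
	FieldFoo *int32
}

// describe reports whether the field is present and, if so, its value.
func describe(m FooExplicit) string {
	if m.FieldFoo == nil {
		return "unset"
	}
	return fmt.Sprintf("set to %d", *m.FieldFoo)
}

func main() {
	zero := int32(0)
	fmt.Println(describe(FooExplicit{}))                // unset
	fmt.Println(describe(FooExplicit{FieldFoo: &zero})) // set to 0

	// With the value field there is nothing to distinguish.
	fmt.Println(FooImplicit{} == FooImplicit{FieldFoo: 0}) // true
}
```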

> This was before proto3 support was finalized

Proto3 support came in some time ago (4 years ago). Have there been any substantial performance comparisons between gogo/protobuf and golang/protobuf since then? Since 2016, golang/protobuf has gained a number of performance optimizations.

JT

Clayton Coleman

May 20, 2020, 9:30:58 PM

to Joe Tsai, K8s API Machinery SIG

On May 20, 2020, at 9:15 PM, 'Joe Tsai' via K8s API Machinery SIG <kubernetes-sig...@googlegroups.com> wrote:

> An upcoming feature to proto3 is the ability to explicitly specify presence.

> Today in proto3, if you were to specify "int32 field_foo = 1;" it would be generated as a Go struct field of type int32. For the rare cases where you need to preserve presence, you would be able to explicitly specify the "optional" keyword such that "optional int32 field_foo = 1;" would be generated as a Go struct field of type *int32 (just like proto2).

Good. Then we should at least be able to look and see how different the structs are and the special cases. We reserved the right to change the proto output, but of course we’d have to deal with implications of that as a new serialization api version (proto2 to 3).

> > This was before proto3 support was finalized

> Proto3 support came in some time ago (4 years ago). Have there been any substantial performance comparisons between gogo/protobuf and golang/protobuf since then? Since 2016, golang/protobuf has gained a number of performance optimizations.

No - all optimization work in the last four years has been in gogo.

Joe Tsai

May 20, 2020, 9:45:06 PM

to Clayton Coleman, K8s API Machinery SIG

> course we’d have to deal with implications of that as a new serialization api version (proto2 to 3).

Proto2 and proto3 have the same underlying wire format, so I'm not sure how this would matter. The distinction between proto2 and proto3 primarily applies to API-level semantics and has almost no impact on the wire format.
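To make the wire-format point concrete, here is a sketch that hand-encodes a single set `int32` field using only the Go standard library. It follows the documented protobuf encoding (a tag of `field_number << 3 | wire_type`, followed by a varint); `appendUvarint` and `encodeInt32Field` are hypothetical helpers, not part of any protobuf library. For a field like this, the bytes carry no record of whether the schema was declared as proto2 or proto3.

```go
package main

import (
	"encoding/binary"
	"fmt"
)

// wireVarint is the wire type used for int32, int64, bool, and enum fields.
const wireVarint = 0

// appendUvarint appends v to buf in base-128 varint form.
func appendUvarint(buf []byte, v uint64) []byte {
	var tmp [binary.MaxVarintLen64]byte
	n := binary.PutUvarint(tmp[:], v)
	return append(buf, tmp[:n]...)
}

// encodeInt32Field encodes one int32 field as tag + varint value.
func encodeInt32Field(fieldNumber int, value int32) []byte {
	buf := appendUvarint(nil, uint64(fieldNumber)<<3|wireVarint)
	// int32 values are sign-extended to 64 bits before varint encoding.
	return appendUvarint(buf, uint64(int64(value)))
}

func main() {
	// field_foo = 1 with value 150.
	fmt.Printf("% x\n", encodeInt32Field(1, 150)) // 08 96 01
}
```

The schema syntax does affect when a field is written at all (for example, whether a zero value is emitted), which is part of the API-level semantics rather than the byte layout shown here.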

> No - all optimization work in the last four years has been in gogo.

Is that a "No" to my question regarding whether any substantial benchmarking has been performed? or a "No" regarding my statement that golang/protobuf has been optimized?

I'm not discounting anything gogo/protobuf may have done on their end to optimize, but there have been a number of optimization efforts to golang/protobuf. If a substantial comparison hasn't been done in recent times, then now seems like a good time to get those numbers.

JT

Clayton Coleman

May 20, 2020, 9:55:46 PM

to Joe Tsai, K8s API Machinery SIG

> > course we’d have to deal with implications of that as a new serialization api version (proto2 to 3).

> Proto2 and proto3 have the same underlying wire format, so I'm not sure how this would matter. The distinction between proto2 and proto3 primarily applies to API-level semantics and have almost no impact on the wire format.

In gogo we can control the wire structure more directly, so the issue would be the places where we have to change the structure to match the proto3 representation, because getting the structure we want works differently than in proto2.

> > No - all optimization work in the last four years has been in gogo.

> Is that a "No" to my question regarding whether any substantial benchmarking has been performed? or a "No" regarding my statement that golang/protobuf has been optimized?

No, all of our effort over the last four years has been directed at gogo.

> I'm not discounting anything gogo/protobuf may have done on their end to optimize, but there have been a number of optimization efforts to golang/protobuf. If a substantial comparison hasn't been done in recent times, then now seems like a good time to get those numbers.

The switching cost is possibly so high that even a big perf improvement wouldn’t be worth it. But yes when we test out the proto3 route we’ll want to check the benchmark tests don’t regress significantly.

Joe Tsai

May 20, 2020, 10:10:30 PM

to Clayton Coleman, K8s API Machinery SIG

> The switching cost is possibly so high that even a big perf improvement wouldn’t be worth it.

Performance is certainly a consideration, but this thread was instigated by the fact that gogo/protobuf has visibly gone unmaintained for at least half a year, and its original developers have now formally announced that they are no longer maintaining it and need someone to step up to own it.

Since Kubernetes heavily relies on gogo/protobuf, it seems that the project is forced to make a decision on what to do. Possible options are:

- Kubernetes does nothing and hopes that gogo/protobuf finds a good maintainer. A glance through the recent history of gogo/protobuf shows that even in the past year, most changes have been submitted with little observable code review. Is this level of maintenance acceptable for a project that is a critical piece of cloud infrastructure?

- Kubernetes' maintainers volunteer to maintain gogo/protobuf, in which case they can provide their own level of support and maintenance.

- Kubernetes switches to golang/protobuf which has a high guarantee of long-term maintenance.

- Kubernetes avoids both gogo/protobuf and golang/protobuf and maintains its own serialization logic.

I don't feel strongly about what Kubernetes plans to do, but if the 3rd option is chosen, I'm willing to extend support.

JT

Clayton Coleman

May 20, 2020, 10:17:04 PM

to Joe Tsai, K8s API Machinery SIG

> > The switching cost is possibly so high that even a big perf improvement wouldn’t be worth it.

> Performance is certainly a consideration, but this thread was instigated by the fact that gogo/protobuf has visibly gone unmaintained for at least half a year, and its original developers have now formally announced that they are no longer maintaining it and need someone to step up to own it.

> Since Kubernetes heavily relies on gogo/protobuf, it seems that the project is forced to make a decision on what to do. Possible options are:

> - Kubernetes does nothing and hopes that gogo/protobuf finds a good maintainer. A glance through the recent history of gogo/protobuf shows that even in the past year, most changes have been submitted with little observable code review. Is this level of maintenance acceptable for a project that is a critical piece of cloud infrastructure?

The 1b option is just fork it under a new name. We haven’t needed much over the last two years, and I suspect we don’t need much more. Not my preferred outcome, but the list of actual fixes we have needed has been small and the amount of effort to stay up to date has been significant.

> - Kubernetes' maintainers volunteer to maintain gogo/protobuf, in which case they can provide their own level of support and maintenance.

> - Kubernetes switches to golang/protobuf which has a high guarantee of long-term maintenance.

> - Kubernetes avoids both gogo/protobuf and golang/protobuf and maintains its own serialization logic.

> I don't feel strongly about what Kubernetes plans to do, but if the 3rd option is chosen, I'm willing to extend support.

That’s appreciated. We’ll have to discuss in api-machinery for sure.

Joe Tsai

May 20, 2020, 10:20:51 PM

to K8s API Machinery SIG

> The 1b option is just fork it under a new name.

My warning against this approach is that gogo/protobuf does not implement protobuf reflection, and the rest of the Go ecosystem will increasingly be moving to a world where users expect that protobuf messages provide protobuf reflection. At least inside Google, my observation is that there are already some users who try to do reflection-based operations on Kubernetes' types (to widely varying degrees of success).

JT

johan.br...@gmail.com

May 21, 2020, 4:19:31 AM

to K8s API Machinery SIG

Re: performance; https://github.com/alecthomas/go_serialization_benchmarks was last updated in August 2019 with Go 1.12.6. Obviously more kubernetes specific benchmarks would be great, but this is still putting GoGo proto ahead quite significantly:

johan.br...@gmail.com

May 21, 2020, 4:21:12 AM

to K8s API Machinery SIG

Image disappeared from my last post:

Daniel Smith

May 21, 2020, 11:30:56 AM

to johan.br...@gmail.com, Antoine Pelisse, Jenny Buckley, K8s API Machinery SIG

I vaguely recall +Antoine Pelisse and/or +Jenny Buckley investigating this for server side apply late last year and finding that gogo proto was still better for k8s, but I could be misremembering.

> users expect that protobuf messages provide protobuf reflection

How is this possible, if you do not have the proto descriptor?

We are extremely atypical proto users. Kubernetes users (programmatically) interact with our static types, not proto-generated wrappers. So, this is not meaningful for us in general. Proto is a means to an end for us, not a feature. Proto is actually rather awkward for us as a wire format because it's not self-describing. When humans interact with our data, they use yaml or json, not textpb or anything like that.

(There's a chance we could find a very different use for this since we don't yet have a binary type for custom resources.)

s...@kubernetes.io

May 29, 2020, 2:18:53 PM

to K8s API Machinery SIG

Daniel,

is this what you were talking about?

-- Dims

Daniel Smith

May 29, 2020, 2:23:31 PM

to s...@kubernetes.io, K8s API Machinery SIG

Yeah, it was part of the same effort, I'm not sure if that exact issue has the details I was thinking of though.

Jordan Liggitt

Nov 11, 2022, 4:57:59 PM

to K8s API Machinery SIG

Well, the other shoe (finally, slowly) dropped, and gogo/protobuf is officially deprecated.

I started a document to gather context, enumerate the specific requirements that drove our use of gogo/protobuf and would be needed from a replacement, and enumerate possible solutions. I seeded it with a lot of the points raised in this thread, and added it to next week's agenda. Please take a look and add anything I missed or misstated.

While I don't think this is urgent, exactly (we haven't relied on a high velocity of changes from gogo/protobuf), even the previous state of gogo using underlying protobuf libraries in unsupported ways was fragile. This seems like a good prompt to figure out an approach we can rely on longer-term, before it becomes urgent.

Austin Cawley-Edwards

Dec 1, 2022, 1:48:55 PM

to K8s API Machinery SIG

I brought this up with the Prometheus community a while back — have yet to resolve it, but found some good options and other migration examples. Perhaps there's relevant content for this group as well: https://groups.google.com/g/prometheus-developers/c/uFWRyqZaQis/m/Bi28HO4pAgAJ

Best,

Austin
