[RFC] Protecting users of kubectl delete

Eddie Zaneski

Hi Kuberfriendos,

We wanted to start a discussion about mitigating some of the potential footguns in kubectl.

Over the years we've heard stories from users who accidentally deleted resources in their clusters. This trend seems to be rising lately as newer folks venture into the Kubernetes/DevOps/Infra world.

First some background.

When a namespace is deleted it also deletes all of the resources under it. The deletion runs without further confirmation, and can be devastating if accidentally run against the wrong namespace (e.g. thanks to hasty tab completion use).

```
kubectl delete namespace prod-backup
```

When all namespaces are deleted essentially all resources are deleted. This deletion is trivial to do with the `--all` flag, and it also runs without further confirmation. It can effectively wipe out a whole cluster.

```
kubectl delete namespace --all
```

The difference between `--all` and `--all-namespaces` can be confusing.
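To make the distinction concrete, here is a toy model in Python (not kubectl's implementation; the `Obj` type, `select_for_delete`, and the sample data are all hypothetical). It assumes `--all` means "every instance of this resource type in scope" and `--all-namespaces` widens the scope from the current namespace to every namespace.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Obj:
    kind: str
    name: str
    namespace: Optional[str]  # None marks a cluster-scoped kind such as Namespace


def select_for_delete(objects: List[Obj], kind: str, current_ns: str,
                      all_instances: bool = False,
                      all_namespaces: bool = False) -> List[Obj]:
    """Toy model: which objects would a bulk delete of `kind` match?"""
    if not all_instances:
        # This sketch only models bulk deletion; names and selectors are out of scope.
        raise ValueError("no name, selector, or --all given")
    matched = []
    for obj in objects:
        if obj.kind != kind:
            continue
        cluster_scoped = obj.namespace is None
        # Cluster-scoped objects ignore namespace scoping entirely.
        if cluster_scoped or all_namespaces or obj.namespace == current_ns:
            matched.append(obj)
    return matched


# Hypothetical cluster contents used only for illustration.
CLUSTER = [
    Obj("Namespace", "dev", None),
    Obj("Namespace", "prod", None),
    Obj("Pod", "web", "dev"),
    Obj("Pod", "web", "prod"),
]
```

In this model, `--all` on Pods stays inside the current namespace, adding `--all-namespaces` reaches every Pod, and `--all` on Namespaces matches every namespace regardless of the current one.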

There are certainly things cluster operators should be doing to help prevent this user error (like locking down permissions) but we'd like to explore what we can do to help end users as maintainers.

There are a few changes we'd like to propose to start discussion. We plan to introduce this as a KEP but wanted to gather early thoughts.

Change 1: Require confirmation when deleting with --all and --all-namespaces

Confirmation when deleting with `--all` and `--all-namespaces` is a long-requested feature, but we've historically determined this to be a breaking change and declined to implement it. Existing scripts would require modification or break. While it is indeed breaking, we believe this change is necessary to protect users.

We propose moving towards requiring confirmation for deleting resources with `--all` and `--all-namespaces` over 3 releases (1 year). This gives us ample time to warn users and communicate the change through blogs and release notes.

Alpha

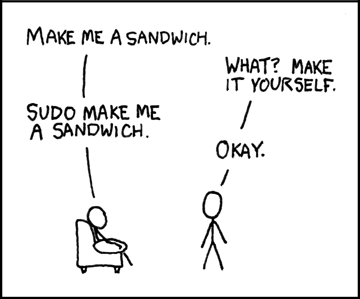

Introduce a flag like `--ask-for-confirmation | -i` that requires confirmation when deleting ANY resource. For example the `rm` command to delete files on a machine has this built in with `-i`. This provides a temporary safety mechanism for users to start using now.

Add a flag to enforce the current behavior and skip confirmation. `--force` is already used for removing stuck resources (see change 3 below) so we may want to use `--auto-approve` (inspired by Terraform). Usage of `--ask-for-confirmation` will always take precedence and ignore `--auto-approve`. We can see this behavior with `rm -rfi`.

-i Request confirmation before attempting to remove each file, regardless of the file's permissions, or whether or not the standard input device is a terminal. The -i option overrides any previous -f options.
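The precedence rule above could be sketched roughly as follows. This is an illustration in Python, not kubectl code; `needs_confirmation` and its parameters are hypothetical names, and `confirm_bulk_by_default` stands in for the proposed GA behavior.

```python
def needs_confirmation(ask_for_confirmation: bool, auto_approve: bool,
                       bulk: bool, confirm_bulk_by_default: bool = False) -> bool:
    """Decide whether to prompt before deleting.

    ask_for_confirmation    models the proposed --ask-for-confirmation / -i flag
    auto_approve            models the proposed --auto-approve flag
    bulk                    True when --all or --all-namespaces was given
    confirm_bulk_by_default False models alpha, True models the proposed GA default
    """
    if ask_for_confirmation:
        # -i always wins, mirroring how `rm -rfi` still prompts.
        return True
    if auto_approve:
        return False
    return bulk and confirm_bulk_by_default
```

With both flags set the function still returns `True`, which is the "`--ask-for-confirmation` ignores `--auto-approve`" behavior described above.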

Begin warning to stderr that by version x.x.x deleting with `--all` and `--all-namespaces` will require interactive confirmation or the `--auto-approve` flag.

Introduce a 10 second sleep when deleting with `--all` or `--all-namespaces` before proceeding to give the user a chance to react to the warning and interrupt their command.
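A minimal sketch of the alpha-stage warning and delay, in Python for brevity. The function name, the message wording, and the injectable `sleep` and `stream` parameters are assumptions made so the behavior can be exercised without actually waiting.

```python
import sys
import time


def warn_then_wait(stream=None, sleep=time.sleep, delay_seconds=10) -> bool:
    """Print the deprecation warning, then pause so the user can press Ctrl-C.

    Returns True if the deletion should proceed, False if it was interrupted.
    """
    stream = sys.stderr if stream is None else stream
    print(
        "Warning: deleting with --all or --all-namespaces will require "
        "interactive confirmation or --auto-approve in a future release.",
        file=stream,
    )
    try:
        sleep(delay_seconds)
    except KeyboardInterrupt:
        # The user reacted to the warning in time.
        print("Interrupted; nothing was deleted.", file=stream)
        return False
    return True
```

The warning goes to stderr so that scripts parsing stdout keep working during the transition.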

Beta

Address user feedback from alpha.

GA

Deleting with `--all` or `--all-namespaces` now requires interactive confirmation as the default unless `--auto-approve` is passed.

Remove the 10-second deletion delay introduced in the alpha, and stop printing the deletion warning when interactive mode is disabled.

Change 2: Throw an error when --namespace provided to cluster-scoped resource deletion

Since namespaces are a cluster resource, using the `--namespace | -n` flag when deleting them should produce an error. This flag has no effect on cluster resources and confuses users. We believe this to be an implementation bug that should be fixed for cluster-scoped resources. Although this may break scripts that incorrectly include the flag on intentional mass deletion operations, the inconvenience to those users of removing the misused flag must be weighed against the material harm this implementation mistake is currently causing to other users in production. This will follow a similar rollout to the one above.
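The check proposed in Change 2 might look something like this sketch (Python, hypothetical names; the set of kinds is a small illustrative subset, and a real client would ask the API server which resources are namespaced rather than hard-code a list).

```python
# Illustrative subset of cluster-scoped kinds; not an exhaustive list.
CLUSTER_SCOPED_KINDS = {"namespace", "node", "persistentvolume", "clusterrole"}


class UsageError(Exception):
    """Raised when flags are combined in a way that cannot have any effect."""


def validate_namespace_flag(kind: str, namespace_flag=None) -> None:
    """Reject --namespace / -n when the target kind is cluster-scoped."""
    if namespace_flag is not None and kind.lower() in CLUSTER_SCOPED_KINDS:
        raise UsageError(
            f"--namespace={namespace_flag} has no effect on cluster-scoped "
            f"resource {kind!r}; remove the flag"
        )
```

Failing early here means a command like deleting namespaces with `-n` set never reaches the API server under the mistaken impression that it was scoped.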

Change 3: Rename related flags that commonly cause confusion

The `--all` flag should be renamed to `--all-instances`. This makes it entirely clear which "all" it refers to. This would follow a 3-release rollout as well, starting with the new flag and warning about deprecation.

The `--force` flag is also a frequent source of confusion, and users do not understand what exactly is being forced. Alongside the `--all` change (in the same releases), we should consider renaming `--force` to something like `--force-reference-removal`.
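As a rough illustration of "start with the new flag and warn about deprecation", here is a sketch using Python's `argparse`. It is not how kubectl (which is written in Go) parses flags; the `DeprecatedAlias` action and `build_parser` are hypothetical helpers.

```python
import argparse
import sys


class DeprecatedAlias(argparse.Action):
    """Accept an old flag name, warn on stderr, and set the new destination."""

    def __init__(self, option_strings, dest, replacement, **kwargs):
        self.replacement = replacement
        # nargs=0 makes this behave like a boolean switch.
        super().__init__(option_strings, dest, nargs=0, **kwargs)

    def __call__(self, parser, namespace, values, option_string=None):
        print(f"Warning: {option_string} is deprecated; use {self.replacement}",
              file=sys.stderr)
        setattr(namespace, self.dest, True)


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(prog="delete-sketch", allow_abbrev=False)
    parser.add_argument("--all-instances", action="store_true", dest="all_instances")
    parser.add_argument("--all", action=DeprecatedAlias, dest="all_instances",
                        default=False, replacement="--all-instances")
    parser.add_argument("--force-reference-removal", action="store_true",
                        dest="force_reference_removal")
    parser.add_argument("--force", action=DeprecatedAlias,
                        dest="force_reference_removal", default=False,
                        replacement="--force-reference-removal")
    return parser
```

Both spellings set the same destination, so existing scripts keep working during the deprecation window while their output starts carrying the warning.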

These are breaking changes that shouldn't be taken lightly. Scripts, docs, and applications will all need to be modified. Putting on our empathy hats we believe that the benefits and protections to users are worth the hassle. We will do all we can to inform users of these impending changes and follow our standard guidelines for deprecating a flag.

Please see the following for examples of users requesting or running into this. This is a sample from a 5 minute search.

From GitHub:

--all should ask for confirmation · Issue #921 · kubernetes/kubectl

Kubectl can wipe a cluster with a single command · Issue #696 · kubernetes/kubectl

Add confirmation for bulk delete operations in kubectl · Issue #4457 · kubernetes/kubernetes

From StackOverflow:

service "kubernetes" deleted - accidentally deleted kubernetes service

Any way to know how a secret got deleted from kubernetes namespace

Eddie Zaneski - on behalf of SIG CLI

Tim Hockin


Brian Topping

--

You received this message because you are subscribed to the Google Groups "Kubernetes developer/contributor discussion" group.

To unsubscribe from this group and stop receiving emails from it, send an email to kubernetes-de...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/kubernetes-dev/CAN9Ncmx-a6qLr_%3D74Mv%2B%2Bp5rJJkPA%3Dk8vtFNTKs5LY1xB4x_Xw%40mail.gmail.com.

Tim Hockin


Jordan Liggitt

On Thu, May 27, 2021 at 12:35 PM Eddie Zaneski <eddi...@gmail.com> wrote:

> Change 1: Require confirmation when deleting with --all and --all-namespaces

> Confirmation when deleting with `--all` and `--all-namespaces` is a long requested feature but we've historically determined this to be a breaking change and declined to implement. Existing scripts would require modification or break. While it is indeed breaking, we believe this change is necessary to protect users.

> We propose moving towards requiring confirmation for deleting resources with `--all` and `--all-namespaces` over 3 releases (1 year). This gives us ample time to warn users and communicate the change through blogs and release notes.

Can we start with a request for confirmation when the command is run interactively and a printed warning (and maybe the sleep)?

> Change 2: Throw an error when --namespace provided to cluster-scoped resource deletion

> Since namespaces are a cluster resource using the `--namespace | -n` flag when deleting them should error. This flag has no effect on cluster resources and confuses users. We believe this to be an implementation bug that should be fixed for cluster scoped resources. Although it is true that this may break scripts that are incorrectly including the flag on intentional mass deletion operations, the inconvenience to those users of removing the misused flag must be weighed against the material harm this implementation mistake is currently causing to other users in production. This will follow a similar rollout to above.

The "material harm" here feels very low and I am not convinced it rises to the level of breaking users.

> Change 3: Rename related flags that commonly cause confusion

> The `--all` flag should be renamed to `--all-instances`. This makes it entirely clear which "all" it refers to. This would follow a 3-release rollout as well, starting with the new flag and warning about deprecation.

I think 3 releases is too aggressive to break users. We know that it takes months or quarters for releases to propagate into providers' stable-channels. In the meantime, docs and examples all over the internet will be wrong.

If we're to undertake any such change I think it needs to be more gradual. Consider 6 to 9 releases instead. Start by adding new forms and warning on use of the old forms. Then add small sleeps to the deprecated forms. Then make the sleeps longer and the warnings louder. By the time it starts hurting people there will be ample information all over the internet about how to fix it. Even then, the old commands will still work (even if slowly) for a long time. And in fact, maybe we should leave it in that state permanently. Don't break users, just annoy them.

Clayton Coleman


Brendan Burns

We can add an annotation ("k8s.io/confirm-delete: true") to a Pod and if that annotation is present, prompt for confirmation of the delete. We might also consider "k8s.io/lock" which actively blocks the delete.
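A client-side reading of this idea could be sketched as below (Python; the function and the three outcome strings are hypothetical). The annotation keys are the ones suggested in the message above. Treating the mere presence of "k8s.io/lock" as a lock is an assumption, since no value was specified for it.

```python
CONFIRM_ANNOTATION = "k8s.io/confirm-delete"
LOCK_ANNOTATION = "k8s.io/lock"


def delete_decision(obj: dict) -> str:
    """Return "blocked", "confirm", or "proceed" for an object manifest."""
    annotations = (obj.get("metadata") or {}).get("annotations") or {}
    if LOCK_ANNOTATION in annotations:
        # Assumption: any value for the lock annotation blocks the delete.
        return "blocked"
    if annotations.get(CONFIRM_ANNOTATION) == "true":
        # Annotation values are strings, so compare against "true".
        return "confirm"
    return "proceed"
```

Objects without either annotation behave exactly as they do today, which is what makes the approach opt-in and backward compatible.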


Jordan Liggitt


Daniel Smith


Benjamin Elder

https://github.com/kubernetes-sigs/kind/pull/1479/files

https://github.com/travis-ci/travis-ci/issues/8193

https://github.com/travis-ci/travis-ci/issues/1337

I wouldn't recommend the TTY detection route in particular.


Antonio Ojea


raghvenders raghvenders


abhishek....@gmail.com

As much as we care about giving utility to all users, it is also a basic need to provide some cover from accidental disasters. RBAC is a very wide topic, and I understand that Kubernetes administrators have a responsibility to restrict access.

I am totally in favor of having something like "--interactive|-i" or "--ask-for-confirmation" in place as an alpha feature with a warning at first, and then slowly graduating it to GA. That would give everyone a lot of time to change any automation scripts that would break.

Siyu Wang

Rory McCune

Douglas Schilling Landgraf

kubernetes-sig-cli <kubernete...@googlegroups.com> wrote:

>

> I like the "opt into deletion protection" approach. That got discussed a long time ago (e.g. https://github.com/kubernetes/kubernetes/pull/17740#issuecomment-217461024), but didn't get turned into a proposal/implementation

>

> There's a variety of ways that could be done... server-side and enforced, client-side as a hint, etc.

>

> On Thu, May 27, 2021 at 4:21 PM 'Brendan Burns' via Kubernetes developer/contributor discussion <kuberne...@googlegroups.com> wrote:

>>

>> I'd like to suggest an alternate approach that is more opt-in and is also backward compatible.

>>

>> We can add an annotation ("k8s.io/confirm-delete: true") to a Pod and if that annotation is present, prompt for confirmation of the delete. We might also consider "k8s.io/lock" which actively blocks the delete.

feature was enabled in the cluster by the user.


Tim Hockin


Zizon Qiu

I'd like to suggest an alternate approach that is more opt-in and is also backward compatible.

We can add an annotation ("k8s.io/confirm-delete: true") to a Pod and if that annotation is present, prompt for confirmation of the delete. We might also consider "k8s.io/lock" which actively blocks the delete.


Daniel Smith


Tim Hockin

On Fri, May 28, 2021 at 4:21 AM 'Brendan Burns' via Kubernetes developer/contributor discussion <kuberne...@googlegroups.com> wrote:

> I'd like to suggest an alternate approach that is more opt-in and is also backward compatible.

> We can add an annotation ("k8s.io/confirm-delete: true") to a Pod and if that annotation is present, prompt for confirmation of the delete. We might also consider "k8s.io/lock" which actively blocks the delete.

Or abuse the existing finalizer mechanism.


Tim Hockin

I'm thinking of finalizers as a kind of reference counter, like smart pointers in C++. Resources are deallocated when the counter drops to zero (no more finalizers) and are kept alive while the counter is > 0 (with any arbitrary finalizer).

Tabitha Sable

WDYT about taking this idea to our friends at sig-security-docs?


Abhishek Tamrakar


Zizon Qiu

raghvenders raghvenders


raghvenders raghvenders

- Annotation and Delete Prohibitors

- Finalizers

- RBAC and Domain accounts/sudo-like


Josh Berkus

> If we're to undertake any such change I think it needs to be more gradual. Consider 6 to 9 releases instead. Start by adding new forms and warning on use of the old forms. Then add small sleeps to the deprecated forms. Then make the sleeps longer and the warnings louder. By the time it starts hurting people there will be ample information all over the internet about how to fix it. Even then, the old commands will still work (even if slowly) for a long time. And in fact, maybe we should leave it in that state permanently. Don't break users, just annoy them.

doesn't really make any difference ... users just ignore the change until it goes GA, regardless.

Also consider that releases are currently 4 months, so 6 to 9 releases means 2 to 3 years.

What I would rather see here is a switch that supports the old behavior in the kubectl config. Then deprecate that over 3 releases or so. So:

Alpha: feature gate

Beta: feature gate, add config switch (on if not set)

GA: on by default, config switch (off if not set)

GA +3: drop config switch -- or not?

... although, now that I think about it, is it *ever* necessary to drop the config switch? As a scriptwriter, I prefer things I can put into my .kube config to new switches.
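One possible reading of the staged rollout listed above, sketched in Python. Everything here is an interpretation: the function name and the `legacy_switch` parameter are hypothetical, and it assumes the config switch means "keep the old no-confirmation behavior", defaulting to on in beta and off at GA.

```python
from typing import Optional


def confirmation_required(stage: str, feature_gate: bool = False,
                          legacy_switch: Optional[bool] = None) -> bool:
    """Resolve whether bulk deletes prompt, given rollout stage and user config.

    legacy_switch is the hypothetical kubectl config setting that preserves the
    old behavior; None means the user has not set it.
    """
    if stage == "alpha":
        # New behavior only when the feature gate is explicitly enabled.
        return feature_gate
    if stage == "beta":
        if not feature_gate:
            return False
        legacy = True if legacy_switch is None else legacy_switch  # on if not set
        return not legacy
    if stage == "ga":
        legacy = False if legacy_switch is None else legacy_switch  # off if not set
        return not legacy
    raise ValueError(f"unknown stage: {stage!r}")
```

Under this reading a script author can pin the old behavior once in their config and keep it across the beta-to-GA flip, which is the property the message above is asking for.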

Also, of vital importance here is: how many current popular CI/CD platforms rely on automated namespace deletion? If the answer is "several" then that's gonna slow down rollout.

--

-- Josh Berkus

Kubernetes Community Architect

OSPO, OCTO

Tim Hockin

<raghv...@gmail.com> wrote:

>

> Since client-side changes would potentially go about 6-9 releases as mentioned by Tim and potentially breaking changes, a server-side solution would be a reasonable and worthy option to consider and finalize.

To be clear - the distinction isn't really client vs. server. It's about breaking changes without users EXPLICITLY opting in. You REALLY can't make something that used to work suddenly stop working, whether that is client or server implemented.

On the contrary, client-side changes like "ask for confirmation" and "print stuff in color" are easier because they can distinguish between interactive and non-interactive execution.

Adding a confirmation to interactive commands should not require any particular delays in rollout.
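One way a client could tell interactive from non-interactive runs is a TTY check, sketched here in Python with hypothetical helper names. Note that TTY detection was specifically cautioned against earlier in this thread, so treat it as one option rather than a recommendation.

```python
import sys


def is_interactive(stdin=None, stdout=None) -> bool:
    """Treat the run as interactive only when both stdin and stdout are terminals."""
    stdin = sys.stdin if stdin is None else stdin
    stdout = sys.stdout if stdout is None else stdout
    return stdin.isatty() and stdout.isatty()


def confirm(prompt: str, interactive: bool, ask=input) -> bool:
    """Prompt only in interactive runs; non-interactive runs proceed as before."""
    if not interactive:
        # Scripts and CI keep their existing behavior.
        return True
    answer = ask(f"{prompt} [y/N]: ")
    return answer.strip().lower() in ("y", "yes")
```

Typical use would be `confirm("Delete ALL namespaces?", is_interactive())`; the `ask` parameter exists only so the prompt can be replaced in tests.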

Eddie Zaneski

On Tue, Jun 1, 2021 at 8:20 AM raghvenders raghvenders <raghvenders@gmail.com> wrote:

> Since client-side changes would potentially go about 6-9 releases as mentioned by Tim and potentially breaking changes, a server-side solution would be a reasonable and worthy option to consider and finalize.

To be clear - the distinction isn't really client vs. server. It's about breaking changes without users EXPLICITLY opting in. You REALLY can't make something that used to work suddenly stop working, whether that is client or server implemented.

On the contrary, client-side changes like "ask for confirmation" and "print stuff in color" are easier because they can distinguish between interactive and non-interactive execution.

Adding a confirmation to interactive commands should not require any particular delays in rollout.

Quickly summarizing the server-side options discussed so far:

- Annotation and Delete Prohibitors

- Finalizers

- RBAC and Domain accounts/sudo-like

Please add anything I missed, or correct me if any of these is not an option.

In parallel, the proposed kubectl client-based changes (Change 1 (interactive), Change 2, and Change 3) would continue on the targeted release timelines.

I would be curious to see how this would proceed: choosing one of the three options or combining them, then a WBS and stakeholder approvals, component changes, and release rollouts?

Regards,

Raghvender

On Sat, May 29, 2021 at 12:13 AM Abhishek Tamrakar <abhishek.tamrakar08@gmail.com> wrote:

The current deletion strategy is easy but very risky: without any gates, a deletion could put the whole cluster at risk, and this is where it needs some cover. I would still prefer the client-side approach from the original proposal, because the decision to delete a certain object or objects should remain in the control of the end user, while giving them the safest way to operate the cluster.

On Fri, May 28, 2021, 22:25 'Tim Hockin' via Kubernetes developer/contributor discussion <kubernetes-dev@googlegroups.com> wrote:

On Fri, May 28, 2021 at 9:21 AM Zizon Qiu <zzdtsv@gmail.com> wrote:

> I'm thinking of finalizers as some kind of reference counter, like smart pointers in C++ or something like that.

> Resources are deallocated when the counter turns down to zero (no more finalizer). And keeping alive whenever counter > 0 (with any arbitrary finalizer).

That's correct, but there's a fundamental difference between "alive" and "waiting to die". A delete operation moves an object irrevocably from "alive" to "waiting to die". That is a visible "state" (the deletionTimestamp is set) and there's no way to come back from it. Let's not abuse that to mean something else.
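The difference between "alive" and "waiting to die" can be shown with a small in-memory model (Python; `Resource` and `Store` are hypothetical and only mimic the behavior described above, they are not the API server's implementation).

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Resource:
    name: str
    finalizers: List[str] = field(default_factory=list)
    deletion_timestamp: Optional[str] = None  # set once, never cleared


class Store:
    def __init__(self) -> None:
        self.objects: Dict[str, Resource] = {}

    def add(self, res: Resource) -> None:
        self.objects[res.name] = res

    def delete(self, name: str, now: str = "2021-05-28T00:00:00Z") -> None:
        res = self.objects[name]
        if res.deletion_timestamp is None:
            # Irrevocable: the object is now "waiting to die".
            res.deletion_timestamp = now
        self._collect(res)

    def remove_finalizer(self, name: str, finalizer: str) -> None:
        res = self.objects[name]
        res.finalizers.remove(finalizer)
        self._collect(res)

    def _collect(self, res: Resource) -> None:
        # Only objects that were deleted AND have no finalizers left go away.
        if res.deletion_timestamp is not None and not res.finalizers:
            del self.objects[res.name]
```

Unlike a reference count, dropping the last finalizer on a live object does nothing here, and a delete on an object with finalizers only delays removal: there is no operation in the model that clears `deletion_timestamp`.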

On Sat, May 29, 2021 at 12:14 AM Tim Hockin <thockin@google.com> wrote:

On Fri, May 28, 2021 at 7:58 AM Zizon Qiu <zzdtsv@gmail.com> wrote:

On Fri, May 28, 2021 at 4:21 AM 'Brendan Burns' via Kubernetes developer/contributor discussion <kubernetes-dev@googlegroups.com> wrote:

I'd like to suggest an alternate approach that is more opt-in and is also backward compatible.

We can add an annotation ("k8s.io/confirm-delete: true") to a Pod and if that annotation is present, prompt for confirmation of the delete. We might also consider "k8s.io/lock" which actively blocks the delete.

Or abuse the existing finalizer mechanism.

Finalizers are not "deletion inhibitors" just "deletion delayers". Once you delete, the finalizer might stop it from happening YET but it *is* going to happen. I'd rather see a notion of opt-in delete-inhibit. It is not clear to me what happens if I have a delete-inhibit on something inside a namespace and then try to delete the namespace - we don't have transactions, so we can't abort the whole thing - it would be stuck in a weird partially-deleted state and I expect that to be a never-ending series of bug reports.
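The partially-deleted state described above is easy to reproduce in a toy model (Python; `delete_namespace` and the object names are hypothetical). It assumes a namespace delete removes children one at a time with no transaction to roll back.

```python
from typing import Iterable, List, Set, Tuple


def delete_namespace(children: Iterable[str],
                     inhibited: Set[str]) -> Tuple[List[str], List[str]]:
    """Delete children one by one, skipping any with a delete-inhibit.

    Returns (deleted, stuck). A non-empty `stuck` list means the namespace is
    left partially deleted, because earlier deletions cannot be undone.
    """
    deleted: List[str] = []
    stuck: List[str] = []
    for name in children:
        if name in inhibited:
            stuck.append(name)
        else:
            deleted.append(name)
    return deleted, stuck
```

For example, `delete_namespace(["configmap/app", "statefulset/db", "deployment/web"], {"statefulset/db"})` leaves the database in place while everything it depends on is already gone.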

We could also support those annotations at a namespace level if we wanted to.

This is similar to Management Locks that we introduced in Azure (https://docs.microsoft.com/en-us/rest/api/resources/managementlocks) for similar reasons to prevent accidental deletes and force an explicit action (remove the lock) for a delete to proceed.

--brendan


Brian Topping


fillz...@gmail.com

Fury kerry

On Sat, May 29, 2021 at 12:13 AM Abhishek Tamrakar <abhishek....@gmail.com> wrote:

The current deletion strategy provides is easy but very risky without any gates, the deletion could risk whole cluster, this is where it needs some cover. The reason I would still prefer the client-side approach as mentioned in the original proposal is because the decision of deletion of a certain object or objects should remain in control of the end user at the same time providing the safest for them to operate the cluster.

On Fri, May 28, 2021, 22:25 'Tim Hockin' via Kubernetes developer/contributor discussion <kuberne...@googlegroups.com> wrote:

On Fri, May 28, 2021 at 9:21 AM Zizon Qiu <zzd...@gmail.com> wrote:

I`m thinking of finalizers as some kind of reference counter, like smart pointers in C++ or something like that.

Resources are deallocated when the counter turns down to zero(no more finalizer). And keeping alive whenever counter > 0(with any arbitrary finalizer).

That's correct, but there's a fundamental difference between "alive" and "waiting to die". A delete operation moves an object, irrevocably from "alive" to "waiting to die". That is a visible "state" (the deletionTimestamp is set) and there's no way to come back from it. Let's not abuse that to mean something else.

On Sat, May 29, 2021 at 12:14 AM Tim Hockin <tho...@google.com> wrote:

On Fri, May 28, 2021 at 7:58 AM Zizon Qiu <zzd...@gmail.com> wrote:

On Fri, May 28, 2021 at 4:21 AM 'Brendan Burns' via Kubernetes developer/contributor discussion <kuberne...@googlegroups.com> wrote:

I'd like to suggest an alternate approach that is more opt-in and is also backward compatible.

We can add an annotation ("k8s.io/confirm-delete: true") to a Pod and if that annotation is present, prompt for confirmation of the delete. We might also consider "k8s.io/lock" which actively blocks the delete.

Or abuse the existing finalizer mechanism.

Finalizers are not "deletion inhibitors" just "deletion delayers". Once you delete, the finalizer might stop it from happening YET but it *is* going to happen. I'd rather see a notion of opt-in delete-inhibit. It is not clear to me what happens if I have a delete-inhibit on something inside a namespace and then try to delete the namespace - we don't have transactions, so we can't abort the whole thing - it would be stuck in a weird partially-deleted state and I expect that to be a never-ending series of bug reports.

We could also support those annotations at a namespace level if we wanted to.

This is similar to Management Locks that we introduced in Azure (https://docs.microsoft.com/en-us/rest/api/resources/managementlocks) for similar reasons to prevent accidental deletes and force an explicit action (remove the lock) for a delete to proceed.

--brendan
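A rough sketch of how a client might act on the two annotations suggested above. The annotation keys come from the proposal; everything else is an assumption made for illustration: the `"true"` value for `k8s.io/lock`, the `DeleteAction` enum, the `delete_action` helper, and the rule that a lock wins over a confirmation request.

```python
from enum import Enum
from typing import Mapping, Optional

CONFIRM_ANNOTATION = "k8s.io/confirm-delete"  # key taken from the proposal
LOCK_ANNOTATION = "k8s.io/lock"               # key taken from the proposal


class DeleteAction(Enum):
    PROCEED = "proceed"
    CONFIRM = "confirm"
    BLOCK = "block"


def _is_true(annotations: Mapping[str, str], key: str) -> bool:
    # Assumption: the annotation counts as set only when its value is "true".
    return annotations.get(key, "").lower() == "true"


def delete_action(
    obj_annotations: Mapping[str, str],
    ns_annotations: Optional[Mapping[str, str]] = None,
) -> DeleteAction:
    """Decide what to do before deleting an object.

    Namespace-level annotations are honoured too, per the suggestion that
    they could be supported at that level.
    """
    sources = [obj_annotations, ns_annotations or {}]
    if any(_is_true(a, LOCK_ANNOTATION) for a in sources):
        return DeleteAction.BLOCK
    if any(_is_true(a, CONFIRM_ANNOTATION) for a in sources):
        return DeleteAction.CONFIRM
    return DeleteAction.PROCEED
```

A client-side check like this is opt-in and backward compatible: objects without either annotation keep today's behavior.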

________________________________

From: kuberne...@googlegroups.com <kuberne...@googlegroups.com> on behalf of Clayton Coleman <ccol...@redhat.com>

Sent: Thursday, May 27, 2021 1:06 PM

To: Eddie Zaneski <eddi...@gmail.com>

Cc: kubernetes-dev <kuberne...@googlegroups.com>; kubernetes-sig-cli <kubernete...@googlegroups.com>

Subject: [EXTERNAL] Re: [RFC] Protecting users of kubectl delete

--

You received this message because you are subscribed to the Google Groups "Kubernetes developer/contributor discussion" group. To unsubscribe from this group and stop receiving emails from it, send an email to kubernetes-de...@googlegroups.com. To view this discussion on the web visit https://groups.google.com/d/msgid/kubernetes-dev/CAN9Ncmx-a6qLr_%3D74Mv%2B%2Bp5rJJkPA%3Dk8vtFNTKs5LY1xB4x_Xw%40mail.gmail.com.--


Zhen Zhang

Zhejiang University

Yuquan Campus

MSN:Fury_...@hotmail.com

Eddie Zaneski

- Add a new `--interactive | -i` flag to kubectl delete that will require confirmation before deleting resources. This flag will be false by default.

- `kubectl delete [--all | --all-namespaces]` will warn about the destructive action that will be performed and artificially delay for x seconds allowing users a chance to abort.
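The two behaviors above could be sketched roughly as follows. This is not kubectl code; `guarded_delete`, `confirm`, and all parameter names are hypothetical, and the delay length (the "x seconds" above) is left to the caller because the proposal does not fix a value.

```python
import time
from typing import Callable, Sequence


def confirm(prompt: str, read_line: Callable[[str], str] = input) -> bool:
    """Ask a yes/no question; anything other than y/yes counts as "no"."""
    answer = read_line(f"{prompt} [y/N]: ")
    return answer.strip().lower() in ("y", "yes")


def guarded_delete(
    targets: Sequence[str],
    do_delete: Callable[[Sequence[str]], None],
    *,
    interactive: bool = False,   # stands in for --interactive / -i
    delete_all: bool = False,    # stands in for --all / --all-namespaces
    delay_seconds: float = 0.0,  # the "x seconds" from the proposal
    read_line: Callable[[str], str] = input,
    sleep: Callable[[float], None] = time.sleep,
    warn: Callable[[str], None] = print,
) -> bool:
    """Return True if the delete ran, False if the user declined."""
    if interactive and not confirm(f"Delete {len(targets)} resource(s)?", read_line):
        return False
    if delete_all and delay_seconds > 0:
        # Warn, then pause so the user has a chance to abort with Ctrl-C.
        warn(f"WARNING: deleting ALL matching resources in {delay_seconds:g}s; press Ctrl-C to abort")
        sleep(delay_seconds)
    do_delete(targets)
    return True
```

Because `interactive` defaults to false, existing scripts would only be affected by the delay, never by a prompt.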

Tim Hockin

executed interactively discarded?


Tim Hockin

Did we discard the idea of demanding interactive confirmation when a "dangerous" command is executed in an interactive session? If so, why? To me, that seems like the most approachable first vector and likely to get a good return on investment.


Eddie Zaneski

On Wednesday, June 2, 2021 at 6:08:55 PM UTC-6 Tim Hockin wrote:

> Rephrasing for clarity:
>
> Did we discard the idea of demanding interactive confirmation when a "dangerous" command is executed in an interactive session? If so, why? To me, that seems like the most approachable first vector and likely to get a good return on investment.

Wasn't discarded. I'll include in the KEP and we can dig in for more cases of platforms doing funny things with spoofing TTY's.

Tim Hockin


Jordan Liggitt

On Thu, Jun 3, 2021 at 4:32 PM Eddie Zaneski <eddi...@gmail.com> wrote:


> I've done a bit more digging into Ben's comments about TTY detection and think we may need to discard that route.

>

> TravisCI is one provider we know of spoofing a TTY to trick tools into outputting things like color and status bars. It looks like CircleCI may do this as well.

Well. That's unfortunate.

> kind hardcoded some vendor specific environment variables to get around this with Travis but I don't think we can/want to do that for all the vendors.

>

> If we can't reliably detect TTY's inside these pipelines we will indeed break scripts.

Yes, that's the conclusion I come to, also. Harumph.
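For illustration, this is roughly what the TTY check and the vendor-variable workaround discussed above look like. `looks_interactive` is a hypothetical helper, and the list of environment variables is an assumption: a short, incomplete sample, which is exactly the maintenance problem raised in the thread.

```python
import os
import sys
from typing import Mapping, Optional, TextIO

# Assumption: a hand-picked, incomplete sample of variables set by CI vendors.
CI_ENV_VARS = ("CI", "TRAVIS", "CIRCLECI")


def looks_interactive(
    stream: Optional[TextIO] = None,
    env: Optional[Mapping[str, str]] = None,
) -> bool:
    """Best-effort guess at whether a human is at the terminal."""
    stream = sys.stdin if stream is None else stream
    env = os.environ if env is None else env
    if not stream.isatty():
        return False
    # A pipeline that spoofs a TTY passes the check above, so the only
    # remaining signal is a vendor variable. Any non-empty value counts.
    return not any(env.get(name) for name in CI_ENV_VARS)
```

Any pipeline that spoofs a TTY and sets none of the listed variables would still be treated as interactive, so a prompt gated on this check could hang or break existing scripts.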

Tim Hockin

Jordan Liggitt

Abhishek Tamrakar


Brian Topping


Paco俊杰Junjie 徐Xu

- This starts rm (remove files or directories) in interactive and verbose mode to avoid deleting files by mistake.

- Note that the -f (force) option ignores the interactive option.

Bill WANG

raghvenders raghvenders


Eddie Zaneski

Ricardo Katz


Brendan Burns

Sent: Tuesday, June 22, 2021 10:06 AM

To: Eddie Zaneski <eddi...@gmail.com>

Cc: Kubernetes developer/contributor discussion <kuberne...@googlegroups.com>

Subject: Re: [EXTERNAL] Re: [RFC] Protecting users of kubectl delete

Tim Hockin

admin-directed safety config, perhaps a proper API, rather than flags,

makes more sense.

On Tue, Jun 22, 2021 at 10:21 AM 'Brendan Burns' via Kubernetes

developer/contributor discussion <kuberne...@googlegroups.com>

wrote:

>

Brendan Burns

Sent: Monday, June 28, 2021 9:28 AM

To: Brendan Burns <bbu...@microsoft.com>

Cc: Ricardo Katz <ricard...@gmail.com>; Eddie Zaneski <eddi...@gmail.com>; Kubernetes developer/contributor discussion <kuberne...@googlegroups.com>

> https://github.com/kubernetes/enhancements/blob/0f321138775017d9bd7a44604de59cf399aa67fd/keps/sig-cli/2775-kubectl-delete-interactivity-delay/README.md

>

> --

> Eddie

>


raghvenders raghvenders



Eddie Zaneski

Tim Hockin

On Tue, Jul 13, 2021 at 11:15 AM Eddie Zaneski <eddi...@gmail.com> wrote:

>

> I like the idea of adding an API server flag to opt clients into confirmation (or even some of the other breaking changes), say via a header.

>

> What are the thoughts on providers supporting that as an option for cluster creation?

>


Brendan Burns

Sent: Tuesday, July 13, 2021 2:39 PM

To: Eddie Zaneski <eddi...@gmail.com>

Cc: Kubernetes developer/contributor discussion <kuberne...@googlegroups.com>

Subject: Re: [EXTERNAL] Re: [RFC] Protecting users of kubectl delete


Tim Hockin

corner cases to be pinned down.

It is not clear to me what happens if I have a delete-inhibit on

something inside a namespace and then try to delete the namespace - we

be stuck in a weird partially-deleted state. We can't pre-check

delete-ability and then delete (TOC-TOU). We'd need a "delete

pending" indicator, so mutations would be blocked, or something like

that, which has yet more corner cases.

Eddie Zaneski

Tim Hockin

>

> > Are we again considering delete-inhibitors?

>

> I think delete inhibitors are a great idea and something we should implement but are out of scope of this KEP.

> > Is this something that needs to be a flag, or should it be an actual API?

>

> I am thinking flag but curious what you think an API for this could look like. Create a cluster level resource that enforces new behavior?

their scope is naturally very wide (or global). E.g. you could never encode an open-ended list of protected resources in a flag. Managed providers have to plumb flags through implementations, etc. Changing your mind about a flag means changing apiserver config, which is kind of scary.

On the other hand, we've historically shied away from hundreds of tiny resources, but more recently that seems less of a concern. So, for example, maybe (not a foregone conclusion) it makes sense to consider an API policy object which encodes things like this or even has more details like a selector, a resource type or apigroup, a RoleName, etc.
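Purely as a thought experiment, the fields mentioned above (selector, resource type, apigroup, RoleName) could be modeled like this. No such Kubernetes API exists; `DeletePolicy`, its field names, and the matching rules are all assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Mapping, Optional


@dataclass
class DeletePolicy:
    """Hypothetical cluster-scoped policy object; not a real Kubernetes API."""
    api_group: Optional[str] = None   # None matches any API group
    resource: Optional[str] = None    # e.g. "statefulsets"; None matches any
    role_name: Optional[str] = None   # None matches any role
    selector: Dict[str, str] = field(default_factory=dict)  # label equality
    require_confirmation: bool = True

    def matches(
        self,
        *,
        api_group: str,
        resource: str,
        role_name: str,
        labels: Mapping[str, str],
    ) -> bool:
        """Return True when this policy applies to the given delete request."""
        if self.api_group is not None and self.api_group != api_group:
            return False
        if self.resource is not None and self.resource != resource:
            return False
        if self.role_name is not None and self.role_name != role_name:
            return False
        # An empty selector matches everything; otherwise every pair must match.
        return all(labels.get(k) == v for k, v in self.selector.items())
```

Unlike a flag, a list of such objects can be open-ended and changed without touching apiserver configuration.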

Tim

Brendan Burns

Sent: Tuesday, July 13, 2021 3:05 PM

To: Brendan Burns <bbu...@microsoft.com>

Cc: Eddie Zaneski <eddi...@gmail.com>; Kubernetes developer/contributor discussion <kuberne...@googlegroups.com>

>


raghvenders raghvenders


Daniel Smith


raghvenders raghvenders

Eddie Zaneski

kfox11...@gmail.com

Tim Hockin

Interesting solution. But it may add to some confusion. Say I use a cluster that has the flag set, and 99% of the time I use that cluster. Then I go to a cluster that doesn't, and I accidentally delete something because I was careless and thought the system would protect me, because I didn't realize the cluster admin overrode some setting I didn't know existed?

Breaking changes in a new version of software are somewhat expected. That's why there are change logs / breaking change notes. Behavior changing per cluster is a bit less expected.

For other use cases this might be OK. This one feels like it potentially just moves the problem somewhere else? Still, some protection is better than no protection, so maybe it's still a good idea. Just thinking out loud.

Thanks,

Kevin

On Tuesday, August 31, 2021 at 4:57:55 PM UTC-7 eddi...@gmail.com wrote:

> Tim and I had a quick chat to expand on the idea of opting users into breaking changes through a cluster scoped resource and I wound up with this poc https://youtu.be/H5yd1dRl44w.
>
> The idea is to have some sort of configuration that can be set on a cluster by a cluster administrator. By adding this configuration an admin can add additional safeguards that don't reduce an end user's access but change their user experience of interacting with resources. An approach like this can be consumed by clients other than kubectl (see https://github.com/helm/helm/issues/8981) and other actions (e.g. apply).
>
> I think the focus of discussion right now should be, is configuring user experience and client behavior with cluster resources a mechanism we want to use as opposed to the technical implementations of that mechanism.
>
> Thoughts?


Maciej Szulik


Tim Hockin

On Wed, Sep 1, 2021 at 1:58 AM Eddie Zaneski <eddi...@gmail.com> wrote:

> Tim and I had a quick chat to expand on the idea of opting users into breaking changes through a cluster scoped resource and I wound up with this poc https://youtu.be/H5yd1dRl44w.

The proposed approach, although pretty cool, has an important downside. This mechanism has to be implemented for every client interacting with k8s.

You can implement (and Brendan pointed it out in https://groups.google.com/g/kubernetes-dev/c/y4Q20V3dyOk/m/yb5xfHDiBwAJ?utm_medium=email&utm_source=footer) through simple admission + labelling/annotation. The benefit of that approach is that:

1. it's centrally managed by the cluster admin who can easily enable, disable or configure it.

2. it works with every client, since you're still working with well known delete + label/annotate operations.
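As a minimal sketch of the admission + annotation idea, the function below takes a decoded `admission.k8s.io/v1` AdmissionReview request and denies a DELETE when the object carries a lock annotation. The `k8s.io/lock` key comes from Brendan's earlier suggestion; the `"true"` value, the `review_delete` name, and the denial message are assumptions. A real webhook would also need an HTTPS server and a ValidatingWebhookConfiguration, which are omitted here.

```python
from typing import Any, Dict

LOCK_ANNOTATION = "k8s.io/lock"  # key from the earlier suggestion in this thread


def review_delete(admission_review: Dict[str, Any]) -> Dict[str, Any]:
    """Build an AdmissionReview response for a decoded AdmissionReview request."""
    request = admission_review["request"]
    allowed = True
    message = ""
    if request.get("operation") == "DELETE":
        # For DELETE requests the existing object is carried in oldObject.
        old = request.get("oldObject") or {}
        annotations = (old.get("metadata") or {}).get("annotations") or {}
        # Assumption: the lock is active only when the value is "true".
        if annotations.get(LOCK_ANNOTATION) == "true":
            allowed = False
            message = f"delete blocked: remove the {LOCK_ANNOTATION} annotation first"
    response: Dict[str, Any] = {"uid": request["uid"], "allowed": allowed}
    if not allowed:
        response["status"] = {"message": message}
    return {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": response,
    }
```

Since the check runs on the server, kubectl, Helm, and any other client hit the same rule; unlocking is an ordinary annotate operation.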

Lastly, from my own experience, I can say that different administrators have different needs wrt deletion protection and having a central mechanism is not always desirable. The current extension points we have are pretty good protecting our clusters :-) But I agree that it's not always easy to put the pieces together.

> The idea is to have some sort of configuration that can be set on a cluster by a cluster administrator. By adding this configuration an admin can add additional safeguards that don't reduce an end user's access but change their user experience of interacting with resources. An approach like this can be consumed by clients other than kubectl (see https://github.com/helm/helm/issues/8981) and other actions (e.g. apply).
>
> I think the focus of discussion right now should be, is configuring user experience and client behavior with cluster resources a mechanism we want to use as opposed to the technical implementations of that mechanism.
>
> Thoughts?


Daniel Smith


Josh Berkus

> The proposed approach, although pretty cool, has an important downside.

> This mechanism has to be implemented for every client interacting with k8s.

> You can implement (and Brendan pointed it out in

> https://groups.google.com/g/kubernetes-dev/c/y4Q20V3dyOk/m/yb5xfHDiBwAJ?utm_medium=email&utm_source=footer

> admission + labelling/annotation. The benefit of that approach is that:

> 1. it's centrally managed by the cluster admin who can easily enable,

> disable or configure it.

> 2. it works with every client, since you're still working with well

> known delete + label/annotate

> operations.

I don't want to lose track of the 80% use-case here. That use-case is:

- Interactive users should have to confirm cascading deletes.

- CI/CD systems should NOT have to.

So far, all of the proposed solutions -- whether Eddie's or Brendan's -- require modifying every single CI/CD system that works with Kubernetes in order to re-enable regular operation. IMHO, that's a showstopper; it's simply not worth forcing an ecosystem-wide change in order to disable a footgun.

This is why I suggested upthread to make this a simple .kube/config option.
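A sketch of what a client-side opt-in might look like, working on an already-parsed kubeconfig (YAML loading is left out). The `preferences` section is a real kubeconfig field, but the `confirmDeletes` key and the `should_confirm` helper are hypothetical names invented for this example.

```python
from typing import Any, Mapping, Optional


def should_confirm(
    kubeconfig: Mapping[str, Any],
    flag_override: Optional[bool] = None,
) -> bool:
    """Decide whether this client should prompt before a cascading delete.

    `confirmDeletes` is a hypothetical preference key, not an existing one.
    An explicit command-line choice, if given, wins over the config file.
    """
    if flag_override is not None:
        return flag_override
    prefs = kubeconfig.get("preferences") or {}
    return bool(prefs.get("confirmDeletes", False))
```

A CI system whose kubeconfig never sets the key sees no change in behavior, while an interactive user opts in once in their own file.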

--

-- Josh Berkus

Kubernetes Community Architect

OSPO, OCTO

Brendan Burns

Sent: Tuesday, September 28, 2021 11:35 AM

To: Maciej Szulik <masz...@redhat.com>; Kubernetes developer/contributor discussion <kuberne...@googlegroups.com>

Subject: Re: [EXTERNAL] Re: [RFC] Protecting users of kubectl delete

> The proposed approach, although pretty cool, has an important downside.

> This mechanism has to be implemented for every client interacting with k8s.

> You can implement (and Brendan pointed it out in

> <https://groups.google.com/g/kubernetes-dev/c/y4Q20V3dyOk/m/yb5xfHDiBwAJ?utm_medium=email&utm_source=footer>)

> admission + labelling/annotation. The benefit of that approach is that:

> 1. it's centrally managed by the cluster admin who can easily enable,

> disable or configure it.

> 2. it works with every client, since you're still working with well

> known delete + label/annotate

> operations.

I don't want to lose track of the 80% use-case here. That use-case is:

- Interactive users should have to confirm cascading deletes.

- CI/CD systems should NOT have to.

So far, all of the proposed solutions -- whether Eddie's or Brendan's --

require modifying every single CI/CD system that works with Kubernetes in

order to re-enable regular operation. IMHO, that's a showstopper; it's

simply not worth forcing an ecosystem-wide change in order to disable a

footgun.

This is why I suggested upthread to make this a simple .kube/config option.

--

-- Josh Berkus

Kubernetes Community Architect

OSPO, OCTO


Daniel Smith


Josh Berkus

> Want to enable locks? Great, add this admission controller.

> Worried about the impact on CI/CD of locks? Don't add the admission

> controller.

interactive developers unless they can be sure that 100% of their

deployment toolchain also has code supporting it. Which means I think

you'd see very few users actually enabling the admission controller for

several years after introducing the feature.

I thought SIG-CLI wanted something folks would be able to use sooner

than that?

Brendan Burns

Sent: Tuesday, September 28, 2021 4:56 PM

To: Brendan Burns <bbu...@microsoft.com>; Maciej Szulik <masz...@redhat.com>; Kubernetes developer/contributor discussion <kuberne...@googlegroups.com>

Subject: Re: [EXTERNAL] Re: [RFC] Protecting users of kubectl delete

Nathan Fisher

1. statefulset - irreplaceable data if you aren't backing it up.

2a. deployment - user facing production workloads.

2b. daemonset - service meshes, observability, and similar fun.

4. cronjob.

2. deleting a namespace.

3. deleting an individual resource.

Is there a significant number of people reporting that they've deleted their prod workload with --all by accident? Feels like one of those mistakes that happens once... and you don't repeat it.

With namespaces I've seen companies prevent foot guns by using RBAC to limit users' access.

The real concern seems to be deletion of an individual resource with state.

I generally agree with Brendan Burns in terms of impact and/or fracturing of behaviour, as it would break a lot of other things in the ecosystem like operators, Helm, etc. until they're upgraded to work with such a controller.
