Image Stitching and Exposure Fusion with pv



kfj

Feb 28, 2021, 5:23:32 AM

to hugin and other free panoramic software

Dear group!

I introduced HDR blending with pv some time ago, and since then I have been tempted to tackle both exposure fusion and image stitching as well. Hugin usually delegates these tasks to enfuse and enblend, and I have used both programs to good effect for a long time, so I aimed to use similar techniques in pv. enfuse and enblend rely on multiresolution blending, as published by Peter J. Burt and Edward H. Adelson in their article 'A Multiresolution Spline With Application to Image Mosaics', which Tom Mertens, Jan Kautz and Frank Van Reeth applied to exposure fusion, as described in their article 'Exposure Fusion'.
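To make the multiresolution idea concrete, here is a minimal 1D sketch of the classic Burt & Adelson scheme in C++. It is not pv's implementation (pv uses vspline and b-spline pyramids); the short binomial kernel (.25, .5, .25), the mirrored borders and all names are assumptions chosen for brevity. Each input is split into band-pass (Laplacian) levels, each level is mixed using a blurred copy of the mask at the same scale, and the result is collapsed again.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Minimal 1D sketch of Burt & Adelson multiresolution blending.
// Illustrative assumptions (not pv's code): 1D float signals, mirrored
// borders, binomial kernel (.25, .5, .25), decimation by exactly two.
using Signal = std::vector<float>;

// Sample s at index i, mirroring once at either border (valid for i in [-1, n]).
static float at(const Signal& s, long i) {
  const long n = static_cast<long>(s.size());
  if (n == 1) return s[0];
  if (i < 0) i = -i;
  if (i >= n) i = 2 * (n - 1) - i;
  return s[static_cast<std::size_t>(i)];
}

// 'reduce': low-pass filter, then keep every other sample.
static Signal reduce(const Signal& s) {
  Signal r((s.size() + 1) / 2);
  for (std::size_t k = 0; k < r.size(); ++k) {
    const long i = 2 * static_cast<long>(k);
    r[k] = 0.25f * at(s, i - 1) + 0.5f * at(s, i) + 0.25f * at(s, i + 1);
  }
  return r;
}

// 'expand': put coarse samples on the even positions of a zero-filled grid
// of size n, then filter; the factor 2 makes up for the inserted zeros.
static Signal expand(const Signal& coarse, std::size_t n) {
  Signal up(n, 0.0f);
  for (std::size_t k = 0; k < coarse.size() && 2 * k < n; ++k) up[2 * k] = coarse[k];
  Signal out(n);
  for (std::size_t i = 0; i < n; ++i) {
    const long j = static_cast<long>(i);
    out[i] = 2.0f * (0.25f * at(up, j - 1) + 0.5f * at(up, j) + 0.25f * at(up, j + 1));
  }
  return out;
}

// Blend a and b: mask value 1 takes a, 0 takes b. Each Laplacian level is
// mixed with the matching level of the mask's Gaussian pyramid.
Signal blend(const Signal& a, const Signal& b, const Signal& mask, int levels) {
  assert(a.size() == b.size() && a.size() == mask.size() && !a.empty());
  std::vector<Signal> mixed;  // blended Laplacian levels, fine to coarse
  Signal ga = a, gb = b, gm = mask;
  for (int l = 0; l < levels && ga.size() > 1; ++l) {
    const Signal na = reduce(ga), nb = reduce(gb);
    const Signal ea = expand(na, ga.size()), eb = expand(nb, gb.size());
    Signal mix(ga.size());
    for (std::size_t i = 0; i < ga.size(); ++i)
      mix[i] = gm[i] * (ga[i] - ea[i]) + (1.0f - gm[i]) * (gb[i] - eb[i]);
    mixed.push_back(mix);
    ga = na; gb = nb; gm = reduce(gm);
  }
  // Coarsest level: blend the remaining low-pass signals directly.
  Signal out(ga.size());
  for (std::size_t i = 0; i < out.size(); ++i)
    out[i] = gm[i] * ga[i] + (1.0f - gm[i]) * gb[i];
  // Collapse: expand and add the blended Laplacian levels, coarse to fine.
  for (auto it = mixed.rbegin(); it != mixed.rend(); ++it) {
    Signal up = expand(out, it->size());
    for (std::size_t i = 0; i < up.size(); ++i) up[i] += (*it)[i];
    out = up;
  }
  return out;
}
```

With a mask of all ones the output reproduces the first input, and with all zeros the second; with a mask that steps from 1 to 0, the transition gets spread over a wider range at the coarse levels than at the fine ones, which is the point of the multiresolution spline.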

pv uses b-splines for interpolation, and its image pyramids are a variant based on b-splines. This has proven effective for viewing images with pv for many years now, and it's also what I use for the image pyramids in my implementation of the adapted multiresolution blending algorithm. The result is a fresh, modern implementation based on my library vspline, which is fast because it is multithreaded and uses SIMD code.

I have pushed a prototype which offers both exposure fusion and image stitching with this new code to the master branch of my pv repo. For now the code is Linux-only; if you feel adventurous, do your own merge to the msys2 or mac branches. To try it out, simply load a PTO containing a registered exposure bracket or a panorama into pv, select the ROI and press 'U' for an exposure fusion or 'P' for a stitch. You may want to pass --snapshot_magnification=... on the command line to get larger output; other snapshot-related parameters should apply as well.

Consider this a 'sneak preview': as of this writing, the code is not yet highly optimized, but it should be functional. The output is rendered in the background and may take some time to complete. It's best to work from the command line, where there is some feedback on the process. I'll tweak the code in the days to come, and I intend to provide a version with alpha channel processing as well. I'll post again once everything is 'production grade' and fully documented.

One large benefit of having these new capabilities in pv is that they do away with the need for intermediate images and external helper programs: the intermediates are produced and processed internally in RAM, so there is no disk traffic, and they have full single-precision float resolution, which makes for good image quality. On the downside, because the intermediates are held in RAM, output size is limited.
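To get a feel for why RAM limits the output size, here is a rough, hypothetical estimate (not taken from pv) of the memory one float image plus a full Gaussian pyramid needs. It assumes 4 bytes per channel value, a fixed channel count, and that every level shrinks width and height by the same factor, so the level areas form a geometric series.

```cpp
#include <cassert>
#include <cstdint>

// Rough, hypothetical estimate (not taken from pv) of the RAM needed to
// hold one single-precision float image plus its full Gaussian pyramid.
// Assumption: every level shrinks width and height by 'step', so the
// level areas form the geometric series 1 + 1/step^2 + 1/step^4 + ...,
// which is bounded by 1 / (1 - 1/step^2).
double pyramid_bytes(std::uint64_t width, std::uint64_t height,
                     unsigned channels, double step) {
  assert(step > 1.0);
  const double base = static_cast<double>(width) * static_cast<double>(height) *
                      channels * sizeof(float);  // 4 bytes per channel value
  const double area_ratio = 1.0 / (step * step);   // next level / this level
  return base / (1.0 - area_ratio);                // upper bound of the series
}
```

For a 4000x3000 RGB image this gives about 192 MB with a scaling step of 2.0, and roughly 830 MB with a step of 1.1, before counting the other images of a stitch, the blending masks or any further buffers.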

Kay

Harry van der Wolf

Feb 28, 2021, 1:09:25 PM

to hugi...@googlegroups.com

I just built it on an Ubuntu-derived 64-bit system. It compiles fine. Before testing further, I did the same on my Debian 10 (buster) armhf Raspberry Pi 4. There it ends in an error.

Don't put too much effort into it. The rpi4 is my server, but I just wanted to test whether it would compile there (and then test it via tightvnc).

clang++ -c -Ofast -std=c++11 -D USE_VC -D VECTORIZE -c -fno-math-errno -Wno-unused-value pv_no_rendering.cc -o pv_no_rendering.o

clang++ -c -Ofast -std=c++11 -D USE_VC -D VECTORIZE -c -fno-math-errno -Wno-unused-value pv_initialize.cc -o pv_initialize.o

clang++ -c -D PV_ARCH=PV_FALLBACK -Ofast -std=c++11 -D USE_VC -D VECTORIZE -c -fno-math-errno -Wno-unused-value pv_rendering.cc -o pv_fallback.o

clang++ -c -mavx -D PV_ARCH=PV_AVX -Ofast -std=c++11 -D USE_VC -D VECTORIZE -c -fno-math-errno -Wno-unused-value pv_rendering.cc -o pv_avx.o

clang: warning: argument unused during compilation: '-mavx' [-Wunused-command-line-argument]

clang++ -c -mavx2 -D PV_ARCH=PV_AVX2 -Ofast -std=c++11 -D USE_VC -D VECTORIZE -c -fno-math-errno -Wno-unused-value pv_rendering.cc -o pv_avx2.o

clang: warning: argument unused during compilation: '-mavx2' [-Wunused-command-line-argument]

clang++ -c -mavx512f -D PV_ARCH=PV_AVX512f -Ofast -std=c++11 -D USE_VC -D VECTORIZE -c -fno-math-errno -Wno-unused-value pv_rendering.cc -o pv_avx512f.o

clang: warning: argument unused during compilation: '-mavx512f' [-Wunused-command-line-argument]

clang++ -c -D PV_ARCH=PV_FALLBACK -Ofast -std=c++11 -D USE_VC -D VECTORIZE -c -fno-math-errno -Wno-unused-value pv_combine.cc -o pv_cmb_fallback.o

clang++ -c -mavx -D PV_ARCH=PV_AVX -Ofast -std=c++11 -D USE_VC -D VECTORIZE -c -fno-math-errno -Wno-unused-value pv_combine.cc -o pv_cmb_avx.o

clang: warning: argument unused during compilation: '-mavx' [-Wunused-command-line-argument]

clang++ -c -mavx2 -D PV_ARCH=PV_AVX2 -Ofast -std=c++11 -D USE_VC -D VECTORIZE -c -fno-math-errno -Wno-unused-value pv_combine.cc -o pv_cmb_avx2.o

clang: warning: argument unused during compilation: '-mavx2' [-Wunused-command-line-argument]

clang++ -c -mavx512f -D PV_ARCH=PV_AVX512f -Ofast -std=c++11 -D USE_VC -D VECTORIZE -c -fno-math-errno -Wno-unused-value pv_combine.cc -o pv_cmb_avx512f.o

clang: warning: argument unused during compilation: '-mavx512f' [-Wunused-command-line-argument]

clang++ -DUSE_TINYFILEDIALOGS -std=c++11 -c -o file_dialog.o file_dialog.cc

clang++ -std=c++11 -o pv pv_no_rendering.o pv_initialize.o pv_fallback.o pv_avx.o pv_avx2.o pv_avx512f.o pv_cmb_fallback.o pv_cmb_avx.o pv_cmb_avx2.o pv_cmb_avx512f.o file_dialog.o -lpthread -lVc -lvigraimpex -lsfml-window -lsfml-graphics -lsfml-system -lexiv2

/usr/bin/ld: pv_no_rendering.o: in function `__cxx_global_var_init.375':

pv_no_rendering.cc:(.text.startup[_ZN4Vc_16detail12RunCpuIdInitILi0EE3tmpE]+0x18): undefined reference to `Vc_1::CpuId::init()'

/usr/bin/ld: pv_no_rendering.o: in function `_GLOBAL__sub_I_pv_no_rendering.cc':

pv_no_rendering.cc:(.text.startup+0x6ec): undefined reference to `Vc_1::CpuId::s_processorFeatures7B'

/usr/bin/ld: pv_no_rendering.cc:(.text.startup+0x6f8): undefined reference to `Vc_1::CpuId::s_processorFeaturesC'

clang: error: linker command failed with exit code 1 (use -v to see invocation)

make: *** [makefile:33: pv] Error 1


Kay F. Jahnke

Mar 1, 2021, 2:40:46 AM

to hugi...@googlegroups.com

On 28.02.21 at 19:09, Harry van der Wolf wrote:

> I just build it on a Ubuntu derived 64bit system. It compiles fine.

Great! Thanks for reporting back. Do try it out, and share your experience. To me, the implementation of the Burt/Adelson algorithm is a major breakthrough.

> Before testing further I did the same on my Debian 10 buster armhf Raspberry pi 4. Now it ends in error.

> Don't put too much effort in it. The rpi4 is my server, but I just wanted to test if it would compile there (and then test it via tightvnc).

pv is currently Intel/AMD only, and the Raspi uses an ARM processor. I'd like to try a port to ARM eventually, especially to support newer Macs, but this will require a bit more fiddling than just supporting yet another Intel ISA.

What would be required is quite different compiler flags for the rendering code (so, not -mavx etc.) and setting up the dispatcher to send rendering jobs to the code compiled for the right ISA.
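A minimal sketch of the kind of run-time dispatch described here, assuming GCC or clang: on x86, the compiler builtin `__builtin_cpu_supports` queries the CPU, and every other target falls back to portable code. The back-end functions are hypothetical placeholders; in a real build each one would be compiled in its own translation unit with its own flags (like the -mavx2 build lines above), which this single-file sketch does not attempt.

```cpp
#include <cassert>
#include <string>

// Placeholder back-ends (hypothetical names); a real program would compile
// each one separately with ISA-specific flags.
using render_fn = std::string (*)();

std::string render_fallback() { return "fallback"; }

#if defined(__x86_64__) || defined(__i386__)
std::string render_avx2() { return "avx2"; }
#endif

// Pick a back-end by querying the CPU at run time.
render_fn select_renderer() {
#if (defined(__x86_64__) || defined(__i386__)) && (defined(__GNUC__) || defined(__clang__))
  // GCC/clang builtin that checks CPUID for AVX2 support (x86 only).
  if (__builtin_cpu_supports("avx2")) return &render_avx2;
#endif
  return &render_fallback;  // ARM and other targets: portable code
}

// Decide once, then route every rendering job through the chosen back-end.
std::string render_dispatched() {
  static const render_fn fn = select_renderer();
  assert(fn != nullptr);
  return fn();
}
```

Porting to ARM then mostly means adding ARM-specific back-ends and a corresponding branch in the selection function; until then, the fallback path keeps the program working.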

This should not be too hard if Vc supports ARM well, and if it doesn't, pv can fall back on code which isn't using Vc. The problem is that I don't have any ARM hardware, so it's hard for me to test. Would you like to cooperate on an ARM port? I could produce code which should run on ARM, but lacking testing hardware, I'd probably need several attempts before it comes out right. I'd set up an ARM branch; you can watch it for new commits, try the build and communicate via the issue tracker.

Kay


Monkey

Mar 1, 2021, 8:10:11 AM

to hugin and other free panoramic software

> ...and its use of image pyramids relies on a variant of image pyramids based on b-splines.

> To me, the implementation of the Burt/Adelson Algorithm is a major breakthrough.

Is there a simple way to explain how this/these differ from a standard Laplacian pyramid?

Harry van der Wolf

Mar 1, 2021, 11:07:19 AM

to hugi...@googlegroups.com

Hi,

I understand that ARM is different. That's also why I mentioned to not put too much effort into it.

Yes, I'm willing to cooperate on porting to armhf for the Raspberry Pis. I also have another rpi4, which currently runs Ubuntu MATE 20.04, but my (headless) server is always on.

I use the other one for all kinds of things, so helping to port pv could be one of them.

I have no idea, though, how much the "normal" ARM chips differ from the new Apple M1. That might be a completely different beast (at least the 8-core is, when it comes to performance).

I read that Apple uses Rosetta (version 2) for running Intel-based programs on the new M1, just like 10-12 years ago, when they switched from PowerPC to Intel and ran PowerPC apps on Intel. I also guess universal binaries will come to life again. (At that time I took over building Hugin on Mac from Ippei Ukai, a platform I left in 2012 or so for being too closed.)

So maybe you don't have to port immediately, but can simply run your Intel-based macOS version on the new M1s.

On the RPi4 I also did:

git clone https://github.com/VcDevel/Vc.git

cd Vc

git checkout 1.4

mkdir build

cd build

cmake -DCMAKE_CXX_COMPILER=clang++ -DBUILD_TESTING=0 ..

make

sudo make install


And then I tried to rebuild pv, but that doesn't make a difference: the exact same error.

======================================

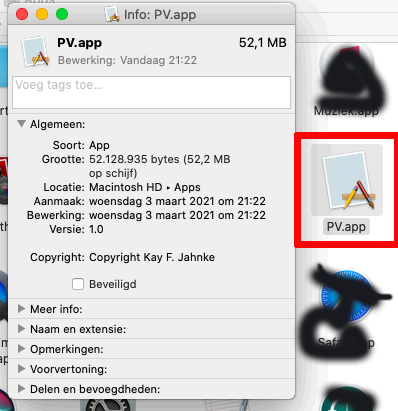

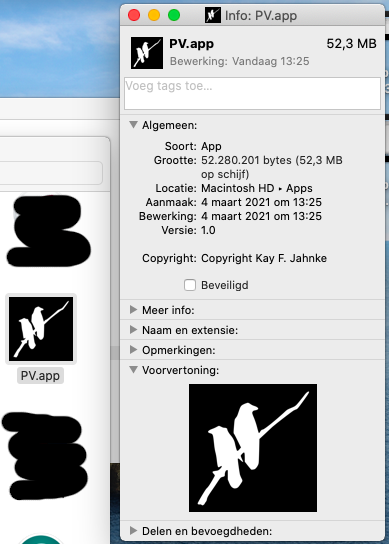

With regard to Intel macOS: I had just built multiblend for David on my old (by now terribly slow) 2008 MacBook Pro, so I tried the same for pv, also using MacPorts (as Homebrew does not have vigra and I want to keep it simple).

(Install MacPorts using the .pkg installer.)

(From the terminal, do: sudo port -v selfupdate)

Getting dependencies:

sudo port install sfml

sudo port install exiv2

sudo port install vigra -python38 -python39

sudo port install Vc

(This installs a whole lot of other dependencies as well. Note also the uppercase in Vc.)

Get and build pv:

git clone https://bitbucket.org/kfj/pv

cd pv

git checkout mac

git status (to check whether you have the mac branch)

make

It builds fine and I only did some viewing of images until now.

Harry

T. Modes

Mar 1, 2021, 11:59:03 AM

to hugin and other free panoramic software

I find this a little bit sad. Instead of pooling development effort, everyone is working on their own code.

Yes, it is easier to work on one's own code. But in the long run it would be better to combine our development skills and work on a common code base.

This would also have the advantage that code doesn't need to be written and debugged several times over in several iterations.

So please consider working on a common code base, or at least port the improvements to enblend/enfuse.

Thomas

Kay F. Jahnke

Mar 2, 2021, 5:20:14 AM

to hugi...@googlegroups.com

On 01.03.21 at 14:10, Monkey wrote:

> > and its use of image pyramids relies on a variant of image pyramids based on b-splines.
>
> > To me, the implementation of the Burt/Adelson Algorithm is a major breakthrough.
>
> Is there a simple way to explain how this/these differ from a standard Laplacian pyramid?

My code uses a different pyramid generation scheme for the Gaussian pyramids. The Laplacian pyramids are not made explicit but calculated on the fly by functor composition. The result is the same (the difference between a scaled-up 'top' level and the unmodified 'bottom' level at every stage), but the process is less memory-hungry and uses vspline's fast multithreaded SIMD code to do the operation in one go.
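The following C++ sketch illustrates the general idea of a Laplacian level that is computed on demand through functor composition instead of being stored. The 1D setting, the names and the linear-interpolation 'expand' (a degree-1 b-spline) are assumptions for illustration; vspline's actual functor machinery is different and SIMD-aware.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// Sketch of a Laplacian level that is never stored: it is a functor
// composed from the fine level and an 'expand' functor over the coarse
// level. Illustrative assumptions (not pv/vspline code): 1D data,
// decimation by two, and expansion by a degree-1 b-spline (linear
// interpolation). The functors hold references, so the vectors must
// outlive them.
using Fn = std::function<float(std::size_t)>;

// Evaluate the coarse level at fine-grid index i, i.e. at coarse position i / 2.
Fn make_expand(const std::vector<float>& coarse) {
  assert(!coarse.empty());
  return [&coarse](std::size_t i) -> float {
    const double x = static_cast<double>(i) / 2.0;
    const double last = static_cast<double>(coarse.size() - 1);
    if (x >= last) return coarse.back();  // clamp beyond the last sample
    const std::size_t i0 = static_cast<std::size_t>(x);
    const float t = static_cast<float>(x - static_cast<double>(i0));
    return (1.0f - t) * coarse[i0] + t * coarse[i0 + 1];
  };
}

// laplacian(i) = fine(i) - expanded(i), computed only when requested.
Fn make_laplacian(const std::vector<float>& fine, Fn expanded) {
  return [&fine, expanded](std::size_t i) -> float {
    return fine[i] - expanded(i);
  };
}
```

A blending loop could then call the composed functor while writing its output, so no Laplacian array has to be allocated; the price is that the 'expand' work is redone whenever a value is requested.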

Standard Gaussian pyramids have a set of samples at each level. To get the next smaller level (the 'reduce' stage), a digital low-pass filter is applied (usually a small-kernel FIR filter), and the result is decimated by discarding every other sample. Everything works along the initial sampling grid, and every pyramid level is precisely half as large as the previous one. The 'expand' stage uses the same FIR filter for upscaling from a grid filled with zeros, where only every other sample is filled in from the 'top' level.
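Written out for 1D signals, with a symmetric three-tap kernel $w$ (here assumed to be the binomial $w[-1] = w[1] = \tfrac14$, $w[0] = \tfrac12$; the notation is ours, not pv's or the paper's), the classic stages read:

```latex
\begin{aligned}
g_{l+1}[k] &= \sum_{m=-1}^{1} w[m]\, g_l[2k+m] && \text{(reduce)}\\
\tilde g_{l+1}[i] &= \begin{cases} g_{l+1}[i/2] & i \text{ even}\\ 0 & i \text{ odd} \end{cases} && \text{(zero-filled grid)}\\
e_l[i] &= 2 \sum_{m=-1}^{1} w[m]\, \tilde g_{l+1}[i-m] && \text{(expand)}\\
L_l[i] &= g_l[i] - e_l[i] && \text{(Laplacian level)}
\end{aligned}
```

The factor 2 compensates for the inserted zeros (in 2D it becomes 4). With this particular kernel, the expand step yields $e_l[2k] = g_{l+1}[k]$ and $e_l[2k+1] = \tfrac12\left(g_{l+1}[k] + g_{l+1}[k+1]\right)$, i.e. plain linear interpolation of the coarser level.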

My pyramid technique has a b-spline at each level. This is a continuous function, and low-pass filtering is done by modifying the b-spline evaluation function; the resulting (continuous) low-pass signal can then be sampled at arbitrary positions. This method is grid-free: you can choose decimation steps other than two, and you're free to place the samples which the smaller-level spline is built from at off-grid positions. I use this in pv to get a set of splines which have the same boundary behavior, so by slotting in a simple shift+scale, I can evaluate them with the same coordinates at every level, which simplifies the code a great deal. The 'expand' stage of the pyramid uses plain b-spline evaluation, which is good for upscaling a signal.
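As a rough illustration of grid-free decimation, this sketch low-passes a 1D signal and then samples a continuous interpolant at positions k * step for any real step greater than one. To keep it short it uses a degree-1 b-spline (linear interpolation, which needs no prefiltering) and a separate binomial filter pass; pv's higher-degree splines and its low-pass built into the evaluation are not reproduced here, and the function name is hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Sketch of a 'reduce' step with an arbitrary real scaling factor.
// Assumptions (illustration, not pv's implementation): 1D data, low-pass
// by the binomial (.25, .5, .25) kernel with mirrored borders, and a
// degree-1 b-spline (linear interpolation) as the continuous signal.
std::vector<float> reduce_by(const std::vector<float>& s, double step) {
  assert(s.size() >= 2 && step > 1.0);
  const std::size_t n = s.size();
  // 1. Low-pass filter on the original grid, mirroring at the borders.
  std::vector<float> lp(n);
  for (std::size_t i = 0; i < n; ++i) {
    const std::size_t l = (i == 0) ? 1 : i - 1;
    const std::size_t r = (i + 1 == n) ? n - 2 : i + 1;
    lp[i] = 0.25f * s[l] + 0.5f * s[i] + 0.25f * s[r];
  }
  // 2. Spread the same extent (n - 1 intervals) over fewer samples.
  const std::size_t m = static_cast<std::size_t>(std::floor((n - 1) / step)) + 1;
  std::vector<float> out(m);
  for (std::size_t k = 0; k < m; ++k) {
    const double x = k * step;  // off-grid position in the finer level
    const std::size_t i0 = static_cast<std::size_t>(x);
    if (i0 >= n - 1) { out[k] = lp[n - 1]; continue; }
    const float t = static_cast<float>(x - static_cast<double>(i0));
    out[k] = (1.0f - t) * lp[i0] + t * lp[i0 + 1];  // evaluate the spline
  }
  return out;
}
```

For a linear ramp of 10 samples, a step of 2.0 gives 5 samples and a step of 1.5 gives 7, the latter taken at positions such as 1.5 that lie between the original grid points.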

pv offers the parameter 'pyramid_scaling_step', which lets you build 'steeper' or 'shallower' pyramids by scaling each level with an arbitrary real factor: 2.0 by default, but sensible values go down to, say, 1.1. You can simply try it out and see if it makes any difference. The effect is subtle and hard to spot unless you know what to look for; if you're interested, I can say more about it.
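To get a feel for what 'steeper' and 'shallower' mean in practice, this hypothetical helper (not part of pv) counts how many levels a pyramid gets for a given scaling step before a level drops below a minimum width.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper (not pv's code): number of pyramid levels when each
// level is smaller than the previous one by 'step', stopping once a level
// would be narrower than 'min_width' samples.
std::size_t level_count(double width, double step, double min_width) {
  assert(step > 1.0 && min_width > 0.0);
  std::size_t levels = 0;
  for (double w = width; w >= min_width; w /= step) ++levels;
  return levels;
}
```

With a width of 1000 samples and a minimum of 8, a step of 2.0 gives 7 levels, while a step of 1.1 gives 51, so a shallow pyramid means many more levels to compute and blend.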

Employing my spline-based pyramid technique to implement an equivalent of the 'classic' Burt and Adelson (B&A) approach is experimental, but with the latest pv commits I provide a working implementation, so it can be evaluated and experimented with, to see where its advantages and disadvantages are and to figure out where my initial choices in mimicking the B&A algorithm can be improved. It may well turn out to be inferior to the classic approach. The intention in pv is to produce 'decent' stitches and exposure fusions for the currently displayed view; if I can get the quality up to a level which approaches the standard technique, this would be great, but I'm not making such claims yet.

To sum it up, the main differences from 'classic' pyramids and B&A in the current pv code are: off-grid evaluation for up- and downsampling, an arbitrary scaling factor from one pyramid level to the next, use of a small binomial FIR filter (.25, .5, .25) for low-pass filtering, and use of b-spline evaluation for upsampling.
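The frequency response of the binomial filter (.25, .5, .25) mentioned above shows why it is a reasonable low-pass before halving the sampling rate: it passes a constant signal unchanged and completely suppresses the Nyquist frequency. How well it suits the smaller scaling steps discussed above is a separate question that this formula does not answer.

```latex
H(\omega) = \tfrac14 e^{-i\omega} + \tfrac12 + \tfrac14 e^{i\omega}
          = \tfrac12 + \tfrac12 \cos\omega
          = \cos^2\!\left(\tfrac{\omega}{2}\right),
\qquad H(0) = 1, \quad H(\pi) = 0
```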

I hope this answers your question. The B&A algorithm and image pyramids are quite involved stuff, and it's hard to explain the differences 'simply' because the algorithm itself is not simple.

Kay


Kay F. Jahnke

Mar 2, 2021, 5:43:16 AM

to hugi...@googlegroups.com

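On 01.03.21 at 17:07, Harry van der Wolf wrote:

> Hi,
>
> I understand that ARM is different. That's also why I mentioned to not put too much effort into it.

It should not be too hard to get pv to run on ARM; the question is how *fast* I can make it. I can set up the compilation to not use Vc for the SIMD code, which makes everything standard C++11, with no hardware dependencies. If Vc can be used, this should make it faster.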

> Yes, I'm willing to cooperate in porting to armhf for the raspberry pi's. I also have another rpi4 which runs Ubuntu Mate 20.04 (currently), but my (headless) server is always on.
>
> But I use the other one for all kind of things, so helping to port pv could be another option.

I'll set up a branch called 'native' which will offer three targets:

- scalar version, not making any attempt at SIMD

- vspline SIMD implementation, using vspline's SIMD emulation

- Vc SIMD implementation, attempting to use Vc on ARM

All of the targets will be compiled with '-march=native', telling the compiler to compile for the ISA it's running on. The binaries will only run on the build machine or better. Then we can take it from there; the Vc target in particular may not build without tweaking.
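For the 'native' targets the ISA is fixed at compile time. This small sketch reports which SIMD extension the compiler was allowed to use, based on macros that GCC and clang predefine (for example `__AVX2__` on x86 or `__ARM_NEON` on ARM). With -march=native these follow the build machine's CPU, which is why such a binary may not run on an older processor. The function name is hypothetical.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: report the widest SIMD extension the compiler was
// permitted to use for this translation unit. The macros are predefined
// by GCC/clang according to -march/-m flags (e.g. -march=native).
std::string compiled_isa() {
#if defined(__AVX512F__)
  return "AVX512F";
#elif defined(__AVX2__)
  return "AVX2";
#elif defined(__AVX__)
  return "AVX";
#elif defined(__SSE2__)
  return "SSE2";
#elif defined(__ARM_NEON) || defined(__ARM_NEON__)
  return "NEON";
#else
  return "scalar";
#endif
}
```

Building the same file once with -march=native and once without it and comparing the reported strings shows how much the flag changes the generated code's requirements.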

I'll get in touch once I have the branch set up, and you can pull the branch and see whether it compiles on your ARM machine.

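> I have no idea though how much the "normal" ARM chips vary from the new Apple M1. That might be a completely different beast (the 8-core is at least when it comes to performance).
>
> I read that Apple uses the same Rosetta (V2) for running Intel based programs on the new M1. (Just like 10-12 years ago when they switched from PowerPC to Intel to run PowerPC apps on Intel. I also guess the universal binaries will come to life again. At that time I took over from Ippei Ukai for building Hugin on Mac. A platform I left in 2012 or so for being too closed.)
>
> So maybe you do not have to port immediately, but can simply run your intel based MacOS version on the new M1's.

No, let's give it a go. It's not much work on my side, a first try on your side takes a few minutes, and if it succeeds, we have an ARM port of pv to play with. I anticipate that the 'native' approach should even work on all platforms (including Intel/AMD), but the resulting binaries will be hardware-dependent. This is why I went for automatic ISA detection in the Intel/AMD version, to make things easier for users and packagers.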

>

>

> On the RPi4 I also did the:

> git clone https://github.com/VcDevel/Vc.git

> cd Vc

> git checkout 1.4

> mkdir build

> cd build

> cmake -DCMAKE_CXX_COMPILER=clang++ -DBUILD_TESTING=0 ..

> make

> sudo make install

> And then tried to rebuild pv, but that doesn't make a difference. The exact same error.

Wait for the new branch; hopefully the outcome will be better.

> ======================================

> With regard to Intel MacOS: I had just built multiblend for David on my old (by now terribly slow) 2008 MacBook Pro, so I tried the same for pv also using macports (as home brew does not have vigra and I want to keep it simple).

> (install macports using pkg)

> (from terminal do a: sudo port -v selfupdate)

>

> Getting dependencies:

> sudo port install sfml

> sudo port install exiv2

> sudo port install vigra -python38 -python39

> sudo port install Vc

> (This installs a whole lot of other dependencies as well. Note also the uppercase in Vc.)

>

> Get and build pv:

> git clone https://bitbucket.org/kfj/pv

> cd pv

> git checkout mac

> git status (to check whether you have the mac branch)

> make

>

> It builds fine and I only did some viewing of images until now.

Thank you for confirming a Mac build! This is great: you're now only the third person I know of who has succeeded in building pv on a Mac. I don't have a Mac myself, so it's hard for me to code for it. Please note that the mac branch has not yet been updated to contain my new code; I'll do that soon-ish. Old Macs are like old PCs: they won't have fast SIMD units. I routinely build on an old IBM ThinkPad R60e with a Core 2 Duo to confirm that the code works on 32-bit Intel hardware with SSE only. It works, but it takes forever... My desktop is a Haswell Core i5, which has AVX2. This is where it starts being fun :D

Since you're the one who builds Hugin on the Mac, maybe you can give me a hint on how to write a 'port file' to provide a pv package for MacPorts? Is that hard? And is it hard to provide a package which users can simply download from the App Store? I proposed bundling pv with Hugin, because it would make a good addition to the suite. What do you say?

Kay


Kay F. Jahnke

Mar 2, 2021, 6:00:38 AM

to hugi...@googlegroups.com

On 01.03.21 at 17:59, T. Modes wrote:

> I found this a little bit sad. Instead of merging the developing power everyone is working on its own code.

pv started out as a demo program for my b-spline interpolation library, vspline. Initially it only displayed single images. Then I extended it to display synoptic views of several images, and the natural file format for this is PTO, which is widely used, so I extended pv to read PTO format. But all the code in pv and vspline is completely new; it does not rely on libpano or libhugin at all, and I can see no way of easily integrating it into Hugin or its helper programs.

> Yes, it is easier to work on own code. But in the long run it would
> be better to combine the developing skills and work on a common code base.
> This would also have the advantage that not all code needs to be written
> and debugged several times in several iterations.

The code base is totally different. pv and hugin code are quite

incompatible, the only common ground is the use of PTO format. pv is a

panorama viewer, and stitching is only a nice-to-have recent addition.

> So please consider working at a common code base or at least port the

> improvements to enblend/enfuse.

I took nothing from enblend/enfuse but the idea of what I wanted and the

choice of the Burt and Adelson image splining algorithm. My code is for

embedded use in pv, and it's quite alien to enblend and enfuse, as I

have explained in my reply to Monkey concerning the use of image

pyramids. There is nothing to port as of yet.
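
The Burt and Adelson image splining algorithm mentioned above can be summarized as: decompose both inputs into frequency bands (a Laplacian pyramid), smooth the blend mask to each band's scale (a Gaussian pyramid of the mask), blend band by band, then collapse the pyramid. Below is a minimal one-dimensional sketch in plain Python, for illustration only. The 5-tap binomial kernel and the mirrored borders are assumptions of this sketch; pv works on 2D images with b-spline-based pyramids, and none of the function names below come from pv or vspline.

```python
# 1D sketch of Burt & Adelson style multiresolution blending.
# Assumption: 5-tap binomial kernel [1, 4, 6, 4, 1] / 16 (pv uses b-spline pyramids).
KERNEL = [1 / 16, 4 / 16, 6 / 16, 4 / 16, 1 / 16]


def mirror(j, n):
    """Reflect index j into [0, n-1] (whole-sample symmetric extension)."""
    if n == 1:
        return 0
    period = 2 * (n - 1)
    j %= period
    return j if j < n else period - j


def blur(signal):
    """Convolve with KERNEL, mirroring the signal at both borders."""
    n = len(signal)
    return [sum(w * signal[mirror(i + k - 2, n)] for k, w in enumerate(KERNEL))
            for i in range(n)]


def reduce_level(signal):
    """REDUCE: low-pass filter, then keep every second sample."""
    return blur(signal)[::2]


def expand_level(signal, length):
    """EXPAND: upsample to `length` by zero insertion, then low-pass.

    The factor 2 compensates for the inserted zeros.
    """
    up = [0.0] * length
    for i, value in enumerate(signal):
        up[2 * i] = 2.0 * value
    return blur(up)


def gaussian_pyramid(signal, levels):
    """Successively reduced copies [G0, G1, ..., G_levels]."""
    pyramid = [list(signal)]
    for _ in range(levels):
        pyramid.append(reduce_level(pyramid[-1]))
    return pyramid


def laplacian_pyramid(signal, levels):
    """Band-pass details L_k = G_k - EXPAND(G_k+1), plus the coarsest G."""
    gauss = gaussian_pyramid(signal, levels)
    pyramid = []
    for fine, coarse in zip(gauss, gauss[1:]):
        expanded = expand_level(coarse, len(fine))
        pyramid.append([f - e for f, e in zip(fine, expanded)])
    pyramid.append(gauss[-1])
    return pyramid


def collapse(pyramid):
    """Invert laplacian_pyramid: add the details back from coarse to fine."""
    current = pyramid[-1]
    for detail in reversed(pyramid[:-1]):
        expanded = expand_level(current, len(detail))
        current = [d + e for d, e in zip(detail, expanded)]
    return current


def multiresolution_blend(a, b, mask, levels=3):
    """Blend a and b; mask is 1.0 where a should win and 0.0 where b should."""
    lap_a = laplacian_pyramid(a, levels)
    lap_b = laplacian_pyramid(b, levels)
    weights = gaussian_pyramid(mask, levels)  # mask smoothed to each band's scale
    blended = [
        [w * x + (1.0 - w) * y for x, y, w in zip(band_a, band_b, band_w)]
        for band_a, band_b, band_w in zip(lap_a, lap_b, weights)
    ]
    return collapse(blended)


if __name__ == "__main__":
    row = multiresolution_blend([1.0] * 32, [0.0] * 32,
                                [1.0] * 16 + [0.0] * 16, levels=2)
    print([round(v, 3) for v in row])
```

Running it blends a row of ones into a row of zeros: the output starts at 1.0 and ends at 0.0, but instead of jumping at index 16 it changes gradually across the seam, with every value between 0 and 1. The per-band weighting is the point of the method: coarse structure transitions over a wide zone, fine detail over a narrow one.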

If enblend/enfuse developers are interested in my new implementation of the B&A algorithm, they are free to use it - it's all FOSS (GPL v3) and amply documented. For now I recommend just playing with my new code to see if it works out fine - it's still experimental and may not turn out to be as good as the 'classic' implementation in enblend/enfuse. And enblend/enfuse have a much wider selection of parameters and variants and integrate well with hugin, so they will be the standard for some time to come, and they do what they do well.

Kay

Harry van der Wolf

Mar 2, 2021, 7:53:45 AM

to hugi...@googlegroups.com

On Tue, 2 Mar 2021 at 11:43, 'Kay F. Jahnke' via hugin and other free panoramic software <hugi...@googlegroups.com> wrote:

> Thank you for confirming a Mac build! This is great, you're now only the third person I know of who's succeeded in building pv on a Mac. I don't have a Mac myself, so it's hard for me to code for it. Please note that the mac branch has not yet been updated to contain my new code; I'll do that soon-ish. Old Macs are like old PCs: they won't have fast SIMD units. I routinely build on an old IBM ThinkPad R60e with a Core 2 Duo to confirm that the code works on 32-bit Intel hardware with SSE only. It works, but it takes forever... My desktop is a Haswell Core i5, which has AVX2. This is where it starts being fun :D
>
> Since you're the one who's building hugin on the Mac, maybe you can give me a hint on how to write a 'port file' to provide a pv package for MacPorts? Is that hard? And is it hard to provide a package which users can simply download from the App Store? I proposed bundling pv with hugin because it would make a good addition to the suite. What do you say?
>
> Kay

I was not correct, or at least not clear enough, in my answer. I did take over from Ippei Ukai, but I also mentioned that I abandoned the Mac in 2012/2013 or so. I simply never threw my 2008 MacBook away.

At that time I also maintained the MacPorts build of hugin and enblend/enfuse, but that is now also long, long ago. But I will have a look at it.

The current Hugin builders on Mac are Niklas Mischkulnig and Bob Campbell. All credit to them (not to me).

I certainly do not have an Apple M1 and will not buy one either. I have been a happy Linux user since 1993 (early adopter) and simply wandered off for a couple of years, both to Apple and Windows.

Making a package for the macOS App Store is a different thing.

You have to register as a developer at Apple. I did a complete rant some days ago about how I hate how Apple does things, but also realised at that time that I had registered around 2008, when I took over from Ippei. And yes, that still worked.

- So, you have to register at Apple as a developer, giving quite some info.

- You have to offer your bundle to Apple, where Apple checks for malware/Apple standards/"other things". That is actually a good thing, but it will take time, and sometimes your bundle is rejected while you know it is perfectly fine. I have a Java app which I also packaged as a macOS bundle. Apart from the fact that I won't release it via the App Store, it would not be accepted anyway, as it is built outside Xcode and therefore lacks some controls/standards (and Apple spyware?).

With regard to bundling with Hugin:

I agree with Thomas that where possible we should integrate. You already explained the big differences in code, so that might not be possible. I can't judge that as I'm not a C/C++ programmer.

As it is all open source, it might still be possible to migrate/integrate it. And otherwise: could pv be called from hugin to run as an enblend replacement, just like multiblend can be called from hugin (with a simpler command set) as an enblend replacement?

Also: panomatic was first an external tool and most of it is now integrated in cpfind.

I hope we see the same for pv blending/fusing functionality if it really is an improvement.

My personal point of view is to help where I can if "something" can mean an improvement for all of us. And if it is mature try to integrate it.

Harry

Kay F. Jahnke

Mar 3, 2021, 4:32:50 AM

to hugi...@googlegroups.com

On 02.03.21 at 13:53, Harry van der Wolf wrote:

Sorry, I did not read your mail properly. I can sympathize with leaving the Mac universe. I've been a Linux early adopter as well - working on Unix networks in the nineties changed my views as to what an OS should be like, and I started out with Xenix, then Slackware Linux on my home PC. Later on I used Windows for some time, with Cygwin to have a POSIX environment. Then it was back to Linux again, happy as you are. I keep a Windows 10 install on one of my ThinkPads to run Windows builds of pv, so that I can distribute Windows binaries to people who don't want to build themselves.

> The current Hugin builders on Mac are Niklas Mischkulnig and Bob

> Campbell. All credits to them (not to me).

Maybe they'll take note of pv and become interested in the mac version -

after all the branch is already there and you demonstrated that it's

dead easy to create a binary. Sadly, my work so far has not got much

attention.

> I certainly do not have an Apple M1 and will not buy one either. I am a

> happy Linux user since 1993 (early adapter) and simply wandered off for

> a couple of years, both to Apple and Windows.

I must admit that the M1 is very attractive, though. It might be worthwhile to just buy a small machine with this chip - I'd like a MacBook Air, because I don't have an up-to-date laptop - my newest ThinkPad is eight years old or so.

> Making a package for the MacOS app store is a different thing.

> You have to register as a developer at Apple. I did a complete rant some

> days ago about how I hate how Apple does things, but also realised at

> that time that I had registered around 2008, when I took over from

> Ippei. And yes, that still worked.

I wouldn't want to do that if I can help it - that's why I was hoping to

piggyback on the hugin suite. I still think it would make a nice

addition. Where is the good cross-platform FOSS panorama viewer in the

hugin package? I was frustrated because I could not find one, so I wrote

one. I hear others use 'Panini' but I never managed to get it to work

for me. So here's pv.

> - So, you have to register at Apple as a developer giving quite some info.

> - You have to offer your bundle to Apple where Apple checks for

> malware/Apple standards/"other things". That is actually a good thing,

> but will take time and sometimes your bundle is rejected while you know

> it is perfectly fine. I have a java app which I also packaged as a MacOS

> bundle. Apart from the fact that I won't release it via the apple store,

> it will not be accepted either as it is built outside Xcode thereby

> lacking some controls/standards (and Apple spyware?).

I wouldn't be surprised...

> With regard to bundling with Hugin:

> I agree with Thomas that where possible we should integrate. You already

> explained the big differences in code, so that might not be possible. I

> can't judge that as I'm not a C/C++ programmer.

It is in fact completely new technology. Potentially disruptive. It's

probably not too hard to extract the blending and fusing code into a

separate program, but the beauty of pv is in the total integration of

all components - you simply load your PTO file into pv like any other

image, adjust the view until you have what you want, and press 'P' to

have a panorama rendered in the background. No intermediate images on

disk, no helper programs. Once the stitch is rendered, you can simply

open pv's file select dialog (press 'F') and load the freshly-made

panorama in the same session. Same goes for exposure fusions or HDR merges.

> As it is all open source, it might still be possible to

> migrate/integrate it. And otherwise: could it be possible to call pv

> from hugin to run it as enblend replacement. Just like multiblend can

> be called from hugin (with a simpler command set) as enblend replacement?

> Also: panomatic was first an external tool and most of it is now

> integrated in cpfind.

> I hope we see the same for pv blending/fusing functionality if it really

> is an improvement.

Now it's my turn to be 'a bit sad'. I've spent about seven man-years creating a b-spline library and a beautiful, powerful panorama viewer which can now even do some stitching and exposure fusion on top of everything else. It would be sad to just rip it apart and take a few bits as helper programs for hugin. Think of pv as a 'preview on steroids'. There was a discussion in this group about whether it wouldn't make sense to work more from the preview window. pv is my answer to this discussion: it's what used to be called 'WYSIWYG'. Pretty much everything happens immediately; you see all parameter changes 'live'. The only things which I can't show 'live' are exposure fusions and stitches, because the B&A algorithm takes a lot of time. I may be able to tweak it so that I can produce a few frames per second showing stitches and fusions live, but for now they are rendered by a background thread to a file on disk. For playing with my stitching and fusion code, this should be good enough for now.
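
Rendering "by a background thread to a file on disk" while the UI stays responsive can be sketched in a few lines of Python. This is a minimal illustration, not pv's code: `render_in_background` and `run_viewer_loop` are hypothetical names, the 'render' just writes a tiny PGM image after a delay, and writing to a temporary name and renaming it into place once complete is an assumption of this sketch (a common way to keep a viewer from picking up a half-written file), not something the post says pv does.

```python
import os
import tempfile
import threading
import time


def render_in_background(output_path, done):
    """Hypothetical stand-in for a slow stitch or fusion job."""
    width, height = 4, 2
    pixels = bytes(range(0, 256, 32))  # 8 grey levels: 0, 32, ..., 224
    time.sleep(0.1)  # pretend the blending takes a while
    partial_path = output_path + ".part"
    with open(partial_path, "wb") as f:
        f.write(f"P5\n{width} {height}\n255\n".encode("ascii"))  # binary PGM header
        f.write(pixels)
    # Atomic on POSIX when both paths are on the same filesystem.
    os.replace(partial_path, output_path)
    done.set()


def run_viewer_loop():
    """Keep 'drawing frames' while the worker thread renders."""
    output_path = os.path.join(tempfile.mkdtemp(), "stitch.pgm")
    done = threading.Event()
    worker = threading.Thread(
        target=render_in_background, args=(output_path, done), daemon=True
    )
    worker.start()
    frames = 0
    while not done.is_set():
        frames += 1  # a real viewer would render a frame here
        time.sleep(0.01)
    worker.join()
    return output_path, frames


if __name__ == "__main__":
    path, frames = run_viewer_loop()
    print(f"{path} written while {frames} frames were drawn")
```

The main loop never waits on the blend; it only polls an event once per frame, which is what keeps panning and zooming interactive while a slow job runs.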

I even made it easy to use it as a preview for hugin and the likes: if you work on a panorama in hugin, you can just have a pv window open to the same PTO. As soon as you save the PTO in hugin, you can simply press 'F1' in pv and it will update to the changes in the PTO. I see pv rather as a third 'preview' window which hugin etc. can use alongside the other two, which are good for some tasks where my code is not - I don't handle all panotools projections, I don't deal with control points, I can't switch individual images on and off... - but I sure can give a good view of the PTO.

> My personal point of view is to help where I can if "something" can mean

> an improvement for all of us. And if it is mature try to integrate it.

If my code turns out to be good, I'd be happy to see it used by others. I just don't want to go fiddling with other people's software to force my new stuff in. Integration into extant software is better done by the original authors, who know their way around their code. As far as pv and its new components are concerned, I want to stay upstream and focus on innovation.

Now for the attempt to port pv to ARM. I have prepared the branch I announced yesterday, and you can try whether it compiles on your Raspi:

git checkout native

make

This will try to build three targets: pv_scalar, pv_vspsimd and pv_vcsimd. You can also build them separately if you like; it's a phony 'all' target compiling the lot. The first one makes no attempt at using SIMD code, the second one uses vspline's 'implicit' SIMD code, and the third uses Vc. I think the first two will build on the Raspi; the third may or may not. If any of the targets build, you should have a functional pv variant to run on the same machine it was built on (it uses -march=native). If the target using Vc builds, it should be the fastest. Please try it out and let me know how it went!

When I build the 'native' branch targets on my desktop machine, the

performance difference is quite noticeable. My standard test is a

1000-frame automatic pan over a full spherical panorama. The per-frame

rendering times I get are roughly like this:

- pv_vcsimd: 7.1 msec

- pv_vspsimd: 12.4 msec

- pv_scalar: 15.5 msec

So the Vc version is more than twice as fast as the scalar one, and the

'implicit SIMD' version comes out in the middle.
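
To put these figures in perspective, here is a short Python calculation of each build's speedup over pv_scalar and the frame rate implied by its per-frame time (1000 ms divided by the time per frame). It only uses the numbers quoted above; results on other hardware will differ.

```python
# Per-frame times from the 1000-frame pan quoted above, in milliseconds.
TIMINGS_MS = {"pv_vcsimd": 7.1, "pv_vspsimd": 12.4, "pv_scalar": 15.5}
FRAMES = 1000


def speedup_over(timings, baseline):
    """How many times faster each build is than the baseline build."""
    base = timings[baseline]
    return {name: base / ms for name, ms in timings.items()}


def frames_per_second(timings):
    """Frame rate implied by a per-frame time: 1000 ms / time per frame."""
    return {name: 1000.0 / ms for name, ms in timings.items()}


if __name__ == "__main__":
    speedups = speedup_over(TIMINGS_MS, "pv_scalar")
    rates = frames_per_second(TIMINGS_MS)
    for name, ms in TIMINGS_MS.items():
        total_s = ms * FRAMES / 1000.0  # whole pan, converted from ms to s
        print(f"{name}: {speedups[name]:.2f}x, {rates[name]:.0f} fps, "
              f"{total_s:.1f} s for the whole pan")
```

The Vc build comes out about 2.18 times faster than the scalar one (roughly 141 versus 65 frames per second), so the 1000-frame pan takes about 7.1 s instead of 15.5 s; the 'implicit SIMD' build is 1.25 times faster than scalar.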

Kay

yuv

Mar 3, 2021, 7:27:16 AM

to hugi...@googlegroups.com

On Wed, 2021-03-03 at 10:32 +0100, 'Kay F. Jahnke' via hugin and other free panoramic software wrote:

> I must admit that the M1 is very attractive, though. It might be

> worthwhile to just buy a small machine with this chip - I'd like a

> macbook air, because I don't have an up-to-date laptop - my newest

> thinkpad is eight years old or so.

Guilty as charged. I'm typing this on a 2013 MacBook Air, while the 2013 Sony Vaio, with very similar tech specs, is already on the electro-junk heap with broken parts not worth repairing. The 2018 Microsoft Surface Book Pro (or whatever mind-boggling name MSFT's marketing has thought up for that device) is already defective (the contact at the hinge between tablet and keyboard keeps breaking up); the 2019 Lenovo ThinkPad T495s has already been in for warranty repair once, and it has seizures if left connected to its power brick for too long. As a result, I am now the only family member without an iDevice, and the M1 has joined the school-mandated iPad. I did get a used iPhone to test the experience for myself, and so far it feels like being in a golden cage. It is still a prison, even if golden.

Apple does a lot of things very well. Sadly, at the critical juncture, it retains control of the T1 / encryption keys instead of giving control to the user, who should be allowed to choose between Apple's encryption keys, the consumer's own encryption keys, or no encryption at all.

> > With regard to bundling with Hugin:

> >

> It is in fact completely new technology. Potentially disruptive.

I have not touched panorama photography for ages, so forgive me if I am missing something. If it is so disruptive, why not add the missing functions from Hugin to pv rather than the other way around? And in both cases, what is the main obstacle to moving functionality from pv to Hugin or from Hugin to pv?

If memory serves me well, the current preview mode in Hugin was born as a project to improve the existing preview. It turned out that a complete replacement was not possible/desirable, and so the two lived side-by-side in the same package. The difference here is that pv started life in a separate GUI toolkit, or am I mistaken?

--

Yuval Levy, JD, MBA, CFA

Ontario-licensed lawyer

Harry van der Wolf

Mar 3, 2021, 7:33:52 AM

to hugi...@googlegroups.com

On Wed, 3 Mar 2021 at 10:32, 'Kay F. Jahnke' via hugin and other free panoramic software <hugi...@googlegroups.com> wrote:

>> The current Hugin builders on Mac are Niklas Mischkulnig and Bob
>> Campbell. All credits to them (not to me).
>
> Maybe they'll take note of pv and become interested in the mac version - after all the branch is already there and you demonstrated that it's dead easy to create a binary. Sadly, my work so far has not got much attention.

It should not be too difficult to add it to the Hugin bundle package. The Hugin package consists of 4 separate bundles (Hugin.app, HuginStitchProject.app, PTBatcherGUI.app, calibrate_lens_gui.app).

If this is not "allowed", it is not too difficult either to build a separate pv bundle. Not difficult (if you know how to do it), but quite some work.

I would use MacPorts for this over Homebrew. Homebrew uses the libraries available on the system as much as possible. MacPorts tries to build as many libraries itself as it can and only uses the real system libraries. That is why the MacPorts build process takes much longer on installation: it builds more libraries. Homebrew uses libiconv, libexpat, libz, liblzma, etcetera from macOS; MacPorts builds these itself.

For a "my system only" setup, Homebrew is a more convenient, easier and faster way to install new packages.

MacPorts gives you more portability and macOS "cross-version" compatibility if you bundle an app.

Note: Mac bundles do have the Info.plist setting

<key>NSHighResolutionCapable</key>

<string>True</string>

The latest Hugin build had issues with this, and those were apparently just solved by Bob and Niklas (see one of the other conversations about the Hugin Mac build).

I only have that old MacBook Pro and can't test the high-resolution functionality of pv.
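
If a pv bundle's Info.plist were generated or patched by a build script, the key mentioned above could be set with Python's standard `plistlib` module. This is a minimal sketch: the function name and the bundle values in the example are hypothetical, not taken from any pv or Hugin build. It writes the key as a boolean (`<true/>`), which is the type Apple documents for `NSHighResolutionCapable`; the `<string>True</string>` form quoted above is also seen in existing bundles.

```python
import plistlib


def enable_high_resolution(info_plist: bytes) -> bytes:
    """Return a copy of an Info.plist with NSHighResolutionCapable set to true."""
    info = plistlib.loads(info_plist)      # parses XML or binary plists
    info["NSHighResolutionCapable"] = True  # serialized as <true/>
    return plistlib.dumps(info)             # XML output by default


if __name__ == "__main__":
    # Hypothetical minimal Info.plist; a real bundle needs more keys.
    minimal = plistlib.dumps({"CFBundleName": "pv", "CFBundleExecutable": "pv"})
    print(enable_high_resolution(minimal).decode("utf-8"))
```

Because the existing plist is parsed and re-serialized, any other keys already in the bundle's Info.plist are preserved.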

> Now it's my turn to be 'a bit sad'. I've spent about seven man-years creating a b-spline library and a beautiful, powerful panorama viewer which can now even do some stitching and exposure fusion on top of everything else. It would be sad to just rip it apart and take a few bits as helper programs for hugin. Think of pv as a 'preview on steroids'. There was a discussion in this group about whether it wouldn't make sense to work more from the preview window. pv is my answer to this discussion: it's what used to be called 'WYSIWYG'. Pretty much everything happens immediately; you see all parameter changes 'live'. The only things which I can't show 'live' are exposure fusions and stitches, because the B&A algorithm takes a lot of time. I may be able to tweak it so that I can produce a few frames per second showing stitches and fusions live, but for now they are rendered by a background thread to a file on disk. For playing with my stitching and fusion code, this should be good enough for now.
>
> I even made it easy to use it as a preview for hugin and the likes: if you work on a panorama in hugin, you can just have a pv window open to the same PTO. As soon as you save the PTO in hugin, you can simply press 'F1' in pv and it will update to the changes in the PTO. I see pv rather as a third 'preview' window which hugin etc. can use alongside the other two, which are good for some tasks where my code is not - I don't handle all panotools projections, I don't deal with control points, I can't switch individual images on and off... - but I sure can give a good view of the PTO.

Sorry to make you sad. ;)

You have some very good arguments (at least in my eyes).

Like in all Open Source projects: "if you feel an itch, scratch it". You simply did what many other developers did (and what I did myself).

Look-alike packages can still have different origins, different goals and different applications (and, based on your arguments, different technology).

And I did not know of all the effort you already put into it.

> Now for the attempt to port pv to ARM. I have prepared the branch I announced yesterday, and you can try whether it compiles on your Raspi:
>
> git checkout native
>
> make
>
> This will try to build three targets: pv_scalar, pv_vspsimd and pv_vcsimd. You can also build them separately if you like; it's a phony 'all' target compiling the lot. The first one makes no attempt at using SIMD code, the second one uses vspline's 'implicit' SIMD code, and the third uses Vc. I think the first two will build on the Raspi; the third may or may not.

While typing this mail, I compiled your new code on my RPi4 server. All 3 versions compile fine, but as they use (Open)GL code, they won't run in a VNC window, and I didn't set up X on my server.

I will do the same on my other RPi4 with a monitor connected, but I don't have time for it now.

Harry

Kay F. Jahnke

Mar 3, 2021, 11:54:16 AM

to hugi...@googlegroups.com

On 03.03.21 at 13:27, yuv wrote:

> On Wed, 2021-03-03 at 10:32 +0100, 'Kay F. Jahnke' via hugin and other
> free panoramic software wrote:
>
>>> With regard to bundling with Hugin:
>>>
>> It is in fact completely new technology. Potentially disruptive.
>
> I have not touched panorama photography for ages, so forgive me if I am
> missing something. If it is so disruptive, why not add to pv the
> missing functions from Hugin rather than the other way around?

In a way, this is what I've been doing. pv started out as a simple panorama viewer, a demo to show how well-suited my library (vspline) was for geometric transformations. Then it turned out that the technology I had developed, and was using to write the first version, was very well suited to other image processing jobs as well. I do a lot of stuff with images - not only panoramas - and one thing which had always annoyed me was the break between panoramas and 'normal' images: when a panorama showed up in my image viewer, I had to tell the image viewer to 'open it with the panorama viewer'. And the pano viewer and the image viewer would have different controls - with panoramas I like using QTVR mode, and you don't really get that in 'normal' image viewers. So I added 'normal' image viewing capabilities to pv, in order to be able to view all images, panoramic or not, with the same software. And the same UI. And a slide show mode which would show panoramas as such, with the right projection and FOV read from the metadata. And, frankly, the image quality most 'normal' viewers provide just isn't good enough for my taste. b-splines are simply top notch.

I used a game engine (SFML) for the UI. In fact, I decided to implement a fair amount of 'gamification' and *not* to use a traditional GUI library like wxWidgets or Qt. Instead, I programmed a simple 'immediate mode GUI' with just a few rows of buttons and left the remainder of the UI to mouse gestures and keystrokes, like a computer game. This enabled me to 'have nothing between me and my images' - no menus, no dialog boxes, no popup windows. Of course, one has to 'learn to fly' to enjoy it fully.

So now I could view images/panoramas just fine. What if I wanted to share them? It would be nice to have a snapshot facility. And when doing snapshots, why not make them in the background with a very good interpolator, at several times the screen's size? That turned out to be quite easy with what I already had.

Oh, I can do snapshots! How about I add viewing and snapshots in different target projections? I might as well display in e.g. spherical, and when I do a snapshot of that, it's another spherical, maybe with a fixed horizon or brightness or black point or white balance... and why only snapshots, why not throw a bit more image processing into the works?

How about HDR? I added HDR blending and live viewing of brackets, so one can 'explore' the shadows or the clouds by simply varying brightness (try a horizontal secondary-button click-drag gesture), without having to create HDR output first, but with the *option* to export the blended bracket to OpenEXR with a simple keystroke.
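For readers wondering what HDR blending of a bracket involves at the pixel level, here is a minimal C++ sketch. It is not pv's code: it assumes the pixel values are already linear and normalized to [0, 1] (no camera response curve), that the relative exposure time of each shot is known, and it uses a simple hat-shaped weight. The names `hat_weight`, `merge_pixel` and `display_value` are hypothetical. 'Varying brightness' is modeled here as scaling the merged radiance by 2^ev before clipping, which is one plausible reading, not necessarily what pv does.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Sketch only, not pv's implementation. Assumes linear pixel values in
// [0, 1] and that exposure_times[i] is the relative exposure of shot i
// (e.g. 1, 2, 4 for a bracket in +1 EV steps).

// Hat-shaped weight: trust mid-tones, distrust near-black and clipped values.
double hat_weight(double z) { return 1.0 - std::fabs(2.0 * z - 1.0); }

// Merge one pixel of a bracket into a linear radiance estimate:
// the weighted average of z_i / t_i over all shots.
double merge_pixel(const std::vector<double>& values,
                   const std::vector<double>& exposure_times) {
  assert(!values.empty() && values.size() == exposure_times.size());
  double sum = 0.0, weight_sum = 0.0, plain_sum = 0.0;
  for (std::size_t i = 0; i < values.size(); ++i) {
    const double radiance = values[i] / exposure_times[i];
    const double w = hat_weight(values[i]);
    sum += w * radiance;
    weight_sum += w;
    plain_sum += radiance;
  }
  // Every shot sits at exactly 0 or 1: fall back to an unweighted average.
  if (weight_sum == 0.0) return plain_sum / static_cast<double>(values.size());
  return sum / weight_sum;
}

// 'Varying brightness' on the merged data: scale by 2^ev, then clip for display.
double display_value(double radiance, double ev) {
  const double v = radiance * std::exp2(ev);
  return v < 0.0 ? 0.0 : (v > 1.0 ? 1.0 : v);
}
```

Because brightness is applied after the merge, changing `ev` only rescales already-merged data, so no HDR file has to be written out first.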

Hey, so I can HDR-blend! Maybe I can even do exposure fusion!? How about just running a B&A image splining algorithm in the background to create an exposure-fused snapshot? Bingo!
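In exposure fusion as described by Mertens, Kautz and Van Reeth, the B&A blender is fed per-pixel weight maps, i.e. brightness-related masks. The C++ sketch below computes only the paper's 'well-exposedness' term (a Gaussian around 0.5 with sigma = 0.2) on grayscale data and normalizes the weights across the bracket. The contrast and saturation terms are left out, and `fusion_masks` is a hypothetical name, not pv's API; pv's actual weighting may differ.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Sketch only: the 'well-exposedness' term from Mertens, Kautz and
// Van Reeth ('Exposure Fusion'), applied to grayscale data in [0, 1].
// Contrast and saturation terms are omitted; names are hypothetical.
using Image = std::vector<double>;

// Gaussian weight centered on mid-gray; sigma = 0.2 as in the paper.
double well_exposedness(double v, double sigma = 0.2) {
  const double d = v - 0.5;
  return std::exp(-(d * d) / (2.0 * sigma * sigma));
}

// One mask per input image; at every pixel the masks sum to 1,
// so they can be fed straight into a multiresolution blender.
std::vector<Image> fusion_masks(const std::vector<Image>& bracket) {
  assert(!bracket.empty());
  const std::size_t n = bracket.front().size();
  std::vector<Image> masks(bracket.size(), Image(n));
  for (std::size_t p = 0; p < n; ++p) {
    double total = 0.0;
    for (std::size_t k = 0; k < bracket.size(); ++k) {
      assert(bracket[k].size() == n);
      masks[k][p] = well_exposedness(bracket[k][p]);
      total += masks[k][p];
    }
    // total > 0: for inputs in [0, 1] each weight is at least exp(-3.125).
    for (std::size_t k = 0; k < bracket.size(); ++k) masks[k][p] /= total;
  }
  return masks;
}
```

At each pixel, the shot whose value lies closest to mid-gray gets the largest share of the weight.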

Now, having the B&A code, how about I just feed it spatial masks instead of brightness-related ones? The code to stitch images should be precisely the same, give or take a few tweaks... it works!
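To make 'spatial masks instead of brightness-related ones' concrete, here is a 1D C++ sketch of Burt & Adelson multiresolution blending of two signals under a mask. It is not pv's code: pv builds its pyramids from b-splines via vspline, whereas this sketch uses the 5-tap binomial kernel [1 4 6 4 1]/16 with clamp-to-edge borders, and all names are hypothetical. The blender does not care where the mask comes from: a step mask yields a stitching seam, while per-pixel exposure weights yield exposure fusion.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// 1D sketch of Burt & Adelson multiresolution blending. Not pv's code:
// pv builds its pyramids from b-splines; this uses [1 4 6 4 1] / 16.
using Signal = std::vector<double>;

static const double kKernel[5] = {1 / 16.0, 4 / 16.0, 6 / 16.0, 4 / 16.0, 1 / 16.0};

// Sample with clamp-to-edge boundary handling.
static double at(const Signal& s, long i) {
  const long last = static_cast<long>(s.size()) - 1;
  return s[static_cast<std::size_t>(std::max(0L, std::min(i, last)))];
}

// REDUCE: low-pass filter, then keep every second sample.
static Signal reduce(const Signal& s) {
  Signal out((s.size() + 1) / 2);
  for (std::size_t j = 0; j < out.size(); ++j) {
    double sum = 0.0;
    for (long k = -2; k <= 2; ++k) sum += kKernel[k + 2] * at(s, 2 * static_cast<long>(j) + k);
    out[j] = sum;
  }
  return out;
}

// EXPAND: upsample by 2 to 'size' samples, interpolating with the same kernel.
static Signal expand(const Signal& s, std::size_t size) {
  Signal out(size);
  for (std::size_t i = 0; i < size; ++i) {
    double sum = 0.0;
    for (long k = -2; k <= 2; ++k) {
      const long t = static_cast<long>(i) - k;
      if (t % 2 == 0) sum += kKernel[k + 2] * at(s, t / 2);  // only even taps hit samples
    }
    out[i] = 2.0 * sum;
  }
  return out;
}

static std::vector<Signal> gaussian_pyramid(const Signal& s, std::size_t levels) {
  std::vector<Signal> g{s};
  while (g.size() < levels && g.back().size() > 1) g.push_back(reduce(g.back()));
  return g;
}

// Laplacian level i = G_i - EXPAND(G_{i+1}); the top level keeps G_top.
static std::vector<Signal> laplacian_pyramid(const std::vector<Signal>& g) {
  std::vector<Signal> lap(g);
  for (std::size_t i = 0; i + 1 < g.size(); ++i) {
    const Signal e = expand(g[i + 1], g[i].size());
    for (std::size_t p = 0; p < e.size(); ++p) lap[i][p] -= e[p];
  }
  return lap;
}

// Blend a and b: mask == 1 selects a, mask == 0 selects b.
Signal blend(const Signal& a, const Signal& b, const Signal& mask, std::size_t levels) {
  assert(levels >= 1 && !a.empty() && a.size() == b.size() && a.size() == mask.size());
  const auto la = laplacian_pyramid(gaussian_pyramid(a, levels));
  const auto lb = laplacian_pyramid(gaussian_pyramid(b, levels));
  const auto gm = gaussian_pyramid(mask, levels);  // mask smoothed per level
  std::vector<Signal> ls(la);
  for (std::size_t i = 0; i < ls.size(); ++i)
    for (std::size_t p = 0; p < ls[i].size(); ++p)
      ls[i][p] = gm[i][p] * la[i][p] + (1.0 - gm[i][p]) * lb[i][p];
  // Collapse: start at the coarsest level, expand it and add the finer level.
  Signal result = ls.back();
  for (std::size_t i = ls.size() - 1; i-- > 0;) {
    const Signal e = expand(result, ls[i].size());
    result = ls[i];
    for (std::size_t p = 0; p < e.size(); ++p) result[p] += e[p];
  }
  return result;
}
```

With more than two inputs (a whole panorama or bracket), the same scheme generalizes to summing M_k * L_k over all images, with masks that add up to one at every pixel.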

It all fell into place naturally. No need to take code from somewhere else. Look at my implementation of the B&A algorithm, compare it to 'traditional' approaches, and judge for yourself:

https://bitbucket.org/kfj/pv/src/master/pv_combine.cc

Compared to traditional code, this is alien technology. It's all written using the functional paradigm: you simply compose SIMD-capable functors and feed them to transform functions, which fill in arrays as the functors are 'rolled out' with efficient multithreading. Once you get used to working with that technology, you don't want traditional code anymore.

That's why I think it may be disruptive, and why I prefer to code stuff myself, using vspline.
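The 'compose functors, then roll them out with a multithreaded transform' style can be illustrated without vspline. This is a plain C++14 sketch with `std::thread`; `compose` and `parallel_transform` are hypothetical helpers, not vspline's API. The inner loop here leaves vectorization to the compiler, whereas vspline's functors are themselves SIMD-capable.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <thread>
#include <vector>

// Hypothetical helpers illustrating the style, not vspline's API.

// Function composition: compose(f, g)(x) == g(f(x)).
template <class F, class G>
auto compose(F f, G g) {
  return [f, g](auto x) { return g(f(x)); };
}

// Fill out[i] = fn(in[i]), splitting the index range into one
// contiguous chunk per worker thread.
template <class Fn>
void parallel_transform(const std::vector<float>& in, std::vector<float>& out,
                        Fn fn, unsigned n_threads = std::thread::hardware_concurrency()) {
  assert(in.size() == out.size());
  if (n_threads == 0) n_threads = 1;  // hardware_concurrency() may report 0
  const std::size_t n = in.size();
  const std::size_t chunk = (n + n_threads - 1) / n_threads;
  std::vector<std::thread> workers;
  for (unsigned t = 0; t < n_threads; ++t) {
    const std::size_t begin = t * chunk;
    if (begin >= n) break;
    const std::size_t end = std::min(n, begin + chunk);
    // Each worker writes a disjoint range of 'out', so no locking is needed.
    // The simple inner loop is left to the compiler's auto-vectorizer.
    workers.emplace_back([&in, &out, fn, begin, end] {
      for (std::size_t i = begin; i < end; ++i) out[i] = fn(in[i]);
    });
  }
  for (auto& w : workers) w.join();
}
```

For example, `parallel_transform(in, out, compose(scale, offset))` with `scale` doubling and `offset` adding one fills `out` with 2x + 1 for every input. The pipeline is built once as a value, and the same roll-out routine serves every job.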

> And in both cases, what is the main obstacle to move functionality from
> pv to Hugin or from Hugin to pv?

So I just can't see much point in trying to move code from hugin to pv. I have programmed everything new, from scratch, using multithreaded SIMD code on the CPU. If I want to, I can shell out to helper programs myself, that's no big deal, but it's much faster to work within the same process and keep the data in memory. Hugin is great for getting the registration right, and it does the job of providing a GUI for panotools well. But when it comes to adding capabilities to pv, I prefer to use my own, modern code base. It's more fun like that as well, and I admit that I like having the say in what is done and what isn't. pv and hugin function very well side-by-side - I've even experimented with code where other programs can signal pv to perform a refresh. I can't get the reaction time below, say, 100 msec, so it's not enough to control animations frame by frame, but for an 'external preview' it's perfectly fine. All that a program like hugin needs to know is pv's PID, and then it can signal pv to update its image, as if the user had pressed 'F1'. No need to integrate further, really.

Hugin and pv are *complementary*.
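The 'signal pv by PID' refresh can be sketched with a POSIX signal. Which signal pv actually listens for is not stated in this thread; SIGUSR1 is an assumption, as are all the names below. The handler only sets a flag, since very little is safe to do inside a signal handler, and the viewer's event loop polls that flag and reloads the PTO as if 'F1' had been pressed.

```cpp
#include <cassert>
#include <csignal>
#include <signal.h>
#include <sys/types.h>
#include <unistd.h>

// POSIX-only sketch. SIGUSR1 and all names here are assumptions.

// Set asynchronously by the handler, polled by the viewer's event loop.
static volatile std::sig_atomic_t reload_requested = 0;

// Async-signal-safe: the handler does nothing but set the flag.
extern "C" void on_reload_signal(int) { reload_requested = 1; }

// Viewer side: install the handler once at startup.
bool install_reload_handler() {
  struct sigaction sa {};
  sa.sa_handler = on_reload_signal;
  sigemptyset(&sa.sa_mask);
  sa.sa_flags = SA_RESTART;
  return sigaction(SIGUSR1, &sa, nullptr) == 0;
}

// Viewer side: call once per frame; true means 'reload the PTO now',
// just as if the user had pressed F1. Several signals arriving between
// two frames collapse into a single reload.
bool poll_reload() {
  if (!reload_requested) return false;
  reload_requested = 0;
  return true;
}

// Sender side (e.g. a stitcher GUI): all it needs is the viewer's PID.
bool request_reload(pid_t viewer_pid) {
  assert(viewer_pid > 0);  // pid <= 0 would address process groups instead
  return kill(viewer_pid, SIGUSR1) == 0;
}
```

From a shell, `kill -USR1 <pid>` would exercise the same path. Polling once per frame also explains why this kind of refresh suits an external preview rather than frame-accurate control.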

> If memory serves me well, the current preview mode in Hugin was born as
> a project to improve the existing preview. Turned out that a complete
> replacement was not possible/desirable and so the two lived
> side-by-side in the same package. The difference here is that pv started life
> in a separate GUI toolkit, or am I mistaken?

So, no toolkit but an 'immediate mode GUI' - https://en.wikipedia.org/wiki/Immediate_mode_GUI - SFML did not provide one, but the community had a few hints ready, so I took it from there and wrote the GUI myself as well. That was fun, too, and I did hope it would lure some users to my lair... and not having a fat dependency for the GUI toolkit makes pv lean.

Personally, I don't use the GUI much, apart from starting slideshows or setting numerical values precisely - or for 'override arguments' - command line arguments 'injected' at run time to modify the current viewing cycle. But it does no harm having it.

Kay
