What am I doing wrong optimizing with Hugin 2021.0.0

250 views

Skip to first unread message

johnfi...@gmail.com

Aug 6, 2022, 5:18:33 PM8/6/22

to hugin and other free panoramic software

I'm getting terrible optimize results (on the one .pto I tested)

Operating System: Windows 10 (build 19043), 64-bit edition

Hugin Version: 2021.0.0.52df0f76c700 built by Thomas

Is there some option or control in the UI for how hard Hugin tries when optimizing?

I set out to get some baseline comparisons of optimization speed, before trying some ideas I have for making it faster. I tested a bunch of hugin and hugin++ builds, including

2020.0.0.2f576e5d5b4a built by Thomas

and

2021.0.0.52df0f76c700 built by Thomas

All the others had newer source code from the four repositories built in various ways.

The 2020 version had vastly slower optimize but slightly better results than the cluster of others.

The 2021 version had vastly worse results than all the others and the second fastest time. I know enough about what is under the hood to know it can't have timing nearly as good as any of the hugin++ builds I tried via anything other than just stopping too soon. Stopping too soon is also consistent with the terrible results. But I don't know why it would be stopping too soon (compared both to older and newer source code). Was something changed and then changed back, which explains this?

Is there some setting I might use to get different builds to do the same amount of optimization so I can get a more meaningful comparison of various aspects of algorithm speed and compilation quality?

I don't yet know whether all this is specific to my example (attached). I want to do performance comparisons with more than an average number of images, but don't have many such examples handy.

I don't have decent explanations for any of the differences between versions and hope to understand most of those. But the 2021.0.0 results are just much further outside the pattern.

My own mingw64 build of the latest hugin++ repository contents is the fastest, twice as fast as

Huggin++ Pre-Release 2021.1.0.44f97ebcb075 built by Florian Königstein

but with slightly worse results.

I thought I understood all the source code differences and build differences between Florian's build and mine. But all of that is in other parts of the code and should not affect optimization. I don't know any reason the results should differ at all nor any way the performance difference should be two to one.

I am also testing my own builds of the current contents of the hugin/libpano13 repositories with and without the sparse lev-mar build time option. But trying to interpret those results is pretty useless without understanding the more basic discrepancies.

My example has 26 images and a total of 10591 control points, many of which were accidentally created duplicates that I don't know how to clean up. But all that trash ought to make it a decent stand-in for a bigger example.

Florian Königstein

Aug 8, 2022, 11:32:07 AM8/8/22

to hugin and other free panoramic software

Hi John,

without the images Hugin asks for loading them. I took some dummy image. Hugin++ said that there were invalid CPs, probably due to other image dimensions. Optimization ran fast for me, but I think I know where there is the problem.

First I assume:

** You took the images in a "standard way", i.e. by rotating the camera around a tripod or panoramic head.

** You just want so see how the optimizer works when you select nearly all variables to be optimized.

The problem is that you select both rotation and translation to be optimized. Normally, you should only optimize for yaw, pitch and roll, but not for trannslations - if you rotated the cammera but didn't perform bigger translations.

Instead if you kept the orientation of the camera the same and translated it for the different photos, then you should optimize for translations, but not for yaw, pitch and roll.

The reason is the following:

Suppose you have a FOV that is not big (like you have). Imagine first you rotate the camera a bit horizontally. Then you rotate it back to the original position and trannslate it a bit horizontally. If the amout of rotation and translation have an appropriate ratio, then the control points will move in a similar way (not much difference) in the image both by rotation and by translation. So the optimizer cannot decide whether you rotated or translated the camera.

In some cases you can determine both rotation and translation of the camera, but these cases are usually not relevant for panorama photography: Assume for simplicity you have an ideal (pinhole) camera (no lens distortion). Assume you have 5 (or better 8) points in 3D space that will get control points in the photos. You take two photos of these points by both rotating and translating the camera between these photos. Then there are mathematical algorithms with that you can determine both the relative rotation and the relative translation of the camera. But there are at least two cases in that the algorithms fail or yield bad results (big errors):

1. There is no or little translation in the camera position between the two photos

2. All the points in 3D space are on a plane (e.g. on the wall of a house or on the flat ground plane)

In your case you had probably little or no translation of the camera. The optimization problem is mathematically ill-conditioned. Probably the optimizer tries to change the parameters in some direction, but the error changes equally in several directions, so the optimizer cannot find parameters with a minimal error. So, I can image that the optimizer runs for a long time or yields nonesense results.

johnfi...@gmail.com

Aug 8, 2022, 2:42:58 PM8/8/22

to hugin and other free panoramic software

On Monday, August 8, 2022 at 11:32:07 AM UTC-4 Florian Königstein wrote:

Hi John,

Optimization ran fast for me,

What is "fast" and which hugin did you use?

It runs "fast" (17 to 36 seconds) using any build of pano13 with sparse lev-mar. But I still want it to be faster and I want much bigger examples (even though I don't currently have any) to also be fast.

With one exception, it runs "slow" (85 to 212 seconds) in any build without sparse lev-mar. That is exactly as I'd expect and I'm not much interested in those.

The exception I asked about was:

2021.0.0.52df0f76c700 built by Thomas

I'm pretty sure that does not have sparse lev-mar. It optimizes in 21 seconds producing garbage results (large average, 158 worst case). The worst CP is not a poorly chosen CP. All the other versions have worst CP 30 to 38.

My question was why that build is so fast and bad.

The fact that the average can be below 3 and the worst 30 means I want to be able to do that well regularly. So I have the second issue of why fastPTO does slightly worse. It should simply be faster, not a tradeoff of much faster for slightly worse. But that is too complicated and detailed for me to want to ask here. I'll need to figure it out myself. But why the official hugin build is so much faster and worse than it should be seemed like somethings others might weigh in on.

First I assume:** You took the images in a "standard way", i.e. by rotating the camera around a tripod or panoramic head.

No. I did not set up my tripod for that one. I hand held as carefully as I could and came pretty close. So I certainly needed to optimize the six basic parameters of camera position as well as one lens parameter.

** You just want so see how the optimizer works when you select nearly all variables to be optimized.

I avoided opening the FOV can of worms. The correct FOV is known and using incorrect FOV as a coverup for other issues is one of my frequent tools, but not a valid tool either for this panorama or for this performance test.

Some other parameters are in there mainly for "maybe they help" in addition to performance testing purposes.

The problem is that you select both rotation and translation to be optimized. Normally, you should only optimize for yaw, pitch and roll, but not for trannslations - if you rotated the cammera but didn't perform bigger translations.Instead if you kept the orientation of the camera the same and translated it for the different photos, then you should optimize for translations, but not for yaw, pitch and roll.

I certainly needed all six of those. Otherwise, the panorama is garbage. I did my best to hand hold the camera varying only Yaw and Pitch, not Roll, TrX, TrY, and TrZ. But I didn't do that well enough. Tr value are tiny compared to the physical distance to nearest CP but they still matter.

I haven't yet taken any mural shots, so varying translation without also Yaw is not in any of my examples.

The reason is the following:Suppose you have a FOV that is not big (like you have). Imagine first you rotate the camera a bit horizontally. Then you rotate it back to the original position and trannslate it a bit horizontally. If the amout of rotation and translation have an appropriate ratio, then the control points will move in a similar way (not much difference) in the image both by rotation and by translation. So the optimizer cannot decide whether you rotated or translated the camera.

There is enough difference. If the real world location of the CPs in an image are relatively co planar (with each other) then optimization should be able to distinguish rotation from translation.

I have refined my ideas (but not yet written code) for handling the case that the real world CPs are not actually co planar. I think I will then do vastly better than 3 average and 30 worst case in this example. But before coding that, I want a somewhat better understanding, and maybe do a cleaner re-coding, of what is already there.

In some cases you can determine both rotation and translation of the camera, but these cases are usually not relevant for panorama photography: Assume for simplicity you have an ideal (pinhole) camera (no lens distortion). Assume you have 5 (or better 8) points in 3D space that will get control points in the photos. You take two photos of these points by both rotating and translating the camera between these photos. Then there are mathematical algorithms with that you can determine both the relative rotation and the relative translation of the camera. But there are at least two cases in that the algorithms fail or yield bad results (big errors):1. There is no or little translation in the camera position between the two photos

That is the area in which my current ideas are least solid: Hugin is already good for where all the cameras have little of no translation. The tricky case (but I won't know untill I code an test this) is a CP that is only seen by cameras that have little translation relative to each other, but significant translation relative to the chosen origin for the panorama.

2. All the points in 3D space are on a plane (e.g. on the wall of a house or on the flat ground plane)

I'm not understanding your point. First, that case doesn't need to be fixed. Hugin does it well enough already. I'm trying to create only an alternative for when that isn't true. But, unlike the case of all cameras being in the same place, the new method would also work fine for planar CPs. I can't see any reason that ought to be a problem.

Maybe you are thinking about the case that the chosen origin for the panorama is not among the camera locations (neither matches a single camera as one normally would) nor has cameras generally "around" it. That is a very hard (and pointless) panorama anyway, and maybe the planar CPs make it harder.

But since you are commenting, I'll ask your opinion on a related part of where I'm going with all this (since you seem to understand the case of very many images):

I think I can get a big performance boost by using the tendency to have multiple images per lens. So my question is whether, in the useful cases of very many images, there are several images per lens.

I mean a "lens" in the sense of a set of parameters, so at the optimization level, a group of images within which every lens parameter is either linked to be equal or constant and happens to be equal.

My code idea would speed things up significantly when one set of lens parameters (whether fixed or subject to optimization) applies to multiple images. It would only very slightly slow down optimization if every image has its own lens parameters.

Since I bought by 105mm non-zoom lens. It has been used for almost all my panoramas. If I were an actually competent hugin user, I would separately determine its parameters and use them for all such panoramas. So far I just let each panorama solve whatever lens parameter(s) seem to help, but subject to one lens for all images.

Florian Königstein

Aug 8, 2022, 3:29:44 PM8/8/22

to hugin and other free panoramic software

Before I answer to your post more detailed, could you please send me the images per Mail so that I can load the pano without the need for dummy images. Maybe the optimization was faster for me since some CPs were deleted due to my dummy image. Having your images I can see the speed in exactly your configuration.

I just tried out a pano with only two images. The camera was rotated by an angle of about 22 degrees between the images. I also held the camera by hand, but translation is anyway small compared to rotation. When I optimize only yaw, pitch and roll, the optimizer finds the yaw of about 22 degrees. When I optimize yaw, pitch, roll, TrX, TrY, TrZ, the optimizer finds a yaw of 6 degrees and some rather big translation. That is nonsense. This example confirms that estimating both rotation and translation when the translation is zero or small is very difficult.

David W. Jones

Aug 8, 2022, 4:14:39 PM8/8/22

to hugin-ptx

I shoot lots of handheld panoramas. I'm relatively steady at holding my

camera and things come out fine rotating about the vertical center of my

stance. I have never had to use translation of any sort for these shots,

and optimization works pretty quickly.

I've found I get better results by stepping up through the different

levels of optimization and cleaning control points after each step.

Maybe removing bad control points helps the optimization process?

The only time I used translation was when I had the camera on a tripod

and shot a series of frames down the length of a graffiti in a tunnel. I

moved the tripod down a certain distance (I forget how far) for each

shot, keeping the same distance from the wall.

I also don't remember how long optimization might have taken. That was

long enough ago it was probably on the original AMD Sempron processor on

the old server.

I guess I don't think ultrafast optimization is important, much

preferring accuracy. The present laptop's i9 with 64GB RAM optimizes a

lot faster, anyway. :)

--

David W. Jones

gnome...@gmail.com

wandering the landscape of god

http://dancingtreefrog.com

My password is the last 8 digits of π.

camera and things come out fine rotating about the vertical center of my

stance. I have never had to use translation of any sort for these shots,

and optimization works pretty quickly.

I've found I get better results by stepping up through the different

levels of optimization and cleaning control points after each step.

Maybe removing bad control points helps the optimization process?

The only time I used translation was when I had the camera on a tripod

and shot a series of frames down the length of a graffiti in a tunnel. I

moved the tripod down a certain distance (I forget how far) for each

shot, keeping the same distance from the wall.

I also don't remember how long optimization might have taken. That was

long enough ago it was probably on the original AMD Sempron processor on

the old server.

I guess I don't think ultrafast optimization is important, much

preferring accuracy. The present laptop's i9 with 64GB RAM optimizes a

lot faster, anyway. :)

David W. Jones

gnome...@gmail.com

wandering the landscape of god

http://dancingtreefrog.com

My password is the last 8 digits of π.

Florian Königstein

Aug 8, 2022, 4:51:49 PM8/8/22

to hugin and other free panoramic software

I'd like to explain mathematically why estimating both translation and rotation is difficult when the translation is small and the FOV is small:

Assume you have a CP with an x-coordinate 'x' (x-distance on the CCD image sensor from the middle) , and the focal length is f. The "focal axis" is going perpendicular through the CCD image plane. Consider the line going through the CP on the image sensor and through the focal point (the hole in a simple pinhole camera). This line has an angle

arctan ( x / f ) with the focal axis.

Assume the camera is rotated by a small angle 'alpha' around the y-axis. Then the CP is mapped onto a new point on the image sensor with an angle

arctan ( x / f ) + alpha

or (depending on whether rotating left or right)

arctan ( x / f ) - alpha

This CP has the new x coordinate

f * tan(

arctan ( x / f ) + alpha )

on the image plane.

x/f is 'small' because the FOV is assumed to be small. alpha is also assumed to be small, take alpha = 0.05 (in radiants) and

E.g. x / f = 0.1, so the original point with x-coordinate x = 0.1*f is mapped to x =

f * tan(

arctan ( x / f ) + alpha ) = 0.1507 * f.

Now take another CP with x=0.2*f . It is also rotated by this angle alpha. The new x-coordinate is:

x =

f * tan(

arctan ( x / f ) + alpha ) = 0.252569 * f.

You see that the new x/f-ratio is roughly just the sum of the original x/f ratio and the rotation angle (in radiants). This is roughly the same as if the camera was not rotated, but translated along the x-direction.

Of course this is not exact and the optimizer can use these deviations for distinguishing between rotation and translation. But the estimate will have big errors.

If the FOV is even smaller, it will get more difficult to distinguish it.

johnfi...@gmail.com

Aug 8, 2022, 5:55:27 PM8/8/22

to hugin and other free panoramic software

On Monday, August 8, 2022 at 3:29:44 PM UTC-4 Florian Königstein wrote:

Before I answer to your post more detailed, could you please send me the images per Mail so that I can load the pano without the need for dummy images. Maybe the optimization was faster for me since some CPs were deleted due to my dummy image. Having your images I can see the speed in exactly your configuration.

I put them on a google drive and sent you a link. If I messed that up and you can't access, ask me again.

I just tried out a pano with only two images. The camera was rotated by an angle of about 22 degrees between the images. I also held the camera by hand, but translation is anyway small compared to rotation. When I optimize only yaw, pitch and roll, the optimizer finds the yaw of about 22 degrees. When I optimize yaw, pitch, roll, TrX, TrY, TrZ, the optimizer finds a yaw of 6 degrees and some rather big translation. That is nonsense. This example confirms that estimating both rotation and translation when the translation is zero or small is very difficult.

Do you have enough overlap and control points distributed over the overlap area to make the distinction between translation and roll possible?

Are your lens parameters likely correct so that lens parameter errors are not interfering with with the optimization?

If yes to both, I'd like to see those images and project to try to understand what when wrong.

With a true Yaw of 22 degrees and just two images, and limiting the optimization to

yaw, pitch and roll, I would certainly expect hugin to get near the correct yaw, even if it is getting a fairly poor fit. If the overlap and CPs aren't very good, then it is also understandable that a better fit for the CPs could give a wildly wrong actual result, including a wildly wrong yaw. But that doesn't make the general case against optimizing all 6 basic camera point of view parameters.

With multi-way overlap (as in the group of photos I sent) misinterpreting yaw for TrX in one limited overlap tends to be forced out of the solution by the circular interconnection of those two through other images.

I don't EVER get as good results from hugin or hugin++ as I think I ought to. That was the original reason I started working on the code, though I delayed a long time before recently digging into the optimization. So I don't want to pre judge whether the two photo problem you just described is a fundamental limitation due to low CP overlap vs. hugin not really doing what it should. But I am confident that in the cases with more overlap (including virtual overlap through other connection routes) there is no basic flaw in optimizing all six of those camera point of view parameters.

Florian Königstein

Aug 9, 2022, 2:14:37 AM8/9/22

to hugin and other free panoramic software

Thank you for the photos. I'll look at them in the evening (in German time).

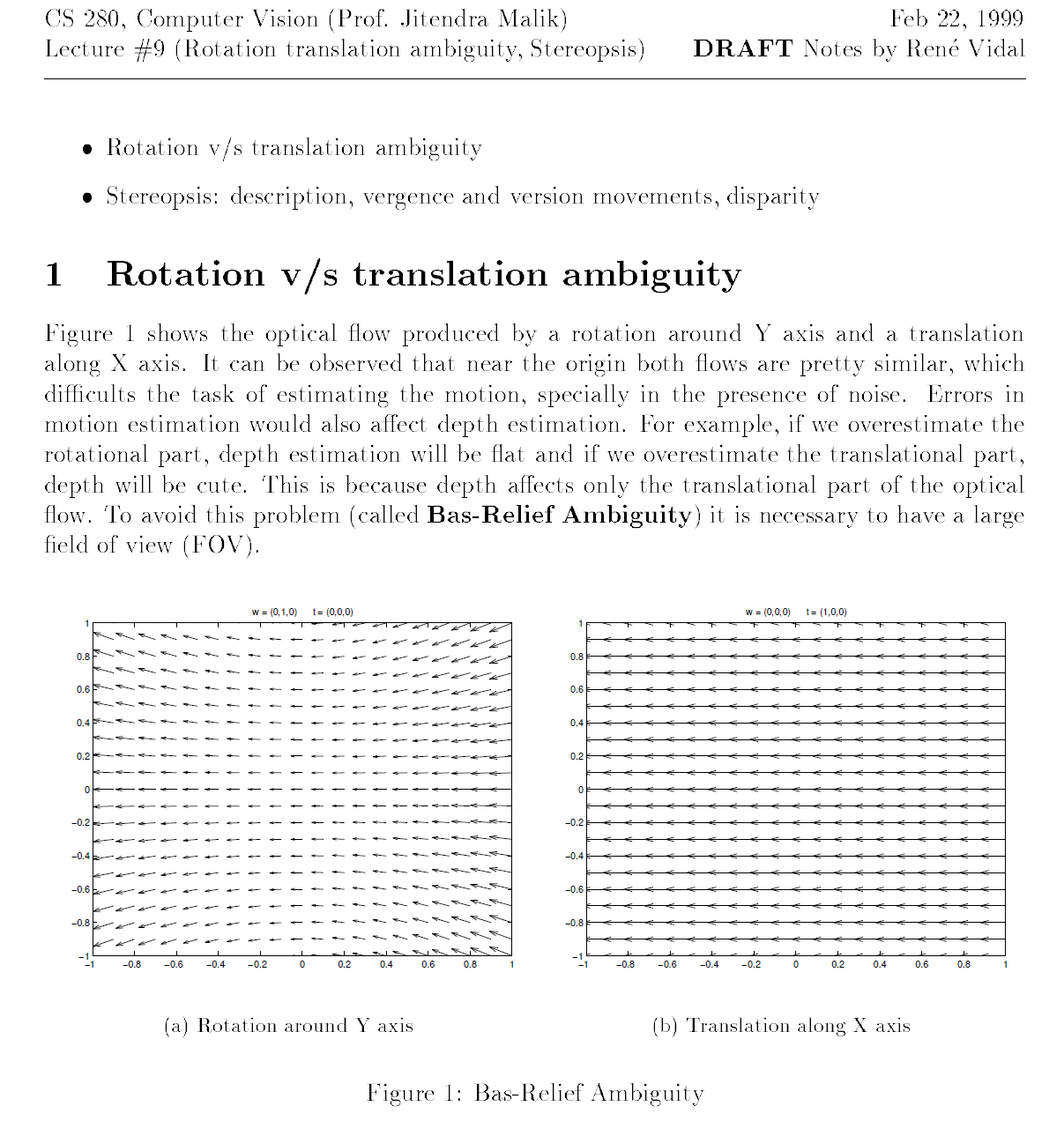

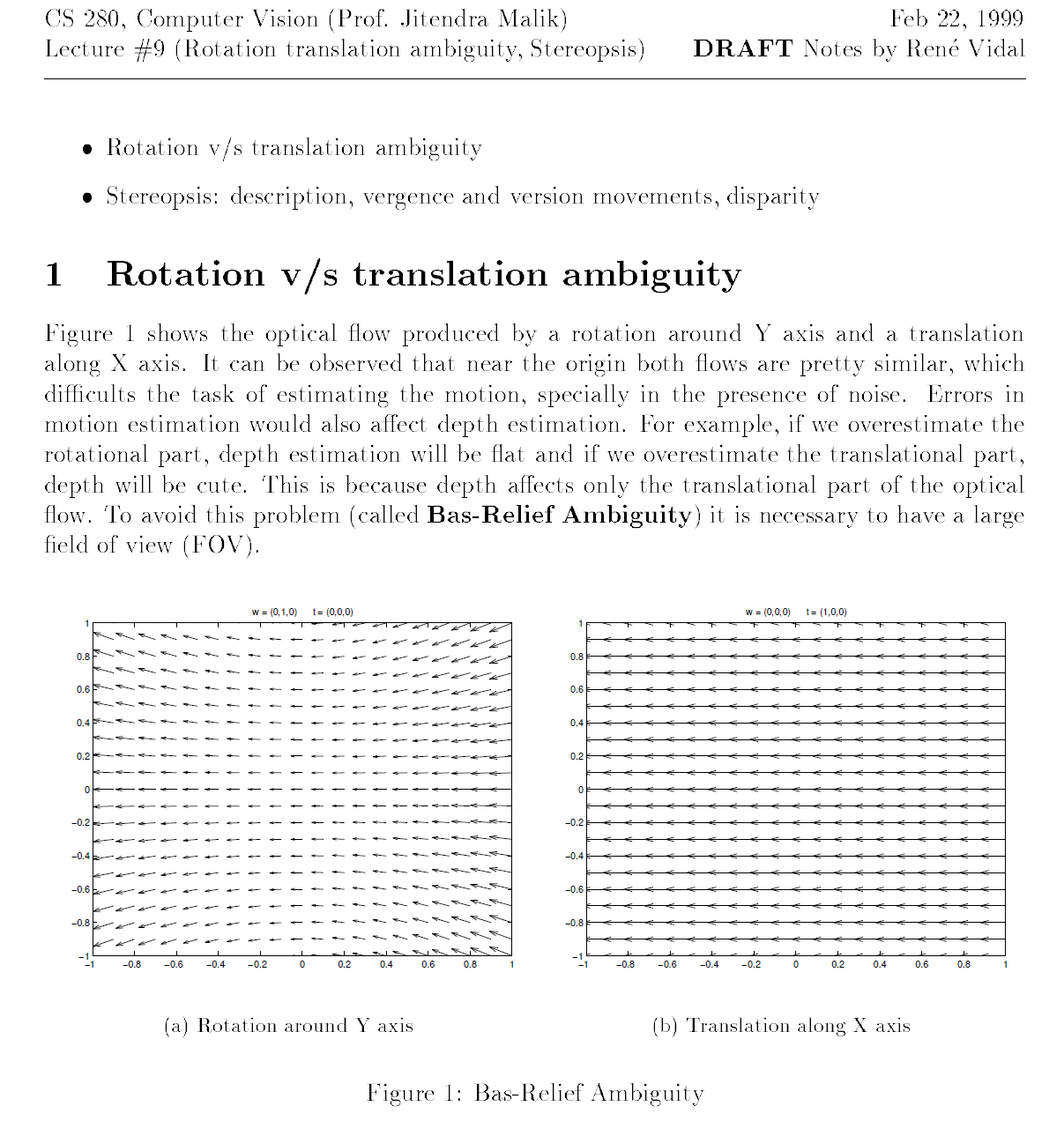

At the moment I'd like to illustrate the rotation / translation ambiguity with the following image that I got from this link:

Since it's in PS format, I converted it to an image:

The arrows indicate how the points in the image move when the camera rotates (left) or translates (right).

Here the arrows in the leftmost and rightmost parts of the image differ enough, so that translation should be distinguable from rotation. But if the FOV is smaller, than the arrows in the left image will get more similar to the arrows in the right image, and rotation cannot be distinguished from translation.

johnfi...@gmail.com

Aug 9, 2022, 8:43:30 AM8/9/22

to hugin and other free panoramic software

I worried this sidetrack of the discussion may be stopping someone who knows more about the answers I'm looking for from replying in this thread.

I really want a better understanding of why results differ so much in the same optimization problem among version and builds of pano13 (and maybe of hugin).

I half remember seeing some user option somewhere for how hard the optimizer tries before stopping. But now I can't find it in the UI or the code. Am I remembering something that wasn't there? (I have worked on a LOT of different optimizers in recent years in different projects). Or am I failing to find something that ought to be obvious? (I've been known to do that too).

What makes the 2021 official version give so much worse results than the 2020 official version?

To the very limited extent to which I understand lev-mar stopping rules, it might stop because it hit

A: A limit in the number of iterations

B: A limit in the number of function evaluations

C: A limit in the rate of change of the parameter values

D: A limit in the rate of change of the total error

?: There seem to be several other possibilities.

The code seems to report which of those it was to the caller. I haven't yet figured out how to get any of that reported to the user.

Use of sparse lev-mar, combined with the choice of where the finite-difference approximation of partial derivatives is done, greatly affects the number of function evaluations.

So comparing identical versions with and without that build option (as I have) and guessing that the stopping condition is B, you would expect the build with sparse lev-mar to do more iterations for the same number of function evaluations (as long as there are 3 or more images). So sparse should get a better final answer. As long as there are 4 or more images, you would expect sparse to take less time per iteration. Above some number of images (much harder to predict and also depending on a bunch of other factors) the factor of faster per iteration should exceed the factor of more iterations, so sparse should take less total time in addition to producing a better result.

In fact doing that comparison, with the current repository contents of hugin and libpano13 (not your fork of them), gives results semi consistent with all that. Sparse is much faster. Sparse gives a much smaller max value, but a slightly worse average value. Since it is a least squares problem, a much better max is a better indicator of a better solution to the optimization problem than the opposite change in average. But the absurdly large number of CP's weighs against that.

Anyway, I think that result supports the guess that the stopping condition is B. For all other stopping conditions, I think the result difference with/without sparse should be smaller (with a timing difference more favorable to sparse).

But I feel like I'm missing other important factors. Other differences among the various versions don't reasonably fit any guess at stopping condition or other cause.

As a development aid, there ought to be a way to adjust the individual stopping conditions, primarily to raise the limit on B to understand the other differences. I think that also would be a good expert-user feature for some situations (and thought I remembered it being there).

I am going into too many coding sidetracks already. But maybe I should sidetrack into finding a way to fit that user option in.

Another BIG sidetrack I'll likely take: During high school in the 70's, I invented a way to compute partial derivatives other than either the finite difference or analytical. It gives more correct partial derivatives than finite difference but doesn't have the potential complexity explosion of analytical. After using it in a couple work situations years later, I took a job on a team that was already using the exact same method, and later interacted with other teams (within a big employer) that also independently came up with it. (Despite that I've never found a description of it online and don't know what it is called). On a basic timing level, for N partial derivatives, you do N+1 times as much work during one evaluation instead of N+1 times as many function evaluations for finite difference. Depending on other factors the total time might range from twice as long as finite difference down to a small fraction as long. Usually it is done for accuracy, not time. I think pano13 doesn't need that improved. But taking advantage of several images per lens in hugin would cause my method to take significantly less time than finite difference. If I do that, I should remember to kludge the counter of function evaluations to pretend it is doing N+1 times as many as it actually is, both in order to keep the stopping condition reasonable and to keep result accuracy comparable. I first wrote that in APL and later in C. But it is really ugly code in C and I won't do that again now that there is a choice. The only decent language for it is C++. It is really annoying that libpano13 is coded in C. (I don't still have any of the code and only the APL version ever belonged to me).

On Tuesday, August 9, 2022 at 2:14:37 AM UTC-4 Florian Königstein wrote:

At the moment I'd like to illustrate the rotation / translation ambiguity with the following image that I got from this link:

For two images, that seems to say the results will be "wrong" but it won't matter: The "wrong" parameters fed into stitching will give the same output as the right parameters.

For a collection of many images either grid or scattered in 2d, I think the problem should not occur.

For a big collection of small images in 1d, I haven't worked out the math, but I expect the results would get ugly. In the best case it should look like an badly chosen projection for the final image, but it could be worse.

So I think all this tells us that for a big collection of small images in 1d, automatic methods aren't going to figure this out and the user needs inject some knowledge. For the "no translation" case specifying no translation is important I think only for this "big collection of small images in 1" (not more generally). I don't believe the "no rotation" (moving tripod along a horizontal mural) really is "no rotation". But that certainly would benefit from very tight bounds on the amount of rotation.

Florian Königstein

Aug 9, 2022, 12:12:09 PM8/9/22

to hugin and other free panoramic software

The image DSC00293.JPG was missing. I took the image DSC00289.JPG as a (false) replacement for it.

First, there are about 20 CPs with distances of about >9000. They are totally wrong correspondences. I deleted them.

I tested optimization only on Hugin++. It took about 10 seconds on my computer (starting from the yaw, pitch and roll values that are already calculated). I also optimized the translation. It seems that in this case there are no problems optimizing both rotation and translation. The average error was about 29.

Then I resetted all positions to zero and restarted optimizing rotations and transations. It takes longer time and gives terrible results, and the errors are varying if you do it again. Normally this should not happen because it's a deterministic algorithm. But maybe SuiteSparse uses some algorithms that are "slightly" not deterministic.

And in ill-conditioned mathematical problems like here small differences in roundoff errors can lead to totally different results.

I see no problem that different versions of Hugin / Hugin++ give different results: It's just because the optimization problem is ill-conditioned.

johnfi...@gmail.com schrieb am Dienstag, 9. August 2022 um 14:43:30 UTC+2:

I half remember seeing some user option somewhere for how hard the optimizer tries before stopping.

E.g. in the file optimize.c of libpano / fastPTOptimizer in the function RunLMOptimizer() there are the following lines:

LM.ftol = 1.0e-6; // used to be DBL_EPSILON; //1.0e-14;

if (istrat == 1) {

LM.ftol = 0.05; // for distance-only strategy, bail out when convergence slows

}

if (istrat == 1) {

LM.ftol = 0.05; // for distance-only strategy, bail out when convergence slows

}

You could e.g. change

LM.ftol

= 1.0E-6 to

LM.ftol = 1.0E-14 if you want the optimizer to try "harder" and longer finding an optimum (in strategy 2).

To the very limited extent to which I understand lev-mar stopping rules, it might stop because it hitA: A limit in the number of iterationsB: A limit in the number of function evaluationsC: A limit in the rate of change of the parameter valuesD: A limit in the rate of change of the total error?: There seem to be several other possibilities.The code seems to report which of those it was to the caller. I haven't yet figured out how to get any of that reported to the user.

Yes, the function lmdif_sparse() returns a code that indicates the stopping criterion that was met. The meaning of the codes is described in the file levmar.c.

Without having checked it, I don't believe that B is met. I believe that ftol is reached (return code 1).

Another BIG sidetrack I'll likely take: During high school in the 70's, I invented a way to compute partial derivatives other than either the finite difference or analytical. It gives more correct partial derivatives than finite difference but doesn't have the potential complexity explosion of analytical. After using it in a couple work situations years later, I took a job on a team that was already using the exact same method, and later interacted with other teams (within a big employer) that also independently came up with it. (Despite that I've never found a description of it online and don't know what it is called). On a basic timing level, for N partial derivatives, you do N+1 times as much work during one evaluation instead of N+1 times as many function evaluations for finite difference. Depending on other factors the total time might range from twice as long as finite difference down to a small fraction as long. Usually it is done for accuracy, not time. I think pano13 doesn't need that improved. But taking advantage of several images per lens in hugin would cause my method to take significantly less time than finite difference. If I do that, I should remember to kludge the counter of function evaluations to pretend it is doing N+1 times as many as it actually is, both in order to keep the stopping condition reasonable and to keep result accuracy comparable. I first wrote that in APL and later in C. But it is really ugly code in C and I won't do that again now that there is a choice. The only decent language for it is C++. It is really annoying that libpano13 is coded in C. (I don't still have any of the code and only the APL version ever belonged to me).

I don't know exactly your idea about computing the partial derivatives, but I think fastPTOptimizer does it quite well.

Before I used the function splm_intern_fdif_jac() (see it's description in levmar.c), but then I used another method inside adjust.c that is at least as fast as

splm_intern_fdif_jac().

Florian Königstein

Aug 9, 2022, 1:38:07 PM8/9/22

to hugin and other free panoramic software

Some time ago I tried whether analytical calculation of the partial derivatives is better (faster convergence). I wrote code for analytical calculation (via automatic differentiation), but it seemed that there was no advantage (longer time and little or no reduction in the number of iterations).

But maybe your idea yields better results ...

John Fine

Aug 9, 2022, 1:40:42 PM8/9/22

to hugi...@googlegroups.com

On Tue, Aug 9, 2022 at 12:12 PM Florian Königstein <niets...@gmail.com> wrote:

The image DSC00293.JPG was missing. I took the image DSC00289.JPG as a (false) replacement for it.First, there are about 20 CPs with distances of about >9000. They are totally wrong correspondences. I deleted them.

I really messed up in posting this. I don't quite understand how, but I can clearly look back and see I did.

293 is not part of the panorama. When I first tried to assemble this (long before selecting it as a test case) I thought 293 was part and got some terrible results due to that. Then I removed it. My testing was with the version I had removed 293 from. But somehow I posted with 293. Looking now, I see all the garbage CPs connect to that image. So removing that image was correct, rather than removing the garbage CPs (but I don't expect anyone should have guessed that). Sorry.

Then I resetted all positions to zero and restarted optimizing rotations and transations. It takes longer time and gives terrible results, and the errors are varying if you do it again. Normally this should not happen because it's a deterministic algorithm. But maybe SuiteSparse uses some algorithms that are "slightly" not deterministic.

Thankyou for reminding me that is the way I should have been testing. I did that for previous performance testing but forgot this time. Your results really bother me. I thought the code was in better shape than that. Maybe the garbage extra image matters despite the CPs you removed. But I doubt it. Seems like something is in much worse shape than I thought. I hope you didn't enable any FOV optimization. I certainly think that is fundamentally broken in hugin and isn't safe to mix into any kind of controlled test.

And in ill-conditioned mathematical problems like here small differences in roundoff errors can lead to totally different results.

I'm very familiar with that in other optimization problems. I'm still not ready to believe that is what is happening here.

I see no problem that different versions of Hugin / Hugin++ give different results: It's just because the optimization problem is ill-conditioned.

I am certainly not surprised at Hugin vs. Hugin++ differences.

I'm surprised at the giant Hugin 2020 vs. Hugin 2021 reduction in accuracy.

I'm more surprised at the difference (despite it being smaller) between your build of hugin++ and my build of hugin++ across modest changes in source code none of which seem relevant to optimization speed or results.

Yes, the function lmdif_sparse() returns a code that indicates the stopping criterion that was met. The meaning of the codes is described in the file levmar.c.

Before I pay too much attention to really understanding what they mean, I want to figure out how to get the code displayed on the (details pull down of the) pop up that gives summary stats for the second strategy. I'd also like a way to keep the summary stats from the first strategy around long enough to read it.

Without having checked it, I don't believe that B is met. I believe that ftol is reached (return code 1).

How do you know the return code?

I guess I had only concluded (by result and timing differences) that B was the non sparse termination reason (except in that 2021 official build). Until you made me rethink that, I was assuming with no valid reason that sparse had the same termination reason.

I don't know exactly your idea about computing the partial derivatives, but I think fastPTOptimizer does it quite well.Before I used the function splm_intern_fdif_jac() (see it's description in levmar.c), but then I used another method inside adjust.c that is at least as fast as splm_intern_fdif_jac().

Both of those are finite difference methods. I read through your code trying to understand why it might be better than having the levmar code do it (why it was worth writing that complicated code). I can see a few ways that accidental extra work is avoided in your code (by knowing the relationship between images and CPs) that would be harder to avoid in more general code. I didn't understand the version you're not using well enough to understand if it does some of that extra work.

johnfi...@gmail.com

Aug 9, 2022, 2:01:35 PM8/9/22

to hugin and other free panoramic software

On Tuesday, August 9, 2022 at 1:38:07 PM UTC-4 Florian Königstein wrote:

Some time ago I tried whether analytical calculation of the partial derivatives is better (faster convergence). I wrote code for analytical calculation (via automatic differentiation), but it seemed that there was no advantage (longer time and little or no reduction in the number of iterations).

I had somehow missed the fact that the term "automatic differentiation" meants that and I thought "analytical" meant symbolic. Now that I googled it again, I see what I'm talking about is within the scope of "automatic differentiation". Of course, the devil is in the details. If you do it right, I would not expect it to be slower per iteration than finite difference in the pano13 problem space (but I won't know until I try, as it can take twice as long in some problem spaces). As you found, I also would not expect a typical reduction in number of iterations. More accurate derivatives typically provides little benefit in the common cases. Its benefit should be in reducing the number of pathological cases.

But I'm more interested now in the possible speedup (per iteration) relative to finite difference (most, but not all, of which comes from assuming an average of more than one image per lens).

Florian Königstein

Aug 10, 2022, 2:44:08 AM8/10/22

to hugin and other free panoramic software

I hope you didn't enable any FOV optimization. I certainly think that is fundamentally broken in hugin and isn't safe to mix into any kind of controlled test.

No, I didn't optimize FOV.

And in ill-conditioned mathematical problems like here small differences in roundoff errors can lead to totally different results.I'm very familiar with that in other optimization problems. I'm still not ready to believe that is what is happening here.

One way to see whether it's really ill-conditioned in this case is the following: In the file levmar.c are several calls of splm_SuiteSparseQR(), there the QR decomposition of the jacobian 'fjac' matrix is performed. In the calculated upper triangular matrix R you can look at the diagonal elements: They are the Eigenvalues of the matrix R, that are equal to the square roots of the Eigenvalues of the Gramian matrix (transpose(fjac) . fjac). The condition number is the ratio of the largest to the smallest diagonal element.

Maybe I will make a test version of PTOptimizer.exe that prints out the condition number of the jacobian matrix. Then you can compare the condition number for that case that only yaw, pitch and roll are optimized and both rotations and translations are optimized.

Before I pay too much attention to really understanding what they mean, I want to figure out how to get the code displayed on the (details pull down of the) pop up that gives summary stats for the second strategy. I'd also like a way to keep the summary stats from the first strategy around long enough to read it.Without having checked it, I don't believe that B is met. I believe that ftol is reached (return code 1).How do you know the return code?

If you want to see the return code you could do the following: First go to the "optimize" tab in Hugin and click on "generate script before optimizing". Copy the script into a text file and store it to disk (with the ending .pto). This file can be read by PTOptimizer.exe . Since the latter is a pure console program it's easier to print out some values or run it in debug mode. E.g. you could just insert a printf(...) after the call of lmdif_sparse().

I don't know exactly your idea about computing the partial derivatives, but I think fastPTOptimizer does it quite well.Before I used the function splm_intern_fdif_jac() (see it's description in levmar.c), but then I used another method inside adjust.c that is at least as fast as splm_intern_fdif_jac().Both of those are finite difference methods. I read through your code trying to understand why it might be better than having the levmar code do it (why it was worth writing that complicated code). I can see a few ways that accidental extra work is avoided in your code (by knowing the relationship between images and CPs) that would be harder to avoid in more general code. I didn't understand the version you're not using well enough to understand if it does some of that extra work.

In fact I used first the function splm_intern_fdif_jac(). Then I programmed analytical calculation of the derivatives via automatic differentiation. For that I couldn't use splm_intern_fdif_jac() of course. I had to write a function that calculates only the derivatives that are necessary (in the function calculateJacobian()). Then I saw that there's no advantage using autodiff. But I kept using

calculateJacobian() with finite differences. It seems that the optimization runs about two times faster if you use calculateJacobian() instead of splm_intern_fdif_jac().

Florian Königstein

Aug 11, 2022, 3:37:11 PM8/11/22

to hugin and other free panoramic software

I have just created a test version of fastPTOptimizer (file: levmar.c) that prints out the condition number of the jacobian matrix when optimizing via PTOptimizer.exe.

I have run it with your panorama in the following cases:

1. Optimize raw, pitch, roll and the translation parameters as preselected in the pano (after resetting all parameters to zero). In this case I created with Hugin's option "edit script file manually before optimizing" a .pto file that can be read by PTOptimizer.exe. I have stored this file with the filename "test.pto".

2.

Optimize only raw, pitch, roll, but no translation parameters.I also created a .pto file for PTOptimizer.exe with name "test2.pto"

The output of my test version of PTOptimizer.exe shows clearly that the condition number (largest (absolute value of the) Eigenvalue / smallest

(absolute value of the)

Eigenvalue) in the case when optimizing both rotation and translation is very high, so that possibly also much numerical accuracy gets lost. The condition number changes with the iterations and is between about 8000 (best case) and 2E9 (2.000.000.000) (worst case).

In the case that only rotations shall be optimized the condition number is between 63 (best case) and 7500 (worst case).

So I'm sure that in this case optimizing both rotation and translation leads in fact to an ill-conditioned problem.

In the attachment I have:

** The files test.pto and test2.pto as inputs for PTOptimizer.exe

** The files test-output.txt and test2-output.txt (outputs of

PTOptimizer.exe when called with test.pto and test2.pto)

** The file adjust.c for the modified PTOptimizer.exe (the other source code of fastPTOptimizer is unchanged).

Florian Königstein

Aug 12, 2022, 12:37:53 PM8/12/22

to hugin and other free panoramic software

Probably it's best here to first optimize only raw, pitch and roll and then only translations.

Another approach is estimating both rotations and translations but "punish" the optimizer with higher error value when the estimated translations are too far away from their initial values. This is possible with my "weights for parameters" feature that is at the moment only present in PTOptimizer.exe but not in Hugin++. When adding weights for the translation parameters, the condition number of the matrices get better (lower). So it helps "regularizing" the optimization problem.

But you must play a bit with the weight in order to find out which weight is suitable. In the example panorama I found that a weight of 1E8 or 1E9 leads to acceptable results. In the attachment I have the file test-with-weights.pto that can by processed by PTOptimizer.exe .

johnfi...@gmail.com

Aug 13, 2022, 7:23:02 AM8/13/22

to hugin and other free panoramic software

On Friday, August 12, 2022 at 12:37:53 PM UTC-4 Florian Königstein wrote:

Probably it's best here to first optimize only raw, pitch and roll and then only translations.

I gave that a lot of thought, and I have not yet coded/tested what I decided to do instead. But I think there is a better way to structure the idea of "first optimize only ...".

My testing so far has caused me to conclude I need to first stabilize the behavior of what is there now, before making the big changes I really want. There is too much tendency for giant changes in performance and result quality from what is essentially luck (chaotic magnification of initial rounding error).

To stabilize what is there now (reduce the luck element in its results):

I think the ambiguity between rotation and translation is not a big problem. The two big problems that I think I understand are:

1) images that are not directly connected to the anchor image

2) the instability of the lens correction and fov optimization.

I don't understand the benefits of the first stage optimizing one distance rather than two directional distances. But for now I'll trust that concept and expand on it.

I want several initial stages, and lens parameters changeable only in the last initial stage. After all the initial stages, the "stage 2" would run exactly as it does now.

As they do now, the initial stages would all run with cutoffs that should make then stop quickly (get only a rough fit).

1) First it would take the anchor image plus all those images that share 3 or more CPs with the anchor.

2-?) For subsequent initial stages, each time add all images that share 3 or more CPs with the union of all images of the prior stage.

?+1) If necessary (implying a very badly connected panorama) add all the rest of the images

?+2) Enable lens and fov if those were specified to be optimized.

In cases where lens correction is major enough, it becomes a user problem to get the lens right (or at least close) first. In normal expert use, I expect the lens was solved earlier and not subject to further optimization. In normal beginner use, I expect the whole lens correction is subtle enough that delaying its optimization (earlier initial stages use whatever values were already there) is fine for the rough estimate intent of those stages.

Each initial stage will only happen if it adds something to the previous initial stage. So simple panoramas with lens and fov already fixed would have the same single initial stage they have now.

BTW, do you have any .pto files (in the format read by PToptimize.exe) that you think I should include in my testing?

Is there some simple way (especially when you don't have the image files) to create a .pto in the

PToptimize.exe format from a .pto in the hugin format?

Florian Königstein

Aug 16, 2022, 3:25:26 PM8/16/22

to hugin and other free panoramic software

Is there some simple way (especially when you don't have the image files) to create a .pto in the PToptimize.exe format from a .pto in the hugin format?

When I did my changes on libpano13, I first changed it so that it could read Hugin files directly and write them back in the Hugin format.

But then I discarded that feature.

But I took parts of the old code and built a command line utility named Hugin2PTOptimizer that converts a Hugin .pto file to a .pto file readable by PTOptimizer.

I have messed a bit around in the code and put all into one file

Hugin2PTOptimize.c. Therefore it's not maintainable, but hopefully it does what it shall do.

I compiled it with VS 2019. If there are problems compiling it with Linux, let me know.

johnfi...@gmail.com

Aug 22, 2022, 7:26:57 AM8/22/22

to hugin and other free panoramic software

On Tuesday, August 16, 2022 at 3:25:26 PM UTC-4 Florian Königstein wrote:

But I took parts of the old code and built a command line utility named Hugin2PTOptimizer that converts a Hugin .pto file to a .pto file readable by PTOptimizer.I have messed a bit around in the code and put all into one file Hugin2PTOptimize.c. Therefore it's not maintainable, but hopefully it does what it shall do.I compiled it with VS 2019. If there are problems compiling it with Linux, let me know.

It does not compile in mingw64 and I'm certain would not in Linux, and there are far too many problems to fix.

I had started a similar program myself, before you sent that. My biggest problem was (and partially still is) identifying the relationship of tags in the .pto file to fields in pano13 data structures and fields in Hugin data structures. I used y

Hugin2PTOptimize.c

as an extra source (on top of the pto reading and writing code in hugin and pano13) for understanding the relationship of fields to tags.

My version reads a .pto file (hugin format or pano13 format) and combines the data from a previous run of optimize (if present) into the image data, then optionally adds a specified amount of random noise to the optimized values, and writes out a new .pto file.

As a tool, this is intended for testing the benefits of changes to optimize. Optimize is an unstable enough process that insignificant differences can cause massive result differences (likely including the case that led me to start this thread). So if you make a small change that ought to on average cause a small improvement, testing giving a giant degradation or giant improvement is more likely than "average" results. So ordinary testing can't tell you whether the coding change doesn't work as expected vs. something is flawed in the example vs. basic instability of optimize.

My theory and hope is that running a program versions to be tested against each optimize problem many times with different random noise added, will give results that show meaningful differences between versions.

As a coding exercise, I built this C++ program to

1) Figure out good methods to use headers and structs from the pano13 C code and ultimately maintain a compatible C interface while rewriting a lot of the operations in C++. There are problems with those headers and those structs for C++, but solutions aren't very difficult.

2) Find a better and more unified way to act generically across fields. This is done and/or not done in many different ways across hugin and pano13 and I did not like any of them. Use of #include tricks and heave use of #define obfuscates that code and makes debugging the code much harder. At the opposite extreme, use of switch statements to split out tags causes massive code bloat and related maintenance problems. I created a better method with templated maps and very minimal use of #define. That will need a bit more touch up (as I get a more complete understanding of the whole system of tags and fields) before I use it in a C++ rewrite of optimize and other parts of pano13.

Like you, I put all the code in one file (except for use of the unmodified filter.h and other headers it pulls in). Once the code is more stable, it should be clear how to split to multiple cpp and hpp files.

If anyone want that code, ask and I'll put it somewhere accessible. I'm open to suggestions. I put a ??? comment in the code in places where I didn't know the right way to do something because I don't understand the related parts of the pano13 package well enough.

Reply all

Reply to author

Forward

0 new messages