The configurations tez.grouping.max-size and tez.grouping.min-size are not effective


Carol Chapman

Jan 10, 2022, 10:47:46 PM

to MR3

Hi,

I found that many Tez configurations do not take effect at Hive run time. For example, no matter how I adjust the values of tez.grouping.max-size and tez.grouping.min-size, they never take effect.

I submitted a similar issue before: "Getting Tez LimitExceededException after dag execution on large query" (google.com). I guess the cause is the same: a large number of Tez configurations fall back to their default values.

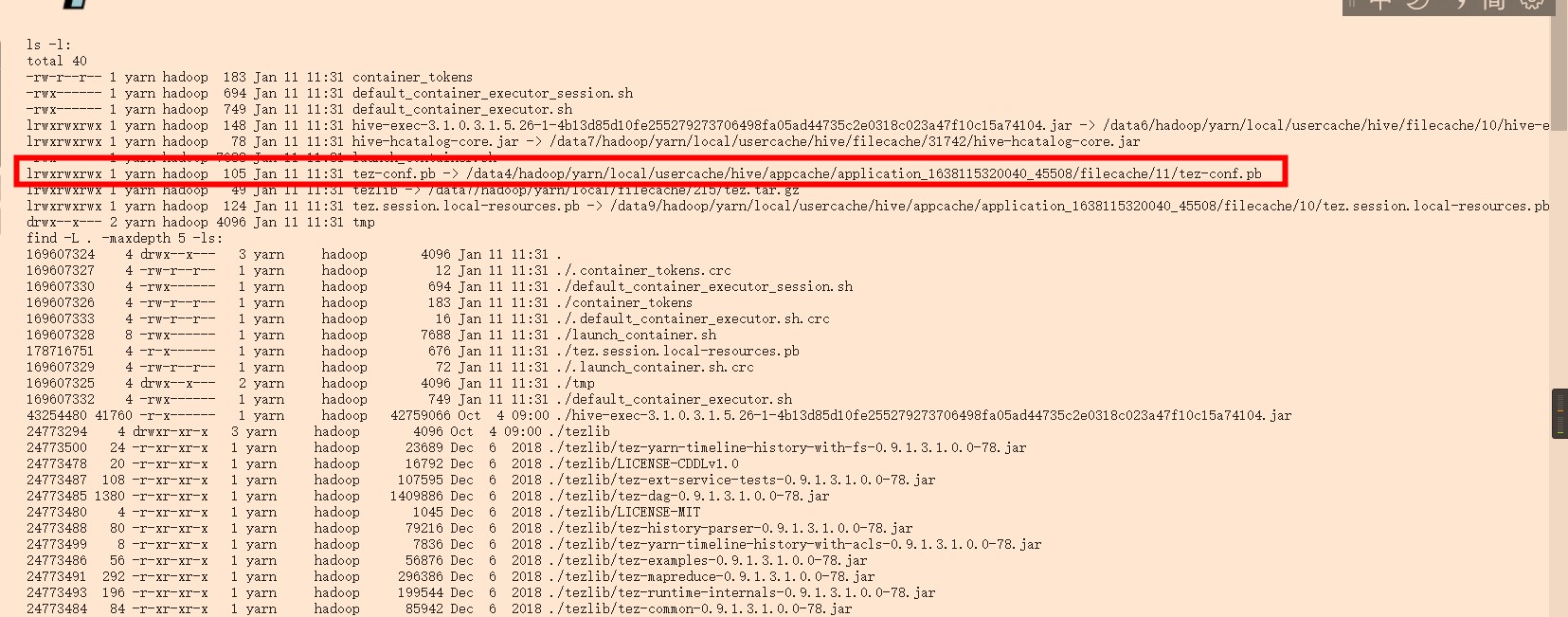

I observed how the Apache version of Hive submits Tez tasks in on-YARN mode. It uploads the Tez configuration file as a dependency, so every container can read this file.

MR3 does not upload this file, so with the current Hive code the containers cannot read the Tez configuration.

However, unlike the Apache version, MR3 supports submitting tasks on both K8s and YARN, so directly uploading configuration files in this way may not be suitable for MR3.

So, is there any plan to avoid such problems? In addition, what approach will MR3 1.4 take so that Tez configurations take effect?

Sungwoo Park

Jan 11, 2022, 12:15:19 AM

to MR3

Hello,

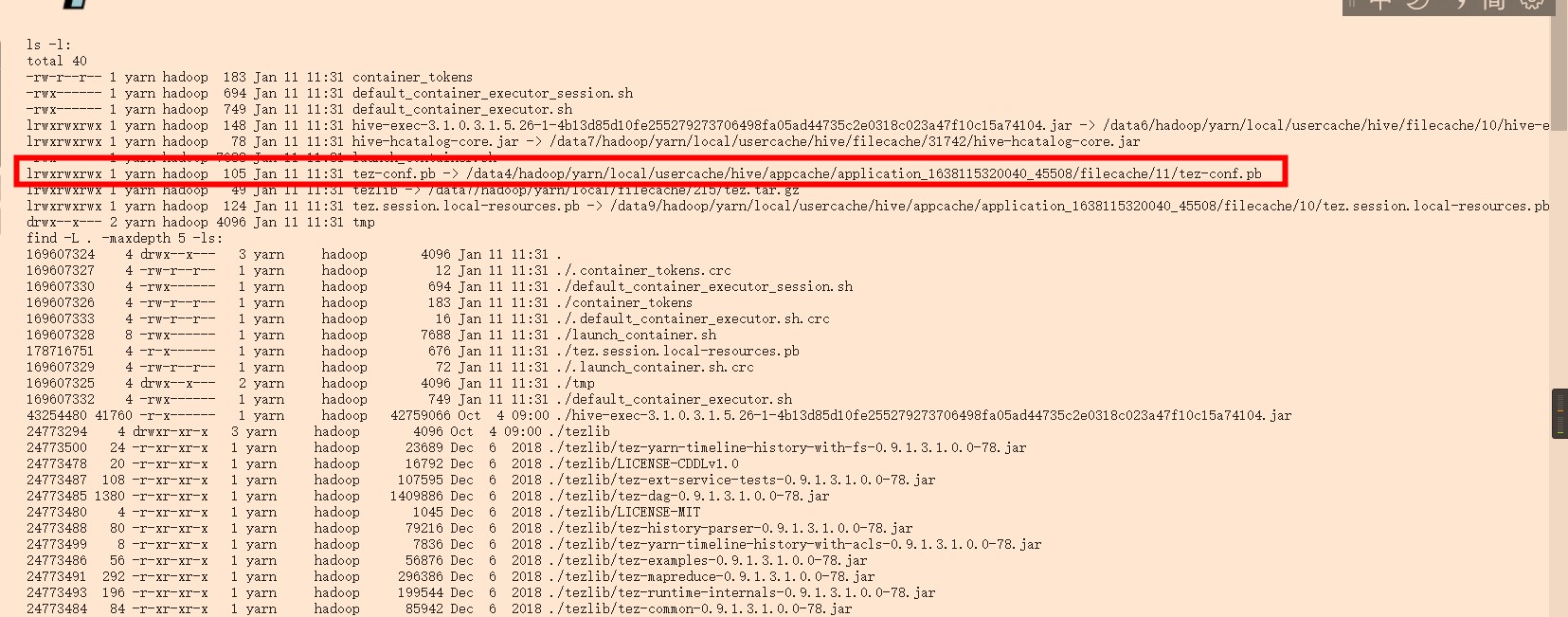

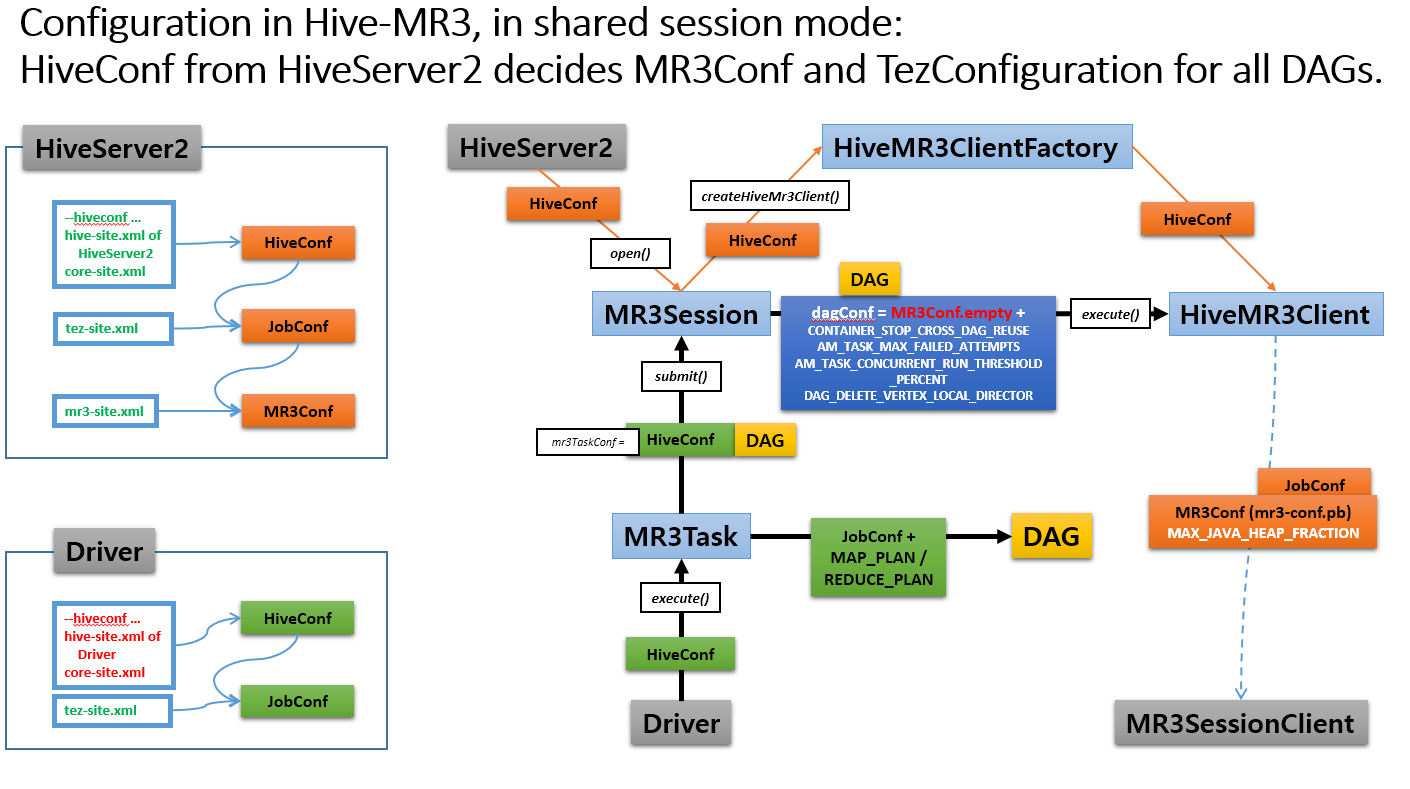

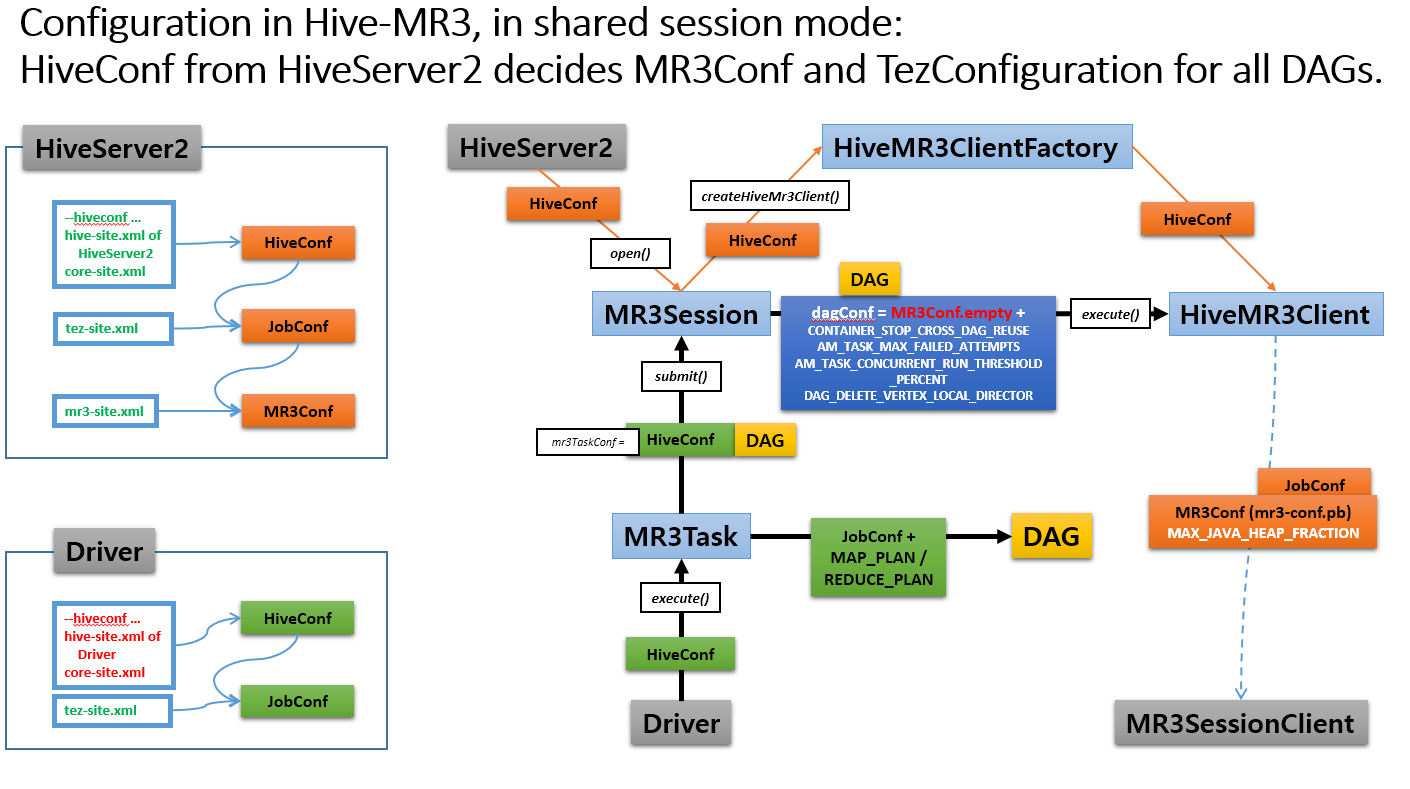

Hive-MR3 reads tez-site.xml, but it does not create tez-conf.pb because all configuration files are merged in MR3Conf. Please see the following diagram, where MR3Conf (mr3-conf.pb) merges hive-site.xml, tez-site.xml, mr3-site.xml, and so on.

You can try the following simple scenario, which updates tez.grouping.* configurations.

1) Set in hive-site.xml before running HiveServer2:

<property>
  <name>hive.security.authorization.sqlstd.confwhitelist.append</name>
  <value>hive\.querylog\.location.*|hive\.mr3\.map\.task.*|hive\.mr3\.reduce\.task.*|tez\.grouping.*</value>
</property>

Now, the user can update tez.grouping.* inside Beeline.

2) Set in tez-site.xml before running HiveServer2:

<property>
  <name>tez.grouping.split-count</name>
  <value>99</value>
</property>

Now, the default value of tez.grouping.split-count is 99.

3) Run HiveServer2. Inside a Beeline session, check the default value.

0: jdbc:hive2://orange1:9852/> set tez.grouping.split-count;
+----------------------------------------+
|                  set                   |
+----------------------------------------+
| tez.grouping.split-count is undefined  |
+----------------------------------------+
1 row selected (0.316 seconds)

Here, we see that tez.grouping.split-count is not set because Beeline does not set it explicitly.

4) Run a sample query and check the log of DAGAppMaster. You will see that the default value of 99 is actually used.

gitlab-runner@orange1:~$ klog -f mr3master-4776-0-cfc7bbfcd-9cqss | grep "Desired"

2022-01-11T05:01:03,788 INFO [DAG1-Input-7-2] grouper.TezSplitGrouper: Desired numSplits overridden by config to: 99


5) Inside Beeline, update the value of tez.grouping.split-count to 101.

0: jdbc:hive2://orange1:9852/> set tez.grouping.split-count=101;
No rows affected (0.024 seconds)

0: jdbc:hive2://orange1:9852/> set tez.grouping.split-count;
+-------------------------------+
|              set              |
+-------------------------------+
| tez.grouping.split-count=101  |
+-------------------------------+
1 row selected (0.034 seconds)

6) Run another sample query. You will see that the updated value is used.

2022-01-11T05:11:49,703 INFO [DAG2-Input-9-1] grouper.TezSplitGrouper: Desired numSplits overridden by config to: 101

So everything is actually working as intended. tez.grouping.max-size and tez.grouping.min-size work in the same way.
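For the two properties in the original question, the same steps could look like the sketch below in tez-site.xml. The values are only illustrative assumptions (16 MB and 128 MB, expressed in bytes), not recommendations, and the whitelist pattern tez\.grouping.* from step 1 already covers both names.

```xml
<!-- Illustrative values only (assumption): 16 MB lower bound per grouped split -->
<property>
  <name>tez.grouping.min-size</name>
  <value>16777216</value>
</property>

<!-- Illustrative values only (assumption): 128 MB upper bound per grouped split -->
<property>
  <name>tez.grouping.max-size</name>
  <value>134217728</value>
</property>
```

With the whitelist in place, the same properties can also be overridden per session inside Beeline, for example with set tez.grouping.max-size=134217728;. As far as I understand the Tez split grouper, an explicit tez.grouping.split-count takes precedence over these size bounds, so it is probably best to remove the split-count setting from step 2 when testing min-size and max-size.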

If I missed something in your scenario, please let me know.

Cheers,

--- Sungwoo
