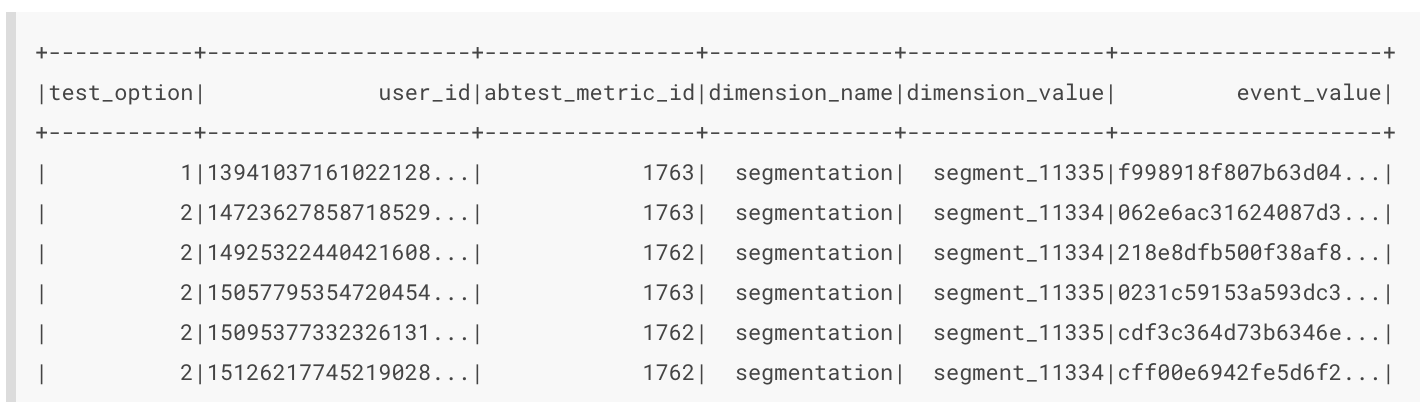

I got an `OutOfMemoryError` in a native batch ingestion sub-task even though my data volume is not that big compared to the server. Task log:

```
2020-09-01T00:51:14,974 INFO [main] org.apache.zookeeper.ZooKeeper - Initiating client connection, connectString=localhost sessionTimeout=30000 watcher=org.apache.curator.ConnectionState@55d99dc3
2020-09-01T00:51:15,035 INFO [main] org.apache.curator.framework.imps.CuratorFrameworkImpl - Default schema
2020-09-01T00:51:15,045 INFO [main-SendThread(localhost:2181)] org.apache.zookeeper.ClientCnxn - Opening socket connection to server localhost/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)
2020-09-01T00:51:15,054 INFO [main-SendThread(localhost:2181)] org.apache.zookeeper.ClientCnxn - Socket connection established to localhost/127.0.0.1:2181, initiating session
2020-09-01T00:51:15,077 INFO [main-SendThread(localhost:2181)] org.apache.zookeeper.ClientCnxn - Session establishment complete on server localhost/127.0.0.1:2181, sessionid = 0x1000f97e8a80014, negotiated timeout = 30000
2020-09-01T00:51:15,083 INFO [main-EventThread] org.apache.curator.framework.state.ConnectionStateManager - State change: CONNECTED
2020-09-01T00:51:15,206 INFO [NodeRoleWatcher[COORDINATOR]] org.apache.druid.curator.discovery.CuratorDruidNodeDiscoveryProvider$NodeRoleWatcher - Node[http://localhost:8081] of role[coordinator] detected.
2020-09-01T00:51:15,206 INFO [NodeRoleWatcher[OVERLORD]] org.apache.druid.curator.discovery.CuratorDruidNodeDiscoveryProvider$NodeRoleWatcher - Node[http://localhost:8081] of role[overlord] detected.
2020-09-01T00:51:15,206 INFO [NodeRoleWatcher[COORDINATOR]] org.apache.druid.curator.discovery.CuratorDruidNodeDiscoveryProvider$NodeRoleWatcher - Node watcher of role[coordinator] is now initialized.
2020-09-01T00:51:15,206 INFO [NodeRoleWatcher[OVERLORD]] org.apache.druid.curator.discovery.CuratorDruidNodeDiscoveryProvider$NodeRoleWatcher - Node watcher of role[overlord] is now initialized.
2020-09-01T00:51:15,378 INFO [main] org.apache.druid.indexing.worker.executor.ExecutorLifecycle - Running with task: {
  "type" : "single_phase_sub_task",
  "id" : "single_phase_sub_task_maxRetry3_biinapgd_2020-09-01T00:49:33.063Z",
  "groupId" : "index_parallel_maxRetry3_pgibohji_2020-09-01T00:45:45.039Z",
  "resource" : {
    "availabilityGroup" : "single_phase_sub_task_maxRetry3_biinapgd_2020-09-01T00:49:33.063Z",
    "requiredCapacity" : 1
  },
  "supervisorTaskId" : "index_parallel_maxRetry3_pgibohji_2020-09-01T00:45:45.039Z",
  "numAttempts" : 2,
  "spec" : {
    "dataSchema" : {
      "dataSource" : "maxRetry3",
      "timestampSpec" : {
        "column" : "dt",
        "format" : "yyyyMMdd",
        "missingValue" : "20200822-01-01T00:00:00.000Z"
      },
      "dimensionsSpec" : {
        "dimensions" : [ {
          "type" : "string",
          "name" : "test_option",
          "multiValueHandling" : "SORTED_ARRAY",
          "createBitmapIndex" : true
        }, {
          "type" : "string",
          "name" : "abtest_metric_id",
          "multiValueHandling" : "SORTED_ARRAY",
          "createBitmapIndex" : true
        }, {
          "type" : "string",
          "name" : "dimension_name",
          "multiValueHandling" : "SORTED_ARRAY",
          "createBitmapIndex" : true
        }, {
          "type" : "string",
          "name" : "dimension_value",
          "multiValueHandling" : "SORTED_ARRAY",
          "createBitmapIndex" : true
        }, {
          "type" : "string",
          "name" : "event_value",
          "multiValueHandling" : "SORTED_ARRAY",
          "createBitmapIndex" : true
        }, {
          "type" : "string",
          "name" : "user_id",
          "multiValueHandling" : "SORTED_ARRAY",
          "createBitmapIndex" : true
        } ],
        "dimensionExclusions" : [ "dt", "count" ]
      },
      "metricsSpec" : [ {
        "type" : "count",
        "name" : "count"
      } ],
      "granularitySpec" : {
        "type" : "uniform",
        "segmentGranularity" : "DAY",
        "queryGranularity" : {
          "type" : "none"
        },
        "rollup" : true,
        "intervals" : null
      },
      "transformSpec" : {
        "filter" : null,
        "transforms" : [ ]
      }
    },
    "ioConfig" : {
      "type" : "index_parallel",
      "inputSource" : {
        "type" : "s3",
        "uris" : null,
        "prefixes" : null,
        "objects" : [ {
          "bucket" : "s3-abtest-prod",
          "path" : "xpe/daily_unique/area_metric_type=Search/abtest_instance_id=34554/dt=20200822/part-00006-ef28c0f9-f2d4-4f7a-84a9-1e36aea39816-c000.snappy.parquet"
        }, {
          "bucket" : "s3-abtest-prod",
          "path" : "xpe/daily_unique/area_metric_type=Search/abtest_instance_id=34554/dt=20200822/part-00006-ef28c0f9-f2d4-4f7a-84a9-1e36aea39816-c001.snappy.parquet"
        }, {
          "bucket" : "s3-abtest-prod",
          "path" : "xpe/daily_unique/area_metric_type=Search/abtest_instance_id=34554/dt=20200822/part-00007-eccc7759-c75a-43c8-817b-2fa2076967d8-c000.snappy.parquet"
        }, {
          "bucket" : "s3-abtest-prod",
          "path" : "xpe/daily_unique/area_metric_type=Search/abtest_instance_id=34554/dt=20200822/part-00007-ef28c0f9-f2d4-4f7a-84a9-1e36aea39816-c000.snappy.parquet"
        }, {
          "bucket" : "s3-abtest-prod",
          "path" : "xpe/daily_unique/area_metric_type=Search/abtest_instance_id=34554/dt=20200822/part-00007-ef28c0f9-f2d4-4f7a-84a9-1e36aea39816-c001.snappy.parquet"
        }, {
          "bucket" : "s3-abtest-prod",
          "path" : "xpe/daily_unique/area_metric_type=Search/abtest_instance_id=34554/dt=20200822/part-00008-eccc7759-c75a-43c8-817b-2fa2076967d8-c000.snappy.parquet"
        }, {
          "bucket" : "s3-abtest-prod",
          "path" : "xpe/daily_unique/area_metric_type=Search/abtest_instance_id=34554/dt=20200822/part-00008-ef28c0f9-f2d4-4f7a-84a9-1e36aea39816-c000.snappy.parquet"
        }, {
          "bucket" : "s3-abtest-prod",
          "path" : "xpe/daily_unique/area_metric_type=Search/abtest_instance_id=34554/dt=20200822/part-00008-ef28c0f9-f2d4-4f7a-84a9-1e36aea39816-c001.snappy.parquet"
        }, {
          "bucket" : "s3-abtest-prod",
          "path" : "xpe/daily_unique/area_metric_type=Search/abtest_instance_id=34554/dt=20200822/part-00009-eccc7759-c75a-43c8-817b-2fa2076967d8-c000.snappy.parquet"
        } ],
        "properties" : null
      },
      "inputFormat" : {
        "type" : "parquet",
        "flattenSpec" : {
          "useFieldDiscovery" : true,
          "fields" : [ ]
        },
        "binaryAsString" : false
      },
      "appendToExisting" : false
    },
    "tuningConfig" : {
      "type" : "index_parallel",
      "maxRowsPerSegment" : null,
      "maxRowsInMemory" : 1000000,
      "maxBytesInMemory" : 0,
      "maxTotalRows" : null,
      "numShards" : null,
      "splitHintSpec" : null,
      "partitionsSpec" : null,
      "indexSpec" : {
        "bitmap" : {
          "type" : "roaring",
          "compressRunOnSerialization" : true
        },
        "dimensionCompression" : "lz4",
        "metricCompression" : "lz4",
        "longEncoding" : "longs",
        "segmentLoader" : null
      },
      "indexSpecForIntermediatePersists" : {
        "bitmap" : {
          "type" : "roaring",
          "compressRunOnSerialization" : true
        },
        "dimensionCompression" : "lz4",
        "metricCompression" : "lz4",
        "longEncoding" : "longs",
        "segmentLoader" : null
      },
      "maxPendingPersists" : 0,
      "forceGuaranteedRollup" : false,
      "reportParseExceptions" : false,
      "pushTimeout" : 0,
      "segmentWriteOutMediumFactory" : null,
      "maxNumConcurrentSubTasks" : 20,
      "maxRetry" : 3,
      "taskStatusCheckPeriodMs" : 1000,
      "chatHandlerTimeout" : "PT10S",
      "chatHandlerNumRetries" : 5,
      "maxNumSegmentsToMerge" : 100,
      "totalNumMergeTasks" : 10,
      "logParseExceptions" : false,
      "maxSavedParseExceptions" : 0,
      "buildV9Directly" : true,
      "partitionDimensions" : [ ]
    }
  },
  "context" : {
    "forceTimeChunkLock" : true
  },
  "dataSource" : "maxRetry3"
}
2020-09-01T00:51:15,380 INFO [main] org.apache.druid.indexing.worker.executor.ExecutorLifecycle - Attempting to lock file[var/druid/task/single_phase_sub_task_maxRetry3_biinapgd_2020-09-01T00:49:33.063Z/lock].
2020-09-01T00:51:15,387 INFO [main] org.apache.druid.indexing.worker.executor.ExecutorLifecycle - Acquired lock file[var/druid/task/single_phase_sub_task_maxRetry3_biinapgd_2020-09-01T00:49:33.063Z/lock] in 2ms.
2020-09-01T00:51:15,394 INFO [main] org.apache.druid.indexing.common.task.AbstractBatchIndexTask - [forceTimeChunkLock] is set to true in task context. Use timeChunk lock
2020-09-01T00:51:15,415 INFO [task-runner-0-priority-0] org.apache.druid.indexing.overlord.SingleTaskBackgroundRunner - Running task: single_phase_sub_task_maxRetry3_biinapgd_2020-09-01T00:49:33.063Z
2020-09-01T00:51:15,418 WARN [task-runner-0-priority-0] org.apache.druid.indexing.common.task.batch.parallel.SinglePhaseSubTask - Intervals are missing in granularitySpec while this task is potentially overwriting existing segments. Forced to use timeChunk lock.
2020-09-01T00:51:15,421 INFO [main] org.apache.druid.java.util.common.lifecycle.Lifecycle - Starting lifecycle [module] stage [SERVER]
2020-09-01T00:51:15,425 INFO [main] org.eclipse.jetty.server.Server - jetty-9.4.12.v20180830; built: 2018-08-30T13:59:14.071Z; git: 27208684755d94a92186989f695db2d7b21ebc51; jvm 1.8.0_265-8u265-b01-0ubuntu2~18.04-b01
2020-09-01T00:51:15,740 INFO [main] org.eclipse.jetty.server.session - DefaultSessionIdManager workerName=node0
2020-09-01T00:51:15,740 INFO [main] org.eclipse.jetty.server.session - No SessionScavenger set, using defaults
2020-09-01T00:51:15,742 INFO [main] org.eclipse.jetty.server.session - node0 Scavenging every 600000ms
2020-09-01T00:51:16,086 INFO [main] com.sun.jersey.server.impl.application.WebApplicationImpl - Initiating Jersey application, version 'Jersey: 1.19.3 10/24/2016 03:43 PM'
2020-09-01T00:51:18,040 INFO [main] org.eclipse.jetty.server.handler.ContextHandler - Started o.e.j.s.ServletContextHandler@2bf4fa1{/,null,AVAILABLE}
2020-09-01T00:51:18,111 INFO [main] org.eclipse.jetty.server.AbstractConnector - Started ServerConnector@40aad17d{HTTP/1.1,[http/1.1]}{0.0.0.0:8101}
2020-09-01T00:51:18,112 INFO [main] org.eclipse.jetty.server.Server - Started @16126ms
2020-09-01T00:51:18,115 INFO [main] org.apache.druid.java.util.common.lifecycle.Lifecycle - Starting lifecycle [module] stage [ANNOUNCEMENTS]
2020-09-01T00:51:18,115 INFO [main] org.apache.druid.java.util.common.lifecycle.Lifecycle - Successfully started lifecycle [module]
2020-09-01T00:51:21,011 INFO [task-runner-0-priority-0] org.apache.parquet.hadoop.InternalParquetRecordReader - RecordReader initialized will read a total of 2000000 records.
2020-09-01T00:51:21,011 INFO [task-runner-0-priority-0] org.apache.parquet.hadoop.InternalParquetRecordReader - at row 0. reading next block
2020-09-01T00:51:21,134 INFO [task-runner-0-priority-0] org.apache.hadoop.io.compress.CodecPool - Got brand-new decompressor [.snappy]
2020-09-01T00:51:21,150 INFO [task-runner-0-priority-0] org.apache.parquet.hadoop.InternalParquetRecordReader - block read in memory in 139 ms. row count = 2000000
2020-09-01T00:51:21,779 INFO [task-runner-0-priority-0] org.apache.druid.segment.realtime.appenderator.BaseAppenderatorDriver - New segment[maxRetry3_20200822-01-01T00:00:00.000Z_20200822-01-02T00:00:00.000Z_2020-09-01T00:46:01.484Z_8] for sequenceName[single_phase_sub_task_maxRetry3_biinapgd_2020-09-01T00:49:33.063Z].
2020-09-01T00:51:39,503 INFO [task-runner-0-priority-0] org.apache.druid.segment.realtime.appenderator.AppenderatorImpl - Flushing in-memory data to disk because No more rows can be appended to sink,bytesCurrentlyInMemory[171529596] is greater than maxBytesInMemory[171529557].
2020-09-01T00:52:24,863 WARN [main-SendThread(localhost:2181)] org.apache.zookeeper.ClientCnxn - Client session timed out, have not heard from server in 26076ms for sessionid 0x1000f97e8a80014
2020-09-01T00:52:24,864 INFO [main-SendThread(localhost:2181)] org.apache.zookeeper.ClientCnxn - Client session timed out, have not heard from server in 26076ms for sessionid 0x1000f97e8a80014, closing socket connection and attempting reconnect
2020-09-01T00:52:29,704 INFO [main-EventThread] org.apache.curator.framework.state.ConnectionStateManager - State change: SUSPENDED
2020-09-01T00:52:29,705 WARN [NodeRoleWatcher[OVERLORD]] org.apache.druid.curator.discovery.CuratorDruidNodeDiscoveryProvider$NodeRoleWatcher - Ignored event type[CONNECTION_SUSPENDED] for node watcher of role[overlord].
2020-09-01T00:52:29,706 WARN [NodeRoleWatcher[COORDINATOR]] org.apache.druid.curator.discovery.CuratorDruidNodeDiscoveryProvider$NodeRoleWatcher - Ignored event type[CONNECTION_SUSPENDED] for node watcher of role[coordinator].
2020-09-01T00:52:31,883 INFO [main-SendThread(localhost:2181)] org.apache.zookeeper.ClientCnxn - Opening socket connection to server localhost/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)
2020-09-01T00:52:31,884 INFO [main-SendThread(localhost:2181)] org.apache.zookeeper.ClientCnxn - Socket connection established to localhost/127.0.0.1:2181, initiating session
2020-09-01T00:52:31,886 WARN [main-SendThread(localhost:2181)] org.apache.zookeeper.ClientCnxn - Unable to reconnect to ZooKeeper service, session 0x1000f97e8a80014 has expired
2020-09-01T00:52:31,886 INFO [main-SendThread(localhost:2181)] org.apache.zookeeper.ClientCnxn - Unable to reconnect to ZooKeeper service, session 0x1000f97e8a80014 has expired, closing socket connection
2020-09-01T00:52:31,886 WARN [main-EventThread] org.apache.curator.ConnectionState - Session expired event received
2020-09-01T00:52:31,887 INFO [main-EventThread] org.apache.zookeeper.ZooKeeper - Initiating client connection, connectString=localhost sessionTimeout=30000 watcher=org.apache.curator.ConnectionState@55d99dc3
2020-09-01T00:52:34,162 INFO [main-EventThread] org.apache.curator.framework.state.ConnectionStateManager - State change: LOST
2020-09-01T00:52:34,162 INFO [main-EventThread] org.apache.zookeeper.ClientCnxn - EventThread shut down for session: 0x1000f97e8a80014
2020-09-01T00:52:34,164 WARN [NodeRoleWatcher[OVERLORD]] org.apache.druid.curator.discovery.CuratorDruidNodeDiscoveryProvider$NodeRoleWatcher - Ignored event type[CONNECTION_LOST] for node watcher of role[overlord].
2020-09-01T00:52:34,164 WARN [NodeRoleWatcher[COORDINATOR]] org.apache.druid.curator.discovery.CuratorDruidNodeDiscoveryProvider$NodeRoleWatcher - Ignored event type[CONNECTION_LOST] for node watcher of role[coordinator].
2020-09-01T00:52:36,705 INFO [main-SendThread(localhost:2181)] org.apache.zookeeper.ClientCnxn - Opening socket connection to server localhost/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)
2020-09-01T00:52:36,705 INFO [main-SendThread(localhost:2181)] org.apache.zookeeper.ClientCnxn - Socket connection established to localhost/127.0.0.1:2181, initiating session
2020-09-01T00:52:36,709 INFO [main-SendThread(localhost:2181)] org.apache.zookeeper.ClientCnxn - Session establishment complete on server localhost/127.0.0.1:2181, sessionid = 0x1000f97e8a80017, negotiated timeout = 30000
2020-09-01T00:52:36,709 INFO [main-EventThread] org.apache.curator.framework.state.ConnectionStateManager - State change: RECONNECTED
2020-09-01T00:52:36,712 WARN [NodeRoleWatcher[OVERLORD]] org.apache.druid.curator.discovery.CuratorDruidNodeDiscoveryProvider$NodeRoleWatcher - Ignored event type[CONNECTION_RECONNECTED] for node watcher of role[overlord].
2020-09-01T00:52:36,716 WARN [NodeRoleWatcher[COORDINATOR]] org.apache.druid.curator.discovery.CuratorDruidNodeDiscoveryProvider$NodeRoleWatcher - Ignored event type[CONNECTION_RECONNECTED] for node watcher of role[coordinator].
Terminating due to java.lang.OutOfMemoryError: GC overhead limit exceeded
```
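The AppenderatorImpl flush line at 00:51:39 hints at how much heap the sub-task actually had. The spec sets `"maxBytesInMemory" : 0`, and my reading of the Druid native batch docs is that this falls back to one-sixth of the task JVM's max heap, with a rough indexing ceiling of `maxBytesInMemory * (2 + maxPendingPersists)`. Both are assumptions taken from the docs, not something this log states. Working backwards from `maxBytesInMemory[171529557]` under those assumptions gives the estimate below. `parse_flush_line`, `implied_max_heap_bytes` and `indexing_heap_ceiling_bytes` are hypothetical helpers for illustration, not Druid APIs.

```python
import re
from typing import Tuple

# Flush message copied verbatim from the task log (AppenderatorImpl, 00:51:39).
FLUSH_LINE = (
    "Flushing in-memory data to disk because No more rows can be appended to sink,"
    "bytesCurrentlyInMemory[171529596] is greater than maxBytesInMemory[171529557]."
)

# Assumption: "maxBytesInMemory" : 0 makes Druid use 1/6 of the task JVM's max heap.
DEFAULT_HEAP_DIVISOR = 6


def parse_flush_line(line: str) -> Tuple[int, int]:
    """Hypothetical helper: return (bytesCurrentlyInMemory, maxBytesInMemory)."""
    current = re.search(r"bytesCurrentlyInMemory\[(\d+)\]", line)
    limit = re.search(r"maxBytesInMemory\[(\d+)\]", line)
    if current is None or limit is None:
        raise ValueError("not an in-memory flush line")
    return int(current.group(1)), int(limit.group(1))


def implied_max_heap_bytes(max_bytes_in_memory: int) -> int:
    """Hypothetical helper: invert the assumed 1/6 default to estimate the task heap."""
    return max_bytes_in_memory * DEFAULT_HEAP_DIVISOR


def indexing_heap_ceiling_bytes(max_bytes_in_memory: int, max_pending_persists: int) -> int:
    """Hypothetical helper: rough ceiling maxBytesInMemory * (2 + maxPendingPersists)."""
    return max_bytes_in_memory * (2 + max_pending_persists)


if __name__ == "__main__":
    current, limit = parse_flush_line(FLUSH_LINE)
    heap = implied_max_heap_bytes(limit)
    # maxPendingPersists is 0 in the tuningConfig above.
    ceiling = indexing_heap_ceiling_bytes(limit, max_pending_persists=0)
    print(f"bytes in memory at flush: {current:,} (limit {limit:,})")
    print(f"implied task heap: {heap:,} bytes (~{heap / 2**20:.0f} MiB)")
    print(f"estimated indexing ceiling: {ceiling:,} bytes (~{ceiling / 2**20:.0f} MiB)")
```

If the 1/6 assumption holds, the implied heap is 1,029,177,342 bytes (a bit under 1 GiB), so the sub-task appears to be bounded by a roughly 1 GB peon heap rather than by the server's total memory.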