[Python-Dev] Should set objects maintain insertion order too?

Larry Hastings

As of 3.7, dict objects are guaranteed to maintain insertion order. But set objects make no such guarantee, and AFAIK in practice they don't maintain insertion order either. Should they?

I do have a use case for this. In one project I maintain a "ready" list of jobs; I need to iterate over it, but I also want fast lookup because I sometimes remove jobs when they're subsequently marked "not ready". The existing set object would work fine here, except that it doesn't maintain insertion order. That means multiple runs of the program with the same inputs may result in running jobs in different orders, and this instability makes debugging more difficult. I've therefore switched from a set to a dict with all keys mapped to None, which provides all the set-like functionality I need.
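A minimal sketch of that workaround, assuming made-up job names: a dict whose keys all map to None behaves like an insertion-ordered set for membership tests, removal, and iteration.

```python
# Hypothetical job names, used only for illustration.
ready = dict.fromkeys(["compile", "link", "test", "package"])

# Fast membership test, as with a set.
assert "link" in ready

# A job is later marked "not ready": remove it in O(1) on average.
del ready["link"]

# A new job becomes ready: it goes to the end of the iteration order.
ready["deploy"] = None

# Iteration follows insertion order on every run (guaranteed since Python 3.7).
run_order = list(ready)
print(run_order)  # ['compile', 'test', 'package', 'deploy']
```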

ISTM that all the reasons why dicts should maintain insertion order also apply to sets, and so I think we should probably do this. Your thoughts?

/arry

p.s. My dim recollection is that the original set object was a hacked-up copy of the dict object removing the ability to store values. So there's some precedent for dicts and sets behaving similarly. (Note: non-pejorative use of "hacked-up" here, that was a fine way to start.)

Tim Peters

> objects make no such guarantee, and AFAIK in practice they don't maintain

> insertion order either.

> Should they?

From Raymond, 22 Dec 2017:

https://twitter.com/raymondh/status/944454031870607360

"""

Sets use a different algorithm that isn't as amenable to retaining

insertion order. Set-to-set operations lose their flexibility and

optimizations if order is required. Set mathematics are defined in

terms of unordered sets. In short, set ordering isn't in the immediate

future.

"""

Which is more an answer to "will they?" than "should they?" ;-)

_______________________________________________

Python-Dev mailing list -- pytho...@python.org

To unsubscribe send an email to python-d...@python.org

https://mail.python.org/mailman3/lists/python-dev.python.org/

Message archived at https://mail.python.org/archives/list/pytho...@python.org/message/WMIZRFJZXI3CD5YMLOC5Z3LXVXD7HM4R/

Code of Conduct: http://python.org/psf/codeofconduct/

Larry Hastings

That's not a reason why we shouldn't do it. Python is the language where correctness and readability trump performance every time. We should decide what semantics we want, then do that, period. And anyway, it seems like some genius always figures out how to make it fast sooner or later ;-)

I can believe that, given the current implementation, sets might

give up some of their optimizations if they maintained insertion

order. But I don't understand what "flexibility" they would

lose. Apart from being slower, what would set objects give up if

they maintained insertion order? Are there operations on set

objects that would no longer be possible?

/arry

Raymond Hettinger

> On Dec 15, 2019, at 6:48 PM, Larry Hastings <la...@hastings.org> wrote:

>

> As of 3.7, dict objects are guaranteed to maintain insertion order. But set objects make no such guarantee, and AFAIK in practice they don't maintain insertion order either. Should they?

Several thoughts:

* The corresponding mathematical concept is unordered and it would be weird to impose such an order.

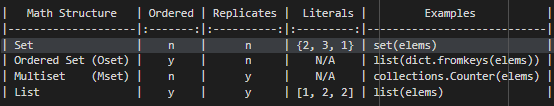

* You can already get membership testing while retaining insertion ordering by running dict.fromkeys(seq).

* Set operations have optimizations that preclude giving a guaranteed order (for example, set intersection loops over the smaller of the two input sets).

* To implement ordering, set objects would have to give up their current membership testing optimization that exploits cache locality in lookups (it looks at several consecutive hashes at a time before jumping to the next random position in the table).

* The ordering we have for dicts uses a hash table that indexes into a sequence. That works reasonably well for typical dict operations but is unsuitable for set operations where some common use cases make interspersed additions and deletions (that is why the LRU cache still uses a cheaply updated doubly-linked list rather than deleting and reinserting dict entries).

* This idea has been discussed a couple times before and we've decided not to go down this path. I should document this prominently because it is inevitable that it will be suggested periodically because it is such an obvious thing to consider.
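To illustrate the intersection point in the list above, here is a pure-Python sketch (not CPython's C implementation; the function name `intersection` is only for illustration). Looping over the smaller operand keeps the number of lookups at min(len(a), len(b)), but it also means the order in which elements reach the result depends on which operand is smaller, not on which one is on the left.

```python
def intersection(a, b):
    """Illustrative pure-Python intersection; not CPython's actual code."""
    # Iterate over the smaller operand so that only min(len(a), len(b))
    # membership tests are needed.
    small, large = (a, b) if len(a) <= len(b) else (b, a)
    return {x for x in small if x in large}


big = set(range(100_000))
tiny = {5, 99_999, -1}

# Either argument order performs three lookups, not a hundred thousand.
assert intersection(big, tiny) == intersection(tiny, big) == {5, 99_999}
```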

Raymond


Guido van Rossum


Larry Hastings

> On Dec 15, 2019, at 6:48 PM, Larry Hastings <la...@hastings.org> wrote:
>
>> As of 3.7, dict objects are guaranteed to maintain insertion order. But set objects make no such guarantee, and AFAIK in practice they don't maintain insertion order either. Should they?
>
> I don't think they should. Several thoughts:
>
> * The corresponding mathematical concept is unordered and it would be weird to impose such an order.

I disagree--I assert it's no more or less weird than imposing order on dicts, which we eventually decided to do.

> * You can already get membership testing while retaining insertion ordering by running dict.fromkeys(seq).

I'm not sure where you're going with this. The argument "you can do this with other objects" didn't preclude us from, for example, adding ordering to the dict object. We already had collections.OrderedDict, and we decided to add the functionality to the dict object.

> * Set operations have optimizations that preclude giving a guaranteed order (for example, set intersection loops over the smaller of the two input sets).
>
> * To implement ordering, set objects would have to give up their current membership testing optimization that exploits cache locality in lookups (it looks at several consecutive hashes at a time before jumping to the next random position in the table).
>
> * The ordering we have for dicts uses a hash table that indexes into a sequence. That works reasonably well for typical dict operations but is unsuitable for set operations where some common use cases make interspersed additions and deletions (that is why the LRU cache still uses a cheaply updated doubly-linked list rather than deleting and reinserting dict entries).

These are all performance concerns. As I mentioned previously in

this thread, in my opinion we should figure out what semantics we

want for the object, then implement those, and only after that

should we worry about performance. I think we should decide the

question "should set objects maintain insertion order?" literally

without any consideration about performance implications.

> * This idea has been discussed a couple times before and we've decided not to go down this path. I should document prominently because it is inevitable that it will be suggested periodically because it is such an obvious thing to consider.

Please do!

In the email quoted by Tim Peters earlier in the thread, you

stated that set objects "lose their flexibility" if they maintain

order. Can you be more specific about what you meant by that?

/arry

p.s. To be clear, I'm not volunteering to add this feature to the set object implementation--that's above my pay grade. But I'm guessing that, if we decided we wanted these semantics for the set object, someone would volunteer to implement it.

Inada Naoki

>

> Actually, for dicts the implementation came first.

>

https://github.com/methane/cpython/pull/23

Regards,


Serhiy Storchaka

> set objects make no such guarantee, and AFAIK in practice they don't

> maintain insertion order either. Should they?

>

> I do have a use case for this. In one project I maintain a "ready" list

> of jobs; I need to iterate over it, but I also want fast lookup because

> I soemtimes remove jobs when they're subsequently marked "not ready".

> The existing set object would work fine here, except that it doesn't

> maintain insertion order. That means multiple runs of the program with

> the same inputs may result in running jobs in different orders, and this

> instability makes debugging more difficult. I've therefore switched

> from a set to a dict with all keys mapped to None, which provides all

> the set-like functionality I need.

>

> ISTM that all the reasons why dicts should maintain insertion order also

> apply to sets, and so I think we should probably do this. Your thoughts?

implementation", because it saves memory in common cases. That was the initial reason for writing it. At the same time there was a need for ordered dicts for kwargs and class namespaces. We discovered that a slightly modified compact dict implementation preserves order, and that its possible drawback (a performance penalty) is too small to matter, if it exists at all.

But an ordered set implementation is not more compact than the current set implementation (because for dicts the item is 3-word, but for sets it is 2-word). Also the current set implementation has some optimizations that the dict implementation never had, which would be lost in an ordered set implementation.

Take into account that sets are far less used than dicts, and that you can use a dict if you need an ordered set-like object.


Petr Viktorin

[...]

> These are all performance concerns. As I mentioned previously in this

> thread, in my opinion we should figure out what semantics we want for

> the object, then implement those, and only after that should we worry

> about performance. I think we should decide the question "should set

> objects maintain insertion order?" literally without any consideration

> about performance implications.

actually better, semantics-wise.

Originally, making dicts ordered was all about performance (or rather

memory efficiency, which falls in the same bucket.) It wasn't added

because it's better semantics-wise.

Here's one (very simplified and maybe biased) view of the history of dicts:

* 2.x: Dicts are unordered, please don't rely on the order.

* 3.3: Dict iteration order is now randomized. We told you not to rely

on it!

* 3.6: We now use an optimized implementation of dict that saves memory!

As a side effect it makes dicts ordered, but that's an implementation

detail, please don't rely on it.

* 3.7: Oops, people are now relying on dicts being ordered. Insisting on

people not relying on it is battling windmills. Also, it's actually

useful sometimes, and alternate implementations can support it pretty

easily. Let's make it a language feature! (Later it turns out

MicroPython can't support it easily. Tough luck.)

By itself, "we already made dicts do it" is not a great argument in the

set *semantics* debate.

Of course, it may turn out ordering was a great idea semantics-wise as

well, but if I'm reading correctly, so far this thread has one piece of

anecdotal evidence for that.


Guido van Rossum

{'baz': 3, 'foo': 1, 'bar': 2}

>>>

Steve Dower

> Originally, making dicts ordered was all about performance (or rather

> memory efficiency, which falls in the same bucket.) It wasn't added

> because it's better semantics-wise.

> Here's one (very simplified and maybe biased) view of the history of dicts:

>

> * 2.x: Dicts are unordered, please don't rely on the order.

> * 3.3: Dict iteration order is now randomized. We told you not to rely

> on it!

> * 3.6: We now use an optimized implementation of dict that saves memory!

> As a side effect it makes dicts ordered, but that's an implementation

> detail, please don't rely on it.

> * 3.7: Oops, people are now relying on dicts being ordered. Insisting on

> people not relying on it is battling windmills. Also, it's actually

> useful sometimes, and alternate implementations can support it pretty

> easily. Let's make it a language feature! (Later it turns out

> MicroPython can't support it easily. Tough luck.)

implementation because it can't maintain insertion order - Yury is

currently proposing it as FrozenMap in PEP 603.

https://discuss.python.org/t/pep-603-adding-a-frozenmap-type-to-collections/2318

Codifying semantics isn't always the kind of future-proof we necessarily

want to have :)

Cheers,

Steve


David Mertz

> * The corresponding mathematical concept is unordered and it would be weird to impose such an order.


Barry Warsaw

I’m fine with a decision not to change the ordering semantics of sets, but we should update the Language Reference to describe the language guarantees for both sets and dicts. The Library Reference does document the ordering semantics for both dicts and sets, but I wouldn’t say that information is easy to find. Maybe we can make the latter more clear too.

Cheers,

-Barry


Tim Peters

> ...

opportunities": theoretical ones that may or may not be exploited

some day, and those that CPython has _already_ exploited.

That is, we don't have a blank slate here. As Raymond said, the set

implementation already diverged from the dict implementation in

fundamental ways for "go fast" reasons. How much are people willing

to see set operations slow down to get this new feature?

For me, "none" ;-) Really. I have no particular use for "ordered"

sets, but have set-slinging code that benefits _today_ from the "go

fast" work Raymond did for them.

Analogy: it was always obvious that list.sort() is "better" stable

than not, but I ended up crafting a non-stable samplesort variant that

ran faster than any stable sort anyone tried to write. For years. So

we stuck with that, to avoid slowing down sorting across releases.

The stable "timsort" finally managed to be _as_ fast as the older

samplesort in almost all cases, and was very much faster in many

real-world cases. "Goes faster" was the thing that really sold it,

and so much so that its increased memory use didn't count for much

against it in comparison.

Kinda similarly, "ordered dicts" were (as has already been pointed

out) originally introduced as a memory optimization ("compact" dicts),

where the "ordered" part fell out more-or-less for free. The same

approach wouldn't save memory for sets (or so I'm told), so the

original motivation for compact dicts doesn't carry over.

So I'm +1 on ordered sets if and only if there's an implementation

that's at least as fast as what we already have. If not now, then,

like ordered dicts evolved, offer a slower OrderedSet type in the

`collections` module for those who really want it, and wait for magic

;-)
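As a rough idea of what such a slower type might look like, here is a sketch of a hypothetical `OrderedSet` built on top of a dict via `collections.abc.MutableSet`. No such class exists in the `collections` module; the name and design are assumptions for illustration only, and the sketch makes no attempt to match the built-in set's speed.

```python
from collections.abc import MutableSet


class OrderedSet(MutableSet):
    """Hypothetical insertion-ordered set backed by a dict (keys only)."""

    def __init__(self, iterable=()):
        self._items = dict.fromkeys(iterable)

    def __contains__(self, item):
        return item in self._items

    def __iter__(self):
        return iter(self._items)

    def __len__(self):
        return len(self._items)

    def add(self, item):
        # Re-adding an existing item keeps its original position.
        self._items[item] = None

    def discard(self, item):
        self._items.pop(item, None)

    def __repr__(self):
        return f"OrderedSet({list(self._items)!r})"


s = OrderedSet(["b", "a", "c", "a"])
s.add("d")
s.discard("a")
print(s)                      # OrderedSet(['b', 'c', 'd'])

# The MutableSet mixins supply |, &, -, ^ and comparisons; here | simply
# lists the left operand's elements first, whatever the sizes.
print(s | OrderedSet(["z"]))  # OrderedSet(['b', 'c', 'd', 'z'])
```

Because the binary operators come from the generic mixins, they iterate element by element and get none of the size-based shortcuts the built-in set uses.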

BTW, what should

{1, 2} | {3, 4, 5, 6, 7}

return as ordered sets? Beats me.; The imbalance between the

operands' cardinalities can be arbitrarily large, and "first make a

copy of the larger one, then loop over the smaller one" is the obvious

way to implement union to minimize the number of lookups needed. The

speed penalty for, e.g., considering "the left" operand's elements'

insertion orders to precede all "the right" operand's elements'

insertion orders can be arbitrarily large.

The concept of "insertion order" just doesn't make well-defined sense

to me for any operation that produces a set from multiple input sets,

unless it means no more than "whatever order happens to be used

internally to add elements to the result set". Dicts don't have

anything like that, although dict.update comes close, but in that case

the result is defined by mutating the dict via a one-at-a-time loop

over the argument dict.


Victor Stinner

applied to set. I had the same idea :-)

If it reduces the memory footprint, keeps insertion order, and has low

performance overhead, it would be an interesting idea!

Victor



David Cuthbert via Python-Dev

On Mon 12/16/19, 9:59 AM, "David Mertz" <me...@gnosis.cx> wrote:

Admittedly, I was only lukewarm about making an insertion-order guarantee for dictionaries too. But for sets I feel more strongly opposed. Although it seems unlikely now, if some improved implementation of sets had the accidental side effects of making them ordered, I would still not want that to become a semantic guarantee.

Eek… No accidental side effects whenever possible, please. People come to rely upon them (like that chemistry paper example[*]), and changing the assumptions results in a lot of breakage down the line. Changing the length of AWS identifiers (e.g. instances from i-1234abcd to i-0123456789abcdef) was a huge deal; even though the identifier length was never guaranteed, plenty of folks had created database schemata with VARCHAR(10) for instance ids, for example.

Break assumptions from day 1. If some platforms happen to return sorted results, sort the other platforms or toss in a sorted(key=lambda el: random.random()) call on the sorting platform. If you’re creating custom identifiers, allocate twice as many bits as you think you’ll need to store them.

Yes, this is bad user code, but I’m all for breaking bad user code in obvious ways sooner rather than subtle ways later, especially in a language like Python.

David Mertz

On Mon 12/16/19, 9:59 AM, "David Mertz" <me...@gnosis.cx> wrote:

If some improved implementation of sets had the accidental side effects of making them ordered, I would still not want that to become a semantic guarantee.

Eek… No accidental side effects whenever possible, please. People come to rely upon them (like that chemistry paper example[*]), and changing the assumptions results in a lot of breakage down the line.

Larry Hastings

> BTW, what should
>
> {1, 2} | {3, 4, 5, 6, 7}
>
> return as ordered sets? Beats me.;

The obvious answer is {1, 2, 3, 4, 5, 6, 7}. Which is the result

I got in Python 3.8 just now ;-) But that's just a side-effect of

how hashing numbers works, the implementation, etc. It's rarely

stable like this, and nearly any other input would have resulted

in the scrambling we all (currently) expect to see.

>>> {"apples", "peaches", "pumpkin pie"} | {"who's", "not", "ready", "holler", "I" }

{'pumpkin pie', 'peaches', 'I', "who's", 'holler', 'ready', 'apples', 'not'}

/arry

Tim Peters

>

> {1, 2} | {3, 4, 5, 6, 7}

>

> return as ordered sets? Beats me.;

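    def union(smaller, larger):
        # Swap so that `larger` really is the bigger operand.
        if len(larger) < len(smaller):
            smaller, larger = larger, smaller
        # Copy the larger set once, then add the smaller one's elements.
        result = larger.copy()
        for x in smaller:
            result.add(x)
        return result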

In the example, that would first copy {3, 4, 5, 6, 7}, and then add 1

and 2 (in that order) to it, giving {3, 4, 5, 6, 7, 1, 2} as the

result.
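The two candidate orderings can be compared with dicts standing in for insertion-ordered sets. This is only a sketch: the function names are made up, and nothing here reflects how an ordered set would actually be implemented.

```python
def union_copy_larger(a, b):
    """Copy the larger operand, then add the smaller one's elements."""
    smaller, larger = (a, b) if len(a) <= len(b) else (b, a)
    result = dict(larger)      # keeps the larger operand's order
    result.update(smaller)     # new keys are appended at the end
    return result


def union_left_first(a, b):
    """Always list a's elements first, whatever the sizes."""
    result = dict(a)
    result.update(b)
    return result


# Dicts with None values stand in for ordered sets.
a = dict.fromkeys([1, 2])
b = dict.fromkeys([3, 4, 5, 6, 7])

print(list(union_copy_larger(a, b)))  # [3, 4, 5, 6, 7, 1, 2]
print(list(union_left_first(a, b)))   # [1, 2, 3, 4, 5, 6, 7]
```

The first variant does len(smaller) insertions after one bulk copy; the second may have to insert every element of a much larger right-hand operand one at a time.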

If it's desired that "insertion order" be consistent across runs,

platforms, and releases, then what "insertion order" _means_ needs to

be rigorously defined & specified for all set operations. This was

comparatively trivial for dicts, because there are, e.g., no

commutative binary operators defined on dicts.

If you want to insist that `a | b` first list all the elements of a,

and then all the elements of b that weren't already in a, regardless

of cost, then you create another kind of unintuitive surprise: in

general the result of "a | b" will display differently than the result

of "b | a" (although the results will compare equal), and despite that

the _user_ didn't "insert" anything.


Tim Peters

> ...

> That works reasonably well for typical dict operations but is unsuitable for set

> operations where some common use cases make interspersed additions

> and deletions (that is why the LRU cache still uses a cheaply updated

> doubly-linked list rather than deleting and reinserting dict entries).

ordered dict stores hash+key+value records in a contiguous array with

no "holes". That's ("no holes") where the memory savings comes from.

The "holes" are in the hash table, which only hold indices _into_ the

contiguous array (and the smallest width of C integer indices

sufficient to get to every slot in the array).

"The problem" is that deletions leave _two_ kinds of holes: one in

the hash table, and another in the contiguous vector. The latter

holes cannot be filled with subsequent new hash+key+value records

because that would break insertion order.

So in an app that mixes additions and deletions, the ordered dict

needs to be massively rearranged at times to squash out the holes left

behind by deletions, effectively moving all the holes to "the end",

where they can again be used to reflect insertion order.

Unordered dicts never had to be rearranged unless the total size

changed "a lot", and that remains true of the set implementation. But

in apps mixing adds and deletes, ordered dicts can need massive

internal rearrangement at times even if the total size never changes

by more than 1.

Algorithms doing a lot of mixing of adds and deletes seem a lot more

common for sets than for dicts, and so the ordered dict's basic

implementation _approach_ is a lot less suitable for sets. Or, at

least, that's my best attempt to flesh out Raymond's telegraphic

thinking there.

Note: the holes left by deletions _wouldn't_ be "a problem" _except_

for maintaining insertion order. If we were only after the memory

savings, then on deletion "the last" record in the contiguous array

could be moved into the hole at once, leaving the array hole-free

again. But that also changes the order. IIRC, that's what the

original "compact dict" _did_ do.
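The bookkeeping described above can be modelled with a toy class. This is a sketch under loud assumptions: a plain dict stands in for the sparse hash table of indices, the compaction threshold is arbitrary, and the class and method names are invented. It is not CPython's implementation, and the two delete methods are alternatives that should not be mixed on one instance.

```python
HOLE = object()  # marks a deleted slot in the dense entries array


class CompactOrderedKeys:
    """Toy model of the "compact" layout: a sparse index plus a dense array."""

    def __init__(self):
        self.index = {}       # key -> position in self.entries
        self.entries = []     # keys in insertion order, possibly with HOLEs
        self.compactions = 0  # how many full rebuilds were needed

    def add(self, key):
        if key not in self.index:
            self.index[key] = len(self.entries)
            self.entries.append(key)  # new keys can only go at the end

    def remove_ordered(self, key):
        # Order-preserving delete: leave a hole that cannot be reused.
        self.entries[self.index.pop(key)] = HOLE
        if len(self.entries) > 2 * len(self.index) + 4:  # arbitrary threshold
            self._compact()

    def remove_swap(self, key):
        # Order-destroying delete: move the last entry into the hole.
        pos = self.index.pop(key)
        last = self.entries.pop()
        if pos < len(self.entries):  # the removed key was not the last entry
            self.entries[pos] = last
            self.index[last] = pos

    def _compact(self):
        # Squash out the holes by rebuilding both structures: O(n) work.
        self.entries = [k for k in self.entries if k is not HOLE]
        self.index = {k: i for i, k in enumerate(self.entries)}
        self.compactions += 1

    def __iter__(self):
        return (k for k in self.entries if k is not HOLE)


# Steady churn: the live size never changes by more than 1, yet the
# order-preserving variant still has to rebuild itself periodically.
s = CompactOrderedKeys()
for k in range(4):
    s.add(k)
for k in range(4, 1000):
    s.remove_ordered(k - 4)
    s.add(k)
print(list(s), s.compactions > 0)  # [996, 997, 998, 999] True

# Swap-delete never needs a rebuild, but it scrambles the order.
t = CompactOrderedKeys()
for k in "abcd":
    t.add(k)
t.remove_swap("a")
print(list(t))  # ['d', 'b', 'c']
```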


Tim Peters

> ...

> memory efficiency, which falls in the same bucket.) It wasn't added

> because it's better semantics-wise.

"compact dict" idea got all the memory savings, but did _not_ maintain

insertion order. Maintaining insertion order too complicated

deletions (see recent message), but was deliberately done because

people really did want "ordered dicts".

> Here's one (very simplified and maybe biased) view of the history of dicts:

>

> * 2.x: Dicts are unordered, please don't rely on the order.

> * 3.3: Dict iteration order is now randomized. We told you not to rely

> on it!

> * 3.6: We now use an optimized implementation of dict that saves memory!

> As a side effect it makes dicts ordered, but that's an implementation

> detail, please don't rely on it.

> * 3.7: Oops, people are now relying on dicts being ordered. Insisting on

> people not relying on it is battling windmills. Also, it's actually

> useful sometimes, and alternate implementations can support it pretty

> easily. Let's make it a language feature! (Later it turns out

> MicroPython can't support it easily. Tough luck.)

implementation doesn't maintain insertion order naturally, _unless_

there are no deletions (which is, e.g., true of dicts constructed to

pass keyword arguments). The code got hairier to maintain insertion

order in the presence of mixing insertions and deletions.


Larry Hastings

> If it's desired that "insertion order" be consistent across runs, platforms, and releases, then what "insertion order" _means_ needs to be rigorously defined & specified for all set operations. This was comparatively trivial for dicts, because there are, e.g., no commutative binary operators defined on dicts.

> If you want to insist that `a | b` first list all the elements of a, and then all the elements of b that weren't already in a, regardless of cost, then you create another kind of unintuitive surprise: in general the result of "a | b" will display differently than the result of "b | a" (although the results will compare equal), and despite that the _user_ didn't "insert" anything.

Call me weird--and I won't disagree--but I find nothing

unintuitive about that. After all, that's already the world we

live in: there are any number of sets that compare as equal but

display differently. In current Python:

>>> a = {'a', 'b', 'c'}

>>> d = {'d', 'e', 'f'}

>>> a | d

{'f', 'e', 'd', 'a', 'b', 'c'}

>>> d | a

{'f', 'b', 'd', 'a', 'e', 'c'}

>>> a | d == d | a

True

This is also true for dicts, in current Python, which of course do maintain insertion order. Dicts don't have the | operator, so I approximate the operation by duplicating the dict (which AFAIK preserves insertion order) and using update.

>>> aa = {'a': 1, 'b': 1, 'c': 1}

>>> dd = {'d': 1, 'e': 1, 'f': 1}

>>> x = dict(aa)

>>> x.update(dd)

>>> y = dict(dd)

>>> y.update(aa)

>>> x

{'a': 1, 'b': 1, 'c': 1, 'd': 1, 'e': 1, 'f': 1}

>>> y

{'d': 1, 'e': 1, 'f': 1, 'a': 1, 'b': 1, 'c': 1}

>>> x == y

True

Since dicts already behave in exactly that way, I don't think it would be too surprising if sets behaved that way too. In fact, I think it's a little surprising that they behave differently, which I suppose was my whole thesis from the beginning.

Cheers,

/arry

Larry Hastings

>> Here's one (very simplified and maybe biased) view of the history of dicts:
>>
>> * 2.x: Dicts are unordered, please don't rely on the order.
>> * 3.3: Dict iteration order is now randomized. We told you not to rely on it!
>> * 3.6: We now use an optimized implementation of dict that saves memory! As a side effect it makes dicts ordered, but that's an implementation detail, please don't rely on it.
>> * 3.7: Oops, people are now relying on dicts being ordered. Insisting on people not relying on it is battling windmills. Also, it's actually useful sometimes, and alternate implementations can support it pretty easily. Let's make it a language feature! (Later it turns out MicroPython can't support it easily. Tough luck.)
>
> A very nice summary! My only quibble is as above: the "compact dict" implementation doesn't maintain insertion order naturally, _unless_ there are no deletions (which is, e.g., true of dicts constructed to pass keyword arguments). The code got hairier to maintain insertion order in the presence of mixing insertions and deletions.

Didn't some paths also get sliiiiightly slower as a result of maintaining insertion order when mixing insertions and deletions? My recollection is that that was part of the debate--not only "are we going to regret inflicting these semantics on posterity, and on other implementations?", but also "are these semantics worth the admittedly-small performance hit, in Python's most important and most-used data structure?".

Also, I don't recall anything about us resigning ourselves to explicitly maintain ordering on dicts because people were relying on it, "battling windmills", etc. Dict ordering had never been guaranteed, a lack of guarantee Python had always taken particularly seriously. Rather, we decided it was a desirable feature, and one worth pursuing even at the cost of a small loss of performance. One prominent Python core developer** wanted this feature for years, and I recall them saying something like:

Guido says, "When a programmer iterates over a dictionary and they see the keys shift around when the dictionary changes, they learn something!" To that I say--"Yes! They learn that Python is unreliable and random!"

/arry

** I won't give their name here because I fear I'm misquoting

everybody involved. Apologies in advance if that's the case!

Tim Peters

>> platforms, and releases, then what "insertion order" _means_ needs to

>> be rigorously defined & specified for all set operations. This was

>> comparatively trivial for dicts, because there are, e.g., no

>> commutative binary operators defined on dicts.

> trivial, but hardly impossible. If this proposal goes anywhere I'd be

> willing to contribute to figuring it out.

docs, and every constraint also constrains possible implementations.

You snipped my example of implementing union, which you should really

think about instead ;-)

>> If you want to insist that `a | b` first list all the elements of a,

>> and then all the elements of b that weren't already in a, regardless

>> of cost, then you create another kind of unintuitive surprise: in

>> general the result of "a | b" will display differently than the result

>> of "b | a" (although the results will compare equal), and despite that

>> the _user_ didn't "insert" anything.

> Call me weird--and I won't disagree--but I find nothing unintuitive about that.

> After all, that's already the world we live in: there are any number of sets

> that compare as equal but display differently. In current Python:

>

> >>> a = {'a', 'b', 'c'}

> >>> d = {'d', 'e', 'f'}

> >>> a | d

> {'f', 'e', 'd', 'a', 'b', 'c'}

> >>> d | a

> {'f', 'b', 'd', 'a', 'e', 'c'}

would never happen when the sets had different cardinalities (the

different sizes are used to force a "standard" order then). For

mathematical sets, | is commutative (it makes no difference to the

result if the arguments are swapped - but can make a _big_ difference

to implementation performance unless the implementation is free to

pick the better order).

> ...

> insertion order. Dicts don't have the | operator, so I approximate the

> operation by duplicating the dict (which AFAIK preserves insertion order)

> and using update.

Too different to be interesting here - update() isn't commutative.

For sets, union, intersection, and symmetric difference are

commutative.
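A quick check of that distinction, using small made-up values: the set operators give equal results with the operands swapped, while dict.update() does not.

```python
a = {1, 2, 3}
b = {3, 4}

# Union, intersection, and symmetric difference are commutative:
# swapping the operands gives an equal result.
assert a | b == b | a
assert a & b == b & a
assert a ^ b == b ^ a

# dict.update() is not: on a shared key, the argument's value wins.
x = {"k": "from x"}
y = {"k": "from y"}

xy = dict(x)
xy.update(y)

yx = dict(y)
yx.update(x)

assert xy != yx
print(xy, yx)  # {'k': 'from y'} {'k': 'from x'}
```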

> ...

> surprising if sets behaved that way too. In fact, I think it's a little surprising

> that they behave differently, which I suppose was my whole thesis from

> the beginning.

now. It just doesn't bother me enough that I'm cool with slowing set

operations to "fix that".


Tim Peters

> insertion order when mixing insertions and deletions?

"ordered dict", deletion all by itself got marginally cheaper. The

downside was the need to rearrange the whole dict when too many

"holes" built up. "Compact (but unordered) dict" doesn't need that.

> My recollection is that that was part of the debate--not only "are we going to

> regret inflicting these semantics on posterity, and on other implementations?",

> but also "are these semantics worth the admittedly-small performance hit, in

> Python's most important and most-used data structure?".

distributed across two arrays. In non-compact unordered dicts,

everything is in a single array. Cache effects may or may not make a

measurable difference then, depending on all sorts of things.

> Also, I don't recall anything about us resigning ourselves to explicitly

> maintain ordering on dicts because people were relying on it, "battling

> windmills", etc. Dict ordering had never been guaranteed, a lack

> of guarantee Python had always taken particularly seriously. Rather, we

> decided it was a desirable feature, and one worth pursuing even at the

> cost of a small loss of performance.

the implementation supplying ordered dicts, and the language

guaranteeing it, was, I suspect, more a matter of getting extensive

real-world experience about whether the possible new need to massively

rearrange dict internals to remove "holes" would bite someone too

savagely to live with. But, again, I wasn't paying attention at the

time.

> One prominent Python core developer** wanted this feature for years, and I recall

> them saying something like:

>

> Guido says, "When a programmer iterates over a dictionary and they see the keys

> shift around when the dictionary changes, they learn something!" To that I say--"Yes!

> They learn that Python is unreliable and random!"

in all, given that _I_ haven't seen a performance degradation, I like

that they're more predictable, and love the memory savings.

But as Raymond said (& I elaborated on), there's reason to believe

that the implementation of ordered dicts is less suited to sets, where

high rates of mixing adds and deletes is more common (thus triggering

high rates of massive internal dict rearranging). Which is why I said

much earlier that I'd be +1 on ordered sets only when there's an

implementation that's as fast as what we have now.

Backing up:

> Python is the language where speed, correctness, and readability trump

> performance every time.

So assuming you didn't intend to type "speed", I think you must have,

say, Scheme or Haskell in mind there. "Practicality beats purity" is

never seen on forums for those languages. Implementation speed & pain

have played huge roles in many Python decisions. As someone who has

wrestled with removing the GIL, you should know that better than

anyone ;-)


Larry Hastings

[Larry]

>> Python is the language where speed, correctness, and readability trump performance every time.

> Speed trumping performance didn't make any sense ;-)

Sorry, that was super unclear! I honestly meant speed of

development.

D'oh,

/arry

Steven D'Aprano

> I think we should decide the question "should set

> objects maintain insertion order?" literally without any consideration

> about performance implications.

> I also want FAST lookup because I sometimes remove jobs when they're

So do you care about performance or not?

:-)

If you do care (a bit) about performance, what slowdown would you be

willing to take to get ordered sets? That would give us a good idea of

the potential trade-offs that might be acceptable.

Without being facetious[1] if you don't care about performance, you

don't need a set, you could use a list.

There's a serious point here: there's nothing sets can do that couldn't

be implemented using lists. The reason we have sets, rather than a

bunch of extra methods like intersection and symmetric_difference on

lists, is because we considered performance.
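To make the performance point concrete, here is a rough sketch (the function names and sample data are illustrative assumptions) of intersection written with lists only, next to the hash-based version. The list version works, but every membership test is a linear scan.

```python
def list_intersection(xs, ys):
    """Intersection using only lists: O(len(xs) * len(ys)) comparisons."""
    result = []
    for x in xs:
        # Each 'in' below is a linear scan of a list.
        if x in ys and x not in result:
            result.append(x)
    return result


def set_intersection(xs, ys):
    """Same elements via hashing: about O(len(xs) + len(ys)) on average."""
    return set(xs) & set(ys)


xs = [3, 1, 4, 1, 5, 9, 2, 6]
ys = [5, 3, 5, 8, 9, 7]

print(list_intersection(xs, ys))  # [3, 5, 9]
assert set(list_intersection(xs, ys)) == set_intersection(xs, ys)
```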

[1] Well... maybe a tiny bit... *wink*

--

Steven


Larry Hastings

> Without being facetious[1] if you don't care about performance, you don't need a set, you could use a list.

Lists don't enforce uniqueness. Apart from that a list would

probably work fine for my needs; in my admittedly-modest workloads

I would probably never notice a performance difference. My

anecdote was merely a jumping-off point for the discussion.

"I don't care about performance" is not because I'm aching for

Python to run my code slowly. It's because I'm 100% confident

that the Python community will lovingly optimize the

implementation. So when I have my language designer hat on, I

really don't concern myself with performance. As I thought I said

earlier in the thread, I think we should figure out the semantics

we want first, and then we figure out how to make

it fast.

I'll also cop to "a foolish consistency is the hobgoblin of little minds". I lack this strongly mathematical view of sets others have espoused; instead I view them more like "dicts without values". I'm therefore disgruntled by this inconsistency between what I see as closely related data structures, and it makes sense to me that they'd maintain their insertion order the same way that dictionaries now do.

/arry

Paul Moore

> I lack this strongly mathematical view of sets others have espoused; instead I view them more like "dicts without values". I'm therefore disgruntled by this inconsistency between what I see as closely related data structures, and it makes sense to me that they'd maintain their insertion order the same way that dictionaries now do.

I'll admit to a mathematical background, which probably influences my

views. But I view sets as "collections" of values - values are "in"

the set or not. I don't see them operationally, in the sense that you

add and remove things from the set. Adding and removing are mechanisms

for changing the set, but they aren't fundamentally what sets are

about (which for me is membership). So insertion order on sets is

largely meaningless for me (it doesn't matter *how* it got there, what

matters is whether it's there now).

Having said that, I also see dictionaries as mappings from key to

value, so insertion order is mostly not something I care about for

dictionaries either. I can see the use cases for ordered dictionaries,

and now we have insertion order, it's useful occasionally, but it's

not something that was immediately obvious to me based on my

intuition. Similarly, I don't see sets as *needing* insertion order,

although I concede that there probably are use cases, similar to

dictionaries. The question for me, as with dictionaries, is whether

the cost (in terms of clear semantics, constraints on future

implementation choices, and actual performance now) is justified by

the additional capabilities (remembering that personally, I'd consider

insertion order preservation as very much a niche requirement).

Paul


Ivan Levkivskyi

On Tue, 17 Dec 2019 at 11:13, Larry Hastings <la...@hastings.org> wrote:

> I lack this strongly mathematical view of sets others have espoused; instead I view them more like "dicts without values". I'm therefore disgruntled by this inconsistency between what I see as closely related data structures, and it makes sense to me that they'd maintain their insertion order the same way that dictionaries now do.

> I'll admit to a mathematical background, which probably influences my

> views. But I view sets as "collections" of values - values are "in"

> the set or not. I don't see them operationally, in the sense that you

> add and remove things from the set. Adding and removing are mechanisms

> for changing the set, but they aren't fundamentally what sets are

> about (which for me is membership). So insertion order on sets is

> largely meaningless for me (it doesn't matter *how* it got there, what

> matters is whether it's there now).

>

> Having said that, I also see dictionaries as mappings from key to

> value, so insertion order is mostly not something I care about for

> dictionaries either. I can see the use cases for ordered dictionaries,

> and now we have insertion order, it's useful occasionally, but it's

> not something that was immediately obvious to me based on my

> intuition. Similarly, I don't see sets as *needing* insertion order,

> although I concede that there probably are use cases, similar to

> dictionaries. The question for me, as with dictionaries, is whether

> the cost (in terms of clear semantics, constraints on future

> implementation choices, and actual performance now) is justified by

> the additional capabilities (remembering that personally, I'd consider

> insertion order preservation as very much a niche requirement).

Serhiy Storchaka

> remove duplicates

> from the list while preserving the order of first appearance.

> This is for example needed to get stability in various type-checking

> situations (like union items, type variables in base classes, type

> queries etc.)

>

> One can write a four line helper to achieve this, but I can see that

> having order preserving set could be useful.

> So again, it is "nice to have but not really critical".

list(dict.fromkeys(iterable))
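As a small illustration of the one-liner above (the wrapper name and sample data are assumptions made for this sketch): since dicts keep insertion order as of 3.7, dict.fromkeys() keeps the first appearance of each item and drops later repeats. Items must be hashable.

```python
def unique_in_order(iterable):
    """Drop duplicates, keeping the order of first appearance."""
    # dict keys are unique and keep insertion order (guaranteed since 3.7).
    return list(dict.fromkeys(iterable))


items = ["b", "a", "b", "c", "a"]
print(unique_in_order(items))  # ['b', 'a', 'c']
```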


Tim Peters

[Larry]

>> them saying something like:

>>

>> Guido says, "When a programmer iterates over a dictionary and they see the keys

>> shift around when the dictionary changes, they learn something!" To that I say--"Yes!

>> They learn that Python is unreliable and random!"

> in all, given that _I_ haven't seen a performance degradation, I like

> that they're more predictable, and love the memory savings.

He wasn't quoting me, and I didn't think he was. What he did quote

was delightfully snarky enough that I didn't want to snip it, but not

substantial enough to be worth the bother of addressing. So I just

moved on to summarize what I said at the time (i.e., nothing).


Tim Peters

> run my code slowly. It's because I'm 100% confident that the Python

> community will lovingly optimize the implementation.

> So when I have my language designer hat on, I really don't concern myself

> with performance. As I thought I said earlier in the thread, I think we should

> figure out the semantics we want first, and then we figure out how to make it fast.

and dicts were deliberately designed to have dirt simple

implementations, in reaction against ABC's "theoretically optimal"

data structures that were a nightmare to maintain, and had so much

overhead to support "optimality" in all cases that they were, in fact,

much slower in common cases.

There isn't magic waiting to be uncovered here: if you want O(1)

deletion at arbitrary positions in an ordered sequence, a doubly

linked list is _the_ way to do it. That's why, e.g., Raymond said he

still uses a doubly linked list, instead of an ordered dict, in the

LRU cache implementation. If that isn't clear, a cache can be

maintained in least-to-most recently accessed order with an ordered

dict like so:

    if key in cache:
        cached_value = cache.pop(key) # remove key
    else:
        compute cached_value
        assert key not in cache
    cache[key] = cached_value # most recently used at the end now
    return cached_value

and deleting the least recently used is just "del

cache[next(iter(cache))]" (off topic, just noting this is a fine use

for the "first()" function that's the subject of a different thread).
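The fragment above can be fleshed out into something runnable. This is only a sketch under stated assumptions: the class name DictLRU, the compute callback, and the maxsize parameter are hypothetical, and this is not how functools.lru_cache is implemented (which, as noted above, uses a doubly linked list).

```python
class DictLRU:
    """Tiny LRU cache kept in least-to-most recently used order in a dict."""

    def __init__(self, compute, maxsize=128):
        self._compute = compute    # hypothetical callback: key -> value
        self._maxsize = maxsize
        self._cache = {}

    def get(self, key):
        if key in self._cache:
            value = self._cache.pop(key)          # remove key
        else:
            value = self._compute(key)
            if len(self._cache) >= self._maxsize:
                # The first key in iteration order is the least recently used.
                del self._cache[next(iter(self._cache))]
        self._cache[key] = value                  # most recently used at the end
        return value

    def keys(self):
        return list(self._cache)


lru = DictLRU(lambda k: k * k, maxsize=2)
lru.get(1)
lru.get(2)
lru.get(1)         # touching 1 makes 2 the least recently used
lru.get(3)         # cache is full, so 2 is evicted
print(lru.keys())  # [1, 3]
```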

We _could_ structure an ordered dict's hash+key+value records as a

doubly linked list instead (and avoid the need for O(N)

reorganizations). But then we piss away much of the memory savings

(to store the new links) that was the _point_ of compact dicts to

begin with.

So there was a compromise. No links, deletion on its own is O(1), but

can periodically require O(N) under-the-covers table reorganizations

to squash out the holes. "Suitable enough" for ordered dicts, but

_thought_ to be unsuitable for ordered sets (which appear to have

higher rates of mixing deletions with insertions - the LRU cache

example being an exception).

But there are also other optimizations in the current set

implementation, so "fine, add the doubly linked list to sets but not

to dicts" is only part of it.

Which may or may not be possible to match, let alone beat, in an

ordered set implementation. A practical barrier now is that Python is

too mature to bank on loving optimizations _after_ a change to a core

feature is released. It's going to need a highly polished

implementation first.

I certainly don't object to anyone trying, but it won't be me ;-)


Nick Coghlan

> But there are also other optimizations in the current set

> implementation, so "fine, add the doubly linked list to sets but not

> to dicts" is only part of it.

>

> Which may or may not be possible to match, let alone beat, in an

> ordered set implementation. A practical barrier now is that Python is

> too mature to bank on loving optimizations _after_ a change to a core

> feature is released. It's going to need a highly polished

> implementation first.


Tim Peters

> Starting with "collections.OrderedSet" seems like a reasonable idea,

> though - that way "like a built-in set, but insertion order preserving" will

> have an obvious and readily available answer, and it should also

> make performance comparisons easier.

The problem is that the "use case" here isn't really a matter of

functionality, but of consistency: "it would be nice if", like dicts

enjoy now, the iteration order of sets was defined in an

implementation-independent way. That itch isn't scratched unless the

builtin set type defines it.


Wes Turner

- https://github.com/methane/cpython/pull/23/files

- https://pypi.org/search/?q=orderedset

- https://pypi.org/project/orderedset/

- https://code.activestate.com/recipes/576694/

- https://pypi.org/project/ordered-set/

- https://github.com/pandas-dev/pandas/blob/master/pandas/core/indexes/base.py#L172

(pandas' Index types)

- https://pypi.org/project/sortedcollections/

[Ordered] Sets and some applications:

- https://en.wikipedia.org/wiki/Set_(mathematics)

- https://en.wikipedia.org/wiki/Set_notation

- https://en.wikipedia.org/wiki/Set-builder_notation

- https://en.wikipedia.org/wiki/Glossary_of_set_theory

- https://en.wikipedia.org/wiki/Set_(abstract_data_type)

- Comparators

- https://en.wikipedia.org/wiki/Total_order

- https://en.wikipedia.org/wiki/Partially_ordered_set

- https://en.wikipedia.org/wiki/Causal_sets

Nick Coghlan

[Nick Coghlan <ncog...@gmail.com>]

> Starting with "collections.OrderedSet" seems like a reasonable idea,

> though - that way "like a built-in set, but insertion order preserving" will

> have an obvious and readily available answer, and it should also

> make performance comparisons easier.

> Ya, I suggested starting with collections.OrderedSet earlier, but gave up on it.

>

> The problem is that the "use case" here isn't really a matter of

> functionality, but of consistency: "it would be nice if", like dicts

> enjoy now, the iteration order of sets was defined in an

> implementation-independent way. That itch isn't scratched unless the

> builtin set type defines it.

David Mertz

I took Larry's request a slightly different way: he has a use case where he wants order preservation (so built in sets aren't good), but combined with low cost duplicate identification and elimination and removal of arbitrary elements (so lists and collections.deque aren't good). Organising a work queue that way seems common enough to me to be worthy of a stdlib solution that doesn't force you to either separate a "job id" from the "job" object in an ordered dict, or else use an ordered dict with "None" values.

Out[8]: SortedSet(['Drink Coffee', 'Make Breakfast', 'Wake Up'])

In [9]: sortedcontainers.SortedSet(['Wake Up', 'Drink Coffee', 'Make Breakfast'], key=lambda s: s[-1])

Out[9]: SortedSet(['Drink Coffee', 'Wake Up', 'Make Breakfast'], key=<function <lambda> at 0x7f68f5985400>)

In [10]: 'Make Breakfast' in tasks

Out[10]: True

Tim Peters

to be the overwhelming thrust of the _entirety_ of this thread so far,

not Larry's original request.

> he has a use case where he wants order preservation (so built in sets aren't

> good), but combined with low cost duplicate identification and elimination and

> removal of arbitrary elements (so lists and collections.deque aren't good).

> Organising a work queue that way seems common enough to me to be

> worthy of a stdlib solution that doesn't force you to either separate a

> "job id" from the "job" object in an ordered dict, or else use an ordered

> dict with "None" values.

>

> So while it means answering "No" to the "Should builtin sets preserve

> order?" question (at least for now), adding collections.OrderedSet *would*

> address that "duplicate free pending task queue" use case.

_guess_ is that he wouldn't know OrderedSet existed, and, even if he

did, he'd use a dict with None values anyway (because it's less hassle

and does everything he wanted).

But I have to add that I don't know enough about his original use case

to be sure it wasn't "an XY problem":

https://meta.stackexchange.com/questions/66377/what-is-the-xy-problem

"""

The XY problem is asking about your attempted solution rather than

your actual problem.

That is, you are trying to solve problem X, and you think solution Y

would work, but instead of asking about X when you run into trouble,

you ask about Y.

"""

Because I've never had a "job queue" problem where an OrderedSet would

have helped. Can't guess what's different about Larry's problem.


Tim Peters

> most commonly relevant sort order. I'm sure it is for Larry's program, but

> often a work queue might want some other order. Very often queues

> might instead, for example, have a priority number assigned to them.

Indeed, and it makes me more cautious that claims for the _usefulness_

(rather than just consistency) of an ordered set are missing an XY

problem. The only "natural" value of insertion-order ordering for a

dynamic ordered set is that it supports FIFO queues. Well, that's one

thing that a deque already excels at, but much more obviously,

flexibly, and space- and time- efficiently.

For dicts the motivating use cases were much more "static", like

preserving textual order of keyword argument specifications, and

avoiding gratuitous changing of order when round-tripping key-value

encodings like much of JSON.

So what problem(s) would a dynamic ordered set really be aiming at? I

don't know. Consistency & predictability I understand and appreciate,

but little beyond that. Even FIFO ordering is somewhat a PITA, since

`next(iter(set))` is an overly elaborate way to need to spell "get the

first" if a FIFO queue _is_ a prime dynamic use case. And there's no

efficient way at all to access the other end (although I suppose

set.pop() would - like dict.popitem() - change to give a _destructive_

way to get at "the other end").
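For reference, this is how the two ends look today with a dict standing in for an ordered set (a sketch only; the job names are made up). Peeking at the oldest entry needs the next(iter(...)) spelling, and popitem() reaches the newest entry only by removing it.

```python
# A dict with None values standing in for an insertion-ordered set.
pending = dict.fromkeys(["wake up", "drink coffee", "make breakfast"])

# "Get the first": non-destructive, but verbose.
first = next(iter(pending))

# "The other end": popitem() removes and returns the most recently
# inserted (key, value) pair, so this access is destructive.
last, _ = pending.popitem()

print(first)          # wake up
print(last)           # make breakfast
print(list(pending))  # ['wake up', 'drink coffee']
```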


Nick Coghlan

> So what problem(s) would a dynamic ordered set really be aiming at? I

> don't know. Consistency & predictability I understand and appreciate,

> but little beyond that. Even FIFO ordering is somewhat a PITA, since

> `next(iter(set))` is an overly elaborate way to need to spell "get the

> first" if a FIFO queue _is_ a prime dynamic use case. And there's no

> efficient way at all to access the other end (although I suppose

> set.pop() would - like dict.popitem() - change to give a _destructive_

> way to get at "the other end").

Tim Peters

> implementation would support the MutableSequence interface.

requirement. My bet: basic operations would need to change from O(1)

to O(log(N)).

BTW, in previous msgs there are links to various implementations

calling themselves "ordered sets". One of them supplies O(1)

indexing, but at the expense of making deletion O(N) (!):

https://pypi.org/project/ordered-set/

If efficient indexing is really wanted, then the original "use case"

Larry gave was definitely obscuring an XY problem ;-)
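The trade-off described above (O(1) indexing bought with O(N) deletion) can be sketched with a list plus a dict. The class below is a hypothetical illustration written for this purpose; it is not the code or the API of the ordered-set package.

```python
class IndexedOrderedSet:
    """Insertion-ordered set with O(1) indexing and O(N) deletion."""

    def __init__(self, iterable=()):
        self._items = []   # position -> item
        self._index = {}   # item -> position
        for item in iterable:
            self.add(item)

    def add(self, item):
        if item not in self._index:
            self._index[item] = len(self._items)
            self._items.append(item)

    def discard(self, item):
        pos = self._index.pop(item, None)
        if pos is None:
            return
        del self._items[pos]              # O(N): shifts later items left
        for later in self._items[pos:]:   # O(N): renumber shifted items
            self._index[later] -= 1

    def __getitem__(self, i):
        return self._items[i]             # O(1) indexing

    def __contains__(self, item):
        return item in self._index

    def __len__(self):
        return len(self._items)


s = IndexedOrderedSet("abcab")
print(s[0], s[2])  # a c
s.discard("a")
print(s[0], s[1])  # b c
```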


Wes Turner

Inada Naoki

>

> How slow and space-inefficient would it be to just implement the set methods on top of dict?

Speed: Dict doesn't cache the position of the first item. Calling

next(iter(D)) repeatedly is O(N) in worst case.

Space: It wastes 8 bytes per member.

>

> Do dicts lose insertion order when a key is deleted? AFAIU, OrderedDict do not lose insertion order on delete.

>Would this limit the utility of an ordered set as a queue? What set methods does a queue need to have?

would be better maybe).

--

Inada Naoki <songof...@gmail.com>


Peter Wang

> It's not obvious to me that insertion order is even the most obvious or

> most commonly relevant sort order. I'm sure it is for Larry's program, but

> often a work queue might want some other order. Very often queues

> might instead, for example, have a priority number assigned to them.

> Indeed, and it makes me more cautious that claims for the _usefulness_

> (rather than just consistency) of an ordered set are missing an XY

> problem. The only "natural" value of insertion-order ordering for a

> dynamic ordered set is that it supports FIFO queues. Well, that's one

> thing that a deque already excels at, but much more obviously,

> flexibly, and space- and time- efficiently.

Wes Turner

Tim Peters

>> on top of dict?

> next(iter(D)) repeatedly is O(N) in worst case.

See also Raymond's (only) message in this thread. We would also lose

low-level speed optimizations specific to the current set

implementation. And we would need to define what "insertion order"

_means_ for operators (union, intersection, symmetric difference) that

build a new set out of other sets without a user-level "insert" in

sight. However that's defined, it may constrain possible

implementations in ways that are inherently slower (for example, may

require the implementation to iterate over the larger operand where

they currently iterate over the smaller).

And the current set implementation (like the older dict

implementation) never needs to "rebuild the table from scratch" unless

the cardinality of the set keeps growing. As Raymond telegraphically

hinted, the current dict implementation can, at times, in the presence

of deletions, require rebuilding the table from scratch even if the

dict's maximum size remains bounded.

That last can't be seen directly from Python code (rebuilding the

table is invisible at the Python level). Here's a short example:

    d = dict.fromkeys(range(3))
    while True:
        del d[0]
        d[0] = None

Run that with a breakpoint set on dictobject.c's `dictresize()`

function. You'll see that it rebuilds the table from scratch every

3rd time through the loop. In effect, for the purpose of deciding

whether it needs to rebuild, the current dict implementation sees no

difference between adding a new element and deleting an existing

element. Deleting leaves behind "holes" that periodically have to be

squashed out of existence so that insertion order can be maintained in

a dead simple way upon future additions.
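The rebuild itself cannot be observed from Python code, but a bounded variant of the loop shows the visible side of the same mechanism. In this sketch (the three-iteration bound and the orders list are additions for illustration), each delete-then-reinsert puts the key at the end of the iteration order while the dict's size never changes.

```python
d = dict.fromkeys(range(3))
orders = []

for _ in range(3):         # bounded stand-in for the "while True" loop
    del d[0]               # leaves a hole where key 0 used to be
    d[0] = None            # key 0 is appended as the newest entry
    orders.append(list(d))

print(orders)   # [[1, 2, 0], [1, 2, 0], [1, 2, 0]]
print(len(d))   # 3
```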


Inada Naoki

>

> [Wes Turner <wes.t...@gmail.com>]

> >> How slow and space-inefficient would it be to just implement the set methods

> >> on top of dict?

>

> [Inada Naoki <songof...@gmail.com>]

> > Speed: Dict doesn't cache the position of the first item. Calling

> > next(iter(D)) repeatedly is O(N) in worst case.

> > ...

>

> See also Raymond's (only) message in this thread. We would also lose

> low-level speed optimizations specific to the current set

> implementation.

I just meant the performance of the next(iter(D)) is the most critical part

when you implement an ordered set on top of the current dict and use it as a queue.

This code should be O(N), but it's O(N^2) if q is implemented on top

of the dict.

    while q:
        item = q.popleft()

Sorry for the confusion.

>

> And the current set implementation (like the older dict

> implementation) never needs to "rebuild the table from scratch" unless

> the cardinality of the set keeps growing.

It is a bit misleading. If "the cardinality of the set" means len(S),

set requires a rebuild at low frequency if its items are random.

Anyway, it is a smaller problem than next(iter(D)) because the

amortized cost is O(1).

Current dict is not optimized for queue usage.

Regards,

--

Inada Naoki <songof...@gmail.com>


Tim Peters

> when you implement an ordered set on top of the current dict and use it as a queue.

even mention queues in his question:

[Wes Turner <wes.t...@gmail.com>]

>>>> How slow and space-inefficient would it be to just implement the set methods

>>>> on top of dict?

of answers ;-)

> This code should be O(N), but it's O(N^2) if q is implemented on top

> of the dict.

>

> while q:

>     item = q.popleft()

>

> Sorry for the confusion.

contiguous vector, with no "holes" in the middle. Pushing a new item

appends "to the right" (higher index in the vector), while popping an

item leaves "a hole" at the left. But there are no holes "in the

middle" in this case.

So the first real entry is at a higher index with every pop, but

iteration starts at index 0 every time. The more pops there are, the

more holes iteration has to skip over, one at a time, to find the

first real entry remaining.

Until pushing a new item would fall off the right end of the vector.

Then the table is rebuilt from scratch, moving all the real entries to

form a contiguous block starting at index 0 again.
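A minimal sketch of the kind of dict-backed queue under discussion (the class name DictQueue and its methods are hypothetical). It behaves correctly as a duplicate-free FIFO; the concern raised above is only about cost, because each popleft() restarts iteration from the front of the entry vector and may have to skip the holes left by earlier pops.

```python
class DictQueue:
    """Duplicate-free FIFO queue backed by a plain dict (illustration only)."""

    def __init__(self):
        self._d = {}

    def push(self, item):
        # A new key is appended "to the right"; a key already queued is ignored.
        self._d.setdefault(item, None)

    def popleft(self):
        if not self._d:
            raise IndexError("pop from an empty queue")
        # Iteration starts from the front each time, so after many pops
        # this may skip over a growing run of holes.
        item = next(iter(self._d))
        del self._d[item]
        return item

    def __len__(self):
        return len(self._d)


q = DictQueue()
for job in ["a", "b", "a", "c"]:
    q.push(job)

drained = []
while q:
    drained.append(q.popleft())

print(drained)  # ['a', 'b', 'c']
```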

>> And the current set implementation (like the older dict

>> implementation) never needs to "rebuild the table from scratch" unless

>> the cardinality of the set keeps growing.

> It is a bit misleading. If "the cardinality of the set" means len(S),

> set requires a rebuild at low frequency if its items are random.

>

> Anyway, it is a smaller problem than next(iter(D)) because the

> amortized cost is O(1).

period" is more desirable than "amortized O(1)". This is especially

true when sets get very large.

> Current dict is not optimized for queue usage.

pops, at both ends, always time O(1) period. No holes, no internal

rearrangements, and O(1) "wasted" space.


Larry Hastings

(mixing together messages in the thread, sorry threaded-view

readers)

[Nick]

> I took Larry's request a slightly different way:

> [...]

> Only Larry can answer whether that would meet his perceived need. My _guess_ is that he wouldn't know OrderedSet existed, and, even if he did, he'd use a dict with None values anyway (because it's less hassle and does everything he wanted).

At last, something I can speak knowledgeably about: Larry's use case! Regarding Larry, I'd say

- his use case was small enough that almost anything maintaining

order would have worked, even a list,

- he often does have a pretty good idea what goodies are salted away in the Python standard library, and

- a hypothetical collections.OrderedSet would probably work just

fine. And he'd probably use it too, as that makes the code

clearer / easier to read. If you read code using an OrderedSet,

you know what the intent was and what the semantics are. If you

see code using a dict but setting the values to None, you think

"that's crazy" and now you have a puzzle to solve. Let's hope

those comments are accurate!

Also, speaking personally, at least once (maybe twice?) in this thread folks have asked "would YOU, Mr Larry, really want ordered sets if it meant sets were slightly slower?"

The first thing I'd say is, I'm not sure why people should care about what's best for me. That's sweet of you! But you really shouldn't.

The second thing is, my needs are modest, so the speed hit we're talking about would likely be statistically insignificant, for me.

And the third thing is, I don't really put the set() API through

much of a workout anyway. I build sets, I add and remove items, I

iterate over them, I do the occasional union, and only very rarely

do I use anything else. Even then, when I write code where I

reach for a fancy operation like intersection or

symmetric_difference--what tends to happen is, I have a rethink

and a refactor, and after I simplify my code I don't need the

fancy operations anymore. I can't remember the last time I used

one of these fancy operations where the code really stuck around

for a long time.

So, personally? Sure, I'd take that tradeoff. As already

established, I like that dict objects maintain their order, and I

think it'd be swell if sets maintained their order too. I suspect

the performance hit wouldn't affect me in any meaningful

way, and I could benefit from the order-maintaining semantics. I

bet it'd have other benefits too, like making regression tests

more stable. And my (admittedly uninformed!) assumption is, the

loss of performance would mostly be in these sophisticated

operations that I never seem to use anyway.

But I don't mistake my needs for the needs of the Python

community at large. I'd be mighty interested to read other folks

coming forward and saying, for example, "please don't make set

objects any slower!" and talking us through neat real-world use

cases. Bonus points if you include mind-boggling numbers and

benchmarks!

> As Larry pointed out earlier, ordered dicts posed a problem for MicroPython.

Just a minor correction: that wasn't me, that was Petr Viktorin.

Ten days left until we retire 2.7,

/arry

Kyle Stanley


Message archived at https://mail.python.org/archives/list/pytho...@python.org/message/JYSJ55CWUE2FFK2K2Y75EWE7R7M6ZDGG/

Guido van Rossum

Code of Conduct: http://python.org/psf/codeofconduct/

David Mertz

Message archived at https://mail.python.org/archives/list/pytho...@python.org/message/2KEAXZBQP6YJ2EV4YURQZ7NFFRJZQRFH/

Kyle Stanley

4.878508095000143

4.9192055170024105

7.992674231001729

>>> timeit.timeit("random.randint(0, 999) in d", setup="import random; d = {i: None for i in range(1000)}", number=10_000_000)

8.032404395999038

13.330938750001224

5.788865385999088

6.2428832090008655

>>> timeit.timeit("d.update(other_d)", setup="d = {}; other_d = {i: None for i in range(1000)}", number=1_000_000)

13.031371458000649

5.24364303600305

>>> timeit.timeit("list(d)", setup="d = {i: None for i in range(1000)}", number=1_000_000)

6.303017043999716

Chris Angelico

> Add (much faster for dicts):

> >>> timeit.timeit("s = set(); s.add(0)", number=100_000_000)

> 13.330938750001224

> >>> timeit.timeit("d = {}; d[0] = None", number=100_000_000)

> 5.788865385999088

>>> timeit.timeit("s = set(); s.add(0)", number=100_000_000)

>>> timeit.timeit("d = dict(); d[0] = None", number=100_000_000)

13.044076398015022

>>> timeit.timeit("s = {1}; s.add(0)", number=100_000_000)

9.260965215042233

>>> timeit.timeit("d = {1:2}; d[0] = None", number=100_000_000)

8.75433829985559

When both use the constructor call or both use a literal, the

difference is far smaller. I'd call this one a wash.

ChrisA
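
One way to see where the gap between a literal and a constructor call might come from is to look at the bytecode. This is a minimal sketch, assuming CPython; opcode names differ between versions, so it only checks broad categories, and the helper name `opnames` is made up for illustration.

```python
import dis

def opnames(source):
    """Return the opcode names CPython compiles `source` to (hypothetical helper)."""
    return [ins.opname for ins in dis.get_instructions(source)]

literal_ops = opnames("{}")
call_ops = opnames("dict()")

# The literal is built directly by a dedicated opcode.
print("BUILD_MAP" in literal_ops)

# The constructor needs a name lookup plus a call; exact opcode names
# vary across CPython versions, so only the prefixes are matched here.
print([op for op in call_ops if op.startswith(("LOAD_NAME", "CALL"))])
```

Since there is no empty-set literal, `set()` always pays for the lookup and the call, which is consistent with the timings above.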


Stephen J. Turnbull

> Even though I was the first person in this thread to suggest

> collections.OrderedSet, I'm "meh" about it now. As I read more and played

> with the sortedcollections package, it seemed to me that while I might want

> a set that iterated in a determinate and meaningful order moderately often,

> insertion order would make up a small share of those use cases.

determinate meaningful orders where you would have to do ugly things

to use "sorted" to get that order. Any application where you have an

unreliable message bus feeding a queue (so that you might get

duplicate objects but it's bad to process the same object twice) would

be a potential application of insertion-ordered sets.

Steve
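
The message-bus case can be sketched with a plain dict standing in for an insertion-ordered set. The function name and the message IDs below are invented for illustration; the only thing relied on is that dict keys keep insertion order (guaranteed since 3.7).

```python
def unique_in_arrival_order(messages):
    """Drop duplicates while keeping first-arrival order."""
    # dict keys preserve insertion order; the None values are unused placeholders.
    return list(dict.fromkeys(messages))

# Made-up message IDs, with two duplicates delivered by the unreliable bus.
incoming = ["job-1", "job-2", "job-1", "job-3", "job-2"]
print(unique_in_arrival_order(incoming))  # ['job-1', 'job-2', 'job-3']
```

Sorting could not recover this order unless each message also carried an arrival counter, which is the "ugly things" scenario described above.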


Tim Peters


Tim Peters

Very briefly:

> >>> timeit.timeit("set(i for i in range(1000))", number=100_000)

The collision resolution strategy for sets evolved to be fancier than

for dicts, to reduce cache misses. This is important for sets because

the _only_ interesting thing about an element wrt a set is whether or

not the set contains it. Lookup speed is everything for sets.

If you use a contiguous range of "reasonable" integers for keys, the

integer hash function is perfect: there's never a collision. So any

such test misses all the work Raymond did to speed set lookups.

String keys have sufficiently "random" hashes to reliably create

collisions, though. Cache misses can, of course, have massive effects

on timing.
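
A rough illustration of the difference, assuming CPython and pretending the table has 1024 slots (the real table size for 1000 elements differs; the mask is only for demonstration):

```python
MASK = (1 << 10) - 1  # ASSUMPTION: a 1024-slot table, chosen for illustration

# Small non-negative ints hash to themselves, so a contiguous range
# maps to distinct starting slots.
int_slots = {hash(i) & MASK for i in range(1000)}

# String hashes look random (and are salted per process), so some
# starting slots are shared.
str_slots = {hash(str(i)) & MASK for i in range(1000)}

print(len(int_slots))  # 1000
print(len(str_slots))  # fewer than 1000; the exact count varies per run
```

Every shared starting slot is a collision that the probe sequence has to resolve, and that is the work a contiguous-integer benchmark never exercises.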

> Add (much faster for dicts):

> >>> timeit.timeit("s = set(); s.add(0)", number=100_000_000)

> 13.330938750001224

> >>> timeit.timeit("d = {}; d[0] = None", number=100_000_000)

> 5.788865385999088

In the former case you're primarily measuring the time to look up the

"add" method of sets (that's more expensive than adding 0 to the set).

A better comparison would, e.g., move `add = s.add` to a setup line,

and use plain "add(0)" in the loop.

That's it!
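
The suggested comparison might look like the sketch below. Unlike the quoted timings, it also moves the container creation into `setup`, which is an extra assumption on my part, and the iteration count is reduced so it runs quickly. No particular result is implied; newer interpreters may narrow the gap.

```python
import timeit

N = 1_000_000  # far fewer iterations than the quoted runs

# The s.add attribute lookup happens on every iteration.
t_lookup = timeit.timeit("s.add(0)", setup="s = set()", number=N)

# The bound method is looked up once, in setup.
t_hoisted = timeit.timeit("add(0)", setup="s = set(); add = s.add", number=N)

# Dict store for comparison; it needs no method lookup.
t_dict = timeit.timeit("d[0] = None", setup="d = {}", number=N)

print(f"s.add(0): {t_lookup:.3f}s  add(0): {t_hoisted:.3f}s  d[0] = None: {t_dict:.3f}s")
```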


Kyle Stanley

> difference is far smaller. I'd call this one a wash.

2.1038335599987477

>>> timeit.timeit("dict()", number=100_000_000)

10.225583500003268

Kyle Stanley

> for dicts, to reduce cache misses. This is important for sets because

> the _only_ interesting thing about an element wrt a set is whether or

> not the set contains it. Lookup speed is everything for sets.

> integer hash function is perfect: there's never a collision. So any

> such test misses all the work Raymond did to speed set lookups.

> String keys have sufficiently "random" hashes to reliably create

> collisions, though. Cache misses can, of course, have massive effects

> In the former case you're primarily measuring the time to look up the

> "add" method of sets (that's more expensive than adding 0 to the set).

> A better comparison would, e.g., move `add = s.add` to a setup line,

> and use plain "add(0)" in the loop.

Tim Peters

> ...

> giving it much thought) that the average collision rate would be the

> same for set items and dict keys. Thanks for the useful information.

anymore. The latter use a mix of probe strategies now, "randomish"

jumps (same as for dicts) but also purely linear ("up by 1") probing

to try to exploit L1 cache.

It's not _apparent_ to me that the mix actually helps ;-) I just

trust that Raymond timed stuff until he was sure it does.
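
To make the two probe strategies concrete, here is a toy model. It is not CPython's code: the constants and recurrences are my recollection of the C source and should be treated as assumptions, and the function names are invented.

```python
from itertools import islice

PERTURB_SHIFT = 5  # ASSUMPTION: value recalled from the CPython source
LINEAR_PROBES = 9  # ASSUMPTION: value recalled from the CPython source

def dict_style_probes(h, mask):
    """Yield slot indices using pseudo-random jumps only (h must be >= 0)."""
    perturb = h
    i = h & mask
    while True:
        yield i
        perturb >>= PERTURB_SHIFT
        i = (i * 5 + perturb + 1) & mask

def set_style_probes(h, mask):
    """Yield a slot, then a short run of its neighbours, then jump (h must be >= 0)."""
    perturb = h
    i = h & mask
    while True:
        yield i
        # Neighbouring slots are likely to share a cache line.
        if i + LINEAR_PROBES <= mask:
            for step in range(1, LINEAR_PROBES + 1):
                yield i + step
        perturb >>= PERTURB_SHIFT
        i = (i * 5 + 1 + perturb) & mask

print(list(islice(dict_style_probes(3, 63), 6)))
print(list(islice(set_style_probes(3, 63), 12)))
```

In this model the dict-style sequence jumps around the table at once, while the set-style sequence visits ten adjacent slots before its first jump.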

> ...

> integers. A better comparison to demonstrate the collision differences would

> likely use random strings.

same integer.

> Also, I believe that max "reasonable" integer range of no collision

Any range that does _not_ contain both -2 and -1 (-1 is an annoying

special case, with hash(-1) == hash(-2) == -2), and spans no more than

sys.hash_info.modulus integers. Apart from that, the sign and

magnitude of the start of the range don't matter; e.g.,

>>> len(set(hash(i) for i in range(10**5000, 10**5000 + 1000000)))

1000000

>>> len(set(hash(i) for i in range(-10**5000, -10**5000 + 1000000)))

1000000
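
The two constraints can be checked directly. A small sketch, assuming CPython; the starting value `10**30` is arbitrary.

```python
import sys

M = sys.hash_info.modulus  # 2**61 - 1 on typical 64-bit CPython builds

# -1 is the annoying special case: it shares a hash with -2.
print(hash(-1), hash(-2))

# Integer hashes wrap around at the modulus, so a range spanning more
# than M integers must repeat a hash.
print(hash(M) == hash(0))
print(hash(M + 1) == hash(1))

# A contiguous range shorter than M that avoids the -2/-1 pair has
# all-distinct hashes, wherever it starts.
start = 10**30  # arbitrary start, chosen for illustration
distinct = len({hash(i) for i in range(start, start + 10_000)})
print(distinct)
```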


Tim Peters

>> is (-2305843009213693951, 2305843009213693951), ...

> Any range that does _not_ contain both -2 and -1 (-1 is an annoying

> special case, with hash(-1) == hash(-2) == -2), and spans no more than

> sys.hash_info.modulus integers. Apart from that, the sign and

> magnitude of the start of the range don't matter; e.g.,

>

> >>> len(set(hash(i) for i in range(10**5000, 10**5000 + 1000000)))

> 1000000

> >>> len(set(hash(i) for i in range(-10**5000, -10**5000 + 1000000)))

> 1000000

and sets use only the last N bits to determine the start of the probe

sequence, for some value of N >= 3 (depending on the table size). So

for a table of size a million, the last 20 bits (1000000 ~= 2**20) are

interesting. But same thing:

>>> MASK = (1 << 20) - 1

>>> len(set(hash(i) & MASK for i in range(-10**5000, -10**5000 + 1000000)))

1000000

>>> len(set(hash(i) & MASK for i in range(-10**5000, -10**5000 + 1000000)))


Larry Hastings

Chris Angelico wrote:

> I think this is an artifact of Python not having an empty set literal.

> [snip]

> When both use the constructor call or both use a literal, the

> difference is far smaller. I'd call this one a wash.

Ah, good catch. I hadn't considered that it would make a substantial difference, but that makes sense. Here's an additional comparison between "{}" and "dict()" to confirm it:

>>> timeit.timeit("{}", number=100_000_000)

2.1038335599987477

>>> timeit.timeit("dict()", number=100_000_000)

10.225583500003268

We could also go the other way with it, set / dict-map-to-dontcare with one element, because that way we can have a set literal:

>>> timeit.timeit("set()", number=100_000_000)

8.579900023061782

>>> timeit.timeit("dict()", number=100_000_000)

10.473437276901677

>>> timeit.timeit("{0}", number=100_000_000)

5.969995185965672

>>> timeit.timeit("{0:0}", number=100_000_000)

6.24465325800702

(ran all four of those just so you see a relative sense on my

laptop, which I guess is only sliiiightly slower than Kyle's)

Happy holidays,

/arry

Wes Turner


Tim Peters

> anymore. The latter use a mix of probe strategies now, "randomish"

> jumps (same as for dicts) but also purely linear ("up by 1") probing

> to try to exploit L1 cache.

>

> It's not _apparent_ to me that the mix actually helps ;-) I just

> trust that Raymond timed stuff until he was sure it does.

pay off. Output (3.8.1, Win 10 Pro, quad core box with plenty of

RAM):

11184810 nice keys

dict build 0.54

dict iter 0.07

dict lookup 0.81

set build 0.54

set iter 0.08

set lookup 0.82

11184810 nasty keys

dict build 23.32

dict iter 0.09

dict lookup 13.26

set build 9.79

set iter 0.28

set lookup 8.46

I didn't make heroic efforts to keep the machine quiet, and there's

substantial variation across runs. For example, there's no real

difference between "0.07" and "0.08".
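
The script that produced these numbers is not shown here. The sketch below is only a guess at the general shape of such a comparison: the helper names, the much smaller key count, and especially the choice of "nasty" keys (integers whose low 20 bits are all zero) are my assumptions, so the timings it prints are not comparable to the ones above.

```python
import time

def timed(label, fn):
    """Run fn once, print the elapsed time, and return fn's result."""
    start = time.perf_counter()
    result = fn()
    print(f"{label:<12} {time.perf_counter() - start:.3f}")
    return result

def compare(name, keys):
    """Time build, iteration and lookup for a dict and a set over `keys`."""
    print(len(keys), name, "keys")
    d = timed("dict build", lambda: dict.fromkeys(keys))
    timed("dict iter", lambda: sum(1 for _ in d))
    timed("dict lookup", lambda: all(k in d for k in keys))
    s = timed("set build", lambda: set(keys))
    timed("set iter", lambda: sum(1 for _ in s))
    timed("set lookup", lambda: all(k in s for k in keys))
    return d, s

N = 100_000  # far smaller than the run quoted above

nice = list(range(N))                # contiguous ints: no initial collisions
nasty = [i << 20 for i in range(N)]  # ASSUMPTION: low bits all zero, so every
                                     # key starts probing at the same slot

nice_d, nice_s = compare("nice", nice)
nasty_d, nasty_s = compare("nasty", nasty)
```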

Some things to note:

- The "nice" keys act much the same regardless of whether dict or set.

Dicts have an advantage for iteration "in theory" in the absence of

deletions because there are no "holes" in the area storing the

hash+key+value records. But for these specific keys, the same is true

of sets: the initial hash probes are at indices 0, 1, 2, ...,

len(the_set)-1, and there are no holes in its hash+key records either

(all the empty slots are together "at the right end").

- Sets get a lot of value out of their fancier probe sequence for the

nasty keys. There are lots of collisions, and sets can much more

often resolve them from L1 cache. Better than a factor of 2 for build

time is nothing to sneeze at.