Debezium oracle connector v(1.7.1) Timeout expired while fetching topic metadata


Chaiyapon

Oct 31, 2021, 12:01:49 PM

to debezium

Hello everyone

I use the Debezium Oracle connector. After I created it, I found something like this:

I don't know where the problem is.

Chaiyapon

Oct 31, 2021, 12:03:41 PM

to debezium

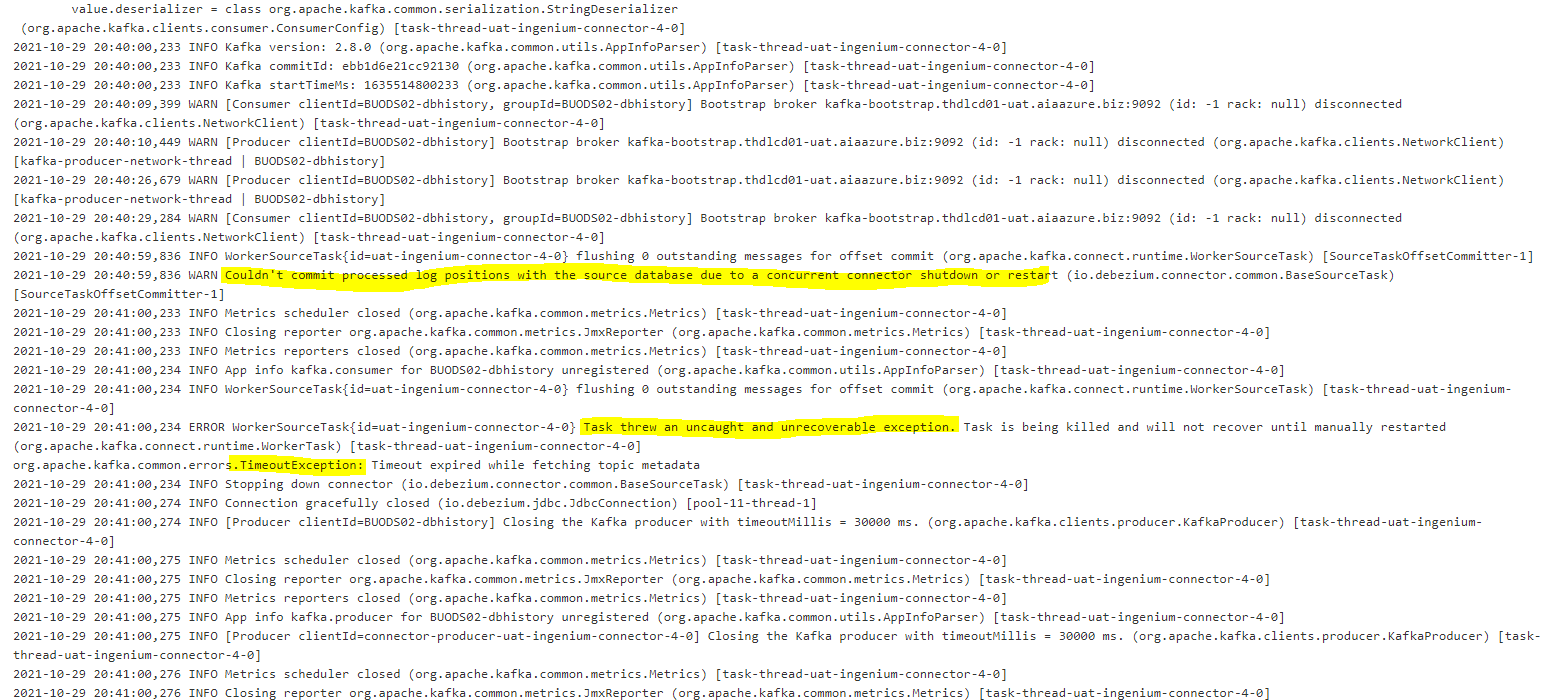

2021-10-29 20:40:00,233 INFO Kafka version: 2.8.0 (org.apache.kafka.common.utils.AppInfoParser) [task-thread-uat-ingenium-connector-4-0]

2021-10-29 20:40:00,233 INFO Kafka commitId: ebb1d6e21cc92130 (org.apache.kafka.common.utils.AppInfoParser) [task-thread-uat-ingenium-connector-4-0]

2021-10-29 20:40:00,233 INFO Kafka startTimeMs: 1635514800233 (org.apache.kafka.common.utils.AppInfoParser) [task-thread-uat-ingenium-connector-4-0]

2021-10-29 20:40:09,399 WARN [Consumer clientId=BUODS02-dbhistory, groupId=BUODS02-dbhistory] Bootstrap broker kafka-bootstrap.thdlcd01-uat.aiaazure.biz:9092 (id: -1 rack: null) disconnected (org.apache.kafka.clients.NetworkClient) [task-thread-uat-ingenium-connector-4-0]

2021-10-29 20:40:10,449 WARN [Producer clientId=BUODS02-dbhistory] Bootstrap broker kafka-bootstrap.thdlcd01-uat.aiaazure.biz:9092 (id: -1 rack: null) disconnected (org.apache.kafka.clients.NetworkClient) [kafka-producer-network-thread | BUODS02-dbhistory]

2021-10-29 20:40:26,679 WARN [Producer clientId=BUODS02-dbhistory] Bootstrap broker kafka-bootstrap.thdlcd01-uat.aiaazure.biz:9092 (id: -1 rack: null) disconnected (org.apache.kafka.clients.NetworkClient) [kafka-producer-network-thread | BUODS02-dbhistory]

2021-10-29 20:40:29,284 WARN [Consumer clientId=BUODS02-dbhistory, groupId=BUODS02-dbhistory] Bootstrap broker kafka-bootstrap.thdlcd01-uat.aiaazure.biz:9092 (id: -1 rack: null) disconnected (org.apache.kafka.clients.NetworkClient) [task-thread-uat-ingenium-connector-4-0]

2021-10-29 20:40:59,836 INFO WorkerSourceTask{id=uat-ingenium-connector-4-0} flushing 0 outstanding messages for offset commit (org.apache.kafka.connect.runtime.WorkerSourceTask) [SourceTaskOffsetCommitter-1]

2021-10-29 20:40:59,836 WARN Couldn't commit processed log positions with the source database due to a concurrent connector shutdown or restart (io.debezium.connector.common.BaseSourceTask) [SourceTaskOffsetCommitter-1]

2021-10-29 20:41:00,233 INFO Metrics scheduler closed (org.apache.kafka.common.metrics.Metrics) [task-thread-uat-ingenium-connector-4-0]

2021-10-29 20:41:00,233 INFO Closing reporter org.apache.kafka.common.metrics.JmxReporter (org.apache.kafka.common.metrics.Metrics) [task-thread-uat-ingenium-connector-4-0]

2021-10-29 20:41:00,233 INFO Metrics reporters closed (org.apache.kafka.common.metrics.Metrics) [task-thread-uat-ingenium-connector-4-0]

2021-10-29 20:41:00,234 INFO App info kafka.consumer for BUODS02-dbhistory unregistered (org.apache.kafka.common.utils.AppInfoParser) [task-thread-uat-ingenium-connector-4-0]

2021-10-29 20:41:00,234 INFO WorkerSourceTask{id=uat-ingenium-connector-4-0} flushing 0 outstanding messages for offset commit (org.apache.kafka.connect.runtime.WorkerSourceTask) [task-thread-uat-ingenium-connector-4-0]

2021-10-29 20:41:00,234 ERROR WorkerSourceTask{id=uat-ingenium-connector-4-0} Task threw an uncaught and unrecoverable exception. Task is being killed and will not recover until manually restarted (org.apache.kafka.connect.runtime.WorkerTask) [task-thread-uat-ingenium-connector-4-0]

org.apache.kafka.common.errors.TimeoutException: Timeout expired while fetching topic metadata

2021-10-29 20:41:00,234 INFO Stopping down connector (io.debezium.connector.common.BaseSourceTask) [task-thread-uat-ingenium-connector-4-0]

2021-10-29 20:41:00,274 INFO Connection gracefully closed (io.debezium.jdbc.JdbcConnection) [pool-11-thread-1]

2021-10-29 20:41:00,274 INFO [Producer clientId=BUODS02-dbhistory] Closing the Kafka producer with timeoutMillis = 30000 ms. (org.apache.kafka.clients.producer.KafkaProducer) [task-thread-uat-ingenium-connector-4-0]

2021-10-29 20:41:00,275 INFO Metrics scheduler closed (org.apache.kafka.common.metrics.Metrics) [task-thread-uat-ingenium-connector-4-0]

2021-10-29 20:41:00,275 INFO Closing reporter org.apache.kafka.common.metrics.JmxReporter (org.apache.kafka.common.metrics.Metrics) [task-thread-uat-ingenium-connector-4-0]

2021-10-29 20:41:00,275 INFO Metrics reporters closed (org.apache.kafka.common.metrics.Metrics) [task-thread-uat-ingenium-connector-4-0]

2021-10-29 20:41:00,275 INFO App info kafka.producer for BUODS02-dbhistory unregistered (org.apache.kafka.common.utils.AppInfoParser) [task-thread-uat-ingenium-connector-4-0]

2021-10-29 20:41:00,275 INFO [Producer clientId=connector-producer-uat-ingenium-connector-4-0] Closing the Kafka producer with timeoutMillis = 30000 ms. (org.apache.kafka.clients.producer.KafkaProducer) [task-thread-uat-ingenium-connector-4-0]

2021-10-29 20:41:00,276 INFO Metrics scheduler closed (org.apache.kafka.common.metrics.Metrics) [task-thread-uat-ingenium-connector-4-0]

2021-10-29 20:41:00,276 INFO Closing reporter org.apache.kafka.common.metrics.JmxReporter (org.apache.kafka.common.metrics.Metrics) [task-thread-uat-ingenium-connector-4-0]

2021-10-29 20:41:00,276 INFO Metrics reporters closed (org.apache.kafka.common.metrics.Metrics) [task-thread-uat-ingenium-connector-4-0]

2021-10-29 20:41:00,276 INFO App info kafka.producer for connector-producer-uat-ingenium-connector-4-0 unregistered (org.apache.kafka.common.utils.AppInfoParser) [task-thread-uat-ingenium-connector-4-0]

2021-10-29 20:41:00,276 INFO App info kafka.admin.client for connector-adminclient-uat-ingenium-connector-4-0 unregistered (org.apache.kafka.common.utils.AppInfoParser) [kafka-admin-client-thread | connector-adminclient-uat-ingenium-connector-4-0]

2021-10-29 20:41:00,276 INFO Metrics scheduler closed (org.apache.kafka.common.metrics.Metrics) [kafka-admin-client-thread | connector-adminclient-uat-ingenium-connector-4-0]

2021-10-29 20:41:00,276 INFO Closing reporter org.apache.kafka.common.metrics.JmxReporter (org.apache.kafka.common.metrics.Metrics) [kafka-admin-client-thread | connector-adminclient-uat-ingenium-connector-4-0]
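The task dies almost exactly one minute after the Kafka clients log their start time. In the Kafka clients, both the producer's max.block.ms and the consumer's default.api.timeout.ms default to 60000 ms, so the gap is consistent with one of the database history clients waiting out its full default timeout for metadata from a broker it never managed to talk to. That is an interpretation, not something the log states. A small hypothetical helper (LogGap is not part of any library) that measures the gap between two timestamps in this log format:

```java
import java.time.Duration;
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

public class LogGap {
    // Timestamp format used by the Kafka Connect log above, e.g. "2021-10-29 20:40:00,233".
    private static final DateTimeFormatter LOG_TS =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss,SSS");

    /** Milliseconds elapsed between two log timestamps. */
    static long elapsedMillis(String from, String to) {
        LocalDateTime start = LocalDateTime.parse(from, LOG_TS);
        LocalDateTime end = LocalDateTime.parse(to, LOG_TS);
        return Duration.between(start, end).toMillis();
    }

    public static void main(String[] args) {
        // "Kafka startTimeMs" line vs. the ERROR line carrying the TimeoutException.
        long gap = elapsedMillis("2021-10-29 20:40:00,233", "2021-10-29 20:41:00,234");
        System.out.println(gap + " ms"); // prints "60001 ms"
    }
}
```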

On Sunday, October 31, 2021 at 23:01:49 UTC+7, Chaiyapon wrote:

La familia Diez

Nov 11, 2021, 9:57:53 AM

to debezium

I have the same problem

Did you find the issue?

Gunnar Morling

Nov 11, 2021, 10:09:51 AM

to debe...@googlegroups.com

This error typically indicates a connectivity issue between Kafka Connect and Kafka itself.

--Gunnar

--

You received this message because you are subscribed to the Google Groups "debezium" group.

To unsubscribe from this group and stop receiving emails from it, send an email to debezium+u...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/debezium/a14ea67a-3a29-4235-92c3-6fdf1c5008c0n%40googlegroups.com.

Chris Cranford

Nov 11, 2021, 10:12:27 AM

to debe...@googlegroups.com, La familia Diez

Hi Chaiyapon et al.,

The error "Timeout expired while fetching topic metadata" can occur for a variety of reasons, but it normally happens when there is a problem communicating with the Kafka broker. This can be because the broker expects SSL connections but you didn't specify that in the connector's configuration, or it could simply be due to network problems. What is your connector configuration, and does the broker require SSL?

Chris
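Since both replies point at connectivity between the Connect worker and the broker, a first check is whether the worker host can open a TCP connection to each bootstrap address at all. The sketch below is a hypothetical helper (BootstrapProbe is not part of Kafka or Debezium). It only proves TCP reachability and does not exercise TLS or SASL: a broker that expects SASL_SSL will still accept the socket here, and then drop a client configured for plaintext, which typically surfaces as the "Bootstrap broker ... disconnected" warnings seen in the logs.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.util.ArrayList;
import java.util.List;

public class BootstrapProbe {
    /** Splits a bootstrap.servers value such as "host1:9093,host2:9093" into unresolved addresses. */
    static List<InetSocketAddress> parse(String bootstrapServers) {
        List<InetSocketAddress> result = new ArrayList<>();
        for (String entry : bootstrapServers.split(",")) {
            String trimmed = entry.trim();
            int colon = trimmed.lastIndexOf(':');
            if (colon <= 0 || colon == trimmed.length() - 1) {
                throw new IllegalArgumentException("Expected host:port but got: " + trimmed);
            }
            String host = trimmed.substring(0, colon);
            int port = Integer.parseInt(trimmed.substring(colon + 1));
            // Unresolved: no DNS lookup happens while parsing.
            result.add(InetSocketAddress.createUnresolved(host, port));
        }
        return result;
    }

    /** True if a plain TCP connection (no TLS, no SASL) succeeds within timeoutMs. */
    static boolean reachable(InetSocketAddress address, int timeoutMs) {
        try (Socket socket = new Socket()) {
            // Resolving here means DNS failures are reported as "unreachable" too.
            socket.connect(new InetSocketAddress(address.getHostString(), address.getPort()), timeoutMs);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Pass the bootstrap.servers value to check; localhost:9092 is only a placeholder default.
        String servers = args.length > 0 ? args[0] : "localhost:9092";
        for (InetSocketAddress address : parse(servers)) {
            System.out.println(address.getHostString() + ":" + address.getPort()
                    + " reachable=" + reachable(address, 5000));
        }
    }
}
```

For example, running it with the broker from the first log (kafka-bootstrap.thdlcd01-uat.aiaazure.biz:9092) on the Connect host tells you whether the problem is at the network level or further up, in the TLS/SASL settings.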


La familia Diez

Nov 16, 2021, 10:37:49 AM

to debezium

Hi Chris, my setup uses Azure Event Hubs (EH) + Debezium Oracle CDC.

When I start Kafka Connect via connect-distributed.sh, it works fine: it connects to EH and creates 3 topics, dbz8-connect-cluster-configs, dbz8-connect-cluster-offsets and dbz8-connect-cluster-status.

The problem appears when I register the connector via the Debezium plugin:

[2021-11-16 14:58:26,561] WARN [dbz8-oracle-connector|task-0|offsets] Couldn't commit processed log positions with the source database due to a concurrent connector shutdown or restart (io.debezium.connector.common.BaseSourceTask:292)

[2021-11-16 14:58:26,788] INFO [dbz8-oracle-connector|task-0] Database Version: Oracle Database 12c Enterprise Edition Release 12.2.0.1.0 - 64bit Production (io.debezium.connector.oracle.OracleConnection:76)

...

[2021-11-16 14:58:26,830] INFO [dbz8-oracle-connector|task-0] Kafka version: 3.0.0 (org.apache.kafka.common.utils.AppInfoParser:119)

[2021-11-16 14:58:26,830] INFO [dbz8-oracle-connector|task-0] Kafka commitId: 8cb0a5e9d3441962 (org.apache.kafka.common.utils.AppInfoParser:120)

[2021-11-16 14:58:26,830] INFO [dbz8-oracle-connector|task-0] Kafka startTimeMs: 1637074706830 (org.apache.kafka.common.utils.AppInfoParser:121)

[2021-11-16 14:58:26,857] WARN [dbz8-oracle-connector|task-0] [Producer clientId=server1-dbhistory] Bootstrap broker xyzeh2.servicebus.windows.net:9093 (id: -1 rack: null) disconnected (org.apache.kafka.clients.NetworkClient:1050)

Looking at the Debezium Java code, that warning is logged here:

    boolean locked = stateLock.tryLock();
    if (locked) {
        try {
            if (coordinator != null && lastOffset != null) {
                coordinator.commitOffset(lastOffset);
            }
        }
        finally {
            stateLock.unlock();
        }
    }
    else {
        LOGGER.warn("Couldn't commit processed log positions with the source database due to a concurrent connector shutdown or restart");
    }

...and the thing is that tryLock() always returns false.
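ReentrantLock.tryLock() never waits: it returns false immediately whenever another thread currently holds the lock. A tryLock() that keeps returning false therefore suggests that some other thread holds stateLock the whole time. The sketch below assumes that stateLock is a java.util.concurrent.locks.ReentrantLock and that the task's start-up path holds it while blocked on Kafka; neither is visible in the snippet above. Under those assumptions the warning would be a symptom of the stalled start-up rather than its cause.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.locks.ReentrantLock;

public class TryLockDemo {
    /**
     * Returns what tryLock() reports while another thread holds the lock,
     * and what it reports after that thread has released it.
     */
    static boolean[] tryLockWhileHeldThenReleased() throws InterruptedException {
        ReentrantLock stateLock = new ReentrantLock();
        CountDownLatch acquired = new CountDownLatch(1);
        CountDownLatch release = new CountDownLatch(1);

        // Stands in for a start-up path that holds the lock while blocked
        // (e.g. waiting for Kafka metadata); this is an assumption, not Debezium code.
        Thread starter = new Thread(() -> {
            stateLock.lock();
            try {
                acquired.countDown();
                release.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            } finally {
                stateLock.unlock();
            }
        });
        starter.start();
        acquired.await();

        // Stands in for the periodic offset-commit thread: fails fast while the lock is held.
        boolean whileHeld = stateLock.tryLock();
        if (whileHeld) {
            stateLock.unlock();
        }

        release.countDown();
        starter.join();

        // Once the holder has released the lock, tryLock() succeeds.
        boolean afterRelease = stateLock.tryLock();
        if (afterRelease) {
            stateLock.unlock();
        }
        return new boolean[] {whileHeld, afterRelease};
    }

    public static void main(String[] args) throws InterruptedException {
        boolean[] result = tryLockWhileHeldThenReleased();
        // prints "while held: false, after release: true"
        System.out.println("while held: " + result[0] + ", after release: " + result[1]);
    }
}
```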

Also, looking at the FLINK-15557 ticket, the error seems to have occurred since Kafka 2.2.2, and they don't mention whether it has been fixed. They say it is related to using an entity-level connection string instead of a namespace-level one, but that is not my case.
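Azure Event Hubs connection strings come in two flavours: namespace-level ones, like the one used in connect-distributed.properties in this thread, and entity-level ones, which additionally carry an EntityPath=<event hub name> segment. A hypothetical helper (not an Azure SDK API) that tells them apart by looking for that segment, assuming the usual semicolon-separated Key=Value layout:

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class EventHubsConnectionString {
    /** Parses "Key1=Value1;Key2=Value2;..." into an ordered map; values may themselves contain '='. */
    static Map<String, String> parse(String connectionString) {
        Map<String, String> parts = new LinkedHashMap<>();
        for (String segment : connectionString.split(";")) {
            if (segment.isBlank()) {
                continue; // tolerate a trailing ';'
            }
            int eq = segment.indexOf('=');
            if (eq <= 0) {
                throw new IllegalArgumentException("Malformed segment: " + segment);
            }
            // Split on the first '=' only, so base64 keys ending in '=' survive.
            parts.put(segment.substring(0, eq).trim(), segment.substring(eq + 1).trim());
        }
        return parts;
    }

    /** True for a namespace-level connection string, i.e. one without an EntityPath segment. */
    static boolean isNamespaceLevel(String connectionString) {
        return !parse(connectionString).containsKey("EntityPath");
    }

    public static void main(String[] args) {
        String namespaceLevel = "Endpoint=sb://xyzeh2.servicebus.windows.net/;"
                + "SharedAccessKeyName=mysasoracle;SharedAccessKey=xxxx";
        String entityLevel = namespaceLevel + ";EntityPath=some-event-hub"; // hypothetical name
        System.out.println(isNamespaceLevel(namespaceLevel)); // true
        System.out.println(isNamespaceLevel(entityLevel));    // false
    }
}
```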

File connect-distributed.properties:

    bootstrap.servers=xyzeh2.servicebus.windows.net:9093
    group.id=dbzconnect8-cluster-group

    # connect internal topic names, auto-created if not exists
    config.storage.topic=dbz8-connect-cluster-configs
    offset.storage.topic=dbz8-connect-cluster-offsets
    status.storage.topic=dbz8-connect-cluster-status

    # internal topic replication factors - auto 3x replication in Azure Storage
    config.storage.replication.factor=1
    offset.storage.replication.factor=1
    status.storage.replication.factor=1

    key.converter=org.apache.kafka.connect.json.JsonConverter
    value.converter=org.apache.kafka.connect.json.JsonConverter
    key.converter.schemas.enable=false
    value.converter.schemas.enable=false

    # required EH Kafka security settings
    security.protocol=SASL_SSL
    sasl.mechanism=PLAIN
    sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="$ConnectionString" password="Endpoint=sb://xyzeh2.servicebus.windows.net/;SharedAccessKeyName=mysasoracle;SharedAccessKey=xxxx";

    producer.security.protocol=SASL_SSL
    producer.sasl.mechanism=PLAIN
    producer.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="$ConnectionString" password="Endpoint=sb://xyzeh2.servicebus.windows.net/;SharedAccessKeyName=mysasoracle;SharedAccessKey=xxxx";

    consumer.security.protocol=SASL_SSL
    consumer.sasl.mechanism=PLAIN
    consumer.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="$ConnectionString" password="Endpoint=sb://xyzeh2.servicebus.windows.net/;SharedAccessKeyName=mysasoracle;SharedAccessKey=xxxx";

    plugin.path=/home/claudio/kafka_2.12-3.0.0/libs
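The sasl.jaas.config value above has to be repeated verbatim for every client prefix, and its nested quotes are easy to break when copying. A hypothetical helper (EventHubsJaas is not part of Kafka or any Azure library) that builds a value of the same shape as the one in this file from a connection string: the PLAIN login module, the literal user name "$ConnectionString", and the connection string as the password.

```java
public class EventHubsJaas {
    /** Builds a sasl.jaas.config value in the same shape as the one used in this thread. */
    static String jaasConfig(String connectionString) {
        // A '"' inside the connection string would terminate the JAAS password early.
        if (connectionString.contains("\"")) {
            throw new IllegalArgumentException("Connection string must not contain double quotes");
        }
        return "org.apache.kafka.common.security.plain.PlainLoginModule required "
                + "username=\"$ConnectionString\" "
                + "password=\"" + connectionString + "\";";
    }

    public static void main(String[] args) {
        String cs = "Endpoint=sb://xyzeh2.servicebus.windows.net/;"
                + "SharedAccessKeyName=mysasoracle;SharedAccessKey=xxxx";
        // Prints the same sasl.jaas.config line as in connect-distributed.properties.
        System.out.println("sasl.jaas.config=" + jaasConfig(cs));
    }
}
```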

File with the Oracle connector properties:

    {
      "name": "dbz8-oracle-connector",
      "config": {
        "connector.class" : "io.debezium.connector.oracle.OracleConnector",
        "database.hostname" : "x.y.z.x",
        "database.port" : "49154",
        "database.user" : "c##dbzuser",
        "database.password" : "dbzxyz",
        "database.dbname" : "ORCLCDB.localdomain",
        "database.pdb.name" : "ORCLPDB1.localdomain",
        "database.connection.adapter": "logminer",
        "database.server.name" : "server1",
        "tasks.max" : "1",
        "database.history.kafka.topic": "schema-changesdbz.inventory",
        "database.history.kafka.bootstrap.servers": "xyzeh2.servicebus.windows.net:9093",
        "snapshot.mode" : "schema_only",
        "schema.include.list" : "OT"
      }
    }
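For reference, a connector definition like this is registered by POSTing the JSON to the /connectors endpoint of the Kafka Connect REST API. A minimal sketch using the JDK's java.net.http client; the JSON file path is passed on the command line, and the worker URL defaults to http://localhost:8083 (Connect's default REST port). Both are assumptions about this particular setup.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.file.Files;
import java.nio.file.Path;

public class RegisterConnector {
    /** Builds POST {connectUrl}/connectors with the connector JSON ({"name": ..., "config": {...}}) as body. */
    static HttpRequest registerRequest(String connectUrl, String connectorJson) {
        return HttpRequest.newBuilder(URI.create(connectUrl + "/connectors"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(connectorJson))
                .build();
    }

    public static void main(String[] args) throws Exception {
        if (args.length == 0) {
            System.out.println("usage: java RegisterConnector <connector.json> [connect-url]");
            return;
        }
        String json = Files.readString(Path.of(args[0]));
        // Assumption: worker REST listener on the default port unless a URL is given.
        String connectUrl = args.length > 1 ? args[1] : "http://localhost:8083";
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(registerRequest(connectUrl, json), HttpResponse.BodyHandlers.ofString());
        // Print the status code and body returned by the worker.
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```

After registration, GET /connectors/dbz8-oracle-connector/status on the same worker reports whether the task is RUNNING or FAILED, including the stack trace of a failure such as the TimeoutException above.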

Chris Cranford

Nov 16, 2021, 12:18:52 PM

to debe...@googlegroups.com, La familia Diez

Hi

Would you do me a favor: set the logging to TRACE or DEBUG, start the connector, let it run until it stops, and then send me the logs in a direct email? I'll send you my email details separately.

Thanks,

Chris
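Since Kafka 2.4 (KIP-495), a Connect worker can also change log levels at runtime through its REST API, via PUT /admin/loggers/{logger} with a body such as {"level": "TRACE"}, which avoids editing connect-log4j.properties and restarting. The change applies to the worker that receives the request and is not persisted across restarts. A sketch, assuming the worker's REST URL is passed as the first argument; the two logger names are simply the ones that look most relevant to this thread.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

public class SetConnectLogLevel {
    /** Builds PUT {connectUrl}/admin/loggers/{logger} with a body like {"level": "TRACE"}. */
    static HttpRequest setLevelRequest(String connectUrl, String logger, String level) {
        String body = "{\"level\": \"" + level + "\"}";
        return HttpRequest.newBuilder(URI.create(connectUrl + "/admin/loggers/" + logger))
                .header("Content-Type", "application/json")
                .PUT(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    public static void main(String[] args) throws Exception {
        if (args.length == 0) {
            System.out.println("usage: java SetConnectLogLevel <connect-url>, e.g. http://localhost:8083");
            return;
        }
        HttpClient client = HttpClient.newHttpClient();
        // Debezium itself at TRACE, plus the Kafka client networking layer at DEBUG.
        String[][] targets = {
                {"io.debezium", "TRACE"},
                {"org.apache.kafka.clients.NetworkClient", "DEBUG"}
        };
        for (String[] target : targets) {
            HttpResponse<String> response = client.send(
                    setLevelRequest(args[0], target[0], target[1]),
                    HttpResponse.BodyHandlers.ofString());
            System.out.println(target[0] + " -> " + response.statusCode() + " " + response.body());
        }
    }
}
```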


La familia Diez

Nov 17, 2021, 11:04:28 AM

to debezium

Found the issue.

It seems that for EH we need to set the following in the connector properties file:

    database.history.consumer.sasl.jaas.config
    database.history.consumer.security.protocol
    database.history.consumer.sasl.mechanism

(and the same for the producer, i.e. the database.history.producer.* equivalents)
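In other words, the worker-level security.*, producer.* and consumer.* settings only configure Kafka Connect's own clients. The database history producer and consumer that the Debezium connector creates read their settings from the connector configuration instead: database.history.kafka.bootstrap.servers plus the pass-through database.history.producer.* and database.history.consumer.* properties. A hypothetical helper (not a Debezium API) that adds the three settings under both prefixes to a connector config map:

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class HistoryClientSecurity {
    // The three client settings the thread identifies as missing for Event Hubs.
    private static final List<String> SECURITY_KEYS =
            List.of("security.protocol", "sasl.mechanism", "sasl.jaas.config");

    /**
     * Returns a copy of connectorConfig with the given client security settings repeated
     * under the database.history.producer.* and database.history.consumer.* prefixes.
     */
    static Map<String, String> withHistorySecurity(Map<String, String> connectorConfig,
                                                   Map<String, String> clientSecurity) {
        Map<String, String> result = new LinkedHashMap<>(connectorConfig);
        for (String prefix : List.of("database.history.producer.", "database.history.consumer.")) {
            for (String key : SECURITY_KEYS) {
                String value = clientSecurity.get(key);
                if (value == null) {
                    throw new IllegalArgumentException("Missing client setting: " + key);
                }
                result.put(prefix + key, value);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, String> config = new LinkedHashMap<>();
        config.put("database.history.kafka.topic", "schema-changesdbz.inventory");
        config.put("database.history.kafka.bootstrap.servers", "xyzeh2.servicebus.windows.net:9093");

        // Same values as the worker settings; the connection string is a placeholder.
        Map<String, String> security = Map.of(
                "security.protocol", "SASL_SSL",
                "sasl.mechanism", "PLAIN",
                "sasl.jaas.config", "org.apache.kafka.common.security.plain.PlainLoginModule required "
                        + "username=\"$ConnectionString\" password=\"<connection string>\";");

        withHistorySecurity(config, security).forEach((k, v) -> System.out.println(k + " = " + v));
    }
}
```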

I found them on Zulip: Rene Rutter had posted his config file, and I realized that those properties were missing from mine.

I thought the worker settings in connect-distributed.properties were enough:

    security.protocol=SASL_SSL
    sasl.mechanism=PLAIN
    sasl.jaas.config=org.apach....
    producer.security.protocol=SASL_SSL
    producer.sasl.mechanism=PLAIN
    producer.sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule.....

Also, in the particular case of EH, you need to create the database.history.kafka.topic manually, regardless of whether you set the automatic topic creation setting, topic.creation.enable, to true.

Thanks a lot, Chris!
