Debezium SQL Server Connector


John T

Sep 6, 2018, 9:42:24 AM

to debezium

Hi,

I followed the tutorial to set up Debezium for SQL Server (using Windows 10):

https://github.com/debezium/debezium-examples/blob/master/tutorial/README.md#using-sql-server

Everything worked fine, but it seems like CDC is not working: I'm only getting the initial table content as output.

When I insert more rows into the database, I don't get any output in the console consumer.

Another, unrelated problem:

I changed the database to an external SQL Server and didn't get any output at all; not even the topics were created after I registered the connector with Kafka Connect.

Do I have to change the docker-compose file in any way to make this work?

Greetings, John
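For the external-server case, the first thing to check is probably the connector configuration rather than the docker-compose file: database.hostname has to be an address the Kafka Connect container can resolve and reach. As a sketch, assuming the configuration keys that appear in the connector config later in this thread, a broker reachable as kafka:9092 (the tutorial's service name), and a history topic name chosen purely for illustration, a registration payload could be built like this; connector_payload is a hypothetical helper:

```python
# Sketch: build a Debezium SQL Server connector registration payload for an
# external database. connector_payload is a hypothetical helper; the config keys
# are the ones used in the connector configuration shown in this thread.
import json


def connector_payload(name: str, host: str, port: int, user: str, password: str,
                      dbname: str, server_name: str, tables: str,
                      kafka: str = "kafka:9092") -> str:
    config = {
        "connector.class": "io.debezium.connector.sqlserver.SqlServerConnector",
        # Must resolve and be reachable from inside the Kafka Connect container
        "database.hostname": host,
        "database.port": str(port),
        "database.user": user,
        "database.password": password,
        "database.dbname": dbname,
        # Logical server name; used as the prefix of the change event topics
        "database.server.name": server_name,
        # Comma-separated regular expressions for schema.table identifiers
        "table.whitelist": tables,
        "database.history.kafka.bootstrap.servers": kafka,
        # Illustrative name for the connector's internal schema history topic
        "database.history.kafka.topic": f"dbhistory.{server_name}",
    }
    return json.dumps({"name": name, "config": config}, indent=2)


if __name__ == "__main__":
    print(connector_payload("external-sqlserver", "sqlserver.example.com", 1433,
                            "debezium", "secret", "testDB", "server1", "dbo.customers"))
```

The printed JSON can be sent with a POST to the /connectors/ endpoint of Kafka Connect (port 8083 in the tutorial), which answers with 201 on success, as in the Connect log further down this thread.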


hansh1212

Sep 6, 2018, 11:06:31 AM

to debezium

Hi John,

I am also trying to set up Debezium for SQL Server and am also running into issues. My setup is quite a bit different, as I have everything running on Minikube. I am using Confluent's Kafka and the 2017 Developer Edition of SQL Server. I am also using either the debezium/connect:0.9 or the debezium/connect:0.9.0Alpha1 container image.

It appears that CDC is working on the database. The topics are being created for the tables; however, they contain no events for changed data. My logs show something like this:

```
org.apache.kafka.connect.errors.ConnectException: An exception ocurred in the change event producer. This connector will be stopped.
    at io.debezium.connector.base.ChangeEventQueue.throwProducerFailureIfPresent(ChangeEventQueue.java:168)
    at io.debezium.connector.base.ChangeEventQueue.poll(ChangeEventQueue.java:149)
    at io.debezium.connector.sqlserver.SqlServerConnectorTask.poll(SqlServerConnectorTask.java:141)
    at org.apache.kafka.connect.runtime.WorkerSourceTask.poll(WorkerSourceTask.java:244)
    at org.apache.kafka.connect.runtime.WorkerSourceTask.execute(WorkerSourceTask.java:220)
    at org.apache.kafka.connect.runtime.WorkerTask.doRun(WorkerTask.java:175)
    at org.apache.kafka.connect.runtime.WorkerTask.run(WorkerTask.java:219)
    at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)
    at java.util.concurrent.FutureTask.run(FutureTask.java:266)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
    at java.lang.Thread.run(Thread.java:748)
Caused by: org.apache.kafka.connect.errors.ConnectException: com.microsoft.sqlserver.jdbc.SQLServerException: Invalid object name 'cdc.fn_cdc_get_all_changes_dbo_ErrorLog'.
    at io.debezium.connector.sqlserver.SqlServerStreamingChangeEventSource.execute(SqlServerStreamingChangeEventSource.java:149)
    at io.debezium.pipeline.ChangeEventSourceCoordinator.lambda$start$0(ChangeEventSourceCoordinator.java:68)
    ... 5 more
Caused by: com.microsoft.sqlserver.jdbc.SQLServerException: Invalid object name 'cdc.fn_cdc_get_all_changes_dbo_ErrorLog'.
    at com.microsoft.sqlserver.jdbc.SQLServerException.makeFromDatabaseError(SQLServerException.java:259)
    at com.microsoft.sqlserver.jdbc.SQLServerStatement.getNextResult(SQLServerStatement.java:1547)
    at com.microsoft.sqlserver.jdbc.SQLServerPreparedStatement.doExecutePreparedStatement(SQLServerPreparedStatement.java:548)
    at com.microsoft.sqlserver.jdbc.SQLServerPreparedStatement$PrepStmtExecCmd.doExecute(SQLServerPreparedStatement.java:479)
    at com.microsoft.sqlserver.jdbc.TDSCommand.execute(IOBuffer.java:7344)
    at com.microsoft.sqlserver.jdbc.SQLServerConnection.executeCommand(SQLServerConnection.java:2713)
    at com.microsoft.sqlserver.jdbc.SQLServerStatement.executeCommand(SQLServerStatement.java:224)
    at com.microsoft.sqlserver.jdbc.SQLServerStatement.executeStatement(SQLServerStatement.java:204)
    at com.microsoft.sqlserver.jdbc.SQLServerPreparedStatement.executeQuery(SQLServerPreparedStatement.java:401)
    at io.debezium.jdbc.JdbcConnection.prepareQuery(JdbcConnection.java:448)
    at io.debezium.connector.sqlserver.SqlServerConnection.getChangesForTables(SqlServerConnection.java:159)
    at io.debezium.connector.sqlserver.SqlServerStreamingChangeEventSource.execute(SqlServerStreamingChangeEventSource.java:84)
    ... 6 more
```

Are you getting something similar in your logs?

BTW, the logs were taken from Landoop's Kafka-Connect-UI (https://github.com/Landoop/kafka-connect-ui), which rocks. It is much easier to work with than making REST calls directly to Kafka Connect.
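The object in the error above is a function that SQL Server generates only when CDC is enabled for the table, so a missing function most likely means that table dbo.ErrorLog has not been enabled for CDC. A minimal sketch of the naming, assuming the default capture instance <schema>_<table> that sys.sp_cdc_enable_table uses when no @capture_instance is passed; cdc_object_names is a hypothetical helper:

```python
# Sketch: the CDC objects SQL Server creates for one table, assuming the
# default capture instance name "<schema>_<table>". cdc_object_names is a
# hypothetical helper, not part of Debezium or SQL Server.
from typing import Dict, Optional


def cdc_object_names(schema: str, table: str,
                     capture_instance: Optional[str] = None) -> Dict[str, str]:
    instance = capture_instance or f"{schema}_{table}"
    return {
        "capture_instance": instance,
        # Change table holding the captured rows, e.g. cdc.dbo_test2_CT
        "change_table": f"cdc.{instance}_CT",
        # Table-valued function the connector queries in the trace above; it only
        # exists after sys.sp_cdc_enable_table has been run for the table
        "all_changes_fn": f"cdc.fn_cdc_get_all_changes_{instance}",
    }


if __name__ == "__main__":
    print(cdc_object_names("dbo", "ErrorLog")["all_changes_fn"])
```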

Jiri Pechanec

Sep 6, 2018, 11:34:49 PM

to debezium

Hi,

Have you enabled CDC for both the database and the tables as described in the docs? https://debezium.io/docs/connectors/sqlserver/#setting-up-sqlserver

Could you please post the list of objects (tables, functions, etc.) in the CDC schema?

Thanks

J.
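The setup referred to here means enabling CDC first for the database and then for each captured table. A minimal sketch that renders the T-SQL script, assuming the database db_mpass and table dbo.test2 that appear later in this thread and a login with sysadmin rights (needed for the database step; the table step needs db_owner). The Python helpers are hypothetical, while sys.sp_cdc_enable_db, sys.sp_cdc_enable_table, and the sys.objects query are standard SQL Server:

```python
# Sketch: render the T-SQL that enables CDC for a database and one table, then
# lists the objects in the cdc schema. The helpers are hypothetical; the stored
# procedures and catalog views they emit are standard SQL Server.


def quote_literal(value: str) -> str:
    """Quote a value as an N'...' Unicode string literal."""
    return "N'" + value.replace("'", "''") + "'"


def quote_ident(name: str) -> str:
    """Quote an identifier with square brackets."""
    return "[" + name.replace("]", "]]") + "]"


def enable_cdc_script(database: str, schema: str, table: str) -> str:
    return "\n".join([
        f"USE {quote_ident(database)};",
        # Requires sysadmin; creates the cdc schema and user in this database
        "EXEC sys.sp_cdc_enable_db;",
        # Requires db_owner; creates the capture instance, change table and functions
        "EXEC sys.sp_cdc_enable_table",
        f"    @source_schema = {quote_literal(schema)},",
        f"    @source_name = {quote_literal(table)},",
        "    @role_name = NULL;",
        # Lists the tables and functions now present in the cdc schema
        "SELECT name, type_desc FROM sys.objects WHERE schema_id = SCHEMA_ID(N'cdc');",
    ])


if __name__ == "__main__":
    print(enable_cdc_script("db_mpass", "dbo", "test2"))
```

The output is meant to be run in SSMS or sqlcmd; the capture job it sets up only does its work while SQL Server Agent is running.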

John T

Sep 7, 2018, 8:47:13 AM

to debezium

OK, seems like I missed this part. I enabled CDC on the table and now it's working fine, which solved one of my problems. Thanks.

CF AWS

Sep 22, 2018, 1:07:44 PM

to debezium

@John, can you please tell us which SQL Server version you are using?

Or, more specifically, will it work with the 2008 version?

Gunnar Morling

Sep 24, 2018, 8:53:43 AM

to debezium

Hi,

+1 Would be cool to hear back from John.

So far we've tested the connector only with the current version of SQL Server; the question is whether the structure of the CDC tables and/or stored procedures is the same in 2008. If there is also a Docker image with that version, we can set up a job for testing against it; if not, we'd have to rely on feedback from the community for the time being.

--Gunnar

John T

Oct 5, 2018, 7:11:36 AM

to debezium

Sorry for the late answer. I'm using SQL Server 2017 and haven't tested it with any other version so far.

Nikhil Mahajan

May 13, 2019, 6:26:29 AM

to debezium

Hi,

I am also facing the same problem. I am using SQL Server 2017 and the Debezium SQL Server connector, versions 0.9.4 and 0.9.5.

I can see my topic in the Kafka topic list. When I consume the topic using kafka-console-consumer, I only see the table schema in the console. Other than that, no message is shown.

Jiri Pechanec

May 13, 2019, 6:28:24 AM

to debezium

Hi,

These are typical symptoms of not having CDC enabled for the table/database, or of not having the agent (SQL Server Agent) running on the instance.

J.

Nikhil Mahajan

May 13, 2019, 6:30:43 AM

to debezium

Hi Jiri,

Thanks for the quick response. However, is_tracked_by_cdc is set to 1 for that table in SQL Server 2017.

Jiri Pechanec

May 13, 2019, 6:37:04 AM

to debezium

Could you please verify that SQL Server Agent (https://docs.microsoft.com/en-us/sql/ssms/agent/sql-server-agent?view=sql-server-2017) is up and running?

J.
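The preconditions mentioned so far (CDC enabled for the database, CDC enabled for the table, SQL Server Agent running) can each be checked with a catalog query. A minimal sketch that lists those queries, assuming the names db_mpass and test2 from this thread; the Python wrapper is hypothetical, sys.dm_server_services needs SQL Server 2008 R2 SP1 or later, and the table and job queries must run inside the captured database:

```python
# Sketch: diagnostic queries for the "only the schema arrives" symptoms.
# cdc_diagnostics is hypothetical; the catalog views and procedures it emits
# are standard SQL Server.
from typing import List, Tuple


def cdc_diagnostics(database: str, table: str) -> List[Tuple[str, str]]:
    db = database.replace("'", "''")
    tbl = table.replace("'", "''")
    return [
        # is_cdc_enabled = 1 when sys.sp_cdc_enable_db has been run
        ("database CDC",
         f"SELECT name, is_cdc_enabled FROM sys.databases WHERE name = N'{db}';"),
        # is_tracked_by_cdc = 1 when sys.sp_cdc_enable_table has been run
        ("table CDC",
         f"SELECT name, is_tracked_by_cdc FROM sys.tables WHERE name = N'{tbl}';"),
        # The capture job only runs while SQL Server Agent is running
        ("agent", "SELECT servicename, status_desc FROM sys.dm_server_services;"),
        # Capture and cleanup jobs registered for the current database
        ("cdc jobs", "EXEC sys.sp_cdc_help_jobs;"),
    ]


if __name__ == "__main__":
    for label, query in cdc_diagnostics("db_mpass", "test2"):
        print(f"-- {label}\n{query}")
```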

Nikhil Mahajan

May 13, 2019, 6:46:41 AM

to debe...@googlegroups.com

Yes, the agent is up and running.

Is there any other configuration I need to verify? When I consume messages using the console, the first output I see is the table schema, so connectivity with SQL Server seems to be fine.

Here is the output I get when I consume the topic:

```json
{
  "source" : {
    "server" : "abcdb5"
  },
  "position" : {
    "commit_lsn" : "0008af22:00000144:0004",
    "change_lsn" : "0008af22:00000144:0002"
  },
  "databaseName" : "db_mpass",
  "schemaName" : "dbo",
  "ddl" : "N/A",
  "tableChanges" : [ {
    "type" : "CREATE",
    "id" : "\"db_mpass\".\"dbo\".\"test2\"",
    "table" : {
      "defaultCharsetName" : null,
      "primaryKeyColumnNames" : [ "id" ],
      "columns" : [ {
        "name" : "id",
        "jdbcType" : 4,
        "typeName" : "int",
        "typeExpression" : "int",
        "charsetName" : null,
        "length" : 10,
        "scale" : 0,
        "position" : 1,
        "optional" : false,
        "autoIncremented" : false,
        "generated" : false
      }, {
        "name" : "name",
        "jdbcType" : 12,
        "typeName" : "varchar",
        "typeExpression" : "varchar",
        "charsetName" : null,
        "length" : 30,
        "position" : 2,
        "optional" : true,
        "autoIncremented" : false,
        "generated" : false
      } ]
    }
  } ]
}
```


With Regards,

Nikhil Mahajan
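The record above has the shape of an entry in the connector's database history topic (source, position, ddl, tableChanges) rather than a row-level change event, which would carry before, after, and op fields. As a sketch, assuming change events for dbo.test2 go to a topic named from database.server.name, schema, and table (for example servername.dbo.test2) and not to the topic configured as database.history.kafka.topic, the two kinds of record can be told apart like this; both helpers are hypothetical:

```python
# Sketch: tell a database history record apart from a change event, and derive
# the topic a table's change events are expected on. Both helpers are
# hypothetical; the topic naming assumes <server.name>.<schema>.<table>.
import json
from typing import Any, Dict


def data_topic(server_name: str, schema: str, table: str) -> str:
    return f"{server_name}.{schema}.{table}"


def record_kind(raw: str) -> str:
    record: Dict[str, Any] = json.loads(raw)
    # With JsonConverter and schemas enabled, the value is wrapped in
    # {"schema": ..., "payload": ...}; unwrap it if present
    body = record["payload"] if isinstance(record.get("payload"), dict) else record
    if "tableChanges" in body and "position" in body:
        return "history"  # connector-internal schema history entry
    if "op" in body and "after" in body:
        return "change"   # row-level change event envelope
    return "unknown"
```

Since the worker log later in the thread shows io.confluent.connect.avro.AvroConverter as the key and value converter, a change topic read with the plain kafka-console-consumer would print binary Avro; Confluent's kafka-avro-console-consumer decodes such topics.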

Nikhil Mahajan

May 13, 2019, 6:50:57 AM

to debe...@googlegroups.com

Just to add more information: I can see the CDC change table created for that table in SQL Server, and it contains rows for my insert/update commands:

```
select * from cdc.dbo_test2_CT;

__$start_lsn            __$end_lsn  __$seqval               __$operation  __$update_mask  id  name   __$command_id
0x0008AF22000002080005  NULL        0x0008AF22000002080002  3             0x02            3   try3   1
0x0008AF22000002080005  NULL        0x0008AF22000002080002  4             0x02            3   try31  1
0x0008AF22000003550004  NULL        0x0008AF22000003550002  2             0x03            4   try4   1
```
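The __$start_lsn values in this change table and the commit_lsn/change_lsn strings in the record posted above are both 10-byte log sequence numbers, printed in different forms. A minimal sketch, assuming the colon-separated form splits the LSN into 4 + 4 + 2 bytes as the position strings suggest, converts between the two so change-table rows can be compared with connector positions; both helpers are hypothetical:

```python
# Sketch: convert a binary(10) LSN as shown by SSMS (0x...) into the
# colon-separated form seen in the connector's position, and back.
# Both helper names are hypothetical.


def lsn_hex_to_colon(lsn: str) -> str:
    digits = lsn[2:] if lsn.lower().startswith("0x") else lsn
    if len(digits) != 20:
        raise ValueError(f"expected 10 bytes (20 hex digits), got {lsn!r}")
    digits = digits.lower()
    # VLF sequence number (4 bytes) : log block (4 bytes) : slot (2 bytes)
    return f"{digits[0:8]}:{digits[8:16]}:{digits[16:20]}"


def lsn_colon_to_hex(lsn: str) -> str:
    parts = lsn.split(":")
    if [len(p) for p in parts] != [8, 8, 4]:
        raise ValueError(f"unexpected LSN format: {lsn!r}")
    return "0x" + "".join(parts).upper()
```

Because both forms are fixed-width hexadecimal, comparing two values of the same form as strings orders them the same way as the LSNs themselves.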

Gunnar Morling

May 13, 2019, 7:11:02 AM

to debezium

What state is the connector in? Any exception in the logs?

--Gunnar
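Kafka Connect reports the connector and task states through its REST API at GET /connectors/<name>/status, and a failed task includes its stack trace in a trace field. A minimal sketch using only the Python standard library, assuming the worker listens on localhost:8083 as in the curl command later in this thread; fetch_status and summarize_status are hypothetical helpers:

```python
# Sketch: fetch and summarize GET /connectors/<name>/status from the Kafka
# Connect REST API. Helper names are hypothetical.
import json
import urllib.request
from typing import Any, Dict, List


def fetch_status(name: str, base_url: str = "http://localhost:8083") -> Dict[str, Any]:
    with urllib.request.urlopen(f"{base_url}/connectors/{name}/status") as resp:
        return json.load(resp)


def summarize_status(status: Dict[str, Any]) -> List[str]:
    lines = [f"connector {status['name']}: {status['connector']['state']}"]
    for task in status.get("tasks", []):
        lines.append(f"task {task['id']}: {task['state']}")
        if task["state"] == "FAILED":
            trace = task.get("trace", "")
            # The first line of the trace names the exception
            first = trace.splitlines()[0] if trace else "(no trace)"
            lines.append(f"  {first}")
    return lines
```

For example, print("\n".join(summarize_status(fetch_status("test1")))) prints one line for the connector and one per task.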


Nikhil Mahajan

May 13, 2019, 7:20:52 AM

to debezium

How do I check the logs?

I can see the connector using this curl command:

```
curl -H "Accept:application/json" localhost:8083/connectors/test1
```

The response is:

```json
{
  "name": "test1",
  "config": {
    "connector.class": "io.debezium.connector.sqlserver.SqlServerConnector",
    "database.user": "sql_testdb",
    "database.dbname": "db_mpass",
    "database.hostname": "x.x.x.x",
    "database.password": "pass",
    "database.history.kafka.bootstrap.servers": "localhost:9092",
    "database.history.kafka.topic": "test-topic2",
    "name": "test1",
    "database.server.name": "servername",
    "database.port": "1433",
    "table.whitelist": "dbo.test2"
  },
  "tasks": [{"connector": "test1", "task": 0}],
  "type": "source"
}
```
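For comparison, the task startup lines in the Connect log posted later in this thread show table.whitelist = test1 (and a different database.dbname) rather than the values in this response; the difference may simply come from edits made before posting. Assuming the SQL Server connector treats table.whitelist as comma-separated regular expressions matched case-insensitively against the whole schemaName.tableName identifier, this sketch of that rule shows why test1 would not select dbo.test2; is_captured is hypothetical, not Debezium's own code:

```python
# Sketch of whitelist matching, assuming each comma-separated entry is a regular
# expression that must match the whole "schema.table" identifier,
# case-insensitively. is_captured is hypothetical, not Debezium's own code.
import re


def is_captured(whitelist: str, schema: str, table: str) -> bool:
    identifier = f"{schema}.{table}"
    patterns = [p.strip() for p in whitelist.split(",") if p.strip()]
    return any(re.fullmatch(p, identifier, re.IGNORECASE) for p in patterns)


if __name__ == "__main__":
    print(is_captured("dbo.test2", "dbo", "test2"))
    print(is_captured("test1", "dbo", "test2"))
```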

Jiri Pechanec

May 13, 2019, 7:26:25 AM

to debezium

Do you run Debezium in the Kafka Connect container? If yes, then the logs are the output of the container. If you use a standalone Kafka Connect installation, then there should be a logs directory with log files.

J.
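In a non-Docker Kafka Connect installation the log file can get long. A minimal sketch that keeps only WARN and ERROR entries, assuming the "[timestamp] LEVEL message (logger:line)" layout of the Connect log later in this thread and treating lines that do not start with a timestamp (stack frames, config dumps) as continuations of the previous entry; problem_entries and the file name connect.log in the usage note are hypothetical:

```python
# Sketch: pull WARN/ERROR entries (with their stack traces) out of a Kafka
# Connect log in the "[timestamp] LEVEL message" layout. Hypothetical helper.
import re
from typing import Iterable, List, Tuple

ENTRY = re.compile(r"^\[(?P<ts>[^\]]+)\] (?P<level>[A-Z]+) (?P<msg>.*)$")


def problem_entries(lines: Iterable[str],
                    levels: Tuple[str, ...] = ("WARN", "ERROR")) -> List[str]:
    entries: List[str] = []
    keep = False
    for line in lines:
        m = ENTRY.match(line)
        if m:
            # A new entry starts; keep it only if its level is of interest
            keep = m.group("level") in levels
            if keep:
                entries.append(line.rstrip("\n"))
        elif keep and entries:
            # Continuation line (e.g. "\tat ..." stack frames) of a kept entry
            entries[-1] += "\n" + line.rstrip("\n")
    return entries
```

For example, with open("connect.log") as f: print("\n\n".join(problem_entries(f))).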

Nikhil Mahajan

May 13, 2019, 7:45:20 AM

to debe...@googlegroups.com

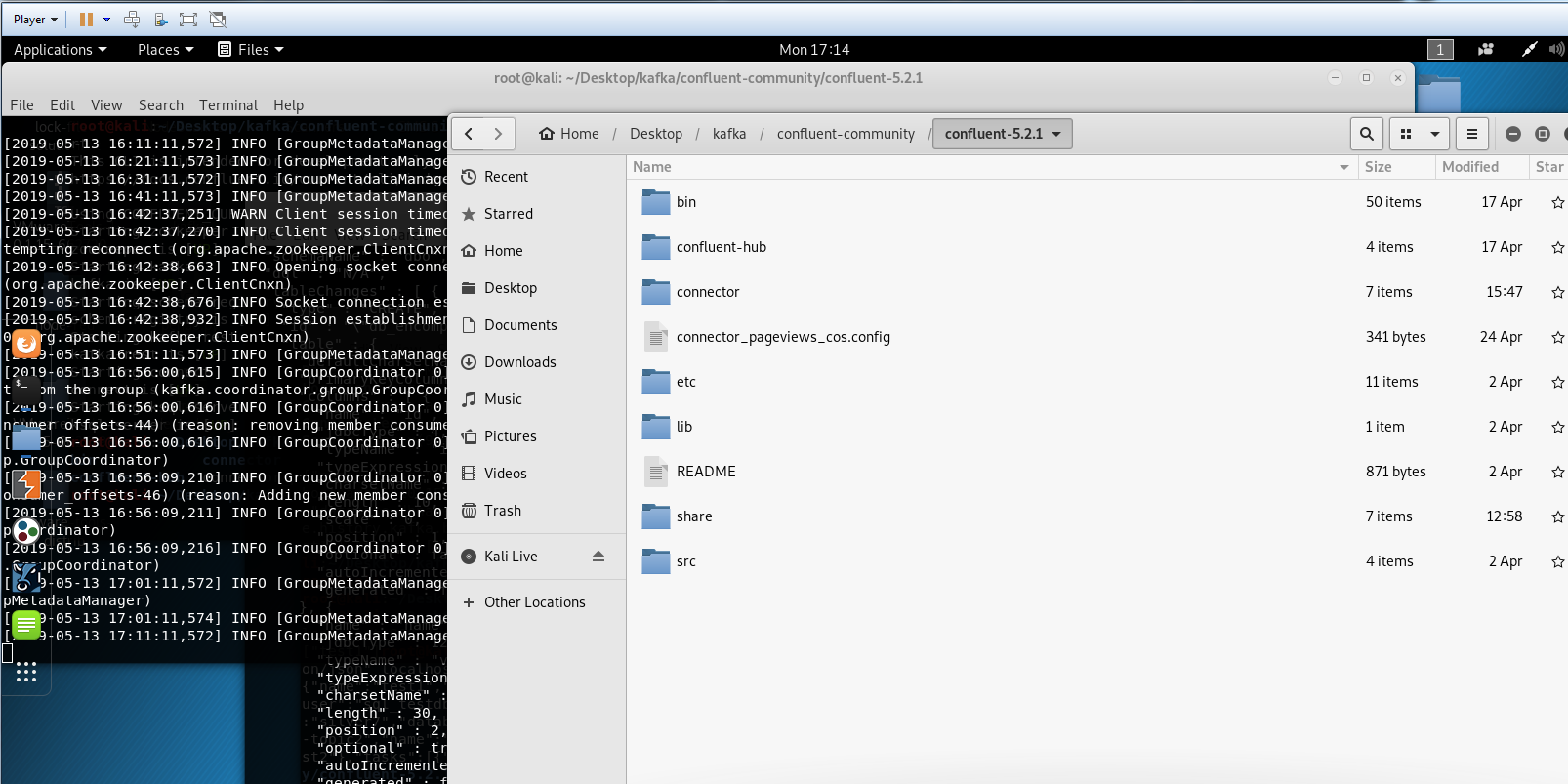

I am using Confluent Kafka (not on Docker). If I run confluent log kafka -f, I don't see any errors in the logs.


Jiri Pechanec

May 13, 2019, 11:39:08 PM

to debezium

Could you please share the whole log, then?

J.


Nikhil Mahajan

May 14, 2019, 2:34:44 AM

to debe...@googlegroups.com

Hi,

I am now able to read the Kafka Connect logs. Here are the complete logs from the moment I register my Debezium SQL Server connector:

[2019-05-14 11:48:31,387] INFO Connector test1 config updated (org.apache.kafka.connect.runtime.distributed.DistributedHerder:1097)

[2019-05-14 11:48:31,880] INFO Rebalance started (org.apache.kafka.connect.runtime.distributed.DistributedHerder:1238)

[2019-05-14 11:48:31,885] INFO Finished stopping tasks in preparation for rebalance (org.apache.kafka.connect.runtime.distributed.DistributedHerder:1268)

[2019-05-14 11:48:31,885] INFO [Worker clientId=connect-1, groupId=connect-cluster] (Re-)joining group (org.apache.kafka.clients.consumer.internals.AbstractCoordinator:491)

[2019-05-14 11:48:31,900] INFO [Worker clientId=connect-1, groupId=connect-cluster] Successfully joined group with generation 2 (org.apache.kafka.clients.consumer.internals.AbstractCoordinator:455)

[2019-05-14 11:48:31,901] INFO Joined group and got assignment: Assignment{error=0, leader='connect-1-59301092-51a9-4278-9293-4e642d965cb1', leaderUrl='http://127.0.1.1:8083/', offset=1, connectorIds=[test1], taskIds=[]} (org.apache.kafka.connect.runtime.distributed.DistributedHerder:1216)

[2019-05-14 11:48:31,902] INFO Starting connectors and tasks using config offset 1 (org.apache.kafka.connect.runtime.distributed.DistributedHerder:850)

[2019-05-14 11:48:31,906] INFO Starting connector test1 (org.apache.kafka.connect.runtime.distributed.DistributedHerder:904)

[2019-05-14 11:48:31,907] INFO ConnectorConfig values:

config.action.reload = restart

connector.class = io.debezium.connector.sqlserver.SqlServerConnector

errors.log.enable = false

errors.log.include.messages = false

errors.retry.delay.max.ms = 60000

errors.retry.timeout = 0

errors.tolerance = none

header.converter = null

key.converter = null

name = test1

tasks.max = 1

transforms = []

value.converter = null

(org.apache.kafka.connect.runtime.ConnectorConfig:279)

[2019-05-14 11:48:31,908] INFO EnrichedConnectorConfig values:

config.action.reload = restart

connector.class = io.debezium.connector.sqlserver.SqlServerConnector

errors.log.enable = false

errors.log.include.messages = false

errors.retry.delay.max.ms = 60000

errors.retry.timeout = 0

errors.tolerance = none

header.converter = null

key.converter = null

name = test1

tasks.max = 1

transforms = []

value.converter = null

(org.apache.kafka.connect.runtime.ConnectorConfig$EnrichedConnectorConfig:279)

[2019-05-14 11:48:31,908] INFO Creating connector test1 of type io.debezium.connector.sqlserver.SqlServerConnector (org.apache.kafka.connect.runtime.Worker:225)

[2019-05-14 11:48:31,929] INFO Instantiated connector test1 with version 0.9.5.Final of type class io.debezium.connector.sqlserver.SqlServerConnector (org.apache.kafka.connect.runtime.Worker:228)

[2019-05-14 11:48:31,962] INFO Finished creating connector test1 (org.apache.kafka.connect.runtime.Worker:247)

[2019-05-14 11:48:31,970] INFO SourceConnectorConfig values:

config.action.reload = restart

connector.class = io.debezium.connector.sqlserver.SqlServerConnector

errors.log.enable = false

errors.log.include.messages = false

errors.retry.delay.max.ms = 60000

errors.retry.timeout = 0

errors.tolerance = none

header.converter = null

key.converter = null

name = test1

tasks.max = 1

transforms = []

value.converter = null

(org.apache.kafka.connect.runtime.SourceConnectorConfig:279)

[2019-05-14 11:48:31,979] INFO EnrichedConnectorConfig values:

config.action.reload = restart

connector.class = io.debezium.connector.sqlserver.SqlServerConnector

errors.log.enable = false

errors.log.include.messages = false

errors.retry.delay.max.ms = 60000

errors.retry.timeout = 0

errors.tolerance = none

header.converter = null

key.converter = null

name = test1

tasks.max = 1

transforms = []

value.converter = null

(org.apache.kafka.connect.runtime.ConnectorConfig$EnrichedConnectorConfig:279)

[2019-05-14 11:48:31,979] INFO 0:0:0:0:0:0:0:1 - - [14/May/2019:06:18:31 +0000] "POST /connectors/ HTTP/1.1" 201 457 785 (org.apache.kafka.connect.runtime.rest.RestServer:60)

[2019-05-14 11:48:32,926] INFO Tasks [test1-0] configs updated (org.apache.kafka.connect.runtime.distributed.DistributedHerder:1112)

[2019-05-14 11:48:32,931] INFO Finished starting connectors and tasks (org.apache.kafka.connect.runtime.distributed.DistributedHerder:860)

[2019-05-14 11:48:32,932] INFO Rebalance started (org.apache.kafka.connect.runtime.distributed.DistributedHerder:1238)

[2019-05-14 11:48:32,935] INFO Stopping connector test1 (org.apache.kafka.connect.runtime.Worker:328)

[2019-05-14 11:48:32,949] INFO Stopped connector test1 (org.apache.kafka.connect.runtime.Worker:344)

[2019-05-14 11:48:32,950] INFO Finished stopping tasks in preparation for rebalance (org.apache.kafka.connect.runtime.distributed.DistributedHerder:1268)

[2019-05-14 11:48:32,950] INFO [Worker clientId=connect-1, groupId=connect-cluster] (Re-)joining group (org.apache.kafka.clients.consumer.internals.AbstractCoordinator:491)

[2019-05-14 11:48:32,954] INFO [Worker clientId=connect-1, groupId=connect-cluster] Successfully joined group with generation 3 (org.apache.kafka.clients.consumer.internals.AbstractCoordinator:455)

[2019-05-14 11:48:32,954] INFO Joined group and got assignment: Assignment{error=0, leader='connect-1-59301092-51a9-4278-9293-4e642d965cb1', leaderUrl='http://127.0.1.1:8083/', offset=3, connectorIds=[test1], taskIds=[test1-0]} (org.apache.kafka.connect.runtime.distributed.DistributedHerder:1216)

[2019-05-14 11:48:32,954] INFO Starting connectors and tasks using config offset 3 (org.apache.kafka.connect.runtime.distributed.DistributedHerder:850)

[2019-05-14 11:48:32,964] INFO Starting connector test1 (org.apache.kafka.connect.runtime.distributed.DistributedHerder:904)

[2019-05-14 11:48:32,965] INFO ConnectorConfig values:

config.action.reload = restart

connector.class = io.debezium.connector.sqlserver.SqlServerConnector

errors.log.enable = false

errors.log.include.messages = false

errors.retry.delay.max.ms = 60000

errors.retry.timeout = 0

errors.tolerance = none

header.converter = null

key.converter = null

name = test1

tasks.max = 1

transforms = []

value.converter = null

(org.apache.kafka.connect.runtime.ConnectorConfig:279)

[2019-05-14 11:48:32,971] INFO EnrichedConnectorConfig values:

config.action.reload = restart

connector.class = io.debezium.connector.sqlserver.SqlServerConnector

errors.log.enable = false

errors.log.include.messages = false

errors.retry.delay.max.ms = 60000

errors.retry.timeout = 0

errors.tolerance = none

header.converter = null

key.converter = null

name = test1

tasks.max = 1

transforms = []

value.converter = null

(org.apache.kafka.connect.runtime.ConnectorConfig$EnrichedConnectorConfig:279)

[2019-05-14 11:48:32,971] INFO Creating connector test1 of type io.debezium.connector.sqlserver.SqlServerConnector (org.apache.kafka.connect.runtime.Worker:225)

[2019-05-14 11:48:32,971] INFO Starting task test1-0 (org.apache.kafka.connect.runtime.distributed.DistributedHerder:864)

[2019-05-14 11:48:32,971] INFO Creating task test1-0 (org.apache.kafka.connect.runtime.Worker:386)

[2019-05-14 11:48:32,979] INFO ConnectorConfig values:

config.action.reload = restart

connector.class = io.debezium.connector.sqlserver.SqlServerConnector

errors.log.enable = false

errors.log.include.messages = false

errors.retry.delay.max.ms = 60000

errors.retry.timeout = 0

errors.tolerance = none

header.converter = null

key.converter = null

name = test1

tasks.max = 1

transforms = []

value.converter = null

(org.apache.kafka.connect.runtime.ConnectorConfig:279)

[2019-05-14 11:48:32,980] INFO EnrichedConnectorConfig values:

config.action.reload = restart

connector.class = io.debezium.connector.sqlserver.SqlServerConnector

errors.log.enable = false

errors.log.include.messages = false

errors.retry.delay.max.ms = 60000

errors.retry.timeout = 0

errors.tolerance = none

header.converter = null

key.converter = null

name = test1

tasks.max = 1

transforms = []

value.converter = null

(org.apache.kafka.connect.runtime.ConnectorConfig$EnrichedConnectorConfig:279)

[2019-05-14 11:48:32,973] INFO Instantiated connector test1 with version 0.9.5.Final of type class io.debezium.connector.sqlserver.SqlServerConnector (org.apache.kafka.connect.runtime.Worker:228)

[2019-05-14 11:48:32,986] INFO Finished creating connector test1 (org.apache.kafka.connect.runtime.Worker:247)

[2019-05-14 11:48:32,987] INFO SourceConnectorConfig values:

config.action.reload = restart

connector.class = io.debezium.connector.sqlserver.SqlServerConnector

errors.log.enable = false

errors.log.include.messages = false

errors.retry.delay.max.ms = 60000

errors.retry.timeout = 0

errors.tolerance = none

header.converter = null

key.converter = null

name = test1

tasks.max = 1

transforms = []

value.converter = null

(org.apache.kafka.connect.runtime.SourceConnectorConfig:279)

[2019-05-14 11:48:32,999] INFO EnrichedConnectorConfig values:

config.action.reload = restart

connector.class = io.debezium.connector.sqlserver.SqlServerConnector

errors.log.enable = false

errors.log.include.messages = false

errors.retry.delay.max.ms = 60000

errors.retry.timeout = 0

errors.tolerance = none

header.converter = null

key.converter = null

name = test1

tasks.max = 1

transforms = []

value.converter = null

(org.apache.kafka.connect.runtime.ConnectorConfig$EnrichedConnectorConfig:279)

[2019-05-14 11:48:33,003] INFO TaskConfig values:

task.class = class io.debezium.connector.sqlserver.SqlServerConnectorTask

(org.apache.kafka.connect.runtime.TaskConfig:279)

[2019-05-14 11:48:33,004] INFO Instantiated task test1-0 with version 0.9.5.Final of type io.debezium.connector.sqlserver.SqlServerConnectorTask (org.apache.kafka.connect.runtime.Worker:401)

[2019-05-14 11:48:33,026] INFO AvroConverterConfig values:

schema.registry.url = [http://localhost:8081]

basic.auth.user.info = [hidden]

auto.register.schemas = true

max.schemas.per.subject = 1000

basic.auth.credentials.source = URL

schema.registry.basic.auth.user.info = [hidden]

value.subject.name.strategy = class io.confluent.kafka.serializers.subject.TopicNameStrategy

key.subject.name.strategy = class io.confluent.kafka.serializers.subject.TopicNameStrategy

(io.confluent.connect.avro.AvroConverterConfig:179)

[2019-05-14 11:48:33,058] INFO KafkaAvroSerializerConfig values:

schema.registry.url = [http://localhost:8081]

basic.auth.user.info = [hidden]

auto.register.schemas = true

max.schemas.per.subject = 1000

basic.auth.credentials.source = URL

schema.registry.basic.auth.user.info = [hidden]

value.subject.name.strategy = class io.confluent.kafka.serializers.subject.TopicNameStrategy

key.subject.name.strategy = class io.confluent.kafka.serializers.subject.TopicNameStrategy

(io.confluent.kafka.serializers.KafkaAvroSerializerConfig:179)

[2019-05-14 11:48:33,070] INFO KafkaAvroDeserializerConfig values:

schema.registry.url = [http://localhost:8081]

basic.auth.user.info = [hidden]

auto.register.schemas = true

max.schemas.per.subject = 1000

basic.auth.credentials.source = URL

schema.registry.basic.auth.user.info = [hidden]

specific.avro.reader = false

value.subject.name.strategy = class io.confluent.kafka.serializers.subject.TopicNameStrategy

key.subject.name.strategy = class io.confluent.kafka.serializers.subject.TopicNameStrategy

(io.confluent.kafka.serializers.KafkaAvroDeserializerConfig:179)

[2019-05-14 11:48:33,403] INFO AvroDataConfig values:

schemas.cache.config = 1000

enhanced.avro.schema.support = false

connect.meta.data = true

(io.confluent.connect.avro.AvroDataConfig:179)

[2019-05-14 11:48:33,406] INFO Set up the key converter class io.confluent.connect.avro.AvroConverter for task test1-0 using the worker config (org.apache.kafka.connect.runtime.Worker:424)

[2019-05-14 11:48:33,406] INFO AvroConverterConfig values:

schema.registry.url = [http://localhost:8081]

basic.auth.user.info = [hidden]

auto.register.schemas = true

max.schemas.per.subject = 1000

basic.auth.credentials.source = URL

schema.registry.basic.auth.user.info = [hidden]

value.subject.name.strategy = class io.confluent.kafka.serializers.subject.TopicNameStrategy

key.subject.name.strategy = class io.confluent.kafka.serializers.subject.TopicNameStrategy

(io.confluent.connect.avro.AvroConverterConfig:179)

[2019-05-14 11:48:33,406] INFO KafkaAvroSerializerConfig values:

schema.registry.url = [http://localhost:8081]

basic.auth.user.info = [hidden]

auto.register.schemas = true

max.schemas.per.subject = 1000

basic.auth.credentials.source = URL

schema.registry.basic.auth.user.info = [hidden]

value.subject.name.strategy = class io.confluent.kafka.serializers.subject.TopicNameStrategy

key.subject.name.strategy = class io.confluent.kafka.serializers.subject.TopicNameStrategy

(io.confluent.kafka.serializers.KafkaAvroSerializerConfig:179)

[2019-05-14 11:48:33,407] INFO KafkaAvroDeserializerConfig values:

schema.registry.url = [http://localhost:8081]

basic.auth.user.info = [hidden]

auto.register.schemas = true

max.schemas.per.subject = 1000

basic.auth.credentials.source = URL

schema.registry.basic.auth.user.info = [hidden]

specific.avro.reader = false

value.subject.name.strategy = class io.confluent.kafka.serializers.subject.TopicNameStrategy

key.subject.name.strategy = class io.confluent.kafka.serializers.subject.TopicNameStrategy

(io.confluent.kafka.serializers.KafkaAvroDeserializerConfig:179)

[2019-05-14 11:48:33,407] INFO AvroDataConfig values:

schemas.cache.config = 1000

enhanced.avro.schema.support = false

connect.meta.data = true

(io.confluent.connect.avro.AvroDataConfig:179)

[2019-05-14 11:48:33,407] INFO Set up the value converter class io.confluent.connect.avro.AvroConverter for task test1-0 using the worker config (org.apache.kafka.connect.runtime.Worker:430)

[2019-05-14 11:48:33,407] INFO Set up the header converter class org.apache.kafka.connect.storage.SimpleHeaderConverter for task test1-0 using the worker config (org.apache.kafka.connect.runtime.Worker:436)

[2019-05-14 11:48:33,421] INFO Initializing: org.apache.kafka.connect.runtime.TransformationChain{} (org.apache.kafka.connect.runtime.Worker:486)

[2019-05-14 11:48:33,424] INFO ProducerConfig values:

acks = all

batch.size = 16384

bootstrap.servers = [localhost:9092]

buffer.memory = 33554432

client.dns.lookup = default

client.id =

compression.type = none

connections.max.idle.ms = 540000

delivery.timeout.ms = 2147483647

enable.idempotence = false

interceptor.classes = []

key.serializer = class org.apache.kafka.common.serialization.ByteArraySerializer

linger.ms = 0

max.block.ms = 9223372036854775807

max.in.flight.requests.per.connection = 1

max.request.size = 1048576

metadata.max.age.ms = 300000

metric.reporters = []

metrics.num.samples = 2

metrics.recording.level = INFO

metrics.sample.window.ms = 30000

partitioner.class = class org.apache.kafka.clients.producer.internals.DefaultPartitioner

receive.buffer.bytes = 32768

reconnect.backoff.max.ms = 1000

reconnect.backoff.ms = 50

request.timeout.ms = 2147483647

retries = 2147483647

retry.backoff.ms = 100

sasl.client.callback.handler.class = null

sasl.jaas.config = null

sasl.kerberos.kinit.cmd = /usr/bin/kinit

sasl.kerberos.min.time.before.relogin = 60000

sasl.kerberos.service.name = null

sasl.kerberos.ticket.renew.jitter = 0.05

sasl.kerberos.ticket.renew.window.factor = 0.8

sasl.login.callback.handler.class = null

sasl.login.class = null

sasl.login.refresh.buffer.seconds = 300

sasl.login.refresh.min.period.seconds = 60

sasl.login.refresh.window.factor = 0.8

sasl.login.refresh.window.jitter = 0.05

sasl.mechanism = GSSAPI

security.protocol = PLAINTEXT

send.buffer.bytes = 131072

ssl.cipher.suites = null

ssl.enabled.protocols = [TLSv1.2, TLSv1.1, TLSv1]

ssl.endpoint.identification.algorithm = https

ssl.key.password = null

ssl.keymanager.algorithm = SunX509

ssl.keystore.location = null

ssl.keystore.password = null

ssl.keystore.type = JKS

ssl.protocol = TLS

ssl.provider = null

ssl.secure.random.implementation = null

ssl.trustmanager.algorithm = PKIX

ssl.truststore.location = null

ssl.truststore.password = null

ssl.truststore.type = JKS

transaction.timeout.ms = 60000

transactional.id = null

value.serializer = class org.apache.kafka.common.serialization.ByteArraySerializer

(org.apache.kafka.clients.producer.ProducerConfig:279)

[2019-05-14 11:48:33,451] INFO Kafka version: 2.2.0-cp2 (org.apache.kafka.common.utils.AppInfoParser:109)

[2019-05-14 11:48:33,452] INFO Kafka commitId: 325e9879cbc6d612 (org.apache.kafka.common.utils.AppInfoParser:110)

[2019-05-14 11:48:33,473] INFO Finished starting connectors and tasks (org.apache.kafka.connect.runtime.distributed.DistributedHerder:860)

[2019-05-14 11:48:33,508] INFO Starting SqlServerConnectorTask with configuration: (io.debezium.connector.common.BaseSourceTask:43)

[2019-05-14 11:48:33,509] INFO connector.class = io.debezium.connector.sqlserver.SqlServerConnector (io.debezium.connector.common.BaseSourceTask:45)

[2019-05-14 11:48:33,519] INFO database.user = sql_testdb (io.debezium.connector.common.BaseSourceTask:45)

[2019-05-14 11:48:33,519] INFO database.dbname = db_encompass (io.debezium.connector.common.BaseSourceTask:45)

[2019-05-14 11:48:33,520] INFO task.class = io.debezium.connector.sqlserver.SqlServerConnectorTask (io.debezium.connector.common.BaseSourceTask:45)

[2019-05-14 11:48:33,520] INFO database.hostname = 10.154.51.196 (io.debezium.connector.common.BaseSourceTask:45)

[2019-05-14 11:48:33,520] INFO database.history.kafka.bootstrap.servers = localhost:9092 (io.debezium.connector.common.BaseSourceTask:45)

[2019-05-14 11:48:33,520] INFO database.history.kafka.topic = test-topic2 (io.debezium.connector.common.BaseSourceTask:45)

[2019-05-14 11:48:33,520] INFO database.password = ******** (io.debezium.connector.common.BaseSourceTask:45)

[2019-05-14 11:48:33,520] INFO name = test1 (io.debezium.connector.common.BaseSourceTask:45)

[2019-05-14 11:48:33,520] INFO database.server.name = houncadb5 (io.debezium.connector.common.BaseSourceTask:45)

[2019-05-14 11:48:33,520] INFO database.port = 1433 (io.debezium.connector.common.BaseSourceTask:45)

[2019-05-14 11:48:33,520] INFO table.whitelist = test1 (io.debezium.connector.common.BaseSourceTask:45)

[2019-05-14 11:48:33,540] INFO Cluster ID: KG2QLBvWRDmVs0QlykeK3Q (org.apache.kafka.clients.Metadata:365)

[2019-05-14 11:48:36,039] INFO KafkaDatabaseHistory Consumer config: {enable.auto.commit=false, value.deserializer=org.apache.kafka.common.serialization.StringDeserializer, group.id=houncadb5-dbhistory, auto.offset.reset=earliest, session.timeout.ms=10000, bootstrap.servers=localhost:9092, client.id=houncadb5-dbhistory, key.deserializer=org.apache.kafka.common.serialization.StringDeserializer, fetch.min.bytes=1} (io.debezium.relational.history.KafkaDatabaseHistory:164)

[2019-05-14 11:48:36,043] INFO KafkaDatabaseHistory Producer config: {bootstrap.servers=localhost:9092, value.serializer=org.apache.kafka.common.serialization.StringSerializer, buffer.memory=1048576, retries=1, key.serializer=org.apache.kafka.common.serialization.StringSerializer, client.id=houncadb5-dbhistory, linger.ms=0, batch.size=32768, max.block.ms=10000, acks=1} (io.debezium.relational.history.KafkaDatabaseHistory:165)

[2019-05-14 11:48:36,045] INFO ProducerConfig values:

acks = 1

batch.size = 32768

bootstrap.servers = [localhost:9092]

buffer.memory = 1048576

client.dns.lookup = default

client.id = houncadb5-dbhistory

compression.type = none

connections.max.idle.ms = 540000

delivery.timeout.ms = 120000

enable.idempotence = false

interceptor.classes = []

key.serializer = class org.apache.kafka.common.serialization.StringSerializer

linger.ms = 0

max.block.ms = 10000

max.in.flight.requests.per.connection = 5

max.request.size = 1048576

metadata.max.age.ms = 300000

metric.reporters = []

metrics.num.samples = 2

metrics.recording.level = INFO

metrics.sample.window.ms = 30000

partitioner.class = class org.apache.kafka.clients.producer.internals.DefaultPartitioner

receive.buffer.bytes = 32768

reconnect.backoff.max.ms = 1000

reconnect.backoff.ms = 50

request.timeout.ms = 30000

retries = 1

retry.backoff.ms = 100

sasl.client.callback.handler.class = null

sasl.jaas.config = null

sasl.kerberos.kinit.cmd = /usr/bin/kinit

sasl.kerberos.min.time.before.relogin = 60000

sasl.kerberos.service.name = null

sasl.kerberos.ticket.renew.jitter = 0.05

sasl.kerberos.ticket.renew.window.factor = 0.8

sasl.login.callback.handler.class = null

sasl.login.class = null

sasl.login.refresh.buffer.seconds = 300

sasl.login.refresh.min.period.seconds = 60

sasl.login.refresh.window.factor = 0.8

sasl.login.refresh.window.jitter = 0.05

sasl.mechanism = GSSAPI

security.protocol = PLAINTEXT

send.buffer.bytes = 131072

ssl.cipher.suites = null

ssl.enabled.protocols = [TLSv1.2, TLSv1.1, TLSv1]

ssl.endpoint.identification.algorithm = https

ssl.key.password = null

ssl.keymanager.algorithm = SunX509

ssl.keystore.location = null

ssl.keystore.password = null

ssl.keystore.type = JKS

ssl.protocol = TLS

ssl.provider = null

ssl.secure.random.implementation = null

ssl.trustmanager.algorithm = PKIX

ssl.truststore.location = null

ssl.truststore.password = null

ssl.truststore.type = JKS

transaction.timeout.ms = 60000

transactional.id = null

value.serializer = class org.apache.kafka.common.serialization.StringSerializer

(org.apache.kafka.clients.producer.ProducerConfig:279)

[2019-05-14 11:48:36,063] INFO Kafka version: 2.2.0-cp2 (org.apache.kafka.common.utils.AppInfoParser:109)

[2019-05-14 11:48:36,064] INFO Kafka commitId: 325e9879cbc6d612 (org.apache.kafka.common.utils.AppInfoParser:110)

[2019-05-14 11:48:36,064] INFO ConsumerConfig values:

auto.commit.interval.ms = 5000

auto.offset.reset = earliest

bootstrap.servers = [localhost:9092]

check.crcs = true

client.dns.lookup = default

client.id = houncadb5-dbhistory

connections.max.idle.ms = 540000

default.api.timeout.ms = 60000

enable.auto.commit = false

exclude.internal.topics = true

fetch.max.bytes = 52428800

fetch.max.wait.ms = 500

fetch.min.bytes = 1

group.id = houncadb5-dbhistory

heartbeat.interval.ms = 3000

interceptor.classes = []

internal.leave.group.on.close = true

isolation.level = read_uncommitted

key.deserializer = class org.apache.kafka.common.serialization.StringDeserializer

max.partition.fetch.bytes = 1048576

max.poll.interval.ms = 300000

max.poll.records = 500

metadata.max.age.ms = 300000

metric.reporters = []

metrics.num.samples = 2

metrics.recording.level = INFO

metrics.sample.window.ms = 30000

partition.assignment.strategy = [class org.apache.kafka.clients.consumer.RangeAssignor]

receive.buffer.bytes = 65536

reconnect.backoff.max.ms = 1000

reconnect.backoff.ms = 50

request.timeout.ms = 30000

retry.backoff.ms = 100

sasl.client.callback.handler.class = null

sasl.jaas.config = null

sasl.kerberos.kinit.cmd = /usr/bin/kinit

sasl.kerberos.min.time.before.relogin = 60000

sasl.kerberos.service.name = null

sasl.kerberos.ticket.renew.jitter = 0.05

sasl.kerberos.ticket.renew.window.factor = 0.8

sasl.login.callback.handler.class = null

sasl.login.class = null

sasl.login.refresh.buffer.seconds = 300

sasl.login.refresh.min.period.seconds = 60

sasl.login.refresh.window.factor = 0.8

sasl.login.refresh.window.jitter = 0.05

sasl.mechanism = GSSAPI

security.protocol = PLAINTEXT

send.buffer.bytes = 131072

session.timeout.ms = 10000

ssl.cipher.suites = null

ssl.enabled.protocols = [TLSv1.2, TLSv1.1, TLSv1]

ssl.endpoint.identification.algorithm = https

ssl.key.password = null

ssl.keymanager.algorithm = SunX509

ssl.keystore.location = null

ssl.keystore.password = null

ssl.keystore.type = JKS

ssl.protocol = TLS

ssl.provider = null

ssl.secure.random.implementation = null

ssl.trustmanager.algorithm = PKIX

ssl.truststore.location = null

ssl.truststore.password = null

ssl.truststore.type = JKS

value.deserializer = class org.apache.kafka.common.serialization.StringDeserializer

(org.apache.kafka.clients.consumer.ConsumerConfig:279)

[2019-05-14 11:48:36,074] INFO Kafka version: 2.2.0-cp2 (org.apache.kafka.common.utils.AppInfoParser:109)

[2019-05-14 11:48:36,079] INFO Kafka commitId: 325e9879cbc6d612 (org.apache.kafka.common.utils.AppInfoParser:110)

[2019-05-14 11:48:36,122] INFO AdminClientConfig values:

bootstrap.servers = [localhost:9092]

client.dns.lookup = default

client.id = houncadb5-dbhistory

connections.max.idle.ms = 300000

metadata.max.age.ms = 300000

metric.reporters = []

metrics.num.samples = 2

metrics.recording.level = INFO

metrics.sample.window.ms = 30000

receive.buffer.bytes = 65536

reconnect.backoff.max.ms = 1000

reconnect.backoff.ms = 50

request.timeout.ms = 120000

retries = 1

retry.backoff.ms = 100

sasl.client.callback.handler.class = null

sasl.jaas.config = null

sasl.kerberos.kinit.cmd = /usr/bin/kinit

sasl.kerberos.min.time.before.relogin = 60000

sasl.kerberos.service.name = null

sasl.kerberos.ticket.renew.jitter = 0.05

sasl.kerberos.ticket.renew.window.factor = 0.8

sasl.login.callback.handler.class = null

sasl.login.class = null

sasl.login.refresh.buffer.seconds = 300

sasl.login.refresh.min.period.seconds = 60

sasl.login.refresh.window.factor = 0.8

sasl.login.refresh.window.jitter = 0.05

sasl.mechanism = GSSAPI

security.protocol = PLAINTEXT

send.buffer.bytes = 131072

ssl.cipher.suites = null

ssl.enabled.protocols = [TLSv1.2, TLSv1.1, TLSv1]

ssl.endpoint.identification.algorithm = https

ssl.key.password = null

ssl.keymanager.algorithm = SunX509

ssl.keystore.location = null

ssl.keystore.password = null

ssl.keystore.type = JKS

ssl.protocol = TLS

ssl.provider = null

ssl.secure.random.implementation = null

ssl.trustmanager.algorithm = PKIX

ssl.truststore.location = null

ssl.truststore.password = null

ssl.truststore.type = JKS

(org.apache.kafka.clients.admin.AdminClientConfig:279)

[2019-05-14 11:48:36,125] WARN The configuration 'value.serializer' was supplied but isn't a known config. (org.apache.kafka.clients.admin.AdminClientConfig:287)

[2019-05-14 11:48:36,127] WARN The configuration 'batch.size' was supplied but isn't a known config. (org.apache.kafka.clients.admin.AdminClientConfig:287)

[2019-05-14 11:48:36,128] WARN The configuration 'max.block.ms' was supplied but isn't a known config. (org.apache.kafka.clients.admin.AdminClientConfig:287)

[2019-05-14 11:48:36,128] WARN The configuration 'acks' was supplied but isn't a known config. (org.apache.kafka.clients.admin.AdminClientConfig:287)

[2019-05-14 11:48:36,129] WARN The configuration 'buffer.memory' was supplied but isn't a known config. (org.apache.kafka.clients.admin.AdminClientConfig:287)

[2019-05-14 11:48:36,129] WARN The configuration 'key.serializer' was supplied but isn't a known config. (org.apache.kafka.clients.admin.AdminClientConfig:287)

[2019-05-14 11:48:36,129] WARN The configuration 'linger.ms' was supplied but isn't a known config. (org.apache.kafka.clients.admin.AdminClientConfig:287)

[2019-05-14 11:48:36,129] INFO Kafka version: 2.2.0-cp2 (org.apache.kafka.common.utils.AppInfoParser:109)

[2019-05-14 11:48:36,130] INFO Kafka commitId: 325e9879cbc6d612 (org.apache.kafka.common.utils.AppInfoParser:110)

[2019-05-14 11:48:36,152] INFO Cluster ID: KG2QLBvWRDmVs0QlykeK3Q (org.apache.kafka.clients.Metadata:365)

[2019-05-14 11:48:36,198] INFO Database history topic '(name=test-topic2, numPartitions=1, replicationFactor=1, replicasAssignments=null, configs={cleanup.policy=delete, retention.ms=9223372036854775807, retention.bytes=-1})' created (io.debezium.relational.history.KafkaDatabaseHistory:357)

[2019-05-14 11:48:36,606] INFO Requested thread factory for connector SqlServerConnector, id = houncadb5 named = error-handler (io.debezium.util.Threads:250)

[2019-05-14 11:48:36,609] INFO Requested thread factory for connector SqlServerConnector, id = houncadb5 named = change-event-source-coordinator (io.debezium.util.Threads:250)

[2019-05-14 11:48:36,611] INFO Creating thread debezium-sqlserverconnector-houncadb5-change-event-source-coordinator (io.debezium.util.Threads:267)

[2019-05-14 11:48:36,611] INFO WorkerSourceTask{id=test1-0} Source task finished initialization and start (org.apache.kafka.connect.runtime.WorkerSourceTask:200)

[2019-05-14 11:48:36,624] INFO No previous offset has been found (io.debezium.connector.sqlserver.SqlServerSnapshotChangeEventSource:62)

[2019-05-14 11:48:36,630] INFO According to the connector configuration both schema and data will be snapshotted (io.debezium.connector.sqlserver.SqlServerSnapshotChangeEventSource:64)

[2019-05-14 11:48:36,630] INFO Snapshot step 1 - Preparing (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:102)

[2019-05-14 11:48:36,631] INFO Snapshot step 2 - Determining captured tables (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:113)

[2019-05-14 11:48:37,036] INFO Snapshot step 3 - Locking captured tables (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:120)

[2019-05-14 11:48:37,556] INFO Executing schema locking (io.debezium.connector.sqlserver.SqlServerSnapshotChangeEventSource:114)

[2019-05-14 11:48:37,556] INFO Snapshot step 4 - Determining snapshot offset (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:126)

[2019-05-14 11:48:37,816] INFO Snapshot step 5 - Reading structure of captured tables (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:129)

[2019-05-14 11:48:37,817] INFO Snapshot step 6 - Persisting schema history (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:133)

[2019-05-14 11:48:38,076] INFO Schema locks released. (io.debezium.connector.sqlserver.SqlServerSnapshotChangeEventSource:139)

[2019-05-14 11:48:38,077] INFO Snapshot step 7 - Snapshotting data (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:145)

[2019-05-14 11:48:38,341] INFO Snapshot step 8 - Finalizing (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:174)

[2019-05-14 11:48:38,902] INFO CDC is enabled for table Capture instance "dbo_test2" [sourceTableId=db_encompass.dbo.test2, changeTableId=db_encompass.cdc.dbo_test2_CT, startLsn=0008af20:000002f5:0001, changeTableObjectId=265872114, stopLsn=NULL] but the table is not whitelisted by connector (io.debezium.connector.sqlserver.SqlServerStreamingChangeEventSource:258)

[2019-05-14 11:48:38,902] INFO CDC is enabled for table Capture instance "dbo_testtable2" [sourceTableId=db_encompass.dbo.testtable2, changeTableId=db_encompass.cdc.dbo_testtable2_CT, startLsn=0008af20:000002f5:0001, changeTableObjectId=1885353881, stopLsn=NULL] but the table is not whitelisted by connector (io.debezium.connector.sqlserver.SqlServerStreamingChangeEventSource:258)

[2019-05-14 11:48:38,902] INFO CDC is enabled for table Capture instance "dbo_treq_vehicle" [sourceTableId=db_encompass.dbo.treq_vehicle, changeTableId=db_encompass.cdc.dbo_treq_vehicle_CT, startLsn=0008af20:000002f5:0001, changeTableObjectId=1533352627, stopLsn=NULL] but the table is not whitelisted by connector (io.debezium.connector.sqlserver.SqlServerStreamingChangeEventSource:258)

[2019-05-14 11:48:38,902] INFO CDC is enabled for table Capture instance "dbo_newtable1" [sourceTableId=db_encompass.dbo.newtable1, changeTableId=db_encompass.cdc.dbo_newtable1_CT, startLsn=0008af20:000002f5:0001, changeTableObjectId=2013354337, stopLsn=NULL] but the table is not whitelisted by connector (io.debezium.connector.sqlserver.SqlServerStreamingChangeEventSource:258)

[2019-05-14 11:48:38,902] INFO CDC is enabled for table Capture instance "dbo_newtest2" [sourceTableId=db_encompass.dbo.newtest2, changeTableId=db_encompass.cdc.dbo_newtest2_CT, startLsn=0008af20:000002f5:0001, changeTableObjectId=2141354793, stopLsn=NULL] but the table is not whitelisted by connector (io.debezium.connector.sqlserver.SqlServerStreamingChangeEventSource:258)

[2019-05-14 11:48:38,903] INFO CDC is enabled for table Capture instance "dbo_test1" [sourceTableId=db_encompass.dbo.test1, changeTableId=db_encompass.cdc.dbo_test1_CT, startLsn=0008af20:000002f5:0001, changeTableObjectId=153871715, stopLsn=NULL] but the table is not whitelisted by connector (io.debezium.connector.sqlserver.SqlServerStreamingChangeEventSource:258)

[2019-05-14 11:48:38,903] INFO CDC is enabled for table Capture instance "dbo_testtable1" [sourceTableId=db_encompass.dbo.testtable1, changeTableId=db_encompass.cdc.dbo_testtable1_CT, startLsn=0008af20:000002f5:0001, changeTableObjectId=1757353425, stopLsn=NULL] but the table is not whitelisted by connector (io.debezium.connector.sqlserver.SqlServerStreamingChangeEventSource:258)

[2019-05-14 11:48:38,903] INFO CDC is enabled for table Capture instance "dbo_customer_detail" [sourceTableId=db_encompass.dbo.customer_detail, changeTableId=db_encompass.cdc.dbo_customer_detail_CT, startLsn=0008af20:000002f5:0001, changeTableObjectId=1629352969, stopLsn=NULL] but the table is not whitelisted by connector (io.debezium.connector.sqlserver.SqlServerStreamingChangeEventSource:258)

[2019-05-14 11:48:38,903] INFO Last position recorded in offsets is 0008af22:00003bdb:0001(NULL) (io.debezium.connector.sqlserver.SqlServerStreamingChangeEventSource:94)

[2019-05-14 11:49:33,472] INFO WorkerSourceTask{id=test1-0} Committing offsets (org.apache.kafka.connect.runtime.WorkerSourceTask:398)

[2019-05-14 11:49:33,477] INFO WorkerSourceTask{id=test1-0} flushing 0 outstanding messages for offset commit (org.apache.kafka.connect.runtime.WorkerSourceTask:415)

[2019-05-14 11:50:33,479] INFO WorkerSourceTask{id=test1-0} Committing offsets (org.apache.kafka.connect.runtime.WorkerSourceTask:398)

[2019-05-14 11:50:33,479] INFO WorkerSourceTask{id=test1-0} flushing 0 outstanding messages for offset commit (org.apache.kafka.connect.runtime.WorkerSourceTask:415)

[2019-05-14 11:51:33,480] INFO WorkerSourceTask{id=test1-0} Committing offsets (org.apache.kafka.connect.runtime.WorkerSourceTask:398)

[2019-05-14 11:51:33,480] INFO WorkerSourceTask{id=test1-0} flushing 0 outstanding messages for offset commit (org.apache.kafka.connect.runtime.WorkerSourceTask:415)

[2019-05-14 11:52:33,481] INFO WorkerSourceTask{id=test1-0} Committing offsets (org.apache.kafka.connect.runtime.WorkerSourceTask:398)

[2019-05-14 11:52:33,481] INFO WorkerSourceTask{id=test1-0} flushing 0 outstanding messages for offset commit (org.apache.kafka.connect.runtime.WorkerSourceTask:415)

[2019-05-14 11:53:33,482] INFO WorkerSourceTask{id=test1-0} Committing offsets (org.apache.kafka.connect.runtime.WorkerSourceTask:398)

[2019-05-14 11:53:33,483] INFO WorkerSourceTask{id=test1-0} flushing 0 outstanding messages for offset commit (org.apache.kafka.connect.runtime.WorkerSourceTask:415)

[2019-05-14 11:54:33,484] INFO WorkerSourceTask{id=test1-0} Committing offsets (org.apache.kafka.connect.runtime.WorkerSourceTask:398)

[2019-05-14 11:54:33,485] INFO WorkerSourceTask{id=test1-0} flushing 0 outstanding messages for offset commit (org.apache.kafka.connect.runtime.WorkerSourceTask:415)

[2019-05-14 11:55:33,485] INFO WorkerSourceTask{id=test1-0} Committing offsets (org.apache.kafka.connect.runtime.WorkerSourceTask:398)

[2019-05-14 11:55:33,485] INFO WorkerSourceTask{id=test1-0} flushing 0 outstanding messages for offset commit (org.apache.kafka.connect.runtime.WorkerSourceTask:415)

[2019-05-14 11:56:33,486] INFO WorkerSourceTask{id=test1-0} Committing offsets (org.apache.kafka.connect.runtime.WorkerSourceTask:398)

[2019-05-14 11:56:33,486] INFO WorkerSourceTask{id=test1-0} flushing 0 outstanding messages for offset commit (org.apache.kafka.connect.runtime.WorkerSourceTask:415)

To unsubscribe from this group and stop receiving emails from it, send an email to debezium+u...@googlegroups.com.

To post to this group, send email to debe...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/debezium/a64a3fe0-9b62-4cb7-b157-925cf412c624%40googlegroups.com.

For more options, visit https://groups.google.com/d/optout.
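When reading the streaming part of the log above, it can help to compare positions. Each capture instance reports `startLsn=0008af20:000002f5:0001`, and the connector resumes from `0008af22:00003bdb:0001`. The sketch below assumes the three colon-separated hex fields follow SQL Server's usual LSN layout (VLF sequence number, log block, slot) and compare in order as unsigned integers. `parse_lsn` is a hypothetical helper, not Debezium's own implementation.

```python
def parse_lsn(lsn):
    """Turn an LSN string like '0008af22:00003bdb:0001' into a tuple of ints.

    Assumes three colon-separated hex fields ordered from most to least
    significant, so plain tuple comparison follows log order. The literal
    'NULL' (as in the '(NULL)' change_lsn part of offsets) maps to None.
    """
    if lsn == "NULL":
        return None
    return tuple(int(field, 16) for field in lsn.split(":"))


capture_start = parse_lsn("0008af20:000002f5:0001")  # startLsn of the capture instances
resume_from = parse_lsn("0008af22:00003bdb:0001")    # last position recorded in offsets
print(resume_from > capture_start)  # True
```

In this log, the stored offset is later than the capture instances' start, so the change tables should cover that range. The repeated "flushing 0 outstanding messages" lines fit the fact that no events were emitted: every capture instance listed above was reported as not whitelisted.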

Jiri Pechanec

May 14, 2019, 2:41:44 AM

to debezium

Your whitelist is incorrect. Please look at messages like this one:

[2019-05-14 11:48:38,902] INFO CDC is enabled for table Capture instance "dbo_test2" [sourceTableId=db_encompass.dbo.test2, changeTableId=db_encompass.cdc.dbo_test2_CT, startLsn=0008af20:000002f5:0001, changeTableObjectId=265872114, stopLsn=NULL] but the table is not whitelisted by connector (io.debezium.connector.sqlserver.SqlServerStreamingChangeEventSource:258)

J.
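One quick way to act on this advice is to extract every "not whitelisted" line from the connector log and list the table identifiers the connector skipped. The Python sketch below is a hypothetical helper; `SKIPPED_RE` and `skipped_tables` are illustrative names and are not part of Debezium. It assumes the message text matches the 0.9.x log lines quoted in this thread and may need adjusting for other versions.

```python
import re

# Hypothetical pattern for the 0.9.x INFO line emitted for a table that has CDC
# enabled but is filtered out by table.whitelist.
SKIPPED_RE = re.compile(
    r'Capture instance "(?P<instance>[^"]+)" '
    r'\[sourceTableId=(?P<table>[^,\]]+),.*\] but the table is not whitelisted'
)


def skipped_tables(log_lines):
    """Return (capture_instance, source_table_id) pairs for every
    'not whitelisted' line found in the given log lines."""
    skipped = []
    for line in log_lines:
        match = SKIPPED_RE.search(line)
        if match:
            skipped.append((match.group("instance"), match.group("table")))
    return skipped


sample = [
    '[2019-05-14 11:48:38,903] INFO CDC is enabled for table Capture instance '
    '"dbo_test1" [sourceTableId=db_encompass.dbo.test1, '
    'changeTableId=db_encompass.cdc.dbo_test1_CT, startLsn=0008af20:000002f5:0001, '
    'changeTableObjectId=153871715, stopLsn=NULL] but the table is not '
    'whitelisted by connector',
    '[2019-05-14 11:49:33,472] INFO WorkerSourceTask{id=test1-0} Committing offsets',
]
print(skipped_tables(sample))  # [('dbo_test1', 'db_encompass.dbo.test1')]
```

Applied to the first log in this thread, the helper would list all eight capture instances, including `dbo_test1`. That result confirms `test1` was not matched by the whitelist in effect at that time.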

On Tuesday, May 14, 2019 at 8:34:44 AM UTC+2, Nikhil Mahajan wrote:

Hi,

...

Nikhil Mahajan

May 14, 2019, 2:45:25 AM

to debe...@googlegroups.com

I also checked that, but it's still not working according to the message. Take the test1 table as an example.

In the connector configuration I tried dbo.test1, test1 and dbo_test1, one at a time, but none of them worked. Here is the configuration line:

"table.whitelist": "dbo_test1,test1,dbo.test1",

If you check the message for this table, it is only an INFO message:

[2019-05-14 11:48:38,903] INFO CDC is enabled for table Capture instance "dbo_test1" [sourceTableId=db_encompass.dbo.test1, changeTableId=db_encompass.cdc.dbo_test1_CT, startLsn=0008af20:000002f5:0001, changeTableObjectId=153871715, stopLsn=NULL] but the table is not whitelisted by connector (io.debezium.connector.sqlserver.SqlServerStreamingChangeEventSource:258)
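For comparison, the sketch below shows a minimal registration payload with a single schema-qualified whitelist entry. It is a hypothetical reconstruction from the settings `BaseSourceTask` prints in this thread's logs (host, port, user, database, server name, history topic). The password is a placeholder because the log masks it. The resulting JSON is the `{"name": ..., "config": {...}}` body accepted by the Kafka Connect REST API on `POST /connectors`.

```python
import json

# Hypothetical payload reconstructed from the connector settings logged in
# this thread; the password is a placeholder since the log masks it.
config = {
    "name": "test1",
    "config": {
        "connector.class": "io.debezium.connector.sqlserver.SqlServerConnector",
        "database.hostname": "10.154.51.196",
        "database.port": "1433",
        "database.user": "sql_testdb",
        "database.password": "<password>",
        "database.dbname": "db_encompass",
        "database.server.name": "houncadb5",
        # Schema-qualified table name: <schema>.<table>
        "table.whitelist": "dbo.test1",
        "database.history.kafka.bootstrap.servers": "localhost:9092",
        "database.history.kafka.topic": "test-topic2",
    },
}

payload = json.dumps(config, indent=2)
print(payload)
```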


Jiri Pechanec

May 14, 2019, 3:02:41 AM

to debezium

Hi,

but in the log file above there is

[2019-05-14 11:48:33,520] INFO table.whitelist = test1 (io.debezium.connector.common.BaseSourceTask:45)

And should be `dbo.test1`

J
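According to the Debezium documentation, `table.whitelist` is a comma-separated list of regular expressions. Each expression is matched against fully-qualified table identifiers, which for SQL Server take the form `schemaName.tableName`. The sketch below approximates that check so a candidate value can be tested offline. It is a simplified model, not Debezium's code. Its assumptions: each entry is a case-insensitive regex that must match the whole identifier, and the `catalog.schema.table` form is also tried, because the later log in this thread (`table.whitelist = db_encompass.dbo.test1`) appears to accept it. `is_whitelisted` is a hypothetical name.

```python
import re


def is_whitelisted(whitelist, catalog, schema, table):
    """Approximate whether a table passes a table.whitelist value.

    Assumption: every comma-separated entry is a case-insensitive regex that
    must fully match either "<schema>.<table>" or "<catalog>.<schema>.<table>".
    """
    candidates = [f"{schema}.{table}", f"{catalog}.{schema}.{table}"]
    for entry in whitelist.split(","):
        pattern = re.compile(entry.strip(), re.IGNORECASE)
        if any(pattern.fullmatch(candidate) for candidate in candidates):
            return True
    return False


print(is_whitelisted("test1", "db_encompass", "dbo", "test1"))                   # False
print(is_whitelisted("dbo_test1", "db_encompass", "dbo", "test1"))               # False
print(is_whitelisted("dbo.test1", "db_encompass", "dbo", "test1"))               # True
print(is_whitelisted("db_encompass.dbo.test1", "db_encompass", "dbo", "test1"))  # True
```

The capture instance name `dbo_test1` cannot match under this model, because `_` is a literal character while the identifier contains a dot. In the other direction, the `.` in `dbo.test1` is a regex wildcard. Escape it as `dbo\.test1` if that distinction matters.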

...

Nikhil Mahajan

May 14, 2019, 3:12:51 AM

to debe...@googlegroups.com

That's because those were the logs from when I had set "table.whitelist": "dbo.test1" in the connector configuration.

I have copied the full logs again with the dbo.test1 table. This time, when I check the CDC messages, I don't see any entry for the test1 table.

There is also this message: Finished exporting 8 records for table 'db_encompass.dbo.test1';

But when I try to consume this topic with the console consumer, using the command: bin/kafka-console-consumer --topic test-topic2 --from-beginning --bootstrap-server localhost:9092

I only get the table schema:

{
  "source" : {
    "server" : "cadb5"
  },
  "position" : {
    "commit_lsn" : "0008af22:00003ed4:0001",
    "change_lsn" : "NULL"
  },
  "databaseName" : "db_encompass",
  "schemaName" : "dbo",
  "tableChanges" : [ {
    "type" : "CREATE",
    "id" : "\"db_encompass\".\"dbo\".\"test1\"",
      }, {
        "name" : "name2",
        "jdbcType" : 12,
        "typeName" : "varchar",
        "typeExpression" : "varchar",
        "charsetName" : null,
        "length" : 100,
        "position" : 3,
        "optional" : true,
        "autoIncremented" : false,
        "generated" : false
      } ]
    }
  } ]
}
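One possible reason only schema records appear: `test-topic2` is the topic configured as `database.history.kafka.topic`, so it holds the schema history. Change events for a table go to a separate per-table topic. The log later in this message warns about `houncadb5.dbo.test1`, which fits the naming `<database.server.name>.<schema>.<table>`. The worker is also configured with `AvroConverter` and `schema.registry.url = http://localhost:8081`, so the plain console consumer would print undecoded Avro bytes. Confluent's `kafka-avro-console-consumer` decodes them. The sketch below only builds that topic name and command line. The helper names are hypothetical, and the host and port values are copied from the logs.

```python
import shlex


def change_event_topic(server_name, schema, table):
    # Assumed Debezium SQL Server topic naming: <database.server.name>.<schema>.<table>
    return f"{server_name}.{schema}.{table}"


def avro_consumer_command(topic, bootstrap="localhost:9092",
                          registry="http://localhost:8081"):
    # Confluent's Avro console consumer; the registry URL matches the
    # AvroConverter settings printed in the worker logs.
    return [
        "bin/kafka-avro-console-consumer",
        "--bootstrap-server", bootstrap,
        "--topic", topic,
        "--from-beginning",
        "--property", f"schema.registry.url={registry}",
    ]


topic = change_event_topic("houncadb5", "dbo", "test1")
print(topic)  # houncadb5.dbo.test1
print(" ".join(shlex.quote(arg) for arg in avro_consumer_command(topic)))
```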

[2019-05-14 12:36:35,925] INFO Snapshot step 1 - Preparing (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:102)

[2019-05-14 12:36:35,925] INFO Snapshot step 2 - Determining captured tables (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:113)

[2019-05-14 12:36:36,200] INFO Snapshot step 3 - Locking captured tables (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:120)

[2019-05-14 12:36:38,411] INFO Executing schema locking (io.debezium.connector.sqlserver.SqlServerSnapshotChangeEventSource:114)

[2019-05-14 12:36:38,412] INFO Locking table db_encompass.dbo.test1 (io.debezium.connector.sqlserver.SqlServerSnapshotChangeEventSource:121)

[2019-05-14 12:36:38,673] INFO Snapshot step 4 - Determining snapshot offset (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:126)

[2019-05-14 12:36:38,929] INFO Snapshot step 5 - Reading structure of captured tables (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:129)

[2019-05-14 12:36:38,930] INFO Reading structure of schema 'db_encompass' (io.debezium.connector.sqlserver.SqlServerSnapshotChangeEventSource:167)

[2019-05-14 12:36:46,012] INFO Snapshot step 6 - Persisting schema history (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:133)

[2019-05-14 12:36:46,351] INFO Schema locks released. (io.debezium.connector.sqlserver.SqlServerSnapshotChangeEventSource:139)

[2019-05-14 12:36:46,352] INFO Snapshot step 7 - Snapshotting data (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:145)

[2019-05-14 12:36:46,352] INFO Exporting data from table 'db_encompass.dbo.test1' (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:311)

[2019-05-14 12:36:46,352] INFO For table 'db_encompass.dbo.test1' using select statement: 'SELECT * FROM [dbo].[test1]' (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:314)

[2019-05-14 12:36:46,643] INFO Finished exporting 8 records for table 'db_encompass.dbo.test1'; total duration '00:00:00.289' (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:347)

[2019-05-14 12:36:46,901] INFO Snapshot step 8 - Finalizing (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:174)

[2019-05-14 12:36:47,481] INFO Last position recorded in offsets is 0008af22:00003ed4:0001(NULL) (io.debezium.connector.sqlserver.SqlServerStreamingChangeEventSource:94)

[2019-05-14 12:36:47,833] WARN [Producer clientId=producer-10] Error while fetching metadata with correlation id 3 : {houncadb5.dbo.test1=LEADER_NOT_AVAILABLE} (org.apache.kafka.clients.NetworkClient:1023)

[2019-05-14 12:36:29,757] INFO 0:0:0:0:0:0:0:1 - - [14/May/2019:07:06:29 +0000] "POST /connectors/ HTTP/1.1" 201 491 534 (org.apache.kafka.connect.runtime.rest.RestServer:60)

[2019-05-14 12:36:29,766] INFO Starting task test1-0 (org.apache.kafka.connect.runtime.distributed.DistributedHerder:864)

[2019-05-14 12:36:29,766] INFO Creating task test1-0 (org.apache.kafka.connect.runtime.Worker:386)

[2019-05-14 12:36:29,766] INFO ConnectorConfig values:

config.action.reload = restart

connector.class = io.debezium.connector.sqlserver.SqlServerConnector

errors.log.enable = false

errors.log.include.messages = false

errors.retry.delay.max.ms = 60000

errors.retry.timeout = 0

errors.tolerance = none

header.converter = null

key.converter = null

name = test1

tasks.max = 1

transforms = []

value.converter = null

(org.apache.kafka.connect.runtime.ConnectorConfig:279)

[2019-05-14 12:36:29,766] INFO EnrichedConnectorConfig values:

config.action.reload = restart

connector.class = io.debezium.connector.sqlserver.SqlServerConnector

errors.log.enable = false

errors.log.include.messages = false

errors.retry.delay.max.ms = 60000

errors.retry.timeout = 0

errors.tolerance = none

header.converter = null

key.converter = null

name = test1

tasks.max = 1

transforms = []

value.converter = null

(org.apache.kafka.connect.runtime.ConnectorConfig$EnrichedConnectorConfig:279)

[2019-05-14 12:36:29,767] INFO TaskConfig values:

task.class = class io.debezium.connector.sqlserver.SqlServerConnectorTask

(org.apache.kafka.connect.runtime.TaskConfig:279)

[2019-05-14 12:36:29,767] INFO Instantiated task test1-0 with version 0.9.5.Final of type io.debezium.connector.sqlserver.SqlServerConnectorTask (org.apache.kafka.connect.runtime.Worker:401)

[2019-05-14 12:36:29,767] INFO AvroConverterConfig values:

schema.registry.url = [http://localhost:8081]

basic.auth.user.info = [hidden]

auto.register.schemas = true

max.schemas.per.subject = 1000

basic.auth.credentials.source = URL

schema.registry.basic.auth.user.info = [hidden]

value.subject.name.strategy = class io.confluent.kafka.serializers.subject.TopicNameStrategy

key.subject.name.strategy = class io.confluent.kafka.serializers.subject.TopicNameStrategy

(io.confluent.connect.avro.AvroConverterConfig:179)

[2019-05-14 12:36:29,769] INFO KafkaAvroSerializerConfig values:

schema.registry.url = [http://localhost:8081]

basic.auth.user.info = [hidden]

auto.register.schemas = true

max.schemas.per.subject = 1000

basic.auth.credentials.source = URL

schema.registry.basic.auth.user.info = [hidden]

value.subject.name.strategy = class io.confluent.kafka.serializers.subject.TopicNameStrategy

key.subject.name.strategy = class io.confluent.kafka.serializers.subject.TopicNameStrategy

(io.confluent.kafka.serializers.KafkaAvroSerializerConfig:179)

[2019-05-14 12:36:29,769] INFO KafkaAvroDeserializerConfig values:

schema.registry.url = [http://localhost:8081]

basic.auth.user.info = [hidden]

auto.register.schemas = true

max.schemas.per.subject = 1000

basic.auth.credentials.source = URL

schema.registry.basic.auth.user.info = [hidden]

specific.avro.reader = false

value.subject.name.strategy = class io.confluent.kafka.serializers.subject.TopicNameStrategy

key.subject.name.strategy = class io.confluent.kafka.serializers.subject.TopicNameStrategy

(io.confluent.kafka.serializers.KafkaAvroDeserializerConfig:179)

[2019-05-14 12:36:29,769] INFO AvroDataConfig values:

schemas.cache.config = 1000

enhanced.avro.schema.support = false

connect.meta.data = true

(io.confluent.connect.avro.AvroDataConfig:179)

[2019-05-14 12:36:29,769] INFO Set up the key converter class io.confluent.connect.avro.AvroConverter for task test1-0 using the worker config (org.apache.kafka.connect.runtime.Worker:424)

[2019-05-14 12:36:29,770] INFO AvroConverterConfig values:

schema.registry.url = [http://localhost:8081]

basic.auth.user.info = [hidden]

auto.register.schemas = true

max.schemas.per.subject = 1000

basic.auth.credentials.source = URL

schema.registry.basic.auth.user.info = [hidden]

value.subject.name.strategy = class io.confluent.kafka.serializers.subject.TopicNameStrategy

key.subject.name.strategy = class io.confluent.kafka.serializers.subject.TopicNameStrategy

(io.confluent.connect.avro.AvroConverterConfig:179)

[2019-05-14 12:36:29,770] INFO KafkaAvroSerializerConfig values:

schema.registry.url = [http://localhost:8081]

basic.auth.user.info = [hidden]

auto.register.schemas = true

max.schemas.per.subject = 1000

basic.auth.credentials.source = URL

schema.registry.basic.auth.user.info = [hidden]

value.subject.name.strategy = class io.confluent.kafka.serializers.subject.TopicNameStrategy

key.subject.name.strategy = class io.confluent.kafka.serializers.subject.TopicNameStrategy

(io.confluent.kafka.serializers.KafkaAvroSerializerConfig:179)

[2019-05-14 12:36:29,766] INFO Starting connector test1 (org.apache.kafka.connect.runtime.distributed.DistributedHerder:904)

[2019-05-14 12:36:29,783] INFO ConnectorConfig values:

config.action.reload = restart

connector.class = io.debezium.connector.sqlserver.SqlServerConnector

errors.log.enable = false

errors.log.include.messages = false

errors.retry.delay.max.ms = 60000

errors.retry.timeout = 0

errors.tolerance = none

header.converter = null

key.converter = null

name = test1

tasks.max = 1

transforms = []

value.converter = null

(org.apache.kafka.connect.runtime.ConnectorConfig:279)

[2019-05-14 12:36:29,783] INFO EnrichedConnectorConfig values:

config.action.reload = restart

connector.class = io.debezium.connector.sqlserver.SqlServerConnector

errors.log.enable = false

errors.log.include.messages = false

errors.retry.delay.max.ms = 60000

errors.retry.timeout = 0

errors.tolerance = none

header.converter = null

key.converter = null

name = test1

tasks.max = 1

transforms = []

value.converter = null

(org.apache.kafka.connect.runtime.ConnectorConfig$EnrichedConnectorConfig:279)

[2019-05-14 12:36:29,783] INFO Creating connector test1 of type io.debezium.connector.sqlserver.SqlServerConnector (org.apache.kafka.connect.runtime.Worker:225)

[2019-05-14 12:36:29,783] INFO Instantiated connector test1 with version 0.9.5.Final of type class io.debezium.connector.sqlserver.SqlServerConnector (org.apache.kafka.connect.runtime.Worker:228)

[2019-05-14 12:36:29,784] INFO Finished creating connector test1 (org.apache.kafka.connect.runtime.Worker:247)

[2019-05-14 12:36:29,784] INFO SourceConnectorConfig values:

config.action.reload = restart

connector.class = io.debezium.connector.sqlserver.SqlServerConnector

errors.log.enable = false

errors.log.include.messages = false

errors.retry.delay.max.ms = 60000

errors.retry.timeout = 0

errors.tolerance = none

header.converter = null

key.converter = null

name = test1

tasks.max = 1

transforms = []

value.converter = null

(org.apache.kafka.connect.runtime.SourceConnectorConfig:279)

[2019-05-14 12:36:29,784] INFO EnrichedConnectorConfig values:

config.action.reload = restart

connector.class = io.debezium.connector.sqlserver.SqlServerConnector

errors.log.enable = false

errors.log.include.messages = false

errors.retry.delay.max.ms = 60000

errors.retry.timeout = 0

errors.tolerance = none

header.converter = null

key.converter = null

name = test1

tasks.max = 1

transforms = []

value.converter = null

(org.apache.kafka.connect.runtime.ConnectorConfig$EnrichedConnectorConfig:279)

[2019-05-14 12:36:29,789] INFO KafkaAvroDeserializerConfig values:

schema.registry.url = [http://localhost:8081]

basic.auth.user.info = [hidden]

auto.register.schemas = true

max.schemas.per.subject = 1000

basic.auth.credentials.source = URL

schema.registry.basic.auth.user.info = [hidden]

specific.avro.reader = false

value.subject.name.strategy = class io.confluent.kafka.serializers.subject.TopicNameStrategy

key.subject.name.strategy = class io.confluent.kafka.serializers.subject.TopicNameStrategy

(io.confluent.kafka.serializers.KafkaAvroDeserializerConfig:179)

[2019-05-14 12:36:29,789] INFO AvroDataConfig values:

schemas.cache.config = 1000

enhanced.avro.schema.support = false

connect.meta.data = true

(io.confluent.connect.avro.AvroDataConfig:179)

[2019-05-14 12:36:29,789] INFO Set up the value converter class io.confluent.connect.avro.AvroConverter for task test1-0 using the worker config (org.apache.kafka.connect.runtime.Worker:430)

[2019-05-14 12:36:29,790] INFO Set up the header converter class org.apache.kafka.connect.storage.SimpleHeaderConverter for task test1-0 using the worker config (org.apache.kafka.connect.runtime.Worker:436)

[2019-05-14 12:36:29,790] INFO Initializing: org.apache.kafka.connect.runtime.TransformationChain{} (org.apache.kafka.connect.runtime.Worker:486)

[2019-05-14 12:36:29,791] INFO ProducerConfig values:

[2019-05-14 12:36:29,808] INFO Kafka version: 2.2.0-cp2 (org.apache.kafka.common.utils.AppInfoParser:109)

[2019-05-14 12:36:29,808] INFO Kafka commitId: 325e9879cbc6d612 (org.apache.kafka.common.utils.AppInfoParser:110)

[2019-05-14 12:36:29,824] INFO Starting SqlServerConnectorTask with configuration: (io.debezium.connector.common.BaseSourceTask:43)

[2019-05-14 12:36:29,825] INFO connector.class = io.debezium.connector.sqlserver.SqlServerConnector (io.debezium.connector.common.BaseSourceTask:45)

[2019-05-14 12:36:29,831] INFO database.user = sql_testdb (io.debezium.connector.common.BaseSourceTask:45)

[2019-05-14 12:36:29,832] INFO database.dbname = db_encompass (io.debezium.connector.common.BaseSourceTask:45)

[2019-05-14 12:36:29,832] INFO task.class = io.debezium.connector.sqlserver.SqlServerConnectorTask (io.debezium.connector.common.BaseSourceTask:45)

[2019-05-14 12:36:29,832] INFO database.hostname = 10.154.51.196 (io.debezium.connector.common.BaseSourceTask:45)

[2019-05-14 12:36:29,832] INFO database.history.kafka.bootstrap.servers = localhost:9092 (io.debezium.connector.common.BaseSourceTask:45)

[2019-05-14 12:36:29,832] INFO database.history.kafka.topic = test-topic2 (io.debezium.connector.common.BaseSourceTask:45)

[2019-05-14 12:36:29,832] INFO database.password = ******** (io.debezium.connector.common.BaseSourceTask:45)

[2019-05-14 12:36:29,832] INFO name = test1 (io.debezium.connector.common.BaseSourceTask:45)

[2019-05-14 12:36:29,832] INFO database.server.name = houncadb5 (io.debezium.connector.common.BaseSourceTask:45)

[2019-05-14 12:36:29,832] INFO database.port = 1433 (io.debezium.connector.common.BaseSourceTask:45)

[2019-05-14 12:36:29,833] INFO table.whitelist = db_encompass.dbo.test1 (io.debezium.connector.common.BaseSourceTask:45)

[2019-05-14 12:36:29,898] INFO Cluster ID: KG2QLBvWRDmVs0QlykeK3Q (org.apache.kafka.clients.Metadata:365)

[2019-05-14 12:36:30,767] INFO Tasks [test1-0] configs updated (org.apache.kafka.connect.runtime.distributed.DistributedHerder:1112)

[2019-05-14 12:36:30,768] INFO Finished starting connectors and tasks (org.apache.kafka.connect.runtime.distributed.DistributedHerder:860)

[2019-05-14 12:36:30,768] INFO Rebalance started (org.apache.kafka.connect.runtime.distributed.DistributedHerder:1238)

[2019-05-14 12:36:30,768] INFO Stopping connector test1 (org.apache.kafka.connect.runtime.Worker:328)

[2019-05-14 12:36:30,768] INFO Stopped connector test1 (org.apache.kafka.connect.runtime.Worker:344)

[2019-05-14 12:36:30,768] INFO Stopping task test1-0 (org.apache.kafka.connect.runtime.Worker:588)

[2019-05-14 12:36:31,662] INFO KafkaDatabaseHistory Consumer config: {enable.auto.commit=false, value.deserializer=org.apache.kafka.common.serialization.StringDeserializer, group.id=houncadb5-dbhistory, auto.offset.reset=earliest, session.timeout.ms=10000, bootstrap.servers=localhost:9092, client.id=houncadb5-dbhistory, key.deserializer=org.apache.kafka.common.serialization.StringDeserializer, fetch.min.bytes=1} (io.debezium.relational.history.KafkaDatabaseHistory:164)

[2019-05-14 12:36:31,663] INFO KafkaDatabaseHistory Producer config: {bootstrap.servers=localhost:9092, value.serializer=org.apache.kafka.common.serialization.StringSerializer, buffer.memory=1048576, retries=1, key.serializer=org.apache.kafka.common.serialization.StringSerializer, client.id=houncadb5-dbhistory, linger.ms=0, batch.size=32768, max.block.ms=10000, acks=1} (io.debezium.relational.history.KafkaDatabaseHistory:165)

[2019-05-14 12:36:31,663] INFO ProducerConfig values:

[2019-05-14 12:36:31,666] INFO Kafka version: 2.2.0-cp2 (org.apache.kafka.common.utils.AppInfoParser:109)

[2019-05-14 12:36:31,666] INFO Kafka commitId: 325e9879cbc6d612 (org.apache.kafka.common.utils.AppInfoParser:110)

[2019-05-14 12:36:31,666] INFO ConsumerConfig values:

[2019-05-14 12:36:31,669] INFO Kafka version: 2.2.0-cp2 (org.apache.kafka.common.utils.AppInfoParser:109)

[2019-05-14 12:36:31,669] INFO Kafka commitId: 325e9879cbc6d612 (org.apache.kafka.common.utils.AppInfoParser:110)

[2019-05-14 12:36:31,682] INFO Cluster ID: KG2QLBvWRDmVs0QlykeK3Q (org.apache.kafka.clients.Metadata:365)

[2019-05-14 12:36:31,690] INFO AdminClientConfig values:

[2019-05-14 12:36:31,691] WARN The configuration 'value.serializer' was supplied but isn't a known config. (org.apache.kafka.clients.admin.AdminClientConfig:287)

[2019-05-14 12:36:31,695] WARN The configuration 'batch.size' was supplied but isn't a known config. (org.apache.kafka.clients.admin.AdminClientConfig:287)

[2019-05-14 12:36:31,696] WARN The configuration 'max.block.ms' was supplied but isn't a known config. (org.apache.kafka.clients.admin.AdminClientConfig:287)

[2019-05-14 12:36:31,696] WARN The configuration 'acks' was supplied but isn't a known config. (org.apache.kafka.clients.admin.AdminClientConfig:287)

[2019-05-14 12:36:31,696] WARN The configuration 'buffer.memory' was supplied but isn't a known config. (org.apache.kafka.clients.admin.AdminClientConfig:287)

[2019-05-14 12:36:31,696] WARN The configuration 'key.serializer' was supplied but isn't a known config. (org.apache.kafka.clients.admin.AdminClientConfig:287)

[2019-05-14 12:36:31,697] WARN The configuration 'linger.ms' was supplied but isn't a known config. (org.apache.kafka.clients.admin.AdminClientConfig:287)

[2019-05-14 12:36:31,697] INFO Kafka version: 2.2.0-cp2 (org.apache.kafka.common.utils.AppInfoParser:109)

[2019-05-14 12:36:31,697] INFO Kafka commitId: 325e9879cbc6d612 (org.apache.kafka.common.utils.AppInfoParser:110)

[2019-05-14 12:36:31,735] INFO Database history topic '(name=test-topic2, numPartitions=1, replicationFactor=1, replicasAssignments=null, configs={cleanup.policy=delete, retention.ms=9223372036854775807, retention.bytes=-1})' created (io.debezium.relational.history.KafkaDatabaseHistory:357)

[2019-05-14 12:36:31,773] INFO Cluster ID: KG2QLBvWRDmVs0QlykeK3Q (org.apache.kafka.clients.Metadata:365)

[2019-05-14 12:36:31,900] INFO Requested thread factory for connector SqlServerConnector, id = houncadb5 named = error-handler (io.debezium.util.Threads:250)

[2019-05-14 12:36:31,901] INFO Requested thread factory for connector SqlServerConnector, id = houncadb5 named = change-event-source-coordinator (io.debezium.util.Threads:250)

[2019-05-14 12:36:31,901] INFO Creating thread debezium-sqlserverconnector-houncadb5-change-event-source-coordinator (io.debezium.util.Threads:267)

[2019-05-14 12:36:31,903] INFO WorkerSourceTask{id=test1-0} Source task finished initialization and start (org.apache.kafka.connect.runtime.WorkerSourceTask:200)

[2019-05-14 12:36:31,905] INFO No previous offset has been found (io.debezium.connector.sqlserver.SqlServerSnapshotChangeEventSource:62)

[2019-05-14 12:36:31,905] INFO According to the connector configuration both schema and data will be snapshotted (io.debezium.connector.sqlserver.SqlServerSnapshotChangeEventSource:64)

[2019-05-14 12:36:31,907] INFO Snapshot step 1 - Preparing (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:102)

[2019-05-14 12:36:31,910] INFO Snapshot step 2 - Determining captured tables (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:113)

[2019-05-14 12:36:32,240] INFO Snapshot step 3 - Locking captured tables (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:120)

[2019-05-14 12:36:32,760] INFO Executing schema locking (io.debezium.connector.sqlserver.SqlServerSnapshotChangeEventSource:114)

[2019-05-14 12:36:32,761] INFO Snapshot step 4 - Determining snapshot offset (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:126)

[2019-05-14 12:36:33,017] INFO Snapshot step 5 - Reading structure of captured tables (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:129)

[2019-05-14 12:36:33,019] INFO Snapshot step 6 - Persisting schema history (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:133)

[2019-05-14 12:36:33,275] INFO Schema locks released. (io.debezium.connector.sqlserver.SqlServerSnapshotChangeEventSource:139)

[2019-05-14 12:36:33,275] INFO Snapshot step 7 - Snapshotting data (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:145)

[2019-05-14 12:36:33,532] INFO Snapshot step 8 - Finalizing (io.debezium.relational.HistorizedRelationalSnapshotChangeEventSource:174)

[2019-05-14 12:36:33,794] INFO [Producer clientId=houncadb5-dbhistory] Closing the Kafka producer with timeoutMillis = 9223372036854775807 ms. (org.apache.kafka.clients.producer.KafkaProducer:1139)

[2019-05-14 12:36:33,796] INFO WorkerSourceTask{id=test1-0} Committing offsets (org.apache.kafka.connect.runtime.WorkerSourceTask:398)

[2019-05-14 12:36:33,796] INFO WorkerSourceTask{id=test1-0} flushing 0 outstanding messages for offset commit (org.apache.kafka.connect.runtime.WorkerSourceTask:415)

[2019-05-14 12:36:33,796] INFO [Producer clientId=producer-9] Closing the Kafka producer with timeoutMillis = 30000 ms. (org.apache.kafka.clients.producer.KafkaProducer:1139)

[2019-05-14 12:36:33,804] INFO Finished stopping tasks in preparation for rebalance (org.apache.kafka.connect.runtime.distributed.DistributedHerder:1268)

[2019-05-14 12:36:33,804] INFO [Worker clientId=connect-1, groupId=connect-cluster] (Re-)joining group (org.apache.kafka.clients.consumer.internals.AbstractCoordinator:491)

[2019-05-14 12:36:33,812] INFO [Worker clientId=connect-1, groupId=connect-cluster] Successfully joined group with generation 12 (org.apache.kafka.clients.consumer.internals.AbstractCoordinator:455)

[2019-05-14 12:36:33,812] INFO Joined group and got assignment: Assignment{error=0, leader='connect-1-59301092-51a9-4278-9293-4e642d965cb1', leaderUrl='http://127.0.1.1:8083/', offset=18, connectorIds=[test1], taskIds=[test1-0]} (org.apache.kafka.connect.runtime.distributed.DistributedHerder:1216)

[2019-05-14 12:36:33,812] INFO Starting connectors and tasks using config offset 18 (org.apache.kafka.connect.runtime.distributed.DistributedHerder:850)

[2019-05-14 12:36:33,813] INFO Starting connector test1 (org.apache.kafka.connect.runtime.distributed.DistributedHerder:904)

[2019-05-14 12:36:33,813] INFO ConnectorConfig values:

config.action.reload = restart

connector.class = io.debezium.connector.sqlserver.SqlServerConnector

errors.log.enable = false

errors.log.include.messages = false

errors.retry.delay.max.ms = 60000

errors.retry.timeout = 0

errors.tolerance = none

header.converter = null

key.converter = null

name = test1

tasks.max = 1

transforms = []

value.converter = null

(org.apache.kafka.connect.runtime.ConnectorConfig:279)

[2019-05-14 12:36:33,813] INFO EnrichedConnectorConfig values:

config.action.reload = restart

connector.class = io.debezium.connector.sqlserver.SqlServerConnector

errors.log.enable = false

errors.log.include.messages = false

errors.retry.delay.max.ms = 60000

errors.retry.timeout = 0

errors.tolerance = none