Debezium Oracle connector failed with java.sql.SQLException: ORA-01327: failed to exclusively lock system dictionary as required by build

3,043 views

Skip to first unread message

Rakesh kumar Das

Sep 15, 2021, 2:29:21 PM9/15/21

to debezium

My debezium oracle connector got failed with error (java.sql.SQLException: ORA-01327: failed to exclusively lock system dictionary as required by build) what could be the possible error and how i can avoid it in future.

{

"name": "debezium-connector",

"connector": {

"state": "RUNNING",

"worker_id": "*************:8083"

},

"tasks": [

{

"id": 0,

"state": "FAILED",

"worker_id": "*************:8083",

"trace": "org.apache.kafka.connect.errors.ConnectException: An exception occurred in the change event producer. This connector will be stopped.\n\tat io.debezium.pipeline.ErrorHandler.setProducerThrowable(ErrorHandler.java:42)\n\tat io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:211)\n\tat io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:63)\n\tat io.debezium.pipeline.ChangeEventSourceCoordinator.streamEvents(ChangeEventSourceCoordinator.java:159)\n\tat io.debezium.pipeline.ChangeEventSourceCoordinator.lambda$start$0(ChangeEventSourceCoordinator.java:122)\n\tat java.base/java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:514)\n\tat java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)\n\tat java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1135)\n\tat java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:635)\n\tat java.base/java.lang.Thread.run(Thread.java:844)\nCaused by: java.sql.SQLException: ORA-01327: failed to exclusively lock system dictionary as required by build\nORA-06512: at \"SYS.DBMS_LOGMNR_INTERNAL\", line 7384\nORA-06512: at \"SYS.DBMS_LOGMNR_INTERNAL\", line 7398\nORA-06512: at \"SYS.DBMS_LOGMNR_INTERNAL\", line 7554\nORA-06512: at \"SYS.DBMS_LOGMNR_D\", line 12\nORA-06512: at line 1\n\n\tat oracle.jdbc.driver.T4CTTIoer11.processError(T4CTTIoer11.java:509)\n\tat oracle.jdbc.driver.T4CTTIoer11.processError(T4CTTIoer11.java:461)\n\tat oracle.jdbc.driver.T4C8Oall.processError(T4C8Oall.java:1104)\n\tat oracle.jdbc.driver.T4CTTIfun.receive(T4CTTIfun.java:553)\n\tat oracle.jdbc.driver.T4CTTIfun.doRPC(T4CTTIfun.java:269)\n\tat oracle.jdbc.driver.T4C8Oall.doOALL(T4C8Oall.java:655)\n\tat oracle.jdbc.driver.T4CCallableStatement.doOall8(T4CCallableStatement.java:265)\n\tat oracle.jdbc.driver.T4CCallableStatement.doOall8(T4CCallableStatement.java:86)\n\tat oracle.jdbc.driver.T4CCallableStatement.executeForRows(T4CCallableStatement.java:965)\n\tat oracle.jdbc.driver.OracleStatement.doExecuteWithTimeout(OracleStatement.java:1205)\n\tat oracle.jdbc.driver.OraclePreparedStatement.executeInternal(OraclePreparedStatement.java:3666)\n\tat oracle.jdbc.driver.T4CCallableStatement.executeInternal(T4CCallableStatement.java:1358)\n\tat oracle.jdbc.driver.OraclePreparedStatement.execute(OraclePreparedStatement.java:3778)\n\tat oracle.jdbc.driver.OracleCallableStatement.execute(OracleCallableStatement.java:4251)\n\tat oracle.jdbc.driver.OraclePreparedStatementWrapper.execute(OraclePreparedStatementWrapper.java:1081)\n\tat io.debezium.connector.oracle.logminer.LogMinerHelper.executeCallableStatement(LogMinerHelper.java:701)\n\tat io.debezium.connector.oracle.logminer.LogMinerHelper.buildDataDictionary(LogMinerHelper.java:99)\n\tat io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.initializeRedoLogsForMining(LogMinerStreamingChangeEventSource.java:246)\n\tat io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:167)\n\t... 8 more\nCaused by: Error : 1327, Position : 0, Sql = BEGIN DBMS_LOGMNR_D.BUILD (options => DBMS_LOGMNR_D.STORE_IN_REDO_LOGS); END;, OriginalSql = BEGIN DBMS_LOGMNR_D.BUILD (options => DBMS_LOGMNR_D.STORE_IN_REDO_LOGS); END;, Error Msg = ORA-01327: failed to exclusively lock system dictionary as required by build\nORA-06512: at \"SYS.DBMS_LOGMNR_INTERNAL\", line 7384\nORA-06512: at \"SYS.DBMS_LOGMNR_INTERNAL\", line 7398\nORA-06512: at \"SYS.DBMS_LOGMNR_INTERNAL\", line 7554\nORA-06512: at \"SYS.DBMS_LOGMNR_D\", line 12\nORA-06512: at line 1\n\n\tat oracle.jdbc.driver.T4CTTIoer11.processError(T4CTTIoer11.java:513)\n\t... 26 more\n"

}

],

"type": "source"

}

Chris Cranford

Sep 15, 2021, 5:02:55 PM9/15/21

to debe...@googlegroups.com, Rakesh kumar Das

Hi Rakesh -

Typically when Oracle throws the ORA-01327 error, this means that a concurrent DDL operation is in progress and is blocking access to the data dictionary. Normally this should not be a problem with LogMiner as it has an internal retry mechanism after a small timeout window when a BUILD is requested; however if LogMiner continues to be denied access to the data dictionary, it will eventually error with this message.

I suppose the one takeaway here might be that when we detect the ORA-01327 error that we automatically mark the exception as retriable and we allow Kafka Connect / Debezium Server to restart the connector safely. Could you open a Jira enhancement request with this error code and message to be allowed to be retried by the connector?

Thanks,

CC

Typically when Oracle throws the ORA-01327 error, this means that a concurrent DDL operation is in progress and is blocking access to the data dictionary. Normally this should not be a problem with LogMiner as it has an internal retry mechanism after a small timeout window when a BUILD is requested; however if LogMiner continues to be denied access to the data dictionary, it will eventually error with this message.

I suppose the one takeaway here might be that when we detect the ORA-01327 error that we automatically mark the exception as retriable and we allow Kafka Connect / Debezium Server to restart the connector safely. Could you open a Jira enhancement request with this error code and message to be allowed to be retried by the connector?

Thanks,

CC

Chris Cranford

Sep 16, 2021, 11:16:11 AM9/16/21

to debe...@googlegroups.com, Rakesh kumar Das

Rakesh kumar Das

Sep 16, 2021, 10:01:11 PM9/16/21

to Chris Cranford, debe...@googlegroups.com

Thanks Chris.

Rakesh kumar Das

Sep 17, 2021, 2:59:10 AM9/17/21

to Chris Cranford, debe...@googlegroups.com

Hi Chris,

We received one more new error i.e mentioned below. What be the possible issue and how we can avoid it.

org.apache.kafka.connect.errors.ConnectException: An exception occurred in the change event producer. This connector will be stopped.\n\tat io.debezium.pipeline.ErrorHandler.setProducerThrowable(ErrorHandler.java:42)\n\tat io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:211)\n\tat io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:63)\n\tat io.debezium.pipeline.ChangeEventSourceCoordinator.streamEvents(ChangeEventSourceCoordinator.java:159)\n\tat io.debezium.pipeline.ChangeEventSourceCoordinator.lambda$start$0(ChangeEventSourceCoordinator.java:122)\n\tat java.base/java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:514)\n\tat java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)\n\tat java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1135)\n\tat java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:635)\n\tat java.base/java.lang.Thread.run(Thread.java:844)\nCaused by: java.sql.SQLException: ORA-01343: LogMiner encountered corruption in the logstream\n\n\tat oracle.jdbc.driver.T4CTTIoer11.processError(T4CTTIoer11.java:509)\n\tat oracle.jdbc.driver.T4CTTIoer11.processError(T4CTTIoer11.java:461)\n\tat oracle.jdbc.driver.T4C8Oall.processError(T4C8Oall.java:1104)\n\tat oracle.jdbc.driver.T4CTTIfun.receive(T4CTTIfun.java:553)\n\tat oracle.jdbc.driver.T4CTTIfun.doRPC(T4CTTIfun.java:269)\n\tat oracle.jdbc.driver.T4C8Oall.doOALL(T4C8Oall.java:655)\n\tat oracle.jdbc.driver.T4CPreparedStatement.doOall8(T4CPreparedStatement.java:270)\n\tat oracle.jdbc.driver.T4CPreparedStatement.doOall8(T4CPreparedStatement.java:91)\n\tat oracle.jdbc.driver.T4CPreparedStatement.executeForRows(T4CPreparedStatement.java:970)\n\tat oracle.jdbc.driver.OracleStatement.executeMaybeDescribe(OracleStatement.java:1012)\n\tat oracle.jdbc.driver.OracleStatement.doExecuteWithTimeout(OracleStatement.java:1168)\n\tat oracle.jdbc.driver.OraclePreparedStatement.executeInternal(OraclePreparedStatement.java:3666)\n\tat oracle.jdbc.driver.T4CPreparedStatement.executeInternal(T4CPreparedStatement.java:1426)\n\tat oracle.jdbc.driver.OraclePreparedStatement.executeQuery(OraclePreparedStatement.java:3713)\n\tat oracle.jdbc.driver.OraclePreparedStatementWrapper.executeQuery(OraclePreparedStatementWrapper.java:1167)\n\tat io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:184)\n\t... 8 more\nCaused by: Error : 1343, Position : 0, Sql = SELECT SCN, SQL_REDO, OPERATION_CODE, TIMESTAMP, XID, CSF, TABLE_NAME, SEG_OWNER, OPERATION, USERNAME, ROW_ID, ROLLBACK, RS_ID, ORA_HASH(SCN||OPERATION||RS_ID||SEQUENCE#||RTRIM(SUBSTR(SQL_REDO,1,256))) FROM V$LOGMNR_CONTENTS WHERE SCN > :1 AND SCN <= :2 AND ((OPERATION_CODE IN (6,7,34,36) OR (OPERATION_CODE = 5 AND USERNAME NOT IN ('SYS','SYSTEM','RKPAYDEBEZIUM_USER') AND INFO NOT LIKE 'INTERNAL DDL%' AND (TABLE_NAME IS NULL OR TABLE_NAME NOT LIKE 'ORA_TEMP_%')) ) OR (OPERATION_CODE IN (1,2,3) AND TABLE_NAME != 'LOG_MINING_FLUSH' AND SEG_OWNER NOT IN ('APPQOSSYS','AUDSYS','CTXSYS','DVSYS','DBSFWUSER','DBSNMP','GSMADMIN_INTERNAL','LBACSYS','MDSYS','OJVMSYS','OLAPSYS','ORDDATA','ORDSYS','OUTLN','SYS','SYSTEM','WMSYS','XDB') AND (REGEXP_LIKE(SEG_OWNER || '.' || TABLE_NAME,'^RKPAYADMIN.PAYMENT$','i') OR REGEXP_LIKE(SEG_OWNER || '.' || TABLE_NAME,'^RKPAYADMIN.MERCHANT$','i')) )), OriginalSql = SELECT SCN, SQL_REDO, OPERATION_CODE, TIMESTAMP, XID, CSF, TABLE_NAME, SEG_OWNER, OPERATION, USERNAME, ROW_ID, ROLLBACK, RS_ID, ORA_HASH(SCN||OPERATION||RS_ID||SEQUENCE#||RTRIM(SUBSTR(SQL_REDO,1,256))) FROM V$LOGMNR_CONTENTS WHERE SCN > ? AND SCN <= ? AND ((OPERATION_CODE IN (6,7,34,36) OR (OPERATION_CODE = 5 AND USERNAME NOT IN ('SYS','SYSTEM','RKPAYDEBEZIUM_USER') AND INFO NOT LIKE 'INTERNAL DDL%' AND (TABLE_NAME IS NULL OR TABLE_NAME NOT LIKE 'ORA_TEMP_%')) ) OR (OPERATION_CODE IN (1,2,3) AND TABLE_NAME != 'LOG_MINING_FLUSH' AND SEG_OWNER NOT IN ('APPQOSSYS','AUDSYS','CTXSYS','DVSYS','DBSFWUSER','DBSNMP','GSMADMIN_INTERNAL','LBACSYS','MDSYS','OJVMSYS','OLAPSYS','ORDDATA','ORDSYS','OUTLN','SYS','SYSTEM','WMSYS','XDB') AND (REGEXP_LIKE(SEG_OWNER || '.' || TABLE_NAME,'^RKPAYADMIN.PAYMENT$','i') OR REGEXP_LIKE(SEG_OWNER || '.' || TABLE_NAME,'^RKPAYADMIN.MERCHANT$','i')) )), Error Msg = ORA-01343: LogMiner encountered corruption in the logstream\n\n\tat oracle.jdbc.driver.T4CTTIoer11.processError(T4CTTIoer11.java:513)\n\t... 23 more\n"

org.apache.kafka.connect.errors.ConnectException: An exception occurred in the change event producer. This connector will be stopped.\n\tat io.debezium.pipeline.ErrorHandler.setProducerThrowable(ErrorHandler.java:42)\n\tat io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:211)\n\tat io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:63)\n\tat io.debezium.pipeline.ChangeEventSourceCoordinator.streamEvents(ChangeEventSourceCoordinator.java:159)\n\tat io.debezium.pipeline.ChangeEventSourceCoordinator.lambda$start$0(ChangeEventSourceCoordinator.java:122)\n\tat java.base/java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:514)\n\tat java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)\n\tat java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1135)\n\tat java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:635)\n\tat java.base/java.lang.Thread.run(Thread.java:844)\nCaused by: java.sql.SQLException: ORA-01343: LogMiner encountered corruption in the logstream\n\n\tat oracle.jdbc.driver.T4CTTIoer11.processError(T4CTTIoer11.java:509)\n\tat oracle.jdbc.driver.T4CTTIoer11.processError(T4CTTIoer11.java:461)\n\tat oracle.jdbc.driver.T4C8Oall.processError(T4C8Oall.java:1104)\n\tat oracle.jdbc.driver.T4CTTIfun.receive(T4CTTIfun.java:553)\n\tat oracle.jdbc.driver.T4CTTIfun.doRPC(T4CTTIfun.java:269)\n\tat oracle.jdbc.driver.T4C8Oall.doOALL(T4C8Oall.java:655)\n\tat oracle.jdbc.driver.T4CPreparedStatement.doOall8(T4CPreparedStatement.java:270)\n\tat oracle.jdbc.driver.T4CPreparedStatement.doOall8(T4CPreparedStatement.java:91)\n\tat oracle.jdbc.driver.T4CPreparedStatement.executeForRows(T4CPreparedStatement.java:970)\n\tat oracle.jdbc.driver.OracleStatement.executeMaybeDescribe(OracleStatement.java:1012)\n\tat oracle.jdbc.driver.OracleStatement.doExecuteWithTimeout(OracleStatement.java:1168)\n\tat oracle.jdbc.driver.OraclePreparedStatement.executeInternal(OraclePreparedStatement.java:3666)\n\tat oracle.jdbc.driver.T4CPreparedStatement.executeInternal(T4CPreparedStatement.java:1426)\n\tat oracle.jdbc.driver.OraclePreparedStatement.executeQuery(OraclePreparedStatement.java:3713)\n\tat oracle.jdbc.driver.OraclePreparedStatementWrapper.executeQuery(OraclePreparedStatementWrapper.java:1167)\n\tat io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:184)\n\t... 8 more\nCaused by: Error : 1343, Position : 0, Sql = SELECT SCN, SQL_REDO, OPERATION_CODE, TIMESTAMP, XID, CSF, TABLE_NAME, SEG_OWNER, OPERATION, USERNAME, ROW_ID, ROLLBACK, RS_ID, ORA_HASH(SCN||OPERATION||RS_ID||SEQUENCE#||RTRIM(SUBSTR(SQL_REDO,1,256))) FROM V$LOGMNR_CONTENTS WHERE SCN > :1 AND SCN <= :2 AND ((OPERATION_CODE IN (6,7,34,36) OR (OPERATION_CODE = 5 AND USERNAME NOT IN ('SYS','SYSTEM','RKPAYDEBEZIUM_USER') AND INFO NOT LIKE 'INTERNAL DDL%' AND (TABLE_NAME IS NULL OR TABLE_NAME NOT LIKE 'ORA_TEMP_%')) ) OR (OPERATION_CODE IN (1,2,3) AND TABLE_NAME != 'LOG_MINING_FLUSH' AND SEG_OWNER NOT IN ('APPQOSSYS','AUDSYS','CTXSYS','DVSYS','DBSFWUSER','DBSNMP','GSMADMIN_INTERNAL','LBACSYS','MDSYS','OJVMSYS','OLAPSYS','ORDDATA','ORDSYS','OUTLN','SYS','SYSTEM','WMSYS','XDB') AND (REGEXP_LIKE(SEG_OWNER || '.' || TABLE_NAME,'^RKPAYADMIN.PAYMENT$','i') OR REGEXP_LIKE(SEG_OWNER || '.' || TABLE_NAME,'^RKPAYADMIN.MERCHANT$','i')) )), OriginalSql = SELECT SCN, SQL_REDO, OPERATION_CODE, TIMESTAMP, XID, CSF, TABLE_NAME, SEG_OWNER, OPERATION, USERNAME, ROW_ID, ROLLBACK, RS_ID, ORA_HASH(SCN||OPERATION||RS_ID||SEQUENCE#||RTRIM(SUBSTR(SQL_REDO,1,256))) FROM V$LOGMNR_CONTENTS WHERE SCN > ? AND SCN <= ? AND ((OPERATION_CODE IN (6,7,34,36) OR (OPERATION_CODE = 5 AND USERNAME NOT IN ('SYS','SYSTEM','RKPAYDEBEZIUM_USER') AND INFO NOT LIKE 'INTERNAL DDL%' AND (TABLE_NAME IS NULL OR TABLE_NAME NOT LIKE 'ORA_TEMP_%')) ) OR (OPERATION_CODE IN (1,2,3) AND TABLE_NAME != 'LOG_MINING_FLUSH' AND SEG_OWNER NOT IN ('APPQOSSYS','AUDSYS','CTXSYS','DVSYS','DBSFWUSER','DBSNMP','GSMADMIN_INTERNAL','LBACSYS','MDSYS','OJVMSYS','OLAPSYS','ORDDATA','ORDSYS','OUTLN','SYS','SYSTEM','WMSYS','XDB') AND (REGEXP_LIKE(SEG_OWNER || '.' || TABLE_NAME,'^RKPAYADMIN.PAYMENT$','i') OR REGEXP_LIKE(SEG_OWNER || '.' || TABLE_NAME,'^RKPAYADMIN.MERCHANT$','i')) )), Error Msg = ORA-01343: LogMiner encountered corruption in the logstream\n\n\tat oracle.jdbc.driver.T4CTTIoer11.processError(T4CTTIoer11.java:513)\n\t... 23 more\n"

Chris Cranford

Sep 17, 2021, 10:03:22 AM9/17/21

to Rakesh kumar Das, debe...@googlegroups.com

Hi Rakesh -

This error indicates that the mining range that the connector attempted to mine requires access to an archive/redo log that is no longer available. Typically this is a result of the connector needing access to an archive/redo log that has been removed prematurely. If you enable DEBUG logging, the connector will dump some database state to the logs and part of that state includes the output from V$LOGMNR_LOGS and V$LOGMNR_PARAMETERS. This output will tell you specifically which log is missing. If you have a backup of those logs you can restore them and restart the connector; however if the logs are no longer available, you will need to re-snapshot.

Thanks,

Chris

This error indicates that the mining range that the connector attempted to mine requires access to an archive/redo log that is no longer available. Typically this is a result of the connector needing access to an archive/redo log that has been removed prematurely. If you enable DEBUG logging, the connector will dump some database state to the logs and part of that state includes the output from V$LOGMNR_LOGS and V$LOGMNR_PARAMETERS. This output will tell you specifically which log is missing. If you have a backup of those logs you can restore them and restart the connector; however if the logs are no longer available, you will need to re-snapshot.

Thanks,

Chris

Rakesh kumar Das

Sep 17, 2021, 3:00:26 PM9/17/21

to Chris Cranford, debe...@googlegroups.com

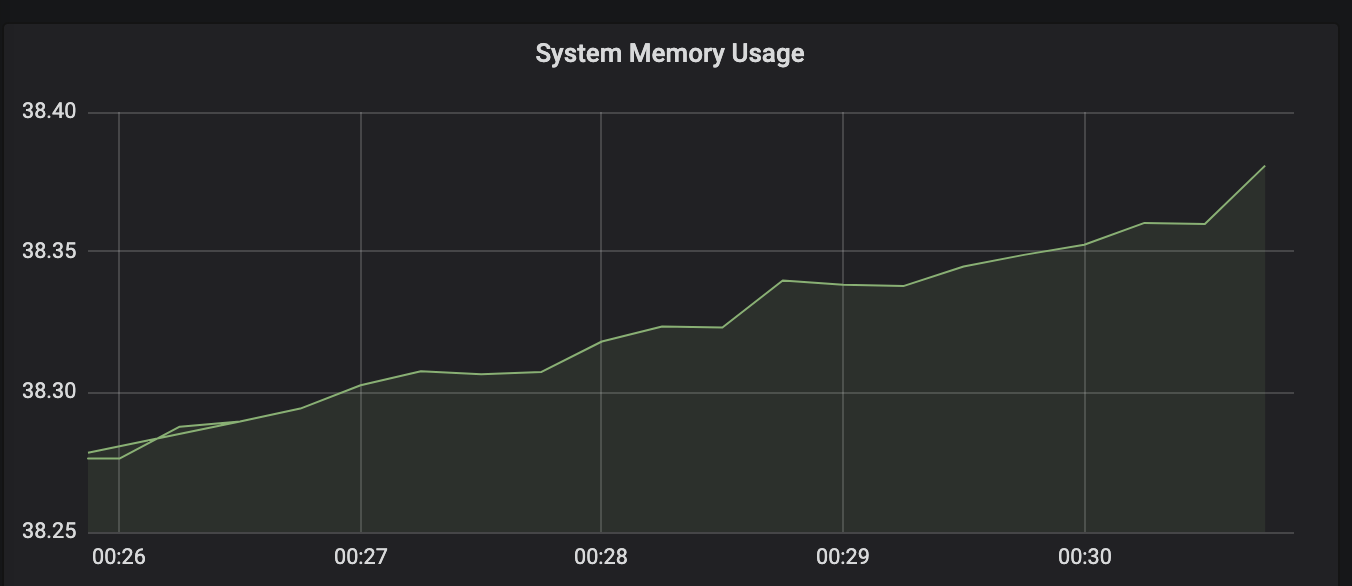

Thank you for your response. Also need one more help from you as we are have a kafka connect of cluster setup but my system memory keeps increasing. Even after having the proper retention on topics. What could be the possible error.

Thanks in Advance.

Rakesh kumar Das

Sep 17, 2021, 3:02:19 PM9/17/21

to Chris Cranford, debe...@googlegroups.com

PFA

Chris Cranford

Sep 20, 2021, 10:45:13 AM9/20/21

to Rakesh kumar Das, debe...@googlegroups.com

Hi Rakesh -

There is a known problem where the connector's heap continues to grow unbounded particularly fast on high volume systems with how the TransactionCache stores in-progress transactions. We're actively looking into this as a part of DBZ-3808 [1].

Thanks,

Chris

[1]: https://issues.redhat.com/browse/DBZ-3808

There is a known problem where the connector's heap continues to grow unbounded particularly fast on high volume systems with how the TransactionCache stores in-progress transactions. We're actively looking into this as a part of DBZ-3808 [1].

Thanks,

Chris

[1]: https://issues.redhat.com/browse/DBZ-3808

Chris Cranford

Sep 20, 2021, 11:15:12 AM9/20/21

to Rakesh kumar Das, debe...@googlegroups.com

HI Rakesh -

The only workaround currently would be to restart the connector.

HTH,

CC

The only workaround currently would be to restart the connector.

HTH,

CC

Rakesh kumar Das

Sep 24, 2021, 3:10:07 AM9/24/21

to Chris Cranford, debe...@googlegroups.com

Hi Chris,

I have one more query, right now we are seeing lot of delay in pushing data to topic i.e around ~1.5 days. How can we use our connector or reduce the latency. Is it due to the configuration we used in connector or any other thing we need to look into. My connector conf is below.

{

"name": "debezium-schemaonly-connector-1.3",

"config": {

"connector.class" : "io.debezium.connector.oracle.OracleConnector",

"tasks.max" : "1",

"database.hostname" : "****************",

"database.port" : "2028",

"database.user" : "****************",

"database.password" : "****************",

"database.dbname" : "****************",

"database.history.kafka.bootstrap.servers" : "****************",

"database.history.kafka.topic": "****************",

"database.version": "12.1.0.2.0",

"include.schema.changes": "true",

"table.whitelist": "****************",

"errors.log.enable": "true",

"topic.creation.default.replication.factor": "3",

"topic.creation.default.partitions": "10",

"topic.creation.default.cleanup.policy": "delete",

"topic.creation.default.compression.type": "lz4",

"topic.creation.groups": "oracle",

"topic.creation.oracle.include": "****************",

"topic.creation.oracle.replication.factor": "3",

"topic.creation.oracle.partitions": "20",

"topic.creation.oracle.cleanup.policy": "delete",

"topic.creation.oracle.retention.ms": "1200000",

"topic.creation.oracle.retention.bytes": "300000000",

"topic.creation.oracle.compression.type": "producer",

"key.converter.schemas.enable":"false",

"value.converter.schemas.enable":"false",

"key.converter":"org.apache.kafka.connect.json.JsonConverter",

"value.converter":"org.apache.kafka.connect.json.JsonConverter",

"topic.creation.enable":"true",

"database.allowPublicKeyRetrieval":"true",

"decimal.handling.mode" : "double",

"max.queue.size" : "819200",

"max.batch.size" : "204800",

"poll.interval.ms" : "10",

"log.mining.batch.size.min" : "10",

"log.mining.batch.size.max" : "1000000",

"log.mining.batch.size.default" : "20000",

"log.mining.sleep.time.min.ms" : "0",

"log.mining.sleep.time.max.ms" : "300",

"log.mining.sleep.time.default.ms" : "10",

"log.mining.view.fetch.size" : "100000",

"database.history.skip.unparseable.ddl" : "true",

"event.processing.failure.handling.mode" : "warn",

"snapshot.mode" : "schema_only"

}

}

Chris Cranford

Sep 24, 2021, 11:20:43 AM9/24/21

to Rakesh kumar Das, debe...@googlegroups.com

Hi Rakesh -

Nothing in the connector configuration looks really out of the ordinary. Most often latency is a product of Oracle tuning. What version of Debezium are you using? Also, if you have tools to track JMX metrics for the connector, I would suggest checking these metrics:

- SwitchCounter

- MaxDurationOfFetchQueryInMilliseconds

- TotalParseTimeInMilliseconds

- TotalMiningSessionStartTimeInMilliseconds

- MaxMiningSessionStartTimeInMilliseconds

- TotalProcessingTimeInMilliseconds

- TotalProcessedRows

- NumberOfActiveTransactions

- LagFromSourceInMilliseconds

If you notice large jumps in the SwitchCounter metric, that chances are your redo logs are sized too small and causing an unnecessary number of log switches. When a log switch occurs not only does the Oracle LGWR and ARC process must coordinate switching the redo log reference and archiving the log to disk, but this leads to lots of Disk IO which can be a concern if archive logs are written to the same disk as the redo logs. But more importantly as it relates to the connector, each log switch forces the connector to stop & restart the mining session. This means that Oracle LogMiner will need to re-evaluate SCN ranges as a part of the mining session, verify if the logs are available, and prepare any necessary metadata for the session. When using the default mining strategy, part of the metadata collection is to build and gather the data dictionary from the redo logs. This can take anywhere from 20s to a few minutes to complete depending on the size of the data dictionary and how fast data can be read from disk. So minimizing log switches would be the first thing I would check and verify isn't a problem.

Now in some environments, high archive log generation is normal and there isn't much you can do about it. To speed up the connector, you can use the online_catalog mining strategy, but this comes at a risk. This strategy does not support tracking DDL changes to tables and so we don't expect that you will change the structure of the tables you're capturing changes for when using this method. So if your tables are static and won't change, this is certainly a viable option and will greatly improve the performance of Oracle LogMiner start-up since the data dictionary won't be written to the redo logs and won't need to be read during the metadata collection phase of each new mining session.

Next I would look at the MaxDurationOfFetchQueryInMilliseconds and see how this value fairs. You have a pretty large fetch size specified so you want to make sure that the larger fetch size doesn't translate into forcing the query to take too long to return initially so the first set of LogMiner results can be processed by the connector. If you notice the duration is too high, you can reduce the fetch size.

Lastly, could you ask your DBA to provide you the output from this SQL:

SELECT GROUP#, bytes/1024/1024 AS size_mb, archived, status, first_change#, next_change# FROM v$log;

Thanks,

Chris

Nothing in the connector configuration looks really out of the ordinary. Most often latency is a product of Oracle tuning. What version of Debezium are you using? Also, if you have tools to track JMX metrics for the connector, I would suggest checking these metrics:

- SwitchCounter

- MaxDurationOfFetchQueryInMilliseconds

- TotalParseTimeInMilliseconds

- TotalMiningSessionStartTimeInMilliseconds

- MaxMiningSessionStartTimeInMilliseconds

- TotalProcessingTimeInMilliseconds

- TotalProcessedRows

- NumberOfActiveTransactions

- LagFromSourceInMilliseconds

If you notice large jumps in the SwitchCounter metric, that chances are your redo logs are sized too small and causing an unnecessary number of log switches. When a log switch occurs not only does the Oracle LGWR and ARC process must coordinate switching the redo log reference and archiving the log to disk, but this leads to lots of Disk IO which can be a concern if archive logs are written to the same disk as the redo logs. But more importantly as it relates to the connector, each log switch forces the connector to stop & restart the mining session. This means that Oracle LogMiner will need to re-evaluate SCN ranges as a part of the mining session, verify if the logs are available, and prepare any necessary metadata for the session. When using the default mining strategy, part of the metadata collection is to build and gather the data dictionary from the redo logs. This can take anywhere from 20s to a few minutes to complete depending on the size of the data dictionary and how fast data can be read from disk. So minimizing log switches would be the first thing I would check and verify isn't a problem.

Now in some environments, high archive log generation is normal and there isn't much you can do about it. To speed up the connector, you can use the online_catalog mining strategy, but this comes at a risk. This strategy does not support tracking DDL changes to tables and so we don't expect that you will change the structure of the tables you're capturing changes for when using this method. So if your tables are static and won't change, this is certainly a viable option and will greatly improve the performance of Oracle LogMiner start-up since the data dictionary won't be written to the redo logs and won't need to be read during the metadata collection phase of each new mining session.

Next I would look at the MaxDurationOfFetchQueryInMilliseconds and see how this value fairs. You have a pretty large fetch size specified so you want to make sure that the larger fetch size doesn't translate into forcing the query to take too long to return initially so the first set of LogMiner results can be processed by the connector. If you notice the duration is too high, you can reduce the fetch size.

Lastly, could you ask your DBA to provide you the output from this SQL:

SELECT GROUP#, bytes/1024/1024 AS size_mb, archived, status, first_change#, next_change# FROM v$log;

Thanks,

Chris

Rakesh kumar Das

Sep 27, 2021, 7:11:05 AM9/27/21

to Chris Cranford, debe...@googlegroups.com

Hi Chris,

Is there any way to use an oracle standby machine to use as a datasource. As standby doesn't provide any write access so the connector doesn't work in such use cases. Is there any way to store the LOG_MINING_FLUSH table in kafka so we can use standby as our datasource.

Chris Cranford

Sep 27, 2021, 8:37:14 AM9/27/21

to Rakesh kumar Das, debe...@googlegroups.com

Hi Rakesh -

Unfortunately a standby (physical or logical) cannot be used with Debezium for Oracle. We're aware of the limitation and need for this and its tracked in DBZ-3866 [1].

Thanks

Chris

[1]: https://issues.redhat.com/browse/DBZ-3866

Unfortunately a standby (physical or logical) cannot be used with Debezium for Oracle. We're aware of the limitation and need for this and its tracked in DBZ-3866 [1].

Thanks

Chris

[1]: https://issues.redhat.com/browse/DBZ-3866

Rakesh kumar Das

Oct 7, 2021, 9:57:55 AM10/7/21

to Chris Cranford, debe...@googlegroups.com

Hi Chris,

Yesterday we got the below error. Please let us know how we can avoid it in future.

org.apache.kafka.connect.errors.ConnectException: An exception occurred in the change event producer. This connector will be stopped.\n\tat io.debezium.pipeline.ErrorHandler.setProducerThrowable(ErrorHandler.java:42)\n\tat io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:211)\n\tat io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:63)\n\tat io.debezium.pipeline.ChangeEventSourceCoordinator.streamEvents(ChangeEventSourceCoordinator.java:159)\n\tat io.debezium.pipeline.ChangeEventSourceCoordinator.lambda$start$0(ChangeEventSourceCoordinator.java:122)\n\tat java.base/java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:514)\n\tat java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)\n\tat java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1135)\n\tat java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:635)\n\tat java.base/java.lang.Thread.run(Thread.java:844)\nCaused by: java.lang.IllegalStateException: None of log files contains offset SCN: 1631225069667, re-snapshot is required.\n\tat io.debezium.connector.oracle.logminer.LogMinerHelper.setLogFilesForMining(LogMinerHelper.java:480)\n\tat io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.initializeRedoLogsForMining(LogMinerStreamingChangeEventSource.java:248)\n\tat io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:167)\n\t... 8 more\n"

Chris Cranford

Oct 7, 2021, 10:27:50 AM10/7/21

to Rakesh kumar Das, debe...@googlegroups.com

Hi Rakesh -

If you restarted the connector and it continues to fail with a similar message, then that indicates that the SCN 1631225069667 is in a log that has been deleted or cannot be read from disk. As the message indicates, unfortunately you need to re-take a snapshot to avoid any data loss in the topics since the logs the connector needs aren't available to continue from where it left off.

Chris

If you restarted the connector and it continues to fail with a similar message, then that indicates that the SCN 1631225069667 is in a log that has been deleted or cannot be read from disk. As the message indicates, unfortunately you need to re-take a snapshot to avoid any data loss in the topics since the logs the connector needs aren't available to continue from where it left off.

Chris

Rakesh kumar Das

Oct 20, 2021, 9:17:36 AM10/20/21

to Chris Cranford, debe...@googlegroups.com

Hi Chris,

We are having some data loss in our source connector. Not sure if it's due to the source or sink connector. Please check the below difference hour-wise.

| Hour | Mysql Count | Oracle Count | Diff |

| 5 | 6815 | 6815 | 0 |

| 6 | 22372 | 22379 | 7 |

| 7 | 59381 | 59737 | 356 |

| 8 | 82761 | 83617 | 856 |

| 9 | 55447 | 55890 | 443 |

| 10 | 46945 | 46946 | 1 |

| 11 | 64561 | 64591 | 30 |

| 12 | 115479 | 123698 | 8219 |

| 13 | 72980 | 73027 | 47 |

| 14 | 61329 | 61329 | 0 |

| 15 | 61155 | 61155 | 0 |

| 16 | 61016 | 61016 | 0 |

| 17 | 76354 | 76756 | 402 |

| 18 | 92669 | 93152 | 483 |

| 19 | 80078 | 80078 | 0 |

| 20 | 60161 | 60161 | 0 |

Our source is Oracle and the sink is at Mysql.

Also we are using "database.history.skip.unparseable.ddl": "true" in our source connector. Please help us with how can we avoid data loss. If you need any more details please let me know.

Also we are using "database.history.skip.unparseable.ddl": "true" in our source connector. Please help us with how can we avoid data loss. If you need any more details please let me know.

Chris Cranford

Oct 20, 2021, 11:28:45 AM10/20/21

to Rakesh kumar Das, debe...@googlegroups.com

Hi Rakesh -

I'm not sure from our discussion here which version of Debezium you're on so its possible you've stumbled onto a bug we've since fixed. There are two situations that come to mind that I know of that we've addressed.

The first was a situation where the flushing of the Oracle in-memory LGWR buffer to redo logs could be delayed due to disk IO time and this lead to a situation where events that were of interest and close to the edge of the end of the mining session boundary could be missed. In DBZ-4067 [1] we addressed this by specifically tracking the last processed SCN and calculating future points from this point.

The second was a regression introduced in Debezium 1.6 to support LOB columns. When LOB support is enabled, there is a need to handle mining sessions a bit differently and this logic required some duplicate detection. We explored using a hashing algorithm hoping that would suffice but as indicated DBZ-3834 [2], it was unsatisfactory. In the next release of Debezium 1.8, 1.7, and 1.6, the Oracle connector will use an approach that is superior to the prior implementation, is stable, and doesn't cause any false positives in duplicate event detection.

I would suggest that anyone who has encountered this error to upgrade as soon as possible. I've outlined timelines below as to when you can expect these changes in releases.

1.6.3.Final - will be released this week

1.8.0.Alpha1 - will be released next week

1.7.1.Final - will be released in the next few weeks

You can also grab the latest 1.8 SNAPSHOT release which has all these changes as well.

Thanks,

Chris

[1]: https://issues.redhat.com/browse/DBZ-4067

[2]: https://issues.redhat.com/browse/DBZ-3834

I'm not sure from our discussion here which version of Debezium you're on so its possible you've stumbled onto a bug we've since fixed. There are two situations that come to mind that I know of that we've addressed.

The first was a situation where the flushing of the Oracle in-memory LGWR buffer to redo logs could be delayed due to disk IO time and this lead to a situation where events that were of interest and close to the edge of the end of the mining session boundary could be missed. In DBZ-4067 [1] we addressed this by specifically tracking the last processed SCN and calculating future points from this point.

The second was a regression introduced in Debezium 1.6 to support LOB columns. When LOB support is enabled, there is a need to handle mining sessions a bit differently and this logic required some duplicate detection. We explored using a hashing algorithm hoping that would suffice but as indicated DBZ-3834 [2], it was unsatisfactory. In the next release of Debezium 1.8, 1.7, and 1.6, the Oracle connector will use an approach that is superior to the prior implementation, is stable, and doesn't cause any false positives in duplicate event detection.

I would suggest that anyone who has encountered this error to upgrade as soon as possible. I've outlined timelines below as to when you can expect these changes in releases.

1.6.3.Final - will be released this week

1.8.0.Alpha1 - will be released next week

1.7.1.Final - will be released in the next few weeks

You can also grab the latest 1.8 SNAPSHOT release which has all these changes as well.

Thanks,

Chris

[1]: https://issues.redhat.com/browse/DBZ-4067

[2]: https://issues.redhat.com/browse/DBZ-3834

Rakesh kumar Das

Nov 8, 2021, 3:33:54 AM11/8/21

to Chris Cranford, debe...@googlegroups.com

Hi Chris,

We are getting a couple of different warnings. Can you help me to avoid those?

1. LAST MESSAGE RECEIVED BY CONSUMER IS BEYOND 5 MINUTES, but continuous transactions are happening in our system.

2. We are getting multiple alerts for Lag from source greater than 1 min for more than 5 minutes.

How can we avoid this? If you need any more details please let me know.

We are getting a couple of different warnings. Can you help me to avoid those?

1. LAST MESSAGE RECEIVED BY CONSUMER IS BEYOND 5 MINUTES, but continuous transactions are happening in our system.

2. We are getting multiple alerts for Lag from source greater than 1 min for more than 5 minutes.

How can we avoid this? If you need any more details please let me know.

Rakesh kumar Das

Nov 11, 2021, 10:41:18 AM11/11/21

to Chris Cranford, debe...@googlegroups.com

Hi Chris,

Can plz help me here.

Chris Cranford

Nov 11, 2021, 11:42:54 AM11/11/21

to Rakesh kumar Das, debe...@googlegroups.com

HI Rakesh -

A couple of questions:

- What version of Debezium Oracle connector are you using?

- How many tables are you capturing changes for?

- Are you running more than one connector against the same Oracle instance?

- Do you have any long running transactions?

Chris

A couple of questions:

- What version of Debezium Oracle connector are you using?

- How many tables are you capturing changes for?

- Are you running more than one connector against the same Oracle instance?

- Do you have any long running transactions?

Chris

Rakesh kumar Das

Nov 11, 2021, 10:59:38 PM11/11/21

to Chris Cranford, debe...@googlegroups.com

Hi Chris,

- What version of Debezium Oracle connector are you using? - 1.6.1.Final

- How many tables are you capturing changes for? - 2 tables using schema_only mode

- Are you running more than one connector against the same Oracle instance? - No

- Do you have any long running transactions? - No

If you need any more details, please let me know.

- What version of Debezium Oracle connector are you using? - 1.6.1.Final

- How many tables are you capturing changes for? - 2 tables using schema_only mode

- Are you running more than one connector against the same Oracle instance? - No

- Do you have any long running transactions? - No

If you need any more details, please let me know.

Chris Cranford

Nov 15, 2021, 10:12:26 AM11/15/21

to Rakesh kumar Das, debe...@googlegroups.com

Hi Rakesh -

There has been a lot of development and bugfixes in the Oracle connector lately, could you please upgrade to 1.7.1.Final and see if the problem continues?

Thanks,

Chris

There has been a lot of development and bugfixes in the Oracle connector lately, could you please upgrade to 1.7.1.Final and see if the problem continues?

Thanks,

Chris

Message has been deleted

Rakesh kumar Das

Jan 17, 2022, 12:10:36 AM1/17/22

to Chris Cranford, debe...@googlegroups.com

Hi Chris,

{

"name": "******************",

"connector": {

"state": "RUNNING",

"worker_id": "********************:8083"

We are getting the below error for someday. Can you help me how we can avoid this?

{

"name": "******************",

"connector": {

"state": "RUNNING",

"worker_id": "********************:8083"

},

"tasks": [

{

"id": 0,

"state": "FAILED",

"worker_id": "****************:8083",

"trace": "org.apache.kafka.connect.errors.ConnectException: An exception occurred in the change event producer. This connector will be stopped.\n\tat io.debezium.pipeline.ErrorHandler.setProducerThrowable(ErrorHandler.java:42)\n\tat io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:181)\n\tat io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:57)\n\tat io.debezium.pipeline.ChangeEventSourceCoordinator.streamEvents(ChangeEventSourceCoordinator.java:172)\n\tat io.debezium.pipeline.ChangeEventSourceCoordinator.executeChangeEventSources(ChangeEventSourceCoordinator.java:139)\n\tat io.debezium.pipeline.ChangeEventSourceCoordinator.lambda$start$0(ChangeEventSourceCoordinator.java:108)\n\tat java.base/java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:514)\n\tat java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)\n\tat java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1135)\n\tat java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:635)\n\tat java.base/java.lang.Thread.run(Thread.java:844)\nCaused by: java.sql.SQLException: ORA-00310: archived log contains sequence 86019; sequence 86015 required\nORA-00334: archived log: '+************/ONLINELOG/group_7.280.968132295'\n\n\tat oracle.jdbc.driver.T4CTTIoer11.processError(T4CTTIoer11.java:509)\n\tat oracle.jdbc.driver.T4CTTIoer11.processError(T4CTTIoer11.java:461)\n\tat oracle.jdbc.driver.T4C8Oall.processError(T4C8Oall.java:1104)\n\tat oracle.jdbc.driver.T4CTTIfun.receive(T4CTTIfun.java:553)\n\tat oracle.jdbc.driver.T4CTTIfun.doRPC(T4CTTIfun.java:269)\n\tat oracle.jdbc.driver.T4C8Oall.doOALL(T4C8Oall.java:655)\n\tat oracle.jdbc.driver.T4CPreparedStatement.doOall8(T4CPreparedStatement.java:270)\n\tat oracle.jdbc.driver.T4CPreparedStatement.doOall8(T4CPreparedStatement.java:91)\n\tat oracle.jdbc.driver.T4CPreparedStatement.executeForDescribe(T4CPreparedStatement.java:807)\n\tat oracle.jdbc.driver.OracleStatement.executeMaybeDescribe(OracleStatement.java:983)\n\tat oracle.jdbc.driver.OracleStatement.doExecuteWithTimeout(OracleStatement.java:1168)\n\tat oracle.jdbc.driver.OraclePreparedStatement.executeInternal(OraclePreparedStatement.java:3666)\n\tat oracle.jdbc.driver.T4CPreparedStatement.executeInternal(T4CPreparedStatement.java:1426)\n\tat oracle.jdbc.driver.OraclePreparedStatement.executeQuery(OraclePreparedStatement.java:3713)\n\tat oracle.jdbc.driver.OraclePreparedStatementWrapper.executeQuery(OraclePreparedStatementWrapper.java:1167)\n\tat io.debezium.connector.oracle.logminer.processor.AbstractLogMinerEventProcessor.process(AbstractLogMinerEventProcessor.java:181)\n\tat io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:168)\n\t... 9 more\nCaused by: Error : 310, Position : 0, Sql = SELECT SCN, SQL_REDO, OPERATION_CODE, TIMESTAMP, XID, CSF, TABLE_NAME, SEG_OWNER, OPERATION, USERNAME, ROW_ID, ROLLBACK, RS_ID FROM V$LOGMNR_CONTENTS WHERE SCN > :1 AND SCN <= :2 AND ((OPERATION_CODE IN (6,7,34,36) OR (OPERATION_CODE = 5 AND USERNAME NOT IN ('SYS','SYSTEM') AND INFO NOT LIKE 'INTERNAL DDL%' AND (TABLE_NAME IS NULL OR TABLE_NAME NOT LIKE 'ORA_TEMP_%')) ) OR (OPERATION_CODE IN (1,2,3) AND TABLE_NAME != 'LOG_MINING_FLUSH' AND SEG_OWNER NOT IN ('APPQOSSYS','AUDSYS','CTXSYS','DVSYS','DBSFWUSER','DBSNMP','GSMADMIN_INTERNAL','LBACSYS','MDSYS','OJVMSYS','OLAPSYS','ORDDATA','ORDSYS','OUTLN','SYS','SYSTEM','WMSYS','XDB') AND (REGEXP_LIKE(SEG_OWNER || '.' || TABLE_NAME,'^***************$','i') OR REGEXP_LIKE(SEG_OWNER || '.' || TABLE_NAME,'^***************$','i')) )), OriginalSql = SELECT SCN, SQL_REDO, OPERATION_CODE, TIMESTAMP, XID, CSF, TABLE_NAME, SEG_OWNER, OPERATION, USERNAME, ROW_ID, ROLLBACK, RS_ID FROM V$LOGMNR_CONTENTS WHERE SCN > ? AND SCN <= ? AND ((OPERATION_CODE IN (6,7,34,36) OR (OPERATION_CODE = 5 AND USERNAME NOT IN ('SYS','SYSTEM') AND INFO NOT LIKE 'INTERNAL DDL%' AND (TABLE_NAME IS NULL OR TABLE_NAME NOT LIKE 'ORA_TEMP_%')) ) OR (OPERATION_CODE IN (1,2,3) AND TABLE_NAME != 'LOG_MINING_FLUSH' AND SEG_OWNER NOT IN ('APPQOSSYS','AUDSYS','CTXSYS','DVSYS','DBSFWUSER','DBSNMP','GSMADMIN_INTERNAL','LBACSYS','MDSYS','OJVMSYS','OLAPSYS','ORDDATA','ORDSYS','OUTLN','SYS','SYSTEM','WMSYS','XDB') AND (REGEXP_LIKE(SEG_OWNER || '.' || TABLE_NAME,'^*************$','i') OR REGEXP_LIKE(SEG_OWNER || '.' || TABLE_NAME,'^************$','i')) )), Error Msg = ORA-00310: archived log contains sequence 86019; sequence 86015 required\nORA-00334: archived log: '+*****************/ONLINELOG/group_7.280.968132295'\n\n\tat oracle.jdbc.driver.T4CTTIoer11.processError(T4CTTIoer11.java:513)\n\t... 25 more\n"

}

],

"type": "source"

}

If you need any other details, plz let me know.

"trace": "org.apache.kafka.connect.errors.ConnectException: An exception occurred in the change event producer. This connector will be stopped.\n\tat io.debezium.pipeline.ErrorHandler.setProducerThrowable(ErrorHandler.java:42)\n\tat io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:181)\n\tat io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:57)\n\tat io.debezium.pipeline.ChangeEventSourceCoordinator.streamEvents(ChangeEventSourceCoordinator.java:172)\n\tat io.debezium.pipeline.ChangeEventSourceCoordinator.executeChangeEventSources(ChangeEventSourceCoordinator.java:139)\n\tat io.debezium.pipeline.ChangeEventSourceCoordinator.lambda$start$0(ChangeEventSourceCoordinator.java:108)\n\tat java.base/java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:514)\n\tat java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)\n\tat java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1135)\n\tat java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:635)\n\tat java.base/java.lang.Thread.run(Thread.java:844)\nCaused by: java.sql.SQLException: ORA-00310: archived log contains sequence 86019; sequence 86015 required\nORA-00334: archived log: '+************/ONLINELOG/group_7.280.968132295'\n\n\tat oracle.jdbc.driver.T4CTTIoer11.processError(T4CTTIoer11.java:509)\n\tat oracle.jdbc.driver.T4CTTIoer11.processError(T4CTTIoer11.java:461)\n\tat oracle.jdbc.driver.T4C8Oall.processError(T4C8Oall.java:1104)\n\tat oracle.jdbc.driver.T4CTTIfun.receive(T4CTTIfun.java:553)\n\tat oracle.jdbc.driver.T4CTTIfun.doRPC(T4CTTIfun.java:269)\n\tat oracle.jdbc.driver.T4C8Oall.doOALL(T4C8Oall.java:655)\n\tat oracle.jdbc.driver.T4CPreparedStatement.doOall8(T4CPreparedStatement.java:270)\n\tat oracle.jdbc.driver.T4CPreparedStatement.doOall8(T4CPreparedStatement.java:91)\n\tat oracle.jdbc.driver.T4CPreparedStatement.executeForDescribe(T4CPreparedStatement.java:807)\n\tat oracle.jdbc.driver.OracleStatement.executeMaybeDescribe(OracleStatement.java:983)\n\tat oracle.jdbc.driver.OracleStatement.doExecuteWithTimeout(OracleStatement.java:1168)\n\tat oracle.jdbc.driver.OraclePreparedStatement.executeInternal(OraclePreparedStatement.java:3666)\n\tat oracle.jdbc.driver.T4CPreparedStatement.executeInternal(T4CPreparedStatement.java:1426)\n\tat oracle.jdbc.driver.OraclePreparedStatement.executeQuery(OraclePreparedStatement.java:3713)\n\tat oracle.jdbc.driver.OraclePreparedStatementWrapper.executeQuery(OraclePreparedStatementWrapper.java:1167)\n\tat io.debezium.connector.oracle.logminer.processor.AbstractLogMinerEventProcessor.process(AbstractLogMinerEventProcessor.java:181)\n\tat io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:168)\n\t... 9 more\nCaused by: Error : 310, Position : 0, Sql = SELECT SCN, SQL_REDO, OPERATION_CODE, TIMESTAMP, XID, CSF, TABLE_NAME, SEG_OWNER, OPERATION, USERNAME, ROW_ID, ROLLBACK, RS_ID FROM V$LOGMNR_CONTENTS WHERE SCN > :1 AND SCN <= :2 AND ((OPERATION_CODE IN (6,7,34,36) OR (OPERATION_CODE = 5 AND USERNAME NOT IN ('SYS','SYSTEM') AND INFO NOT LIKE 'INTERNAL DDL%' AND (TABLE_NAME IS NULL OR TABLE_NAME NOT LIKE 'ORA_TEMP_%')) ) OR (OPERATION_CODE IN (1,2,3) AND TABLE_NAME != 'LOG_MINING_FLUSH' AND SEG_OWNER NOT IN ('APPQOSSYS','AUDSYS','CTXSYS','DVSYS','DBSFWUSER','DBSNMP','GSMADMIN_INTERNAL','LBACSYS','MDSYS','OJVMSYS','OLAPSYS','ORDDATA','ORDSYS','OUTLN','SYS','SYSTEM','WMSYS','XDB') AND (REGEXP_LIKE(SEG_OWNER || '.' || TABLE_NAME,'^***************$','i') OR REGEXP_LIKE(SEG_OWNER || '.' || TABLE_NAME,'^***************$','i')) )), OriginalSql = SELECT SCN, SQL_REDO, OPERATION_CODE, TIMESTAMP, XID, CSF, TABLE_NAME, SEG_OWNER, OPERATION, USERNAME, ROW_ID, ROLLBACK, RS_ID FROM V$LOGMNR_CONTENTS WHERE SCN > ? AND SCN <= ? AND ((OPERATION_CODE IN (6,7,34,36) OR (OPERATION_CODE = 5 AND USERNAME NOT IN ('SYS','SYSTEM') AND INFO NOT LIKE 'INTERNAL DDL%' AND (TABLE_NAME IS NULL OR TABLE_NAME NOT LIKE 'ORA_TEMP_%')) ) OR (OPERATION_CODE IN (1,2,3) AND TABLE_NAME != 'LOG_MINING_FLUSH' AND SEG_OWNER NOT IN ('APPQOSSYS','AUDSYS','CTXSYS','DVSYS','DBSFWUSER','DBSNMP','GSMADMIN_INTERNAL','LBACSYS','MDSYS','OJVMSYS','OLAPSYS','ORDDATA','ORDSYS','OUTLN','SYS','SYSTEM','WMSYS','XDB') AND (REGEXP_LIKE(SEG_OWNER || '.' || TABLE_NAME,'^*************$','i') OR REGEXP_LIKE(SEG_OWNER || '.' || TABLE_NAME,'^************$','i')) )), Error Msg = ORA-00310: archived log contains sequence 86019; sequence 86015 required\nORA-00334: archived log: '+*****************/ONLINELOG/group_7.280.968132295'\n\n\tat oracle.jdbc.driver.T4CTTIoer11.processError(T4CTTIoer11.java:513)\n\t... 25 more\n"

}

],

"type": "source"

}

If you need any other details, plz let me know.

Chris Cranford

Jan 17, 2022, 10:05:35 AM1/17/22

to Rakesh kumar Das, debe...@googlegroups.com

Hi Rakesh -

If you're still using 1.6.x I would suggest you upgrade to 1.8. Also, are you using Oracle RAC or Oracle standalone with multiple redo threads?

Chris

If you're still using 1.6.x I would suggest you upgrade to 1.8. Also, are you using Oracle RAC or Oracle standalone with multiple redo threads?

Chris

On 1/17/22 00:04, Rakesh kumar Das

wrote:

Hi Chris,

I hope you are doing well.

We are getting the below error for someday. Can you help me how we can avoid this?

Hi Connector got failed.

{

"name": "******************",

"connector": {

"state": "RUNNING",

"worker_id": "********************:8083"

},

"tasks": [

{

"id": 0,

"state": "FAILED",

"worker_id": "100.99.205.14:8083",

"trace": "org.apache.kafka.connect.errors.ConnectException: An exception occurred in the change event producer. This connector will be stopped.\n\tat io.debezium.pipeline.ErrorHandler.setProducerThrowable(ErrorHandler.java:42)\n\tat io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:181)\n\tat io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:57)\n\tat io.debezium.pipeline.ChangeEventSourceCoordinator.streamEvents(ChangeEventSourceCoordinator.java:172)\n\tat io.debezium.pipeline.ChangeEventSourceCoordinator.executeChangeEventSources(ChangeEventSourceCoordinator.java:139)\n\tat io.debezium.pipeline.ChangeEventSourceCoordinator.lambda$start$0(ChangeEventSourceCoordinator.java:108)\n\tat java.base/java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:514)\n\tat java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)\n\tat java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1135)\n\tat java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:635)\n\tat java.base/java.lang.Thread.run(Thread.java:844)\nCaused by: java.sql.SQLException: ORA-00310: archived log contains sequence 86019; sequence 86015 required\nORA-00334: archived log: '+************/ONLINELOG/group_7.280.968132295'\n\n\tat oracle.jdbc.driver.T4CTTIoer11.processError(T4CTTIoer11.java:509)\n\tat oracle.jdbc.driver.T4CTTIoer11.processError(T4CTTIoer11.java:461)\n\tat oracle.jdbc.driver.T4C8Oall.processError(T4C8Oall.java:1104)\n\tat oracle.jdbc.driver.T4CTTIfun.receive(T4CTTIfun.java:553)\n\tat oracle.jdbc.driver.T4CTTIfun.doRPC(T4CTTIfun.java:269)\n\tat oracle.jdbc.driver.T4C8Oall.doOALL(T4C8Oall.java:655)\n\tat oracle.jdbc.driver.T4CPreparedStatement.doOall8(T4CPreparedStatement.java:270)\n\tat oracle.jdbc.driver.T4CPreparedStatement.doOall8(T4CPreparedStatement.java:91)\n\tat oracle.jdbc.driver.T4CPreparedStatement.executeForDescribe(T4CPreparedStatement.java:807)\n\tat oracle.jdbc.driver.OracleStatement.executeMaybeDescribe(OracleStatement.java:983)\n\tat oracle.jdbc.driver.OracleStatement.doExecuteWithTimeout(OracleStatement.java:1168)\n\tat oracle.jdbc.driver.OraclePreparedStatement.executeInternal(OraclePreparedStatement.java:3666)\n\tat oracle.jdbc.driver.T4CPreparedStatement.executeInternal(T4CPreparedStatement.java:1426)\n\tat oracle.jdbc.driver.OraclePreparedStatement.executeQuery(OraclePreparedStatement.java:3713)\n\tat oracle.jdbc.driver.OraclePreparedStatementWrapper.executeQuery(OraclePreparedStatementWrapper.java:1167)\n\tat io.debezium.connector.oracle.logminer.processor.AbstractLogMinerEventProcessor.process(AbstractLogMinerEventProcessor.java:181)\n\tat io.debezium.connector.oracle.logminer.LogMinerStreamingChangeEventSource.execute(LogMinerStreamingChangeEventSource.java:168)\n\t... 9 more\nCaused by: Error : 310, Position : 0, Sql = SELECT SCN, SQL_REDO, OPERATION_CODE, TIMESTAMP, XID, CSF, TABLE_NAME, SEG_OWNER, OPERATION, USERNAME, ROW_ID, ROLLBACK, RS_ID FROM V$LOGMNR_CONTENTS WHERE SCN > :1 AND SCN <= :2 AND ((OPERATION_CODE IN (6,7,34,36) OR (OPERATION_CODE = 5 AND USERNAME NOT IN ('SYS','SYSTEM') AND INFO NOT LIKE 'INTERNAL DDL%' AND (TABLE_NAME IS NULL OR TABLE_NAME NOT LIKE 'ORA_TEMP_%')) ) OR (OPERATION_CODE IN (1,2,3) AND TABLE_NAME != 'LOG_MINING_FLUSH' AND SEG_OWNER NOT IN ('APPQOSSYS','AUDSYS','CTXSYS','DVSYS','DBSFWUSER','DBSNMP','GSMADMIN_INTERNAL','LBACSYS','MDSYS','OJVMSYS','OLAPSYS','ORDDATA','ORDSYS','OUTLN','SYS','SYSTEM','WMSYS','XDB') AND (REGEXP_LIKE(SEG_OWNER || '.' || TABLE_NAME,'^***************$','i') OR REGEXP_LIKE(SEG_OWNER || '.' || TABLE_NAME,'^***************$','i')) )), OriginalSql = SELECT SCN, SQL_REDO, OPERATION_CODE, TIMESTAMP, XID, CSF, TABLE_NAME, SEG_OWNER, OPERATION, USERNAME, ROW_ID, ROLLBACK, RS_ID FROM V$LOGMNR_CONTENTS WHERE SCN > ? AND SCN <= ? AND ((OPERATION_CODE IN (6,7,34,36) OR (OPERATION_CODE = 5 AND USERNAME NOT IN ('SYS','SYSTEM') AND INFO NOT LIKE 'INTERNAL DDL%' AND (TABLE_NAME IS NULL OR TABLE_NAME NOT LIKE 'ORA_TEMP_%')) ) OR (OPERATION_CODE IN (1,2,3) AND TABLE_NAME != 'LOG_MINING_FLUSH' AND SEG_OWNER NOT IN ('APPQOSSYS','AUDSYS','CTXSYS','DVSYS','DBSFWUSER','DBSNMP','GSMADMIN_INTERNAL','LBACSYS','MDSYS','OJVMSYS','OLAPSYS','ORDDATA','ORDSYS','OUTLN','SYS','SYSTEM','WMSYS','XDB') AND (REGEXP_LIKE(SEG_OWNER || '.' || TABLE_NAME,'^*************$','i') OR REGEXP_LIKE(SEG_OWNER || '.' || TABLE_NAME,'^************$','i')) )), Error Msg = ORA-00310: archived log contains sequence 86019; sequence 86015 required\nORA-00334: archived log: '+*****************/ONLINELOG/group_7.280.968132295'\n\n\tat oracle.jdbc.driver.T4CTTIoer11.processError(T4CTTIoer11.java:513)\n\t... 25 more\n"

}

],

"type": "source"

}

If you need any other details, plz let me know.

Rakesh kumar Das

Jan 17, 2022, 10:21:12 AM1/17/22

to Chris Cranford, debe...@googlegroups.com

Hi Chris,

We are using 1.8.0final.

Chris Cranford

Jan 17, 2022, 5:04:42 PM1/17/22

to Rakesh kumar Das, debe...@googlegroups.com

What about the second half of my questions,

Rakesh?

Reply all

Reply to author

Forward

0 new messages