DoFTools::make_periodicity_constraints<dim, dim> error when working with FEColleciton<dim>

166 views

Skip to first unread message

jose.a...@gmail.com

Jun 29, 2022, 10:45:04 AM6/29/22

to deal.II User Group

Dear dealii community,

I am working on a gradient enhanced crystal plasticity code where the displacement field is continuous but the slip fields can be discontinuous. To this end, I am using a hp::FECollection<dim>. For example, in the case of two crystals with one slip system I have a hp::FECollection<2> with

fe_collection[0] = [FE_Q<2> FE_Q<2> FE_Q<2> FE_Nothing<2>]

fe_collection[1] = [FE_Q<2> FE_Q<2> FE_Nothing<2> FE_Q<2> ]

where the first two FE_Q<2> correspond to the displacement field in 2D and the latter to the slip field.

My reference problem consist of the unit square under simple shear with periodic boundary conditions on the x-direction. When I call the DoFTools::make_periodicity_constraints<dim, dim> method, I am getting the following error when I have subdomains with different values of fe_index

An error occurred in line <1690> of file </calculate/temp/iwtm009/spack-stage-dealii-9.3.3-h7vrbckbqhxjllhyiwpmxjdp3242wjvv/spack-src/include/deal.II/dofs/dof_accessor.templates.h> in function

const dealii::FiniteElement<dim, spacedim>& dealii::DoFAccessor<structdim, dim, spacedim, lda>::get_fe(unsigned int) const [with int structdim = 1; int dim = 2; int spacedim = 2; bool level_dof_access = false]

The violated condition was:

fe_index_is_active(fe_index) == true

Additional information:

This function can only be called for active FE indices

const dealii::FiniteElement<dim, spacedim>& dealii::DoFAccessor<structdim, dim, spacedim, lda>::get_fe(unsigned int) const [with int structdim = 1; int dim = 2; int spacedim = 2; bool level_dof_access = false]

The violated condition was:

fe_index_is_active(fe_index) == true

Additional information:

This function can only be called for active FE indices

I wrote a minimum working example based on my code to showcase the error, which I have attached to this post. The executable takes a true or false argument to assign different material identifiers to different subdomains or not. If there are no subdomains and therefore, and more importantly, the size of the hp::FECollection<dim> is 1, the code runs with no problem.

My question is if my algorithm is wrong or does the method DoFTools::make_periodicity_constraints<dim, dim> does not currently support hp::FECollection<dim> with sizes bigger than one.

Cheers,

Jose

jose.a...@gmail.com

Jul 4, 2022, 12:09:41 PM7/4/22

to deal.II User Group

Dear dealii community,

I am still trying to decipher what causes the error. It occurs in the first assert of make_periodicity_constraints:

AssertDimension(

face_1->get_fe(face_1->nth_active_fe_index(0)).n_unique_faces(), 1);

So I created vectors to store the active_fe_index and the nth_active_fe_index(0), replicated the fe_index_is_active assert and the get_fe() call:

active_fe_index.reinit(triangulation.n_active_cells());

nth_active_fe_index.reinit(triangulation.n_active_cells());

active_fe_index = -1.0;

nth_active_fe_index = -1.0;

for (const auto &cell :

dof_handler.active_cell_iterators())

if (cell->is_locally_owned())

{

active_fe_index(cell->active_cell_index()) = cell->active_fe_index();

if (cell->at_boundary())

for (const auto &face_id : cell->face_indices())

if (cell->face(face_id)->at_boundary())

{

AssertThrow(cell->face(face_id)->get_active_fe_indices().size() == 1, dealii::ExcMessage("Error!"));

nth_active_fe_index(cell->active_cell_index()) = cell->face(face_id)->nth_active_fe_index(0);

AssertThrow(cell->face(face_id)->fe_index_is_active(cell->face(face_id)->nth_active_fe_index(0)), dealii::ExcMessage("Error!"));

cell->face(face_id)->get_fe(cell->face(face_id)->nth_active_fe_index(0));

}

}

I don't get any error from this loop and the from the visual inspection of .vtu file containing both vectors one can see that they indeed coincide. Maybe the problem is coming from GridTools::collect_periodic_faces as the face iterators are created by it?

Furthermore I also noticed that if no global refinement is performed the executable runs without error in serial but still gets the error in parallel.

Attached is the updated MWE. A true/false argument can be passed to the executable to perform one global refinement or not.

Cheers,

Jose

Peter Munch

Jul 4, 2022, 1:25:51 PM7/4/22

to deal.II User Group

Hi Rose,

the assert was definitely not positioned at the right place and checked on every face - even on non-active ones. I have moved it in the PR https://github.com/dealii/dealii/pull/14091. Now this assert is not triggered.

Generally, I am wondering if hp and PBC is working. At least, I cannot find a test in the hp test folder. Such a test should have triggered the assert.

Hope the patch works!

Peter

jose.a...@gmail.com

Jul 5, 2022, 4:36:25 AM7/5/22

to deal.II User Group

Hi Peter,

Thanks! I will install your dealii fork and test it out.

Cheers,

Jose

jose.a...@gmail.com

Jul 7, 2022, 4:23:54 AM7/7/22

to deal.II User Group

Hi Peter,

The assert is no longer triggered in serial (independently of the triangulation is globally refined or not) but it is still triggered in parallel (Line 2336). I also tested in release mode. In serial the MWE runs and in parallel it only runs if the triangulation is globally refined. If it is not, one gets a segmentation fault

--------------------------------------------------------------------------

Primary job terminated normally, but 1 process returned

a non-zero exit code. Per user-direction, the job has been aborted.

--------------------------------------------------------------------------

--------------------------------------------------------------------------

mpirun noticed that process rank 0 with PID 0 on node fautm95 exited on signal 11 (Segmentation fault).

--------------------------------------------------------------------------

Primary job terminated normally, but 1 process returned

a non-zero exit code. Per user-direction, the job has been aborted.

--------------------------------------------------------------------------

--------------------------------------------------------------------------

mpirun noticed that process rank 0 with PID 0 on node fautm95 exited on signal 11 (Segmentation fault).

--------------------------------------------------------------------------

In summary:

- Debug: ./make_periodicity_constraints true -> works

- Debug: ./make_periodicity_constraints false -> works

- Debug: mpirun -np 2 ./make_periodicity_constraints true -> Assertion gets thrown

- Debug: mpirun -np 2 ./make_periodicity_constraints false -> Assertion gets thrown

- Release: ./make_periodicity_constraints true -> works

- Release: ./make_periodicity_constraints false -> works

- Release: mpirun -np 2 ./make_periodicity_constraints true -> works

- Release: mpirun -np 2 ./make_periodicity_constraints false -> Segmentation fault

Jose

jose.a...@gmail.com

Mar 23, 2023, 10:57:42 AM3/23/23

to deal.II User Group

Hi dealii community,

I tested a reduced version of the MWE (attached to this E-Mail) on v9.4.0. In debug mode it runs in serial only when no global refinement is performed. Otherwise the assertion mentioned in the first E-Mail gets thrown. In parallel the assertion gets thrown in both cases. In release mode the code runs in serial but it parallel it leads to a segmentation fault in both cases (with and without refinement). Could it be that make_periodicity_constraints and hp are currently not compatible?

Cheers,

Jose

jose.a...@gmail.com

Mar 29, 2023, 8:06:11 AM3/29/23

to deal.II User Group

Hi dealii community,

I think I found where the problem lies. In order to reproduce the assertion that gets thrown, i.e.,

An error occurred

in line <1690> of file

</calculate/temp/iwtm009/spack-stage-dealii-9.3.3-h7vrbckbqhxjllhyiwpmxjdp3242wjvv/spack-src/include/deal.II/dofs/dof_accessor.templates.h>

in function

const dealii::FiniteElement<dim, spacedim>& dealii::DoFAccessor<structdim, dim, spacedim, lda>::get_fe(unsigned int) const [with int structdim = 1; int dim = 2; int spacedim = 2; bool level_dof_access = false]

The violated condition was:

fe_index_is_active(fe_index) == true

Additional information:

This function can only be called for active FE indices

const dealii::FiniteElement<dim, spacedim>& dealii::DoFAccessor<structdim, dim, spacedim, lda>::get_fe(unsigned int) const [with int structdim = 1; int dim = 2; int spacedim = 2; bool level_dof_access = false]

The violated condition was:

fe_index_is_active(fe_index) == true

Additional information:

This function can only be called for active FE indices

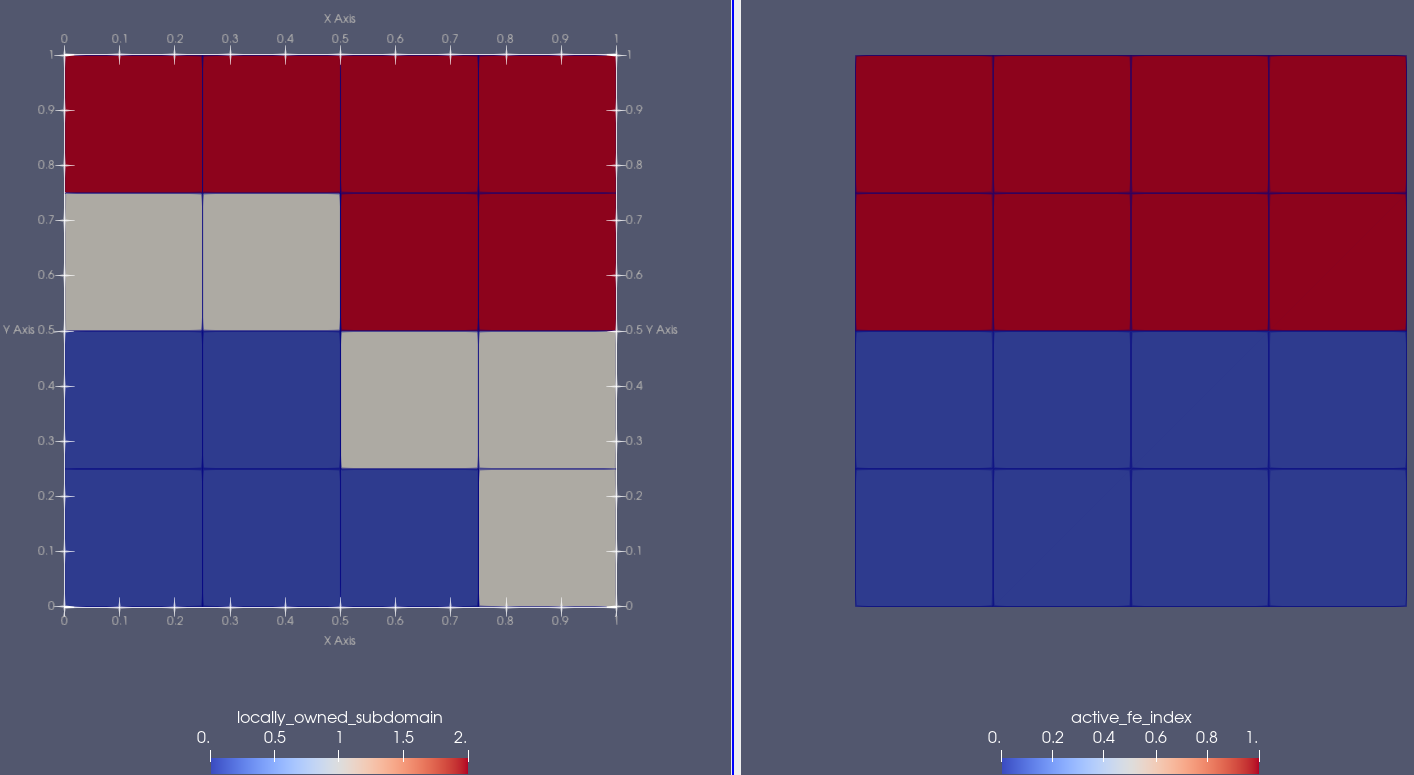

which comes from calls face_x->get_fe(face_x->nth_active_fe_index(0)) in make_periodicity_constraints and its internal methods, I wrote a MWE on the unit cube with the left and right faces (face_1 and face_2, respectively) being periodic and with subdomains_id (when run with mpirun -np 3) and active_fe_index as seen below

and printed out the face's height, mpi rank, the number of active fe_indices and the first active fe index of the face pair when the assertion's condition is not met. The terminal output is

face_1->center()[1]= 0.875, rank = 0, face_1->n_active_fe_indices() = 0, face_1->nth_active_fe_index(0) = 1, face_2->n_active_fe_indices() = 0, face_2->nth_active_fe_index(0) = 0

face_2->center()[1]= 0.875, rank = 0, face_1->n_active_fe_indices() = 0, face_1->nth_active_fe_index(0) = 1, face_2->n_active_fe_indices() = 0, face_2->nth_active_fe_index(0) = 0

face_1->center()[1]= 0.125, rank = 2, face_1->n_active_fe_indices() = 0, face_1->nth_active_fe_index(0) = 0, face_2->n_active_fe_indices() = 0, face_2->nth_active_fe_index(0) = 0

face_2->center()[1]= 0.125, rank = 2, face_1->n_active_fe_indices() = 0, face_1->nth_active_fe_index(0) = 0, face_2->n_active_fe_indices() = 0, face_2->nth_active_fe_index(0) = 0

face_2->center()[1]= 0.875, rank = 0, face_1->n_active_fe_indices() = 0, face_1->nth_active_fe_index(0) = 1, face_2->n_active_fe_indices() = 0, face_2->nth_active_fe_index(0) = 0

face_1->center()[1]= 0.125, rank = 2, face_1->n_active_fe_indices() = 0, face_1->nth_active_fe_index(0) = 0, face_2->n_active_fe_indices() = 0, face_2->nth_active_fe_index(0) = 0

face_2->center()[1]= 0.125, rank = 2, face_1->n_active_fe_indices() = 0, face_1->nth_active_fe_index(0) = 0, face_2->n_active_fe_indices() = 0, face_2->nth_active_fe_index(0) = 0

As can be seen, both n_active_fe_indices() and nth_active_fe_index(0) print out erroneous values. What seems to happen is that a processor tries to access hp-information of a cell which is neither locally owned nor a ghost cell; thus, triggering the assertion. If that is the case, a probable solution would be to store the pertinent hp-information of the faces at the periodic boundaries in a container which is shared by all processors and relay all the calls made to obtain the hp-information from the face_iterator to the container. Said container should be passed to all the internal methods of make_periodicity_constraints. I will post an update when I have tested this.

Attached is the minimum working example with which the .vtu and terminal output was obtained.

Cheers,

Jose

Reply all

Reply to author

Forward

0 new messages