Dataverse and S3 Buckets

Zacarias Benta

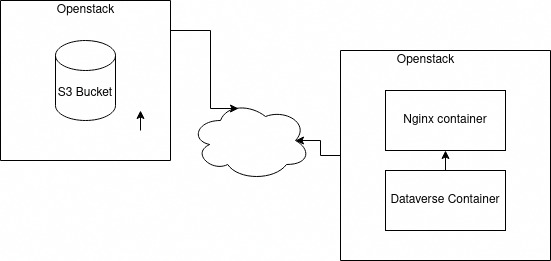

We are currently deploying a Dataverse instance and are having some issues integrating S3 into our implementation.

The Dataverse web interface keeps hanging randomly for about 20 seconds, and it only does so when we deploy it with an S3 bucket as the default storage medium.

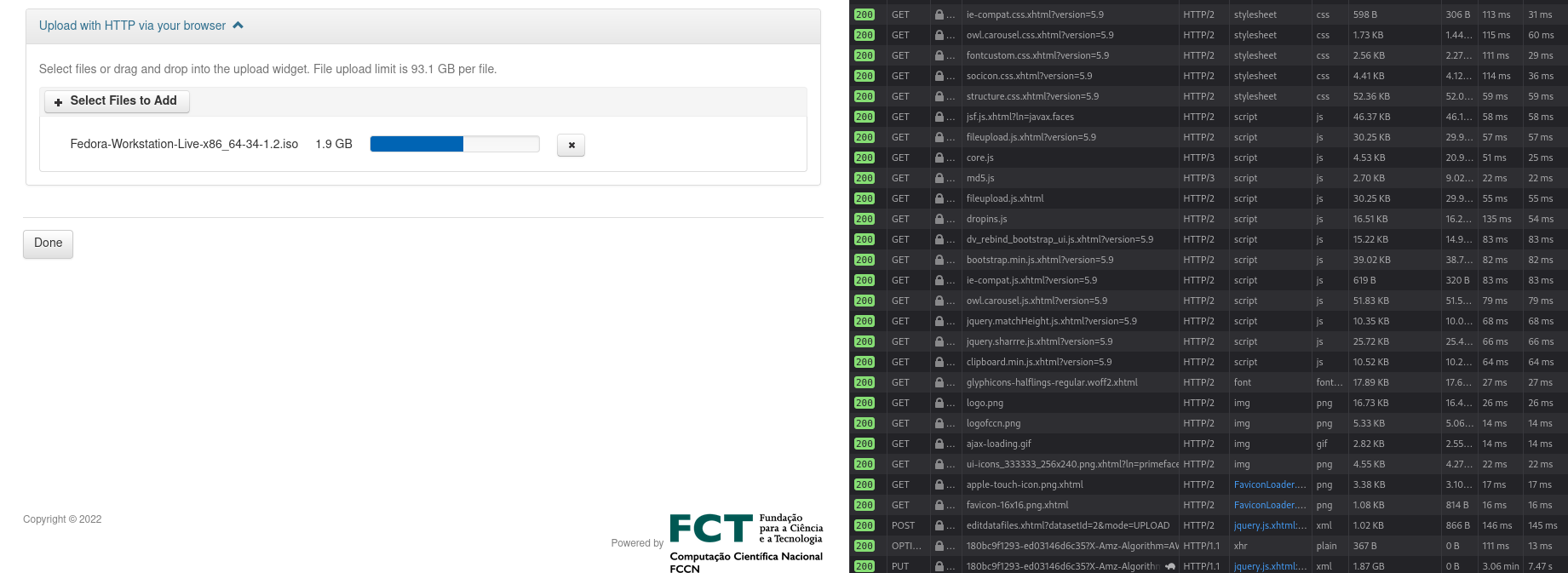

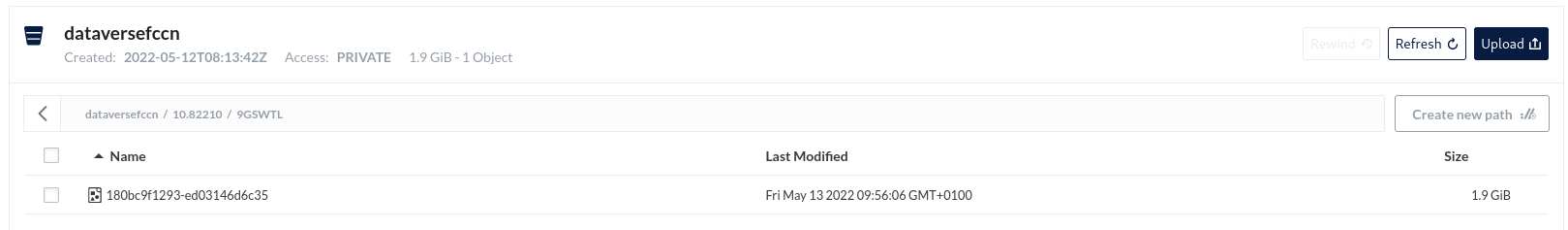

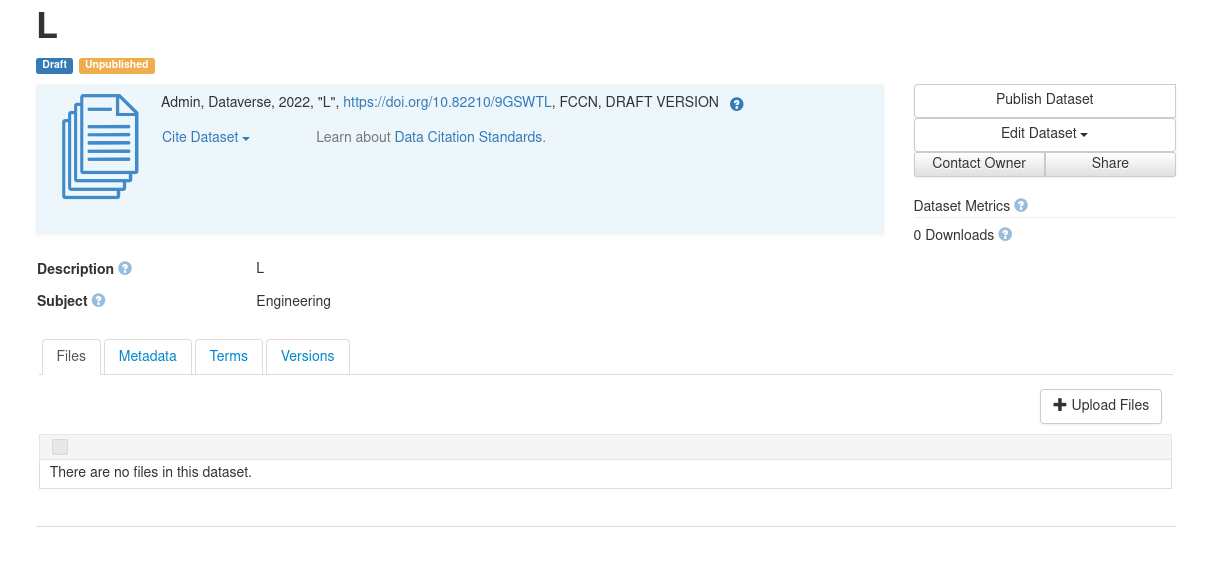

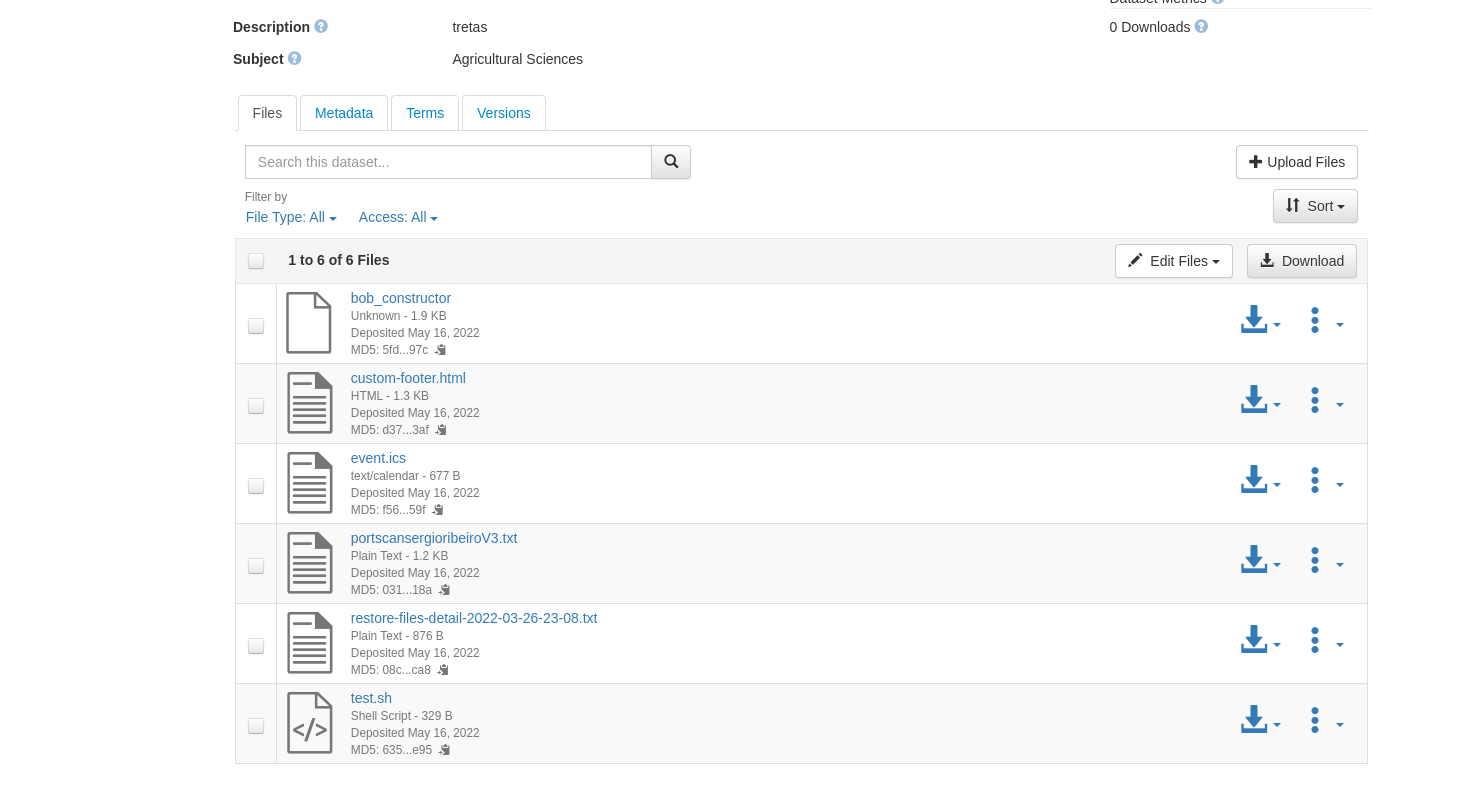

It also behaves strangely when we try to upload files to a dataset: sometimes, when the upload finishes, the files are nowhere to be found, whether we look for them in the web interface or in the S3 bucket.

If we try using only local storage, it works like a charm, no random hangs and no timeouts.

The weird part is that it sometimes works like a charm and we can't seem to find a pattern that triggers the "freezing" of the web interface.

Did you guys ever experience any similar situation?

j-n-c

- Does the Dataverse server have any issues connecting to the internet?

- Can you list your buckets and their contents from the Dataverse server using the AWS CLI?

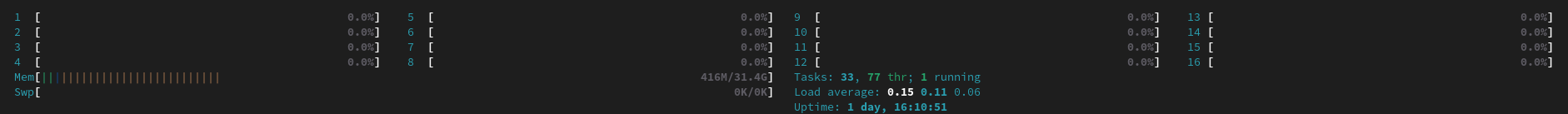

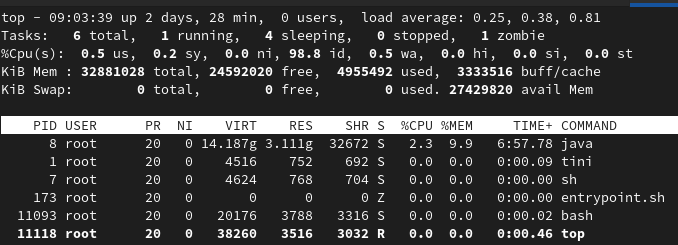

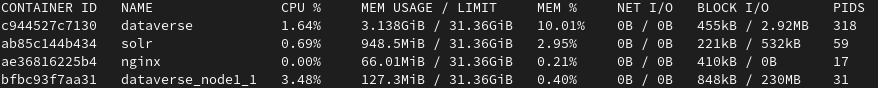

- What is the server's CPU, RAM and I/O consumption? Could it be that the server is too busy?
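One way to follow up on the connectivity questions above is to time repeated requests from the Dataverse server to the S3 endpoint and see whether any of them stall for roughly the 20 seconds reported. The sketch below is only a timing harness: `time_calls`, `find_stalls` and `fake_probe` are hypothetical names introduced here, and the probe is a stand-in that you would replace with a real request (for example a bucket listing) using whichever S3 client you already have.

```python
import time
from typing import Callable, List


def time_calls(probe: Callable[[], object], attempts: int) -> List[float]:
    """Run `probe` repeatedly and return each call's duration in seconds."""
    durations = []
    for _ in range(attempts):
        start = time.perf_counter()
        probe()
        durations.append(time.perf_counter() - start)
    return durations


def find_stalls(durations: List[float], threshold_s: float) -> List[int]:
    """Return the indexes of calls that took at least `threshold_s` seconds."""
    return [i for i, d in enumerate(durations) if d >= threshold_s]


if __name__ == "__main__":
    # Stand-in probe (assumption): replace the body with a real request
    # against your S3 endpoint, issued from the Dataverse server itself.
    def fake_probe() -> None:
        time.sleep(0.01)

    timings = time_calls(fake_probe, attempts=5)
    print("slow calls:", find_stalls(timings, threshold_s=5.0))
```

If the stalls show up here as well, the problem is probably between the server and the storage backend rather than inside Dataverse; if they never do, server load or Dataverse's own connection handling becomes the more likely suspect.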

Don Sizemore

--

You received this message because you are subscribed to the Google Groups "Dataverse Users Community" group.

To unsubscribe from this group and stop receiving emails from it, send an email to dataverse-commu...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/dataverse-community/8e058504-cc23-413c-9bb3-7b9ae9651400n%40googlegroups.com.

Zacarias Benta

James Myers

Another thought would be to set dataverse.files.<id>.connection-pool-size to a value greater than 256. That setting was introduced in v5.1.1, after Dataverse changed to using a pool of S3 connections in 5.1 to be more efficient. Increasing it should help if you are on Dataverse >=5.1 and you are doing tests that open many S3 connections (uploads, thumbnail retrievals, etc.) and/or those connections aren’t getting closed quickly. Slow closing is more likely in older Dataverse versions, as we have found and fixed cases where Dataverse leaves a connection open for a while, and it could also be made worse if your Ceph implementation has a longer timeout than AWS. Although increasing the pool uses some memory, making it 10-20 times bigger should still be fine if that helps the freezing problem.
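To illustrate why a pool that is too small can look like a frozen web interface, here is a toy model of a fixed-size connection pool. It is not Dataverse or AWS SDK code: `ConnectionPool` and `leases_granted` are hypothetical names, and a semaphore stands in for the real pool. When every slot is held by a connection that has not been released yet, new requests wait until they time out.

```python
import threading


class ConnectionPool:
    """Toy model of a fixed-size connection pool (not the AWS SDK's pool)."""

    def __init__(self, size: int) -> None:
        self._slots = threading.BoundedSemaphore(size)

    def acquire(self, timeout_s: float) -> bool:
        """Try to lease a connection; False means the caller timed out."""
        return self._slots.acquire(timeout=timeout_s)

    def release(self) -> None:
        """Return a leased connection to the pool."""
        self._slots.release()


def leases_granted(pool_size: int, held_open: int, new_requests: int) -> int:
    """Count how many new requests get a connection while `held_open`
    earlier connections are still unreleased."""
    pool = ConnectionPool(pool_size)
    for _ in range(held_open):
        pool.acquire(timeout_s=0.01)
    granted = 0
    for _ in range(new_requests):
        if pool.acquire(timeout_s=0.01):
            granted += 1
    return granted


if __name__ == "__main__":
    # Every slot is taken by lingering connections: new requests all wait.
    print(leases_granted(pool_size=4, held_open=4, new_requests=3))   # 0
    # A pool ten times larger absorbs the same lingering connections.
    print(leases_granted(pool_size=40, held_open=4, new_requests=3))  # 3
```

In the real system the wait would be much longer than the 0.01 seconds used here, which is consistent with a page that hangs for a while and then recovers once connections are finally closed.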

-- Jim

Zacarias Benta

    {
        "CORSRules": [
            {
                "AllowedHeaders": [
                    "x-requested-with"
                ],
                "AllowedMethods": [
                    "GET",
                    "PUT",
                    "DELETE",
                    "POST"
                ],
                "AllowedOrigins": [
                    "https://mydataverse.com"
                ],
                "ExposeHeaders": [
                    "ETag"
                ]
            }
        ]
    }

James Myers

The simplest solution would be to open up the CORS origin as discussed in https://guides.dataverse.org/en/latest/developers/big-data-support.html. (You can drop POST and DELETE though.) FWIW: Direct upload and download both use signed URLs, which Dataverse creates only for users who should be able to get access to a given resource and which are only valid for a short (configurable) time. Hence allowing * does not open access as much as it would with endpoints that offer public access or have simple username/password controls, etc.

If you want to tighten things up, you may also want to look at https://github.com/GlobalDataverseCommunityConsortium/dataverse-previewers/wiki/Using-Previewers-with-download-redirects-from-S3 which discusses other security mechanisms you’d have to address. Specifically, Content-Security-Policy by default prohibits JavaScript (used for direct upload) from adding an Origin header, which I think is why you’re seeing ‘missing’ in the error. If you figure out what’s needed there, we’d be happy to have a PR to get that into the guides for others to follow.
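As a rough sketch of the "simplest solution" described above, the snippet below takes the CORS configuration posted earlier in the thread, opens the allowed origin to `*`, and drops POST and DELETE. The helper name `relax_cors` is hypothetical, and `AllowedHeaders` is left exactly as posted; whether it also needs to be widened is not something this thread settles.

```python
import copy
import json

# The configuration as posted in the thread.
posted = {
    "CORSRules": [
        {
            "AllowedHeaders": ["x-requested-with"],
            "AllowedMethods": ["GET", "PUT", "DELETE", "POST"],
            "AllowedOrigins": ["https://mydataverse.com"],
            "ExposeHeaders": ["ETag"],
        }
    ]
}


def relax_cors(config, keep_methods=("GET", "PUT")):
    """Return a copy of `config` with origins opened to "*" and only
    the methods in `keep_methods` retained. The input is not modified."""
    relaxed = copy.deepcopy(config)
    for rule in relaxed["CORSRules"]:
        rule["AllowedOrigins"] = ["*"]
        rule["AllowedMethods"] = [
            m for m in rule["AllowedMethods"] if m in keep_methods
        ]
    return relaxed


if __name__ == "__main__":
    print(json.dumps(relax_cors(posted), indent=2))
```

The printed JSON could be saved to a file and applied with your storage backend's tooling, for instance `aws s3api put-bucket-cors --bucket <bucket> --cors-configuration file://cors.json` (with `--endpoint-url` pointing at a non-AWS store), assuming the backend supports that call.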

Zacarias Benta

James Myers

Direct upload via the UI includes a second pass through the file to calculate the file hash (usable to verify that Dataverse/future downloaders have the exact same file as what was on the uploader’s disk). Depending on the relative speed of your network and machine, the first half (uploading to S3) and the second half (calculating the hash) of the bar can proceed at fairly different rates.

Also, as with normal upload, the files are uploaded prior to being added to the dataset, i.e. it is only when you hit save that the dataset registers that the new files are part of it.

FWIW: The DVUploader combines upload with hash calculation in one pass through the file, hence it can be somewhat faster. The decision to upload and hash sequentially in the UI was solely due to not knowing of any JavaScript library that would allow doing both in parallel (which is straightforward in Java/the DVUploader).
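The one-pass idea can be sketched as follows: read the file in chunks and hand each chunk both to the uploader and to the hash, so the file is only read once. This is not the DVUploader's code (which is Java); `upload_and_hash` and `send_chunk` are hypothetical names, and SHA-256 is an assumed default rather than the algorithm your Dataverse installation is configured to use.

```python
import hashlib
import io
from typing import BinaryIO, Callable


def upload_and_hash(
    source: BinaryIO,
    send_chunk: Callable[[bytes], None],
    chunk_size: int = 1024 * 1024,
    algorithm: str = "sha256",
) -> str:
    """Stream `source` once, passing every chunk to `send_chunk` and to
    the hash, and return the hex digest of everything that was sent."""
    digest = hashlib.new(algorithm)
    while True:
        chunk = source.read(chunk_size)
        if not chunk:
            break
        send_chunk(chunk)     # stand-in for sending part of the upload
        digest.update(chunk)  # the same bytes feed the checksum
    return digest.hexdigest()


if __name__ == "__main__":
    received = []
    data = b"example file contents" * 1000
    checksum = upload_and_hash(io.BytesIO(data), received.append, chunk_size=4096)
    # The single-pass digest matches a separate hash over the whole file.
    print(checksum == hashlib.sha256(data).hexdigest())
```

The two-pass approach in the UI yields the same digest; it just reads the file a second time, which is why the two halves of the progress bar can move at different speeds.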

Zacarias Benta

dataverse | Deleting minio://dataversefccn:180cc1987b5-9974eb3ad4a8|#]

dataverse |

nginx | 192.168.1.101 - - [16/May/2022:09:02:52 +0000] "GET /dataversefccn/?acl HTTP/1.1" 200 309 "-" "aws-sdk-java/1.11.762 Linux/5.4.0-109-generic OpenJDK_64-Bit_Server_VM/11.0.7+10-LTS java/11.0.7 vendor/Azul_Systems,_Inc." "-"

nginx | 192.168.1.101 - - [16/May/2022:09:02:52 +0000] "GET /dataversefccn/?acl HTTP/1.1" 200 309 "-" "aws-sdk-java/1.11.762 Linux/5.4.0-109-generic OpenJDK_64-Bit_Server_VM/11.0.7+10-LTS java/11.0.7 vendor/Azul_Systems,_Inc." "-"

nginx | 192.168.1.101 - - [16/May/2022:09:02:52 +0000] "DELETE /dataversefccn/10.82210/H70SOA/180cc1987b5-9974eb3ad4a8 HTTP/1.1" 204 0 "-" "aws-sdk-java/1.11.762 Linux/5.4.0-109-generic OpenJDK_64-Bit_Server_VM/11.0.7+10-LTS java/11.0.7 vendor/Azul_Systems,_Inc." "-"

nginx | 192.168.1.101 - - [16/May/2022:09:02:52 +0000] "GET /dataversefccn/?prefix=10.82210%2FH70SOA%2F180cc1987b5-9974eb3ad4a8.&encoding-type=url HTTP/1.1" 200 335 "-" "aws-sdk-java/1.11.762 Linux/5.4.0-109-generic OpenJDK_64-Bit_Server_VM/11.0.7+10-LTS java/11.0.7 vendor/Azul_Systems,_Inc." "-"

dataverse | [#|2022-05-16T09:02:52.421+0000|INFO|Payara 5.2021.1|edu.harvard.iq.dataverse.util.FileUtil|_ThreadID=134;_ThreadName=http-thread-pool::http-listener-1(8);_TimeMillis=1652691772421;_LevelValue=800;|

dataverse | Deleting minio://dataversefccn:180cc1989a3-74473b01e127|#]

dataverse |

nginx | 192.168.1.101 - - [16/May/2022:09:02:52 +0000] "GET /dataversefccn/?acl HTTP/1.1" 200 309 "-" "aws-sdk-java/1.11.762 Linux/5.4.0-109-generic OpenJDK_64-Bit_Server_VM/11.0.7+10-LTS java/11.0.7 vendor/Azul_Systems,_Inc." "-"

nginx | 192.168.1.101 - - [16/May/2022:09:02:52 +0000] "GET /dataversefccn/?acl HTTP/1.1" 200 309 "-" "aws-sdk-java/1.11.762 Linux/5.4.0-109-generic OpenJDK_64-Bit_Server_VM/11.0.7+10-LTS java/11.0.7 vendor/Azul_Systems,_Inc." "-"

nginx | 192.168.1.101 - - [16/May/2022:09:02:52 +0000] "DELETE /dataversefccn/10.82210/H70SOA/180cc1989a3-74473b01e127 HTTP/1.1" 204 0 "-" "aws-sdk-java/1.11.762 Linux/5.4.0-109-generic OpenJDK_64-Bit_Server_VM/11.0.7+10-LTS java/11.0.7 vendor/Azul_Systems,_Inc." "-"

nginx | 192.168.1.101 - - [16/May/2022:09:02:52 +0000] "GET /dataversefccn/?prefix=10.82210%2FH70SOA%2F180cc1989a3-74473b01e127.&encoding-type=url HTTP/1.1" 200 335 "-" "aws-sdk-java/1.11.762 Linux/5.4.0-109-generic OpenJDK_64-Bit_Server_VM/11.0.7+10-LTS java/11.0.7 vendor/Azul_Systems,_Inc." "-"

dataverse | [#|2022-05-16T09:02:52.441+0000|INFO|Payara 5.2021.1|edu.harvard.iq.dataverse.util.FileUtil|_ThreadID=134;_ThreadName=http-thread-pool::http-listener-1(8);_TimeMillis=1652691772441;_LevelValue=800;|

dataverse | Deleting minio://dataversefccn:180cc198c63-322a6658b1e3|#]

dataverse |

nginx | 192.168.1.101 - - [16/May/2022:09:02:52 +0000] "GET /dataversefccn/?acl HTTP/1.1" 200 309 "-" "aws-sdk-java/1.11.762 Linux/5.4.0-109-generic OpenJDK_64-Bit_Server_VM/11.0.7+10-LTS java/11.0.7 vendor/Azul_Systems,_Inc." "-"

nginx | 192.168.1.101 - - [16/May/2022:09:02:52 +0000] "GET /dataversefccn/?acl HTTP/1.1" 200 309 "-" "aws-sdk-java/1.11.762 Linux/5.4.0-109-generic OpenJDK_64-Bit_Server_VM/11.0.7+10-LTS java/11.0.7 vendor/Azul_Systems,_Inc." "-"

nginx | 192.168.1.101 - - [16/May/2022:09:02:52 +0000] "DELETE /dataversefccn/10.82210/H70SOA/180cc198c63-322a6658b1e3 HTTP/1.1" 204 0 "-" "aws-sdk-java/1.11.762 Linux/5.4.0-109-generic OpenJDK_64-Bit_Server_VM/11.0.7+10-LTS java/11.0.7 vendor/Azul_Systems,_Inc." "-"

nginx | 192.168.1.101 - - [16/May/2022:09:02:52 +0000] "GET /dataversefccn/?prefix=10.82210%2FH70SOA%2F180cc198c63-322a6658b1e3.&encoding-type=url HTTP/1.1" 200 335 "-" "aws-sdk-java/1.11.762 Linux/5.4.0-109-generic OpenJDK_64-Bit_Server_VM/11.0.7+10-LTS java/11.0.7 vendor/Azul_Systems,_Inc." "-"

nginx | 192.168.1.101 - - [16/May/2022:09:02:52 +0000] "GET /dataversefccn/?acl HTTP/1.1" 200 309 "-" "aws-sdk-java/1.11.762 Linux/5.4.0-109-generic OpenJDK_64-Bit_Server_VM/11.0.7+10-LTS java/11.0.7 vendor/Azul_Systems,_Inc." "-"

nginx | 192.168.1.101 - - [16/May/2022:09:02:52 +0000] "GET /dataversefccn/10.82210/H70SOA/dataset_logo.thumb140 HTTP/1.1" 404 405 "-" "aws-sdk-java/1.11.762 Linux/5.4.0-109-generic OpenJDK_64-Bit_Server_VM/11.0.7+10-LTS java/11.0.7 vendor/Azul_Systems,_Inc." "-"

Don Sizemore

https://github.com/IQSS/dataverse/pull/8409
