question about datasets not appearing in Google Dataset Search:


Jamie Jamison

Sep 28, 2021, 12:06:15 PM

to Dataverse Users Community

Datasets in dataverse.ucla.edu are not appearing in Google Dataset Search. Our material in the Social Science Data Archive (hosted on Harvard Dataverse) does appear.

I found some information at DataCite (<https://support.datacite.org/docs/how-do-i-expose-my-datasets-to-google-dataset-search>) : "...Dataset as the resourceTypeGeneral in the metadata ".

I exported some dataverse.ucla metadata and don't see the resourceTypeGeneral. Is this something I have to add?

Thank you,

Jamie Jamison

Don Sizemore

Sep 28, 2021, 12:29:43 PM

to dataverse...@googlegroups.com

Hi Jamie,

I thought we had to generate a sitemap and submit it to the Goog?

(if there's a newer/better way I'm all ears)

Don

--

You received this message because you are subscribed to the Google Groups "Dataverse Users Community" group.

To unsubscribe from this group and stop receiving emails from it, send an email to dataverse-commu...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/dataverse-community/239a51c4-cefb-4499-9dae-352f9ff501can%40googlegroups.com.

Jamie Jamison

Sep 28, 2021, 12:34:44 PM

to Dataverse Users Community

That gives me something to look for - will report back what happens. - Jamie

Sherry Lake

Sep 28, 2021, 12:37:13 PM

to dataverse...@googlegroups.com

Here’s a thread from a previous google group discussion

Looks like a special robots.txt file is needed?

This is something UVa needs to set up too. So would love to hear if it works for you.

—

Sherry


Philip Durbin

Sep 28, 2021, 2:24:59 PM

to dataverse...@googlegroups.com

Yes, the out of the box robots.txt will block bots like Google, etc. We do this so that if people spin up test installations of Dataverse they (hopefully) won't get indexed by search engines.

The link Don sent gets you in the right place but if you back up a little bit to "Letting Search Engines Crawl Your Installation" there's info you (both) need under "Ensure robots.txt Is Not Blocking Search Engines" - https://guides.dataverse.org/en/latest/installation/config.html#letting-search-engines-crawl-your-installation

If you compare https://dataverse.ucla.edu/robots.txt and https://dataverse.lib.virginia.edu/robots.txt to https://dataverse.harvard.edu/robots.txt you'll see that we've updated robots.txt on Harvard Dataverse to allow the Google bot. The details are in the guides above, including a sample robots.txt for production use (with comments).
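For anyone who wants to check this locally before waiting on Google, here is a minimal sketch using Python's standard `urllib.robotparser`. The two robots.txt texts, the `allows` helper, and the example.org URL are made up for illustration; they are not the sample from the guides. Python's parser applies rules in file order and does not understand wildcards, so it may not agree with Googlebot on every edge case.

```python
from urllib.robotparser import RobotFileParser


def allows(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical "block everything" file, similar in spirit to an
# out-of-the-box configuration that keeps test installations unindexed.
BLOCK_ALL = """User-agent: *
Disallow: /
"""

# Hypothetical, simplified production file: Googlebot may fetch dataset
# pages, every other bot stays blocked. Not the sample from the guides.
ALLOW_GOOGLEBOT = """User-agent: Googlebot
Allow: /dataset.xhtml
Disallow: /

User-agent: *
Disallow: /
"""

# Assumed example URL, not a real dataset.
DATASET_URL = "https://example.org/dataset.xhtml?persistentId=doi:10.1234/ABC"

if __name__ == "__main__":
    for name, text in (("block all", BLOCK_ALL), ("allow Googlebot", ALLOW_GOOGLEBOT)):
        print(name, "->", allows(text, "Googlebot", DATASET_URL))
```

To test a live installation instead, `RobotFileParser` also has `set_url()` and `read()`, which fetch the robots.txt that is actually being served.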

I hope this helps!

Phil


--

Philip Durbin

Software Developer for http://dataverse.org

http://www.iq.harvard.edu/people/philip-durbin


Julian Gautier

Sep 28, 2021, 3:47:40 PM

to Dataverse Users Community

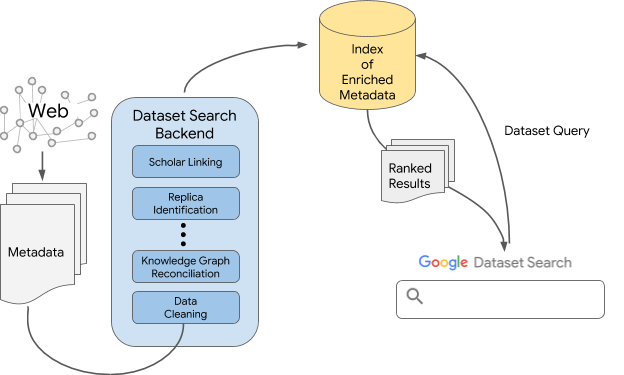

At least some of the datasets in http://dataverse.ucla.edu are already on DataCite Search, which also used to be crawled by Google Dataset Search. So even if Google Dataset Search couldn't index records from a Dataverse repository, if the repository registered DOIs, Google Dataset Search used to get those records from DataCite Search. I haven't seen any news about this changing. Does anyone in the community know? If not, maybe we could contact the DataCite team to find out more.

I think it'd be better for each repository to take steps to be crawled by Google Dataset Search. But it might be worth figuring out if anything changed about how DataCite Search exposes dataset metadata to Google Dataset Search. Maybe DataCite made a change for repositories that don't want their records appearing in Google Dataset Search.

Philipp at UiT

Oct 3, 2021, 4:02:39 AM

to Dataverse Users Community

Does anyone know how frequently Google Dataset Search (GDS) crawls repositories and other metadata providers? I know that DataverseNO is crawled, and I do find DataverseNO datasets in GDS, including more recent ones from 2021. But when I searched for this dataset, published on September 17, I couldn't find it in GDS: https://doi.org/10.18710/WGWLSE. (I could find it in DataCite Search: https://search.datacite.org/.)

Best, Philipp

Eunice Soh

Jan 7, 2022, 3:40:09 AM

to Dataverse Users Community

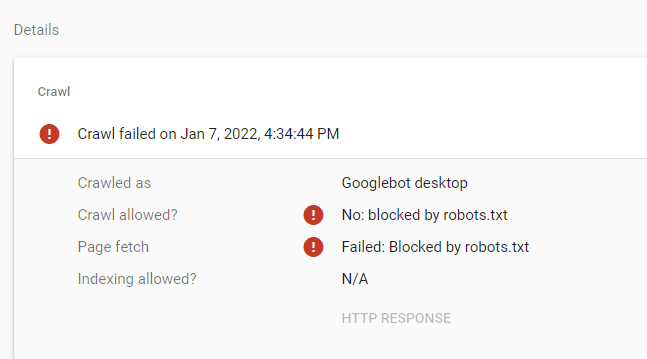

@Philipp, I just tried your URL with https://search.google.com/test/rich-results: https://search.google.com/test/rich-results/result?id=NVEYVYlDm7TQMrGAVafoag

Google's saying that it's blocked by robots.txt. See https://dataverse.no/robots.txt too, it blocks all bots.

Here's Google's documentation on how to submit a repository for indexing... https://datasetsearch.research.google.com/help

Sherry Lake

Jan 19, 2022, 10:52:27 AM

to Dataverse Users Community

UVa is still working on this. We now have a sitemap, but it doesn't look like Harvard's:

compare: https://dataverse.lib.virginia.edu/sitemap.xml AND https://dataverse.harvard.edu/sitemap.xml

My sysadmin set up robots.txt (on VirtualHost), but this still shows our old one.

So is our robots.txt in the wrong place?

OR did my sysadmin forget to do the Apache config update?

All suggestions welcomed.

Thanks.

Sherry Lake

Don Sizemore

Jan 19, 2022, 12:37:50 PM

to dataverse...@googlegroups.com

Odum has a sitemap and a robots.txt which looks exactly like Harvard's, but GoogleBot stays mad at our datasets.

Right now we have 35 valid URLs, 152 valid with warnings, 859 errors and 3,820 excluded.

Google blames our robots.txt for blocking everything.

Don


Eunice Soh

Jan 19, 2022, 8:29:30 PM

to Dataverse Users Community

Hi Sherry,

I'm not an expert on this, but have some 2 cents on Google's indexing.

sitemap.xml

Your sitemap looks alright, I think. It needs to be updated on a regular basis though with a crontab. You could submit it to Google, as well: https://developers.google.com/search/docs/advanced/sitemaps/build-sitemap.
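If it helps with comparing two sitemaps, here is a rough sketch that lists the entries of a sitemap with Python's standard `xml.etree.ElementTree`. The sample XML, the example.org URLs, and the `sitemap_entries` helper are invented for illustration; only the namespace comes from the sitemaps.org format.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_entries(xml_text: str) -> list:
    """Return (loc, lastmod) pairs for every <url> in a sitemap document."""
    root = ET.fromstring(xml_text)
    entries = []
    for url in root.findall("sm:url", SITEMAP_NS):
        loc = url.findtext("sm:loc", default="", namespaces=SITEMAP_NS).strip()
        # lastmod is optional in the protocol, so it may be None.
        lastmod = url.findtext("sm:lastmod", default=None, namespaces=SITEMAP_NS)
        entries.append((loc, lastmod))
    return entries


# Made-up sample; a real sitemap would be downloaded from the installation.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.org/dataverse/root</loc><lastmod>2022-01-19</lastmod></url>
  <url><loc>https://example.org/dataset.xhtml?persistentId=doi:10.1234/ABC</loc></url>
</urlset>"""

if __name__ == "__main__":
    for loc, lastmod in sitemap_entries(SAMPLE_SITEMAP):
        print(loc, lastmod)
```

Running the same function over two installations' sitemaps and comparing the number and shape of the `loc` values is a quick way to see whether dataset pages are listed at all.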

robots.txt

The robots.txt that's being served is still blocking all bots. The template robots.txt for Dataverse can be found here: https://guides.dataverse.org/en/latest/installation/config.html?highlight=admin#ensure-robots-txt-is-not-blocking-search-engines.

There is also a robots.txt found in the application folder in Payara. It could still be serving that, rather than the one from Apache. On Apache, the rules need to be configured to redirect traffic to Apache's robots.txt (e.g., https://confluence.jaytaala.com/display/TKB/Global+robots.txt+on+Apache+reverse+proxy).

Google search console

To figure out whether Google is crawling your site, you can consider using Google Search console: https://support.google.com/webmasters/answer/9128668?hl=en. Setup is easy if you already have Google Analytics plugged into your system.

Google Dataset Search

However, I'm not too sure how much of Google indexing also translates to indexing for Google Dataset Search aka GDS, as it utilises page metadata (in form of json-ld) as well - see https://support.google.com/webmasters/thread/1960710/faq-structured-data-markup-for-datasets.

I think there are some issues with the json-ld on Dataverse, e.g. https://github.com/IQSS/dataverse/issues/5029 and https://github.com/IQSS/dataverse/issues/7349. Some of them are not major (e.g. warnings, or people being classified as "Thing"), but others cause errors, such as the license @type property being "Dataset" rather than "CreativeWork". This may result in GDS not picking up the datasets.

--

If anyone has more info on GDS indexing and json-ld, do share. If I made any mistake, apologies in advance as I'm not an expert here, and feel free to correct me.

Eunice Soh

Jan 19, 2022, 8:34:12 PM

to Dataverse Users Community

So it relies on both Google's indexing and enriched metadata in the form of json-ld. Both need to be in order for datasets to be picked up.
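For the json-ld half, a minimal sketch of how one might inspect what a dataset page embeds, using only the Python standard library. The sample page, the `dataset_records` and `license_type` helpers, and the idea of flagging the license @type are assumptions for illustration; this does not reproduce Google's validation.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the parsed contents of <script type="application/ld+json"> blocks."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._chunks = []
        self.documents = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._chunks = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._chunks.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            self.documents.append(json.loads("".join(self._chunks)))


def dataset_records(html: str) -> list:
    """Return the json-ld objects on the page whose @type is "Dataset"."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    return [d for d in parser.documents
            if isinstance(d, dict) and d.get("@type") == "Dataset"]


def license_type(record: dict):
    """Return the @type of the license object, or None if it is absent or a plain string."""
    lic = record.get("license")
    return lic.get("@type") if isinstance(lic, dict) else None


# Made-up page mimicking the license @type problem mentioned in this thread.
SAMPLE_PAGE = """<html><head>
<script type="application/ld+json">
{"@context": "http://schema.org", "@type": "Dataset",
 "name": "Example dataset",
 "license": {"@type": "Dataset",
             "url": "https://creativecommons.org/publicdomain/zero/1.0/"}}
</script>
</head><body></body></html>"""

if __name__ == "__main__":
    for record in dataset_records(SAMPLE_PAGE):
        print(record["name"], "license @type:", license_type(record))
```

A page with no "Dataset" record at all, or with an unexpected license @type, would be worth running through Google's rich results test linked earlier in the thread.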
