Caffe terrible accuracy (same net, same data) compared to matlab NNToolBox

Rick Feynman

Feb 24, 2015, 10:09:19 AM

to caffe...@googlegroups.com

Hi Everyone,

I seem to be in a bit of a fix. I was trying to test Caffe with HDF5 image classification, and it was not converging. To eliminate variables and pinpoint potential issues, I decided to run a comparison with the MATLAB NN Toolbox. To this end, I extracted a 1764-length HOG descriptor for each 64x64 input image. There were 23667 images in total, which I split into training and validation sets at a ratio of 80:20.

Then I saved the images and labels into two HDF5 files, for training and testing respectively. Download data from OneDrive (~127 MB).

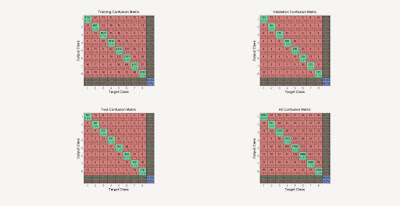

I used the same data to train a network with the NN Toolbox in MATLAB. The following is the image of the network (1764 inputs, 100 tanh hidden units, 8 linear outputs). I am also attaching the confusion matrices. The net achieves around 80% accuracy.

Then I ran the same experiment with Caffe. I am attaching the solver prototxt, the network file, and the stderr output. However, after 70000 iterations the network achieves only 35% accuracy, which is far below the MATLAB toolbox's accuracy.

What is going wrong?

I had to do this because the convnet was not converging on the original images. Now I see that even a simple MLP does not converge. I have tried lowering the learning rate, both gaussian and xavier weight fillers, and both TanH and ReLU activations, but it is not improving.

I have a paper deadline looming and I need to do this comparison fast. Any help in this regard would be appreciated.

Kind Regards,

Rick

Nam Vo

Feb 24, 2015, 1:01:34 PM

to caffe...@googlegroups.com

Was the data preprocessing the same, and were the parameters similar in both cases (layers, activation function, weight filler, bias, lr, momentum, weight decay, gamma, etc.)?

Rick Feynman

Feb 24, 2015, 1:31:53 PM

to caffe...@googlegroups.com

The data preprocessing was the same, along with all other parameters except gamma and the weight filler, as MATLAB has no option to set those. Like I said, I have fiddled with all the parameters and still get no better than 35% accuracy, compared to ~80% in MATLAB. I have run the same experiment in Theano and get results similar to MATLAB's. The issue seems to appear only in Caffe.

Overclock

Feb 25, 2015, 11:37:37 PM

to caffe...@googlegroups.com

It seems to me that Caffe iterations just go through the training data in a fixed order. So if your input has all the data points of the same class next to each other, that might be a problem. I had the same experience. The accuracy went back to normal after I randomly shuffled the training data. Hope this helps.
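
For anyone wanting to try the shuffling suggestion, here is a minimal sketch of the idea in plain Python. It only shows how to reorder features and labels with one shared permutation so each row keeps its label; the function name `shuffle_in_unison`, the seed, and the toy data are made up for illustration, and reading or writing the actual HDF5 files is left out.

```python
import random


def shuffle_in_unison(features, labels, seed=0):
    """Return copies of features and labels reordered by one shared permutation."""
    if len(features) != len(labels):
        raise ValueError("features and labels must have the same length")
    order = list(range(len(features)))
    # A seeded generator keeps the shuffle reproducible between runs.
    random.Random(seed).shuffle(order)
    return [features[i] for i in order], [labels[i] for i in order]


# Toy data sorted by class, standing in for the HOG rows and their labels
# (hypothetical values, not the data from this thread).
features = [[float(i)] for i in range(12)]
labels = [i // 4 for i in range(12)]

shuffled_features, shuffled_labels = shuffle_in_unison(features, labels)
```

The shuffled arrays would then be written to the training HDF5 file in place of the class-sorted ones.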

Caio Mendes

Feb 26, 2015, 2:08:04 PM

to caffe...@googlegroups.com

So, I would remove the weight decay and test different learning rates. Caffe is very sensitive to learning rates.
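
A rough sketch of how such a sweep could be set up: the snippet below generates one solver definition per candidate learning rate, each with weight decay set to zero. The field names (`net`, `base_lr`, `lr_policy`, `momentum`, `weight_decay`, `max_iter`) are standard Caffe solver fields, but the net file name, the momentum value, the fixed learning-rate policy, and the list of candidate rates are assumptions for illustration, not the settings from the attached solver.

```python
def solver_text(base_lr, net_path="mlp_train_val.prototxt"):
    """Render a minimal solver definition for one learning rate.

    net_path is a hypothetical file name; momentum and lr_policy are
    assumed values, not taken from the original solver file.
    """
    lines = [
        f'net: "{net_path}"',
        f"base_lr: {base_lr}",
        'lr_policy: "fixed"',   # assumed: no decay schedule, so gamma is unused
        "momentum: 0.9",        # assumed value
        "weight_decay: 0.0",    # weight decay removed, as suggested
        "max_iter: 70000",      # iteration count mentioned in the thread
    ]
    return "\n".join(lines) + "\n"


# Candidate learning rates spaced by factors of ten (illustrative choice).
candidate_lrs = [0.1, 0.01, 0.001, 0.0001]
solvers = {lr: solver_text(lr) for lr in candidate_lrs}
```

Each generated text could be saved to its own solver file and trained separately, so the runs differ only in `base_lr`.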
