counting no. of flops for a single iteration of alexnet


Sam

Mar 4, 2016, 1:26:15 PM

to Caffe Users

Hi,

I want to calculate the number of FLOPs for a single iteration of AlexNet. Can anyone tell me how I can get it?

Evan Shelhamer

Mar 4, 2016, 3:51:10 PM

to Sam, Caffe Users

One way to do this is to compute the FLOPs from the network's blob and param shapes in pycaffe. I can't turn up the script right now, but the idea is that `net.blobs['top'].data.shape` gives the dimensions of a layer's output (which is the next layer's input), while `net.params['layer'][0].data.shape` and `net.params['layer'][1].data.shape` give the dimensions of the weights and biases respectively. From these you can calculate, layer by layer, the number of FLOPs/multiply-adds/whatever, given knowledge of the layer operation. With similar bookkeeping you can determine the computational cost of the backward pass, and finally the computational cost of the solver updates.
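The bookkeeping described above can be sketched as follows. This is not the script mentioned in the post, only a minimal illustration: `forward_macs` is a hypothetical helper, and it assumes every layer in `param_shapes` is a convolution or fully connected layer with a bias whose top blob has the same name as the layer. It works on plain shape tuples so it runs without Caffe; the comments show how the shapes could be read from pycaffe (file names are placeholders).

```python
from math import prod  # Python 3.8+


def forward_macs(param_shapes, top_shapes):
    """Return {layer: (weight_ops, bias_ops)} for conv and fc layers.

    param_shapes: {layer: (weight_shape, bias_shape)}
    top_shapes:   {layer: output blob shape}, batch dimension first
    """
    counts = {}
    for layer, (w_shape, b_shape) in param_shapes.items():
        out_shape = top_shapes[layer]
        batch = out_shape[0]
        # Output positions per channel: H * W for conv, 1 for fc
        # (prod of an empty tuple is 1).
        positions = prod(out_shape[2:])
        # Every weight is used once per output position per image.
        weight_ops = prod(w_shape) * positions * batch
        # One bias addition per output element.
        bias_ops = prod(b_shape) * positions * batch
        counts[layer] = (weight_ops, bias_ops)
    return counts


# With pycaffe available, the shapes could be collected like this
# (assumption: each layer's top blob shares the layer's name):
#   net = caffe.Net('deploy.prototxt', 'weights.caffemodel', caffe.TEST)
#   param_shapes = {k: (v[0].data.shape, v[1].data.shape)
#                   for k, v in net.params.items()}
#   top_shapes = {k: net.blobs[k].data.shape for k in net.params}

example = forward_macs(
    {"conv2": ((256, 48, 5, 5), (256,)), "fc8": ((1000, 4096), (1000,))},
    {"conv2": (1, 256, 27, 27), "fc8": (1, 1000)},
)
```

For the two example layers this gives `(223948800, 186624)` for conv2 and `(4096000, 1000)` for fc8, matching the per-layer figures listed in this post.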

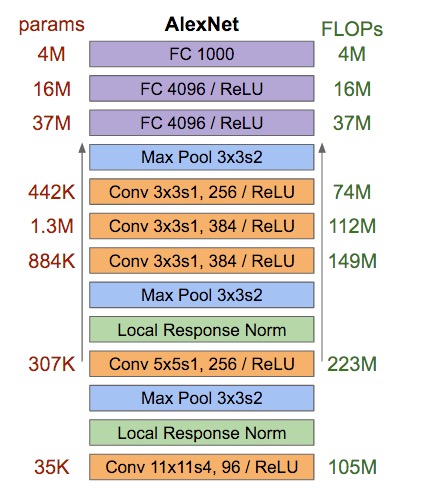

Here are a few notes on the AlexNet forward pass with batch size 1 that could help you check your work. (Note this is the original AlexNet, and not CaffeNet, which is slightly cheaper to compute.)

FLOPs:

- 725,066,088 for all conv + fc w/ biases

- 659,272 for ReLU

- 27,000 for pooling

- 20,000 for LRN

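| layer | weight ops | bias ops |
| --- | ---: | ---: |
| conv1 | 105,415,200 | 290,400 |
| conv2 | 223,948,800 | 186,624 |
| conv3 | 149,520,384 | 64,896 |
| conv4 | 112,140,288 | 64,896 |
| conv5 | 74,760,192 | 43,264 |
| fc6 | 37,748,736 | 4,096 |
| fc7 | 16,777,216 | 4,096 |
| fc8 | 4,096,000 | 1,000 |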

For example, conv2 has 256 * (96 / 2) * 5^2 = 307,200 params
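As a cross-check, the per-layer figures can be reproduced from layer hyperparameters alone. The sketch below is an illustration, not the original script. The kernel sizes, channel counts, output sizes and `group` values are assumptions taken from the Caffe reference AlexNet definition (`group: 2` on conv2, conv4 and conv5), and the names `CONV`, `FC` and `layer_ops` are made up for this example.

```python
# (name, num_output, input_channels, kernel_size, group, output_height_and_width)
CONV = [
    ("conv1", 96, 3, 11, 1, 55),
    ("conv2", 256, 96, 5, 2, 27),
    ("conv3", 384, 256, 3, 1, 13),
    ("conv4", 384, 384, 3, 2, 13),
    ("conv5", 256, 384, 3, 2, 13),
]
# (name, num_output, num_input)
FC = [("fc6", 4096, 9216), ("fc7", 4096, 4096), ("fc8", 1000, 4096)]


def layer_ops():
    """Return a list of (name, weight_ops, bias_ops) for batch size 1."""
    rows = []
    for name, n_out, c_in, k, group, hw in CONV:
        # With groups, each filter only sees c_in / group input channels.
        params = n_out * (c_in // group) * k * k
        # Each weight is applied once per output position.
        rows.append((name, params * hw * hw, n_out * hw * hw))
    for name, n_out, n_in in FC:
        rows.append((name, n_out * n_in, n_out))
    return rows


rows = layer_ops()
total = sum(w + b for _, w, b in rows)  # 725066088
```

The sum of weight and bias ops comes to 725,066,088, the conv + fc total quoted above.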

Schematic of params and forward FLOPs (on one 3x227x227 image):

Hope that helps,

Evan Shelhamer


Peter Neher

Apr 20, 2016, 2:24:00 AM

to Caffe Users, samir...@gmail.com

You are only counting the number of multiplications here, right? I count both multiplications and additions and I get roughly double of your values.
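The factor of roughly two can be checked directly from the per-layer figures posted earlier in the thread. The sketch below compares two counting conventions; the variable names are illustrative, and the second convention (K multiplies, K - 1 accumulating adds and one bias add per output element, so 2K in total) is one common way of counting, assumed here rather than confirmed by the posters.

```python
# Weight multiply-adds per layer, copied from the figures earlier in the thread.
WEIGHT_OPS = {
    "conv1": 105415200, "conv2": 223948800, "conv3": 149520384,
    "conv4": 112140288, "conv5": 74760192,
    "fc6": 37748736, "fc7": 16777216, "fc8": 4096000,
}
# Bias additions per layer, from the same figures.
BIAS_OPS = {
    "conv1": 290400, "conv2": 186624, "conv3": 64896, "conv4": 64896,
    "conv5": 43264, "fc6": 4096, "fc7": 4096, "fc8": 1000,
}

macs = sum(WEIGHT_OPS.values())

# Convention A: one op per multiply-add, plus one op per bias addition.
table_total = macs + sum(BIAS_OPS.values())

# Convention B: multiplies and additions counted separately. An output with
# K weights costs K multiplies + (K - 1) adds + 1 bias add = 2K operations.
mul_and_add_total = 2 * macs

ratio = mul_and_add_total / table_total
```

Under these assumptions convention B gives 1,448,813,632 operations against 725,066,088 for convention A, a ratio just under 2.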

Nicolae Titus

Jul 6, 2016, 1:31:57 PM

to Caffe Users, samir...@gmail.com

Doesn't the CPU use multiply-add operations anyway? Counting additions shouldn't matter (just a guess, I haven't measured).

Istrate Roxana

Apr 12, 2017, 9:32:29 AM

to Caffe Users, samir...@gmail.com

I was trying to redo the computation myself, and starting from the second convolution layer I get different results.

The output of the first convolution is [55, 55, 96].

The output of the pooling is [27, 27, 96]. 96, not 96/2! Pooling doesn't modify the depth, does it?

=> The number of parameters in conv2 = 256 * 96 * 5^2.

Am I missing something?

Hosea Lee

Sep 28, 2017, 7:56:38 AM

to Caffe Users

Hi, I now have the same problem. Have you figured out why it should be 96/2? Could you please share your understanding? Thanks a lot~


Istrate Roxana

Sep 28, 2017, 8:13:22 AM

to Caffe Users

I think it was a mistake. I haven't figured out a reason why it should be 96/2.

Hosea Lee

Sep 28, 2017, 8:17:02 AM

to Caffe Users

Thank you. Then do you know why there are 307K params in conv2?


Istrate Roxana

Sep 28, 2017, 8:39:08 AM

to Caffe Users

Theoretically, the number of weights in a convolution layer is given by the following formula:

number_filters * filter_width * filter_height * filter_depth, where filter_depth is wrongly computed here as 96/2, when it should be 96.

They computed with filter_depth = 96/2, which gives 256 * (96 / 2) * 5^2 = 307,200 params.

I think the correct answer should have been 256 * 96 * 5 * 5.

What do you think? Does that make sense?

Hosea Lee

Sep 28, 2017, 9:03:00 AM

to Caffe Users

But I found that if we apply the same formula to the other layers, conv5 gives 3^2 * 256 * 384 = 884,736, which is twice the listed 442K. So the division by 2 does not seem to be an error. Besides, the FLOPs come out correct when calculated this way. Maybe there are some other factors in the first and last convolution layers?

Reference also available here: http://dgschwend.github.io/netscope/#/preset/alexnet
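One explanation consistent with these numbers is grouped convolution. Caffe's convolution layer has a `group` parameter, and the sketch below assumes the Caffe reference AlexNet definition sets `group: 2` on conv2, conv4 and conv5 while leaving conv1 and conv3 ungrouped. `conv_params` is a hypothetical helper written for this illustration, not a Caffe function.

```python
def conv_params(num_output, in_channels, kernel, group=1):
    """Weight count of a square-kernel convolution, excluding biases."""
    if in_channels % group or num_output % group:
        raise ValueError("channel counts must be divisible by group")
    # With groups, each filter is connected to in_channels / group inputs.
    return num_output * (in_channels // group) * kernel * kernel


conv2_grouped = conv_params(256, 96, 5, group=2)    # 307200
conv2_plain = conv_params(256, 96, 5)               # 614400
conv5_grouped = conv_params(256, 384, 3, group=2)   # 442368
conv5_plain = conv_params(256, 384, 3)              # 884736
conv3_plain = conv_params(384, 256, 3)              # 884736
```

Under that assumption, the halving in conv2 and conv5 and the absence of halving in conv1 and conv3 both follow from the same formula.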


Daniel Villamizar

Oct 11, 2017, 5:52:58 PM

to Caffe Users

Did anyone figure out the answer to this? The AlexNet paper shows the depth of the 2nd layer as 48 but the description states there are 96 kernel filters. Where does the divide by 2 come from?

Grant Zietsman

May 17, 2018, 4:42:39 PM

to Caffe Users

This is pretty late but I did the calculations independently and my results correlated with Evan's, although I didn't divide by 2.

I think the division by 2 is related to the fact that the AlexNet training was split across two GPUs. So if you don't have two GPUs, you can't divide by 2.

Accounting for both multiplications and accumulations while only considering the convolutional and pooling layers, my answer was a little over 2 GFLOPs. I would estimate that a worst-case implementation would require 2.5 GFLOPs.

There is a lot of overlap in the computation so if any optimization is performed you can expect a significantly lower number of required FLOPs.
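A figure of a little over 2 GFLOPs can be reproduced under one reading of this post: no division by 2 (no grouping), two operations per multiply-add, convolutional layers only. The sketch below is a guess at that calculation, not the poster's actual working. Pooling is left out because its contribution is small, and the layer sizes are the usual AlexNet ones, assumed here.

```python
# (name, num_output, input_channels, kernel_size, output_height_and_width)
CONV_LAYERS = [
    ("conv1", 96, 3, 11, 55),
    ("conv2", 256, 96, 5, 27),
    ("conv3", 384, 256, 3, 13),
    ("conv4", 384, 384, 3, 13),
    ("conv5", 256, 384, 3, 13),
]


def conv_flops_ungrouped(layers):
    """FLOPs for the conv layers, counting a multiply-add as two operations."""
    macs = sum(n_out * c_in * k * k * hw * hw
               for _, n_out, c_in, k, hw in layers)
    return 2 * macs


flops = conv_flops_ungrouped(CONV_LAYERS)  # 2153268288
```

That is about 2.15 GFLOPs for one 227x227 image. With grouping on conv2, conv4 and conv5, the same count would be lower.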

