Bonsai.Shaders: floating point versus integer textures


jonathan...@gmail.com

Jul 1, 2021, 10:15:54 AM

to Bonsai Users

Hi,

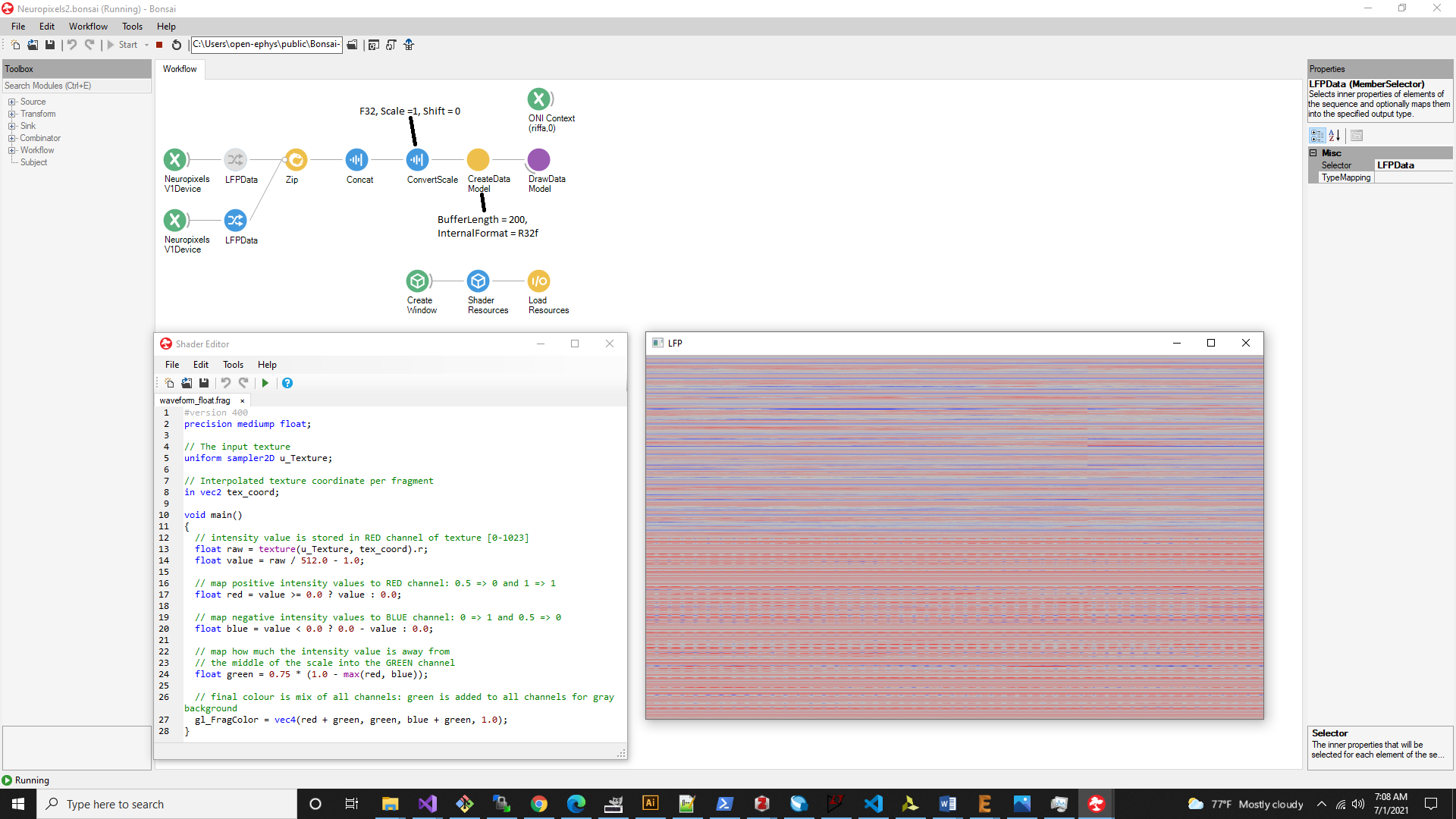

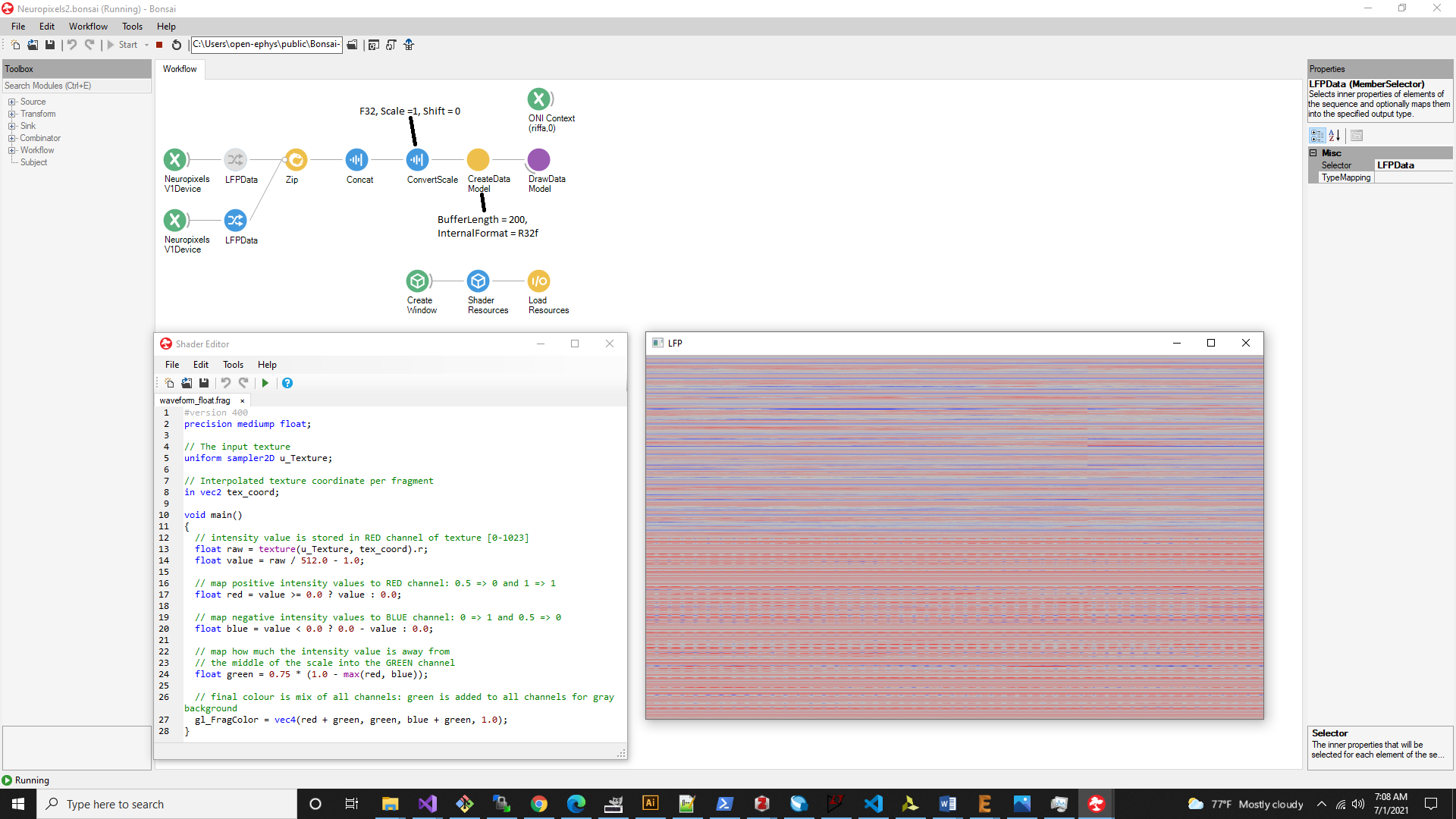

I'm making progress on using Bonsai.Shaders and C# OpenGL bindings to create a Neuropixels data visualizer based on the NeuroSeeker library and guidance from my original post:

I'm also learning a lot about OpenGL along the way. I can see now why people are excited about Vulkan :). I'm very close to getting the behavior I want, but I'm stuck on one last detail that I'm failing to understand. The issue comes down to this: I get proper visualization if I:

- Provide OpenCV Mats with elements of Depth.F32

- Use a sampler2D in my fragment shader

- Use the R32f internal texture format

but not if I:

- Provide OpenCV Mats with elements of Depth.U16

- Use a usampler2D in my fragment shader

- Use the R16ui internal texture format
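For reference, the OpenGL rule behind the two setups listed above is that normalized and floating-point internal formats are read with sampler2D, while unsigned integer formats such as R16ui need usampler2D. Integer textures also need Nearest filtering and an integer pixel transfer format (such as RedInteger) at upload time. The checker below is a hypothetical helper, not a Bonsai or OpenTK API; it encodes those rules with plain strings mirroring OpenTK spellings. A Linear filter or a non-integer pixel format is one possible reason an R16ui/usampler2D pipeline shows nothing, but that cause is not confirmed in this thread.

```python
# Hypothetical checker (not a Bonsai or OpenTK API): it encodes the OpenGL
# rules for pairing a texture's internal format with a GLSL sampler type.
# Format and enum names are plain strings that mirror OpenTK spellings.

NORMALIZED = {"R8", "R16", "Luminance"}    # read as floats scaled to [0, 1]
FLOAT = {"R16f", "R32f"}                   # read as stored floating-point values
UNSIGNED_INT = {"R8ui", "R16ui", "R32ui"}  # read as raw unsigned integers
SIGNED_INT = {"R8i", "R16i", "R32i"}       # read as raw signed integers


def check_texture(internal_format, sampler, texture_filter, pixel_format):
    """Return a list of problems; an empty list means the setup looks valid.

    texture_filter stands for both the min and mag filter settings.
    pixel_format is the format of the data passed when uploading the texture.
    """
    if internal_format in NORMALIZED or internal_format in FLOAT:
        expected_sampler, is_integer = "sampler2D", False
    elif internal_format in UNSIGNED_INT:
        expected_sampler, is_integer = "usampler2D", True
    elif internal_format in SIGNED_INT:
        expected_sampler, is_integer = "isampler2D", True
    else:
        return [f"unknown internal format: {internal_format}"]

    problems = []
    if sampler != expected_sampler:
        problems.append(f"{internal_format} must be read with {expected_sampler}, not {sampler}")
    if is_integer:
        # Integer textures cannot be filtered; a Linear filter leaves the
        # texture incomplete, so lookups will not return the uploaded data.
        if texture_filter != "Nearest":
            problems.append("integer formats need Nearest filtering")
        # Integer data must be uploaded with an integer pixel transfer format.
        if not pixel_format.endswith("Integer"):
            problems.append("integer formats need an integer pixel format such as RedInteger")
    elif pixel_format.endswith("Integer"):
        # The reverse mismatch is also an upload error.
        problems.append("non-integer formats cannot be uploaded from an integer pixel format")
    return problems
```

For example, `check_texture("R16ui", "usampler2D", "Linear", "Red")` reports two problems, while the same call with `"Nearest"` and `"RedInteger"` returns an empty list.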

Here is the non-functional ushort pipeline with the conversion step removed (CV.Mats have Depth.U16):

Any insight would be great.

- Jon

Gonçalo Lopes

Jul 4, 2021, 8:12:00 PM

to jonathan...@gmail.com, Bonsai Users

Hi Jon,

I'm pretty sure I was able to upload U16 data directly to shaders in the past. I think there is a way to have OpenGL automatically convert the data to floating point, so you can use the normal sampler instead of a usampler.

To try to reconstruct this, I hacked up the waveform visualizer sample below a bit and was able to upload a U16 microphone Mat to a fragment shader by using either Luminance or R16 as the internal format (but not R16i, which is a signed integer format).

I believe OpenGL/GLSL internally scales these formats to the range [0, 1], matching the dynamic range of the declared internal format. It's all done at the GPU/driver level, so it's quite fast; maybe that's useful for your purposes?
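The [0, 1] scaling described above can be sketched on the CPU. For an unsigned normalized 16-bit format such as R16, the raw ushort is divided by 65535, so a sampler2D sees a float. The helper names below are illustrative only; on the GPU the inverse would simply be `texel.r * 65535.0` in the shader.

```python
# Sketch of what the GPU does with an unsigned normalized 16-bit format
# such as R16: the raw ushort is divided by 65535, so a sampler2D sees a
# float in [0, 1]. The helper names here are illustrative only.

U16_MAX = 65535


def r16_texel(raw):
    """Value a sampler2D returns for a raw ushort stored in an R16 texture."""
    if not 0 <= raw <= U16_MAX:
        raise ValueError("raw value must fit in an unsigned 16-bit integer")
    return raw / U16_MAX


def raw_from_texel(texel):
    """Recover the raw sample, as a shader could with texel.r * 65535.0."""
    return round(texel * U16_MAX)


for raw in (0, 512, 32768, 65535):
    print(raw, r16_texel(raw), raw_from_texel(r16_texel(raw)))
```

Because a 32-bit float carries 24 bits of mantissa, every 16-bit value should survive this round trip, so using the normalized format should not cost any precision on the raw data.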

Can you send your current example workflow so I can monkey around with it a bit? Otherwise maybe these examples will be useful.

--

You received this message because you are subscribed to the Google Groups "Bonsai Users" group.

To unsubscribe from this group and stop receiving emails from it, send an email to bonsai-users...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/bonsai-users/8a5dd640-123d-4088-9bdf-efae044461een%40googlegroups.com.

jonathan...@gmail.com

Jul 6, 2021, 4:14:12 PM

to Bonsai Users

Hi Goncalo,

Yep! That does it. I have such a hard time figuring out how data type conversion works in this OpenGL world. I guess reading the Red Book or playing with this stuff for a while would help me figure it out, but it's a pretty intimidating API. In any case, this is now working great, so thanks. Here are the relevant working workflow and shaders for anyone who might benefit from them:

I'm now going to try to make versions of the CreateDataModel and ViewDataModel nodes that plot line strips for actual waveform views. Or would you suggest creating this functionality directly in Bonsai, using Bonsai.Shaders to build a workflow node? I don't know if I can get the same level of buffering performance that way, though.

- Jon

Gonçalo Lopes

Jul 11, 2021, 8:51:40 PM

to jonathan...@gmail.com, Bonsai Users

Buffering all the data as line vertices will definitely be slower... I once tried an alternative approach to rendering line drawings that performs similarly to the textures.

The idea is to model lines parametrically in the fragment shader by turning line rendering upside down: for each pixel, ask whether it is inside or outside the waveform. If it is inside, the output is the line color; otherwise, the output is transparent.

To check this, it is enough to sample the data from the texture and test whether the height of the pixel (texCoord.y) falls within some distance (thickness) of the value stored in the texture at that horizontal position (texCoord.x). See below (also attached):

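```glsl
#version 400
uniform float thickness = 0.01;  // max distance from the data value, in texture coordinates
uniform sampler2D tex;           // waveform samples, read as floats
in vec2 texCoord;
out vec4 fragColor;

void main()
{
    // Data value stored at this pixel's horizontal position
    vec4 texel = texture(tex, texCoord);
    // Inside the line if the pixel height is within thickness of the value
    float value = abs(texCoord.y - texel.r) <= thickness ? 1.0 : 0.0;
    // White inside the line, black outside
    fragColor = vec4(value, value, value, 1.0);
}
```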

I find it quite satisfying that it takes about the same complexity to render the line version as the texture version :)
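To check the shader math outside the GPU, the per-pixel test can be emulated on the CPU. This is a sketch under two assumptions not stated in the thread: the samples are already in [0, 1], and each pixel column reads its nearest sample (no linear interpolation between texels).

```python
# CPU emulation of the line fragment shader above, for checking the math
# outside the GPU. Assumptions (not from the thread): samples are already
# in [0, 1], and each pixel column reads its nearest sample.

def render_line(samples, width, height, thickness=0.01):
    """Return a height x width grid of 0/1 values (row 0 is texCoord.y near 0)."""
    n = len(samples)
    image = []
    for j in range(height):
        y = (j + 0.5) / height  # texCoord.y at the pixel center
        row = []
        for i in range(width):
            x = (i + 0.5) / width  # texCoord.x at the pixel center
            k = min(int(x * n), n - 1)  # nearest-neighbor texel lookup
            # Same test as the shader: inside if within thickness of the value.
            row.append(1 if abs(y - samples[k]) <= thickness else 0)
        image.append(row)
    return image


for row in reversed(render_line([0.2, 0.5, 0.8, 0.5], width=8, height=8, thickness=0.07)):
    print("".join("#" if v else "." for v in row))
```

Printing a waveform with large jumps between samples, as in the usage lines, makes the isolated segments easy to see.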

There are a few caveats to solve, though. Since the points are not connected, if there is not enough horizontal sampling the waveform will start to "tear" and reveal that it is made of discrete points rather than a line. This would require some interpolation strategy to fill in the gaps.
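One possible gap-filling strategy, not something tried in the thread, is to treat each pixel column as a vertical segment joining the previous sample to the current one: a pixel is inside if its height lies anywhere in the span between the two values, padded by the thickness. The CPU sketch below shows the idea with nearest-neighbor lookups.

```python
# One possible gap-filling strategy (not something tried in the thread):
# treat each pixel column as a vertical segment joining the previous
# sample to the current one, so steep jumps stay connected.

def render_connected(samples, width, height, thickness=0.01):
    """Return a height x width grid of 0/1 values (row 0 is texCoord.y near 0)."""
    n = len(samples)
    image = []
    for j in range(height):
        y = (j + 0.5) / height  # texCoord.y at the pixel center
        row = []
        for i in range(width):
            x = (i + 0.5) / width  # texCoord.x at the pixel center
            k = min(int(x * n), n - 1)  # nearest-neighbor texel lookup
            prev, cur = samples[max(k - 1, 0)], samples[k]
            # Inside if y lies in the span between the two samples, padded by thickness.
            lo = min(prev, cur) - thickness
            hi = max(prev, cur) + thickness
            row.append(1 if lo <= y <= hi else 0)
        image.append(row)
    return image
```

In a shader, the previous sample could come from a second lookup one texel to the left, for example with `textureOffset(tex, texCoord, ivec2(-1, 0))`. When there are more pixel columns than samples, this simple version repeats the same vertical segment across those columns; interpolating with the fractional position between samples would give a smoother result.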

There is also some work to think about when rendering multiple channels. One approach I tried at the time was to use the vertical position (texCoord.y) to decide which row (channel) of the texture to sample. You need to use NearestNeighbor filtering for this to work properly, since linear filtering would blend values from adjacent channels.
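The multi-channel idea can be sketched by splitting the vertical axis into equal bands, one per channel. This assumes, hypothetically, a texture with one row per channel: texCoord.y picks the band (and thus the row), and the position inside the band plays the role of texCoord.y in the single-channel test. With Nearest filtering, a lookup at the raw texCoord.y returns exactly the row whose band contains the pixel; the helper below computes that row explicitly.

```python
import math

# Sketch of the multi-channel layout, assuming (hypothetically) one texture
# row per channel with channels stacked vertically in equal-height bands.


def channel_lookup(y, n_channels):
    """Map texCoord.y to (channel row, y position inside that channel's band)."""
    scaled = y * n_channels
    channel = min(math.floor(scaled), n_channels - 1)
    return channel, scaled - channel


def render_channels(data, width, height, thickness=0.05):
    """data is a list of channels, each a list of samples in [0, 1]."""
    image = []
    for j in range(height):
        y = (j + 0.5) / height  # texCoord.y at the pixel center
        channel, local_y = channel_lookup(y, len(data))
        # Reading exactly this row is what Nearest filtering provides;
        # Linear filtering would mix in the neighboring channel rows.
        samples = data[channel]
        row = []
        for i in range(width):
            x = (i + 0.5) / width  # texCoord.x at the pixel center
            k = min(int(x * len(samples)), len(samples) - 1)
            row.append(1 if abs(local_y - samples[k]) <= thickness else 0)
        image.append(row)
    return image
```

For example, two flat channels at 0.2 and 0.8 each light up near the bottom and top of their own band, respectively.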

I never took this route to its logical conclusion, but given how much work I've seen done on parametric modelling of curves in shaders, it looks feasible and might be really performant.

