A scenario of tcp performance killer

678 views

Skip to first unread message

mingkun bian

May 7, 2021, 3:51:24 AM5/7/21

to BBR Development

Hi,

I found that some tcp packets of http/1.1 are sent very slowly in linux version 4.18.0, then I use bcc to collect some tcp stack and bbr info in tcp_ack、tcp_v4_send_check and tcp_retransmit_timer, then I find some surprising packet as following:

1. In line 66, why did not continue to send the remaining lost packets marked by rack but using rto?(we have changing the max rto to 1 second)

2. After first rto, why did ack not to trigger a new send but using tcp_retransmit_timer?

GET/ACK _time(us)|seq |ack |size |snd_nxt |rwnd |cwnd|sndbuf_pacing |min_rtt_us|rtt_cnt|round_start|full_bw|max_full_bw|write_seq|snd_una|packets_out|sacked_out|lost_out|retrans_out|tcp_state|bbr_state|srtt_us/8

1 GET _0 _0 _0 _822 _0 _83968 _47 _0 _9169873 _19745 _0 _0_0 _0 _0 _0 _0 _0 _0 _0 _0 _19745

2 SND _10 _0 _822 _0 _0 _83968 _47 _0 _9169873 _19745 _0 _0_0 _0 _0 _0 _0 _0 _0 _0 _0 _19745

3 SND _3170 _0 _822 _5313 _0 _83968 _47 _5313 _9169873 _19745 _0 _0_0 _0 _0 _0 _0 _0 _0 _0 _0 _19745

4 ACK _27370 _822 _5313 _0 _5313 _83968 _47 _5313 _9169873 _19745 _0 _0_0 _0 _0 _4 _0 _0 _0 _0 _0 _19745

5 GET _0 _0 _0 _904 _0 _94208 _51 _0 _9169873 _19745 _1 _1_0 _2773 _0 _0 _0 _0 _0 _0 _0 _20301

6 SND _5739 _0 _904 _8088 _0 _94208 _51 _45832 _9169873 _19745 _1 _1_0 _2773 _0 _0 _0 _0 _0 _0 _0 _20301

7 SND _6632 _8088 _904 _8088 _8088 _94208 _51 _230252_9169873 _19745 _1 _1_0 _2773 _0 _6 _0 _0 _0 _0 _0 _20301

8 SND _7527 _16176 _904 _8088 _16176 _94208 _51 _230252_9169873 _19745 _1 _1_0 _2773 _0 _12 _0 _0 _0 _0 _0 _20301

9 SND _8421 _24264 _904 _8088 _24264 _94208 _51 _230252_9169873 _19745 _1 _1_0 _2773 _0 _18 _0 _0 _0 _0 _0 _20301

10 SND _9314 _32352 _904 _8088 _32352 _94208 _51 _230252_9169873 _19745 _1 _1_0 _2773 _0 _24 _0 _0 _0 _0 _0 _20301

11 SND _10207 _40440 _904 _8088 _40440 _94208 _51 _230252_9169873 _19745 _1 _1_0 _2773 _0 _30 _0 _0 _0 _0 _0 _20301

12 SND _11101 _48528 _904 _5392 _48528 _94208 _51 _230252_9169873 _19745 _1 _1_0 _2773 _0 _36 _0 _0 _0 _0 _0 _20301

13 SND _11700 _53920 _904 _8088 _53920 _94208 _51 _230252_9169873 _19745 _1 _1_0 _2773 _0 _40 _0 _0 _0 _0 _0 _20301

14 SND _12592 _62008 _904 _6740 _62008 _94208 _51 _230252_9169873 _19745 _1 _1_0 _2773 _0 _46 _0 _0 _0 _0 _0 _20301

15 ACK _23791 _904 _1348 _0 _68748 _94208 _51 _230252_9169873 _19745 _1 _1_0 _2773 _0 _51 _0 _0 _0 _0 _0 _20301

16 SND _23799 _68748 _904 _2696 _68748 _93184 _52 _228904_9169873 _18053 _2 _1_0 _2773 _1348 _50 _0 _0 _0 _0 _0 _20020

17 ACK _33807 _904 _8088 _0 _71444 _93184 _52 _228904_9169873 _18053 _2 _1_0 _2773 _1348 _52 _0 _0 _0 _0 _0 _20020

18 SND _33817 _71444 _904 _6740 _71444 _86528 _52 _222164_9169873 _18053 _2 _0_0 _3586 _8088 _47 _0 _0 _0 _0 _0 _21026

19 ACK _33827 _904 _14828 _0 _78184 _86528 _52 _222164_9169873 _18053 _2 _0_0 _3586 _8088 _52 _0 _0 _0 _0 _0 _21026

20 SND _34583 _78184 _904 _6740 _78184 _79872 _52 _215424_9169873 _18053 _2 _0_0 _6570 _14828 _47 _0 _0 _0 _0 _0 _21797

21 ACK _59762 _904 _24264 _0 _84924 _79872 _52 _215424_9169873 _18053 _2 _0_0 _6570 _14828 _52 _0 _0 _0 _0 _0 _21797

22 SND _59773 _84924 _904 _8088 _84924 _70656 _52 _205988_9169873 _18053 _2 _0_0 _6570 _24264 _45 _0 _0 _0 _0 _0 _25714

23 SND _60672 _93012 _904 _1348 _93012 _70656 _52 _205988_9169873 _18053 _2 _0_0 _6570 _24264 _51 _0 _0 _0 _0 _0 _25714

24 ACK _64780 _904 _26960 _0 _94360 _70656 _52 _205988_9169873 _18053 _2 _0_0 _6570 _24264 _52 _0 _0 _0 _0 _0 _25714

25 ACK _64789 _904 _32352 _0 _94360 _68096 _52 _203292_9169873 _18053 _2 _0_0 _6570 _26960 _50 _0 _0 _0 _0 _0 _29545

26 ACK _68764 _904 _40440 _0 _94360 _62976 _52 _197900_9169873 _18053 _2 _0_0 _6818 _32352 _46 _0 _0 _0 _0 _0 _32898

27 SND _68773 _94360 _904 _1376 _94360 _55296 _52 _189812_9169873 _18053 _2 _0_0 _7985 _40440 _40 _0 _0 _0 _0 _0 _36217

28 ACK _68782 _904 _43136 _0 _95736 _55296 _52 _189812_9169873 _18053 _2 _0_0 _7985 _40440 _42 _0 _0 _0 _0 _0 _36217

29 ACK _68789 _904 _48528 _0 _95736 _52736 _52 _187116_9169873 _18053 _2 _0_0 _8515 _43136 _40 _0 _0 _0 _0 _0 _39012

30 ACK _68805 _904 _68748 _0 _95736 _47616 _52 _181724_9169873 _18053 _2 _0_0 _9579 _48528 _36 _0 _0 _0 _0 _0 _41458

31 ACK _68813 _904 _83576 _0 _95736 _65536 _67 _161504_9169873 _18053 _2 _0_0 _13567 _68748 _21 _0 _0 _0 _0 _0 _43489

32 SND _68933 _95736 _904 _4016 _95736 _65536 _78 _146676_9169873 _18053 _3 _1_24456 _24456 _83576 _10 _0 _0 _0 _0 _0 _43679

33 SND _69381 _99752 _904 _8088 _99752 _65536 _78 _146676_9169873 _18053 _3 _1_24456 _24456 _83576 _13 _0 _0 _0 _0 _0 _43679

34 SND _70274 _107840 _904 _8088 _107840 _65536 _78 _146676_9169873 _18053 _3 _1_24456 _24456 _83576 _19 _0 _0 _0 _0 _0 _43679

35 SND _71167 _115928 _904 _8088 _115928 _65536 _78 _146676_9169873 _18053 _3 _1_24456 _24456 _83576 _25 _0 _0 _0 _0 _0 _43679

36 SND _72061 _124016 _904 _8088 _124016 _65536 _78 _146676_9169873 _18053 _3 _1_24456 _24456 _83576 _31 _0 _0 _0 _0 _0 _43679

37 SND _72955 _132104 _904 _8088 _132104 _65536 _78 _146676_9169873 _18053 _3 _1_24456 _24456 _83576 _37 _0 _0 _0 _0 _0 _43679

38 SND _73848 _140192 _904 _5392 _140192 _65536 _78 _146676_9169873 _18053 _3 _1_24456 _24456 _83576 _43 _0 _0 _0 _0 _0 _43679

39 SND _74448 _145584 _904 _3528 _145584 _65536 _78 _146676_9169873 _18053 _3 _1_24456 _24456 _83576 _47 _0 _0 _0 _0 _0 _43679

40 ACK _81885 _904 _86272 _0 _149112 _65536 _78 _146676_9169873 _18053 _3 _1_24456 _24456 _83576 _50 _0 _0 _0 _0 _0 _43679

41 SND _81894 _149112 _904 _2696 _149112 _65536 _80 _143980_9169873 _18053 _3 _0_24456 _24456 _86272 _48 _0 _0 _0 _0 _0 _44132

42 ACK _98831 _904 _115928 _0 _151808 _65536 _80 _143980_9169873 _18053 _3 _0_24456 _24456 _86272 _50 _0 _0 _0 _0 _0 _44132

43 SND _98844 _151808 _904 _8088 _151808 _65536 _103 _114324_9169873 _18053 _4 _1_24456 _24456 _115928 _27 _0 _0 _0 _0 _0 _43498

44 SND _99738 _159896 _904 _8088 _159896 _65536 _103 _114324_9169873 _18053 _4 _1_24456 _24456 _115928 _33 _0 _0 _0 _0 _0 _43498

45 SND _100631 _167984 _904 _8088 _167984 _65536 _103 _114324_9169873 _18053 _4 _1_24456 _24456 _115928 _39 _0 _0 _0 _0 _0 _43498

46 SND _101525 _176072 _904 _5392 _176072 _65536 _103 _114324_9169873 _18053 _4 _1_24456 _24456 _115928 _45 _0 _0 _0 _0 _0 _43498

47 ACK _106852 _904 _132104 _0 _181464 _65536 _103 _114324_9169873 _18053 _4 _1_24456 _24456 _115928 _49 _0 _0 _0 _0 _0 _43498

48 SND _106861 _181464 _904 _8088 _181464 _65536 _103 _98148 _9169873 _18053 _4 _0_24456 _24456 _132104 _37 _0 _0 _0 _0 _0 _42521

49 SND _107753 _189552 _904 _1864 _189552 _65536 _103 _98148 _9169873 _18053 _4 _0_24456 _24456 _132104 _43 _0 _0 _0 _0 _0 _42521

50 ACK _111779 _904 _146932 _0 _191416 _65536 _103 _98148 _9169873 _18053 _4 _0_24456 _24456 _132104 _45 _0 _0 _0 _0 _0 _42521

51 SND _111788 _191416 _904 _8088 _191416 _65536 _103 _83320 _9169873 _18053 _4 _0_24456 _24456 _146932 _34 _0 _0 _0 _0 _0 _42059

52 SND _112681 _199504 _904 _8088 _199504 _65536 _103 _83320 _9169873 _18053 _4 _0_24456 _24456 _146932 _40 _0 _0 _0 _0 _0 _42059

53 SND _113576 _207592 _904 _4876 _207592 _65536 _103 _83320 _9169873 _18053 _4 _0_24456 _24456 _146932 _46 _0 _0 _0 _0 _0 _42059

54 ACK _116768 _904 _151808 _0 _212468 _65536 _103 _83320 _9169873 _18053 _4 _0_24456 _24456 _146932 _50 _0 _0 _0 _0 _0 _42059

55 SND _116776 _212468 _904 _4876 _212468 _65536 _103 _78444 _9169873 _18053 _4 _0_24456 _24456 _151808 _46 _0 _0 _0 _0 _0 _42092

56 ACK _131762 _904 _178768 _0 _217344 _65536 _103 _78444 _9169873 _18053 _4 _0_24456 _24456 _151808 _50 _0 _0 _0 _0 _0 _42092

57 SND _131773 _217344 _904 _8088 _217344 _65536 _103 _51484 _9169873 _18053 _5 _1_24456 _24456 _178768 _30 _0 _0 _0 _0 _0 _40945

58 SND _132666 _225432 _904 _4820 _225432 _65536 _103 _51484 _9169873 _18053 _5 _1_24456 _24456 _178768 _36 _0 _0 _0 _0 _0 _40945

59 ACK _136771 _904 _194112 _0 _230252 _65536 _103 _51484 _9169873 _18053 _5 _1_24456 _24456 _178768 _40 _0 _0 _0 _0 _0 _40945

60 ACK _144761 _904 _196808 _0 _230252 _65536 _103 _36140 _9169873 _18053 _5 _0_24456 _24456 _194112 _28 _0 _0 _0 _0 _0 _40233

61 ACK _153778 _904 _196808 _0 _230252 _62976 _103 _33444 _9169873 _18053 _5 _0_24456 _24456 _196808 _26 _1 _0 _0 _1 _0 _39325

62 SND _153789 _196808 _904 _1348 _230252 _63488 _10 _33444 _1945318 _18053 _6 _1_24456 _24456 _196808 _26 _2 _15_0 _3 _2 _37160

63 ACK _158763 _904 _196808 _0 _230252 _63488 _10 _33444 _1945318 _18053 _6 _1_24456 _24456 _196808 _26 _2 _15_1 _3 _2 _37160

64 SND _158774 _198156 _904 _1348 _230252 _63488 _2 _33444 _2431647 _18053 _6 _0_24456 _24456 _196808 _26 _3 _23_1 _3 _2 _35778

65 ACK _171767 _904 _198156 _0 _230252 _63488 _2 _33444 _2431647 _18053 _6 _0_24456 _24456 _196808 _26 _3 _23_2 _3 _2 _35778

66 ACK _181819 _904 _199504 _0 _230252 _62464 _4 _32096 _2431647 _18053 _7 _1_24456 _24456 _198156 _25 _3 _22_1 _3 _2 _75805

67 RTSND _889192 _-100789098_-1348593670_0 _230252 _61440 _100 _30748 _1458988 _18053 _7 _0_0 _24456 _199504 _24 _3 _0 _0 _1 _2 _111455

68 SND _889202 _199504 _904 _1348 _230252 _61440 _1 _30748 _1458988 _18053 _7 _1_0 _24456 _199504 _24 _3 _21_0 _4 _2 _111455

69 ACK _913780 _904 _200852 _0 _230252 _61440 _1 _30748 _1458988 _18053 _7 _1_0 _24456 _199504 _24 _3 _21_1 _4 _2 _111455

70 RTSND _1721191 _-100789098_-1348593670_0 _230252 _60928 _100 _29400 _2431647 _18053 _8 _1_0 _24456 _200852 _23 _3 _0 _0 _1 _2 _142898

71 SND _1721201 _200852 _904 _1348 _230252 _60928 _1 _29400 _2431647 _18053 _8 _1_0 _24456 _200852 _23 _3 _20_0 _4 _2 _142898

72 ACK _1741783 _904 _202200 _0 _230252 _60928 _1 _29400 _2431647 _18053 _8 _1_0 _24456 _200852 _23 _3 _20_1 _4 _2 _142898

73 RTSND _2682200 _-100789098_-1348593670_0 _230252 _60928 _100 _28052 _2431647 _18053 _9 _1_0 _24456 _202200 _22 _3 _0 _0 _1 _2 _169911

74 SND _2682209 _202200 _904 _1348 _230252 _60928 _1 _28052 _2431647 _18053 _9 _1_0 _24456 _202200 _22 _3 _19_0 _4 _2 _169911

75 ACK _2701763 _904 _203548 _0 _230252 _60928 _1 _28052 _2431647 _18053 _9 _1_0 _24456 _202200 _22 _3 _19_1 _4 _2 _169911

76 RTSND _3642192 _-100789098_-1348593670_0 _230252 _60928 _88 _26704 _2431647 _18053 _10 _1_0 _24456 _203548 _21 _3 _0 _0 _1 _2 _193422

77 SND _3642204 _203548 _904 _1348 _230252 _60928 _1 _26704 _2431647 _18053 _10 _1_0 _24456 _203548 _21 _3 _18_0 _4 _2 _193422

78 ACK _3666780 _904 _204896 _0 _230252 _60928 _1 _26704 _2431647 _18053 _10 _1_0 _24456 _203548 _21 _3 _18_1 _4 _2 _193422

79 RTSND _4665208 _-100789098_-1348593670_0 _230252 _60928 _88 _25356 _2431647 _18053 _11 _1_0 _24456 _204896 _20 _3 _0 _0 _1 _2 _214619

80 SND _4665217 _204896 _904 _1348 _230252 _60928 _1 _25356 _2431647 _18053 _11 _1_0 _24456 _204896 _20 _3 _17_0 _4 _2 _214619

81 ACK _4684821 _904 _206244 _0 _230252 _60928 _1 _25356 _2431647 _18053 _11 _1_0 _24456 _204896 _20 _3 _17_1 _4 _2 _214619

82 RTSND _5690190 _-100789098_-1348593670_0 _230252 _60928 _88 _24008 _2431647 _18053 _12 _1_0 _24456 _206244 _19 _3 _0 _0 _1 _2 _232542

83 SND _5690199 _206244 _904 _1348 _230252 _60928 _1 _24008 _2431647 _18053 _12 _1_0 _24456 _206244 _19 _3 _16_0 _4 _2 _232542

84 ACK _5709796 _904 _207592 _0 _230252 _60928 _1 _24008 _2431647 _18053 _12 _1_0 _24456 _206244 _19 _3 _16_1 _4 _2 _232542

85 RTSND _6713191 _-100789098_-1348593670_0 _230252 _60928 _88 _22660 _2431647 _18053 _13 _1_0 _24456 _207592 _18 _3 _0 _0 _1 _2 _248224

86 SND _6713202 _207592 _904 _1348 _230252 _60928 _1 _22660 _2431647 _18053 _13 _1_0 _24456 _207592 _18 _3 _15_0 _4 _2 _248224

87 ACK _6737764 _904 _208940 _0 _230252 _60928 _1 _22660 _2431647 _18053 _13 _1_0 _24456 _207592 _18 _3 _15_1 _4 _2 _248224

This may have nothing to do with bbr, but maybe you know this very well.

Thanks.

Neal Cardwell

May 7, 2021, 9:12:15 AM5/7/21

to mingkun bian, BBR Development

Hi,

Thanks for posting.

A few questions to get a little more context/info:

+ What is the exact kernel version you are using? ("uname -r -v")

+ What's the congestion control algorithm you are using? ("sysctl net.ipv4.tcp_congestion_control")

+ What is your qdisc setup? ("tc qdisc show")

+ When you say, "we have changing the max rto to 1 second", can you please post any custom patches or sysctl settings you have made, either as attachments or a branch on github?

+ Can you please provide a tcpdump packet trace of this traffic? ("tcpdump -w /tmp/test.pcap -s 120 -i $DEVICE -c 100000 port $PORT")

thanks,

neal

--

You received this message because you are subscribed to the Google Groups "BBR Development" group.

To unsubscribe from this group and stop receiving emails from it, send an email to bbr-dev+u...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/bbr-dev/7b1b87cd-c927-4589-ac91-b60a55bae7adn%40googlegroups.com.

mingkun bian

May 8, 2021, 2:28:03 AM5/8/21

to BBR Development

Hi Neal,

+ What is the exact kernel version you are using? ("uname -r -v")

>>The original kernel version is “linux-4.18.0-147.el8”, I download source code from “https://access.redhat.com/downloads/content/479/ver=/rhel---8/8.1/x86_64/packages”, then rebuild the kernel.

+ What's the congestion control algorithm you are using? ("sysctl net.ipv4.tcp_congestion_control")

>>net.ipv4.tcp_congestion_control = bbr

+ What is your qdisc setup? ("tc qdisc show")

>>

...

qdisc mq 0: dev enp5s0f1 root

qdisc fq 0: dev enp5s0f1 parent :20 limit 10000p flow_limit 100p buckets 1024 orphan_mask 1023 quantum 3028 initial_quantum 15140 refill_delay 40.0ms

qdisc fq 0: dev enp5s0f1 parent :1f limit 10000p flow_limit 100p buckets 1024 orphan_mask 1023 quantum 3028 initial_quantum 15140 refill_delay 40.0ms

qdisc fq 0: dev enp5s0f1 parent :1e limit 10000p flow_limit 100p buckets 1024 orphan_mask 1023 quantum 3028 initial_quantum 15140 refill_delay 40.0ms

qdisc fq 0: dev enp5s0f1 parent :1d limit 10000p flow_limit 100p buckets 1024 orphan_mask 1023 quantum 3028 initial_quantum 15140 refill_delay 40.0ms

qdisc fq 0: dev enp5s0f1 parent :1c limit 10000p flow_limit 100p buckets 1024 orphan_mask 1023 quantum 3028 initial_quantum 15140 refill_delay 40.0ms

...

+ When you say, "we have changing the max rto to 1 second", can you please post any custom patches or sysctl settings you have made, either as attachments or a branch on github?

>>

1. In tcp_timer.c->tcp_retransmit_timer, modification is as follows:

--- a/tcp_timer.c_bak

+++ b/tcp_timer.c

@@ -559,6 +559,9 @@ out_reset_timer:

} else {

/* Use normal (exponential) backoff */

icsk->icsk_rto = min(icsk->icsk_rto << 1, TCP_RTO_MAX);

+ if (icsk->icsk_rto > 1*HZ) {

+ icsk->icsk_rto = 1*HZ;

+ }

}

inet_csk_reset_xmit_timer(sk, ICSK_TIME_RETRANS,

tcp_clamp_rto_to_user_timeout(sk), TCP_RTO_MAX);

2. another modification, I change the max zero window probes to 1 second.

In tcp.h->tcp_probe0_when

--- a/tcp.h_bak

+++ b/tcp.h

@@ -1233,6 +1233,9 @@ static inline unsigned long tcp_probe0_when(const struct sock *sk,

unsigned long max_when)

{

u64 when = (u64)tcp_probe0_base(sk) << inet_csk(sk)->icsk_backoff;

+ if(when > 1*HZ){

+ when = 1*HZ;

+ }

return (unsigned long)min_t(u64, when, max_when);

}

+ Can you please provide a tcpdump packet trace of this traffic? ("tcpdump -w /tmp/test.pcap -s 120 -i $DEVICE -c 100000 port $PORT")

>>

The current network traffic is relatively large, it may take a time to catch packet trace by tcpdump, I will provide it as quickly as possible.

Now the data sampled by bcc is basically the same as tcpdump,and there is more detailed information than tcpdump,I have uploaded the aligned data to the attachment, it may look better.

For example:

"GET" means that an http GET request was received from client

"ACK" means that an tcp ACK was received from client

"SND" means that server send body(size is not 0) or ACK(size is 0) to client

"RTSND" means rto timeout, which enter "tcp_retransmit_timer", and next "SND" is the real send.

line 1, “GET” means that an http GET request was received from client, time is 0, seq and ack is 0, size is 822, rwnd(client_cwnd) is 83968 and so on.

line 2, "SND" means that server send response to client, time is 10 us, seq is 0, ack is 822, size is 0(Server ack client's "GET")

line3, "SND" means that server send response to client, time is 3170 us, seq is 0, ack is 822, size is 5313

line4, "ACK" means that an tcp ACK was received from client.

...

line 67, "RTSND" means rto timeout, which enter "tcp_retransmit_timer", the seq and ack is not a real value.

line 68, "SND" means the real send which is marked by line 67.

bcc collect tcp stack and bbr info at the entrance of tcp_ack、tcp_v4_send_check and tcp_retransmit_timer,so some fields need to update at next line,such as packets_out、sacked_out、lost_out、retrans_out.

Neal Cardwell

May 8, 2021, 12:26:35 PM5/8/21

to mingkun bian, BBR Development, Yuchung Cheng

Thanks for the info!

I have a theory: for the RTSND event in line 67 (enterring tcp_retransmit_timer()), it logs:

cwnd:100 ... lost_out:0 retrans_out:0 tcp_state:1 (TCP_CA_Disorder)

This suggests that at the time the RTO timer fired the flow was in TCP_CA_Disorder, with 0 packets marked as lost, and a big cwnd of 100. AFAICT this suggests that the previous ACK caused an undo event (probably based on TCP timestamps, aka an Eifel undo), which took the flow out of tcp_state:TCP_CA_Recovery and restored a large cwnd. Given that the flow was not in lost recovery, and did not think any packets were lost, that would explain why it's not retransmitting anything.

Hmm. My guess here is that the connection had reordering and then loss in the same flight of data, and triggered loss recovery, but when the connection detected the reordering and undid the loss recovery, it was left without any RACK reordering timer in place for a quick detection of the real loss.

Upon an undo event, it seems if something is SACKed in the scoreboard we should schedule the RACK reorder timer based on the previously SACKed data, rather than falling back to an RTO as it seems we do here.

We will try to look into this to see if the loss recovery behavior can be improved.

Thanks for the report!

best,

neal

ps: In general, to know why packets were not sent, we'd need to know the cwnd and pacing rate and packets_in_flight before the connection started to decide whether to transmit something. So I would suggest that instead of instrumenting the entrance of tcp_ack(), it would shed more like to instrument the entrance of tcp_xmit_recovery(), which would show the state variables after the connection has updated its SACK scoreboard, cwnd, and pacing rate, slightly before the point at which it decides how much to send.

To view this discussion on the web visit https://groups.google.com/d/msgid/bbr-dev/f4cd8535-8209-45ab-b052-f08864c7937dn%40googlegroups.com.

mingkun bian

May 10, 2021, 9:05:16 AM5/10/21

to BBR Development

Hi Neal,

I have updated the more detailed bcc info(add tsval、tsecr and tcp_sock' recv_sack_cache[4] ) to attachment, and add more bcc samples.

In tcp_ack,

tsecr = ((struct tcp_sock*)sk)->rx_opt.rcv_tsecr + ((struct tcp_sock*)sk)->tsoffset, but it does not look like

accurate sometimes.

For example:

1. In tcptrace.txt:

In line 66, you say that "AFAICT this suggests that the previous ACK caused an undo event (probably based on TCP timestamps, aka an Eifel undo)", and the tsecr is 2344362543(In SND lines, I can not find the same tsval, so I am not sure whether the calculation of tsecr is correct ),

In line 66, you say that "AFAICT this suggests that the previous ACK caused an undo event (probably based on TCP timestamps, aka an Eifel undo)", and the tsecr is 2344362543(In SND lines, I can not find the same tsval, so I am not sure whether the calculation of tsecr is correct ),

In line 62, the

timestamp of the first retransmission is 2344362876, so 2344362543 < 2344362876, Eifel will undo recovery.

A. “Given that the flow was not in lost recovery, and did not think any packets were lost, that would explain why it's not retransmitting anything"

>>In line 67, sndbuf is 30748, snd_una is 199504, snd_nxt is 230252, so there is not any more new date to send. assuming that there is still new data(sndbuf is bigger than 30748) to send at this time, should new data be sent?

B. “My guess here is that the connection had reordering and then loss in the same flight of data, and triggered loss recovery, but when the connection detected the reordering and undid the loss recovery, it was left without any RACK reordering timer in place for a quick detection of the real loss.”

>>According to the analysis of the above timestamp, Eifel undo the recovery in tcp_try_undo_partial,Is "without any RACK reordering timer" the logic of the red position?

static void tcp_fastretrans_alert(struct sock *sk, const u32 prior_snd_una,

bool is_dupack, int *ack_flag, int *rexmit)

...

/* E. Process state. */

switch (icsk->icsk_ca_state) {

case TCP_CA_Recovery:

if (!(flag & FLAG_SND_UNA_ADVANCED)) {

if (tcp_is_reno(tp) && is_dupack)

tcp_add_reno_sack(sk);

} else {

if (tcp_try_undo_partial(sk, prior_snd_una))

return;

/* Partial ACK arrived. Force fast retransmit. */

do_lost = tcp_is_reno(tp) ||

tcp_force_fast_retransmit(sk);

}

if (tcp_try_undo_dsack(sk)) {

tcp_try_keep_open(sk);

return;

}

tcp_identify_packet_loss(sk, ack_flag);

break;

C. In line 67 - 87, after rto sending, why does tcp state change to 1? In 67-69, Is it caused by the same reason Eifel(2344363273 < 2344363611)?

But it seems obvious that 69 line‘s "ACK" is the response of line 68' "SND". 2. In

tcptrace2 .txt:

"TLPS" means that TLP send, which enter "tcp_send_loss_probe", the next line "SND" is the real send.

In line 16, the timestamp of the first retransmission is 57722313

In line 17, the ACK's tsecr is the same 57722313, It does not satisfy

Eifel algorithm,but why does it still undo recovery?Is it because of DSACK(line 17‘s recv_sack_cache will update in line 18) “0-1348”.

In line 18 RTSND, tcp state is 1, but lost_out is 46 not 0, it's different from tcprate.txt.

3. In

tcptrace3 .txt:

In line 1067, the starting tcp state of the problem is 4.

4. In tcptrace8 .txt:

In line 403 404, the tsval and tsecr is the same 1250242242, Is this undo caused by DSACK?

Thanks

Neal Cardwell

May 10, 2021, 8:59:40 PM5/10/21

to mingkun bian, BBR Development

On Mon, May 10, 2021 at 9:05 AM mingkun bian <bianm...@gmail.com> wrote:

Hi Neal,

I have updated the more detailed bcc info(add tsval、tsecr and tcp_sock' recv_sack_cache[4] ) to attachment, and add more bcc samples.

I'm afraid that given this does not seem like a BBR bug, I don't have time to parse through all of the 8 traces you have provided. But I will try to offer some high-level thoughts.

In tcp_ack, tsecr = ((struct tcp_sock*)sk)->rx_opt.rcv_tsecr + ((struct tcp_sock*)sk)->tsoffset, but it does not look like accurate sometimes.For example:1. In tcptrace.txt:

In line 66, you say that "AFAICT this suggests that the previous ACK caused an undo event (probably based on TCP timestamps, aka an Eifel undo)", and the tsecr is 2344362543(In SND lines, I can not find the same tsval, so I am not sure whether the calculation of tsecr is correct ),In line 62, the timestamp of the first retransmission is 2344362876, so 2344362543 < 2344362876, Eifel will undo recovery.

So you can confirm that Eifel undo should happen in this case? Is this the same example with which you started this thread, or a new one?

A. “Given that the flow was not in lost recovery, and did not think any packets were lost, that would explain why it's not retransmitting anything">>In line 67, sndbuf is 30748, snd_una is 199504, snd_nxt is 230252, so there is not any more new date to send. assuming that there is still new data(sndbuf is bigger than 30748) to send at this time, should new data be sent?

If there is data in the sndbuf, and cwnd > packets_in_flight, then yes, generally something should be sent, unless pacing, the receive window, TSO deferral, TSQ, or Nagle algorithm prevent the transmission.

B. “My guess here is that the connection had reordering and then loss in the same flight of data, and triggered loss recovery, but when the connection detected the reordering and undid the loss recovery, it was left without any RACK reordering timer in place for a quick detection of the real loss.”>>According to the analysis of the above timestamp, Eifel undo the recovery in tcp_try_undo_partial,Is "without any RACK reordering timer" the logic of the red position?static void tcp_fastretrans_alert(struct sock *sk, const u32 prior_snd_una,bool is_dupack, int *ack_flag, int *rexmit).../* E. Process state. */switch (icsk->icsk_ca_state) {case TCP_CA_Recovery:if (!(flag & FLAG_SND_UNA_ADVANCED)) {if (tcp_is_reno(tp) && is_dupack)tcp_add_reno_sack(sk);} else {if (tcp_try_undo_partial(sk, prior_snd_una))return;/* Partial ACK arrived. Force fast retransmit. */do_lost = tcp_is_reno(tp) ||tcp_force_fast_retransmit(sk);}if (tcp_try_undo_dsack(sk)) {tcp_try_keep_open(sk);return;}tcp_identify_packet_loss(sk, ack_flag);break;

There are two red positions, so I'm not sure which one you are referring to. But in addition to the issue you are pointing out, that losses would have been identified at a point in the code *after* the tcp_try_undo_partial() short-circuits, there is the issue that tcp_clean_rtx_queue() has the following code, which prevents RACK decisions based on ACKs matching spuriously-retransmitted sequence ranges:

if (!tcp_skb_spurious_retrans(tp, skb))

tcp_rack_advance(tp, sacked, scb->end_seq,

tcp_skb_timestamp_us(skb));

tcp_rack_advance(tp, sacked, scb->end_seq,

tcp_skb_timestamp_us(skb));

C. In line 67 - 87, after rto sending, why does tcp state change to 1?

The value 1 is TCP_CA_Disorder, so if the state is changing to TCP_CA_Disorder then this is because there are SACKed sequence ranges without the sender having decided that something is lost.

In 67-69, Is it caused by the same reason Eifel(2344363273 < 2344363611)?But it seems obvious that 69 line‘s "ACK" is the response of line 68' "SND".

Sorry, I don't have time to parse that trace.

2. In tcptrace2 .txt:"TLPS" means that TLP send, which enter "tcp_send_loss_probe", the next line "SND" is the real send.In line 16, the timestamp of the first retransmission is 57722313In line 17, the ACK's tsecr is the same 57722313, It does not satisfy Eifel algorithm,but why does it still undo recovery?Is it because of DSACK(line 17‘s recv_sack_cache will update in line 18) “0-1348”.

If you are wondering why it undoes recovery, I would suggest instrumenting the undo-related functions (with "undo" in their name). DSACK would be one possible reason.

In line 18 RTSND, tcp state is 1, but lost_out is 46 not 0, it's different from tcprate.txt.3. In tcptrace3 .txt:In line 1067, the starting tcp state of the problem is 4.

Sorry, I don't have time to parse that trace.

4. In tcptrace8 .txt:In line 403 404, the tsval and tsecr is the same 1250242242, Is this undo caused by DSACK?

Here again, if you are wondering why it undoes recovery, I would suggest instrumenting the undo-related functions. DSACK would be one possible reason.

neal

To view this discussion on the web visit https://groups.google.com/d/msgid/bbr-dev/09e59d34-3cf4-4483-9840-e08c15c58a81n%40googlegroups.com.

Alexey Ivanov

May 10, 2021, 10:06:24 PM5/10/21

to Neal Cardwell, mingkun bian, BBR Development

Sorry for randomly jumping in. In case you are looking into possible

sources of network reordering I would suggest pinning your server

threads to CPUs (or trying enabling RFS in the kernel). In case your

are using Intel NICs you may consider disabling FlowDirector's ATR:

```

# ethtool --set-priv-flags eth0 flow-director-atr off

```

PS. We were recently troubleshooting a similar problem with

reordering, DSACKs, RACK heuristics, and Windows TCP stack[1].

[1] https://dropbox.tech/infrastructure/boosting-dropbox-upload-speed

> To view this discussion on the web visit https://groups.google.com/d/msgid/bbr-dev/CADVnQy%3DzK3szB3NKKPuNMyTVf91jSj%2Bqv-3KL4s%2BBc5dTp-VHA%40mail.gmail.com.

--

Sincerely,

Alexey Ivanov

sources of network reordering I would suggest pinning your server

threads to CPUs (or trying enabling RFS in the kernel). In case your

are using Intel NICs you may consider disabling FlowDirector's ATR:

```

# ethtool --set-priv-flags eth0 flow-director-atr off

```

PS. We were recently troubleshooting a similar problem with

reordering, DSACKs, RACK heuristics, and Windows TCP stack[1].

[1] https://dropbox.tech/infrastructure/boosting-dropbox-upload-speed

--

Sincerely,

Alexey Ivanov

mingkun bian

May 11, 2021, 9:39:00 AM5/11/21

to BBR Development

Hi Neal,

I have updated three tcpdump packet traces and corresponding bcc traces to

attachment, which represent three different scenarios.

1. tcptrace_10(undo because of DACK, then cyclic rto timeout):

a. In tcptrace_10.txt, in line 57, ack is 61405, and DSACK is 59977 - 61405(update in line 58), then undo recovery, but now all sndbuf data have sent,

and at this time, lost_out is 0, snd_una is 61405, snd_nxt is 66552, so now it can only wait rto timeout.

At similar scene, in line 60,DSACK cause undo.

b. Corresponding tcpdump is tcptrace_10.pacp, line is 58.

2. tcptrace_13(undo because of

Eifel, then cyclic rto timeout):

a. In tcptrace_13.txt, in line 1768, ack is 388225, packets_out is 17, sacked_out is 17, lost_out is 0(update in line 1769), this means that client have received all the data,

but in line 1769 - 1779, server continue to send data(ack's tsecr is smaller than timestamp of the first retransmission), so Eifel undo lost. it seems like that tcp core stack

does not realize that all the data has been sent successly.

b. Corresponding tcpdump is tcptrace_13.pacp, line is 1770.

3. tcptrace_14(undo because of DSACK, then continue to send new data):

a. In tcptrace_14.txt, in line 115 - 116, DSACK cause undo, but after line 127, line 130 can send new data.

b. Corresponding tcpdump is tcptrace_14.pacp, line is 112.

Yuchung Cheng

May 11, 2021, 12:45:04 PM5/11/21

to mingkun bian, BBR Development

Thank mingkun. I think the issue is as Neal has spotted that undo / Eifel could transition the state from Recovery -> undo -> Ca_disorder, and RACK was not engaged leading to timeout recovery. Neal and I will discuss internally how to address this as the code change is tricky with undo [undo is very complicated] and get back to you.

To view this discussion on the web visit https://groups.google.com/d/msgid/bbr-dev/9cf4f4eb-431f-4d93-a00f-0ab8340fc750n%40googlegroups.com.

Message has been deleted

Message has been deleted

Message has been deleted

Message has been deleted

mingkun bian

May 13, 2021, 10:13:13 AM5/13/21

to BBR Development

Hi,

I hvae reproduced this problem through packetdrill(packetdrill_16.txt), and upload the tcpdump and bcc info.

In tcptrace_16.txt:

RTOSET means the entrance of tcp_rearm_rto.

TLPSH means the entrance of tcp_schedule_loss_probe.

RACKMK means the entrance of tcp_rack_mark_lost.

RACKTM means the entrance of tcp_rack_reo_timeout.(not sampled)

1. In line 13, ACK is SACK(10000 - 11000), then RACK marked lost, lost_out is 10, packet_out is 11, sacket_out is 1.

2. In line 15, retransmit one packet(1-1000) because cwnd is 1(bbr_set_cwnd_to_recover_or_restore set cwnd 1).

3. In line 16, ACK is 1000(DSACK is 0 - 1000), in tcp_ack, tcp_set_xmit_timer will be executed,change the timer from RACK to RTO.

static int tcp_ack(struct sock *sk, const struct sk_buff *skb, int flag)

{

...

/* If needed, reset TLP/RTO timer; RACK may later override this. */

if (flag & FLAG_SET_XMIT_TIMER)

tcp_set_xmit_timer(sk);

}

Then exec tcp_fastretrans_alert's red position, undo_retrans is 0, undo_marker is not 0, so tcp_try_undo_dsa->cktcp_undo_cwnd_reduction will be executed,

then undo_marker is 0, cktcp_undo_cwnd_reduction return true, then tcp_try_keep_open will be executed, which change tcp_state from 3 to 1, but it does not

have change to exec tcp_identify_packet_loss, so now it can only wait for rto timeout(timer is set by RTO in tcp_ack's tcp_set_xmit_timer).

static void tcp_fastretrans_alert(struct sock *sk, const u32 prior_snd_una,

bool is_dupack, int *ack_flag, int *rexmit) {

...

/* E. Process state. */

switch (icsk->icsk_ca_state) {

case TCP_CA_Recovery:

if (!(flag & FLAG_SND_UNA_ADVANCED)) {

if (tcp_is_reno(tp) && is_dupack)

tcp_add_reno_sack(sk);

} else {

if (tcp_try_undo_partial(sk, prior_snd_una))

return;

/* Partial ACK arrived. Force fast retransmit. */

do_lost = tcp_is_reno(tp) ||

tcp_force_fast_retransmit(sk);

}

if (tcp_try_undo_dsack(sk)) {

tcp_try_keep_open(sk);

return;

}

tcp_identify_packet_loss(sk, ack_flag);

break;

}

4. In line 19-20, RTO timeout, then send packet(1000 - 2000)

5. In line 21, ACK is DSACK(1000 - 2000), tcp_fastretrans_alert->tcp_process_loss will be executed, tcp_process_loss->tcp_try_undo_loss will be executed because snd_una have been updated.

At the time, tp->undo_marker is not 0, undo_retrans is 0(because DSACK), so tcp_try_undo_loss->tcp_undo_cwnd_reduction will be executed, then lost_out is 0, undo_marker is 0, and tcp_state is 0(TCP_CA_Open) from 4.

Then tcp_identify_packet_loss->tcp_rack_mark_lost->tcp_rack_detect_loss(line 24) will be executed, but now tp->tsorted_sent_queue is empty(because rack in line 13 have deleted the tcp_tsorted_anchors), so reo_timeout is 0,

then it does not set rack timer, and can only wait rto timeout.

At switch's default case, tcp_identify_packet_loss will be executed again(line 25), but tp->rack.advanced is 0, so it will do nothing.

Then tcp_try_to_open will be executed(blue positon), it will change tcp_state from 0 to 1.

static void tcp_fastretrans_alert(struct sock *sk, const u32 prior_snd_una,

bool is_dupack, int *ack_flag, int *rexmit) {

...

/* E. Process state. */

switch (icsk->icsk_ca_state) {

case TCP_CA_Recovery:

...

break;

case TCP_CA_Loss:

tcp_process_loss(sk, flag, is_dupack, rexmit);

tcp_identify_packet_loss(sk, ack_flag);

if (!(icsk->icsk_ca_state == TCP_CA_Open ||

(*ack_flag & FLAG_LOST_RETRANS)))

return;

/* Change state if cwnd is undone or retransmits are lost */

/* fall through */

default:

...

tcp_identify_packet_loss(sk, ack_flag);

if (!tcp_time_to_recover(sk, flag)) {

tcp_try_to_open(sk, flag);

return;

Yuchung Cheng

May 17, 2021, 8:32:15 PM5/17/21

to mingkun bian, BBR Development

Hi Mingkun,

Would you be able to try / test if we provide you a 4.18.0 kernel patch?

To view this discussion on the web visit https://groups.google.com/d/msgid/bbr-dev/e001e8fa-ca00-4d85-8f67-3506abd13b25n%40googlegroups.com.

mingkun bian

May 17, 2021, 9:20:54 PM5/17/21

to BBR Development

Hi,

I would like to test it.

I would like to test it.

Thanks.

Yuchung Cheng

May 18, 2021, 7:16:25 PM5/18/21

to mingkun bian, BBR Development

Great could you try this patch we have rebased to 4.18.0

To view this discussion on the web visit https://groups.google.com/d/msgid/bbr-dev/76933e21-1959-4bbb-a7cb-fccef2d82c39n%40googlegroups.com.

mingkun bian

May 18, 2021, 9:49:48 PM5/18/21

to BBR Development

Hi,

I will test it, then report the result.

Thanks.

在2021年5月18日星期二 UTC+8 上午8:32:15<Yuchung Cheng> 写道:

mingkun bian

May 19, 2021, 7:26:22 AM5/19/21

to BBR Development

Hi,

I have incorporated the patch, but check successly by packetdrill(packetdrill_16.txt, tcp will occur rto)as mentioned “https://groups.google.com/g/bbr-dev/c/OFHADvJbTEI/m/gZN5j9dbAwAJ” , because undo is caused by dsack(In tcp_try_undo_dsack) not Eifel(In tcp_try_undo_partial),

/* E. Process state. */

switch (icsk->icsk_ca_state) {

case TCP_CA_Recovery:

if (!(flag & FLAG_SND_UNA_ADVANCED)) {

if (tcp_is_reno(tp) && is_dupack)

tcp_add_reno_sack(sk);

} else {

if (tcp_try_undo_partial(sk, prior_snd_una, &do_lost))

return;

}

if (tcp_try_undo_dsack(sk)) {

tcp_try_keep_open(sk);

return;

}

tcp_identify_packet_loss(sk, ack_flag);

if (icsk->icsk_ca_state != TCP_CA_Recovery) {

if (!tcp_time_to_recover(sk, flag))

return;

/* Undo reverts the recovery state. If loss is evident,

starts a new recovery (e.g. reordering then loss); */

tcp_enter_recovery(sk, (flag & FLAG_ECE));

}

break;

So I delete a line in your patch as following(red position), which can check whether loss after "dsack undo" is evident too, then packetdrill_16.txt check failed(tcp does not occur rto, but fastretrans)

/* E. Process state. */

switch (icsk->icsk_ca_state) {

case TCP_CA_Recovery:

if (!(flag & FLAG_SND_UNA_ADVANCED)) {

if (tcp_is_reno(tp) && is_dupack)

tcp_add_reno_sack(sk);

} else {

if (tcp_try_undo_partial_5(sk, prior_snd_una, &do_lost))

return;

}

if (tcp_try_undo_dsack(sk)) {

tcp_try_keep_open(sk);

//return;

}

tcp_identify_packet_loss(sk, ack_flag);

if (icsk->icsk_ca_state != TCP_CA_Recovery) {

if (!tcp_time_to_recover(sk, flag))

return;

/* Undo reverts the recovery state. If loss is evident,

starts a new recovery (e.g. reordering then loss); */

tcp_enter_recovery(sk, (flag & FLAG_ECE));

}

break;

I use these two ways to build the kernel, which can switch different mode by /proc, then upgrade tomorrow night, and I will sample the info by tcpdump and bcc to compare the difference of those two ways.

Thanks.

在2021年5月12日星期三 UTC+8 上午12:45:04<Yuchung Cheng> 写道:

Neal Cardwell

May 19, 2021, 9:24:14 AM5/19/21

to mingkun bian, BBR Development, Yuchung Cheng

Thanks. That approach for running the RACK logic after DSACK undo sounds reasonable to me. It is similar to one rev of an internal patch we considered during this process.

One related question: may we ask whether these traces are from synthetic workloads or real production user traffic? The DSACK behavior in the traces is quite strange, and seems to suggest either a bugger receiver TCP stack or a buggy middlebox.

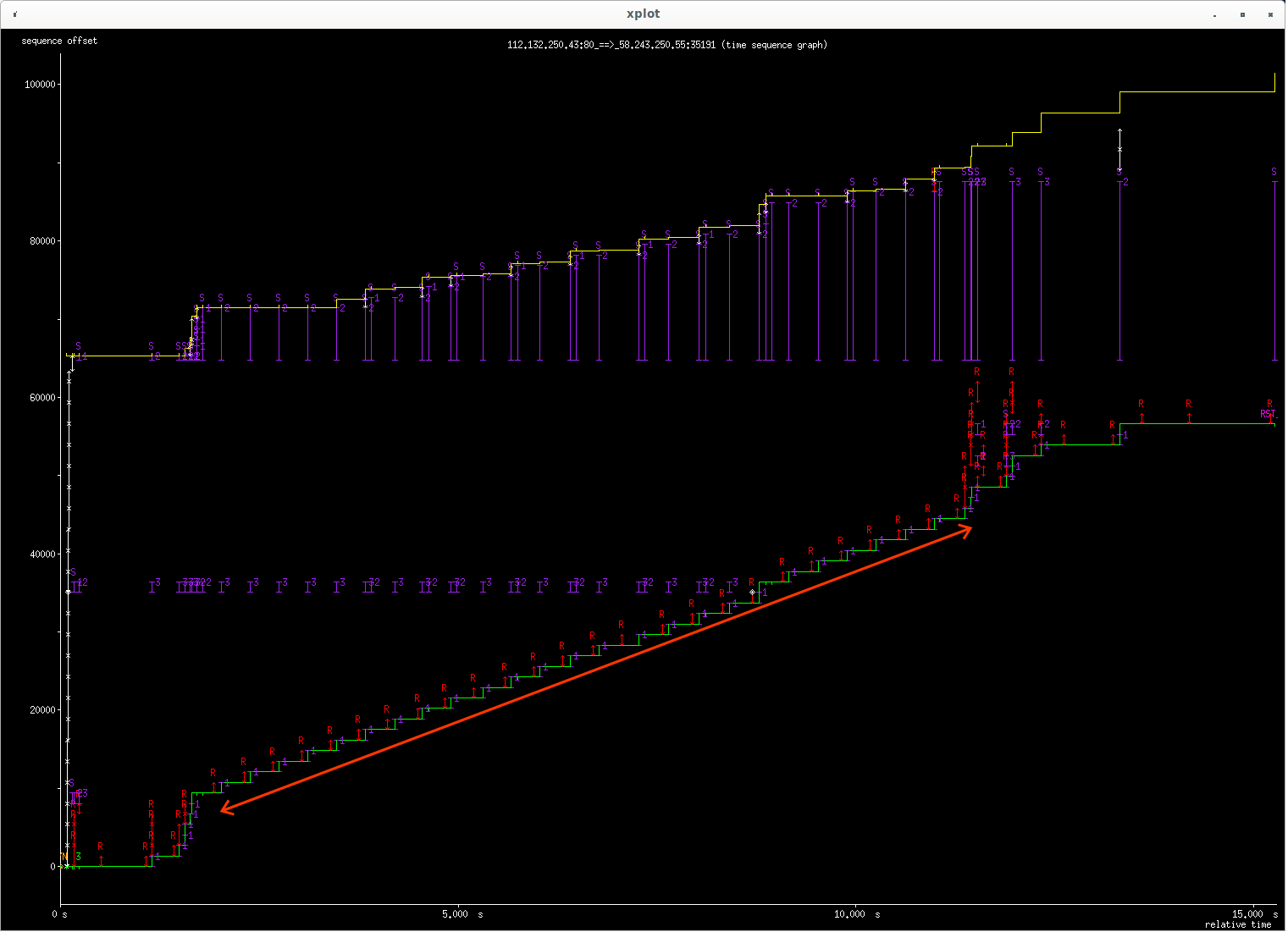

For example, in tcptrace_10.pcap (time-sequence plot attached) the receiver sends DSACKs for many consecutive segments (one DSACKed segment in response to each RTO retransmit), without the sender having first seen cumulative ACKs or SACKs for those segments. That looks like buggy behavior from the receiver or some middlebox.

thanks,

neal

To view this discussion on the web visit https://groups.google.com/d/msgid/bbr-dev/5a62c7c0-8064-42e7-9ed7-92341ca376a8n%40googlegroups.com.

mingkun bian

May 19, 2021, 11:14:40 PM5/19/21

to BBR Development

Hi Neal,

Almost all the traces are real production user traffic except tcptrace_16(simulated by packetdrill,whose dest port is 8080).

There are more scenarios like tcptrace10 because of DSACK without the sender having first seen cumulative ACKs or SACKs for those segments.

As you said, that does not looks like sender's buggy , but it caused the slow speed of the tcp protocol stack.

Thanks.

Yuchung Cheng

May 20, 2021, 8:20:15 PM5/20/21

to mingkun bian, BBR Development

Mingkun,

Here is v2 patch to address this buggy receiver issue. I've verified it passes the (modified version) of your packetdrill.

However this patch is really a workaround to a receiver or middlebox problem, kinda hard to call it a "feature" and definitely not a fix for TCP loss recovery.

To view this discussion on the web visit https://groups.google.com/d/msgid/bbr-dev/2bf09b36-c103-4f8a-891e-776deaadb739n%40googlegroups.com.

Message has been deleted

mingkun bian

May 22, 2021, 6:55:36 AM5/22/21

to BBR Development

Hi Yuchung,

I have upgraded the patch to real production, issue(rto timeout because of undo from TCP_CA_Recovery to TCP_CA_Disorder) have already solved, but the rto timeout once per second caused by other reasons still exists.

For example tcptrace_24.txt:

line 42-45, lost_out is set 4 from 45, tcp_state is set 3 from 4:

line 42, lost_out is 45, undo_retrans is 11(updated in line 43), tcpstate is 4, retrans_out is 4(updated in line 43)

line 43, RTSND means the entrance of tcp_retransmit_timer, then exec tcp_enter_loss->tcp_timeout_mark_lost->tcp_mark_skb_lost, it will set tp->retrans_out 0.

Then exec tcp_enter_loss->tcp_init_undo, then undo_retrans will be set -1(update in line 44).

line 44, lost_out is 45(updated in line 45), undo_retrans is 1(updated in line 45), tcpstate is 4(updated in line 45), retrans_out is 1(updated in line 45, because resend a segment)

line 45, lost_out is 4,(updated in line 46), undo_retrans is -1(updated in line 46), tcpstate is 3(updated in line 46), ackis 2697, sack is dsack(1349- 2697)(updated in line 46), retrans_out is 0(updated in line 46, becase dsack undo)

/* E. Process state. */

switch (icsk->icsk_ca_state) {

case TCP_CA_Recovery:

...

break;

case TCP_CA_Loss:

tcp_process_loss(sk, flag, is_dupack, rexmit);//ack is advanced, undo_retrans is 0(because of DSACK), so tcp_process_loss->tcp_try_undo_loss->tcp_undo_cwnd_reduction is executed, then lost_out is 0, tcp_state is TCP_CA_Open.

tcp_identify_packet_loss(sk, ack_flag);// it will marked new lost seg, then lost_out is 4

if (!(icsk->icsk_ca_state == TCP_CA_Open ||

(*ack_flag & FLAG_LOST_RETRANS)))

return;

/* Change state if cwnd is undone or retransmits are lost */

/* fall through */

default:

...

if (icsk->icsk_ca_state <= TCP_CA_Disorder)

tcp_try_undo_dsack(sk);

tcp_identify_packet_loss(sk, ack_flag);

if (!tcp_time_to_recover(sk, flag)) {//tcp_time_to_recover will return true becase lost_out is 4.

tcp_try_to_open(sk, flag);

return;

}

...

/* Otherwise enter Recovery state */

tcp_enter_recovery(sk, (flag & FLAG_ECE));//tcp_state is set TCP_CA_Recovery from TCP_CA_Open

line 61, tcp_state is TCP_CA_Disorder, snd_una is 9437, snd_nxt is 71445, rwnd is 62080, so it will not send a new segment becase of rwnd limit, it will only wait a rto timeout.

line 62 - 92, the rto timeout happen once per second, the reason is that tcp_state is set to 1 from 4

/* E. Process state. */

switch (icsk->icsk_ca_state) {

case TCP_CA_Recovery:

...

break;

case TCP_CA_Loss:

tcp_process_loss(sk, flag, is_dupack, rexmit);//ack is advanced, undo_retrans is 0(because of DSACK), so tcp_process_loss->tcp_try_undo_loss->tcp_undo_cwnd_reduction is executed,then lost_out is 0, tcp_state is TCP_CA_Open.

tcp_identify_packet_loss(sk, ack_flag);// no new segment is sent, so it does not mark new lost segment, lost_out is 0.

if (!(icsk->icsk_ca_state == TCP_CA_Open ||

(*ack_flag & FLAG_LOST_RETRANS)))

return;

/* Change state if cwnd is undone or retransmits are lost */

/* fall through */

default:

...

if (icsk->icsk_ca_state <= TCP_CA_Disorder)

tcp_try_undo_dsack(sk);

tcp_identify_packet_loss(sk, ack_flag);

if (!tcp_time_to_recover(sk, flag)) {//tcp_time_to_recover will return false becase lost_out is 0

tcp_try_to_open(sk, flag);//tcp_state is set TCP_CA_Disorder from TCP_CA_Open, then it will not contine to retranmit data, only wait next rto timeout.

return;

}

Thanks

Yuchung Cheng

May 24, 2021, 9:41:02 PM5/24/21

to mingkun bian, BBR Development

On Mon, May 24, 2021 at 6:37 PM Yuchung Cheng <ych...@google.com> wrote:

Hi Mingkun,Thanks for testing the patch. We'll upstream that then.For the new issue you've reported, unfortunately the problem is again the receiver / middlebox. The long recovery was due to this repeated behavior on a heavy loss situation:1. TCP timeout and retransmit2. Receiver acknowledges the retransmit with an DSACK indicating the retransmit was spurious<rinse and repeat many times until every hole is RTO recoveried>If these retransmits are all truly spurious, there should have been enough chances for a single cumulative ACK to ack all the original transmission. Not seeing such an ACK is a strong indication either the receiver or some middlebox is misbehaving. I do not think it's the sender's responsibility to further workaround this with more complexity. The undo logic is the most complicated part of TCP congestion control and has incurred dozens of bugs. Ultimately TCP needs a properly behavior receiver or middleboxes to perform well.Hence I think it's the receiver or the network to address this issue, or take the penalty of slowing their users down.

--

You received this message because you are subscribed to the Google Groups "BBR Development" group.

To unsubscribe from this group and stop receiving emails from it, send an email to bbr-dev+u...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/bbr-dev/4745d75c-5bdc-4a5a-b893-a5cec994ba27n%40googlegroups.com.

mingkun bian

May 25, 2021, 8:45:48 AM5/25/21

to BBR Development

Hi

Yuchung,

We don't have permission to address the issue of receiver / middlebox, this is really not a problem with the tcp protocol stack, but it may affect our customer metrics.

Two possible ways(it will increase the retransmission rate,

we will test them if the increase rate of retransmission is relatively low):

1. Recognize this kind of scenario(send one segment per rto time) by counting simply, then make tcp_try_undo_loss->tcp_undo_cwnd_reduction's second parameter false when certain conditions are met, then sender will retranmit more(tcpstate is 4 not 1) because lost_out is not 0.

2. No longer reduce the count of retrans_out in tcp_mark_skb_lost, make sure undo_retrans not to set -1 in tcp_init_undo.

Thanks.

Reply all

Reply to author

Forward

0 new messages