Meshroom Tutorial

Alban-Brice Pimpaud

Philmore971

Fabien Castan

Sent: Friday, 26 March 2021 01:40

To: AliceVision <alice...@googlegroups.com>

Subject: Re: Meshroom Tutorial

You received this message because you are subscribed to the Google Groups "AliceVision" group.

To unsubscribe from this group and stop receiving emails from it, send an email to alicevision...@googlegroups.com.

To view this discussion on the web visit https://groups.google.com/d/msgid/alicevision/b1189b4c-424d-4a9e-8c11-199112bc6bfdn%40googlegroups.com.

Alban-Brice Pimpaud

Hello,

Thanks for the link, I have seen a similar post in the issues tab

on the Github

(https://github.com/alicevision/meshroom/issues/1223), but I found

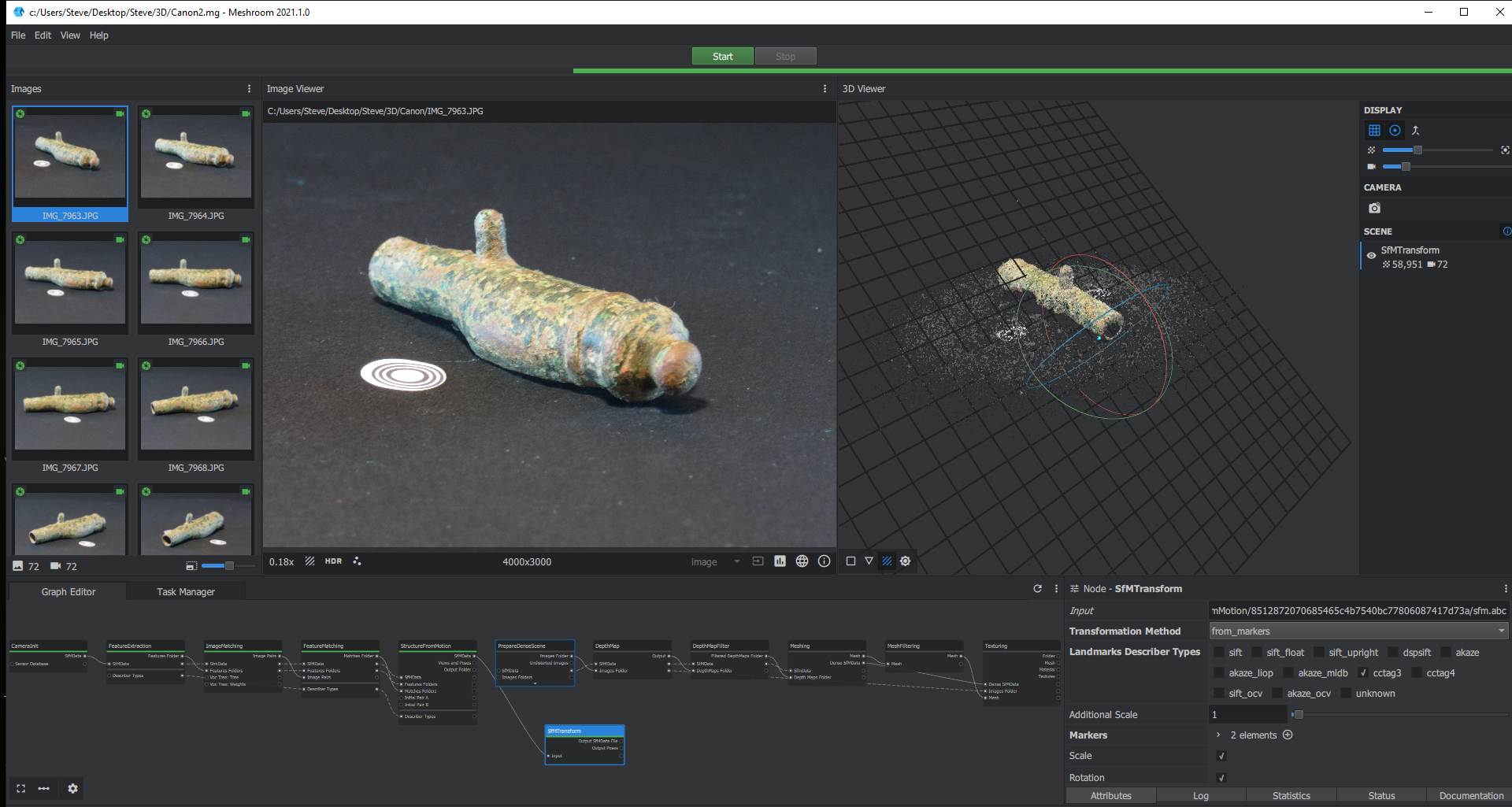

one of the pic possibly misleading, especially when you branch in

parallel SfMTransform and PrepareDenseScene from the

StructureFromMotion box. In my case, although performing a correct

SfMTransform on the sparse cloud, the whole process ended trying

to produce the mesh from the untransformed alignement...

Steven Lancaster

Alban-Brice Pimpaud

Hello,

I am sorry to hear that you got stuck following this workflow. Unfortunately, I am not allowed to share the photo set I used, since this material doesn't belong to me... Anyhow, if you don't mind, and if your photo set isn't too large, maybe I, or someone else here, could have a look to check what's wrong with your set or with my method (which possibly does not work for all use cases, for lack of testing). Or maybe some screen captures would help to understand what's wrong. Please let me know.

Steven Lancaster

Fabien Castan

Sent: Thursday, 8 April 2021 21:24

Alban-Brice Pimpaud

Hello,

Some other comments that apply to photogrammetric processing in general:

- Even if you don't use all of them for scaling, it would be a good idea to add more markers (or other features that add some texture to your pictures); this helps create feature points during the alignment and reconstruction processes. At the very least, try to have 3 coordinates (then your object will be horizontally aligned, and your plate will match the scene grid in the end). Even though CCTags are circular, crop and print them as squares with a comfortable margin. For your object, I would have printed a CCTag grid on something like an A4 sheet.

- You should try to increase your depth of field; here, the usable field of your pictures is quite shallow (blurred in both foreground and background, so there is no way to anchor robust key points outside the object, which doesn't fill enough of each photo).

- Metallic objects have high specularity; you can reduce it with a polarizing filter (optionally complemented by a cross-polarized light source, but that might be a bit advanced for now).

- The lights are a bit too harsh... A diffusing screen attached in front of your light sources will help give a more diffuse light; or you can try bouncing them off white reflectors (e.g. white sheets of A0 paper, kept out of frame, just to contribute to the overall illumination).

- You are a little overexposed: if you shot in RAW, you could try to reduce this quickly...
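To give a rough feel for the depth-of-field point, here is a minimal sketch using the standard thin-lens approximation. The function name, the 0.03 mm circle of confusion (a common full-frame convention) and the example lens and distance are assumptions for illustration, not values taken from the photos in this thread.

```python
def depth_of_field_mm(focal_mm, f_number, subject_mm, coc_mm=0.03):
    """Return (near, far) limits of acceptable sharpness in mm (thin-lens model).

    coc_mm is the circle of confusion; 0.03 mm is an assumed full-frame value.
    """
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    if subject_mm >= hyperfocal:
        # Focused at or beyond the hyperfocal distance: sharp to infinity.
        return near, float("inf")
    far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return near, far


# Hypothetical setup: 50 mm lens focused at 500 mm, wide open vs. stopped down.
for f_number in (4, 16):
    near, far = depth_of_field_mm(50, f_number, 500)
    print(f"f/{f_number}: sharp from {near:.0f} mm to {far:.0f} mm")
```

Stopping down the aperture widens the sharp zone, at the cost of a longer exposure or a higher ISO, so a tripod helps.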

Good luck!

Steven Lancaster

Alban-Brice Pimpaud

Yes, I think that's it. Now you have to connect your SfMAlignement 'Output SFM File' to both PrepareDenseScene and DepthMap, and your PrepareDenseScene 'Image Folder' output to the DepthMap 'Image Folder' input...
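As a way to double-check the wiring, the connections can be written down as plain data and inspected. This is only a sketch in ordinary Python, not the Meshroom API; the "SfMData" input name and the helper `nodes_fed_by` are assumptions made for illustration, while the other labels follow the wording of this post.

```python
# Simplified, hypothetical model of the graph: this is NOT the Meshroom API.
# Each key is (node, input) and each value is the (node, output) feeding it.
# "SfMData" as an input name is an assumption; the other labels follow the post.
connections = {
    ("PrepareDenseScene", "SfMData"): ("SfMAlignement", "Output SFM File"),
    ("DepthMap", "SfMData"): ("SfMAlignement", "Output SFM File"),
    ("DepthMap", "Image Folder"): ("PrepareDenseScene", "Image Folder"),
}


def nodes_fed_by(graph, source_node):
    """Return the sorted names of nodes that have an input fed by source_node."""
    return sorted({dst for (dst, _), (src, _) in graph.items() if src == source_node})


# Wiring described in this post: both dense nodes read the transformed SfM file.
print(nodes_fed_by(connections, "SfMAlignement"))

# Miswired variant: PrepareDenseScene still reads the untransformed result.
broken = dict(connections)
broken[("PrepareDenseScene", "SfMData")] = ("StructureFromMotion", "SfMData")
print(nodes_fed_by(broken, "StructureFromMotion"))
```

If anything downstream is still fed directly by StructureFromMotion, the mesh will be built from the untransformed alignment.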

I am not sure, but it seems only 1 CCTag was extracted...

If you were to add 2 other markers (let's say at the mouth and the bottom of your cannon), you could easily find their coordinates by triangulating from their relative distances, since they are rigorously on the same plane.
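For what it's worth, here is a minimal sketch of that computation for three coplanar markers, using the law of cosines. It assumes marker A is placed at the origin and marker B on the X axis; the function name and the example distances are made up for illustration.

```python
import math


def third_marker_xy(d_ab, d_ac, d_bc):
    """Return (x, y) of marker C, with A at (0, 0), B at (d_ab, 0) and y >= 0.

    All three arguments are measured distances between marker centres,
    expressed in the same unit.
    """
    if min(d_ab, d_ac, d_bc) <= 0:
        raise ValueError("distances must be positive")
    x = (d_ab ** 2 + d_ac ** 2 - d_bc ** 2) / (2 * d_ab)
    y_squared = d_ac ** 2 - x ** 2
    if y_squared < 0:
        raise ValueError("distances violate the triangle inequality")
    return x, math.sqrt(y_squared)


# Hypothetical measurements in centimetres (a 30-40-50 right triangle).
x, y = third_marker_xy(30.0, 40.0, 50.0)
print(f"A = (0, 0, 0), B = (30, 0, 0), C = ({x:.1f}, {y:.1f}, 0)")
```

Since the markers lie on the same plane, the third coordinate can be set to 0 for all of them.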
