Adding bad rows to Elasticsearch not working

Jimit Modi

Mar 2, 2016, 4:20:38 AM

to Snowplow

Hello All,

I was trying to load bad rows into Elasticsearch by following the steps here.

I wanted to run only the Elasticsearch step, so I used the command below.

ubuntu@ip-x-x-x-x:~/emr-tools$ ./snowplow-emr-etl-runner -c config/config-es.yml -r config/resolver.json -n enrichments --skip staging,s3distcp,enrich,shred,archive_raw

D, [2016-03-02T09:08:57.436000 #7138] DEBUG -- : Initializing EMR jobflow

D, [2016-03-02T09:09:00.622000 #7138] DEBUG -- : EMR jobflow j-1I55K53DYNLFJ started, waiting for jobflow to complete...

D, [2016-03-02T09:09:01.086000 #7138] DEBUG -- : EMR jobflow j-1I55K53DYNLFJ completed successfully.

I, [2016-03-02T09:09:01.087000 #7138] INFO -- : Completed successfully

ubuntu@ip-x-x-x-x:~/emr-tools$

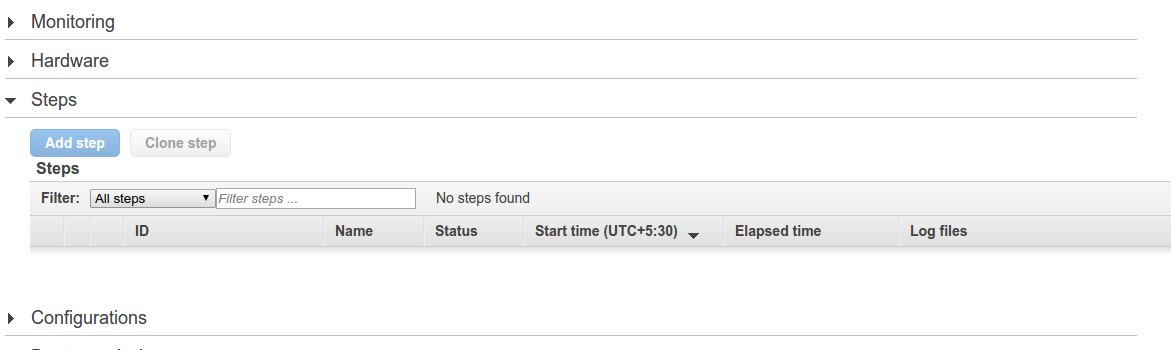

This started a EMR as output above. The the EMR was created with empty steps:

Any ideas ?

Thanks

Jimit Modi

Fred Blundun

Mar 2, 2016, 4:43:18 AM

to Snowplow

Hi Jimit,

Could you share your configuration YAML (with AWS credentials removed)?

Thanks,

Fred

Jimit Modi

Mar 2, 2016, 4:54:25 AM

to snowpl...@googlegroups.com

aws:
  # Credentials can be hardcoded or set in environment variables
  access_key_id: XXXXXXXXXX
  secret_access_key: XXXXXXXXXX
  s3:
    region: us-east-1
    buckets:
      assets: s3://snowplow-hosted-assets # DO NOT CHANGE unless you are hosting the jarfiles etc yourself in your own bucket
      jsonpath_assets: s3://snowplow-assets/custom-events-jsonpaths/ # If you have defined your own JSON Schemas, add the s3:// path to your own JSON Path files in your own bucket here
      log: s3://snowplow-emr-logs/
      raw:
        in: # Multiple in buckets are permitted
          - s3://elasticbeanstalk-us-east-1-238537778401/resources/environments/logs/publish/ # e.g. s3://my-archive-bucket/raw
        processing: s3://snowplow-etl-emr-runner/processing
        archive: s3://snowplow-etl-emr-runner/archive # e.g. s3://my-archive-bucket/raw
      enriched:
        good: s3://snowplow-etl-emr-runner/enriched/good # e.g. s3://my-out-bucket/enriched/good
        bad: s3://snowplow-etl-emr-runner/enriched/bad # e.g. s3://my-out-bucket/enriched/bad
        errors: # Leave blank unless :continue_on_unexpected_error: set to true below
        archive: s3://snowplow-etl-emr-runner/enriched/archive # Where to archive enriched events to, e.g. s3://my-out-bucket/enriched/archive
      shredded:
        good: s3://snowplow-etl-emr-runner/shredded/good # e.g. s3://my-out-bucket/shredded/good
        bad: s3://snowplow-etl-emr-runner/shredded/bad # e.g. s3://my-out-bucket/shredded/bad
        errors: # Leave blank unless :continue_on_unexpected_error: set to true below
        archive: s3://snowplow-etl-emr-runner/shredded/archive # Not required for Postgres currently
  emr:
    ami_version: 4.3.0 # Was 3.7.0 # Don't change this
    region: us-east-1 # Always set this
    jobflow_role: EMR_EC2_DefaultRole # Created using $ aws emr create-default-roles
    service_role: EMR_DefaultRole # Created using $ aws emr create-default-roles
    placement: # Set this if not running in VPC. Leave blank otherwise
    ec2_subnet_id: # Set this if running in VPC. Leave blank otherwise
    ec2_key_name: xxxx
    bootstrap: [] # Set this to specify custom boostrap actions. Leave empty otherwise
    software:
      hbase: # To launch on cluster, provide version, "0.92.0", keep quotes
      lingual: # To launch on cluster, provide version, "1.1", keep quotes
    # Adjust your Hadoop cluster below
    jobflow:
      master_instance_type: m1.medium
      core_instance_count: 2
      core_instance_type: m1.medium
      task_instance_count: 0 # Increase to use spot instances
      task_instance_type: m1.medium
      task_instance_bid: 0.015 # In USD. Adjust bid, or leave blank for non-spot-priced (i.e. on-demand) task instances
    bootstrap_failure_tries: 3 # Number of times to attempt the job in the event of bootstrap failures
collectors:
  format: clj-tomcat # Or 'clj-tomcat' for the Clojure Collector, or 'thrift' for Thrift records, or 'tsv/com.amazon.aws.cloudfront/wd_access_log' for Cloudfront access logs
enrich:
  job_name: Snowplow ETL # Give your job a name
  versions:
    hadoop_enrich: 1.6.0 # Was 1.5.1 # Version of the Hadoop Enrichment process
    hadoop_shred: 0.8.0 # Was 0.7.0 # Version of the Hadoop Shredding process
    hadoop_elasticsearch: 0.1.0 # Version of the Hadoop to Elasticsearch copying process
  continue_on_unexpected_error: false # Set to 'true' (and set :out_errors: above) if you don't want any exceptions thrown from ETL
  output_compression: NONE # Compression only supported with Redshift, set to NONE if you have Postgres targets. Allowed formats: NONE, GZIP
storage:
  download:
    folder: # Postgres-only config option. Where to store the downloaded files. Leave blank for Redshift
  targets:
    - name: " Redshift database"
      type: redshift
      host: xxxxxxxxx.us-east-1.redshift.amazonaws.com # The endpoint as shown in the Redshift console
      database: database # Name of database
      port: 5439 # Default Redshift port
      ssl_mode: disable # One of disable (default), require, verify-ca or verify-full
      table: atomic.events
      username: snowplow
      password: xxxxxxxxxx
      maxerror: 1 # Stop loading on first error, or increase to permit more load errors
      comprows: 200000 # Default for a 1 XL node cluster. Not used unless --include compupdate specified
    - name: "ELK Elasticsearch cluster" # Name for the target - used to label the corresponding jobflow step
      type: elasticsearch # Marks the database type as Elasticsearch
      host: "xxxxxxxxxx" # Elasticsearch host
      database: snowplow # The Elasticsearch index
      port: 9200 # Port used to connect to Elasticsearch
      table: bad_rows # The Elasticsearch type
      es_nodes_wan_only: false # Set to true if using Amazon Elasticsearch Service
      username: # Not required for Elasticsearch
      password: # Not required for Elasticsearch
      sources: # Leave blank or specify: ["s3://out/enriched/bad/run=xxx", "s3://out/shred/bad/run=yyy"]
      maxerror: # Not required for Elasticsearch
      comprows: # Not required for Elasticsearch
monitoring:
  tags: {} # Name-value pairs describing this job
  logging:
    level: DEBUG # You can optionally switch to INFO for production

--

Jim(y || it)

Fred Blundun

Mar 2, 2016, 4:59:25 AM

to snowpl...@googlegroups.com

The problem is that you haven't specified any sources for the Elasticsearch target. If you run a job with enrichment or shred steps, then the bad rows generated by those steps will automatically be loaded into Elasticsearch. But since you are only running the Elasticsearch step, the app doesn't know where to load bad rows from. You need something like this:

sources: ["s3://out/enriched/bad/run=xxx", "s3://out/shred/bad/run=yyy"]

Hope that helps,

Fred
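
To make the explanation above concrete, here is a toy Python model of the behaviour Fred describes. It is not the EmrEtlRunner code: the function name `resolve_sources`, its arguments, and the rule that explicit `sources` replace the automatic ones are all assumptions made for illustration.

```python
def resolve_sources(target, skipped, enriched_bad, shredded_bad, run_id):
    """Toy model: which bad-row folders would an Elasticsearch target load?"""
    # Assumption: explicitly configured sources win over anything automatic.
    explicit = target.get("sources") or []
    if explicit:
        return list(explicit)

    # Otherwise only steps that actually ran in this job contribute bad rows.
    implicit = []
    if "enrich" not in skipped:
        implicit.append(f"{enriched_bad}/run={run_id}/")
    if "shred" not in skipped:
        implicit.append(f"{shredded_bad}/run={run_id}/")
    return implicit


# The situation from this thread: sources left blank, enrich and shred skipped.
target = {"type": "elasticsearch", "sources": None}
skipped = {"staging", "s3distcp", "enrich", "shred", "archive_raw"}

print(resolve_sources(target, skipped,
                      "s3://out/enriched/bad", "s3://out/shred/bad", "xxx"))
# prints []
```

An empty list here corresponds to the jobflow that started and completed with no steps.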

Jimit Modi

Mar 2, 2016, 5:04:22 AM

to snowpl...@googlegroups.com

Thanks, Fred, for the quick reply.

So is it OK if I pass sources: ["s3://out/enriched/bad/", "s3://out/shred/bad/"] (dropping the run subdirectory, since I want to process many previous runs)?

Thanks

--

Jim(y || it)

Fred Blundun

Mar 2, 2016, 5:14:03 AM

to snowpl...@googlegroups.com

I'm afraid we don't currently support configuring higher-level directories in this way - you will need to specify each run by its ID. I have created a ticket to improve this here.

Regards,

Fred
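
Since each run has to be listed by its ID, the `sources` value can get long. As a rough sketch, the Python snippet below builds it from a list of run IDs. The helper names `build_sources` and `to_yaml_flow_list` are made up for illustration, the bucket paths are the ones from the configuration posted above, and the run IDs are only examples in the `run=` timestamp format. It also assumes every run has a folder under both bad buckets, which may not hold for your data.

```python
def build_sources(bad_buckets, run_ids):
    """Return one S3 path per (bucket, run) pair, e.g. .../enriched/bad/run=<id>/."""
    return [
        f"{bucket.rstrip('/')}/run={run_id}/"
        for bucket in bad_buckets
        for run_id in run_ids
    ]


def to_yaml_flow_list(paths):
    """Format the paths as a single-line YAML list for the `sources:` field."""
    return "[" + ", ".join(f'"{p}"' for p in paths) + "]"


# Bucket paths as configured earlier in this thread; run IDs are examples.
bad_buckets = [
    "s3://snowplow-etl-emr-runner/enriched/bad",
    "s3://snowplow-etl-emr-runner/shredded/bad",
]
run_ids = ["2016-02-15-13-14-11", "2016-03-01-12-18-42"]

sources = build_sources(bad_buckets, run_ids)
print("sources: " + to_yaml_flow_list(sources))
```

The printed line can be pasted into the Elasticsearch target in the configuration YAML.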

Jimit Modi

Mar 2, 2016, 5:21:37 AM

to snowpl...@googlegroups.com

So does that mean I will have to run a separate EMR job for every run directory?

--

Jim(y || it)

Fred Blundun

Mar 2, 2016, 5:31:01 AM

to snowpl...@googlegroups.com

The elements of the array won't correspond to different EMR jobs - just different steps within a single EMR job.

Regards,

Fred

Jimit Modi

Mar 2, 2016, 8:07:04 AM

to snowpl...@googlegroups.com

Hey Fred,

It failed with the error below. Any clue?

ubuntu@ip-:~/emr-tools$ ./snowplow-emr-etl-runner -c config/config-es.yml -r config/resolver.json -n enrichments --skip staging,s3distcp,enrich,shred,archive_raw

D, [2016-03-02T12:03:07.308000 #7246] DEBUG -- : Initializing EMR jobflow

D, [2016-03-02T12:03:11.145000 #7246] DEBUG -- : EMR jobflow j-1V0UXPYB5YEMY started, waiting for jobflow to complete...

F, [2016-03-02T12:59:16.698000 #7246] FATAL -- :

Snowplow::EmrEtlRunner::EmrExecutionError (EMR jobflow j-1V0UXPYB5YEMY failed, check Amazon EMR console and Hadoop logs for details (help: https://github.com/snowplow/snowplow/wiki/Troubleshooting-jobs-on-Elastic-MapReduce). Data files not archived.

Snowplow ETL: TERMINATING [STEP_FAILURE] ~ elapsed time n/a [2016-03-02 12:10:52 +0000 - ]

- 1. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/enriched/bad/run=2016-02-15-13-14-11/ -> Elasticsearch: ELK Elasticsearch cluster: FAILED ~ 00:45:16 [2016-03-02 12:10:56 +0000 - 2016-03-02 12:56:13 +0000]

- 2. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/shredded/bad/run=2016-03-01-12-18-42/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 3. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/shredded/bad/run=2016-02-29-11-48-48/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 4. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/shredded/bad/run=2016-02-29-06-28-22/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 5. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/shredded/bad/run=2016-02-26-07-56-53/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 6. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/shredded/bad/run=2016-02-25-13-15-53/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 7. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/shredded/bad/run=2016-02-23-12-26-19/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 8. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/shredded/bad/run=2016-02-23-09-59-22/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 9. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/shredded/bad/run=2016-02-23-08-22-39/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 10. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/shredded/bad/run=2016-02-23-07-22-39/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 11. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/shredded/bad/run=2016-02-22-13-55-08/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 12. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/shredded/bad/run=2016-02-22-12-40-18/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 13. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/shredded/bad/run=2016-02-22-07-25-58/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 14. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/shredded/bad/run=2016-02-15-13-14-11/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 15. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/enriched/bad/run=2016-03-02-06-14-09/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 16. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/enriched/bad/run=2016-03-01-12-18-42/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 17. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/enriched/bad/run=2016-02-29-11-48-48/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 18. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/enriched/bad/run=2016-02-29-06-28-22/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 19. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/enriched/bad/run=2016-02-26-07-56-53/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 20. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/enriched/bad/run=2016-02-25-13-15-53/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 21. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/enriched/bad/run=2016-02-23-12-26-19/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 22. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/enriched/bad/run=2016-02-23-09-59-22/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 23. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/enriched/bad/run=2016-02-23-08-22-39/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 24. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/enriched/bad/run=2016-02-23-07-22-39/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 25. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/enriched/bad/run=2016-02-22-13-55-08/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 26. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/enriched/bad/run=2016-02-22-12-40-18/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 27. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/enriched/bad/run=2016-02-22-07-25-58/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]

- 28. Elasticity Scalding Step: Errors in s3://snowplow-etl-emr-runner/shredded/bad/run=2016-03-02-06-14-09/ -> Elasticsearch: ELK Elasticsearch cluster: CANCELLED ~ elapsed time n/a [ - ]):

/home/ubuntu/emr-tools/snowplow-emr-etl-runner!/emr-etl-runner/lib/snowplow-emr-etl-runner/emr_job.rb:471:in `run'

/home/ubuntu/emr-tools/snowplow-emr-etl-runner!/gems/contracts-0.7/lib/contracts/method_reference.rb:46:in `send_to'

/home/ubuntu/emr-tools/snowplow-emr-etl-runner!/gems/contracts-0.7/lib/contracts.rb:305:in `call_with'

/home/ubuntu/emr-tools/snowplow-emr-etl-runner!/gems/contracts-0.7/lib/contracts/decorators.rb:159:in `common_method_added'

/home/ubuntu/emr-tools/snowplow-emr-etl-runner!/emr-etl-runner/lib/snowplow-emr-etl-runner/runner.rb:68:in `run'

/home/ubuntu/emr-tools/snowplow-emr-etl-runner!/gems/contracts-0.7/lib/contracts/method_reference.rb:46:in `send_to'

/home/ubuntu/emr-tools/snowplow-emr-etl-runner!/gems/contracts-0.7/lib/contracts.rb:305:in `call_with'

/home/ubuntu/emr-tools/snowplow-emr-etl-runner!/gems/contracts-0.7/lib/contracts/decorators.rb:159:in `common_method_added'

file:/home/ubuntu/emr-tools/snowplow-emr-etl-runner!/emr-etl-runner/bin/snowplow-emr-etl-runner:39:in `(root)'

org/jruby/RubyKernel.java:1091:in `load'

file:/home/ubuntu/emr-tools/snowplow-emr-etl-runner!/META-INF/main.rb:1:in `(root)'

org/jruby/RubyKernel.java:1072:in `require'

file:/home/ubuntu/emr-tools/snowplow-emr-etl-runner!/META-INF/main.rb:1:in `(root)'

/tmp/jruby6137640058805975763extract/jruby-stdlib-1.7.20.1.jar!/META-INF/jruby.home/lib/ruby/shared/rubygems/core_ext/kernel_require.rb:1:in `(root)'

--

Jim(y || it)

Fred Blundun

Mar 2, 2016, 9:03:09 AM

to snowpl...@googlegroups.com

Hello Jimit,

The EMR Console may have more information about exactly what went wrong - have a look at this wiki page for more information on how to debug failing jobs.

Regards,

Fred

Jimit Modi

Mar 2, 2016, 9:11:06 AM

to snowpl...@googlegroups.com

OK, I will check and let you know.

In case it gives you a head start, below is the stderr of the failed step in EMR:

Exception in thread "main" cascading.flow.FlowException: step failed: (1/1) snowplow/bad_rows, with job id: job_1456920518439_0001, please see cluster logs for failure messages
    at cascading.flow.planner.FlowStepJob.blockOnJob(FlowStepJob.java:221)
    at cascading.flow.planner.FlowStepJob.start(FlowStepJob.java:149)
    at cascading.flow.planner.FlowStepJob.call(FlowStepJob.java:124)
    at cascading.flow.planner.FlowStepJob.call(FlowStepJob.java:43)
    at java.util.concurrent.FutureTask.run(FutureTask.java:262)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)
    at java.lang.Thread.run(Thread.java:745)

--

Jim(y || it)

Jimit Modi

Mar 4, 2016, 7:08:53 AM

to Snowplow

Hey Fred,

Are you able to point me somewhere to fix this error?

Ihor Tomilenko

Mar 4, 2016, 11:03:58 AM

to Snowplow

Hi Jimit,

You might need to dig deeper to get a more descriptive error.

If you go to the EMR service in the AWS console, you should see the cluster listed under the name you gave it in the configuration file, accompanied by the job ID. Click on the one that failed and expand the Steps section. It should list all the attempted steps and their statuses, and from there you should be able to access the corresponding logs too. Mind you, examining only stderr might not be sufficient; you might need to check the other logs too (syslog, controller).

Regards,

Ihor
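
The same step list can also be inspected from a script. The sketch below summarizes a response shaped like the one returned by the EMR `ListSteps` API (for example via boto3's `list_steps`). The helper name `summarize_steps` and the sample step names are hypothetical, and the sample response is hand-written to mimic the shape of a real one rather than taken from an actual cluster.

```python
from collections import Counter


def summarize_steps(list_steps_response):
    """Return (state counts, names of FAILED steps) from a ListSteps-style response."""
    steps = list_steps_response.get("Steps", [])
    counts = Counter(step["Status"]["State"] for step in steps)
    failed = [step["Name"] for step in steps if step["Status"]["State"] == "FAILED"]
    return counts, failed


# With boto3 installed and credentials configured, a real response could be fetched with:
#   import boto3
#   response = boto3.client("emr", region_name="us-east-1").list_steps(ClusterId="j-1V0UXPYB5YEMY")
# (list_steps is paginated, so a long step list may need several calls.)
# The hand-written sample below only mimics the shape of that response.
sample_response = {
    "Steps": [
        {"Name": "step A", "Status": {"State": "FAILED"}},
        {"Name": "step B", "Status": {"State": "CANCELLED"}},
        {"Name": "step C", "Status": {"State": "CANCELLED"}},
    ]
}

counts, failed = summarize_steps(sample_response)
print(dict(counts))
print(failed)
```

The failed step is the one whose logs are worth opening first; the cancelled steps never ran.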

Fred Blundun

Mar 8, 2016, 8:16:38 AM

to Snowplow

Hi Jimit,

Those files don't look very informative, but they aren't the full logs. The EMR console page for the cluster should tell you the bucket in which the full logs for the job are stored. I suggest downloading them and grepping for errors. (More information on the location of the full logs is available here.)

Regards,

Fred
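
Once the full logs are downloaded to a local folder, the grepping can be done with a few lines of Python. This is only a sketch: the names `open_log` and `find_error_lines` and the search pattern are illustrative choices, and the pattern will probably need tuning for your logs. It handles `.gz` files because EMR typically stores step and task logs gzipped.

```python
import gzip
import os
import re

# Illustrative pattern; adjust it to whatever your logs actually contain.
ERROR_PATTERN = re.compile(r"ERROR|FATAL|Exception|Caused by")


def open_log(path):
    """Open a log file as text, transparently handling .gz files."""
    if path.endswith(".gz"):
        return gzip.open(path, "rt", encoding="utf-8", errors="replace")
    return open(path, "r", encoding="utf-8", errors="replace")


def find_error_lines(log_dir, pattern=ERROR_PATTERN):
    """Yield (path, line_number, line) for each line matching the pattern."""
    for root, _dirs, files in os.walk(log_dir):
        for name in sorted(files):
            path = os.path.join(root, name)
            with open_log(path) as handle:
                for number, line in enumerate(handle, start=1):
                    if pattern.search(line):
                        yield path, number, line.rstrip("\n")
```

For example, with the logs saved under a local folder such as `./emr-logs` (a placeholder path), `for path, number, line in find_error_lines("./emr-logs"): print(f"{path}:{number}: {line}")` prints every matching line with its file and line number.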
